
Prentice Hall 1

CIS 674

Introduction to Data Mining

Srinivasan Parthasarathy

srini@cse.ohio-state.edu

Office Hours: TTH 2-3:18PM

DL317


Introduction Outline

Define data mining

Data mining vs. databases

Basic data mining tasks

Data mining development

Data mining issues

Goal: Provide an overview of data mining.


Introduction

Data is produced at a phenomenal rate

Our ability to store has grown

Users expect more sophisticated information

How?

UNCOVER HIDDEN INFORMATION

DATA MINING


Data Mining

Objective: Fit data to a model

Potential Result: Higher-level meta information that may not be obvious when looking at raw data

Similar terms

Exploratory data analysis

Data driven discovery

Deductive learning


Data Mining Algorithm

Objective: Fit Data to a Model

Descriptive

Predictive

Preferential Questions

Which technique to choose?

ARM/Classification/Clustering

Answer: Depends on what you want to do with the data.

Search Strategy: Technique to search the data

Interface? Query Language?

Efficiency


Database Processing vs. Data Mining Processing

Database processing:

Query: well defined; SQL

Output: precise; subset of database

Data mining processing:

Query: poorly defined; no precise query language

Output: fuzzy; not a subset of database


Query Examples

Database:

Find all credit applicants with last name of Smith.

Identify customers who have purchased more than $10,000 in the last month.

Find all customers who have purchased milk.

Data Mining:

Find all credit applicants who are poor credit risks. (classification)

Identify customers with similar buying habits. (clustering)

Find all items which are frequently purchased with milk. (association rules)
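
The milk example can be sketched in a few lines. This is only an illustration: the transactions are hypothetical and `min_support` is an assumed threshold, not something the slide specifies. The database-style query returns stored rows that match a precise predicate; the data-mining-style query returns derived support values that depend on the chosen threshold.

```python
from collections import Counter

# Hypothetical transactions; each is the set of items in one purchase.
transactions = [
    {"milk", "bread", "eggs"},
    {"milk", "bread"},
    {"bread", "butter"},
    {"milk", "cereal", "bread"},
    {"eggs", "butter"},
]

# Database-style query: a precise predicate; the answer is a subset of the data.
bought_milk = [t for t in transactions if "milk" in t]

# Data-mining-style query: "frequently purchased with milk" needs a threshold
# (min_support, an assumed parameter) and yields derived values, not stored rows.
def frequent_with(item, transactions, min_support):
    co_counts = Counter()
    for t in transactions:
        if item in t:
            co_counts.update(t - {item})
    n = len(transactions)
    return {other: c / n for other, c in co_counts.items() if c / n >= min_support}

print(len(bought_milk))
print(frequent_with("milk", transactions, min_support=0.4))
```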


Data Mining Models and Tasks


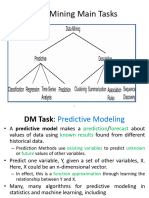

Basic Data Mining Tasks

Classification maps data into predefined groups or classes

Supervised learning

Pattern recognition

Prediction

Regression is used to map a data item to a real-valued prediction variable.

Clustering groups similar data together into clusters.

Unsupervised learning

Segmentation

Partitioning


Basic Data Mining Tasks (contd)

Summarization maps data into subsets with associated simple descriptions.

Characterization

Generalization

Link Analysis uncovers relationships among data.

Affinity Analysis

Association Rules

Sequential Analysis determines sequential patterns.


Ex: Time Series Analysis

Example: Stock Market

Predict future values

Determine similar patterns over time

Classify behavior


Data Mining vs. KDD

Knowledge Discovery in Databases (KDD): process of finding useful information and patterns in data.

Data Mining: Use of algorithms to extract the information and patterns derived by the KDD process.

Knowledge Discovery Process

Data mining: the core of the knowledge discovery process.

Figure (process flow): Databases → Data Cleaning and Data Integration → Preprocessed Data → Selection and Data transformations → Task-relevant Data → Data Mining → Knowledge Interpretation


KDD Process Ex: Web Log

Selection:

Select log data (dates and locations) to use

Preprocessing:

Remove identifying URLs

Remove error logs

Transformation:

Sessionize (sort and group)

Data Mining:

Identify and count patterns

Construct data structure

Interpretation/Evaluation:

Identify and display frequently accessed sequences.

Potential User Applications:

Cache prediction

Personalization
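
The web log steps above can be sketched end to end. Everything concrete here is an assumption made for illustration: the record layout `(user, timestamp, url, status)`, the 30-minute `SESSION_GAP`, and the choice of consecutive page pairs as the "patterns" to count.

```python
from collections import Counter
from itertools import groupby

# Hypothetical log records: (user, timestamp in seconds, url, HTTP status).
log = [
    ("u1", 0, "/home", 200),
    ("u1", 30, "/products", 200),
    ("u2", 10, "/home", 200),
    ("u1", 60, "/missing", 404),
    ("u2", 50, "/products", 200),
    ("u1", 5000, "/home", 200),
    ("u1", 5020, "/products", 200),
]

SESSION_GAP = 1800  # assumed: a gap longer than 30 minutes starts a new session

# Preprocessing: remove error entries.
clean = [r for r in log if r[3] < 400]

# Transformation: sessionize (sort by user and time, group, split on long gaps).
clean.sort(key=lambda r: (r[0], r[1]))
sessions = []
for user, records in groupby(clean, key=lambda r: r[0]):
    current, last_time = [], None
    for _, ts, url, _ in records:
        if last_time is not None and ts - last_time > SESSION_GAP:
            sessions.append(current)
            current = []
        current.append(url)
        last_time = ts
    sessions.append(current)

# Data mining: count consecutive page pairs across all sessions.
pair_counts = Counter((a, b) for s in sessions for a, b in zip(s, s[1:]))

# Interpretation: display the most frequently accessed sequence.
print(pair_counts.most_common(1))
```

A cache-prediction application could, for instance, prefetch the page that most often follows the current one according to `pair_counts`.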


Data Mining Development

Similarity Measures; Hierarchical Clustering; IR Systems; Imprecise Queries; Textual Data; Web Search Engines

Bayes Theorem; Regression Analysis; EM Algorithm; K-Means Clustering; Time Series Analysis

Neural Networks; Decision Tree Algorithms

Algorithm Design Techniques; Algorithm Analysis; Data Structures

Relational Data Model; SQL; Association Rule Algorithms; Data Warehousing; Scalability Techniques

HIGH PERFORMANCE DATA MINING


KDD Issues

Human Interaction

Overfitting

Outliers

Interpretation

Visualization

Large Datasets

High Dimensionality


KDD Issues (contd)

Multimedia Data

Missing Data

Irrelevant Data

Noisy Data

Changing Data

Integration

Application


Social Implications of DM

Privacy

Profiling

Unauthorized use


Data Mining Metrics

Usefulness

Return on Investment (ROI)

Accuracy

Space/Time


Database Perspective on Data Mining

Scalability

Real World Data

Updates

Ease of Use


Outline of Today's Class

Statistical Basics

Point Estimation

Models Based on Summarization

Bayes Theorem

Hypothesis Testing

Regression and Correlation

Similarity Measures


Point Estimation

Point Estimate: estimate a population parameter.

May be made by calculating the parameter for a sample.

May be used to predict value for missing data.

Ex:

R contains 100 employees

99 have salary information

Mean salary of these is $50,000

Use $50,000 as value of remaining employee's salary.

Is this a good idea?
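
A minimal sketch of this kind of mean imputation, on a hypothetical five-employee list rather than the 100-employee relation R from the slide. `None` is an assumed marker for the missing salary.

```python
from statistics import mean

# Hypothetical salaries; None marks the missing value.
salaries = [48000, 52000, 50000, None, 50000]

known = [s for s in salaries if s is not None]
estimate = mean(known)  # point estimate of the population mean from the sample

# Fill the gap with the point estimate.
imputed = [estimate if s is None else s for s in salaries]
print(estimate, imputed)
```

Whether this is a good idea depends on whether the missing employee resembles the others; if the missing salary belongs to an atypical employee, the sample mean can be far off.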


Estimation Error

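Bias: Difference between expected value and actual value, $E[\hat{\theta}] - \theta$.

Mean Squared Error (MSE): expected value of the squared difference between the estimate and the actual value:

$$\mathrm{MSE}(\hat{\theta}) = E\left[(\hat{\theta} - \theta)^2\right]$$

Why square?

Root Mean Square Error (RMSE): $\sqrt{\mathrm{MSE}(\hat{\theta})}$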
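
A small numeric sketch of the three quantities, using made-up estimates and a true value that is assumed known only for the sake of illustration. Sample averages stand in for the expectations.

```python
from math import sqrt

true_value = 10.0                      # assumed known here, only for illustration
estimates = [9.0, 11.0, 12.0, 10.0]    # hypothetical estimates from repeated samples

n = len(estimates)
bias = sum(estimates) / n - true_value                  # mean estimate minus actual value
mse = sum((e - true_value) ** 2 for e in estimates) / n  # mean of squared errors
rmse = sqrt(mse)                                         # back in the original units
print(bias, mse, rmse)
```

The errors of 9.0 and 11.0 cancel each other in the bias but both contribute to the MSE, which is one answer to "why square?": squaring keeps errors of opposite sign from offsetting each other.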


Jackknife Estimate

Jackknife Estimate: estimate of parameter is obtained by omitting one value from the set of observed values.

Treat the data like a population

Take samples from this population

Use these samples to estimate the parameter

Let $\hat{\theta}$ be an estimate of the parameter $\theta$ on the entire population.

Let $\hat{\theta}_{(j)}$ be an estimator of the same form with observation $j$ deleted

Allows you to examine the impact of outliers!
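
A minimal sketch of the leave-one-out idea for the mean, on hypothetical data with one deliberate outlier. Averaging the leave-one-out estimates to get a single jackknife estimate is one common convention and is an assumption here, since the slide does not show the formula.

```python
from statistics import mean

data = [10, 12, 11, 13, 50]   # hypothetical observations; 50 is an outlier

theta_hat = mean(data)        # estimate on the full data set

# Leave-one-out estimates: the same estimator with observation j deleted.
leave_one_out = [mean(data[:j] + data[j + 1:]) for j in range(len(data))]

# Jackknife estimate (assumed convention): average of the leave-one-out estimates.
jackknife = mean(leave_one_out)

for x, est in zip(data, leave_one_out):
    print(f"without {x}: {est}")
```

Deleting 50 moves the estimate from about 21 down to 11.5, while deleting any other value barely changes it, which is how the outlier's impact shows up.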


Maximum Likelihood Estimate (MLE)

Obtain parameter estimates that maximize the probability that the sample data occurs for the specific model.

Joint probability for observing the sample data is obtained by multiplying the individual probabilities.

Likelihood function:

$$L(\theta \mid x_1, \ldots, x_n) = \prod_{i=1}^{n} f(x_i \mid \theta)$$

Maximize L.


MLE Example

Coin toss five times: {H,H,H,H,T}

Assuming a perfect coin with H and T equally likely, the likelihood of this sequence is:

$$L = (0.5)^5 = 0.03125$$

However if the probability of a H is 0.8 then:

$$L = (0.8)^4 (0.2) = 0.08192$$


MLE Example (contd)

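General likelihood formula (with $x_i = 1$ for H and $x_i = 0$ for T):

$$L(p \mid x_1, \ldots, x_5) = \prod_{i=1}^{5} p^{x_i} (1 - p)^{1 - x_i} = p^{\sum x_i} (1 - p)^{5 - \sum x_i}$$

Estimate for p is then 4/5 = 0.8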
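
The coin example can be checked numerically. This sketch encodes H as 1 and T as 0 and finds the maximizing p by a simple grid search; the 0.01 step is an arbitrary choice for illustration, and in practice the maximum would usually be found analytically.

```python
tosses = [1, 1, 1, 1, 0]   # the sequence {H,H,H,H,T}, with H = 1 and T = 0

def likelihood(p, xs):
    """Product of the individual Bernoulli probabilities for the observed tosses."""
    result = 1.0
    for x in xs:
        result *= p if x == 1 else (1 - p)
    return result

print(likelihood(0.5, tosses))   # fair coin
print(likelihood(0.8, tosses))   # coin with P(H) = 0.8

# Grid search over candidate values of p (step 0.01 is an arbitrary choice).
candidates = [i / 100 for i in range(1, 100)]
p_hat = max(candidates, key=lambda p: likelihood(p, tosses))
print(p_hat)
```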


Expectation-Maximization (EM)

Solves estimation with incomplete data.

Obtain initial estimates for parameters.

Iteratively use estimates for missing data and continue until convergence.
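
A deliberately simplified sketch of this loop for estimating a mean when some values are missing. The observed values, the number of missing values, and the initial guess are all hypothetical; real EM implementations work with a full probabilistic model rather than a single mean.

```python
observed = [1, 5, 10, 4]   # hypothetical observed values
n_missing = 2              # hypothetical number of missing values
mean_estimate = 3.0        # arbitrary initial guess for the mean

for iteration in range(100):
    # Expectation step: fill each missing value with the current mean estimate.
    filled_total = sum(observed) + n_missing * mean_estimate
    # Maximization step: re-estimate the mean from the completed data.
    new_estimate = filled_total / (len(observed) + n_missing)
    converged = abs(new_estimate - mean_estimate) < 1e-9
    mean_estimate = new_estimate
    if converged:
        break

print(round(mean_estimate, 6))
```

In this toy setting the iteration settles at the mean of the observed values, since the filled-in values carry no information beyond the current estimate.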


EM Example


EM Algorithm


Bayes Theorem Example

Credit authorizations (hypotheses): $h_1$ = authorize purchase, $h_2$ = authorize after further identification, $h_3$ = do not authorize, $h_4$ = do not authorize but contact police

Assign twelve data values for all combinations of credit and income:

| Credit | Income 1 | Income 2 | Income 3 | Income 4 |
| --- | --- | --- | --- | --- |
| Excellent | $x_1$ | $x_2$ | $x_3$ | $x_4$ |
| Good | $x_5$ | $x_6$ | $x_7$ | $x_8$ |
| Bad | $x_9$ | $x_{10}$ | $x_{11}$ | $x_{12}$ |

From training data: $P(h_1)$ = 60%; $P(h_2)$ = 20%; $P(h_3)$ = 10%; $P(h_4)$ = 10%.


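Bayes Example (contd)

Training Data:

| ID | Income | Credit | Class | $x_i$ |
| --- | --- | --- | --- | --- |
| 1 | 4 | Excellent | $h_1$ | $x_4$ |
| 2 | 3 | Good | $h_1$ | $x_7$ |
| 3 | 2 | Excellent | $h_1$ | $x_2$ |
| 4 | 3 | Good | $h_1$ | $x_7$ |
| 5 | 4 | Good | $h_1$ | $x_8$ |
| 6 | 2 | Excellent | $h_1$ | $x_2$ |
| 7 | 3 | Bad | $h_2$ | $x_{11}$ |
| 8 | 2 | Bad | $h_2$ | $x_{10}$ |
| 9 | 3 | Bad | $h_3$ | $x_{11}$ |
| 10 | 1 | Bad | $h_4$ | $x_9$ |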


Bayes Example (contd)

Calculate $P(x_i \mid h_j)$ and $P(x_i)$

Ex: $P(x_7 \mid h_1) = 2/6$; $P(x_4 \mid h_1) = 1/6$; $P(x_2 \mid h_1) = 2/6$; $P(x_8 \mid h_1) = 1/6$; $P(x_i \mid h_1) = 0$ for all other $x_i$.

Predict the class for $x_4$:

Calculate $P(h_j \mid x_4)$ for all $h_j$.

Place $x_4$ in class with largest value.

Ex:

$$P(h_1 \mid x_4) = \frac{P(x_4 \mid h_1)\,P(h_1)}{P(x_4)} = \frac{(1/6)(0.6)}{0.1} = 1$$

$x_4$ in class $h_1$.
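
The calculation for $x_4$ can be reproduced from the ten training rows. This is a sketch, not the slide's own code: the tuple layout and the `posterior` helper are names introduced here, and the function assumes the queried combination appears at least once in the training data (otherwise $P(x)$ would be zero).

```python
from collections import Counter
from fractions import Fraction

# (income range, credit, class) rows taken from the training data table.
training = [
    (4, "Excellent", "h1"), (3, "Good", "h1"), (2, "Excellent", "h1"),
    (3, "Good", "h1"), (4, "Good", "h1"), (2, "Excellent", "h1"),
    (3, "Bad", "h2"), (2, "Bad", "h2"), (3, "Bad", "h3"), (1, "Bad", "h4"),
]

n = len(training)
class_counts = Counter(c for _, _, c in training)

def posterior(income, credit):
    """P(h | x) for every class h via Bayes' theorem, using exact fractions.

    Assumes the (income, credit) combination occurs in the training data.
    """
    x_count = sum(1 for i, cr, _ in training if (i, cr) == (income, credit))
    p_x = Fraction(x_count, n)
    result = {}
    for h, h_count in class_counts.items():
        joint = sum(1 for i, cr, c in training if (i, cr, c) == (income, credit, h))
        p_x_given_h = Fraction(joint, h_count)   # P(x | h)
        p_h = Fraction(h_count, n)               # prior P(h)
        result[h] = p_x_given_h * p_h / p_x
    return result

post = posterior(4, "Excellent")   # x4: income range 4, excellent credit
print(post)
print(max(post, key=post.get))     # class with the largest posterior
```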


Other Statistical Measures

Chi-Squared

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

O: observed value

E: expected value based on hypothesis.

Jackknife Estimate

Estimate of parameter is obtained by omitting one value from the set of observed values.

Regression

Predict future values based on past values

Linear Regression assumes linear relationship exists.

$$y = c_0 + c_1 x_1 + \cdots + c_n x_n$$

Find values to best fit the data

Correlation
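
A minimal sketch of regression and correlation for the single-predictor case, $y = c_0 + c_1 x_1$, using ordinary least squares and the Pearson correlation coefficient. The data points are hypothetical, and least squares is an assumed choice for "best fit" since the slide does not name a criterion.

```python
from math import sqrt

# Hypothetical observations of one predictor x and a response y.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]   # lies exactly on y = 1 + 2x

n = len(xs)
mean_x, mean_y = sum(xs) / n, sum(ys) / n
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
sxx = sum((x - mean_x) ** 2 for x in xs)
syy = sum((y - mean_y) ** 2 for y in ys)

c1 = sxy / sxx              # slope that minimizes the squared error
c0 = mean_y - c1 * mean_x   # intercept
r = sxy / sqrt(sxx * syy)   # Pearson correlation coefficient

print(c0, c1, r)
```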


Similarity Measures

Determine similarity between two objects.

Similarity characteristics:

Alternatively, distance measures measure how unlike or dissimilar objects are.


Similarity Measures


Distance Measures

Measure dissimilarity between objects
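
Two widely used distance measures, Euclidean and Manhattan, are sketched below as examples. The slide's own formulas did not survive extraction, so these are common choices rather than necessarily the ones shown in class; the points `p` and `q` are hypothetical.

```python
from math import sqrt

def euclidean(a, b):
    """Straight-line distance: square root of the summed squared differences."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """City-block distance: sum of the absolute differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

p, q = (1, 2), (4, 6)
print(euclidean(p, q), manhattan(p, q))
```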


Information Retrieval

Information Retrieval (IR): retrieving desired information from textual data.

Library Science

Digital Libraries

Web Search Engines

Traditionally keyword based

Sample query:

Find all documents about data mining.

DM: Similarity measures;

Mine text/Web data.


Information Retrieval (contd)

Similarity: measure of how close a query is to a document.

Documents which are close enough are retrieved.

Metrics:

$$\text{Precision} = \frac{|\text{Relevant and Retrieved}|}{|\text{Retrieved}|}$$

$$\text{Recall} = \frac{|\text{Relevant and Retrieved}|}{|\text{Relevant}|}$$
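
A small sketch that ties retrieval by similarity to the two metrics. The documents, the relevance judgments, the `THRESHOLD` value, and the use of Jaccard similarity over keyword sets are all assumptions made for illustration; the slides do not specify a particular similarity measure here.

```python
def jaccard(a, b):
    """Jaccard similarity of two keyword collections: |a & b| / |a | b|."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0

# Hypothetical collection, relevance judgments, and threshold.
documents = {
    "d1": "data mining finds patterns in data",
    "d2": "mining coal and iron ore",
    "d3": "data warehousing and data mining",
    "d4": "cooking recipes",
}
relevant = {"d1", "d3"}
query = "data mining"
THRESHOLD = 0.15   # documents at least this similar to the query are retrieved

q_terms = query.split()
retrieved = {
    doc_id for doc_id, text in documents.items()
    if jaccard(q_terms, text.split()) >= THRESHOLD
}

hits = relevant & retrieved
precision = len(hits) / len(retrieved) if retrieved else 0.0
recall = len(hits) / len(relevant) if relevant else 0.0
print(sorted(retrieved), precision, recall)
```

Here the keyword match on "mining" pulls in the irrelevant document `d2`, so recall is perfect while precision drops to 2/3; raising the threshold would trade in the other direction.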


IR Query Result Measures and Classification

IR Classification
