Operating System 5

Uploaded by Seham123123

CPU Scheduling

Basic Concepts

Scheduling Criteria

Scheduling Algorithms

Multiple-Processor Scheduling

Thread Scheduling

UNIX example

Basic Concepts

Maximum CPU utilization is obtained with multiprogramming.

CPU-I/O burst cycle: process execution consists of a cycle of CPU execution and I/O wait.

CPU bursts are generally much shorter than I/O waits.

[Figure: CPU-I/O burst cycle for Process A and Process B]

[Figure: Histogram of CPU-burst times]

Schedulers

A process migrates among several queues: device queue, job queue, ready queue.

The scheduler selects a process to run from these queues.

Long-term scheduler: loads a job into memory; runs infrequently.

Short-term scheduler: selects a ready process to run on the CPU; should be fast.

Medium-term scheduler: reduces the degree of multiprogramming or memory consumption.

CPU Scheduler

CPU scheduling decisions may take place when a process:

1. Switches from running to waiting state (by sleep).

2. Switches from running to ready state (by yield).

3. Switches from waiting to ready (by an interrupt).

4. Terminates (by exit).

Scheduling under 1 and 4 is nonpreemptive.

All other scheduling is preemptive.

Dispatcher

The dispatcher module gives control of the CPU to the process selected by the short-term scheduler; this involves:

switching context

switching to user mode

jumping to the proper location in the user program to restart that program

Dispatch latency: the time it takes for the dispatcher to stop one process and start another running.

Scheduling Criteria

CPU utilization: keep the CPU as busy as possible.

Throughput: number of processes that complete their execution per time unit.

Turnaround time (TAT): amount of time to execute a particular process, from submission to completion.

Waiting time: amount of time a process has been waiting in the ready queue.

Response time: amount of time from when a request was submitted until the first response is produced, not until the output is complete (for time-sharing environments).

The perfect CPU scheduler

Minimize latency: response or job completion time

Maximize throughput: Maximize jobs / time.

Maximize utilization: keep I/O devices busy.

Recurring theme with OS scheduling

Fairness: everyone makes progress, no one starves

Scheduling Algorithms: FCFS

First-Come, First-Served (FCFS), also known as FIFO

Jobs are scheduled in order of arrival

Non-preemptive

Problems:

Average waiting time depends on arrival order

Troublesome for time-sharing systems

Convoy effect: short processes wait behind a long process

Advantage: really simple!

First-Come, First-Served Scheduling

Example:

| Process | Burst Time |
|---------|------------|
| P1      | 24         |
| P2      | 3          |
| P3      | 3          |

Suppose that the processes arrive in the order: P1, P2, P3.

Gantt chart: P1 (0-24), P2 (24-27), P3 (27-30)

Waiting time for P1 = 0; P2 = 24; P3 = 27

Average waiting time: (0 + 24 + 27)/3 = 17

Suppose that the processes arrive in the order: P2, P3, P1.

Gantt chart: P2 (0-3), P3 (3-6), P1 (6-30)

Waiting time for P1 = 6; P2 = 0; P3 = 3

Average waiting time: (6 + 0 + 3)/3 = 3
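The FCFS arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `fcfs_waiting_times` is made up for illustration, and it assumes all jobs arrive at time 0 and run in list order.

```python
def fcfs_waiting_times(bursts):
    """Waiting time of each job when all jobs arrive at time 0
    and are served strictly in list order (FCFS)."""
    waits = []
    clock = 0
    for burst in bursts:
        waits.append(clock)   # a job waits until every earlier job has finished
        clock += burst
    return waits


# Arrival order P1, P2, P3 (bursts 24, 3, 3)
order_a = fcfs_waiting_times([24, 3, 3])
# Arrival order P2, P3, P1 (bursts 3, 3, 24)
order_b = fcfs_waiting_times([3, 3, 24])

print(order_a, sum(order_a) / len(order_a))   # [0, 24, 27] 17.0
print(order_b, sum(order_b) / len(order_b))   # [0, 3, 6] 3.0
```

The same three jobs give an average wait of 17 or 3 depending only on arrival order, which is the convoy effect in numbers.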

Shortest-Job-First (SJF) Scheduling

Associate with each process the length of its next CPU burst. Use these lengths to schedule the process with the shortest time.

Two schemes:

Nonpreemptive: once the CPU is given to a process, it cannot be preempted until it completes its CPU burst.

Preemptive: if a new process arrives with a CPU burst length less than the remaining time of the currently executing process, preempt. This scheme is known as Shortest-Remaining-Time-First (SRTF).

SJF is optimal: it gives the minimum average waiting time for a given set of processes.

Shortest Job First Scheduling

Example:

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1      | 0            | 7          |
| P2      | 2            | 4          |
| P3      | 4            | 1          |
| P4      | 5            | 4          |

Non-preemptive SJF

Gantt chart: P1 (0-7), P3 (7-8), P2 (8-12), P4 (12-16)

P1's waiting time = 0

P2's waiting time = 6

P3's waiting time = 3

P4's waiting time = 7

Average waiting time = (0 + 6 + 3 + 7)/4 = 4
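A small simulation can reproduce the non-preemptive SJF numbers. This is a sketch under stated assumptions: `sjf_nonpreemptive` is an illustrative name, burst lengths are known in advance, and ties are broken by earlier arrival (the slides do not state a tie-break rule).

```python
def sjf_nonpreemptive(procs):
    """procs maps name -> (arrival, burst). Returns name -> waiting time.
    Among the jobs that have arrived, the shortest burst runs to completion."""
    remaining = dict(procs)
    clock = 0
    waits = {}
    while remaining:
        ready = [n for n, (arrival, _) in remaining.items() if arrival <= clock]
        if not ready:
            # CPU is idle: jump to the next arrival
            clock = min(arrival for arrival, _ in remaining.values())
            continue
        # shortest burst first; assumed tie-break: earlier arrival
        name = min(ready, key=lambda n: (remaining[n][1], remaining[n][0]))
        arrival, burst = remaining.pop(name)
        waits[name] = clock - arrival
        clock += burst
    return waits


procs = {"P1": (0, 7), "P2": (2, 4), "P3": (4, 1), "P4": (5, 4)}
waits = sjf_nonpreemptive(procs)
print(waits)                             # {'P1': 0, 'P3': 3, 'P2': 6, 'P4': 7}
print(sum(waits.values()) / len(waits))  # 4.0
```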

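Shortest Job First Scheduling (contd.)

Example:

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1      | 0            | 7          |
| P2      | 2            | 4          |
| P3      | 4            | 1          |
| P4      | 5            | 4          |

Preemptive SJF

Gantt chart: P1 (0-2), P2 (2-4), P3 (4-5), P2 (5-7), P4 (7-11), P1 (11-16)

When P1 is preempted at time 2 it has 5 time units remaining; when P2 is preempted at time 4 it has 2 remaining.

P1's waiting time = 9

P2's waiting time = 1

P3's waiting time = 0

P4's waiting time = 2

Average waiting time = (9 + 1 + 0 + 2)/4 = 3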
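The preemptive variant (SRTF) can be simulated one time unit at a time. The sketch below assumes integer arrival and burst times, and assumes ties go to the job that arrived earlier; the function name `srtf` is illustrative.

```python
def srtf(procs):
    """procs maps name -> (arrival, burst), all integers.
    Returns name -> waiting time under Shortest-Remaining-Time-First."""
    arrival = {n: a for n, (a, _) in procs.items()}
    remaining = {n: b for n, (_, b) in procs.items()}
    finish = {}
    clock = 0
    while remaining:
        ready = [n for n in remaining if arrival[n] <= clock]
        if not ready:
            clock += 1          # CPU idle for this tick
            continue
        # least remaining time wins; assumed tie-break: earlier arrival
        name = min(ready, key=lambda n: (remaining[n], arrival[n]))
        remaining[name] -= 1    # run the chosen job for one time unit
        clock += 1
        if remaining[name] == 0:
            del remaining[name]
            finish[name] = clock
    # waiting time = turnaround time - burst time
    return {n: finish[n] - arrival[n] - procs[n][1] for n in procs}


procs = {"P1": (0, 7), "P2": (2, 4), "P3": (4, 1), "P4": (5, 4)}
waits = srtf(procs)
print(waits)                             # {'P1': 9, 'P2': 1, 'P3': 0, 'P4': 2}
print(sum(waits.values()) / len(waits))  # 3.0
```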

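Example (preemptive SJF):

| Process | Arrival Time | Burst Time |
|---------|--------------|------------|
| P1      | 0            | 5          |
| P2      | 2            | 3          |
| P3      | 4            | 1          |
| P4      | 5            | 3          |

At time 2, P2 arrives: P1's remaining burst time = 3 and P2's burst time = 3, so P1 keeps the CPU.

At time 4, P3 arrives: P1's remaining burst time = 1 and P3's burst time = 1, so P1 keeps the CPU.

At time 5, P1 completes; P3, P2 and P4 remain and run in that order.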

Shortest Job First Scheduling (contd.)

Optimal scheduling

SJF is optimal for minimizing queueing time, but it cannot be implemented exactly: there is no accurate way to know the length of the next CPU burst.

Instead, the scheduler tries to predict the next burst based on previous history.

Predicting the time the process will use on its next schedule:

t(n+1) = w * t(n) + (1 - w) * T(n)

Here: t(n+1) is the predicted time of the next burst.

t(n) is the time of the current burst.

T(n) is the average of all previous bursts.

w is a weighting factor (0 <= w <= 1) emphasizing the current burst or the previous bursts.
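The prediction formula can be tried out in a few lines. This sketch makes two assumptions that the slides do not state: T(n) is kept as a running weighted average (the previous prediction) rather than a recomputed arithmetic mean, and the first prediction is seeded with a hypothetical `initial_estimate`.

```python
def predict_next_burst(bursts, w=0.5, initial_estimate=10.0):
    """Apply t(n+1) = w * t(n) + (1 - w) * T(n) after each observed burst.
    T(n) is carried forward as the previous prediction (an assumption)."""
    estimate = initial_estimate
    predictions = []
    for actual in bursts:
        estimate = w * actual + (1 - w) * estimate
        predictions.append(estimate)
    return predictions


observed = [6, 4, 6, 4, 13, 13, 13]
print(predict_next_burst(observed))
# [8.0, 6.0, 6.0, 5.0, 9.0, 11.0, 12.0]
```

With w = 0.5 the estimate lags behind the jump from 4 to 13 and takes several bursts to catch up; a larger w would follow recent behavior more closely.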

Priority Scheduling

A priority number (integer) is associated with each process.

The CPU is allocated to the process with the highest priority (smallest integer = highest priority in UNIX, but lowest in Java).

Priority scheduling can be preemptive or non-preemptive.

SJF is priority scheduling where the priority is the predicted next CPU burst time.

Problem: starvation. Low-priority processes may never execute.

Solution: aging. As time progresses, increase the priority of the process.
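To see how aging counters starvation, here is a minimal non-preemptive sketch. The aging rule is an assumption made for illustration (effective priority = priority - `age_rate` * time waited, smaller number = higher priority), as are the function name `priority_schedule` and the job set.

```python
def priority_schedule(jobs, age_rate=0):
    """jobs: list of (name, arrival, burst, priority); smaller = higher priority.
    Non-preemptive. Returns the order in which jobs are dispatched.
    Assumed aging rule: effective priority = priority - age_rate * time waited."""
    pending = list(jobs)
    clock = 0
    order = []
    while pending:
        ready = [j for j in pending if j[1] <= clock]
        if not ready:
            clock = min(j[1] for j in pending)   # idle until the next arrival
            continue
        chosen = min(ready, key=lambda j: (j[3] - age_rate * (clock - j[1]), j[1]))
        pending.remove(chosen)
        order.append(chosen[0])
        clock += chosen[2]
    return order


# One low-priority job L and a steady stream of high-priority jobs H1..H5
jobs = [("L", 0, 2, 5)] + [(f"H{i}", 2 * (i - 1), 2, 2) for i in range(1, 6)]

print(priority_schedule(jobs))              # ['H1', 'H2', 'H3', 'H4', 'H5', 'L']
print(priority_schedule(jobs, age_rate=1))  # ['H1', 'H2', 'L', 'H3', 'H4', 'H5']
```

Without aging, L runs only after the stream of high-priority jobs ends; with aging, its effective priority improves while it waits and it is dispatched third.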

Round Robin (RR)

Each process gets a small unit of CPU time (time quantum), usually 10-100 milliseconds. After this time has elapsed, the process is preempted and added to the end of the ready queue.

If there are n processes in the ready queue and the time quantum is q, then each process gets 1/n of the CPU time in chunks of at most q time units at once. No process waits more than (n-1)q time units.

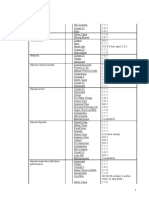

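Round Robin Scheduling

Example with time quantum = 20:

| Process | Burst Time | Wait Time          |
|---------|------------|--------------------|
| P1      | 53         | 57 + 24 = 81       |
| P2      | 17         | 20                 |
| P3      | 68         | 37 + 40 + 17 = 94  |
| P4      | 24         | 57 + 40 = 97       |

Gantt chart:

| Process | Remaining burst at start | Start | End |
|---------|--------------------------|-------|-----|
| P1      | 53                       | 0     | 20  |
| P2      | 17                       | 20    | 37  |
| P3      | 68                       | 37    | 57  |
| P4      | 24                       | 57    | 77  |
| P1      | 33                       | 77    | 97  |
| P3      | 48                       | 97    | 117 |
| P4      | 4                        | 117   | 121 |
| P1      | 13                       | 121   | 134 |
| P3      | 28                       | 134   | 154 |
| P3      | 8                        | 154   | 162 |

Average wait time = (81 + 20 + 94 + 97)/4 = 73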
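The round-robin example can be replayed with a queue. This is a sketch, assuming all four processes arrive at time 0 in the order P1..P4 and that context switches cost nothing; `round_robin` is an illustrative name.

```python
from collections import deque


def round_robin(bursts, quantum):
    """bursts maps name -> burst time; all jobs arrive at time 0 in dict order.
    Returns (schedule, waits): schedule is a list of (name, start, end) slices,
    waits maps name -> total time spent in the ready queue."""
    queue = deque(bursts.items())
    clock = 0
    schedule = []
    finish = {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        schedule.append((name, clock, clock + run))
        clock += run
        if remaining > run:
            queue.append((name, remaining - run))   # preempted: back of the queue
        else:
            finish[name] = clock
    # with arrival at time 0, waiting time = finish time - burst time
    waits = {name: finish[name] - bursts[name] for name in bursts}
    return schedule, waits


schedule, waits = round_robin({"P1": 53, "P2": 17, "P3": 68, "P4": 24}, quantum=20)
print(waits)                             # {'P1': 81, 'P2': 20, 'P3': 94, 'P4': 97}
print(sum(waits.values()) / len(waits))  # 73.0
```

Rerunning with a very large quantum (for example 1000) gives the FCFS schedule, since no job is ever preempted.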

Typically, RR gives higher average turnaround than SJF, but better response.

Performance:

q large: RR behaves like FCFS.

q small: more context switches. q must be large with respect to the context-switch time, otherwise the overhead is too high.

Turnaround Time Varies With The Time Quantum

TAT can be improved if most processes finish their next CPU burst in a single time quantum.

Multilevel Queue

The ready queue is partitioned into separate queues, e.g.:

foreground (interactive)

background (batch)

Each queue has its own scheduling algorithm, e.g.:

foreground: RR

background: FCFS

Scheduling must also be done between the queues:

Fixed-priority scheduling (i.e., serve all from foreground, then from background). Possibility of starvation.

Time slice: each queue gets a certain amount of CPU time which it can schedule amongst its processes, e.g.:

80% to foreground in RR

20% to background in FCFS

[Figure: Multilevel Queue Scheduling]

Multi-level Feedback Queues

Implement multiple ready queues

Different queues may be scheduled using different algorithms

Just like multilevel queue scheduling, but assignments are not static

Jobs move from queue to queue based on feedback

Feedback = the behavior of the job, e.g., does it require the full quantum for computation, or does it perform frequent I/O?

Need to select parameters for:

Number of queues

Scheduling algorithm within each queue

When to upgrade and downgrade a job

Example of Multilevel Feedback Queue

Three queues:

Q0: RR with time quantum 8 milliseconds

Q1: RR with time quantum 16 milliseconds

Q2: FCFS

Scheduling:

A new job enters queue Q0, which is served FCFS. When it gains the CPU, the job receives 8 milliseconds (RR). If it does not finish in 8 milliseconds, the job is moved to queue Q1.

At Q1 the job is again served FCFS and receives 16 additional milliseconds (RR). If it still does not complete, it is preempted and moved to queue Q2.

At Q2 the job is served FCFS.

[Figure: Multilevel Feedback Queues]
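The three-queue example can be sketched as a short simulation. The assumptions here are not from the slides: all jobs arrive at time 0 (so no new arrival ever preempts a lower queue), jobs never block for I/O, and jobs are only demoted, never promoted. The name `mlfq` and the job set are illustrative.

```python
from collections import deque


def mlfq(bursts, quanta=(8, 16)):
    """bursts maps name -> CPU time needed; all jobs arrive at time 0.
    Queues 0..len(quanta)-1 are RR with the given quanta; the last queue is FCFS.
    Returns a list of (name, queue_level, time_when_slice_ends)."""
    levels = [deque() for _ in range(len(quanta) + 1)]
    for name, burst in bursts.items():
        levels[0].append((name, burst))          # new jobs enter Q0
    clock = 0
    trace = []
    while any(levels):
        # always serve the highest-priority non-empty queue
        level = next(i for i, q in enumerate(levels) if q)
        name, remaining = levels[level].popleft()
        if level == len(quanta):
            run = remaining                      # last queue: run to completion
        else:
            run = min(quanta[level], remaining)
        clock += run
        trace.append((name, level, clock))
        if remaining > run:
            # used the full quantum without finishing: demote one level
            levels[level + 1].append((name, remaining - run))
    return trace


for entry in mlfq({"A": 5, "B": 20, "C": 40}):
    print(entry)
# ('A', 0, 5)
# ('B', 0, 13)
# ('C', 0, 21)
# ('B', 1, 33)
# ('C', 1, 49)
# ('C', 2, 65)
```

The short job A finishes inside Q0, B needs one visit to Q1, and the long job C sinks to the FCFS queue, which is the intended effect: CPU-bound jobs drift down while short jobs get quick service.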

Multiple-Processor Scheduling

CPU scheduling is more complex when multiple CPUs are available.

Different rules apply for homogeneous or heterogeneous processors.

Load sharing: the distribution of work such that all processors have an equal amount to do.

Asymmetric multiprocessing: only one processor accesses the system data structures, alleviating the need for data sharing.

Symmetric multiprocessing (SMP): each processor is self-scheduling.

Each processor can schedule from a common ready queue, or each one can use a separate ready queue.

Thread Scheduling

On operating systems that support threads, it is kernel-level threads (not processes) that are scheduled by the operating system.

Local scheduling (process-contention scope, PCS): how the threads library decides which thread to put onto an available LWP.

PTHREAD_SCOPE_PROCESS

Global scheduling (system-contention scope, SCS): how the kernel decides which kernel thread to run next.

PTHREAD_SCOPE_SYSTEM

Linux Scheduling

Two algorithms: time-sharing and real-time

Time-sharing

Prioritized, credit-based: the process with the most credits is scheduled next

Credit is subtracted when a timer interrupt occurs

When credit = 0, another process is chosen

When all processes have credit = 0, recrediting occurs, based on factors including priority and history

Real-time

Defined by POSIX.1b

Real-time tasks are assigned static priorities. All other tasks have dynamic (changeable) priorities.

[Figure: The Relationship Between Priorities and Time-slice Length]

[Figure: List of Tasks Indexed According to Priorities]

Conclusion

We've looked at a number of different scheduling algorithms.

Which one works best is application dependent.

A general-purpose OS will use priority-based, round-robin, preemptive scheduling.

A real-time OS will use priority scheduling with no preemption.

References

Some slides from:

Textbook slides

Kelvin Sung - University of Washington, Bothell

Jerry Breecher - WPI

Einar Vollset - Cornell University
