
Web Question Answering - Presentation

Uploaded by George Karlos


Is More Always Better?

Dumais et al., Microsoft Research

A presentation by George Karlos

What is a Question Answering System?

Combines information retrieval and natural language processing to answer questions posed in natural language

Retrieves answers, not documents or passages

TREC Question Answering Track

Fact-based, short-answer questions

Who killed Abraham Lincoln? How tall is Mount Everest?

Dumais et al.'s System

Focuses on the same type of questions, but the techniques are more broadly applicable

Other QA groups:

Variety of linguistic resources (part-of-speech tagging, syntactic parsing, semantic relations, named entity extraction, dictionaries, WordNet, etc.)

Dumais et al.:

More data for higher accuracy: use the Web as the source

Dumais et al.'s System:

Web redundancy!

Multiple, differently phrased occurrences of the answer

Small information source: answers are hard to find

Likely only one answer occurrence exists, with complex relations between question and answer that require NLP: anaphor resolution, synonymy, alternate syntactic formulations, indirect answers

Web: answers are more likely to appear in a simple relation to the question, so the system is less likely to face the difficulties that NLP systems must handle

Enables Simple Query Rewrites

More sources state the answer in a simple form closely related to the question, e.g. Who killed Abraham Lincoln?

1. ______ killed Abraham Lincoln.
2. Abraham Lincoln was killed by ______
etc.

Facilitates Answer Mining

Improves efficacy even when no obvious answer strings are found

e.g. How many times did Bjorn Borg win Wimbledon?

1. Bjorn Borg <text> <text> Wimbledon <text> <text> 5 <text>
2. Wimbledon <text> <text> <text> Bjorn Borg <text> 37 <text>
3. <text> Bjorn Borg <text> <text> 5 <text> <text> Wimbledon
4. 5 <text> <text> Wimbledon <text> <text> Bjorn Borg

5 is the most frequent number, so it is most likely the correct answer
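A minimal sketch of this frequency-based answer mining, assuming that the numbers in the snippets are picked out with a simple regular expression and counted with `collections.Counter` (the slides do not say how numbers are extracted). The function name `most_frequent_number` is hypothetical.

```python
import re
from collections import Counter

# The Bjorn Borg snippets, with filler text shown as <text>.
snippets = [
    "Bjorn Borg <text> <text> Wimbledon <text> <text> 5 <text>",
    "Wimbledon <text> <text> <text> Bjorn Borg <text> 37 <text>",
    "<text> Bjorn Borg <text> <text> 5 <text> <text> Wimbledon",
    "5 <text> <text> Wimbledon <text> <text> Bjorn Borg",
]

def most_frequent_number(texts):
    """Return (number, count) for the number occurring most often in texts."""
    counts = Counter(
        token for text in texts for token in re.findall(r"\b\d+\b", text)
    )
    return counts.most_common(1)[0]

print(most_frequent_number(snippets))  # ('5', 3)
```

No single snippet has to state the answer explicitly; agreement across snippets is what singles out 5 over 37.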

Four main components:

1. Rewrite Query
2. N-gram Mining
3. N-gram Filtering/Reweighting
4. N-gram Tiling

Rewrite Query

Rewrite the query into the form in which a possible answer might be stated

e.g. When was Abraham Lincoln born?

Abraham Lincoln was born on <DATE>

7 categories of questions, each with a different set of rewrite rules (1-5 rewrite types)

Rewrite Query

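Output: [string, L/R/-, weight]

string: the reformulated search query; L/R/-: the position where the answer is expected relative to the string (left, right, or anywhere); weight: how much answers found with this rewrite are preferred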

High-precision query: "Abraham Lincoln was born on"; answers it retrieves are more likely to be correct

Lower-precision query: Abraham AND Lincoln AND born
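The [string, L/R/-, weight] output can be sketched as a small record type. This is an illustration only: the `Rewrite` class, the `rewrites_for_born` rule and the weights 5 and 1 are hypothetical, chosen just so the high-precision phrase outranks the AND query.

```python
from dataclasses import dataclass
from typing import Literal

@dataclass(frozen=True)
class Rewrite:
    string: str                        # reformulated search query
    position: Literal["L", "R", "-"]   # where the answer is expected relative to string
    weight: int                        # preference for answers found with this rewrite

def rewrites_for_born(person: str) -> list[Rewrite]:
    """Hypothetical rule set for 'When was <person> born?' questions."""
    and_query = " AND ".join(person.split() + ["born"])
    return [
        # High precision: exact phrase, answer expected to its right.
        Rewrite(f'"{person} was born on"', "R", 5),
        # Low precision: AND of the terms, answer may appear anywhere.
        Rewrite(and_query, "-", 1),
    ]

for rewrite in rewrites_for_born("Abraham Lincoln"):
    print(rewrite)
```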

Rewrite Query

Simple string manipulations

Where is the Louvre Museum located?

The Louvre Museum is located in

1. More rewrites: the proper rewrite is guaranteed to be among them (W1 IS W2 W3 ... Wn, W1 W2 IS W3 ... Wn, etc.)

2. Using a parser can result in the proper rewrite not being found
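A minimal sketch of the "move IS to every position" string manipulation, assuming the question has the shape `<wh-word> is W1 ... Wn?`. The function name `move_is_rewrites` is hypothetical; most outputs are nonsensical, but one of them is the proper rewrite, and no parser is involved.

```python
def move_is_rewrites(question: str) -> list[str]:
    """Insert 'is' at every position among the words after '<wh-word> is'."""
    _, verb, *rest = question.rstrip("?").split()
    if verb.lower() != "is":
        raise ValueError("expected a question of the form '<wh-word> is ...'")
    # n remaining words give n + 1 insertion points, hence n + 1 rewrites.
    return [" ".join(rest[:i] + ["is"] + rest[i:]) for i in range(len(rest) + 1)]

for rewrite in move_is_rewrites("Where is the Louvre Museum located?"):
    print(rewrite)
```

For the Louvre question this yields five candidates, from "is the Louvre Museum located" to "the Louvre Museum located is", including "the Louvre Museum is located".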

Final rewrite: AND of the non-stop words (Louvre AND Museum AND located)

Stop words (in, the, etc.) are kept in the other rewrites because they are important indicators of likely answers
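The fallback AND rewrite can be sketched as a stop-word filter. The `STOP_WORDS` set and the `and_rewrite` function are hypothetical; the list deliberately includes question words and auxiliaries so that only content words survive.

```python
# Illustrative stop-word list (an assumption; the slides do not give one).
STOP_WORDS = {"where", "when", "who", "how", "is", "was", "the", "in", "a", "an", "of"}

def and_rewrite(question: str) -> str:
    """Join the non-stop words of a question with AND."""
    words = question.rstrip("?").split()
    content = [w for w in words if w.lower() not in STOP_WORDS]
    return " AND ".join(content)

print(and_rewrite("Where is the Louvre Museum located?"))
print(and_rewrite("When was Abraham Lincoln born?"))
```

Dropping stop words only in this last, lowest-precision rewrite keeps them available as answer cues in the phrase rewrites.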

N-Gram Mining

N-Grams

Contiguous sequences of n words (or letters)

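1. 1-, 2- and 3-grams are extracted from the summaries returned by the search engine
2. Each n-gram is scored based on the weight of the query rewrite that retrieved it
3. Scores are summed across the summaries that contain the n-gram
4. The final score is based on the rewrite weights and the number of unique summaries in which the n-gram occurred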
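A minimal sketch of the mining and scoring steps, assuming each summary arrives paired with the weight of the rewrite that retrieved it, and that an n-gram is credited at most once per summary. The function names and the two example summaries are hypothetical.

```python
from collections import defaultdict

def ngrams(tokens, n):
    """All contiguous n-word sequences in tokens, joined with spaces."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def mine_ngrams(summaries, max_n=3):
    """summaries: list of (summary_text, rewrite_weight) pairs.

    Every distinct 1- to max_n-gram in a summary receives that summary's
    rewrite weight; weights are summed over the summaries containing it.
    """
    scores = defaultdict(float)
    for text, weight in summaries:
        tokens = text.lower().split()
        seen = set()
        for n in range(1, max_n + 1):
            seen.update(ngrams(tokens, n))
        for gram in seen:  # once per summary, so unique summaries drive the total
            scores[gram] += weight
    return dict(scores)

summaries = [  # hypothetical summaries and rewrite weights
    ("John Wilkes Booth killed Abraham Lincoln", 5),
    ("Lincoln was shot by John Wilkes Booth", 2),
]
scores = mine_ngrams(summaries)
print(scores["john wilkes booth"])  # 7.0
```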

N-Gram Filtering/Reweighting

The query is assigned one of seven question types (who, what, how many, etc.), which determines what filters the system applies

Filters can boost the score of a potential answer or remove strings from the candidate list

Candidate answers are analyzed and rescored
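As an illustration of one filter, a sketch for "how many" questions: it removes candidates that contain no digit and boosts the rest. The function `filter_how_many` and the boost factor 2.0 are hypothetical; the slides only say that filters boost or remove candidates.

```python
import re

def filter_how_many(candidates: dict[str, float], boost: float = 2.0) -> dict[str, float]:
    """Hypothetical 'how many' filter: keep numeric candidates, boost their scores."""
    return {
        gram: score * boost
        for gram, score in candidates.items()
        if re.search(r"\d", gram)  # drop strings with no number in them
    }

candidates = {"5": 3.0, "bjorn borg": 4.0, "37": 1.0, "5 titles": 1.5}
print(filter_how_many(candidates))  # {'5': 6.0, '37': 2.0, '5 titles': 3.0}
```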

N-Gram Tiling

Answer tiling algorithm

Merges similar answers and assembles longer answers out of answer fragments

e.g. A B C and B C D → A B C D. The algorithm stops when no n-grams can be further tiled.
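A simplified greedy sketch of answer tiling, assuming two rules: a candidate contained in another is absorbed, and two candidates whose ends overlap are joined. The merged answer keeps the higher of the two scores, which is an assumption. The names `tile_pair`, `find_tileable` and `tile_answers` are hypothetical.

```python
def tile_pair(a, b):
    """Tile two token tuples; return the merged tuple, or None if they don't tile."""
    # Containment: the shorter n-gram is absorbed into the longer one.
    for longer, shorter in ((a, b), (b, a)):
        k = len(shorter)
        if any(longer[i:i + k] == shorter for i in range(len(longer) - k + 1)):
            return longer
    # Overlap: the longest suffix of one equal to a prefix of the other.
    for x, y in ((a, b), (b, a)):
        for k in range(min(len(x), len(y)) - 1, 0, -1):
            if x[-k:] == y[:k]:
                return x + y[k:]
    return None

def find_tileable(ranked):
    """First pair (in score order) that can be tiled, with its tiling."""
    for i, a in enumerate(ranked):
        for b in ranked[i + 1:]:
            tiled = tile_pair(a, b)
            if tiled is not None:
                return a, b, tiled
    return None

def tile_answers(candidates):
    """Greedily tile candidates until no pair can be tiled further."""
    answers = {tuple(text.split()): score for text, score in candidates.items()}
    while (found := find_tileable(sorted(answers, key=answers.get, reverse=True))):
        a, b, tiled = found
        score = max(answers.pop(a), answers.pop(b))
        answers[tiled] = max(score, answers.get(tiled, score))
    return {" ".join(tokens): score for tokens, score in answers.items()}

print(tile_answers({"A B C": 3.0, "B C D": 2.0}))  # {'A B C D': 3.0}
```

Each merge removes at least one candidate, so the loop always terminates.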

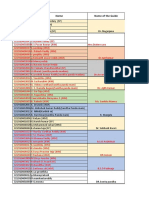

TREC vs. Web

AND Rewrites Only, Top 100 (Web1: Google; Web2: MSNSearch)

| Source | MRR | NumCorrect | PropCorrect |
|---|---|---|---|
| Web1 | 0.450 | 281 | 0.562 |
| TREC, Contiguous Snippet | 0.186 | 117 | 0.234 |
| TREC, Non-Contiguous Snippet | 0.187 | 128 | 0.256 |
| Web2, Contiguous Snippet | 0.355 | 227 | 0.454 |
| Web2, Non-Contiguous Snippet | 0.383 | 243 | 0.486 |

Lack of redundancy in TREC!
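A sketch of how the table's metrics can be computed, assuming the usual TREC convention that up to 5 ranked answers are judged per question and that MRR averages 1/rank of the first correct answer (0 if none). The per-question ranks below are hypothetical. The PropCorrect column equals NumCorrect / 500 in every row (e.g. 281 / 500 = 0.562), which suggests a 500-question set.

```python
def mean_reciprocal_rank(first_correct_ranks, max_rank=5):
    """first_correct_ranks: per question, the 1-based rank of the first correct
    answer, or None if no correct answer was returned."""
    if not first_correct_ranks:
        return 0.0
    total = sum(
        1.0 / r for r in first_correct_ranks if r is not None and r <= max_rank
    )
    return total / len(first_correct_ranks)

def prop_correct(first_correct_ranks, max_rank=5):
    """Fraction of questions with a correct answer within the top max_rank."""
    hits = sum(1 for r in first_correct_ranks if r is not None and r <= max_rank)
    return hits / len(first_correct_ranks)

ranks = [1, 3, None, 2]             # hypothetical ranks for four questions
print(mean_reciprocal_rank(ranks))  # (1 + 1/3 + 0 + 1/2) / 4, about 0.458
print(prop_correct(ranks))          # 0.75
print(281 / 500)                    # 0.562, the Web1 PropCorrect
```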

TREC & Web (Google) Combined

Larger source. Better?

TREC: 0.262 MRR

Web: 0.416 MRR

Combined QA results

MRR: 0.433, NumCorrect: 283 (a 4.1% MRR improvement over the Web alone)
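The 4.1% figure is the relative MRR gain of the combined system over the Web-only run:

```latex
\frac{\mathrm{MRR}_{\text{combined}} - \mathrm{MRR}_{\text{Web}}}{\mathrm{MRR}_{\text{Web}}}
  = \frac{0.433 - 0.416}{0.416}
  = \frac{0.017}{0.416}
  \approx 0.041 = 4.1\%
```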

QA Accuracy and number of snippets

More is better up to a limit

Smaller collections: lower accuracy

Snippet quality less important
