Case Study

Uploaded by Aastha Vyas

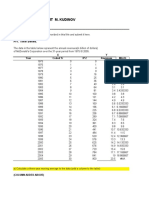

AASTHA VYAS-12001 ABHISHEK C PANDURANGI-12002

A survey of 1,200 employees found that 25% of US workers believed that they could accomplish at least 50% more on the job each day.

Barriers to Productivity

- Not supervising the work: 37%
- Not involving in decision making: 34%
- Not rewarding for performance: 29%
- No promotion opportunities: 29%
- No training: 28%
- Not hiring the right people: 26%

A survey of 231 HR specialists reported the following for Compensation and Benefits:

- Managing performance: 12.12%
- Team-based pay: 10.39%
- Competency-based pay: 9.96%

ECS/Watson Wyatt Data Services studied 1,500 companies.

- 75% use variable pay for middle managers
- Two-thirds was given as annual bonus
- 26% of supervisors were given cash rewards like bonus

Edward Perlin Associates surveyed 63 companies with data-processing professionals. Average annual compensation, including salary and bonus, for:

- Security Managers: $79,900
- Security Heads: $94,800
- Security Specialists: $42,900

A survey of 4,800 members of the Institute of Management Accountants reported the following.

Average annual salary in the 30-39 year age bracket:

- $57,397 for certified management accountants
- $47,332 for uncertified management accountants

Average annual salary in the 19-29 year age bracket:

- $40,185 for certified management accountants
- $31,008 for uncertified management accountants

A survey of 1,935 internet workers by the Association of Internet Professionals reported average annual salaries for:

- Online Services Manager: $59,781
- Software Development Person: $64,024
- Media Production Person: $42,455

How can the national average salary for computer security positions be estimated using sample data? How much error is involved in such an estimation? How much confidence do we have in this estimation?

- n = 63
- Assumed standard deviation = $5,500
- Mean = $79,900
- Confidence level = 95%

Thus the confidence interval is ($78,542, $81,258). The point estimate of $79,900 would therefore have an error of about $1,358.
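The interval above can be reproduced with a short calculation. The following is a minimal sketch, assuming the $5,500 standard deviation is treated as a known population value so that a z-based interval applies; the function name `mean_confidence_interval` is hypothetical and used only for illustration.

```python
import math
from statistics import NormalDist


def mean_confidence_interval(mean, sigma, n, confidence=0.95):
    """z-based interval for a population mean, assuming sigma is known.

    Returns (lower bound, upper bound, margin of error).
    """
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sigma / math.sqrt(n)
    return mean - margin, mean + margin, margin


# Figures from the security manager survey: n = 63, mean = $79,900,
# assumed standard deviation = $5,500.
low, high, margin = mean_confidence_interval(79_900, 5_500, 63)
print(f"margin of error: ${margin:,.0f}")
print(f"95% interval: (${low:,.0f}, ${high:,.0f})")
```

The margin of error is the critical value times the standard error, sigma divided by the square root of n, which is why a larger sample or a smaller standard deviation narrows the interval.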

How does a business researcher use sample information to estimate population parameters like the mean national average annual salary for accountants? For internet workers? What is the error in such a process? Are we certain of the results?

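A survey is conducted to estimate the population parameters, and the sample mean is used as a point estimate of the mean national average salary. A point estimate is only as good as the sample it comes from: if different random samples are taken, the estimate will vary from sample to sample. An interval estimate is therefore preferred over a point estimate, because it states both the likely error and the level of confidence.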

One survey reported that 37% of the workers felt that companies are not supervising enough. This figure came from a survey of 1200 employees and is only a sample statistic. Can we say from this that 37% of all employees in the US feel the same way? Why or why not? Can we use the 37% as an estimate for the population parameter? If we do, how much error is there, and how much confidence do we have in the final results?

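- n = 1,200
- Confidence level = 95%
- p = 0.37, 1 - p = 0.63
- Confidence interval = (0.343, 0.397)
- Error = 0.027, or 2.7%

The 37% cannot be assumed to hold exactly for the whole US population; it is an estimate that carries this margin of error.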
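The proportion interval above can be checked in the same way. This is a minimal sketch, assuming the usual normal approximation for a sample proportion; the function name `proportion_confidence_interval` is hypothetical.

```python
import math
from statistics import NormalDist


def proportion_confidence_interval(p_hat, n, confidence=0.95):
    """Normal-approximation interval for a population proportion.

    Returns (lower bound, upper bound, margin of error).
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Standard error of a sample proportion.
    std_error = math.sqrt(p_hat * (1 - p_hat) / n)
    margin = z * std_error
    return p_hat - margin, p_hat + margin, margin


# Figures from the productivity survey: n = 1,200, p = 0.37.
low, high, margin = proportion_confidence_interval(0.37, 1200)
print(f"margin of error: {margin:.3f}")
print(f"95% interval: ({low:.3f}, {high:.3f})")
```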

A survey of 231 HR specialists found that 12.12% cited managing performance as the dominant issue facing compensation and benefits managers. Can this figure be used to represent all compensation and benefits managers? If so, how much potential error is there in doing so? Since this sample size is 231 and the employee survey on productivity uses a sample size of 1200, does the accuracy of the results of the two surveys differ? Does the size of the sample enter into the predictability of a survey's results?

- n = 231
- Confidence level = 90%
- p = 0.1212, or 12.12%
- Confidence interval = (0.0862, 0.1562)
- Error = 0.035

Increasing the sample size to 1,200 would bring the error down to about 0.015, so the accuracy of the two surveys does differ. In general, increasing the sample size reduces the error.
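The effect of sample size on the error can be illustrated by holding the proportion and confidence level fixed and changing only n. This is a rough sketch under the normal approximation; `proportion_margin` is a hypothetical helper name, and the n = 1,200 case simply reuses the 12.12% figure as a what-if.

```python
import math
from statistics import NormalDist


def proportion_margin(p_hat, n, confidence):
    """Margin of error for a sample proportion (normal approximation)."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * math.sqrt(p_hat * (1 - p_hat) / n)


# Same proportion (12.12%) and confidence level (90%), two sample sizes.
margin_231 = proportion_margin(0.1212, 231, 0.90)
margin_1200 = proportion_margin(0.1212, 1200, 0.90)

print(f"n = 231:  margin = {margin_231:.4f}")
print(f"n = 1200: margin = {margin_1200:.4f}")
# The ratio of the margins equals sqrt(231 / 1200): the error shrinks
# with the square root of the sample size, not with the size itself.
print(f"ratio: {margin_1200 / margin_231:.3f}")
```

Because the margin depends on the square root of n, roughly quintupling the sample cuts the error by a little more than half rather than by a factor of five.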

Why did these research companies choose to sample 1200, 231, 1500, 63, and 4800 firms or people respectively? What is the rationale for determining how many to sample other than to sample as few as possible to save time or money? Is a minimum sample size necessary to accomplish estimation?

- The size of the acceptable error and the level of confidence should both be taken into consideration.
- The acceptable error and the sample size are inversely related: the error shrinks in proportion to the square root of the sample size.
- Large samples incur high costs.
- To minimize costs, the sample should be sized for the highest error that can be tolerated.
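The trade-off between acceptable error, confidence, and sample size can be made concrete by solving the margin-of-error formulas for n. The sketch below assumes z-based intervals; the function names and the target errors (3 percentage points, $1,000) are hypothetical values chosen for illustration and do not come from the surveys.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(error, confidence=0.95, p=0.5):
    """Smallest n whose margin of error for a proportion is <= error.

    p = 0.5 is the most conservative guess when no prior estimate exists.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil(z**2 * p * (1 - p) / error**2)


def sample_size_for_mean(error, sigma, confidence=0.95):
    """Smallest n whose margin of error for a mean is <= error,
    assuming the population standard deviation sigma is known."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return math.ceil((z * sigma / error) ** 2)


# Hypothetical targets, not taken from the case:
n_prop = sample_size_for_proportion(error=0.03)            # +/- 3 points
n_mean = sample_size_for_mean(error=1_000, sigma=5_500)    # +/- $1,000
print(n_prop, n_mean)
```

Tightening the acceptable error or raising the confidence level both increase the required n, which is the cost trade-off described above.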

Thank You
