Communications201203 DL

Turing's Titanic Machine?
The Netmap Framework
A Study of Cyberattacks
What is an Algorithm?
Training Users vs. Training Soldiers
Gaining Wisdom from Crowds
Next-Generation GPS Navigation

COMMUNICATIONS OF THE ACM
cacm.acm.org 03/2012 Vol. 55 No. 3

Association for Computing Machinery

Sustainable Software for a Sustainable World

Participate in ICSE 2012!

Learn Present Discuss Meet Network Enjoy

ICSE 2012 is for

General Chair: Martin Glinz, University of Zurich, Switzerland
Program Co-Chairs: Gail Murphy, University of British Columbia, Canada; Mauro Pezzè, University of Lugano, Switzerland and University of Milano Bicocca, Italy

http://www.icse2012.org

Register NOW

June 2-9, 2012

Zurich Switzerland

ICSE 2012

34th International Conference on Software Engineering

Choose from a wealth of keynotes, invited talks, paper presentations,

workshops, tutorials, technical briefings, co-located events, and more.

Check out the program at http://www.ifi.uzh.ch/icse2012/program.

Academics: Hear about others' research results and discuss your

results with your peers

Practitioners: Learn about cutting-edge, state-of-the-art software

technology and talk to the people behind it

Students: Present your best work and meet the leading people in

your field

WHAT DO YOU GET WHEN YOU PUT

Barbara LISKOV
Frederick P. BROOKS, JR.
Charles P. (Chuck) THACKER
Charles W. BACHMAN
Stephen A. COOK
Richard M. KARP
Leslie G. VALIANT
Marvin MINSKY
Adi SHAMIR
Richard E. STEARNS
John HOPCROFT
Robert E. KAHN
Vinton G. CERF
Robert TARJAN
Ivan SUTHERLAND
Butler LAMPSON
Juris HARTMANIS
Andrew C. YAO
Donald E. KNUTH
Dana S. SCOTT
Raj REDDY
Fernando J. CORBATÓ
Edward A. FEIGENBAUM
Alan C. KAY
William (Velvel) KAHAN
Frances E. ALLEN
E. Allen EMERSON
Niklaus WIRTH
Ken THOMPSON
Leonard ADLEMAN
Ronald RIVEST
Edmund CLARKE
Joseph SIFAKIS

THE ACM TURING CENTENARY CELEBRATION

A ONCE-IN-A-LIFETIME EVENT THAT YOU'LL NEVER FORGET
AND YOU'RE INVITED!

CHAIR:

Vint Cerf, '04 Turing Award Winner,

Chief Internet Evangelist, Google

PROGRAM CO-CHAIRS:

Mike Schroeder, Microsoft Research

John Thomas, IBM Research

Moshe Vardi, Rice University

MODERATOR:

Paul Saffo, Managing Director of Foresight, Discern

Analytics and Consulting Associate Professor, Stanford

WHEN:

June 15th & June 16th 2012

WHERE:

Palace Hotel, San Francisco CA

HOW:

Register for the event at turing100.acm.org

REGISTRATION IS FREE-OF-CHARGE!

THE A.M. TURING AWARD, often referred to as the "Nobel Prize" in

computing, was named in honor of Alan Mathison Turing (1912–1954),

a British mathematician and computer scientist. He made fundamental

advances in computer architecture, algorithms, formalization of

computing, and artificial intelligence. Turing was also instrumental in

British code-breaking work during World War II.


2 Communications of the ACM | March 2012 | Vol. 55 | No. 3

Communications of the ACM

Association for Computing Machinery

Advancing Computing as a Science & Profession

Departments

5 Editor's Letter
What is an Algorithm?
By Moshe Y. Vardi

6 Letters To The Editor
From Syntax to Semantics for AI

10 BLOG@CACM
Knowledgeable Beginners
Bertrand Meyer presents data on the computer and programming knowledge of two groups of novice CS students.

29 Calendar

114 Careers

Last Byte

118 Puzzled
Solutions and Sources
By Peter Winkler

120 Q&A
Chief Strategist
ACM CEO John White talks about initiatives to serve the organization's professional members, increase international activities, and reform computer science education.
By Leah Hoffmann

News

13 Gaining Wisdom from Crowds
Online games are harnessing humans' skills to solve scientific problems that are currently beyond the ability of computers.
By Neil Savage

16 Computing with Magnets
Researchers are finding ways to develop ultra-efficient and nonvolatile computer processors out of nanoscale magnets. A number of obstacles, however, stand in the way of their commercialization.
By Gary Anthes

19 Policing the Future
Computer programs and new mathematical algorithms are helping law enforcement agencies better predict when and where crimes will occur.
By Samuel Greengard

22 Stanford Schooling – Gratis!
Stanford University's experiment with online classes could help transform computer science education.
By Paul Hyman

23 Computer Science Awards
Scientists worldwide are honored for their contributions to design, computing, science, and technology.
By Jack Rosenberger

Viewpoints

24 Computing Ethics
War 2.0: Cyberweapons and Ethics
Considering the basic ethical questions that must be resolved in the new realm of cyberwarfare.
By Patrick Lin, Fritz Allhoff, and Neil C. Rowe

27 Legally Speaking
Do Software Copyrights Protect What Programs Do?
A case before the European Court of Justice has significant implications for innovation and competition in the software industry.
By Pamela Samuelson

30 The Profession of IT
The Idea Idea
What if practices rather than ideas are the main source of innovation?
By Peter J. Denning

33 Viewpoint
Training Users vs. Training Soldiers: Experiences from the Battlefield
How military training methods can be applied to more effectively teach computer users.
By Vassilis Kostakos

36 Viewpoint
The Artificiality of Natural User Interfaces
Toward user-defined gestural interfaces.
By Alessio Malizia and Andrea Bellucci


Illustration by John Hersey

Practice

40 SAGE: Whitebox Fuzzing for Security Testing
SAGE has had a remarkable impact at Microsoft.
By Patrice Godefroid, Michael Y. Levin, and David Molnar

45 Revisiting Network I/O APIs: The Netmap Framework
It is possible to achieve huge performance improvements in the way packet processing is done on modern operating systems.
By Luigi Rizzo

52 The Hyperdimensional Tar Pit
Make a guess, double the number, and then move to the next larger unit of time.
By Poul-Henning Kamp

Articles' development led by queue.acm.org

Contributed Articles

54 MobiCon: A Mobile Context-Monitoring Platform
User context is defined by data generated through everyday physical activity in sensor-rich, resource-limited mobile environments.
By Youngki Lee, S.S. Iyengar, Chulhong Min, Younghyun Ju, Seungwoo Kang, Taiwoo Park, Jinwon Lee, Yunseok Rhee, and Junehwa Song

66 A Comparative Study of Cyberattacks
Attackers base themselves in countries of convenience, virtually.
By Seung Hyun Kim, Qiu-Hong Wang, and Johannes B. Ullrich

Review Articles

74 Turing's Titanic Machine?
Embodied and disembodied computing at the Turing Centenary.
By S. Barry Cooper

84 The Next Generation of GPS Navigation Systems
A live-view GPS navigation system ensures that a user's cognitive map is consistent with the navigation map.
By J.Y. Huang, C.H. Tsai, and S.T. Huang

Research Highlights

96 Technical Perspective
The Benefits of Capability-based Protection
By Steven D. Gribble

97 A Taste of Capsicum: Practical Capabilities for UNIX
By Robert N.M. Watson, Jonathan Anderson, Ben Laurie, and Kris Kennaway

105 Technical Perspective
A New Way To Search Game Trees
By Michael L. Littman

106 The Grand Challenge of Computer Go: Monte Carlo Tree Search and Extensions
By Sylvain Gelly, Levente Kocsis, Marc Schoenauer, Michèle Sebag, David Silver, Csaba Szepesvári, and Olivier Teytaud

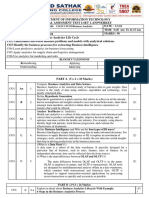

About the Cover:
Celebrations around the world are expected throughout 2012 marking the 100th anniversary of the birth of Alan Turing. This month's cover features the pioneering work of sculptor Stephen Kettle; his statue of Turing is made of over half a million individual pieces of 500-million-year-old Welsh slate, chosen because of Turing's fondness for North Wales. S. Barry Cooper's cover story on page 74 explores Turing's legacy.


Communications of the ACM

Trusted insights for computing's leading professionals.

Communications of the ACM is the leading monthly print and online magazine for the computing and information technology fields. Communications is recognized as the most trusted and knowledgeable source of industry information for today's computing professional. Communications brings its readership in-depth coverage of emerging areas of computer science, new trends in information technology, and practical applications. Industry leaders use Communications as a platform to present and debate various technology implications, public policies, engineering challenges, and market trends. The prestige and unmatched reputation that Communications of the ACM enjoys today is built upon a 50-year commitment to high-quality editorial content and a steadfast dedication to advancing the arts, sciences, and applications of information technology.

ACM, the world's largest educational and scientific computing society, delivers resources that advance computing as a science and profession. ACM provides the computing field's premier Digital Library and serves its members and the computing profession with leading-edge publications, conferences, and career resources.

Executive Director and CEO
John White

Deputy Executive Director and COO
Patricia Ryan

Director, Office of Information Systems
Wayne Graves

Director, Office of Financial Services
Russell Harris

Director, Office of SIG Services
Donna Cappo

Director, Office of Publications
Bernard Rous

Director, Office of Group Publishing
Scott E. Delman

ACM COUNCIL

President
Alain Chesnais

Vice-President
Barbara G. Ryder

Secretary/Treasurer
Alexander L. Wolf

Past President
Wendy Hall

Chair, SGB Board
Vicki Hanson

Co-Chairs, Publications Board
Ronald Boisvert and Jack Davidson

Members-at-Large
Vinton G. Cerf; Carlo Ghezzi; Anthony Joseph; Mathai Joseph; Kelly Lyons; Mary Lou Soffa; Salil Vadhan

SGB Council Representatives
G. Scott Owens; Andrew Sears; Douglas Terry

BOARD CHAIRS

Education Board
Andrew McGettrick

Practitioners Board
Stephen Bourne

REGIONAL COUNCIL CHAIRS

ACM Europe Council
Fabrizio Gagliardi

ACM India Council
Anand S. Deshpande, PJ Narayanan

ACM China Council
Jiaguang Sun

PUBLICATIONS BOARD

Co-Chairs
Ronald F. Boisvert; Jack Davidson

Board Members
Marie-Paule Cani; Nikil Dutt; Carol Hutchins; Joseph A. Konstan; Ee-Peng Lim; Catherine McGeoch; M. Tamer Ozsu; Vincent Shen; Mary Lou Soffa

ACM U.S. Public Policy Office
Cameron Wilson, Director
1828 L Street, N.W., Suite 800
Washington, DC 20036 USA
T (202) 659-9711; F (202) 667-1066

Computer Science Teachers Association
Chris Stephenson, Executive Director

STAFF

DIRECTOR OF GROUP PUBLISHING
Scott E. Delman
publisher@cacm.acm.org

Executive Editor
Diane Crawford

Managing Editor
Thomas E. Lambert

Senior Editor
Andrew Rosenbloom

Senior Editor/News
Jack Rosenberger

Web Editor
David Roman

Editorial Assistant
Zarina Strakhan

Rights and Permissions
Deborah Cotton

Art Director
Andrij Borys

Associate Art Director
Alicia Kubista

Assistant Art Directors
Mia Angelica Balaquiot
Brian Greenberg

Production Manager
Lynn D'Addesio

Director of Media Sales
Jennifer Ruzicka

Public Relations Coordinator
Virginia Gold

Publications Assistant
Emily Williams

Columnists
Alok Aggarwal; Phillip G. Armour; Martin Campbell-Kelly; Michael Cusumano; Peter J. Denning; Shane Greenstein; Mark Guzdial; Peter Harsha; Leah Hoffmann; Mari Sako; Pamela Samuelson; Gene Spafford; Cameron Wilson

CONTACT POINTS

Copyright permission
permissions@cacm.acm.org

Calendar items
calendar@cacm.acm.org

Change of address
acmhelp@acm.org

Letters to the Editor
letters@cacm.acm.org

WEB SITE
http://cacm.acm.org

AUTHOR GUIDELINES
http://cacm.acm.org/guidelines

ACM ADVERTISING DEPARTMENT
2 Penn Plaza, Suite 701, New York, NY 10121-0701
T (212) 626-0686
F (212) 869-0481

Director of Media Sales
Jennifer Ruzicka
jen.ruzicka@hq.acm.org

Media Kit acmmediasales@acm.org

Association for Computing Machinery (ACM)
2 Penn Plaza, Suite 701
New York, NY 10121-0701 USA
T (212) 869-7440; F (212) 869-0481

EDITORIAL BOARD

EDITOR-IN-CHIEF
Moshe Y. Vardi
eic@cacm.acm.org

NEWS
Co-chairs
Marc Najork and Prabhakar Raghavan
Board Members
Hsiao-Wuen Hon; Mei Kobayashi; William Pulleyblank; Rajeev Rastogi; Jeannette Wing

VIEWPOINTS
Co-chairs
Susanne E. Hambrusch; John Leslie King; J Strother Moore
Board Members
P. Anandan; William Aspray; Stefan Bechtold; Judith Bishop; Stuart I. Feldman; Peter Freeman; Seymour Goodman; Shane Greenstein; Mark Guzdial; Richard Heeks; Rachelle Hollander; Richard Ladner; Susan Landau; Carlos Jose Pereira de Lucena; Beng Chin Ooi; Loren Terveen

PRACTICE
Chair
Stephen Bourne
Board Members
Eric Allman; Charles Beeler; David J. Brown; Bryan Cantrill; Terry Coatta; Stuart Feldman; Benjamin Fried; Pat Hanrahan; Marshall Kirk McKusick; Erik Meijer; George Neville-Neil; Theo Schlossnagle; Jim Waldo

The Practice section of the CACM Editorial Board also serves as the Editorial Board of ACM Queue.

CONTRIBUTED ARTICLES
Co-chairs
Al Aho and Georg Gottlob
Board Members
Robert Austin; Yannis Bakos; Elisa Bertino; Gilles Brassard; Kim Bruce; Alan Bundy; Peter Buneman; Erran Carmel; Andrew Chien; Peter Druschel; Blake Ives; James Larus; Igor Markov; Gail C. Murphy; Shree Nayar; Bernhard Nebel; Lionel M. Ni; Sriram Rajamani; Marie-Christine Rousset; Avi Rubin; Krishan Sabnani; Fred B. Schneider; Abigail Sellen; Ron Shamir; Marc Snir; Larry Snyder; Manuela Veloso; Michael Vitale; Wolfgang Wahlster; Hannes Werthner; Andy Chi-Chih Yao

RESEARCH HIGHLIGHTS
Co-chairs
Stuart J. Russell and Gregory Morrisett
Board Members
Martin Abadi; Stuart K. Card; Jon Crowcroft; Shafi Goldwasser; Monika Henzinger; Maurice Herlihy; Dan Huttenlocher; Norm Jouppi; Andrew B. Kahng; Daphne Koller; Michael Reiter; Mendel Rosenblum; Ronitt Rubinfeld; David Salesin; Lawrence K. Saul; Guy Steele, Jr.; Madhu Sudan; Gerhard Weikum; Alexander L. Wolf; Margaret H. Wright

WEB
Co-chairs
James Landay and Greg Linden
Board Members
Gene Golovchinsky; Marti Hearst; Jason I. Hong; Jeff Johnson; Wendy E. MacKay

ACM Copyright Notice
Copyright © 2012 by Association for Computing Machinery, Inc. (ACM). Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and full citation on the first page. Copyright for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, to republish, to post on servers, or to redistribute to lists, requires prior specific permission and/or fee. Request permission to publish from permissions@acm.org or fax (212) 869-0481.

For other copying of articles that carry a code at the bottom of the first or last page or screen display, copying is permitted provided that the per-copy fee indicated in the code is paid through the Copyright Clearance Center; www.copyright.com.

Subscriptions
An annual subscription cost is included in ACM member dues of $99 ($40 of which is allocated to a subscription to Communications); for students, cost is included in $42 dues ($20 of which is allocated to a Communications subscription). A nonmember annual subscription is $100.

ACM Media Advertising Policy
Communications of the ACM and other ACM Media publications accept advertising in both print and electronic formats. All advertising in ACM Media publications is at the discretion of ACM and is intended to provide financial support for the various activities and services for ACM members. Current advertising rates can be found by visiting http://www.acm-media.org or by contacting ACM Media Sales at (212) 626-0686.

Single Copies
Single copies of Communications of the ACM are available for purchase. Please contact acmhelp@acm.org.

COMMUNICATIONS OF THE ACM
(ISSN 0001-0782) is published monthly by ACM Media, 2 Penn Plaza, Suite 701, New York, NY 10121-0701. Periodicals postage paid at New York, NY 10001, and other mailing offices.

POSTMASTER
Please send address changes to
Communications of the ACM
2 Penn Plaza, Suite 701
New York, NY 10121-0701 USA

Printed in the U.S.A.

Please recycle this magazine.


Editor's Letter

The 14th International Congress of Logic, Methodology and Philosophy of Science (CLMPS), held last July in France, included a special symposium on the subject of "What is an algorithm?"

This may seem to be a strange question to ask just before the Turing Centenary Year, which is now being celebrated by numerous events around the world (see http://www.turingcentenary.eu/). Didn't Turing answer this question decisively? Isn't the answer to the question "an algorithm is a Turing machine"?

But conflating algorithms with Turing machines is a misreading of Turing's 1936 paper "On Computable Numbers, with an Application to the Entscheidungsproblem." Turing's aim was to define computability, not algorithms. His paper argued that every function on natural numbers that can be computed by a human computer (until the mid-1940s a computer was a person who computes) can also be computed by a Turing machine. There is no claim in the paper that Turing machines offer a general model for algorithms. (See S. B. Cooper's article on page 74 for a perspective on Turing's model of computation.) So the question posed by the special CLMPS symposium is an excellent one.

Incontrovertibly, algorithms constitute one of the central subjects of study in computer science. Should we not have by now a clear understanding of what an algorithm is? It is worth pointing out that mathematicians formalized the notion of proof only in the 20th century, even though they had been using proofs at that point for about 2,500 years. Analogously, the fact that we have an intuitive notion of what an algorithm is does not mean that we have a formal notion. For example, when should we say that two programs describe the same algorithm? This requires a rigorous definition!

Surprisingly, the two key speakers in the symposium, Y. Gurevich and Y. Moschovakis, provided very different answers to this basic question, even after focusing on classical sequential algorithms (see in-depth papers at http://goo.gl/0E7wa and http://goo.gl/HsQHq).

Gurevich argued that every algorithm can be defined in terms of an abstract state machine. Intuitively, an abstract state machine is a machine operating on states, which are arbitrary data structures. The key requirement is that one step of the machine can cause only a bounded local change on the state. This requirement corresponds both to the one-cell-at-a-time operations of a Turing machine and the bounded-width operations of von Neumann machines.
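
As a rough illustration of the state-machine view (a sketch only, not Gurevich's formal definition), Euclid's algorithm can be written as a machine whose state is a pair of integers and whose single step rewrites just those two fields. The names State, step, and run_gcd are hypothetical, chosen for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    """The whole machine state: two integer fields (an assumption of this sketch)."""
    a: int
    b: int


def step(state: State) -> State:
    """One machine step: a bounded, local update that touches only the two fields."""
    return State(a=state.b, b=state.a % state.b)


def run_gcd(a: int, b: int) -> int:
    """Apply step repeatedly until the halting condition b == 0 holds."""
    state = State(a, b)
    while state.b != 0:
        state = step(state)
    return state.a
```

Each call to step changes a fixed, small part of the state, which is the "bounded local change" requirement in miniature; the loop in run_gcd only decides when to stop.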

Moschovakis, in contrast, argued that an algorithm is defined in terms of a recursor, which is a recursive description built on top of arbitrary operations taken as primitives. For example, the factorial function can be described recursively, using multiplication as a primitive algorithmic operation, while Euclid's greatest-common-divisor algorithm can be described recursively, using the remainder function as a primitive operation.
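
The two examples in this paragraph can be sketched as recursive definitions over named primitives. This is an informal illustration, not Moschovakis's formal recursor construction; Python's operator.mul and operator.mod stand in for the "primitive" multiplication and remainder operations, and the function names are chosen for this example.

```python
from operator import mod, mul


def factorial(n: int) -> int:
    """Factorial described recursively, with multiplication taken as a primitive."""
    return 1 if n == 0 else mul(n, factorial(n - 1))


def gcd(a: int, b: int) -> int:
    """Euclid's algorithm described recursively, with remainder taken as a primitive."""
    return a if b == 0 else gcd(b, mod(a, b))
```

Nothing here says how mul or mod are carried out; the description only fixes how results of the primitives are combined, which is the point of taking them as given.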

So is an algorithm an abstract state machine or a recursor? Mathematically, one can show that recursors can model abstract state machines and abstract state machines can model recursors, but which definition is primary?

I find this debate reminiscent of the endless argument in computer science about the advantages and disadvantages of imperative and functional programming languages, going all the way back to Fortran and Lisp. Since this debate has never been settled and is unlikely ever to be settled, we learned to live with the coexistence of imperative and functional programming languages.

Physicists learned to live with de Broglie's wave-particle duality, which asserts that all particles exhibit both wave and particle properties. Furthermore, in quantum mechanics, classical concepts such as "particle" and "wave" fail to describe fully the behavior of quantum-scale objects. Perhaps it is time for us to adopt a similarly nuanced view of what an algorithm is.

An algorithm is both an abstract state machine and a recursor, and neither view by itself fully describes what an algorithm is. This algorithmic duality seems to be a fundamental principle of computer science.

Moshe Y. Vardi, EDITOR-IN-CHIEF

What Is an Algorithm?

DOI:10.1145/2093548.2093549 Moshe Y. Vardi


Letters to the Editor

Concerning Moshe Y. Vardi's Editor's Letter "Artificial Intelligence: Past and Future" (Jan. 2012), I'd like to add that AI won't replace human reasoning in the near future for reasons apparent from examining the context, or "meta," of AI. Computer programs (and hardware logic accelerators) do no more than follow rules and are nothing more than a sequence of rules. AI is nothing but logic, which is why John McCarthy said, "As soon as it works, no one calls it AI." Once it works, it stops being AI and becomes an algorithm.

One must focus on the context of AI to begin to address deeper questions: Who defines the problems to be solved? Who defines the rules by which problems are to be solved? Who defines the tests that prove or disprove the validity of the solution? Answer them, and you might begin to address whether the future still needs computer scientists. Such questions suggest that the difference between rules and intelligence is the difference between syntax and semantics.

Logic is syntax. The semantics are the "why," or the making of rules to solve "why" and the making of rules (as tests) that prove (or disprove) the solution. Semantics are conditional, and once "why" is transformed into "what," something important, as yet undefined, might disappear.

Perhaps the relationship between intelligence and AI is better understood through an analogy: Intelligence is sand for casting an object, and AI is what remains after the sand is removed. AI is evidence that intelligence was once present. Intelligence is the crucial but ephemeral scaffolding.

Some might prefer to be pessimistic about the future, as we are unable to, for example, eliminate all software bugs or provide total software security. We know the reasons, but like the difference between AI and intelligence, we still have difficulty explaining exactly what they are.

Robert Schaefer, Westford, MA

Steve Jobs Very Much an Engineer

In his Editor's Letter "Computing for Humans" (Dec. 2011), Moshe Y. Vardi said, "Jobs was very much not an engineer." Sorry, but I knew Jobs fairly well during his NeXT years (for me, 1989–1993). I've also known some of the greatest engineers of the past century, and Jobs was one of them. I used to say he designed every atom in the NeXT machine, and since the NeXT was useful, Jobs was an engineer. Consider this personal anecdote: Jobs and I were in a meeting at Carnegie Mellon University, circa 1990, and I mentioned I had just received one of the first IBM workstations (from IBM's Austin facility). Wanting to see it (in my hardware lab at the CMU Robotics Institute), the first thing he asked for was a screwdriver, then sat down on the floor and proceeded to disassemble it, writing down part numbers, including, significantly, the first CD-ROM on a PC. He then put it back together, powered it up, and thanked me. Jobs was, in fact, a better engineer than marketing guy or sales guy, and it was what he built that defined him as an engineer.

Robert Thibadeau, Pittsburgh, PA

For Consistent Code, Retell the Great Myths

In the same way cultural myths like the Bhagavat Gita, Exodus, and the Ramayan have been told and retold down through the ages, the article "Coding Guidelines: Finding the Art in Science" by Robert Green and Henry Ledgard (Dec. 2011) should likewise be retold to help IT managers, as well as corporate management, understand the protocols of the cult of programming. However, it generally covered only the context of writing educational, or textbook, and research coding rather than enterprise coding. Along with universities, research-based coding is also done in the enterprise, following a particular protocol or pattern, appreciating that people are not the same from generation to generation even in large organizations.

Writing enterprise applications, programmers sometimes write code as prescribed in textbooks (see Figure 11 in the article), but the article made no explicit mention of Pre or Post conditions unless necessary. Doing so would help make the program understandable to newcomers in the organization.
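
A minimal sketch of what explicit pre- and postconditions can look like, using plain assert statements. The function split_cents and its billing scenario are hypothetical, invented for this illustration, and not taken from the article under discussion.

```python
from typing import List


def split_cents(total_cents: int, people: int) -> List[int]:
    """Split an amount in cents as evenly as possible among a number of people."""
    # Preconditions: what the caller must guarantee.
    assert total_cents >= 0, "total_cents must be non-negative"
    assert people > 0, "people must be positive"

    base, extra = divmod(total_cents, people)
    shares = [base + 1] * extra + [base] * (people - extra)

    # Postconditions: what the function guarantees in return.
    assert sum(shares) == total_cents, "no cent may be lost or created"
    assert max(shares) - min(shares) <= 1, "shares differ by at most one cent"
    return shares
```

A newcomer reading the assertions learns the contract without tracing the arithmetic: valid inputs go in, and the shares always add up to the original total.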

Keeping names short and simple might also be encouraged, though not always. If a name is clear enough to show the result(s) the function or variable is meant to provide, there is no harm using long names, provided they are readable. For example, instead of isstateavailableforpolicyissue, the organization might encourage isStateAvailableForPolicyIssue (in Java) or is_state_available_for_policy_issue (in Python). Compilers don't mind long names; neither do humans, when they can read them.
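
The two readable spellings above carry the same word boundaries, so one can be derived from the other mechanically. The helper below is a hypothetical sketch (the name camel_to_snake is an assumption of this example) that handles simple camelCase names; it does not attempt to split an all-lowercase name, which would need a dictionary.

```python
import re


def camel_to_snake(name: str) -> str:
    """Insert an underscore before each interior capital letter, then lowercase."""
    return re.sub(r"(?<!^)(?=[A-Z])", "_", name).lower()
```

For instance, camel_to_snake("isStateAvailableForPolicyIssue") yields "is_state_available_for_policy_issue".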

In the same way understanding code is important when it is read, understanding a program's behavior during its execution is also important. Debug statements are therefore essential for good programming. Poorly handled code without debug statements in production systems has cost many enterprises significant time and money over the years. An article like this would do well to include guidance as to how to write good (meaningful) debug statements as needed.
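
One possible reading of "meaningful" debug statements, sketched with Python's standard logging module: each message names the decision being made and the values it depended on. The logger name "policy", the function, and its parameters are hypothetical and serve only as an illustration.

```python
import logging

logger = logging.getLogger("policy")


def is_state_available_for_policy_issue(state_code: str, available_states: set) -> bool:
    """Return True when a policy may be issued in the given state."""
    logger.debug(
        "checking state_code=%r against %d available states",
        state_code,
        len(available_states),
    )
    available = state_code in available_states
    if not available:
        # Record why the request was refused, not merely that it was.
        logger.debug("state_code=%r rejected: not in the available set", state_code)
    return available
```

Calling logging.basicConfig(level=logging.DEBUG) during troubleshooting turns these messages on; at the default level they stay silent, so they can remain in production code.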

Mahesh Subramaniya, San Antonio, TX

The article by Robert Green and Henry Ledgard (Dec. 2011) was superb. In industry, too little attention generally goes toward instilling commonsense programming habits that promote program readability and maintainability, two cornerstones of quality.

Additionally, evidence suggests that mixing upper- and lowercase contributes to loss of intelligibility, possibly because English uppercase letters tend to share a same-size, blocky appearance. Consistent use of lowercase may be preferable; for example, counter may be preferable to Counter. There is a tendency to use uppercase with abbreviations or compound names, as in, say, CustomerAddress, but some programming languages distinguish among CustomerAddress, Customeraddress, and customerAddress, so ensuring consistency with multiple program components or multiple programmers can be a challenge. If a programming language allows certain punctuation marks in names, then customer_address might be the optimal name.

DOI:10.1145/2093548.2093550
From Syntax to Semantics for AI

It is also easy to construct programs that read other programs and return metrics about programming style. White space, uppercase, vertical alignment, and other program characteristics can all be quantified. Though there may be no absolute bounds separating good from bad code, an organization's programmers can benefit from all this information if presented properly.
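
A minimal sketch of such a program, assuming the source to be measured is available as a single string. The function name style_metrics and the particular metrics chosen (blank-line ratio, uppercase ratio, longest line) are illustrative assumptions, not a recommended standard.

```python
def style_metrics(source: str) -> dict:
    """Return a few simple, quantifiable style characteristics of source text."""
    lines = source.splitlines()
    letters = [ch for ch in source if ch.isalpha()]
    blank = sum(1 for line in lines if not line.strip())
    upper = sum(1 for ch in letters if ch.isupper())
    return {
        "lines": len(lines),
        # Share of lines that are empty or whitespace-only.
        "blank_line_ratio": blank / len(lines) if lines else 0.0,
        # Share of alphabetic characters that are uppercase.
        "uppercase_ratio": upper / len(letters) if letters else 0.0,
        "max_line_length": max((len(line) for line in lines), default=0),
    }
```

Numbers like these say nothing on their own about good or bad code, but tracked across a code base they can show where one component's style drifts from the rest.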

Allan Glaser, Wayne, PA

Diversity and Competence

Addressing the lack of diversity in computer science, the Viewpoint "Data Trends on Minorities and People with Disabilities in Computing" (Dec. 2011) by Valerie Taylor and Richard Ladner seemed to have been based solely on the belief that diversity is good per se. However, it offered no specific evidence as to why diversity, however defined, is either essential or desirable.

I accept that there should be equal opportunity in all fields and that such a goal is a special challenge for a field as intellectually demanding as computer science. However, given that the authors did not make this point directly, what exactly does diversity have to do with competence in computing? Moreover, without some detailed discussion of the need or desirability of diversity in computer science, it is unlikely that specific policies and programs needed to attract a variety of interested people can be formulated.

It is at least a moral obligation for a profession to ensure it imposes no unjustifiable barriers to entry, but only showing that a situation exists is far from addressing it.

John C. Bauer, Manotick, Ontario, Canada

Authors' Response:
In the inaugural Broadening Participation Viewpoint "Opening Remarks" (Dec. 2009), the author Ladner outlined three reasons for broadening participation (numbers, social justice, and quality), supporting them with the argument that diverse teams are more likely to produce quality products than those from the same background. Competence is no doubt a prerequisite for doing anything well, but diversity and competence combined is what companies seek to enhance their bottom lines.

Valerie Taylor, College Station, TX, and Richard Ladner, Seattle

Overestimating Women Authors?

The article "Gender and Computing Conference Papers" by J. McGrath Cohoon et al. (Aug. 2011) explored how women are increasingly publishing in ACM conference proceedings, with the percentage of women authors going from 7% in 1966 to 27% in 2009. In 2011, we carried out a similar study of women's participation in the years 1960–2010 and found a significant difference from Cohoon et al. In 1966, less than 3% of the authors of published computing papers were women, as opposed to about 16.4% in 2009 and 16.3% in 2010. We have since sought to identify possible reasons for that difference: For one, Cohoon et al. sought to identify the gender of ACM conference-paper authors based on author names, referring to a database of names. They analyzed 86,000 papers from more than 3,000 conferences, identifying the gender of 90% of 356,703 authors. To identify the gender of "unknown" or "ambiguous" names, they assessed the probability of a name being either male or female.

Our study considered computing

conferences and journals in the DBLP

database of 1.5 million papers (most

from ACM conferences), including

more than four million authors (more

than 900,000 different people). Like

Cohoon et al., we also identified gender
based on author names from a database
of names, using two methods
to address ambiguity: The first (similar
to Cohoon et al.) used the U.S. census
distribution to predict the gender
of a name; the second assumed ambiguous
names reflect the same gender
distribution as the general population.
Our most accurate results were
obtained through the latter method
(unambiguous names).

We identified the gender of more

than 2.6 million authors, leaving out

almost 300,000 ambiguous and 1.1

million unknown names (with 220,000

limited to just initials). We performed

two tests to validate the method, com-

paring our results to a manual gender

identification of two subsets (thousands

of authors) of the total population of au-

thors. In each, the results were similar

to those obtained through our automat-

ed method (17.26% and 16.19%).
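The two ways of handling ambiguous names described above can be sketched roughly as follows. This is only an illustration, not the letter writers' actual procedure: the name table, its counts, the 0.95 cutoff, and the function names are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical toy name table: first name -> (female count, male count).
# A real study would load census-style counts; these numbers are made up.
NAME_COUNTS: Dict[str, Tuple[int, int]] = {
    "maria": (990, 10),
    "john": (5, 995),
    "robin": (600, 400),  # ambiguous under the cutoff below
}

THRESHOLD = 0.95  # assumed cutoff for calling a name unambiguous


def p_female(first_name: str) -> Optional[float]:
    """Return the estimated P(female | name), or None for an unknown name."""
    counts = NAME_COUNTS.get(first_name.lower())
    if counts is None:
        return None
    female, male = counts
    return female / (female + male)


def share_probabilistic(names: List[str]) -> float:
    """Method 1: every known name contributes its probability of being female."""
    probs = [p for p in map(p_female, names) if p is not None]
    return sum(probs) / len(probs)


def share_unambiguous_only(names: List[str]) -> float:
    """Method 2: count only unambiguous names, assuming the ambiguous and
    unknown ones follow the same distribution as the names that were counted."""
    women = men = 0
    for p in map(p_female, names):
        if p is None:
            continue  # unknown name (for example, initials only)
        if p >= THRESHOLD:
            women += 1
        elif p <= 1 - THRESHOLD:
            men += 1
    return women / (women + men)


authors = ["Maria", "John", "John", "Robin", "J."]
print(round(share_probabilistic(authors), 3))
print(round(share_unambiguous_only(authors), 3))
```

On this toy list the two estimates differ (about 0.40 versus about 0.33), which illustrates how the treatment of ambiguous names alone can shift the reported share of women authors.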

Finally, Cohoon et al. compared their

results with statistics on the gender of

Ph.D. holders, concluding that the pro-

ductivity of women is greater than that

of men. Recognizing this result contra-

dicts established conclusions, they pro-

posed possible explanations, including
"Men and women might tend to publish
in different venues, with women overrepresented
at ACM conferences compared
to journals, IEEE, and other non-ACM
computing conferences."

However, the contradiction was due

to the fact that their estimation of un-

known or ambiguous names overes-

timated the number of women publish-

ing in ACM conference proceedings.

José María Cavero, Belén Vela,
and Paloma Cáceres, Madrid, Spain

Authors' Response:

The difference in our results from those

of Cavero et al. likely stems from our use

of a different dataset. We conducted our

analyses exclusively on ACM conference

publications. Cavero et al. included journals,

which have fewer women authors. We

carefully tested and verified our approach

to unknown and ambiguous names on

data where gender was known, showing

that women were overrepresented among

unknown and ambiguous names. We

could therefore construct a more accurate

dataset that corrected for the miscounting

of women.

J. McGrath Cohoon, Charlottesville, VA,
Sergey Nigai, Zurich, and
Joseph "Jofish" Kaye, Palo Alto, CA

Communications welcomes your opinion. To submit a
letter to the editor, please limit yourself to 500 words or
less, and send to letters@cacm.acm.org.

© 2012 ACM 0001-0782/12/03 $10.00

ACM, Advancing Computing as

a Science and a Profession

Advancing Computing as a Science & Profession

Dear Colleague,

The power of computing technology continues to drive innovation to all corners of the globe,

bringing with it opportunities for economic development and job growth. ACM is ideally positioned

to help computing professionals worldwide stay competitive in this dynamic community.

ACM provides invaluable member benefits to help you advance your career and achieve success in your

chosen specialty. Our international presence continues to expand and we have extended our online

resources to serve needs that span all generations of computing practitioners, educators, researchers, and

students.

ACM conferences, publications, educational efforts, recognition programs, digital resources, and diversity

initiatives are defining the computing profession and empowering the computing professional.

This year we are launching Tech Packs, integrated learning packages on current technical topics created and

reviewed by expert ACM members. The Tech Pack core is an annotated bibliography of resources from the
renowned ACM Digital Library (articles from journals, magazines, conference proceedings, Special Interest
Group newsletters, videos, etc.) and selections from our many online books and courses, as well as
non-ACM resources where appropriate.

BY BECOMING AN ACM MEMBER YOU RECEIVE:

Timely access to relevant information

Communications of the ACM magazine; ACM Tech Packs; TechNews email digest; Technical Interest Alerts
and ACM Bulletins; ACM journals and magazines at member rates; full access to the acmqueue website for
practitioners; ACM SIG conference discounts; the optional ACM Digital Library

Resources that will enhance your career and follow you to new positions

Career & Job Center; The Learning Center; online books from Safari featuring O'Reilly and Books24x7;
online courses in multiple languages; virtual labs; e-mentoring services; CareerNews email digest; access to
ACM's 36 Special Interest Groups; an acm.org email forwarding address with spam filtering

ACM's worldwide network of more than 100,000 members ranges from students to seasoned professionals and

includes many renowned leaders in the field. ACM members get access to this network and the advantages that

come from their expertise to keep you at the forefront of the technology world.

Please take a moment to consider the value of an ACM membership for your career and your future in the

dynamic computing profession.

Sincerely,

Alain Chesnais

President

Association for Computing Machinery


Priority Code: AD10

Online

http://www.acm.org/join

Phone

+1-800-342-6626 (US & Canada)

+1-212-626-0500 (Global)

Fax

+1-212-944-1318

membership application &

digital library order form

PROFESSIONAL MEMBERSHIP:

o ACM Professional Membership: $99 USD

o ACM Professional Membership plus the ACM Digital Library:

$198 USD ($99 dues + $99 DL)

o ACM Digital Library: $99 USD (must be an ACM member)

STUDENT MEMBERSHIP:

o ACM Student Membership: $19 USD

o ACM Student Membership plus the ACM Digital Library: $42 USD

o ACM Student Membership PLUS Print CACM Magazine: $42 USD

o ACM Student Membership w/Digital Library PLUS Print

CACM Magazine: $62 USD

choose one membership option:

Name

Address

City State/Province Postal code/Zip

Country E-mail address

Area code & Daytime phone Fax Member number, if applicable

Payment must accompany application. If paying by check or

money order, make payable to ACM, Inc. in US dollars or foreign

currency at current exchange rate.

o Visa/MasterCard o American Express o Check/money order

o Professional Member Dues ($99 or $198) $ ______________________

o ACM Digital Library ($99) $ ______________________

o Student Member Dues ($19, $42, or $62) $ ______________________

Total Amount Due $ ______________________

Card # Expiration date

Signature

Professional membership dues include $40 toward a subscription

to Communications of the ACM. Student membership dues include

$15 toward a subscription to XRDS. Member dues, subscriptions,

and optional contributions are tax-deductible under certain

circumstances. Please consult with your tax advisor.

payment:

RETURN COMPLETED APPLICATION TO:

All new ACM members will receive an

ACM membership card.

For more information, please visit us at www.acm.org

Association for Computing Machinery, Inc.

General Post Office

P.O. Box 30777

New York, NY 10087-0777

Questions? E-mail us at acmhelp@acm.org

Or call +1-800-342-6626 to speak to a live representative

Satisfaction Guaranteed!

Purposes of ACM

ACM is dedicated to:

1) advancing the art, science, engineering,

and application of information technology

2) fostering the open interchange of

information to serve both professionals and

the public

3) promoting the highest professional and

ethics standards

I agree with the Purposes of ACM:

Signature

ACM Code of Ethics:

http://www.acm.org/serving/ethics.html

You can join ACM in several easy ways:

Or, complete this application and return with payment via postal mail

Special rates for residents of developing countries:

http://www.acm.org/membership/L2-3/

Special rates for members of sister societies:

http://www.acm.org/membership/dues.html

Advancing Computing as a Science & Profession

Please print clearly


10 Communications of the ACM | March 2012 | Vol. 55 | No. 3

Follow us on Twitter at http://twitter.com/blogCACM

The Communications Web site, http://cacm.acm.org,

features more than a dozen bloggers in the BLOG@CACM

community. In each issue of Communications, we'll publish

selected posts or excerpts.

Bertrand Meyer

What "Beginning"
Students Already
Know: The Evidence

http://cacm.acm.org/

blogs/blog-cacm/104215

January 25, 2011

For the past eight years I have been teaching the introductory programming course at ETH Zurich, using the inverted curriculum approach developed in a number of my earlier articles. The course has now produced a textbook, Touch of Class.¹ It is definitely objects-first and most importantly contracts-first, instilling a dose of systematic reasoning about programs right from the start.

Since the beginning I have been facing the problem that Mark Guzdial describes in his recent blog entry²: that an introductory programming course is not, for most of today's students, a first brush with programming. We actually have precise data, collected since the course began in its current form, to document Guzdial's assertion.

Research conducted with Michela Pedroni, who played a key role in the organization of the course until last year, and Manuel Oriol, who is now at the University of York, led to an ETH technical report: What Do Beginning CS Majors Know?³ It provides detailed, empirical evidence on the prior programming background of entering computer science students.

Some qualifications: The information was provided by the students themselves in questionnaires at the beginning of the course; it covers the period 2003 to 2008; and it applies only to ETH. But we do not think these factors fundamentally affect the picture. We do not know of any incentive for students to bias their answers one way or the other; the 2009 and 2010 questionnaires did not show any significant deviation; and informal inquiries suggest that the ETH student profile is not special. In fact, quoting from the paper:

"We did perform a similar test in a second institution in a different country [the U.K.]. The results from the student group at University of York are very similar to the results at ETH; in particular, they exhibit no significant differences concerning the computer literacy outcomes, prior programming knowledge, and the number of languages that an average student knows a little, well, and very well. A comparison is available in a separate report."⁴

Our paper³ is short and I do not want to repeat its points here in any detail; please read it for a precise picture of what our students already know when they come in. Let me simply give the statistics on the answers to two basic questions: computer experience and programming language experience. We asked students how long they had been using a computer (see the first chart).

Knowledgeable

Beginners

Bertrand Meyer presents data on the computer and programming

knowledge of two groups of novice CS students.

DOI:10.1145/2093548.2093551 http://cacm.acm.org/blogs/blog-cacm


These are typically 19-year-old stu-

dents. A clear conclusion is that we

need not (as we naively did the first
time) spend the first lecture or two

telling them how to use a computer

system.

The second chart summarizes the

students' prior programming experience
(again, the paper presents a

more detailed picture).

Eighteen percent of the students

(the proportion ranges from 13% to 22%

across the years of our study) have

had no prior programming experi-

ence. Thirty percent have had pro-

gramming experience, but not object-

oriented; the range of languages and

approaches, detailed in the paper, is

broad, but particularly contains tools

for programming Web sites, such

as PHP. A little more than half have

object-oriented programming expe-

rience; particularly remarkable are

the 10% who say they have written an

object-oriented system of more than

100 classes, a significant size for supposed
beginners!

Guzdial's point was that "There is
no first; we are teaching students who
already know how to program." Our
figures confirm this view only in part.

Some students have programmed

before, but not all of them. Quoting

again from our article:

At one end, a considerable fraction of

students have no prior programming ex-

perience at all (between 13% and 22%) or

only moderate knowledge of some of the

cited languages (about 30%). At the pres-

ent stage the evidence does not suggest a

decrease in either of these phenomena.

At the other end, the course faces a

large portion of students with expertise

in multiple programming languages

(about 30% know more than three lan-

guages in depth). In fact, many have

worked in a job where programming was

a substantial part of their work (24% in

2003, 30% in 2004, 26% in 2005, 35% in

2006, 31% in 2007 and 2008).

This is our real challenge: How to

teach an entering student body with

such a variety of prior programming

experience. It is difficult to imagine
another scientific or engineering discipline
where instructors face comparable
diversity; a professor teaching

Chemistry 101 can have a reasonable

image of what the students know about

the topic. Not so in computer science

and programming. (In a recent discus-

sion, Pamela Zave suggested that our

experience may be comparable to that

of instructors in a first-year language

course, such as Spanish, where some

of the students will be total beginners

and others speak Spanish at home.)

How do we bring something to all of

them, the crack coder who has already

written a compiler (yes, we have had

this case) or an e-commerce site and

the novice who has never seen an as-

signment before? It was the in-depth

examination of these questions that

led to the design of our course, based

on the outside-in approach of using

both components and progressively

discovering their insides; it also led

to the Touch of Class textbook,¹ whose

preface further discusses these issues.

A specific question that colleagues

often ask when presented with statis-

tics such as the above is why the expe-

rienced students bother to take a CS

course at all. In fact, these students

know exactly what they are doing.

They realize their practical experience

with programming lacks a theoretical

foundation and have come to the uni-

versity to learn the concepts that will

enable them to progress to the next

stage. This is why it is possible, in the

end, for a single course to have some-

thing to offer to aspiring computer

scientists of all bents: those who

seem to have been furiously coding

from kindergarten, the total novices,

and those in between.

References

1. Bertrand Meyer, Touch of Class: Learning to Program Well with Objects and Contracts, Springer Verlag, 2009, see details and materials at http://touch.ethz.ch/.

2. Mark Guzdial, We're too Late for First in CS1, http://cacm.acm.org/blogs/blog-cacm/102624.

3. Michela Pedroni, Manuel Oriol, and Bertrand Meyer, What Do Beginning CS Majors Know?, Technical Report 631, ETH Zurich, Chair of Software Engineering, 2009, http://se.ethz.ch/~meyer/publications/teaching/background.pdf.

4. Michela Pedroni and Manuel Oriol, A Comparison of CS Student Backgrounds at Two Technical Universities, Technical Report 613, ETH Zurich, 2009, ftp://ftp.inf.ethz.ch/pub/publications/tech-reports/6xx/613.pdf.

Bertrand Meyer is a professor at ETH Zurich and ITMO (St. Petersburg) and chief architect of Eiffel Software.

© 2012 ACM 0001-0782/12/03 $10.00


Computer experience. 2-4 yrs: 4%; 5-9 yrs: 42%; ≥10 yrs: 54%

Programming experience. None: 18%; No O-O: 30%; Some O-O: 42%; ≥100 classes: 10%


ACM Member News

A Dedication to Human Rights

The filing cabinets in Jack Minker's office at the University of Maryland contain an estimated 4,000 newspaper articles, letters, and other items that attest to his commitment to protecting the human rights of computer scientists around the world. Some of these computer scientists were imprisoned, with or without a trial. Some have simply disappeared. Countless others were denied a travel visa and the ability to attend an academic conference. To date, Minker has been involved in some 300 human rights cases in Argentina, China, Pakistan, South Africa, and other countries.

"Many think that we should mind our own business about these things, that they are solely the affairs of foreign nations," says Minker, Professor Emeritus of the computer science department at the University of Maryland. "But these countries have signed agreements to abide by United Nations human-rights treaties."

For his four decades of dedication, Minker recently received the 2011 Heinz R. Pagels Human Rights of Scientists Award from the New York Academy of Sciences. He first became involved with human rights when he joined the Committee of Concerned Scientists in 1972. One of his early and most celebrated cases involved Soviet cyberneticist Alexander Lerner, who was forbidden to speak at, let alone attend, an international conference in Moscow. Thanks in large part to Minker's intervention, Lerner was allowed to attend and speak at the conference.

"It was a great victory for intellectual freedom," Minker says. "There was nothing to warrant not allowing him to speak. Seeking to keep yourself alive within the scientific community by taking part in a conference does not merit a human rights violation."

Dennis McCafferty

ACM LAUNCHES

ENHANCED DIGITAL LIBRARY

The new DL simplifies usability, extends

connections, and expands content with:

Broadened citation pages with tabs

for metadata and links to expand

exploration and discovery

Redesigned binders to create

personal, annotatable reading lists for

sharing and exporting

Enhanced interactivity tools to retrieve

data, promote user engagement, and

introduce user-contributed content

Expanded table-of-contents service

for all publications in the DL

Visit the ACM Digital Library at:

dl.acm.org


Screenshot courtesy of Fold.it

Scientists at the University of Washington had struggled for more than a decade to discover the structure of a protein that helps the human immunodeficiency virus multiply. Understanding its shape could aid them in developing drugs to attack the virus, but the scientists had been unable to decipher it. So they turned to colleagues who had developed Foldit, an online game that challenges players to rearrange proteins into their lowest-energy form, the form they would most likely take in nature.

Within three weeks the 57,000-plus

players, most of whom had no training

in molecular biology, had the answer,

which was subsequently published

in the journal Nature Structural and

Molecular Biology. The University of

Washington researchers say this was

probably the first time a long-standing
scientific problem had been solved
using an online game.

Adrien Treuille, an assistant pro-

fessor of computer science at Carn-

egie Mellon University, and one of the

creators of Foldit, says the game is an

example of a rather startling insight.
"Large groups of non-experts could be
better than computers at these really
complex problems," he says. "I think
no one quite believed that humans
would be so much better."

There are many problems in science

that computers, despite their capacity

for tackling vast sets of data, cannot yet

solve. But through the clever use of the

Internet and social media, researchers

are harnessing abilities that are innate

to humans but beyond the grasp of
machines (intuition, superior visual
processing, and an understanding of
the real world) to tackle some of these

challenges. Ultimately, the process

may help teach computers how to per-

form these tasks.

Science | DOI:10.1145/2093548.2093553 Neil Savage

Gaining Wisdom
from Crowds

Online games are harnessing humans' skills to solve scientific
problems that are currently beyond the ability of computers.

Using the online game Foldit, 57,000-plus players helped scientists at the University of
Washington solve a long-standing molecular biology problem within three weeks.


Computers can try to figure out the

structures of proteins by rearranging

the molecules in every conceivable

combination, but such a computa-

tionally intensive task could take

years. The University of Washington

researchers tried to speed up the pro-

cess using Rosetta@Home, a distrib-

uted program developed by University

of Washington biochemistry profes-

sor David Baker to harness idle CPU

time on personal computers. To en-

courage people to run the program,

they created a graphical representa-

tion of the protein folding that acted

as a screensaver. Unexpectedly, says

Treuille, people started noticing they

could guess whether the computer

was getting closer to or farther from

the answer by watching the graphics,

and that inspired the idea for Foldit.

The game's object is to fold a given

protein into the most compact shape

possible, which varies depending on

how its constituent amino acids are

arranged and on the attractive and

repulsive forces between them. With

a little practice, humans can use their

visual intuition to move parts of the

protein around on the screen into a

shape that seems more suitable. Players'
scores are based on a statistical

estimate of how likely it is that the

shape is correct.

Treuille has since devised a new

game, EteRNA, which reverses the pro-

cess. In it, players are given shapes they

are not allowed to change, and have to

come up with a sequence of amino acids
they think will best fit. A biochemistry
lab at Stanford University then

synthesizes the player-designed pro-

teins. The biochemists can compare

the structures that computer models

predict with those the players create,

and perhaps learn the reasons for the

differences. Knowing the structure of

a protein helps scientists understand

its functions.

In some types of crowdsourcing,

computers perform the preliminary

work and then people are asked to

refine it. For instance, Jerome Waldispuhl
and Mathieu Blanchette, assistant
professors of computer science

at McGill University, have designed

Phylo, a game that helps them align

sequences of DNA to study genetic

diseases. Geneticists can trace the

evolution of genes by comparing them

in closely related species and not-

ing where mutations have changed

one or more of the four nucleotides

that make up DNA. Comparing three

billion nucleotides in each of the 44

genomes of interest would require

far too much computer time, so re-

searchers use heuristics to speed up

the computations, but in the process

lose some accuracy.

Phylo shows humans some snippets
of the computer's solutions, with

the nucleotides coded by color, and

asks them to improve the alignments.

The challenge is that the matches

are imperfect, because of the muta-

tions, and there can also be gaps in

the sequence. Humans, Waldispuhl

explains, have a much easier time

than computers at identifying a match

that is almost but not quite perfect.

Since the McGill University research-

ers launched Phylo in late 2010, more

than 35,000 people have played the

game, and they have improved ap-

proximately 70% of the alignments

they have been given.
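To make "improving an alignment" concrete, here is a minimal sketch of how two aligned rows of nucleotides might be scored, with '-' marking a gap. The weights and the function name are assumptions chosen for illustration; the article does not describe Phylo's actual scoring.

```python
# Assumed scoring weights for illustration only; not Phylo's real values.
MATCH, MISMATCH, GAP = 1, -1, -2


def alignment_score(a: str, b: str) -> int:
    """Score two aligned rows of equal length; '-' marks a gap."""
    if len(a) != len(b):
        raise ValueError("aligned rows must have equal length")
    score = 0
    for x, y in zip(a, b):
        if x == "-" and y == "-":
            continue  # a column with two gaps carries no information
        if x == "-" or y == "-":
            score += GAP
        elif x == y:
            score += MATCH
        else:
            score += MISMATCH
    return score


# The same two sequences, with the gap placed at the end and then moved
# to where a nucleotide appears to be missing.
before = alignment_score("ACGTTA", "AGTTA-")
after = alignment_score("ACGTTA", "A-GTTA")
print(before, after)
```

Moving the single gap raises the score from -3 to 3 under these weights, which is the kind of small local repair a player can spot by eye in the color-coded rows.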

See Something, Say Something

Like Phylo, many crowdsourcing projects rely on humans' vastly superior image-processing capabilities. "A third of our brain is really wired to specifically deal with images," says Luis von Ahn, associate professor of computer science at Carnegie Mellon. He developed the ESP Game, since acquired by Google, which shows a photo to two random people at the same time and asks them to type a word that describes what it depicts; the people win when they both choose the same word. As they play the game, they also create tags for the images. Scientists hope computers can apply machine learning techniques to the tags and learn to decipher the images themselves, but von Ahn says it could be decades before machines can match people. "Eventually we're going to be able to get computers to be able to do everything humans can do," he says. "It's just going to take a while."
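The agreement rule described above, in which two players win when they choose the same word and that word becomes a tag, can be sketched as follows. The round-by-round structure, the alphabetical tie-break, and the function name are assumptions for illustration and are not taken from the ESP Game itself.

```python
from itertools import zip_longest
from typing import List, Optional


def first_agreement(guesses_a: List[str], guesses_b: List[str]) -> Optional[str]:
    """Return the first word both players have typed, or None if they never agree.

    Guesses are processed one round at a time; a word counts as agreed once it
    appears among the guesses made so far by both players.
    """
    seen_a, seen_b = set(), set()
    for a, b in zip_longest(guesses_a, guesses_b):
        if a is not None:
            seen_a.add(a.strip().lower())
        if b is not None:
            seen_b.add(b.strip().lower())
        common = seen_a & seen_b
        if common:
            # Assumed tie-break: alphabetical order if several words match at once.
            return sorted(common)[0]
    return None


tag = first_agreement(["dog", "grass", "puppy"], ["animal", "dog"])
print(tag)
```

Here the players agree on "dog" in the second round, so "dog" would be recorded as a tag for the image.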

In Duolingo, launched in late 2011,

von Ahn relies on humans' understanding
of language to get crowds to do what
computers do inadequately: translate
text. Duolingo helps people learn

a foreign language by asking them to

translate sentences from that language

to their own, starting with simple sen-

tences and advancing to more complex

ones as their skills increase. The com-

puter provides one-to-one dictionary

translations of individual words, but

humans use their experience to see

what makes sense in context. If one

million people participated, von Ahn

predicts they could translate every Eng-

lish-language page of Wikipedia into

Spanish in just 80 hours.

Another skill humans bring to crowdsourcing tasks is their ability to notice unusual things and ask questions that were not part of the original mandate, says Chris Lintott, a researcher in the physics department at the University of Oxford. Lintott runs Zooniverse, a collection of citizen science projects, most of which ask people to identify objects in astronomical images, from moon craters to elliptical galaxies. In one project, a Dutch teacher using Galaxy Zoo to identify galaxies imaged by the Sloan Digital Sky Survey noticed a mysterious glow and wanted to know what it was. Astronomers followed up on her discovery, which is now believed to be the signature of radiation emitted by a quasar. Another Zooniverse project asks people to look through scanned pages of World War I-era Royal Navy logs and transcribe weather readings; climatologists hope to use the data to learn more about climate change. On their own, some users started keeping track of how many people were on a ship's sick list each day, and peaks in the sick list numbers turned out to be a signature of the 1918 flu pandemic. "When people find strange things, they can act as an advocate for them," Lintott says. If computers cannot even identify the sought-after information, they are hardly going to notice such anomalies, let alone lobby for an answer.

Payoffs for Players

Designing crowdsourcing projects that work well takes effort, says von Ahn, who tested prototypes of Duolingo on Amazon Mechanical Turk, a service that pays people small amounts of money for performing tasks online. Feedback from those tests helped von Ahn make the software both easier to use and more intuitive. He hopes Duolingo will entice users to provide free translation services by offering them value in return, in the form of language lessons. Paying for translation, even at Mechanical Turk's low rates, would be prohibitively expensive.

Lintott agrees the projects must provide some type of payoff for users. There is, of course, the satisfaction of contributing to science. And for Zooniverse users, there is aesthetic pleasure in the fact that many galaxy images are strikingly beautiful. For Phylo and Foldit users, there is the appeal of competition; the score in Foldit is based on a real measure of how well the protein is folded, but the score is multiplied by a large number to make it like players' scores in other online games.

The University of Washington's Center for Game Science, where Foldit was developed, is exploring the best ways to use what center director Zoran Popovic calls "this symbiotic architecture between humans and computers." He hopes to use it to harness human creativity by, for instance, developing a crowdsourcing project that will perform the efficient design of computer chips. That is not as unlikely as it sounds, Popovic says. The non-experts using Foldit have already produced protein-folding algorithms that are nearly identical to algorithms that experts had developed but had not yet published.

The challenge, Popovic says, is to figure out how to take advantage of this symbiosis. "It's just a very different architecture to compute on, but it's highly more productive."

Further Reading

Cooper, S., et al.
The challenge of designing scientific discovery games, 5th International Conference on the Foundations of Digital Games, Monterey, CA, June 19-21, 2010.

Khatib, F., et al.
Algorithm discovery by protein folding game players, Proceedings of the National Academy of Sciences 108, 47, November 22, 2011.

Nielsen, M.
Reinventing Discovery: The New Era of Networked Science, Princeton University Press, Princeton, NJ, 2011.

Smith, A. M., et al.
Galaxy Zoo supernovae, Monthly Notices of the Royal Astronomical Society 412, 2, April 2011.

Von Ahn, L. and Dabbish, L.
Designing games with a purpose, Communications of the ACM 51, 8, August 2008.

Neil Savage is a science and technology writer based in Lowell, MA.

© 2012 ACM 0001-0782/12/03 $10.00