Exercise

Uploaded by

Tuti Aryanti Hastomo

Tutorial exercises: Backpropagation neural networks, Naïve Bayes, Decision Trees, k-NN, Associative Classification.

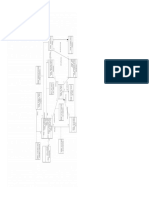

Exercise 1. Suppose we want to classify potential bank customers as good creditors or bad creditors for loan applications. We have a training dataset describing past customers using the following attributes: Marital status {married, single, divorced}, Gender {male, female}, Age {[18..30[, [30..50[, [50..65[, [65+]}, Income {[10K..25K[, [25K..50K[, [50K..65K[, [65K..100K[, [100K+]}. Design a neural network that could be trained to predict the credit rating of an applicant.

Exercise 2. Given the following neural network with initialized weights as in the picture, explain the network architecture, knowing that we are trying to distinguish between nails and screws and that two example training tuples are: T1{0.6, 0.1, nail}, T2{0.2, 0.3, screw}.

[Figure: neural network with input neurons, hidden neurons, and output neurons, annotated with the initial weights.]

Let the learning rate be 0.1 and the weights be as indicated in the figure above. Propagate T1 forward through the network, then backpropagate the error. Show the resulting changes to the weights.
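One forward pass followed by one backpropagation update can be sketched as below. This is only an illustration under stated assumptions: the 2-3-1 layout, the sigmoid activation, the delta-rule update, the encoding of "nail" as target 1.0, and every weight and bias value in the code are hypothetical and are not the values from the figure.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_step(x, target, w_h, b_h, w_o, b_o, lr=0.1):
    """One forward pass and one backpropagation update for a single output unit."""
    # Forward pass: hidden activations, then the output activation.
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(w_h, b_h)]
    out = sigmoid(sum(w * h for w, h in zip(w_o, hidden)) + b_o)

    # Backward pass: error term of the output unit, then of each hidden unit.
    err_o = out * (1 - out) * (target - out)
    err_h = [h * (1 - h) * err_o * w for h, w in zip(hidden, w_o)]

    # Weight and bias updates, scaled by the learning rate.
    new_w_o = [w + lr * err_o * h for w, h in zip(w_o, hidden)]
    new_b_o = b_o + lr * err_o
    new_w_h = [[w + lr * e * xi for w, xi in zip(ws, x)]
               for ws, e in zip(w_h, err_h)]
    new_b_h = [b + lr * e for b, e in zip(b_h, err_h)]
    return out, new_w_h, new_b_h, new_w_o, new_b_o

# Hypothetical initial parameters (NOT read from the figure).
w_h = [[0.1, -0.2], [0.0, 0.2], [0.3, -0.4]]  # one row per hidden unit
b_h = [0.1, 0.2, 0.5]
w_o = [-0.4, 0.1, 0.6]
b_o = -0.1

x = [0.6, 0.1]  # inputs of tuple T1
out, w_h2, b_h2, w_o2, b_o2 = train_step(x, 1.0, w_h, b_h, w_o, b_o)
print("output before update:", out)
print("output-layer weight changes:", [n - o for n, o in zip(w_o2, w_o)])
```

Replacing the hypothetical parameters with the ones read from the figure gives the hand calculation the exercise asks for.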

Exercise 3. Why is Naïve Bayesian classification called naïve?

Exercise 4. Naïve Bayes for data with nominal attributes. Given the training data in the table below (Buy Computer data), predict the class of the following new example using Naïve Bayes classification: age<=30, income=medium, student=yes, credit-rating=fair
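The counting behind Naïve Bayes with nominal attributes can be sketched as follows. The six training rows are a hypothetical toy set with only two attributes, not the Buy Computer table, and the function names are made up for this illustration. No smoothing is applied here, so an unseen attribute value drives a class score to zero.

```python
from collections import Counter, defaultdict

def train_nb(rows, labels):
    """Count class frequencies and per-class attribute-value frequencies."""
    class_counts = Counter(labels)
    cond = defaultdict(Counter)  # (attribute index, class) -> value counts
    for row, c in zip(rows, labels):
        for i, v in enumerate(row):
            cond[(i, c)][v] += 1
    return class_counts, cond

def predict_nb(x, class_counts, cond):
    """Score each class as P(class) * product of P(value | class)."""
    n = sum(class_counts.values())
    scores = {}
    for c, nc in class_counts.items():
        p = nc / n
        for i, v in enumerate(x):
            p *= cond[(i, c)][v] / nc
        scores[c] = p
    return max(scores, key=scores.get), scores

# Hypothetical toy data: (age, student) -> buys_computer
rows = [("<=30", "yes"), ("<=30", "no"), ("31..40", "yes"),
        (">40", "no"), (">40", "yes"), ("<=30", "no")]
labels = ["yes", "no", "yes", "no", "yes", "no"]

model = train_nb(rows, labels)
pred, scores = predict_nb(("<=30", "yes"), *model)
print(pred, scores)
```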

Exercise 5. Applying Naïve Bayes to data with numerical attributes and using the Laplace correction (to be done in your own time, not in class). Given the training data in the table below (Tennis data with some numerical attributes), predict the class of the following new example using Naïve Bayes classification: outlook=overcast, temperature=60, humidity=62, windy=false. Tip: you can use Excel or Matlab to calculate logarithms, means, and standard deviations. The following Matlab functions can be used: log2 (logarithm with base 2), mean (mean value), std (standard deviation). Type help <function name> (e.g. help mean) for help on how to use the functions and for examples.
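The two ingredients this exercise combines can be sketched as below: a normal density for a numerical attribute and a Laplace-corrected frequency for a nominal one. The add-one form of the correction used here is one common variant and is an assumption, as are the function names. All numbers are hypothetical and are not taken from the Tennis table. `statistics.stdev` computes the sample standard deviation, which matches the default of Matlab's `std`.

```python
import math
from statistics import mean, stdev

def gaussian_pdf(x, mu, sigma):
    """Normal density, used as P(value | class) for a numerical attribute."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def laplace(count, class_total, n_values):
    """Add-one (Laplace) estimate of P(value | class) for a nominal attribute."""
    return (count + 1) / (class_total + n_values)

# Hypothetical numbers for a single class (NOT from the Tennis table).
temps_yes = [64, 68, 70, 72]
mu, sigma = mean(temps_yes), stdev(temps_yes)
prior_yes = 4 / 7
p_overcast = laplace(count=0, class_total=4, n_values=3)  # 1/7 instead of 0

# Summing base-2 logarithms avoids multiplying many small probabilities.
log_score_yes = (math.log2(prior_yes)
                 + math.log2(p_overcast)
                 + math.log2(gaussian_pdf(60, mu, sigma)))
print(mu, round(sigma, 4), round(log_score_yes, 3))
```

The same score is computed for every class, and the class with the largest value is predicted.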

Exercise 6. Using Weka (to be done in your own time, not in class). Load the iris data (iris.arff). Choose 10-fold cross-validation. Run the Naïve Bayes and multi-layer perceptron (trained with the backpropagation algorithm) classifiers and compare their performance. Which classifier produced the most accurate classification? Which one learns faster?

Exercise 7. k-Nearest neighbours. Given the training data in Exercise 4 (Buy Computer data), predict the class of the following new example using k-Nearest Neighbour for k=5: age<=30, income=medium, student=yes, credit-rating=fair. For the distance measure between neighbours, use a simple match of attribute values:

$$\mathrm{distance}(A,B) = \sum_{i=1}^{4} w_i \cdot \delta(a_i, b_i)$$

where $\delta(a_i, b_i)$ is 1 if $a_i$ equals $b_i$ and 0 otherwise. $a_i$ and $b_i$ are either age, income, student or credit-rating. All weights are 1, except for income, whose weight is 2.
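The weighted attribute-matching measure described above can be sketched as follows. Because each matching attribute contributes its weight, the sum as written grows with agreement, so this sketch assumes that the k nearest neighbours are the k records with the largest score; that reading is an assumption, not something the exercise states. The five training records are hypothetical, not the Buy Computer table, and k=3 is used only because the toy set is small.

```python
from collections import Counter

# Weights from the exercise: all 1, except income, which is 2.
WEIGHTS = {"age": 1, "income": 2, "student": 1, "credit_rating": 1}

def match_score(a, b, weights=WEIGHTS):
    """Weighted count of attributes on which records a and b agree."""
    return sum(w for attr, w in weights.items() if a[attr] == b[attr])

def knn_predict(query, training, k):
    """Majority class among the k records with the highest match score (assumed reading)."""
    ranked = sorted(training, key=lambda rec: match_score(query, rec[0]), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical toy records: (attributes, class)
training = [
    ({"age": "<=30", "income": "medium", "student": "yes", "credit_rating": "fair"}, "yes"),
    ({"age": "<=30", "income": "high", "student": "no", "credit_rating": "fair"}, "no"),
    ({"age": ">40", "income": "medium", "student": "yes", "credit_rating": "excellent"}, "yes"),
    ({"age": "31..40", "income": "low", "student": "no", "credit_rating": "excellent"}, "no"),
    ({"age": ">40", "income": "medium", "student": "no", "credit_rating": "fair"}, "no"),
]
query = {"age": "<=30", "income": "medium", "student": "yes", "credit_rating": "fair"}

print([match_score(query, rec) for rec, _ in training])
print(knn_predict(query, training, k=3))
```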

Exercise 8. Decision trees. Given the training data in Exercise 4 (Buy Computer data), build a decision tree and predict the class of the following new example: age<=30, income=medium, student=yes, credit-rating=fair.
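The exercise does not say which splitting criterion to use. Assuming ID3-style information gain, choosing the attribute for a tree node could be sketched as below. The four rows are a hypothetical toy set, not the Buy Computer table, and the function names are illustrative.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (base 2) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(rows, labels, attr):
    """Entropy reduction obtained by splitting the data on attribute attr."""
    n = len(labels)
    groups = {}
    for row, label in zip(rows, labels):
        groups.setdefault(row[attr], []).append(label)
    remainder = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

# Hypothetical toy data
rows = [
    {"student": "yes", "income": "high"},
    {"student": "yes", "income": "low"},
    {"student": "no", "income": "high"},
    {"student": "no", "income": "low"},
]
labels = ["yes", "yes", "no", "no"]

gains = {attr: information_gain(rows, labels, attr) for attr in ("student", "income")}
print(gains)  # the attribute with the largest gain becomes the split
```

Applying the same selection recursively to each resulting subset, until a subset is pure or no attributes remain, yields the tree.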

Exercise 9. Associative classifier. Given the training data in Exercise 4 (Buy Computer data), build an associative classifier model by generating all relevant association rules with support and confidence thresholds of 10% and 60% respectively. Use this model to classify the new example: age<=30, income=medium, student=yes, credit-rating=fair, selecting the rule with the highest confidence. What would the classification be if we instead let all the rules that apply vote on the class?
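A brute-force sketch of this procedure is given below: enumerate every combination of attribute=value pairs as a rule body, keep the rules that meet both thresholds, then classify either by the most confident applicable rule or by a vote among applicable rules. The five rows are hypothetical and use only two attributes, the function names are made up, and the vote is assumed to be a simple unweighted count.

```python
from collections import Counter
from itertools import combinations

def mine_rules(rows, labels, min_sup, min_conf):
    """Return (body, class, support, confidence) for every rule meeting both thresholds."""
    n = len(rows)
    body_counts, rule_counts = Counter(), Counter()
    for row, label in zip(rows, labels):
        items = sorted(row.items())
        for size in range(1, len(items) + 1):
            for body in combinations(items, size):
                body_counts[body] += 1
                rule_counts[(body, label)] += 1
    rules = []
    for (body, label), cnt in rule_counts.items():
        sup, conf = cnt / n, cnt / body_counts[body]
        if sup >= min_sup and conf >= min_conf:
            rules.append((body, label, sup, conf))
    return rules

def classify(x, rules):
    """Class of the most confident applicable rule, and class by unweighted vote."""
    items = set(x.items())
    applicable = [r for r in rules if set(r[0]) <= items]
    best = max(applicable, key=lambda r: r[3])[1]
    vote = Counter(r[1] for r in applicable).most_common(1)[0][0]
    return best, vote

# Hypothetical toy data
rows = [
    {"student": "yes", "credit": "fair"},
    {"student": "yes", "credit": "excellent"},
    {"student": "no", "credit": "fair"},
    {"student": "no", "credit": "excellent"},
    {"student": "yes", "credit": "fair"},
]
labels = ["yes", "yes", "no", "no", "yes"]

rules = mine_rules(rows, labels, min_sup=0.10, min_conf=0.60)
best, vote = classify({"student": "yes", "credit": "fair"}, rules)
print(len(rules), best, vote)
```

Enumerating all bodies is exponential in the number of attributes, which is acceptable for four attributes; a frequent-itemset algorithm such as Apriori would be the usual choice for larger data.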
