Professional Documents

Culture Documents

Stat 3014 Notes 11 Sampling

Uploaded by

Aya PobladorOriginal Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Stat 3014 Notes 11 Sampling

Uploaded by

Aya PobladorCopyright:

Available Formats

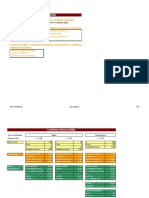

STAT 3014/3914: Sampling Theory

Section outline

1. Simple random samples and stratification.

Finite population correction factor. Sample size determination. Inference over subpopulations. Stratified sampling, optimal allocation.

2. Ratio and regression estimators.

Ratio estimators. Hartley-Ross estimator. Ratio estimator for stratified samples. Regression estimator.

3. Systematic sampling and cluster sampling.

4. Sampling with unequal probabilities.

Probability proportional to size (PPS) sampling. The Horvitz-Thompson estimator.

References

1. Barnett, V. (1991) Sample Survey Principles & Methods, Edward Arnold, London.

2. Cochran, W.G. (1963) Sampling Techniques, Wiley, New York.

3. McLennan, W. (1999) An Introduction to Sample Surveys, A.B.S. Publications, Canberra.

1 Simple Random Samples and Stratification.

1.1 The Population.

We have a finite number of elements, $N$, where $N$ is assumed known.

The population is $Y_1, \ldots, Y_N$, where $Y_i$ is a numerical value associated with the $i$-th element.

We adopt the notation in the classical text by W.G. Cochran, where capital letters refer to characteristics of the population; small letters are used for the corresponding characteristics of a sample.

Population Total: $Y = \sum_{i=1}^{N} Y_i$,

Population Mean: $\mu = \bar Y = \dfrac{Y}{N} = \dfrac{\sum_{i=1}^{N} Y_i}{N}$,

Population Variance: $\sigma^2 = N^{-1}\sum_{i=1}^{N} (Y_i - \bar Y)^2$, and $S^2 = \dfrac{N}{N-1}\,\sigma^2$.

These are fixed (population) quantities, to be estimated.

If we have to consider two numerical values $(Y_i, X_i)$, $i = 1, \ldots, N$, an additional population quantity of interest is

$$R = \frac{\sum_{i=1}^{N} Y_i}{\sum_{i=1}^{N} X_i} = \frac{Y}{X} = \frac{\bar Y}{\bar X},$$

the ratio of totals.

1.2 Simple Random Sampling.

Focus on the numerical values $Y_i$, $i = 1, \ldots, N$. A random sample of size $n$ is taken without replacement: the observed values $y_1, \ldots, y_n$ are random variables and are stochastically dependent. The sampling frame is a list of the values $Y_i$, $i = 1, \ldots, N$.

The natural estimator for $\mu$ is $\hat\mu = \sum_{i=1}^{n} y_i / n = \bar y$, and hence the natural estimator for $Y = N\mu$ is $\hat Y = N\bar y$.

Distributional properties of $\bar y$ are complicated by the dependence of the $y_i$'s.

Sample variance: $s^2 = \sum_{i=1}^{n} (y_i - \bar y)^2 / (n-1)$.

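Fundamental Results

$$E\,\bar y = \mu.$$

$$\mathrm{Var}\,\bar y = \frac{S^2}{n}\left(1 - \frac{n}{N}\right) = \frac{S^2}{n}(1 - f) = \frac{\sigma^2}{n}\left(\frac{N-n}{N-1}\right).$$

$$E\,s^2 = S^2,$$

where $f = n/N$ is the sampling fraction and the finite population correction (f.p.c.) is $1 - f$. Recall that s.e.(estimator) $= \sqrt{\text{variance of estimator}}$.

Central Limit Property

For large sample size $n$ ($n > 30$, say) and small to moderate $f$, we have the approximation

$$(\bar y - \mu)\big/\sqrt{\mathrm{Var}\,\bar y} \approx N(0, 1).$$

Confidence Interval for $\mu = \bar Y$.

Replacing $S^2$ by $s^2$, approximate 95% C.I.s for $\mu$ and $Y$ are respectively

$$\bar y \pm 1.96\,\frac{s}{\sqrt n}\sqrt{1 - f}, \qquad N\left(\bar y \pm 1.96\,\frac{s}{\sqrt n}\sqrt{1 - f}\right).$$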
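The interval above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original notes: the function name `srs_confidence_interval` and the toy data are assumptions, and the toy sample is far too small for the normal approximation to be trusted (it only shows the arithmetic).

```python
import math
from statistics import mean, variance

def srs_confidence_interval(sample, N, z=1.96):
    """Approximate CI for the population mean and total from an SRS
    drawn without replacement from a population of known size N."""
    n = len(sample)
    ybar = mean(sample)
    s2 = variance(sample)              # sample variance, divisor n - 1
    f = n / N                          # sampling fraction
    se = math.sqrt(s2 / n * (1 - f))   # s.e. of ybar including the f.p.c.
    mean_ci = (ybar - z * se, ybar + z * se)
    total_ci = (N * mean_ci[0], N * mean_ci[1])
    return ybar, se, mean_ci, total_ci

# Hypothetical data: n = 4 values drawn from a population of N = 8.
ybar, se, mean_ci, total_ci = srs_confidence_interval([2, 4, 6, 8], N=8)
```

Note that when $n = N$ (a census) the f.p.c. is zero, so the standard error vanishes, as it should.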

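Sample Size Calculations

To calculate the sample size needed for sampling yet to be carried out, for specified precision, use past information, or information from a pilot survey.

Suppose we require

$$\Pr\{|\bar y - \mu| \le \delta\} \ge 0.95.$$

For example, we may want to be at least 95% sure that the estimate $\bar y$ of $\mu$ is within 1% of the actual value of $\mu$; we then take $\delta = 0.01\,\mu$.

Then

$$\Pr\left\{\frac{|\bar y - \mu|}{\sqrt{\mathrm{Var}\,\bar y}} \le \frac{\delta}{\sqrt{\mathrm{Var}\,\bar y}}\right\} \ge 0.95,$$

i.e.

$$\delta\big/\sqrt{\mathrm{Var}\,\bar y} \ge 1.96.$$

Ignoring the f.p.c. (i.e. taking $f = 0$, so that $\mathrm{Var}\,\bar y = S^2/n$),

$$n \ge \frac{(1.96)^2 S^2}{\delta^2} \approx \frac{(1.96)^2 s^2}{\epsilon^2\,\bar y^2},$$

where $\delta = \epsilon\mu$ expresses the precision relative to the mean ($\epsilon = 0.01$ in the example above), and $s^2$ and $\bar y$ are estimates from a pilot survey.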

1.3 Simple Random Sampling for Attributes.

The above can be applied to estimate the total number, or proportion (or %), of units which possess some qualitative attribute. Let this subset of the population be $C$.

Put

$$Y_i = \begin{cases} 1 & \text{if } i \in C \\ 0 & \text{if } i \notin C \end{cases}$$

and similarly for the $y_i$'s.

Customary notation:

$\mu = \bar Y = P$ is the population proportion,

$A = \sum_{i=1}^{N} Y_i = Y = NP$ is the number of units in the population with the attribute,

$\bar y = p$ is the sample proportion.

Let $a = \sum_{i=1}^{n} y_i = np$, $Q = 1 - P$ and $q = 1 - p$. Thus

$$\hat A = Np = Na/n.$$

Since

$$\sum_{i=1}^{N} (Y_i - \bar Y)^2 = \sum_{i=1}^{N} Y_i^2 - N\bar Y^2 = N\bar Y - N\bar Y^2 = NP(1 - P),$$

we have

$$S^2 = NP(1 - P)/(N - 1) = NPQ/(N - 1), \quad\text{and similarly}\quad s^2 = npq/(n - 1).$$

Examples

1. Suppose that a finite population of 1500 students is to graduate. A sample will be selected and surveyed to estimate the average starting salary for graduates. Determine the sample size, n, needed to ensure that the sample average starting salary is within $40 of the population average with probability at least 0.90, if from previous years we know that the standard deviation of starting salaries is about $400.

2. (i) What size sample must be drawn from a population of size N = 800 in order to estimate the proportion with a given blood group to within 0.04 (i.e. an absolute error of 4%) with probability 0.95?

(ii) What sample size is needed if we know that the blood group is present in no more than 30% of the population?
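One way to work the two examples numerically is sketched below. It is a hedged illustration rather than the notes' official solution: the helper `srs_sample_size` is hypothetical, and it goes one step beyond the formula of Section 1.2 by keeping the f.p.c. Solving $\frac{S^2}{n}(1 - \frac{n}{N}) \le (\delta/z)^2$ for $n$ gives $n \ge n_0/(1 + n_0/N)$ with $n_0 = z^2 S^2/\delta^2$. For Example 2(i) the worst case $P = 0.5$ is assumed, since $P$ is unknown.

```python
import math
from statistics import NormalDist

def srs_sample_size(S2, delta, N=None, prob=0.95):
    """Smallest n with Pr(|ybar - mu| <= delta) >= prob (normal approximation).
    If N is given, the finite population correction is applied."""
    z = NormalDist().inv_cdf(0.5 + prob / 2)   # two-sided normal quantile
    n0 = z ** 2 * S2 / delta ** 2              # size ignoring the f.p.c.
    if N is not None:
        n0 = n0 / (1 + n0 / N)                 # f.p.c. adjustment
    return math.ceil(n0)

def attribute_S2(P, N):
    """S^2 = N P Q / (N - 1) for a 0/1 attribute."""
    return N * P * (1 - P) / (N - 1)

# Example 1: S = 400, delta = 40, probability 0.90, N = 1500.
n_ex1_no_fpc = srs_sample_size(400 ** 2, 40, prob=0.90)
n_ex1 = srs_sample_size(400 ** 2, 40, N=1500, prob=0.90)

# Example 2: N = 800, delta = 0.04, probability 0.95.
n_ex2_i = srs_sample_size(attribute_S2(0.5, 800), 0.04, N=800)   # worst case P = 0.5
n_ex2_ii = srs_sample_size(attribute_S2(0.3, 800), 0.04, N=800)  # P at most 0.3
```

With these assumptions the f.p.c. matters noticeably: in Example 1 it reduces the required sample from 271 to 230.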

1.4 Simple Random Sampling: Inference over Subpopulations.

Consider a population of $N$ individuals and a simple random sample of $n$ individuals, on which measurements $Y_i$, $i = 1, \ldots, N$ are considered.

Separate estimates might be wanted for one of a number of subclasses $\{C_1, C_2, \ldots\}$ which are subsets (domains of study) of the population (sampling frame). For example,

POPULATION (SAMPLING FRAME)    SUBPOPULATION
Australian population          unemployed Queenslanders
retailers                      supermarkets
the employed                   the employed working overtime

Denote the total number of items in class $C_j$ by $N_j$. $N_j$ is generally unknown, but the techniques of Sections 1.2 - 1.3 tell us that we may estimate $N_j$ by

$$\hat N_j = N n_j / n,$$

where $n_j/n$ is the proportion of the total sample falling into $C_j$.

The same technique as in Section 1.3 can be attempted to estimate

$$\bar Y_{C_j} = \sum_{i \in C_j} Y_i / N_j \quad\text{and}\quad Y_{C_j} = \sum_{i \in C_j} Y_i.$$

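Put

$$Y'_i = \begin{cases} Y_i & \text{if } i \in C_j \\ 0 & \text{if } i \notin C_j \end{cases}$$

and correspondingly for the sample values:

$$y'_i = \begin{cases} y_i & \text{if } i \in C_j \\ 0 & \text{if } i \notin C_j. \end{cases}$$

Then $\bar y'$ estimates $\bar Y' \equiv \sum_{i=1}^{N} Y'_i / N$, where $\bar y' \equiv \sum_{i=1}^{n} y'_i / n$.

Thus we have $N\bar Y' = Y_{C_j}$, so $N\bar y'$ estimates $Y_{C_j}$ and $\hat{\bar Y}_{C_j} = N\bar y'/N_j$ estimates $\bar Y_{C_j}$.

The estimators are unbiased.

$$\mathrm{Var}\,\hat{\bar Y}_{C_j} = \left(\frac{N}{N_j}\right)^2 \mathrm{Var}\,\bar y' = \left(\frac{N}{N_j}\right)^2 \frac{(S'_{C_j})^2}{n}\left(1 - \frac{n}{N}\right),$$

where $(S'_{C_j})^2$ can be estimated by $(s'_{C_j})^2$:

$$(S'_{C_j})^2 = \frac{\sum_{i=1}^{N} (Y'_i - \bar Y')^2}{N - 1}, \qquad (s'_{C_j})^2 = \frac{\sum_{i=1}^{n} (y'_i - \bar y')^2}{n - 1}.$$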

Example.

There are 200 children in a village. One dentist takes a simple random sample of 20 and finds 12 children with at least one decayed tooth and a total of 42 decayed teeth. Another dentist quickly checks all 200 children and finds 60 with no decayed teeth.

Estimate the total number of decayed teeth.

$C_1$: children with at least one decayed tooth; $C_2$: children with no decayed teeth.

$N_1 = 140$, $N_2 = 60$.

$n = 20$, $n_1 = 12$ ($n_2 = 8$).

$$\sum_{j \in C_1} y_j = 42 = \sum_{j=1}^{n} y'_j.$$

Thus $\bar y' = 42/20 = 2.1$.

The estimate for the total number of decayed teeth, $Y_{C_1}$, is $N\bar y' = 200 \times 2.1 = 420$, ignoring the information from the second dentist.

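If $N_j$ is not known, we can estimate the total $Y_{C_j}$ by $N\bar y'$ but we cannot estimate $\bar Y_{C_j}$ by $(N/N_j)\,\bar y'$. It is natural to consider trying to estimate it by $(n/n_j)\,\bar y'$, that is, taking

$$\hat{\bar Y}_{C_j} = \bar y_{C_j} = \sum_{i \in C_j} y_i / n_j = (n/n_j)\,\bar y'.$$

Consider the properties of this estimator:

Theorem 1

$$E\,\bar y_{C_j} = \bar Y_{C_j},$$

$$\mathrm{Var}\,\bar y_{C_j} \approx \left(\frac{N}{N_j}\right)^2 \frac{S^2_{C_j}}{n}\left(1 - \frac{n}{N}\right),$$

where $S^2_{C_j} = \dfrac{\sum_{i \in C_j} (Y_i - \bar Y_{C_j})^2}{N - 1}$, provided we define $E\,\bar y_{C_j}$ and $\mathrm{Var}\,\bar y_{C_j}$ appropriately when $n_j = 0$.

Note that $S^2_{C_j}$ can be estimated by

$$s^2_{C_j} = \frac{\sum_{i \in C_j} (y_i - \bar y_{C_j})^2}{n - 1}.$$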

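Proof: Use conditional expectations.

$$E(\bar y_{C_j}) = E_{n_j}\{E(\bar y_{C_j} \mid n_j)\}$$

$$= E(\bar y_{C_j} \mid n_j = 0)\Pr(n_j = 0) + \sum_{i \ge 1} E(\bar y_{C_j} \mid n_j = i)\Pr(n_j = i)$$

$$= \bar Y_{C_j}\Pr(n_j = 0) + \sum_{i \ge 1} \bar Y_{C_j}\Pr(n_j = i) = \bar Y_{C_j},$$

where for $n_j$ fixed, we are looking at a simple random sample of size $n_j$ from $C_j$.

Further

$$\mathrm{Var}\,\bar y_{C_j} = \mathrm{Var}\big(\underbrace{E(\bar y_{C_j} \mid n_j)}_{\bar Y_{C_j}}\big) + E(\mathrm{Var}(\bar y_{C_j} \mid n_j)) = 0 + E(\mathrm{Var}(\bar y_{C_j} \mid n_j)).$$

Now, if $n_j > 0$,

$$\mathrm{Var}(\bar y_{C_j} \mid n_j) = \frac{1}{n_j}\left\{\frac{\sum_{i \in C_j} (Y_i - \bar Y_{C_j})^2}{N_j - 1}\right\}\left(1 - \frac{n_j}{N_j}\right),$$

the variance for a simple random sample of size $n_j$ from $C_j$. Now use $\dfrac{n_j}{n} \approx \dfrac{N_j}{N}$ (an unbiased estimator):

$$\mathrm{Var}(\bar y_{C_j} \mid n_j) \approx \frac{N}{nN_j}\left\{\frac{\sum_{i \in C_j} (Y_i - \bar Y_{C_j})^2}{N_j - 1}\right\}\left(1 - \frac{n}{N}\right) \approx \left(\frac{N}{N_j}\right)^2 \frac{1}{n}\,S^2_{C_j}\left(1 - \frac{n}{N}\right).$$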

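Corollary: The estimator $\hat Y_{C_j} = N_j\,\bar y_{C_j}$ is unbiased for $Y_{C_j}$ and has $\mathrm{Var}\,\hat Y_{C_j} = (N_j)^2\,\mathrm{Var}\,\bar y_{C_j}$.

When $N_j$ is known, the estimator $N_j\,\bar y_{C_j}$ for $Y_{C_j}$ uses more information (by using $N_j$ and $n_j$) than $N\bar y'$ and so should be a better unbiased estimator.

Summary of the Unbiased Estimators of $Y_{C_j}$

                        Estimator              Variance
$N_j$ known or not      $N\bar y'$             $N^2\,\dfrac{(S'_{C_j})^2}{n}\left(1 - \dfrac{n}{N}\right)$
$N_j$ known             $N_j\,\bar y_{C_j}$    $\approx N^2\,\dfrac{S^2_{C_j}}{n}\left(1 - \dfrac{n}{N}\right)$

$$(S'_{C_j})^2 = \frac{\sum_{i=1}^{N} (Y'_i - \bar Y')^2}{N - 1}, \qquad S^2_{C_j} = \frac{\sum_{i \in C_j} (Y_i - \bar Y_{C_j})^2}{N - 1}, \qquad S^2_{C_j} \le (S'_{C_j})^2.$$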
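The two estimators in the summary can be compared on the dentist example of this section. The sketch below is illustrative only (the function names are not from the notes): it computes $N\bar y'$, which ignores the second dentist's count, and $N_1\,\bar y_{C_1}$, which uses $N_1 = 200 - 60 = 140$.

```python
def total_ignoring_domain_size(N, n, domain_sum):
    """N * ybar', where ybar' averages y'_i (zero outside the domain) over all n units."""
    return N * domain_sum / n

def total_using_domain_size(N_j, n_j, domain_sum):
    """N_j * ybar_Cj, where ybar_Cj averages y_i over the n_j sampled domain units."""
    return N_j * domain_sum / n_j

# Dentist example: N = 200, n = 20, n_1 = 12, 42 decayed teeth in the sample.
est_unknown_Nj = total_ignoring_domain_size(N=200, n=20, domain_sum=42)
est_known_Nj = total_using_domain_size(N_j=140, n_j=12, domain_sum=42)
```

The first estimate reproduces the 420 obtained in the example; the second gives 490, reflecting that the sample proportion 12/20 understates the known domain proportion 140/200.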

1.5 Stratified Random Sampling

Description

The population of size $N$ is first divided into subpopulations of $N_1, N_2, \ldots, N_L$ units. The subpopulations, or strata, are mutually exclusive and exhaustive. The $N_h$'s are assumed known.

A simple random sample of size $n_h$ is drawn from the $h$th stratum, $h = 1, \ldots, L$.

Total sample size: $\sum_{h=1}^{L} n_h = n$.

Reasons for Stratification:

1) If estimates of known precision are wanted for certain subdivisions of a population, and these subpopulations can be sampled from, it is better to treat each subdivision as a population in its own right, rather than take a simple random sample overall and from it decide who falls into $C_j$ and who does not, where $C_j$ denotes stratum $j$.

2) Administrative convenience: for example, if separate administrative units exist, such as states of Australia, it is easier to sample in each individually, and then put the information together.

3) A natural stratification may exist.

The theory consists of

(i) Properties of estimates for the population mean from a stratified sample, for a given $n_1, n_2, \ldots, n_L$.

(ii) Choice of the $n_h$'s to obtain maximum precision, for a given total sample size $n$, or, more generally, a given total cost.

(iii) For a specified precision, choice of the minimum $n$ and corresponding $n_h$'s.

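Properties of the Estimates

Further notation

Population                                        Sample
$N_h$                                             $n_h$
$Y_{hi}$, $i = 1, \ldots, N_h$                    $y_{hi}$, $i = 1, \ldots, n_h$ ($i$th unit of the random sample from stratum $h$)
$\bar Y_h = \sum_{i=1}^{N_h} Y_{hi}/N_h$          $\bar y_h = \sum_{i=1}^{n_h} y_{hi}/n_h$
$S_h^2$                                           $s_h^2$

Also $W_h = \dfrac{N_h}{N}$ is the stratum weight and $f_h = \dfrac{n_h}{N_h}$ is the sampling fraction for stratum $h$.

Basic quantities of interest: the population mean and/or total.

$\bar Y$ can be written as $\sum_{h=1}^{L} \dfrac{N_h \bar Y_h}{N}$; and as usual $Y = N\bar Y$.

Estimator for $\bar Y$:

$$\hat{\bar Y} = \bar y_{st} = \sum_{h=1}^{L} \frac{N_h\,\bar y_h}{N}, \qquad \hat Y = N\bar y_{st}.$$

Clearly $E\,\bar y_{st} = \bar Y$, since $E\,\bar y_h = \bar Y_h$, and the $\bar y_h$'s are independent, so

$$\mathrm{Var}\,\bar y_{st} = \sum_{h=1}^{L} \left(\frac{N_h}{N}\right)^2 \mathrm{Var}\,\bar y_h = \sum_{h=1}^{L} \left(\frac{N_h}{N}\right)^2 \frac{S_h^2}{n_h}\left(1 - \frac{n_h}{N_h}\right) = \sum_{h=1}^{L} \frac{W_h^2 S_h^2}{n_h} - \frac{1}{N}\sum_{h=1}^{L} W_h S_h^2.$$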

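Proportional Allocation for given total sample size $n$.

$$\frac{N_h}{N} = \frac{n_h}{n}, \quad\text{that is,}\quad n_h = n\,\frac{N_h}{N}.$$

$$\mathrm{Var}_{prop}\,\bar y_{st} = \frac{1}{n}\left(1 - \frac{n}{N}\right)\sum_{h=1}^{L} W_h S_h^2.$$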

Optimal Allocation

Optimization

Let $K$ denote a cost function where

$$K = K_0 + \sum_{h=1}^{L} K_h n_h, \qquad K_0 \ge 0, \quad K_h > 0, \quad h = 1, \ldots, L.$$

Let

$$V = \mathrm{Var}\,\bar y_{st} = \sum_{h=1}^{L} \frac{W_h^2 S_h^2}{n_h} - \frac{1}{N}\sum_{h=1}^{L} W_h S_h^2.$$

Note that if we take $K_0 = 0$, $K_h = 1$, $h \ge 1$, then $K$ reduces to the total sample size $n$.

Theorem 2

For a fixed value of $K$, $V$ is minimized by the choices of $n_h$ where

$$n_h = \text{const} \times \frac{N_h S_h}{\sqrt{K_h}}, \qquad h = 1, \ldots, L.$$

The constant is chosen in accordance with the fixed value of $K$.

For a fixed value of $V$, $K$ is minimized by a choice of $n_h$'s of the above form, where the constant is chosen in accordance with the fixed value of $V$.

Applications of the Theorem:

(1) Best Precision For Specified Total Sample Size

For fixed total sample size $n$, precision is greatest if

$$n_h = \frac{N_h S_h}{\sum_h N_h S_h}\,n = \left(\frac{W_h S_h}{\sum_h W_h S_h}\right) n, \qquad h = 1, \ldots, L.$$

- Optimal Allocation of Sample Size: First determined by Alexander Chuprov (1923), rediscovered by Neyman (1934).

- If total sample size $n$ is fixed and the cost of sampling a unit in each stratum is the same, (1) says: In a given stratum take a larger sample if 1) the stratum is larger; and/or 2) the variability is greater.

- Need to have estimates of $S_h^2$ from a pilot survey or past census to apply the formula.

- Variance under optimal allocation: $\mathrm{Var}_{opt}\,\bar y_{st} = \dfrac{1}{n}\left(\sum_{h=1}^{L} W_h S_h\right)^2 - \dfrac{1}{N}\sum_{h=1}^{L} W_h S_h^2$.
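The allocation rules and the variance formula of this section can be sketched as follows. This is an illustrative sketch with made-up stratum sizes and standard deviations (the function names and the two-stratum data are assumptions, not from the notes), and it allows fractional $n_h$ so that the closed-form variances can be checked exactly; in practice the $n_h$ are rounded.

```python
def stratified_variance(N_h, S_h, n_h):
    """Var(ybar_st) = sum_h W_h^2 S_h^2 / n_h * (1 - n_h / N_h)."""
    N = sum(N_h)
    return sum((Nh / N) ** 2 * Sh ** 2 / nh * (1 - nh / Nh)
               for Nh, Sh, nh in zip(N_h, S_h, n_h))

def proportional_allocation(N_h, n):
    """n_h = n * N_h / N."""
    N = sum(N_h)
    return [n * Nh / N for Nh in N_h]

def optimal_allocation(N_h, S_h, n):
    """n_h = n * N_h S_h / sum_h N_h S_h (equal unit costs)."""
    total = sum(Nh * Sh for Nh, Sh in zip(N_h, S_h))
    return [n * Nh * Sh / total for Nh, Sh in zip(N_h, S_h)]

# Hypothetical population: two strata, the smaller one far more variable.
N_h, S_h, n = [600, 400], [10.0, 40.0], 100
v_prop = stratified_variance(N_h, S_h, proportional_allocation(N_h, n))
v_opt = stratified_variance(N_h, S_h, optimal_allocation(N_h, S_h, n))
```

For these numbers the proportional allocation is (60, 40) with variance 6.3, while the optimal allocation shifts the sample toward the variable stratum and gives 4.14, matching the two closed-form expressions above.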

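(2) Minimal Total Sample Size for Specified Precision

If we require

$$\Pr\{|\bar y_{st} - \bar Y| \le \delta\} \ge 0.95,$$

that is,

$$\Pr\left\{\frac{|\bar y_{st} - \bar Y|}{\sqrt{\mathrm{Var}\,\bar y_{st}}} \le \frac{\delta}{\sqrt{\mathrm{Var}\,\bar y_{st}}}\right\} \ge 0.95,$$

then choose $\dfrac{\delta}{\sqrt{\mathrm{Var}\,\bar y_{st}}} \ge 1.96$, so $\delta^2 \ge (1.96)^2\,\mathrm{Var}\,\bar y_{st}$.

Therefore, take $V = \mathrm{Var}\,\bar y_{st} = \dfrac{\delta^2}{(1.96)^2}$ fixed.

The cost function to be minimized is $\sum_{h=1}^{L} n_h = n$ (total sample size). Set $K_0 = 0$, $K_h = 1$, so according to the theorem $n_h = c\,W_h S_h$, where the constant $c$ is to be determined in accordance with the specified size of $V$.

General formula for $V$:

$$V = \mathrm{Var}\,\bar y_{st} = \sum_{h=1}^{L} \frac{W_h^2 S_h^2}{n_h} \quad\text{(ignoring f.p.c.)}\quad = \sum_{h=1}^{L} \frac{W_h^2 S_h^2}{c\,W_h S_h} = \frac{1}{c}\sum_{h=1}^{L} W_h S_h.$$

Therefore

$$c = \frac{1}{V}\sum_{h=1}^{L} W_h S_h = \frac{1}{V}\sum_{j=1}^{L} W_j S_j,$$

and

$$n_h = \frac{1}{V}\,W_h S_h \left(\sum_{j=1}^{L} W_j S_j\right), \qquad h = 1, 2, \ldots, L.$$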

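(3) Confidence Intervals for specified total sample size

Provided each $n_h$ is moderately large,

$$\frac{\bar y_{st} - \bar Y}{\sqrt{\mathrm{Var}\,\bar y_{st}}} \sim N(0, 1),$$

at least approximately. Using $\bar y_{st}$ with optimal allocation will, for a given total sample size, give the shortest C.I.

The length of a $(1 - \alpha) \times 100\%$ C.I. is

$$2\,z_{(1 - \frac{\alpha}{2})}\sqrt{\mathrm{Var}\,\bar y_{st}},$$

which under optimal allocation equals $2\,z_{(1 - \frac{\alpha}{2})}\sqrt{\mathrm{Var}_{opt}\,\bar y_{st}}$.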

Example (Cochran (1963))

A sample of U.S. colleges was drawn to estimate 1947 enrolments in 196 Teachers Colleges. These colleges were separated into 6 strata. The standard deviation for college enrolments, $s_j$, in a given stratum was estimated by the 1943 value.

Stratum    $N_j$    $s_j$
1          13       325
2          18       190
3          26       189
4          42       82
5          73       86
6          24       190
Total      196

The 1943 total enrolment was 56,472. For the 1947 study the aim is to obtain a Standard Error/Mean ratio of approximately 5%. Determine the sample size required and the optimal allocation of these values to strata.
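A possible numerical approach to this example is sketched below. It rests on two assumptions that are not stated in the notes: the 1943 mean enrolment (56,472/196) is used as the planning value for the 1947 mean, and the f.p.c. version of the sample size is obtained by solving $V = \frac{1}{n}(\sum W_h S_h)^2 - \frac{1}{N}\sum W_h S_h^2$ for $n$. The helper name `required_n` is hypothetical.

```python
import math

N_h = [13, 18, 26, 42, 73, 24]
s_h = [325, 190, 189, 82, 86, 190]

def required_n(N_h, s_h, V, use_fpc=True):
    """Total n under optimal allocation so that Var(ybar_st) equals V."""
    N = sum(N_h)
    sum_WS = sum(Nh * sh for Nh, sh in zip(N_h, s_h)) / N
    sum_WS2 = sum(Nh * sh ** 2 for Nh, sh in zip(N_h, s_h)) / N
    denom = V + (sum_WS2 / N if use_fpc else 0.0)
    return sum_WS ** 2 / denom

mean_1943 = 56472 / sum(N_h)        # planning value for the mean (assumption)
V = (0.05 * mean_1943) ** 2         # target variance: s.e. = 5% of the mean

n_no_fpc = required_n(N_h, s_h, V, use_fpc=False)
n_fpc = required_n(N_h, s_h, V, use_fpc=True)

# Optimal allocation of the rounded-up total (fractional before rounding).
n = math.ceil(n_fpc)
total_Ns = sum(Nh * sh for Nh, sh in zip(N_h, s_h))
allocation = [n * Nh * sh / total_Ns for Nh, sh in zip(N_h, s_h)]
```

Because the sampling fraction is large here, the f.p.c. makes a substantial difference to the required total. Any stratum whose allocated $n_h$ exceeded $N_h$ would have to be capped and the remainder reallocated; that step is not shown.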

2 Ratio and Regression Estimators.

2.1 Case Study G.P.s

A simple random sample of GPs in NSW is taken in which each fills out, for each of 100 successive patient encounters, an encounter recording form. Some of the things which need to be estimated, for any specific health problem (for example, hypertension), are:

1) the average number of occurrences of this health problem for 100 encounters;

2) the average number of occurrences of this problem per 100 health problems.

(Each encounter may involve up to 4 health problems.)

Imagine that, for the population of $N$ GPs in NSW, two numbers are attached to the $i$th GP:

$Y_i$: the number of occurrences of the health problem hypertension for 100 encounters;

$X_i$: the number of problems treated in 100 encounters for that GP.

1) amounts to estimating $\bar Y$;

2) needs estimation of $100 \times \left(\sum Y_i / \sum X_i\right)$, i.e. estimation of $R = Y/X = \bar Y/\bar X$.

2.2 Two Characteristics/Unit. Simple Random Sampling.

Theorem 3

If $X_i$ and $Y_i$ are a pair of numerical characteristics defined on every unit of the population, and $\bar y$ and $\bar x$ are the corresponding means from a simple random sample without replacement of size $n$, then

$$\mathrm{Cov}(\bar x, \bar y) = \frac{1}{n}\left\{\frac{\sum_{i=1}^{N} (Y_i - \bar Y)(X_i - \bar X)}{N - 1}\right\}\left(1 - \frac{n}{N}\right) \qquad (2.1)$$

and

$$E\left\{\frac{\sum_{i=1}^{n} (y_i - \bar y)(x_i - \bar x)}{n - 1}\right\} = \frac{\sum_{i=1}^{N} (Y_i - \bar Y)(X_i - \bar X)}{N - 1}. \qquad (2.2)$$

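Proof. Consider the unit characteristic defined as $U_i = X_i + Y_i$. The corresponding sample values are $u_i = x_i + y_i$ and clearly

$$\mathrm{Var}\,\bar u = \frac{S_U^2}{n}\left(1 - \frac{n}{N}\right) = \frac{1}{n}\left\{\frac{\sum_{i=1}^{N} (U_i - \bar U)^2}{N - 1}\right\}\left(1 - \frac{n}{N}\right).$$

Now

$$\sum_{i=1}^{N} (U_i - \bar U)^2 = \sum_{i=1}^{N} (X_i - \bar X + Y_i - \bar Y)^2 = \sum_{i=1}^{N} (X_i - \bar X)^2 + \sum_{i=1}^{N} (Y_i - \bar Y)^2 + 2\sum_{i=1}^{N} (X_i - \bar X)(Y_i - \bar Y).$$

Thus

$$\mathrm{Var}(\bar u) = \mathrm{Var}(\bar x + \bar y) = \mathrm{Var}(\bar x) + \mathrm{Var}(\bar y) + \frac{2}{n}\left\{\frac{\sum_{i=1}^{N} (X_i - \bar X)(Y_i - \bar Y)}{N - 1}\right\}\left(1 - \frac{n}{N}\right).$$

But

$$\mathrm{Var}\,\bar u = \mathrm{Var}(\bar x + \bar y) = \mathrm{Var}(\bar x) + \mathrm{Var}(\bar y) + 2\,\mathrm{Cov}(\bar x, \bar y),$$

and comparing the two expressions gives (2.1).

(2.2) is proved in a similar way, beginning with

$$E\left\{\frac{\sum_{i=1}^{n} (u_i - \bar u)^2}{n - 1}\right\} = \frac{\sum_{i=1}^{N} (U_i - \bar U)^2}{N - 1}.$$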

Frequently, in this two-variables-per-unit situation, we are interested in measuring the ratio of the two variables.

Examples of this kind occur frequently when the sampling unit comprises a group or cluster of individuals, and our interest is in the population mean per individual. For example, the survey might be a household survey and we are interested in the average expenditure on cosmetics per adult female in the population; or the average number of hours per week spent watching television per child aged 10-15; or the average number of suits per adult male.

In order to estimate the last of these items, we would record for the $i$th household ($i = 1, \ldots, n$), i.e. the $i$th sample unit, the number of adult males who live there, $x_i$, and the total number of suits they possess, $y_i$. The population parameter to be estimated is clearly

$$R = \frac{\text{total no. of suits}}{\text{total no. of adult males}} = \frac{\sum_{i=1}^{N} Y_i}{\sum_{i=1}^{N} X_i}.$$

The corresponding sample estimate is

$$\hat R = r = \frac{\sum_{i=1}^{n} y_i}{\sum_{i=1}^{n} x_i} = \frac{\bar y}{\bar x}.$$

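Theorem 4

(a) $E(r) - R \approx 0$ for large sample size.

(b) For large samples,

$$\mathrm{Var}\,r \approx \frac{1}{n\bar X^2}\left\{\frac{\sum_{i=1}^{N} (Y_i - RX_i)^2}{N - 1}\right\}\left(1 - \frac{n}{N}\right).$$

(Thus $\sqrt{\mathrm{Var}\,r}$ is the standard error of the estimator $r$ of $R$.)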

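Proof.

a) Recall $E\,\bar y = \bar Y$, $E\,\bar x = \bar X$ and $\mathrm{Var}(\bar x) = O(n^{-1})$. Thus

$$E\,r = E\left(\frac{\bar y}{\bar x}\right) \approx \frac{E\,\bar y}{\bar X} = R.$$

b) Note

$$r - R = \frac{\bar y}{\bar x} - R \approx \frac{\bar y - R\bar x}{\bar X}.$$

Thus, for large sample sizes,

$$\mathrm{Var}\,r \approx E\{(r - R)^2\} \approx \frac{1}{\bar X^2}\,E\{(\bar y - R\bar x)^2\}.$$

Now $\bar y - R\bar x$ is the sample mean $\bar d$ of the sample r.v.s $d_i = y_i - Rx_i$, $i = 1, \ldots, n$, from the population $D_i = Y_i - RX_i$, $i = 1, \ldots, N$.

Therefore $\mathrm{Var}(r) \approx \dfrac{E(\bar d^{\,2})}{\bar X^2} = \mathrm{Var}(\bar d)/\bar X^2$, since $E\,\bar d = \bar D = \dfrac{\sum_{i=1}^{N} (Y_i - RX_i)}{N} = 0$.

From Section 1.2 on simple random sampling,

$$\mathrm{Var}\,\bar d = \frac{S_D^2}{n}\left(1 - \frac{n}{N}\right), \quad\text{where}\quad S_D^2 = \frac{\sum_{i=1}^{N} (D_i - \bar D)^2}{N - 1} = \frac{\sum_{i=1}^{N} (Y_i - RX_i)^2}{N - 1},$$

which completes the proof.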

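The estimate of $\mathrm{Var}(r)$ generally used is the natural one:

$$\widehat{\mathrm{Var}}(r) \approx \frac{1}{n\bar x^2}\left\{\frac{\sum_{i=1}^{n} (y_i - rx_i)^2}{n - 1}\right\}\left(1 - \frac{n}{N}\right),$$

which can be shown to be almost unbiased if $n$ is large.

For hand computation it is quickest to use

$$\frac{\sum_{i=1}^{n} (y_i - rx_i)^2}{n - 1} = \frac{\sum_{i=1}^{n} (y_i - \bar y)^2}{n - 1} - 2r\,\frac{\sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y)}{n - 1} + r^2\,\frac{\sum_{i=1}^{n} (x_i - \bar x)^2}{n - 1} = s_y^2 - 2r\,s_{xy} + r^2 s_x^2.$$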
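The ratio estimate, its estimated standard error, and the hand-computation identity can be checked with a short sketch. The function names and the four-point data set are illustrative assumptions; the f.p.c. is dropped when `N` is not supplied.

```python
import math
from statistics import mean, variance

def ratio_estimate(x, y, N=None):
    """Return r = ybar / xbar and its estimated standard error."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    r = ybar / xbar
    s2_d = sum((yi - r * xi) ** 2 for xi, yi in zip(x, y)) / (n - 1)
    fpc = 1 - n / N if N is not None else 1.0
    return r, math.sqrt(s2_d / (n * xbar ** 2) * fpc)

def residual_variance_shortcut(x, y):
    """s_y^2 - 2 r s_xy + r^2 s_x^2, the hand-computation form."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    r = ybar / xbar
    s_xy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / (n - 1)
    return variance(y) - 2 * r * s_xy + r ** 2 * variance(x)

# Hypothetical sample of (x_i, y_i) pairs.
x, y = [1, 2, 3, 4], [2, 3, 7, 8]
r, se_r = ratio_estimate(x, y)
shortcut = residual_variance_shortcut(x, y)
direct = sum((yi - r * xi) ** 2 for xi, yi in zip(x, y)) / (len(x) - 1)
```

The two forms agree because $\bar y - r\bar x = 0$, so the residuals $y_i - rx_i$ can be written as $(y_i - \bar y) - r(x_i - \bar x)$.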

2.3 Ratio Estimate of the Population Total

The above has focused on the estimation of $R = \dfrac{Y}{X} = \dfrac{\bar Y}{\bar X}$, and indeed this is, as in the Case Study of G.P.s (Section 2.1), the quantity of primary interest.

On the other hand, we see from the above that an estimator of the population total $Y$ which takes into account the $x$ values is

$$\hat Y_R = \frac{\sum_{i=1}^{n} y_i}{\sum_{i=1}^{n} x_i}\,X = \frac{\bar y}{\bar x}\,X = rX.$$

This is known as the ratio estimate of the population total.

Correspondingly

$$\hat{\bar Y}_R = \frac{\bar y}{\bar x}\,\bar X = r\bar X.$$

In this estimate we are using not only information on the observed $Y_i$ values, $y_i$, $i = 1, \ldots, n$, but also on the corresponding covariates $x_i$, $i = 1, \ldots, n$ (as well as the, hopefully known, $X$ or $\bar X$). In general we expect this extra information to increase the precision of $\hat Y_R$ over the usual $\hat Y = N\bar y$.

From Theorem 4

(a) $E\,\hat{\bar Y}_R = \bar X\,E\,r \approx \bar X R = \bar Y$. (Similarly for $E\,\hat Y_R$.)

(b) $\mathrm{Var}\,\hat{\bar Y}_R \approx \dfrac{1}{n}\left\{\dfrac{\sum_{i=1}^{N} (Y_i - RX_i)^2}{N - 1}\right\}\left(1 - \dfrac{n}{N}\right)$.

The variance is estimated by

$$\frac{1}{n}\left\{\frac{\sum_{i=1}^{n} (y_i - rx_i)^2}{n - 1}\right\}\left(1 - \frac{n}{N}\right).$$

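Bias.

The estimator $r$ for $R$ is generally biased, so $\hat Y_R$ and $\hat{\bar Y}_R$ are also biased for $Y$ and $\bar Y$. The question of bias is quite important since it influences to what extent variance determines accuracy. Recall that if $\hat\theta$ is an estimator of $\theta$, then the bias is defined by $E\hat\theta - \theta$. The mean-squared error is

$$E((\hat\theta - \theta)^2) = E((\hat\theta - E\hat\theta + E\hat\theta - \theta)^2)$$

$$= E\big((\hat\theta - E\hat\theta)^2 + (E\hat\theta - \theta)^2 + 2(\hat\theta - E\hat\theta)(E\hat\theta - \theta)\big)$$

$$= \mathrm{Var}\,\hat\theta + \text{bias}^2,$$

the cross term vanishing because $E(\hat\theta - E\hat\theta) = 0$.

Bias measurement for $\hat R = r$.

Note

$$\mathrm{Cov}(r, \bar x) = E(r\bar x) - E\,r\,E\,\bar x = E\left(\frac{\bar y}{\bar x}\,\bar x\right) - E\,r\,E\,\bar x,$$

so

$$E\,r = \frac{E\,\bar y}{E\,\bar x} - \frac{\mathrm{Cov}(r, \bar x)}{E\,\bar x} = R - \frac{\rho_{r,\bar x}\sqrt{\mathrm{Var}\,r\;\mathrm{Var}\,\bar x}}{E\,\bar x}.$$

Therefore $R - E\,r = \dfrac{\rho_{r,\bar x}\sqrt{\mathrm{Var}\,r\;\mathrm{Var}\,\bar x}}{E\,\bar x}$, and so

$$\frac{|\text{bias in } r|}{\sigma_r} = \frac{|R - E\,r|}{\sqrt{\mathrm{Var}(r)}} \le \frac{\sqrt{\mathrm{Var}\,\bar x}}{\bar X} = \text{coefficient of variation of } \bar x,$$

since $|\rho_{r,\bar x}| \le 1$. The ratio is small if $n$ is large, since $\mathrm{Var}(\bar x) = \dfrac{S_X^2}{n}\left(1 - \dfrac{n}{N}\right)$.

Thus if the coefficient of variation of $\bar x$ is small, the bias of $\hat R$ is negligible relative to the standard error $\sigma_{\hat R}$ of $\hat R$. But if $n$ is small, the bias can be large.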

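2.4 The Hartley-Ross Estimator of $R$, $Y$ and $\bar Y$ when $\bar X$ is known

We saw that $r$ as an estimate of $R$ was biased, and that we could estimate $\bar Y$ by $r\bar X$ if we knew $\bar X$ (Section 2.3), though this estimator would be biased. The following leads to an unbiased estimator of $R$.

Theorem 5

Let $V = h(X, Y)$ for some fixed function of two variables $h(\cdot, \cdot)$. Define $V_i = h(X_i, Y_i)$ and $v_i = h(x_i, y_i)$. Then

$$E\left\{\bar v + \frac{N - 1}{N\bar X(n - 1)}\sum_{i=1}^{n} v_i(x_i - \bar x)\right\} = \frac{\sum_{i=1}^{N} V_i X_i}{N\bar X}. \qquad (2.3)$$

Proof. The LHS is

$$E\,\bar v + \frac{N - 1}{N\bar X}\,E\left\{\frac{\sum_{i=1}^{n} v_i(x_i - \bar x)}{n - 1}\right\} = \bar V + \frac{N - 1}{N\bar X}\cdot\frac{\sum_{i=1}^{N} V_i(X_i - \bar X)}{N - 1},$$

which simplifies to the RHS of (2.3). The last step is from (2.2), using $V_i$ in place of $Y_i$, and $v_i$ in place of $y_i$, and noting that $\sum v_i(x_i - \bar x) = \sum (v_i - \bar v)(x_i - \bar x)$.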

Now we return to the problem of estimating $R$ from sample readings $(x_i, y_i)$, $i = 1, \ldots, n$. Assume $X_i > 0$, $i = 1, \ldots, N$, and take the function $h(s, t) = t/s$ in Theorem 5. Then $v_i = y_i/x_i$, $i = 1, \ldots, n$, and $V_i = Y_i/X_i$, $i = 1, 2, \ldots, N$, so from (2.3)

$$E\left\{\bar r + \frac{(N - 1)}{N\bar X(n - 1)}\,n(\bar y - \bar r\bar x)\right\} = \frac{\bar Y}{\bar X} = R,$$

where $\bar r = \dfrac{1}{n}\sum_{i=1}^{n} \dfrac{y_i}{x_i}$. Thus an unbiased estimator of $R$ is

$$\hat R = \bar r + \frac{(N - 1)}{N\bar X(n - 1)}\,n(\bar y - \bar r\bar x),$$

for which we need to know $\bar X$ (or $X = N\bar X$). The Hartley-Ross estimator for the population total is

$$\hat Y = X\bar r + \frac{N - 1}{n - 1}\,n(\bar y - \bar r\bar x).$$

Finally, if we need to know $\bar X$ for this estimator, why don't we just use $\hat R_1 = \bar y/\bar X$? Recall $E\left(\dfrac{\bar y}{\bar X}\right) = \dfrac{E\,\bar y}{\bar X} = \dfrac{\bar Y}{\bar X} = R$, so $\hat R_1$ is unbiased for $R$. However it does not use as much information, and for small samples we might expect the Hartley-Ross estimator to be better. There is no general result on the comparison of the variances of $\bar y/\bar x$, $\bar y/\bar X$, and $\bar r + \dfrac{(N - 1)n}{N\bar X(n - 1)}(\bar y - \bar r\bar x)$ for all sample sizes. (See Cochran (2nd Ed) Theorem 6.3 6.15.)
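The exact unbiasedness of the Hartley-Ross estimator can be checked by brute force on a tiny population: enumerate every simple random sample without replacement and average the estimator. The population values below are made up for illustration, and the function name `hartley_ross` is an assumption.

```python
from itertools import combinations
from statistics import mean

def hartley_ross(xs, ys, N, Xbar):
    """rbar + (N - 1) n (ybar - rbar * xbar) / (N * Xbar * (n - 1))."""
    n = len(xs)
    rbar = mean(y / x for x, y in zip(xs, ys))   # mean of the unit ratios
    xbar, ybar = mean(xs), mean(ys)
    return rbar + (N - 1) * n * (ybar - rbar * xbar) / (N * Xbar * (n - 1))

# Hypothetical population of N = 5 units with X_i > 0.
X = [1.0, 2.0, 4.0, 5.0, 8.0]
Y = [3.0, 5.0, 6.0, 11.0, 13.0]
N, n = len(X), 3
Xbar = mean(X)
R = sum(Y) / sum(X)

# All C(5, 3) = 10 equally likely samples.
samples = list(combinations(range(N), n))
hr_mean = mean(hartley_ross([X[i] for i in s], [Y[i] for i in s], N, Xbar)
               for s in samples)
classical_mean = mean(sum(Y[i] for i in s) / sum(X[i] for i in s)
                      for s in samples)
```

Here `hr_mean` equals `R` up to floating-point rounding, as Theorem 5 predicts; `classical_mean`, the average of the ordinary ratio estimate $r = \bar y/\bar x$ over the same samples, can be printed for comparison to see its bias on this population.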

Example

In a survey of family size ($x_1$), weekly income ($x_2$) and weekly expenditure on food ($y$), we want to estimate the average weekly expenditure on food per family in the most efficient way. A simple random sample of 27 families yields the following data:

$$\sum_i x_{1i} = 109, \quad \sum_i x_{2i} = 16277, \quad \sum_i y_i = 2831, \quad \hat\rho_{x_1,y} = 0.925, \quad \hat\rho_{x_2,y} = 0.573.$$

The sample covariance matrix for $y$, $x_1$ and $x_2$ is

$$\begin{pmatrix} 547.8234 & 26.5057 & 1796.5541 \\ 26.5057 & 1.4986 & 80.1595 \\ 1796.5541 & 80.1595 & 17967.0541 \end{pmatrix}.$$

From the census data $\bar X_1 = 3.91$ and $\bar X_2 = 542$.

(a) Estimate the standard errors of the ratio estimators for $\bar Y$ using $x_1$ and using $x_2$. Compare the standard errors with the s.e. for the simple estimate ignoring the covariates. Which estimator has the smallest estimated s.e.?

(b) Calculate the best available estimate of the average weekly expenditure on food per family and give an approximate 95% confidence interval for this average.
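Part (a) can be worked from the summary statistics alone, using $s_y^2 - 2r\,s_{xy} + r^2 s_x^2$ for the residual variance. The sketch below is one possible approach, not the notes' official solution. It assumes the f.p.c. can be ignored, since the population size $N$ is not given in the example, and the helper name `ratio_mean_and_se` is hypothetical.

```python
import math

n = 27
sum_x1, sum_x2, sum_y = 109, 16277, 2831
s2_y, s2_x1, s2_x2 = 547.8234, 1.4986, 17967.0541   # diagonal of the covariance matrix
s_yx1, s_yx2 = 26.5057, 1796.5541                    # covariances of y with x1 and x2
Xbar1, Xbar2 = 3.91, 542                             # census means

def ratio_mean_and_se(sum_x, s2_x, s_xy, Xbar):
    """Ratio estimate r * Xbar of the mean and its estimated s.e. (no f.p.c.)."""
    r = sum_y / sum_x
    s2_d = s2_y - 2 * r * s_xy + r ** 2 * s2_x
    return r * Xbar, math.sqrt(s2_d / n)

se_simple = math.sqrt(s2_y / n)                      # s.e. of ybar, covariates ignored
est1, se1 = ratio_mean_and_se(sum_x1, s2_x1, s_yx1, Xbar1)
est2, se2 = ratio_mean_and_se(sum_x2, s2_x2, s_yx2, Xbar2)

# Approximate 95% interval based on the estimator with the smallest s.e.
ci_low, ci_high = est1 - 1.96 * se1, est1 + 1.96 * se1
```

Under these assumptions the ratio estimator based on family size ($x_1$) has the smallest estimated standard error, which is consistent with its much higher correlation with $y$.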

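2.5 Ratio Estimation for a Subdomain $C_j$ by Simple Random Sampling over a Population.

For some fixed $j$ we want to estimate

$$R_j = \sum_{i \in C_j} Y_i \Big/ \sum_{i \in C_j} X_i = \sum_{i=1}^{N} Y'_i \Big/ \sum_{i=1}^{N} X'_i = R',$$

if we put

$$(Y'_i, X'_i) = \begin{cases} (Y_i, X_i) & \text{if } i \in C_j \\ (0, 0) & \text{if } i \notin C_j. \end{cases}$$

Hence the natural estimator is (from Section 2.2)

$$r' = \sum_{i=1}^{n} y'_i \Big/ \sum_{i=1}^{n} x'_i = \sum_{i \in C_j} y_i \Big/ \sum_{i \in C_j} x_i = r_j,$$

where

$$(x'_i, y'_i) = \begin{cases} (x_i, y_i) & \text{if } i \in C_j \\ (0, 0) & \text{if } i \notin C_j \end{cases}$$

and

$$\mathrm{Var}\,r_j = \mathrm{Var}\,r' \approx \frac{1}{(\bar X')^2}\,\frac{(S'_D)^2}{n}\left(1 - \frac{n}{N}\right),$$

where

$\bar X' = \dfrac{X'}{N}$ can be estimated by $\bar x' \equiv \dfrac{\sum_{i=1}^{n} x'_i}{n} = \dfrac{\sum_{i \in C_j} x_i}{n}$,

$(S'_D)^2 = \dfrac{\sum_{i=1}^{N} (Y'_i - R'X'_i)^2}{N - 1}$ can be estimated by $\dfrac{\sum_{i=1}^{n} (y'_i - r'x'_i)^2}{n - 1} = \dfrac{\sum_{i \in C_j} (y_i - r_j x_i)^2}{n - 1}$.

Note: $n_j$ does not come into any of these calculations.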

2.6 Ratio Estimation for a Population by Stratified Sampling.

Recall that with stratified sampling we have a total of $L$ subdomains (strata) where we assume all $N_h$'s known, and a simple random sample is taken in each subdomain (stratum).

Suppose we want ratio estimates such as the one for NSW doctors (Section 2.1) for all of Australia (6 strata: each state is a stratum). We know from Section 2.2 what to do within each state, for specified sizes $n_h$, $h = 1, \ldots, L$ of the random sample in each state. We can estimate $R_h$ by

$$r_h = \bar y_h/\bar x_h$$

and we have its variance from Theorem 4 of Section 2.2. But how do we estimate from these $r_h$'s the ratio

$$R = \frac{Y}{X} = \sum_{h=1}^{L} Y_h \Big/ \sum_{h=1}^{L} X_h$$

for the whole population?

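2.6.1 The Separate Ratio Estimate

Suppose the stratum totals $X_h$, $h = 1, \ldots, L$ are known, so $X = \sum_{h=1}^{L} X_h$ is known also. Then use a weighted average of the $r_h$'s (assuming all $X_h$'s positive):

$$\hat R_s = \sum_h r_h\,\frac{X_h}{X}.$$

Since $E\,r_h \approx R_h = \dfrac{Y_h}{X_h}$,

$$E\,\hat R_s \approx \sum_h Y_h / X = R \quad\text{and}\quad \mathrm{Var}(\hat R_s) = \sum_h \left(\frac{X_h}{X}\right)^2 \mathrm{Var}(r_h).$$

For large stratum sample sizes, $r_h$ will be approximately unbiased for $R_h$ and $\widehat{\mathrm{Var}}(r_h)$ will approximate $\mathrm{Var}(r_h)$ reasonably well.

For moderate and small samples bias is important, and we should consider it here. We see from Section 2.3 that in a single stratum

$$\frac{|\text{bias } r_h|}{\sigma_{r_h}} \le \frac{\sigma_{\bar x_h}}{\bar X_h} = \text{c.v. of } \bar x_h \quad (\text{c.v.} = \text{coefficient of variation}).$$

Consider the bias of $\hat R_s$:

$$E(\hat R_s - R) = E\left\{\left(\sum_{h=1}^{L} \frac{X_h}{X}\,r_h\right) - R\right\} = E\sum_{h=1}^{L} \frac{X_h}{X}(r_h - R_h) = \sum_{h=1}^{L} \frac{X_h}{X}\,E(r_h - R_h) = \sum_{h=1}^{L} \frac{X_h}{X}(\text{bias } r_h).$$

Therefore, assuming all $X_h$'s positive as usual,

$$|\text{bias } \hat R_s| = |E(\hat R_s - R)| \le \sum_{h=1}^{L} \frac{X_h}{X}\,|\text{bias } r_h|.$$

This argument eventually leads to

$$\frac{|E(\hat R_s - R)|}{\text{s.e. } \hat R_s} \le \sqrt{L}\left\{\frac{\max_h \sigma_{r_h}}{\min_h \sigma_{r_h}}\right\}\max_h \frac{\sigma_{\bar x_h}}{\bar X_h}.$$

Therefore the ratio on the LHS can be $\sqrt{L}$ times as large as the $\sigma_{\bar x_h}/\bar X_h$ bound on individual relative biases, so even if these are individually small, the overall bias can be large.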

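2.6.2 The Combined Ratio Estimate

Defined by:

$$\hat R_c = \frac{\sum_h N_h\,\bar y_h}{\sum_h N_h\,\bar x_h} = \frac{\bar y_{st}}{\bar x_{st}} = \frac{\hat{\bar Y}}{\hat{\bar X}} = \frac{\hat Y}{\hat X}.$$

This estimate, in contrast to $\hat R_s$, does not require a knowledge of the individual $X_h$'s.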

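Theorem 6

$$\mathrm{Var}\,\hat R_c \approx \frac{1}{\bar X^2}\sum_{h=1}^{L} \left(\frac{N_h}{N}\right)^2 \frac{1}{n_h}\left\{\frac{\sum_{i=1}^{N_h} \big(Y_{hi} - \bar Y_h - R(X_{hi} - \bar X_h)\big)^2}{N_h - 1}\right\}\left(1 - \frac{n_h}{N_h}\right).$$

Proof. First

$$\hat R_c - R = \frac{\bar y_{st}}{\bar x_{st}} - R = \frac{1}{\bar x_{st}}(\bar y_{st} - R\bar x_{st}) = \frac{1}{\bar x_{st}}\sum_{h=1}^{L} \frac{N_h(\bar y_h - R\bar x_h)}{N} = \frac{1}{\bar x_{st}}\left(\sum_{h=1}^{L} \frac{N_h\,\bar d_h}{N}\right),$$

where $d_{hi} = y_{hi} - Rx_{hi}$, $i = 1, \ldots, n_h$ and $\bar d_h = \dfrac{\sum_{i=1}^{n_h} d_{hi}}{n_h}$. Hence

$$\hat R_c - R = \frac{\bar d_{st}}{\bar x_{st}} \approx \frac{\bar d_{st}}{\bar X},$$

where on each individual in the $h$th stratum we consider a measurement of the form $D_{hi} = Y_{hi} - RX_{hi}$. Therefore, recalling the ideas used in Theorem 4:

$$\mathrm{Var}\,\hat R_c \approx \frac{1}{\bar X^2}\,\mathrm{Var}\,\bar d_{st} = \frac{1}{\bar X^2}\sum_{h=1}^{L} \left(\frac{N_h}{N}\right)^2 \frac{S_h^2(D)}{n_h}\left(1 - \frac{n_h}{N_h}\right),$$

where $S_h^2(D) = \dfrac{\sum_{i=1}^{N_h} (D_{hi} - \bar D_h)^2}{N_h - 1}$, which in general gives the answer. Note that here typically $\bar D_h \ne 0$.

If we write

$$S_h^2(D) = \frac{\sum \{Y_{hi} - \bar Y_h - R(X_{hi} - \bar X_h)\}^2}{N_h - 1} = S^2_{Y_h} + R^2 S^2_{X_h} - 2R\,S_{X_h Y_h},$$

this can be estimated by

$$\frac{\sum_{i=1}^{n_h} (d_{hi} - \bar d_h)^2}{n_h - 1} = \frac{\sum_{i=1}^{n_h} \big(y_{hi} - \bar y_h - \hat R_c(x_{hi} - \bar x_h)\big)^2}{n_h - 1} = s^2_{y_h} + \hat R_c^2\,s^2_{x_h} - 2\hat R_c\,s_{x_h y_h}.$$

There is less risk of bias in $\hat R_c$ than in $\hat R_s$. We can show that

$$\frac{|E\,\hat R_c - R|}{\sqrt{\mathrm{Var}\,\hat R_c}} \le \max_h \left\{\frac{\sigma_{\bar x_h}}{\bar X_h}\right\},$$

in contrast to the final expression in Section 2.6.1.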

2.7 The Regression Estimator

In this section, we shall only be concerned with the situation where we have a simple random sample $(x_i, y_i)$, $i = 1, \ldots, n$ from the population and we wish to estimate the population total $Y$ or the population mean $\bar Y$.

If these sample values are plotted in the $(x, y)$ plane it may become apparent (or, indeed, may be suspected from other considerations) that the relation between the $X$ and $Y$ measurements is approximately linear.

If the line appears to pass through the origin, and $X$ is known, it is sensible to use the ratio estimate (Section 2.3) for $Y$, or $\bar Y$, since the estimates

$$\hat Y_R = \frac{\bar y}{\bar x}\,X \quad\text{and}\quad \hat{\bar Y}_R = \frac{\bar y}{\bar x}\,\bar X$$

reflect this linear relation through the origin, as well as making use of the knowledge of $X$.

On the other hand, if the relation is roughly a line, but the line does not pass through the origin, perhaps we can do better. We know that presupposing a relation

$$y_i = \alpha + \beta x_i + \epsilon_i, \qquad i = 1, \ldots, n$$

for the obtained sample $(x_i, y_i)$, $i = 1, \ldots, n$ leads by least squares to

$$\hat\alpha = \bar y - \hat\beta\bar x, \qquad \hat\beta = \sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y) \Big/ \sum_{i=1}^{n} (x_i - \bar x)^2 = s_{xy}/s_x^2,$$

so the fitted values $\hat y_i$ are given by

$$\hat y_i = \bar y + \hat\beta(x_i - \bar x), \qquad i = 1, \ldots, n,$$

and for any specified $X_i$ value, $i = 1, \ldots, N$, the estimated $Y_i$ value is

$$\hat Y_i = \bar y + \hat\beta(X_i - \bar x).$$

It follows that the population total $Y$ is naturally estimated by

$$\hat Y_{LR} = \sum_{i=1}^{N} \hat Y_i = N\bar y + \hat\beta(X - N\bar x) = N\bar y + \hat\beta N(\bar X - \bar x)$$

and correspondingly

$$\hat{\bar Y}_{LR} = \bar y + \hat\beta(\bar X - \bar x).$$

This, of course, also makes use of the known total $X$ (or $\bar X$).

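Theorem 7

(a) Bias $= E\,\hat Y_{LR} - Y = -N\,\mathrm{Cov}(\hat\beta, \bar x)$.

(b) $\mathrm{Var}(\hat Y_{LR}) \approx \dfrac{N^2}{n}\,S_Y^2\left(1 - \dfrac{n}{N}\right)(1 - \rho^2_{X,Y}) = \mathrm{Var}(\hat Y)(1 - \rho^2_{X,Y})$,

where

$$\rho_{X,Y} = \sum_{i=1}^{N} (X_i - \bar X)(Y_i - \bar Y) \Big/ \sqrt{\sum_{i=1}^{N} (X_i - \bar X)^2 \sum_{i=1}^{N} (Y_i - \bar Y)^2}.$$

This shows that in general LR estimation is less variable than the estimate $\hat Y = N\bar y$, which does not use the covariate observations.

Proof.

(a) $E\,\hat Y_{LR} = N\,E\,\bar y + N\,E\,\hat\beta(\bar X - \bar x)$, i.e.

$$E\,\hat Y_{LR} - Y = -N\,E\{\hat\beta(\bar x - E\,\bar x)\} = -N\,\mathrm{Cov}\{\hat\beta, \bar x\}.$$

(b) For large $n$ and $N$,

$$\hat\beta = \frac{\sum_{i=1}^{n} (x_i - \bar x)(y_i - \bar y)/(n - 1)}{\sum_{i=1}^{n} (x_i - \bar x)^2/(n - 1)} = \frac{s_{xy}}{s_x^2} \approx \frac{S_{XY}}{S_X^2} = \frac{S_Y}{S_X}\,\rho_{X,Y} = \beta, \text{ say.}$$

Now $\mathrm{Var}\,\hat Y_{LR} \approx N^2\big(\mathrm{Var}\,\bar y + \beta^2\,\mathrm{Var}\,\bar x - 2\beta\,\mathrm{Cov}(\bar y, \bar x)\big)$, approximating $\hat\beta$ by $\beta$.

From Theorem 3, equation (2.1):

$$\mathrm{Var}\,\hat Y_{LR} \approx N^2\left\{\mathrm{Var}\,\bar y + \beta^2\,\mathrm{Var}\,\bar x - 2\beta\,\frac{1}{n}\,\frac{\sum_{i=1}^{N} (Y_i - \bar Y)(X_i - \bar X)}{N - 1}\left(1 - \frac{n}{N}\right)\right\}$$

$$= N^2\left\{\frac{S_Y^2}{n}\left(1 - \frac{n}{N}\right) + \frac{S_Y^2}{S_X^2}\,\rho^2_{X,Y}\,\frac{S_X^2}{n}\left(1 - \frac{n}{N}\right) - 2\,\frac{S_Y}{S_X}\,\rho_{X,Y}\,\frac{S_{XY}}{n}\left(1 - \frac{n}{N}\right)\right\}$$

$$= N^2\,\frac{S_Y^2}{n}\left(1 - \frac{n}{N}\right)\left(1 - \rho^2_{X,Y}\right),$$

using $S_{XY} = \rho_{X,Y}\,S_X S_Y$ in the last step.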
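The regression estimate of the mean, together with a plug-in standard error based on Theorem 7(b), can be sketched as follows. The plug-in step (replacing $S_Y^2$ and $\rho_{X,Y}$ by their sample versions) is an assumption of this sketch rather than something stated in the notes, and the function name and data are illustrative.

```python
import math
from statistics import mean

def regression_estimate(x, y, Xbar, N=None):
    """Return (ybar + beta_hat * (Xbar - xbar), beta_hat, plug-in s.e.)."""
    n = len(x)
    xbar, ybar = mean(x), mean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    syy = sum((yi - ybar) ** 2 for yi in y)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    beta_hat = sxy / sxx
    est = ybar + beta_hat * (Xbar - xbar)
    rho2 = sxy ** 2 / (sxx * syy)               # squared sample correlation
    fpc = 1 - n / N if N is not None else 1.0
    # Sample analogue of Var(ybar) * (1 - rho^2); guard against rounding below zero.
    var_est = max(0.0, syy / (n - 1) / n * fpc * (1 - rho2))
    return est, beta_hat, math.sqrt(var_est)

# Hypothetical sample lying exactly on the line y = 1 + 2x, with known Xbar = 3.
est, beta_hat, se = regression_estimate([1, 2, 3, 4], [3, 5, 7, 9], Xbar=3)
```

For data lying exactly on a line the estimate reproduces $\alpha + \beta\bar X$ and the plug-in standard error is zero, which is the limiting case $\rho_{X,Y}^2 = 1$ of Theorem 7(b).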

3 Systematic and Cluster Sampling.

3.1 Systematic Sampling

This is a quick and easy method for selecting a sample when the sampling frame is sequenced.

Randomly select one of the rst k units, then select every kth unit thereafter.

The sample size is determined by N and the choice of k: n = [

N

k

] or [

N

k

] + 1. The k

possible samples will only be of equal size if N is a multiple of k. If N = 23 and k = 5 then

the possible samples are units numbered:

S1 S2 S3 S4 S5

1 2 3 4 5

6 7 8 9 10

11 12 13 14 15

16 17 18 19 20

21 22 23

For simplicity we will restrict attention to the case $N = nk$. (If $N$ is large then the following results will be approximately true.) Under systematic sampling only $k$ of the $\binom{N}{n}$ possible samples under SRS are considered. We expect systematic sampling to be beneficial if the $k$ samples are representative of the population. This might be achieved by selecting the sequencing variable carefully. For example, suppose we have a list of businesses giving location, industry, and employment size. We want to estimate the amount of overtime wages paid. We expect the amount of overtime wages to be correlated with the employment size, so we sort the list according to employment size and then draw a systematic sample.
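The selection rule described above can be sketched as follows. The function name `systematic_sample` and its `start` argument are hypothetical conveniences for illustration; the frame is assumed to be a Python list already sorted by the sequencing variable.

```python
import random


def systematic_sample(frame, k, start=None, rng=random):
    """Take every k-th unit of the frame after a random start among the first k units."""
    if start is None:
        start = rng.randrange(k)  # 0-based position of the first selected unit
    return frame[start::k]


# The N = 23, k = 5 illustration: enumerate all k possible samples.
frame = list(range(1, 24))
all_samples = [systematic_sample(frame, 5, start=s) for s in range(5)]
sizes = [len(s) for s in all_samples]
```

Because 23 is not a multiple of 5, the enumerated samples have unequal sizes. To follow the business example, the frame would first be sorted by employment size before calling the function.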

3.1.1 The Sample Mean and its Variance.

Denote the sample by $y_1, \ldots, y_n$. Then

$$\bar y_{sys} = \frac{1}{n}\sum_{i=1}^{n} y_i$$

can take only one of $k$ possible values:

$$\begin{aligned}
\bar Y_1 &= (Y_1 + Y_{k+1} + \cdots + Y_{N-k+1})/n\\
&\;\;\vdots\\
\bar Y_k &= (Y_k + Y_{2k} + \cdots + Y_N)/n.
\end{aligned}$$

Thus

$$E\bar y_{sys} = (\bar Y_1 + \cdots + \bar Y_k)/k = \bar Y,$$

so the systematic mean is unbiased for the population mean.

Let $Y_{is} = Y_{i+k(s-1)}$, for $i = 1, 2, \ldots, k$ and $s = 1, 2, \ldots, n$.

$$\operatorname{Var}(\bar y_{sys}) = E(\bar y_{sys} - \bar Y)^2 = \frac{1}{k}\sum_{i=1}^{k}(\bar Y_i - \bar Y)^2.$$

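Recall

$$\begin{aligned}
(N-1)S^2 = \sum_{i=1}^{N}(Y_i-\bar Y)^2 &= \sum_{i=1}^{k}\sum_{s=1}^{n}(Y_{is}-\bar Y)^2\\
&= \sum_{i=1}^{k}\sum_{s=1}^{n}(Y_{is}-\bar Y_i)^2 + n\sum_{i=1}^{k}(\bar Y_i-\bar Y)^2\\
&= k(n-1)S_w^2 + kn\operatorname{Var}(\bar y_{sys}).
\end{aligned}$$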

Thus $\operatorname{Var}(\bar y_{sys})$ is the between-cluster variance and $S_w^2$ is the within-cluster variance.

$$\operatorname{Var}(\bar y_{sys}) = \frac{N-1}{N}S^2 - \frac{N-k}{N}S_w^2.$$

Systematic sampling is most useful when the variability within systematic samples is larger

than the population variance.

Theorem 8

Systematic sampling leads to more precise estimators of $\bar Y$ than SRS if and only if $S_w^2 > S^2$.

Proof. The variance under SRS is $\frac{S^2}{n}\left(1-\frac{n}{N}\right)$ and we want

$$\frac{S^2}{n}\left(1-\frac{n}{N}\right) > \frac{N-1}{N}S^2 - \frac{N-k}{N}S_w^2.$$

Thus

$$k(n-1)S_w^2 > \left[(N-1) - \frac{N-n}{n}\right]S^2 = k(n-1)S^2.$$
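The variance decomposition and Theorem 8 can be checked numerically on a small population. The sketch below is illustrative only: the function name `systematic_vs_srs` and the toy population with a linear trend are assumptions, and the case $N = nk$ is required.

```python
import statistics


def systematic_vs_srs(pop, k):
    """Compute S^2, S_w^2, Var(ybar_sys) and the SRS variance for a population with N = n*k."""
    N = len(pop)
    if N % k != 0:
        raise ValueError("this sketch assumes N = n * k")
    n = N // k
    samples = [pop[i::k] for i in range(k)]  # the k possible systematic samples
    grand_mean = statistics.fmean(pop)
    means = [statistics.fmean(s) for s in samples]
    S2 = statistics.variance(pop)  # divisor N - 1
    S2_w = sum((y - m) ** 2 for s, m in zip(samples, means) for y in s) / (k * (n - 1))
    var_sys = sum((m - grand_mean) ** 2 for m in means) / k
    var_srs = S2 / n * (1 - n / N)
    return {"S2": S2, "S2_w": S2_w, "var_sys": var_sys, "var_srs": var_srs}


# Hypothetical population with a linear trend: Y_i = i, i = 1, ..., 20.
pop = [float(v) for v in range(1, 21)]
k = 4
res = systematic_vs_srs(pop, k)
N = len(pop)
identity_rhs = (N - 1) / N * res["S2"] - (N - k) / N * res["S2_w"]
```

Here `identity_rhs` evaluates the formula for $\operatorname{Var}(\bar y_{sys})$ in terms of $S^2$ and $S_w^2$, so it can be compared with the directly computed `res["var_sys"]`.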

3.2 Cluster Sampling.

If a population has a natural grouping of units into clusters, one way of proceeding is to perform an SRS of the clusters and then include all units in the selected clusters in the sample. Systematic sampling has this structure but the distinct clusters may not have any physical significance.

For cluster sampling we do not need a complete population list, just a list of clusters and

then a list of elements for the selected clusters.

Notation. Let $N$ denote the population size, $M$ the number of clusters and $M_i$ the number of elements in the $i$th cluster. Let $Y_{is}$ denote element $s$ in cluster $i$,

$$Y_i = \sum_{s=1}^{M_i} Y_{is} \quad\text{and}\quad \bar Y_i = Y_i/M_i.$$

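Unbiased estimators for $\bar Y$.

The population total is $Y = \sum_{i=1}^{M} Y_i$ and $N = \sum_{i=1}^{M} M_i$. Draw a sample of size $m$ from the clusters. The observed cluster totals are $y_1, \ldots, y_m$ and

$$E y_i = (Y_1 + \cdots + Y_M)/M = Y/M$$

as each cluster has probability $\frac{1}{M}$ of being drawn under SRS. Thus $\hat{Y}_{CL} = \frac{M}{m}\sum_{i=1}^{m} y_i$ is an unbiased estimator of $Y$ and $\hat{\bar Y}_{CL} = \frac{M}{N}\bar y$, where $\bar y = \frac{1}{m}\sum_{i=1}^{m} y_i$ is the mean of the observed cluster totals, is unbiased for $\bar Y$.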

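$$\operatorname{Var}\hat{Y}_{CL} = \frac{M^2}{m}\,\frac{\sum_{i=1}^{M}(Y_i-\bar Y_M)^2}{M-1}\left(1-\frac{m}{M}\right),$$

where $\bar Y_M = Y/M$ is the average total per cluster. This variance is estimated by

$$\frac{M^2}{m}\,\frac{\sum_{i=1}^{m}(y_i-\bar y)^2}{m-1}\left(1-\frac{m}{M}\right),$$

i.e. we use the (adjusted) sample variance of the observed cluster totals.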
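As a minimal sketch of these formulas, the function below returns the estimated total and its estimated variance from the observed cluster totals. The name `cluster_total_estimate` and the numbers in the example are hypothetical.

```python
import statistics


def cluster_total_estimate(sampled_cluster_totals, M):
    """Return (Y_hat_CL, estimated Var Y_hat_CL) from an SRS of m out of M clusters."""
    m = len(sampled_cluster_totals)
    y_bar = statistics.fmean(sampled_cluster_totals)  # mean of observed cluster totals
    total_hat = M * y_bar  # (M / m) * sum of observed totals
    s2 = statistics.variance(sampled_cluster_totals)  # divisor m - 1
    var_hat = M ** 2 / m * s2 * (1 - m / M)
    return total_hat, var_hat


# Hypothetical data: m = 3 cluster totals observed out of M = 10 clusters.
total_hat, var_hat = cluster_total_estimate([10.0, 14.0, 12.0], M=10)
```

If the population size $N$ is known, dividing `total_hat` by $N$ gives the corresponding estimate of $\bar Y$.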


3.2.1 Equal Cluster Sizes.

The analysis is simpler when the clusters are of equal size, that is, $M_i = N/M = \bar M$, $i = 1, 2, \ldots, M$. In this case $\bar Y_M = \bar M\,\bar Y$ and the between cluster variance is

$$S_b^2 = \frac{1}{M-1}\sum_{i=1}^{M}(\bar Y_i-\bar Y)^2\,\bar M = \frac{\sum_{i=1}^{M}(Y_i-\bar Y_M)^2}{\bar M(M-1)}.$$

The within cluster variance is

$$S_w^2 = \frac{1}{N-M}\sum_{i=1}^{M}\sum_{s=1}^{\bar M}(Y_{is}-\bar Y_i)^2.$$

Recall from ANOVA

$$(N-1)S^2 = (N-M)S_w^2 + (M-1)S_b^2.$$

From SRS we have an unbiased estimator for $S_b^2$,

$$s_b^2 = \frac{1}{\bar M(m-1)}\sum_{i=1}^{m}(y_i-\bar y)^2.$$

An unbiased estimator for $S_w^2$ is

$$s_w^2 = \frac{1}{(\bar M-1)m}\sum_{i=1}^{m}\sum_{s=1}^{\bar M}(y_{is}-\bar y_i)^2.$$

Both components of $S^2$ can be estimated under cluster sampling, unlike systematic sampling where we only observe one cluster and so cannot estimate the between cluster component.

Comparing SRS and Cluster Sampling.

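A sample of $m$ clusters involves $m\bar M = n$ observations. An estimate of $\bar Y$ based on a SRS of the same size has variance

$$\operatorname{Var}(\hat{\bar Y}) = \frac{S^2}{m\bar M}\left(1-\frac{m\bar M}{M\bar M}\right) = \frac{S^2}{m\bar M}\left(1-\frac{m}{M}\right).$$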

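$$\operatorname{Var}(\hat{\bar Y}_{CL}) = \frac{1}{m}M^2\left(S_b^2\bar M\right)\left(1-\frac{m}{M}\right)\frac{1}{N^2} = \frac{S_b^2}{\bar M m}\left(1-\frac{m}{M}\right).$$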

Thus

$$\frac{\operatorname{Var}(\hat{\bar Y}_{CL})}{\operatorname{Var}(\hat{\bar Y})} = \frac{S_b^2}{S^2},$$

and so the cluster estimator is preferable when $S_b^2 < S^2$. Note $S_b^2$ is minimised when all cluster means are equal, i.e. $\bar Y_i = \bar Y$, $i = 1, \ldots, M$. The worst case occurs when $S_w^2 = 0$, in which case each cluster consists of identical responses.
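The variance components for equal-sized clusters can be computed directly for a fully known population, which makes the ANOVA identity and the ratio $S_b^2/S^2$ easy to inspect. The function name `variance_components` and the toy clusters are hypothetical.

```python
import statistics


def variance_components(clusters):
    """Return (S2, S2_b, S2_w) for a population of M clusters of equal size M_bar."""
    M = len(clusters)
    M_bar = len(clusters[0])
    if any(len(c) != M_bar for c in clusters):
        raise ValueError("this sketch assumes equal cluster sizes")
    N = M * M_bar
    values = [y for c in clusters for y in c]
    grand_mean = statistics.fmean(values)
    cluster_means = [statistics.fmean(c) for c in clusters]
    S2 = statistics.variance(values)  # divisor N - 1
    S2_b = M_bar * sum((m - grand_mean) ** 2 for m in cluster_means) / (M - 1)
    S2_w = sum((y - m) ** 2 for c, m in zip(clusters, cluster_means) for y in c) / (N - M)
    return S2, S2_b, S2_w


# Hypothetical population: clusters that are internally homogeneous.
clusters = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
S2, S2_b, S2_w = variance_components(clusters)
relative_variance = S2_b / S2  # Var(cluster estimator) / Var(SRS estimator)
```

In this toy population the clusters are internally similar, so `relative_variance` exceeds 1 and SRS would be the more precise design.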


3.3 Ratio to Size Estimation.

$\hat{Y}_{CL}$ and $\hat{\bar Y}_{CL}$ are unbiased estimators for $Y$ and $\bar Y$. We can develop other estimators based on cluster totals and cluster sizes using the ratio estimation approach. Consider the pairs $(Y_1, M_1), \ldots, (Y_M, M_M)$. Observe $(y_1, m_1), \ldots, (y_m, m_m)$. Consider the ratio estimator for

$$\bar Y = \frac{\sum_{i=1}^{M} Y_i}{\sum_{i=1}^{M} M_i} = R.$$

The natural estimator is

$$r = \hat{\bar Y}_R = \frac{\sum_{i=1}^{m} y_i}{\sum_{i=1}^{m} m_i}.$$

This estimator does not require knowledge of $M$ or $N$.

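$$\operatorname{Var}(\hat{\bar Y}_R) \approx \frac{1}{m\bar M^2}\left(\frac{\sum_{i=1}^{M}(Y_i - RM_i)^2}{M-1}\right)\left(1-\frac{m}{M}\right)$$

and we can estimate $\sum_{i=1}^{M}(Y_i - RM_i)^2/(M-1)$ by $\sum_{i=1}^{m}(y_i - rm_i)^2/(m-1)$.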

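To estimate $Y$ we need to know $N$, as $Y = N\bar Y$. Recalling that $R = \bar Y$ and $\bar M = N/M$, $\bar Y_M = \bar M\,\bar Y$, we have

$$\operatorname{Var}(\hat{\bar Y}_R) \approx \frac{1}{m\bar M^2}\left(\frac{\sum_{i=1}^{M}(Y_i - \bar Y M_i)^2}{M-1}\right)\left(1-\frac{m}{M}\right)$$

and

$$\operatorname{Var}(\hat{\bar Y}_{CL}) = \frac{1}{m\bar M^2}\left(\frac{\sum_{i=1}^{M}(Y_i - \bar Y\bar M)^2}{M-1}\right)\left(1-\frac{m}{M}\right).$$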

The two expressions are very close, with $\bar Y M_i$ replaced by $\bar Y\bar M$ in the second expression. The two variances are equal if $M_i = \bar M$ for all $i$.
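A short sketch of the ratio-to-size estimator and its estimated variance follows. The function name `ratio_to_size_estimate` is hypothetical. The variance formula needs $M$ and $\bar M$; when $\bar M$ is not supplied, this sketch substitutes the sample mean cluster size, which is an assumption of the example rather than something stated in the notes.

```python
import statistics


def ratio_to_size_estimate(cluster_totals, cluster_sizes, M, M_bar=None):
    """Return (r, estimated Var(r)) where r = sum(y_i) / sum(m_i)."""
    m = len(cluster_totals)
    r = sum(cluster_totals) / sum(cluster_sizes)
    if M_bar is None:
        # Assumption of this sketch: estimate the mean cluster size from the sample.
        M_bar = statistics.fmean(cluster_sizes)
    resid_ms = sum((y - r * s) ** 2 for y, s in zip(cluster_totals, cluster_sizes)) / (m - 1)
    var_hat = resid_ms / (m * M_bar ** 2) * (1 - m / M)
    return r, var_hat


# Hypothetical data: two sampled clusters out of M = 4.
r, var_hat = ratio_to_size_estimate([6.0, 15.0], [2, 3], M=4)
```

When every cluster total is exactly proportional to its size the residuals $y_i - rm_i$ vanish and the estimated variance is zero.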


4 Sampling with Unequal Probabilities.

Simple random sampling and systematic sampling are schemes where every unit in the population has the same chance of being selected. We will now consider unequal probability sampling. We have already encountered an example of this: under stratified sampling the units in stratum $h$ have chance $n_h/N_h$ of being selected. Being able to vary the probabilities across strata under optimal allocation leads to increased accuracy and this will apply in other situations.

4.1 Sampling with Replacement

Using with-replacement sampling simplifies the calculations, and if the sampling fraction is small this model should give a reasonable approximation to the exact behaviour of the estimators in without-replacement sampling. Let $p_j$ denote the probability of selecting unit $Y_j$ on the $i$th draw, so

$$P(y_i = Y_j) = p_j, \quad j = 1, 2, \ldots, N.$$

The first two moments are $E y_i = \sum_{j=1}^{N} p_j Y_j$ and $\operatorname{Var}(y_i) = \sum_{j=1}^{N} p_j Y_j^2 - \left[\sum_{j=1}^{N} p_j Y_j\right]^2$.

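The Hansen and Hurwitz estimator for the population total $Y$ is

$$\hat{Y}_{HH} = \frac{1}{n}\sum_{i=1}^{n} p_i^{-1} y_i,$$

where $p_i$ is the selection probability of the unit obtained on the $i$th draw.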

This estimator is unbiased for $Y$. Under sampling with replacement the $(y_i/p_i)$ random variables are independent, so

$$\operatorname{Var}(\hat{Y}_{HH}) = \frac{1}{n^2}\sum_{i=1}^{n}\operatorname{Var}(y_i/p_i) = \frac{1}{n}\left(\sum_{j=1}^{N}\frac{Y_j^2}{p_j} - Y^2\right).$$

The estimator for the variance is $\left(\sum_{i=1}^{n}(y_i/p_i)^2 - n\hat{Y}_{HH}^2\right)/[n(n-1)]$.

How do we choose the $p_j$ to minimise the variance?

We can show that the minimum is achieved if we set $p_i = Y_i/Y$, $i = 1, \ldots, N$. Of course we cannot use these values in practice as the $Y_i$ (and $Y$) are unknown, but we look for another variable, $X_i$, that is known and highly correlated with $Y_i$ to construct probability estimates.


Set $p_j = X_j/X$ where $X = \sum_{j=1}^{N} X_j$. Then

$$\hat{Y}_{HH} = \frac{1}{n}\sum_{i=1}^{n}\left(\frac{y_i}{x_i}\right)X.$$

This is known as probability proportional to size (pps) sampling. Note

$$\operatorname{Var}(\hat{Y}_{HH}) = \frac{X^2}{n}\operatorname{Var}\left(\frac{y_i}{x_i}\right).$$
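The pps scheme with replacement and the Hansen and Hurwitz estimator can be sketched with the standard library alone. The function names `pps_draw_with_replacement` and `hansen_hurwitz` and the toy population are hypothetical; `random.Random.choices` is used to draw with replacement with weights proportional to the size variable.

```python
import random


def pps_draw_with_replacement(sizes, n, rng):
    """Draw n unit indices with replacement, with p_j = X_j / X."""
    total = sum(sizes)
    probs = [x / total for x in sizes]
    idx = rng.choices(range(len(sizes)), weights=sizes, k=n)
    return idx, probs


def hansen_hurwitz(y_sample, p_sample):
    """Return (Y_hat_HH, estimated variance) from sampled values and their draw probabilities."""
    n = len(y_sample)
    z = [y / p for y, p in zip(y_sample, p_sample)]
    est = sum(z) / n
    var_hat = (sum(v * v for v in z) - n * est ** 2) / (n * (n - 1))
    return est, var_hat


# Hypothetical population in which Y is exactly proportional to the size variable X.
rng = random.Random(0)
X = [1.0, 2.0, 3.0, 4.0, 10.0]
Y = [2.0 * x for x in X]
idx, probs = pps_draw_with_replacement(X, 3, rng)
est, var_hat = hansen_hurwitz([Y[i] for i in idx], [probs[i] for i in idx])
```

With $Y_i$ exactly proportional to $X_i$ every ratio $y_i/p_i$ equals the true total, which is the ideal case $p_i = Y_i/Y$ in which the variance vanishes.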

Example. We wish to estimate the turnover for a population of farms. For each farm we know $X_i$, the estimated value of agricultural operations (EVAO), which we use as a size variable. From the ABS data set the true value for $Y$ is \$20.079m with s.d. \$2.314m. From the sample of size 10, $\hat{Y}_{HH}$ is \$17.818m with an estimated s.e. of \$1.821m.

A natural alternative to the pps sampling here would be to use the ratio estimator

$$\hat{Y}_R = X\left(\frac{\sum_{i=1}^{n} y_i}{\sum_{i=1}^{n} x_i}\right)$$

whereas

$$\hat{Y}_{HH} = X\left(\frac{1}{n}\sum_{i=1}^{n}\frac{y_i}{x_i}\right).$$

One is based on the ratio of the averages whilst the other is the average of the ratios.


4.2 P.P.S. Sampling without Replacement

If we are sampling without replacement the nature of the population changes after each

selection and so if at each step we select using probabilities proportional to size the overall

scheme will not necessarily be pps. One way to achieve a pps scheme is to use systematic

sampling where only one random selection is necessary.

Let $\pi_i$ denote the probability that $Y_i$ is in the selected sample. The Horvitz-Thompson estimator for $Y$ is

$$\hat{Y}_{HT} = \sum_{i=1}^{n}\left(\frac{y_i}{\pi_i}\right).$$

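Let

$$\delta_i = \begin{cases} 1 & \text{if } Y_i \text{ in sample}\\ 0 & \text{otherwise.}\end{cases}$$

Write

$$\hat{Y}_{HT} = \sum_{i=1}^{N}\delta_i\left(\frac{Y_i}{\pi_i}\right).$$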

Using this form $E\hat{Y}_{HT} = \sum_{i=1}^{N}(Y_i/\pi_i)\,E(\delta_i) = Y$, so $\hat{Y}_{HT}$ is unbiased for $Y$.

Let $\delta_{ij} = 1$ if both $Y_i$ and $Y_j$ are in the sample and set $\delta_{ij}$ to 0 otherwise. Note $\delta_{ii} = \delta_i$.

Let $\pi_{ij} = E(\delta_{ij})$. We can use these indicator functions to derive an expression for $\operatorname{Var}(\hat{Y}_{HT})$.

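$$\begin{aligned}
\operatorname{Var}(\hat{Y}_{HT}) &= E\left(\sum_{i=1}^{N}\frac{Y_i\delta_i}{\pi_i} - Y\right)^2\\
&= \sum_{i=1}^{N}\sum_{j=1}^{N}\frac{Y_i}{\pi_i}\frac{Y_j}{\pi_j}E(\delta_i\delta_j) - Y^2\\
&= \sum_{i=1}^{N}\sum_{j=1}^{N}Y_iY_j\left(\frac{E\delta_{ij}}{\pi_i\pi_j} - 1\right)\\
&= \sum_{i=1}^{N}\sum_{j=1}^{N}\frac{Y_i}{\pi_i}\frac{Y_j}{\pi_j}\left(\pi_{ij} - \pi_i\pi_j\right)\\
&= \sum_{i=1}^{N}Y_i^2\,\frac{1-\pi_i}{\pi_i} + \sum_{i=1}^{N}\sum_{j\neq i}\frac{Y_iY_j}{\pi_i\pi_j}\left(\pi_{ij} - \pi_i\pi_j\right).
\end{aligned}$$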

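We estimate this via

$$\widehat{\operatorname{Var}}(\hat{Y}_{HT}) = \sum_{i=1}^{n}\sum_{j=1}^{n}\left(1 - \frac{\pi_i\pi_j}{\pi_{ij}}\right)\frac{y_iy_j}{\pi_i\pi_j}.$$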


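Note that

$$\begin{aligned}
E\,\widehat{\operatorname{Var}}(\hat{Y}_{HT}) &= \sum_{i=1}^{N}\sum_{j=1}^{N}\left(1 - \frac{\pi_i\pi_j}{\pi_{ij}}\right)\frac{Y_iY_j}{\pi_i\pi_j}\,E\delta_{ij}\,I(\pi_{ij} > 0)\\
&= \sum_{i=1}^{N}\sum_{j=1}^{N}\left(\pi_{ij} - \pi_i\pi_j\right)\frac{Y_iY_j}{\pi_i\pi_j}\,I(\pi_{ij} > 0)\\
&= \operatorname{Var}(\hat{Y}_{HT}) + \sum_{i=1}^{N}\sum_{j=1}^{N}I(\pi_{ij} = 0)\,Y_iY_j.
\end{aligned}$$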

If all pairs have a positive chance of selection then we have an unbiased estimate for the

variance. Otherwise the bias can be large.
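The Horvitz-Thompson estimator and the variance estimator above can be sketched as below, and checked against SRS without replacement, where $\pi_i = n/N$ and $\pi_{ij} = n(n-1)/(N(N-1))$ for $i \neq j$. The function names and the toy sample are hypothetical; `pi_joint[i][j]` is assumed to hold $\pi_{ij}$ for the sampled units, with the diagonal taken as $\pi_{ii} = \pi_i$.

```python
def horvitz_thompson(y, pi):
    """Horvitz-Thompson estimate of the total: sum of y_i / pi_i over the sample."""
    return sum(yi / p for yi, p in zip(y, pi))


def ht_variance_estimate(y, pi, pi_joint):
    """Double sum of (1 - pi_i pi_j / pi_ij) y_i y_j / (pi_i pi_j) over sampled pairs."""
    n = len(y)
    total = 0.0
    for i in range(n):
        for j in range(n):
            pij = pi[i] if i == j else pi_joint[i][j]  # pi_ii = pi_i
            total += (1 - pi[i] * pi[j] / pij) * y[i] * y[j] / (pi[i] * pi[j])
    return total


# Hypothetical check under SRS without replacement with N = 10, n = 4.
N, n = 10, 4
y = [3.0, 5.0, 7.0, 9.0]
pi = [n / N] * n
pij = n * (n - 1) / (N * (N - 1))
pi_joint = [[pij] * n for _ in range(n)]
total_hat = horvitz_thompson(y, pi)
var_hat = ht_variance_estimate(y, pi, pi_joint)
```

Under SRS these reduce to the familiar $N\bar y$ and $N^2\frac{s^2}{n}\left(1-\frac{n}{N}\right)$, which gives a simple sanity check on the implementation.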

Note:

The variance estimate can be negative (if $\pi_i\pi_j > \pi_{ij}$). If we set the variance estimate to 0 in these cases to counter this, we induce bias.

The estimate is not necessarily 0 when the true value is 0.

We know $\pi_i \propto X_i$ for some indicator variable $X_i$, but calculating $\pi_{ij}$ depends on the way the sample is selected. Note $n^2$ values of $\pi_{ij}$ need to be calculated.

Hartley and Rao gave the following approximation for $\operatorname{Var}(\hat{Y}_{HT})$:

Var(

Y

HT

)

N

j=1

N

i=1

_

1

n 1

n

i

__

Y

i

Y

j

j

_

2

.

This expression can be estimated by

n

i=1

_

1

n 1

n

i

_

_

y

i

Y

HT

n

_

2

.
