Cost of Data Center Outages

January 2016

Data Center Performance Benchmark Series

Sponsored by Emerson Network Power

Independently conducted by Ponemon Institute LLC

Publication Date: January 2016

Ponemon Institute© Research Report

Cost of Data Center Outages

Ponemon Institute, January 2016

Table of Contents

Section

Part 1 Executive Summary

Part 2 Cost Framework

Part 3 Benchmark Methods

Part 4 Sample of Participating Companies and Data Centers

Part 5 Key Findings

Key statistics on unplanned data center outages

Cost structure of unplanned data center outages

Comparison of activity cost categories

Distribution of total cost for 15 industry segments

Cost for partial and total shutdown

Duration for partial and total shutdown

Relationship between cost and duration

Total cost per minute of an unplanned outage

Relationship between cost and data center square footage

Cost per square foot of data center by quartile

Root causes of unplanned outages

Total cost by primary root causes of unplanned outages

Part 6 Conclusion

Part 7 Caveats

Part 1. Executive Summary

Ponemon Institute and Emerson Network Power are pleased to present the results of the latest

Cost of Data Center Outages study. Previously published in 2010 and 2013, the purpose of this

third study is to continue to analyze the cost behavior of unplanned data center outages.

According to our new study, the average cost of a data center outage has steadily increased from

$505,502 in 2010 to $740,357 today (or a 38 percent net change).

Our benchmark analysis focuses on representative samples of organizations in different industry

sectors that experienced at least one complete or partial unplanned data center outage during the

past 12 months. Utilizing activity-based costing methods, this year’s analysis is derived from 63

data centers located in the United States. Following are the functional leaders within each

organization who participated in the benchmarking process:

• Facility manager
• Chief information officer
• Data center management
• Chief information security officer
• IT operations management
• IT compliance & audit
• Operations & engineering

Utilizing activity-based costing, our methods capture information about both direct and indirect

costs, including but not limited to the following areas:

• Damage to mission-critical data
• Impact of downtime on organizational productivity
• Damages to equipment and other assets
• Cost to detect and remediate systems and core business processes
• Legal and regulatory impact, including litigation defense cost
• Lost confidence and trust among key stakeholders
• Diminishment of marketplace brand and reputation

Following are some of the key findings of our benchmark research involving the 63 data centers:

• The average cost of a data center outage rose from $690,204 in 2013 to $740,357 in this report, a 7 percent increase. The cost of downtime has increased 38 percent since the first study in 2010.
• Downtime costs for the most data center-dependent businesses are rising faster than average.
• Maximum downtime costs increased 32 percent since 2013 and 81 percent since 2010. Maximum downtime costs for 2016 are $2,409,991.
• UPS system failure continues to be the number one cause of unplanned data center outages, accounting for one-quarter of all such events.
• Cybercrime represents the fastest growing cause of data center outages, rising from 2 percent of outages in 2010 to 18 percent in 2013 to 22 percent in the latest study.

Part 2. Cost Framework

Utilizing activity-based costing, our study addresses eight core process-related activities that

drive a range of expenditures associated with a company’s response to a data center outage. The

activities and cost centers used in our analysis are defined as follows:

• Detection cost: Activities associated with the initial discovery and subsequent investigation of the partial or complete outage incident.
• Containment cost: Activities and associated costs that enable a company to reasonably prevent an outage from spreading, worsening or causing greater disruption.
• Recovery cost: Activities and associated costs that relate to bringing the organization’s networks and core systems back to a state of readiness.
• Ex-post response cost: All after-the-fact incidental costs associated with business disruption and recovery.
• Equipment cost: The cost of new equipment purchases and repairs, including refurbishment.
• IT productivity loss: The lost time and related expenses associated with IT personnel downtime.
• User productivity loss: The lost time and related expenses associated with end-user downtime.
• Third-party cost: The cost of contractors, consultants, auditors and other specialists engaged to help resolve unplanned outages.

In addition to the above process-related activities, most companies experience opportunity costs

associated with the data center outage, which results in lost revenue, business disruption and

average contribution. Accordingly, our cost framework includes the following categories:

• Lost revenues: The total revenue loss from customers and potential customers because of their inability to access core systems during the outage period.
• Business disruption (consequences): The total economic loss of the outage, including reputational damages, customer churn and lost business opportunities.

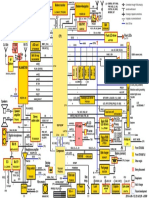

Figure 1 presents the activity-based costing framework used in this research, which consists of

10 discernible categories. As shown, the four internal activities or cost centers include detection,

containment, recovery and ex-post response. Each activity generates direct, indirect and

opportunity costs, respectively. The consequence of the unplanned data center outage includes

equipment repair or replacement, IT productivity loss, end-user productivity loss, third parties

(such as consultants), lost revenues and the overall disruption to core business processes. Taken

together, we then infer the cost of an unplanned data center outage.

Figure 1: Activity-Based Cost Account Framework

Activity centers: Detection, Containment, Recovery, Ex-post Response

Cost types in the activity-based costing model: Direct Costs, Indirect Costs, Opportunity Costs

Cost consequences: Equipment, IT Productivity, User Productivity, Third Parties, Lost Revenue, Business Disruption

Part 3. Benchmark Methods

Our benchmark instrument was designed to collect descriptive information from IT practitioners

and managers of data center facilities about the costs incurred either directly or indirectly as a

result of unplanned outages. The survey design relies upon a shadow costing method used in

applied economic research. This method does not require subjects to provide actual accounting

results, but instead relies on broad estimates based on the experience of individuals within

participating organizations.

The benchmark framework in Figure 1 presents the two separate cost streams used to measure

the total cost of an unplanned outage for each participating organization. These two cost streams

pertain to internal activities and the external consequences experienced by organizations during

or after experiencing an incident. Our benchmark methodology contains questions designed to

elicit the actual experiences and consequences of each incident. This cost study is unique in

addressing the core systems and business process-related activities that drive a range of

expenditures associated with a company’s incident management response.

Within each category, cost estimation is a two-stage process. First, the survey requires

individuals to provide direct cost estimates for each cost category by checking a range variable. A

range variable is used rather than a point estimate to preserve confidentiality (in order to ensure a

higher response rate). Second, the survey requires participants to provide a second estimate for

both indirect cost and opportunity cost, separately. These estimates are calculated based on the

relative magnitude of these costs in comparison to a direct cost within a given category. Finally,

we conduct a follow-up interview to obtain additional facts, including estimated revenue losses as

a result of the outage.
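The two-stage estimation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the report does not publish its range brackets or say how a checked range is turned into a single figure, so the midpoint rule, the function names and the example numbers below are all hypothetical.

```python
from typing import Dict, Tuple


def range_midpoint(cost_range: Tuple[float, float]) -> float:
    """Collapse a checked (low, high) range into one value.

    Assumption: the midpoint is used; the report does not state the rule.
    """
    low, high = cost_range
    return (low + high) / 2


def estimate_category_cost(
    direct_range: Tuple[float, float],
    indirect_ratio: float,
    opportunity_ratio: float,
) -> Dict[str, float]:
    """Stage 1: direct cost from a range. Stage 2: indirect and opportunity
    costs expressed relative to the direct cost of the same category."""
    direct = range_midpoint(direct_range)
    indirect = direct * indirect_ratio
    opportunity = direct * opportunity_ratio
    return {
        "direct": direct,
        "indirect": indirect,
        "opportunity": opportunity,
        "total": direct + indirect + opportunity,
    }


# Hypothetical respondent: direct cost checked as $10,000 to $20,000,
# indirect cost judged 1.5 times the direct cost, opportunity cost 0.25 times.
example = estimate_category_cost((10_000, 20_000), indirect_ratio=1.5, opportunity_ratio=0.25)
print(example)
```

Summing such per-category totals across the activity centers and cost consequences would give one organization's estimated outage cost; revenue losses, which the report says are gathered in the follow-up interview, would be added separately.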

The size and scope of survey items is limited to known cost categories that cut across different

industry sectors. In our experience, a survey focusing on process yields a higher response rate

and better quality of results. We also use a paper instrument, rather than an electronic survey, to

provide greater assurances of confidentiality.

In total, the benchmark instrument contains descriptive costs for each one of the four cost activity centers shown in Figure 1. Within each cost activity center, the survey requires respondents to estimate the cost range to signify direct cost, indirect cost and opportunity cost. These are defined as follows:

• Direct cost – the direct expense outlay to accomplish a given activity.
• Indirect cost – the amount of time, effort and other organizational resources spent, but not as a direct cash outlay.
• Opportunity cost – the cost resulting from lost business opportunities as a consequence of reputation diminishment after the outage.

To maintain complete confidentiality, the survey instrument does not capture company-specific

information of any kind. Research materials do not contain tracking codes or other methods that

could link responses to participating companies.

To keep the benchmark instrument to a manageable size, we carefully limit items to only those

cost activities we consider crucial to the measurement of data center outage costs. Based on

discussions with learned experts, the final set of items focuses on a finite set of direct or indirect

cost activities. After collecting benchmark information, each instrument is examined carefully for

consistency and completeness. In this study, four companies were rejected because of

incomplete, inconsistent or blank responses.

The study was launched in June 2015 and fieldwork concluded in October 2015. Recruitment

started with a personalized letter and a follow-up phone call to 631 US-based organizations for

possible participation in our study.

All of these organizations are members of Ponemon Institute’s benchmark community.¹ This resulted in 78 organizations agreeing to participate. Forty-nine organizations (63 separate data centers) permitted researchers to complete the benchmark analysis.

Six cases were removed for reliability concerns. Utilizing activity-based costing methods, we

captured cost estimates using a standardized instrument for direct and indirect cost categories.

Specifically, labor (productivity) and overhead costs were allocated to four internal activity centers

and these flow through to six cost consequence categories (see Figure 1).

Total costs were then allocated to only one (the most recent) data center outage experienced by

each organization. We collected information over approximately the same time frame; hence, this

limits our ability to gauge seasonal variation on the total cost of an unplanned data center outage.

¹ The Ponemon Institute’s benchmark community comprises organizations that have participated in one or more research studies over the past 14 years.

Part 4. Sample of Participating Companies & Data Centers

The following table summarizes the frequency of companies and separate data centers participating in the benchmark study. As reported, a total of 15 industries are represented in the sample.

Our recruited sample includes 49 separate organizations representing 69 data centers, the data center being the unit of analysis. Six data centers were rejected for incomplete responses to our survey instrument, resulting in a final sample of 63 separate data centers.

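Table 1: Tally of cost studies by recruited company & data center

| Industries | Companies | Data centers | Rejected | Final sample |
|---|---|---|---|---|
| Co-location | 4 | 11 | 1 | 10 |
| Communications | 1 | 2 | 0 | 2 |
| Consumer products | 2 | 2 | 0 | 2 |
| eCommerce | 7 | 7 | 1 | 6 |
| Education | 2 | 3 | 0 | 3 |
| Financial services | 6 | 11 | 2 | 9 |
| Healthcare | 5 | 5 | 0 | 5 |
| Hospitality | 1 | 1 | 0 | 1 |
| Industrial | 4 | 6 | 0 | 6 |
| Media | 1 | 2 | 0 | 2 |
| Public sector | 4 | 5 | 1 | 4 |
| Research | 1 | 1 | 0 | 1 |
| Retail | 5 | 5 | 0 | 5 |
| Services | 5 | 7 | 1 | 6 |
| Transportation | 1 | 1 | 0 | 1 |
| Total | 49 | 69 | 6 | 63 |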
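As a quick cross-check, the tally in Table 1 and the recruitment figures from Part 3 (631 organizations contacted, 78 agreeing, 49 completing) can be recomputed with a short script. The variable names are illustrative; the participation rates are derived here and are not figures stated in the report.

```python
# Table 1 rows: (companies, data centers, rejected, final sample)
TABLE_1 = {
    "Co-location": (4, 11, 1, 10),
    "Communications": (1, 2, 0, 2),
    "Consumer products": (2, 2, 0, 2),
    "eCommerce": (7, 7, 1, 6),
    "Education": (2, 3, 0, 3),
    "Financial services": (6, 11, 2, 9),
    "Healthcare": (5, 5, 0, 5),
    "Hospitality": (1, 1, 0, 1),
    "Industrial": (4, 6, 0, 6),
    "Media": (1, 2, 0, 2),
    "Public sector": (4, 5, 1, 4),
    "Research": (1, 1, 0, 1),
    "Retail": (5, 5, 0, 5),
    "Services": (5, 7, 1, 6),
    "Transportation": (1, 1, 0, 1),
}

# Recruitment figures quoted in Part 3 of the report.
INVITED = 631
AGREED = 78

# Column totals: companies, data centers, rejected, final sample.
totals = tuple(sum(column) for column in zip(*TABLE_1.values()))
companies, data_centers, rejected, final_sample = totals

# Each row should satisfy: data centers - rejected = final sample.
rows_consistent = all(dc - rej == fin for _, dc, rej, fin in TABLE_1.values())

# Derived participation rates (not stated in the report).
agreed_rate = AGREED / INVITED * 100
completed_rate = companies / INVITED * 100

print(f"industries: {len(TABLE_1)}, totals: {totals}, rows consistent: {rows_consistent}")
print(f"agreed: {agreed_rate:.1f}% of invited, completed: {completed_rate:.1f}% of invited")
```

The low completion rate relative to the number of organizations contacted is one reason the caveats in Part 7 on non-response matter when reading the results.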

The following table summarizes participating data center size according to total square footage

and the duration of both partial and complete unplanned outages. The average size of the data

center in this study is 14,090 square feet and the average outage duration is 95 minutes.

Table 2: Key statistics on data center size and duration of the outage

| Key stats | Data center square footage | Duration in minutes |
|---|---|---|
| Average | 14,090 | 95 |
| Maximum | 55,000 | 415 |
| Minimum | 1,505 | 13 |

Pie Chart 1 summarizes the sample of participating companies’ data centers according to 15

primary industry classifications. As can be seen, ecommerce and financial services are the two

largest industry segments representing 15 percent and 13 percent of the sample, respectively.

Financial service companies include retail banking, payment processors, insurance, brokerage

and investment management companies.

Pie Chart 1: Distribution of participating organizations by industry segment

Computed from 63 benchmarked data centers

Legend (in chart order): eCommerce (15%), Financial services (13%), Healthcare, Retail, Services, Co-location, Industrial, Public sector, Consumer products, Education, Communications, Hospitality, Media, Research, Transportation.

Other slice labels shown in the chart: 10%, 10%, 10%, 8%, 8%, 8%, 4%, 4%, 2%, 2%, 2%, 2%, 2%.

Pie Chart 2 reports the percentage frequency of companies based on their geographic location

according to six regions in the United States. The Northeast represents the largest region (at 24

percent) and the smallest region is the Southwest (at 11 percent).

Pie Chart 2: Distribution of participating organizations by US geographic region

Computed from 63 benchmarked data centers

Legend (in chart order): Northeast (24%), Pacific-West, Mid-Atlantic, Midwest, Southeast, Southwest (11%).

Other slice labels shown in the chart: 22%, 16%, 14%, 13%.

Part 5. Key Findings

Bar Chart 1 reports key statistics on the cost of unplanned outages as reported in 2010, 2013 and

2016. The cost range, median and mean all consistently show substantial increases in cost over

time. As shown, the maximum cost has more than doubled over six years from just over $1

million to $2.4 million (a 34 percent increase since the last study). Both mean and median costs

increased since 2010 with net changes of 38 and 24 percent respectively. Even though the

minimum data center outage cost decreased between 2013 and 2016, this statistic increased

significantly over six years, with a net change of 58 percent.

Bar Chart 1: Key statistics on data center outages

Comparison of 2010, 2013 and 2016 results

| Statistic | 2010 | 2013 | 2016 |
|---|---|---|---|
| Maximum | $1,017,746 | $1,734,433 | $2,409,991 |
| Mean | $505,502 | $690,204 | $740,357 |
| Median | $507,052 | $627,418 | $648,174 |
| Minimum | $38,969 | $74,223 | $70,512 |
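The report does not define how its "net change" percentages are computed. As a hedged observation, most of the quoted figures are reproduced by a midpoint-based percent change (the difference divided by the average of the two values) rather than by the usual change relative to the starting value. The sketch below tests that assumption against figures quoted in this report; the function names are illustrative, and the formula is an inference, not something the authors state.

```python
def net_change(old: float, new: float) -> float:
    """Percent change relative to the midpoint of the two values.

    Assumed definition: it matches the report's figures, but the report
    itself does not state the formula.
    """
    return (new - old) / ((old + new) / 2) * 100


def simple_change(old: float, new: float) -> float:
    """Conventional percent change relative to the starting value."""
    return (new - old) / old * 100


# (old value, new value, percentage quoted in the report)
REPORTED = {
    "mean cost, 2010 to 2016": (505_502, 740_357, 38),
    "mean cost, 2013 to 2016": (690_204, 740_357, 7),
    "maximum cost, 2010 to 2016": (1_017_746, 2_409_991, 81),
    "median cost, 2010 to 2016": (507_052, 648_174, 24),
    "minimum cost, 2010 to 2016": (38_969, 70_512, 58),
    "cyber crime share, 2013 to 2016": (18, 22, 20),
    "cyber crime share, 2010 to 2016": (2, 22, 167),
}

for label, (old, new, reported) in REPORTED.items():
    print(
        f"{label}: midpoint-based {net_change(old, new):.1f}%, "
        f"simple {simple_change(old, new):.1f}%, reported {reported}%"
    )
```

Under the conventional formula the mean cost rose by roughly 46 percent between 2010 and 2016, so readers comparing these results with other studies should check which definition is in use.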

Bar Chart 2 reports the cost structure on a percentage basis for all cost activities for FY 2010,

2013 and 2016. As shown, the cost mix has remained stable over the past five years. Indirect

cost represents about half and opportunity loss represents 12 percent of total cost of outages.

Bar Chart 2: Percentage cost structure of unplanned data center outages

Comparison of 2010, 2013 and 2016 results

| Cost type | 2010 | 2013 | 2016 |
|---|---|---|---|
| Direct cost | 38% | 35% | 36% |
| Indirect cost | 50% | 52% | 51% |
| Opportunity cost | 12% | 12% | 12% |

Table 3 summarizes the cost of unplanned outages for all 63 data centers. Please note that cost

statistics are derived from the analysis of one unplanned outage incident.

Table 3: Cost summary for unplanned outages

| Cost categories | Total | Mean | Median | Minimum | Maximum |
|---|---|---|---|---|---|
| Third parties | 625,401 | 9,927 | 6,970 | 1,551 | 27,600 |
| Equipment | 597,114 | 9,478 | 8,865 | 1,249 | 67,783 |
| Ex-post activities | 530,964 | 8,428 | 11,566 | - | 36,575 |
| Recovery | 1,334,151 | 21,177 | 17,570 | 1,900 | 58,171 |
| Detection | 1,682,856 | 26,712 | 22,813 | 877 | 69,100 |
| IT productivity | 3,898,440 | 61,880 | 56,789 | 6,994 | 125,600 |
| End-user productivity | 8,706,159 | 138,193 | 124,551 | 15,600 | 456,912 |
| Lost revenue | 13,141,737 | 208,599 | 197,500 | 26,591 | 755,810 |
| Business disruption | 16,125,669 | 255,963 | 201,550 | 15,750 | 812,440 |
| Total cost | 46,642,491 | 740,357 | 648,174 | 70,512 | 2,409,991 |
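The totals and means in Table 3 can be checked against the sample size of 63 data centers, and the means can be turned into each category's share of the average outage cost. The sketch below uses only the Total and Mean columns from the table; the share calculation is a derived illustration, not a figure the report presents.

```python
N_DATA_CENTERS = 63

# Table 3: category -> (total across all data centers, mean per data center)
TABLE_3 = {
    "Third parties": (625_401, 9_927),
    "Equipment": (597_114, 9_478),
    "Ex-post activities": (530_964, 8_428),
    "Recovery": (1_334_151, 21_177),
    "Detection": (1_682_856, 26_712),
    "IT productivity": (3_898_440, 61_880),
    "End-user productivity": (8_706_159, 138_193),
    "Lost revenue": (13_141_737, 208_599),
    "Business disruption": (16_125_669, 255_963),
}
REPORTED_TOTAL = 46_642_491
REPORTED_MEAN = 740_357

# Column sums should reproduce the "Total cost" row.
grand_total = sum(total for total, _ in TABLE_3.values())
mean_total = sum(mean for _, mean in TABLE_3.values())

# Each mean should equal its total divided by the number of data centers.
means_consistent = all(total == mean * N_DATA_CENTERS for total, mean in TABLE_3.values())

# Derived: share of the average outage cost attributable to each category.
shares = {name: mean / mean_total * 100 for name, (_, mean) in TABLE_3.items()}

print(grand_total == REPORTED_TOTAL, mean_total == REPORTED_MEAN, means_consistent)
for name, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {share:.1f}% of mean outage cost")
```

Sorting by share makes it easy to see that business disruption and lost revenue together account for well over half of the average cost.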

Bar Chart 3 reveals a relatively consistent pattern across nine cost categories over five years

(three studies). The cost associated with business disruption, which includes reputation damages

and customer churn, represents the most expensive cost category. Least expensive involves the

engagement of third parties such as consultants to aid in the resolution of the incident.

Bar Chart 3: Comparison of activity cost categories

Comparison of 2010, 2013 and 2016 results

$1,000 omitted

| Cost category | 2010 | 2013 | 2016 |
|---|---|---|---|
| Business disruption | 179.8 | 238.7 | 256.0 |
| Lost revenue | 118.1 | 183.7 | 208.6 |
| End-user productivity | 96.2 | 140.5 | 138.2 |
| IT productivity | 42.5 | 53.6 | 61.9 |
| Detection | 22.3 | 23.8 | 26.7 |
| Recovery | 20.9 | 22.0 | 21.2 |
| Ex-post activities | 9.5 | 9.6 | 8.4 |
| Equipment | 9.1 | 9.7 | 9.5 |
| Third parties | 7.0 | 8.6 | 9.9 |

Bar Chart 4 provides the total cost of unplanned outages for the 15 industry segments included in our benchmark sample. Analysis by industry is limited because of a small sample size; however, it is interesting to see wide variation across segments ranging from a high of $994,000 (financial services) to a low of $476,000 (public sector).²

Bar Chart 4: Distribution of total cost for 15 industry segments

$1,000 omitted

| Industry segment | Total cost |
|---|---|
| Financial services | $994 |
| Communications | $970 |
| Healthcare | $918 |
| eCommerce | $909 |
| Co-location | $849 |
| Research | $807 |
| Consumer products | $781 |
| Industrial | $761 |
| Retail | $758 |
| Education | $648 |
| Transportation | $645 |
| Services | $570 |
| Hospitality | $514 |
| Media | $506 |
| Public sector | $476 |

² Small sample segments limit our ability to draw inferences about industry differences.

Bar Chart 5 compares costs for partial unplanned outages and complete unplanned outages. All

three studies show complete outages are more than twice as expensive as partial outages.

Bar Chart 5: Cost for partial and total shutdown

Comparison of 2010, 2013 and 2016 results

| Year | Partial unplanned outage | Total unplanned outage | Overall average cost |
|---|---|---|---|
| 2010 | $258,149 | $680,711 | $505,502 |
| 2013 | $350,413 | $901,560 | $690,204 |
| 2016 | $499,800 | $946,788 | $740,357 |

Bar Chart 6 compares the average duration (minutes) of the event for partial and complete outages. In this year’s study, complete unplanned outages, on average, last 66 minutes longer than partial outages. It is also interesting to note a U-shaped pattern in the duration of complete outages over six years: on average, they decreased by 15 minutes between 2010 and 2013 and increased by 11 minutes between 2013 and 2016. One possible reason for the increase in duration is the rise in cyber attacks, which are difficult to detect and contain.

Bar Chart 6: Duration for partial and total shutdown (measured in minutes)

Comparison of 2010, 2013 and 2016 results

| Year | Partial unplanned outage | Total unplanned outage | Overall average |
|---|---|---|---|
| 2010 | 59 | 134 | 97 |
| 2013 | 56 | 119 | 86 |
| 2016 | 64 | 130 | 95 |

Graph 1 shows the relationship between outage cost and duration of the incident. The graph is

organized in ascending order by duration of the outage in minutes. Accordingly, observation 1

has the shortest duration and observation 63 has the longest duration. The regression line is

derived from the analysis of all 63 data centers. Clearly, these results show that the cost of

outage is linearly related to the duration of the outage.

Graph 1: Relationship between cost and duration of unplanned outages

[Line graph. Horizontal axis: the 63 data centers in ascending order by duration of unplanned outage (in minutes). Vertical axis: total cost, $0 to $3,000,000. Series: Total cost, Regression.]
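The report does not publish the 63 underlying observations or the fitted coefficients, so the regression in Graph 1 cannot be reproduced here. As a minimal sketch of the technique only, the following fits an ordinary least-squares line of cost on duration. The data points are synthetic, generated from an arbitrary line purely to show the mechanics; they are not the study's data, and the slope and intercept carry no empirical meaning.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# SYNTHETIC illustration: durations in minutes and costs placed exactly on
# the arbitrary line cost = 8,000 * minutes + 10,000. Not the study's data.
minutes = [13, 50, 95, 200, 415]
costs = [8_000 * m + 10_000 for m in minutes]

slope, intercept = fit_line(minutes, costs)
print(f"estimated cost per additional minute: ${slope:,.0f}; intercept: ${intercept:,.0f}")
```

With real observations the points would scatter around the line, and the slope would be read as the estimated additional cost per extra minute of downtime.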

Bar Chart 7 reports the minimum, median, mean and maximum cost per minute of unplanned

outages computed from 63 data centers. This chart shows that the most expensive cost of an

unplanned outage is over $17,000 per minute. On average, the cost of an unplanned outage per

minute is nearly $9,000 per incident.

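Bar Chart 7: Total cost per minute of an unplanned outage

Comparison of 2010, 2013 and 2016

Value labels shown for each statistic across the three studies, highest to lowest:

| Statistic | Highest | Middle | Lowest |
|---|---|---|---|
| Minimum | $939 | $926 | $573 |
| Median | $7,003 | $6,828 | $5,188 |
| Mean | $8,851 | $7,908 | $5,617 |
| Maximum | $17,244 | $16,246 | $11,080 |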
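The mean cost per minute ($8,851) is higher than the figure obtained by dividing the mean outage cost ($740,357) by the mean duration (95 minutes). One plausible explanation, offered here as an assumption since the report does not describe the calculation, is that cost per minute is computed for each outage and then averaged, which weights short, expensive outages more heavily. The sketch below shows the two calculations; the two-outage example is hypothetical.

```python
MEAN_COST_2016 = 740_357          # mean total cost, Table 3
MEAN_DURATION_MIN = 95            # mean duration, Table 2
REPORTED_MEAN_PER_MIN = 8_851     # mean cost per minute, Bar Chart 7

# Ratio of means: average cost divided by average duration.
ratio_of_means = MEAN_COST_2016 / MEAN_DURATION_MIN
print(f"ratio of means: ${ratio_of_means:,.0f} per minute "
      f"(reported mean per minute: ${REPORTED_MEAN_PER_MIN:,})")

# Hypothetical two-outage example showing why the two averages can differ.
# (cost in dollars, duration in minutes); illustrative values only.
outages = [(100_000, 10), (900_000, 180)]

per_outage_rates = [cost / minutes for cost, minutes in outages]
mean_of_ratios = sum(per_outage_rates) / len(per_outage_rates)

total_cost = sum(cost for cost, _ in outages)
total_minutes = sum(minutes for _, minutes in outages)
example_ratio_of_means = total_cost / total_minutes

print(f"example mean of per-outage rates: ${mean_of_ratios:,.0f} per minute")
print(f"example ratio of means: ${example_ratio_of_means:,.0f} per minute")
```

When estimating the cost of a specific outage of known length, the choice between the two figures matters: the per-minute mean will overstate the cost of long outages if short outages are the ones with the highest per-minute rates.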

Graph 2 shows the relationship between data center size as measured by square footage and the

total cost of unplanned outages. The regression line is computed from the analysis of all 63 data

centers. Similar to the duration analysis in Graph 1, these results show that the cost of outage is

linearly related to the size of the data center.

Graph 2: Relationship between cost and data center square footage

[Line graph. Horizontal axis: the 63 data centers in ascending order by data center size (square feet). Vertical axis: total cost, $0 to $3,000,000. Series: Total cost, Regression.]

Bar Chart 8 reports the mean cost per square foot of unplanned outages based on all 63 data

centers according to quartile. This chart shows that the most expensive cost of an unplanned

outage is $99 per square foot for the smallest quartile of companies. The lowest average is $50

for larger organizations.

Bar Chart 8: Unplanned outage cost per square foot of data center by quartile

Average data center SF is reported for each quartile

| Quartile | Average data center SF | Cost per square foot |
|---|---|---|
| Quartile 1 | 3,301 | $99.4 |
| Quartile 2 | 7,540 | $80.9 |
| Quartile 3 | 14,030 | $61.5 |
| Quartile 4 | 27,275 | $50.1 |
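The quartile figures show cost per square foot falling as data centers get larger, while Graph 2 shows total cost rising with size. Both can hold at once, as a rough calculation illustrates. The sketch below multiplies each quartile's average square footage by its mean cost per square foot. This product is only an approximation of the quartile's average outage cost, because a mean of per-site ratios times a mean size is not the same as a mean of per-site costs; the implied figures are derived here and do not appear in the report.

```python
# Bar Chart 8: (quartile, average square footage, mean cost per square foot in $)
QUARTILES = [
    ("Quartile 1", 3_301, 99.4),
    ("Quartile 2", 7_540, 80.9),
    ("Quartile 3", 14_030, 61.5),
    ("Quartile 4", 27_275, 50.1),
]

# Approximate implied outage cost per quartile (derived, not reported).
implied_costs = [sf * cost_per_sf for _, sf, cost_per_sf in QUARTILES]

for (name, sf, cost_per_sf), implied in zip(QUARTILES, implied_costs):
    print(f"{name}: {sf:,} SF x ${cost_per_sf}/SF = approx. ${implied:,.0f}")

# Cost per square foot falls with size while implied total cost rises.
per_sf_decreasing = all(
    earlier[2] > later[2] for earlier, later in zip(QUARTILES, QUARTILES[1:])
)
total_increasing = all(
    earlier < later for earlier, later in zip(implied_costs, implied_costs[1:])
)
print(per_sf_decreasing, total_increasing)
```

In other words, larger facilities appear to face larger outage bills in absolute terms but spread them over more floor space.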

Bar Chart 9 analyzes the sample of 63 data centers by the primary root cause of unplanned

outage. The “other” category refers to incidents where the root cause could not be determined. As

shown, 25 percent of companies cite UPS system failure as the primary root cause of the incident.

Accidental or human error and cyber attack are each cited by 22 percent as the primary root cause of the outage.

For human error, this is the same percentage as found in 2013, indicating no progress in reducing

what should be an avoidable cause of downtime. The 22 percent figure for cyber crime

represents a 20 percent increase from 2013 and a 167 percent increase from 2010. IT equipment

failure represents only four percent of all outages studied in this research.

Bar Chart 9: Root causes of unplanned outages

Comparison of 2010, 2013 and 2016 results

| Root cause | 2010 | 2013 | 2016 |
|---|---|---|---|
| UPS system failure | 29% | 24% | 25% |
| Cyber crime (DDoS) | 2% | 18% | 22% |
| Accidental/human error | 24% | 22% | 22% |
| Water, heat or CRAC failure | 15% | 12% | 11% |
| Weather related | 12% | 12% | 10% |
| Generator failure | 10% | 7% | 6% |
| IT equipment failure | 5% | 4% | 4% |
| Other | 2% | 0% | 0% |

Bar Chart 10 reports the average cost of outage by primary root cause of the incident. As shown

below, IT equipment failures result in the highest outage cost, followed by cyber crime. The least

expensive root cause appears to be related to weather followed by accidental/ human errors.

Bar Chart 10: Total cost by primary root causes of unplanned outages

Comparison of 2010, 2013 and 2016 results

$1,000 omitted

| Root cause | 2010 | 2013 | 2016 |
|---|---|---|---|
| IT equipment failure | $750 | $959 | $995 |
| Cyber crime (DDoS) | $613 | $822 | $981 |
| UPS system failure | $688 | $678 | $709 |
| Water, heat or CRAC failure | $489 | $517 | $589 |
| Generator failure | $463 | $501 | $528 |
| Accidental/human error | $298 | $380 | $489 |
| Weather related | $395 | $436 | $455 |

Part 6. Conclusion

The 2016 Cost of Data Center Outages study builds upon the previous two studies to provide

historical perspective on the impact of data center downtime on businesses in various industries.

The findings reveal incremental, and somewhat predictable, increases in the cost of downtime.

Consequently, it can be easy to underestimate the change the industry has endured since 2010.

When the first Cost of Data Center Outages study was published in 2010, Facebook reached 500

million active users. Today the number has grown to 1.5 billion, necessitating a massive data

center development program.

Similar rates of growth have occurred in other data center-dependent businesses. According to research conducted by BI Intelligence, fewer than 500 million smartphones were shipped globally in 2011. That number was expected to be over 1.5 billion for 2015, with 64 percent of US adults now owning a smartphone.

Cloud computing is in the midst of a similar growth spurt today. Goldman Sachs projects a 30

percent CAGR between 2013 and 2018. The Internet of Things will likely drive the next wave of

growth. Specifically, IDC predicts the global IoT market will grow to $1.7 trillion in 2020 from

$655.8 billion in 2014.

All of these developments mean more data flowing across the Internet and through data

centers—and more opportunities for businesses to use technology to grow revenue and improve

business performance. The data center will be central to leveraging those opportunities.

As organizations like Facebook invest millions in data center development, they are exploring

new approaches to data center design and management to both increase agility and reduce the

cost of downtime. However, as this study shows, costs continue to rise and the causes of data

center downtime are very similar today to what they were six years ago—with the notable

exception of the dramatic rise in cyber attacks, which represents a major challenge for data

center operators in the coming years.

This predictable historical pattern highlights the reality that, while there has been a need to move

quickly to support the dramatic changes that occurred in social media, mobile devices and cloud

services, the lifespan of a typical data center is relatively long and will extend across multiple

significant cultural and technology changes. That means data centers designed before anyone

was talking about the Internet of Things will be expected to adapt and play a critical role in

supporting the Internet of Things.

For this most recent study, we are seeing trends in cost and causes of downtime that reflect a

relatively stable industry dealing with many of the same issues as in 2010. But we also

understand that there is a strong undertow of change occurring in the industry and we expect this

change to begin to surface in future Cost of Data Center Outages research.

Part 7. Caveats

This study utilizes a confidential and proprietary benchmark method that has been successfully

deployed in earlier Ponemon Institute research. However, there are inherent limitations to

benchmark research that need to be carefully considered before drawing conclusions from

findings.

• Non-statistical results: The purpose of this study is descriptive rather than normative inference. The current study draws upon a representative, non-statistical sample of data centers, all US-based entities experiencing at least one unplanned outage over the past 12 months. Statistical inferences, margins of error and confidence intervals cannot be applied to these data given the nature of our sampling plan.

• Non-response: The current findings are based on a small representative sample of completed case studies. An initial mailing of benchmark surveys was sent to a benchmark group of over 600 organizations, all believed to have experienced one or more outages over the past 12 months. Sixty-three data centers provided usable benchmark surveys. Non-response bias was not tested so it is always possible companies that did not participate are substantially different in terms of the methods used to manage the detection, containment and recovery process, as well as the underlying costs involved.

• Sampling-frame bias: Because our sampling frame is judgmental, the quality of results is influenced by the degree to which the frame is representative of the population of companies and data centers being studied. It is our belief that the current sampling frame is biased toward companies with more mature data center operations.

• Company-specific information: The benchmark information is sensitive and confidential. Thus, the current instrument does not capture company-identifying information. It also allows individuals to use categorical response variables to disclose demographic information about the company and industry category. Industry classification relies on self-reported results.

• Unmeasured factors: To keep the survey concise and focused, we decided to omit other important variables from our analyses such as leading trends and organizational characteristics. The extent to which omitted variables might explain benchmark results cannot be estimated at this time.

• Extrapolated cost results: The quality of survey research is based upon the integrity of confidential responses received from benchmarked organizations. While certain checks and balances can be incorporated into the survey process, there is always the possibility that respondents did not provide truthful responses. In addition, the use of a cost estimation technique (termed shadow costing methods) rather than actual cost data could create significant bias in presented results.

If you have questions or comments about this report, please contact us by letter, phone call or

email:

Ponemon Institute LLC

Attn: Research Department

2308 US 31 North

Traverse City, Michigan 49686 USA

1.800.877.3118

Ponemon Institute

Advancing Responsible Information Management

Ponemon Institute is dedicated to independent research and education that advances responsible

information and privacy management practices within business and government. Our mission is to conduct

high-quality empirical studies on critical issues affecting the management and security of sensitive

information about people and organizations.

As a member of the Council of American Survey Research Organizations (CASRO), we uphold strict

data confidentiality, privacy and ethical research standards. We do not collect any personally identifiable

information from individuals (or company-identifiable information in our business research). Furthermore, we

have strict quality standards to ensure that subjects are not asked extraneous, irrelevant or improper

questions.
