
QM1 Typed Notes

Uploaded by

karl hemming


Quantitative Methods 1 - Lecture notes - QM 1

Quantitative Methods 1 (University of Melbourne)

StuDocu is not sponsored or endorsed by any college or university

Downloaded by karl hemming (mkwlh1000@gmail.com)


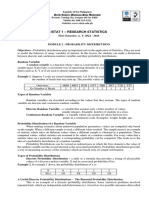

[ECON10005: QUANTITATIVE METHODS 1:

Semester 1, 2013 LECTURE REVISION NOTES]

Lecture One:

Descriptive statistics is a process of concisely summarising the characteristics of sets of data.

Inferential statistics involves constructing estimates of these characteristics, and testing hypotheses

about the world, based on sets of data.

Modelling and analysis combines these to build models that represent relationships and trends in

reality in a systematic way.

Types of Data:

Numerical or quantitative data are real numbers with specific numerical values.

Nominal or qualitative data are non-numerical data sorted into categories on the basis of qualitative

attributes.

Ordinal or ranked data are nominal data that can be ranked.

- The population is the complete set of data that we seek to obtain information about

- The sample is a part of the population that is selected (or sampled) in some way using a

sampling frame

- A characteristic of a population is called a parameter

- A characteristic of a sample is called a statistic

- The difference between our estimate and the true (usually unknown) parameter is the

sampling error

- In a random sample, all population members have an equal chance of being sampled

In a population, a perfect stratum would be a group with:

- individual observations that are similar to the other observations in that stratum

- different characteristics from other strata in the population

- stratified sampling can improve accuracy

- may be more costly

In a population, a perfect cluster would be a group with:

- individual observations that are different from the other observations in that cluster

- similar characteristics to other clusters in the population

- can reduce costs

- may be less accurate

This is the cost/accuracy trade off

Lecture Two:

Ceteris paribus: the assumption of holding all other variables constant

Cross-sectional data are:

- collected from (across) a number of different entities (such as individuals, households, firms,

regions or countries) at a particular point in time

- usually a random sample (but not always)

- not able to be arranged in any “natural” order (we can sort or rank the data into any order

we choose)

- often (but not only) usefully presented with histograms

Time series data are:

- collected over time on one particular ‘entity’

- data with observations which are likely to depend on what has happened in the past


- data with a natural ordering according to time

- often (but not only) presented as line charts

Lecture Three:

Measures of Centre:

Mean/Average: population: $\mu$; sample: $\bar{x} = \frac{\sum x}{n}$

- easy to calculate

- sensitive to extreme observations

Median: middle number, or average of two middle numbers

- not sensitive to extreme observations

Mode: most frequently occurring number

- only used for finding most common outcome

x̄ – µ = sampling error

If a distribution is uni-modal then we can show that it is:

- Symmetrical if mean = median = mode

- Right-skewed if mean > median > mode

- Left-skewed if mode > median > mean

Measures of Variation:

Population variance measures the average of the squared deviations between each observation and the population mean: $\sigma^2 = \frac{1}{N}\sum (x_i - \mu)^2$

Population standard deviation is the square root of population variance: $\sigma = \sqrt{\frac{1}{N}\sum (x_i - \mu)^2}$

Sample variance measures the average of the squared deviations between each observation and the sample mean, using a divisor of n − 1: $s^2 = \frac{1}{n-1}\sum (x_i - \bar{x})^2$

Sample standard deviation is the square root of sample variance: $s = \sqrt{\frac{1}{n-1}\sum (x_i - \bar{x})^2}$

Coefficient of variation measures the variation in a sample (given by its standard deviation) relative to that sample's mean. It is expressed as a percentage to provide a unit-free measurement, letting us compare different samples: $CV = 100 \times \frac{s}{\bar{x}}\,\%$
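The measures of centre and variation above can be checked by hand on a small data set. The sketch below is only an illustration: the function name `describe` and the sample values are made up for the example and are not part of the lecture material.

```python
from math import sqrt

def describe(data):
    """Return the Lecture Three summary measures for a list of numbers."""
    n = len(data)
    mean = sum(data) / n
    ordered = sorted(data)
    mid = n // 2
    # Median: middle value, or the average of the two middle values.
    median = ordered[mid] if n % 2 else (ordered[mid - 1] + ordered[mid]) / 2
    ss = sum((x - mean) ** 2 for x in data)  # sum of squared deviations
    pop_var = ss / n            # divisor N (data treated as a population)
    sample_var = ss / (n - 1)   # divisor n - 1 (data treated as a sample)
    s = sqrt(sample_var)
    return {
        "mean": mean,
        "median": median,
        "pop_var": pop_var,
        "sample_var": sample_var,
        "sample_sd": s,
        "cv_percent": 100 * s / mean,
    }

# Hypothetical sample, chosen so the arithmetic is easy to follow.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
summary = describe(sample)
print(summary)
```

Here the sum of squared deviations is 32, so the population variance is 32/8 = 4 while the sample variance is 32/7, which shows the effect of the n − 1 divisor.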

Lecture Four:

Measures of Association:

Covariance measures the co-variation between two sets of observations.

With a population of size N having observations (x1, y1), (x2, y2), …, (xN, yN) and with μx, μy being the respective means of the xi and yi terms, covariance is calculated as,

$COV(X, Y) = \frac{1}{N}\sum_{i=1}^{N}(x_i - \mu_x)(y_i - \mu_y)$


If we have a sample of size n, with sample means $\bar{x}$ and $\bar{y}$, the covariance is calculated as

$cov(x, y) = \frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})$

Problems with covariance: it is difficult to interpret the strength of a relationship because covariance

is sensitive to units.

Correlation gives us a measure of association which is not affected by units.

Sample correlation coefficient: $r = \frac{cov(x, y)}{s_x s_y}$, where $s_x$ and $s_y$ are sample standard deviations

Population correlation coefficient: $\rho = \frac{COV(X, Y)}{\sigma_x \sigma_y}$, where $\sigma_x$ and $\sigma_y$ are population standard deviations

- r=1, perfect positive linear relationship

- r=-1, perfect negative linear relationship

- r=0, no linear relationship
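To see why covariance is sensitive to units while correlation is not, the sketch below computes both for the same data measured on two scales. The data and the function names `sample_cov` and `sample_corr` are assumptions made for the illustration.

```python
from math import sqrt

def sample_cov(xs, ys):
    """Sample covariance with the n - 1 divisor."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    return sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (n - 1)

def sample_corr(xs, ys):
    """Sample correlation r = cov(x, y) / (s_x * s_y)."""
    s_x = sqrt(sample_cov(xs, xs))  # cov(x, x) is the sample variance of x
    s_y = sqrt(sample_cov(ys, ys))
    return sample_cov(xs, ys) / (s_x * s_y)

# Hypothetical data: hours studied and marks out of 100.
hours = [1, 2, 3, 4, 5]
marks = [52, 55, 61, 64, 68]
marks_as_fraction = [m / 100 for m in marks]  # same marks, different units

cov_marks = sample_cov(hours, marks)
cov_fraction = sample_cov(hours, marks_as_fraction)
r_marks = sample_corr(hours, marks)
r_fraction = sample_corr(hours, marks_as_fraction)
print(cov_marks, cov_fraction, r_marks, r_fraction)
```

Rescaling the marks divides the covariance by 100 but leaves r unchanged, which is the reason correlation is preferred for judging the strength of a linear relationship.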

Lecture Five:

A random experiment is a procedure that generates outcomes that are not known with certainty

until observed.

A random variable (RV) is a variable with a value that is determined by the outcome of an

experiment.

A discrete random variable has a countable number (K) of possible outcomes, each with a specific probability associated with it.

Univariate data has one random variable.

Bivariate data has two random variables.

If X is a random variable with K possible outcomes, then an individual value of X is written as $x_i$, i = 1, 2, 3, …, K

The probability of observing X = $x_i$ is written as $P(X = x_i)$ or $p(x_i)$ where

- $0 \le p(x_i) \le 1$

- $\sum p(x_i) = 1$

- That is, all probabilities must lie between 0 and 1 and all added together equal 1 in total

Expected Value/Mean of a random variable: is the value of x one would expect to get on average over a large/infinite number of repeated trials: $\mu_x = E(X) = \sum x_i\, p(x_i)$

Variance of a random variable: is the probability-weighted average of all squared deviations between each possible outcome and the expected value: $\sigma^2 = V(X) = \sum (x_i - \mu_x)^2\, p(x_i)$ or $\sum x_i^2\, p(x_i) - \mu_x^2$
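As a quick check that the two variance formulas agree, the sketch below evaluates them for a small discrete distribution. The distribution (number of heads in two fair coin tosses) and the function names are chosen only for illustration.

```python
def is_valid_distribution(ps):
    """Each probability lies in [0, 1] and they sum to 1."""
    return all(0 <= p <= 1 for p in ps) and abs(sum(ps) - 1) < 1e-12

def expected_value(xs, ps):
    return sum(x * p for x, p in zip(xs, ps))

def variance(xs, ps):
    """Probability-weighted average of squared deviations from the mean."""
    mu = expected_value(xs, ps)
    return sum((x - mu) ** 2 * p for x, p in zip(xs, ps))

def variance_shortcut(xs, ps):
    """Equivalent form: sum of x^2 p(x) minus the squared mean."""
    mu = expected_value(xs, ps)
    return sum(x ** 2 * p for x, p in zip(xs, ps)) - mu ** 2

# Illustrative distribution: heads in two tosses of a fair coin.
outcomes = [0, 1, 2]
probs = [0.25, 0.5, 0.25]

mu_x = expected_value(outcomes, probs)
var_x = variance(outcomes, probs)
var_x_short = variance_shortcut(outcomes, probs)
print(mu_x, var_x, var_x_short)
```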

Lecture Six:

Rules of Expected Values and Variances:

- E(a) = a, V(a) = 0

- E(aX) = aE(X), V(aX) = a²V(X)

- E(a + X) = a + E(X), V(a + X) = V(X)

- E(a + bX) = a + bE(X), V(a + bX) = b²V(X)


Binomial Distribution:

- Each experiment is independent

- There are n trials each with two possible outcomes, success (with probability p) or failure (with probability q = 1 − p)

Binomial random variable is the total number of successes in the n trials

The probability of x successes is calculated by; $P(X = x) = \frac{n!}{x!(n-x)!}\, p^x (1-p)^{n-x}$

Binomial distributions are written as, X~b(n,p), n=number of trials, p=probability of success.
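The binomial formula can be evaluated directly with the standard library. The sketch below is illustrative: the values n = 10 and p = 0.3 are arbitrary, and `binomial_pmf` is a name made up for the example.

```python
from math import comb

def binomial_pmf(x, n, p):
    """P(X = x) for X ~ b(n, p): n!/(x!(n-x)!) * p^x * (1-p)^(n-x)."""
    return comb(n, x) * p ** x * (1 - p) ** (n - x)

n, p = 10, 0.3  # arbitrary illustrative values
pmf = [binomial_pmf(x, n, p) for x in range(n + 1)]

# Mean and variance computed from the pmf itself.
mean = sum(x * px for x, px in enumerate(pmf))
var = sum((x - mean) ** 2 * px for x, px in enumerate(pmf))
print(sum(pmf), mean, var)
```

The probabilities sum to 1, and the mean and variance computed from the pmf match np and npq, the results quoted later for a binomial random variable.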

Lecture Seven:

Discrete random variable has a countable number of possible values

Continuous random variable has an uncountable number of possible values within an interval between two points

Normal Distribution:

Normal distribution is bell-shaped and symmetrical.

The total area under the curve is equal to 1.

X~N(μ,σ2), X is normally distributed with a mean μ and variance σ2

Standard Normal Distribution:

Any X value can be standardised; $Z = \frac{X - \mu}{\sigma}$

The standard normal distribution has a mean of 0 and a standard deviation and variance of 1.

The z score shows the number of standard deviations the corresponding observation of x lies away

from the population mean.

Finding an X value for a specific z: 𝑋 = 𝜇 + 𝜎𝑍
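A minimal sketch of standardising and un-standardising, using Python's `statistics.NormalDist` in place of a printed z table. The mean of 100 and standard deviation of 15 are hypothetical values picked for the example.

```python
from statistics import NormalDist

mu, sigma = 100.0, 15.0  # hypothetical population mean and standard deviation

def z_score(x, mu, sigma):
    """Number of standard deviations x lies away from the mean."""
    return (x - mu) / sigma

def x_from_z(z, mu, sigma):
    """Invert the standardisation: X = mu + sigma * Z."""
    return mu + sigma * z

z = z_score(130, mu, sigma)
x_back = x_from_z(z, mu, sigma)

# P(X < 130) is the same whether computed on the X scale or the Z scale.
prob_via_z = NormalDist().cdf(z)
prob_via_x = NormalDist(mu, sigma).cdf(130)
print(z, x_back, prob_via_z)
```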

Lecture Eight: Good Friday


Lecture Nine:

If we take repeated samples of size n from a population X and record 𝑥̅ for each, the collection of 𝑥̅

can be represented as a random variable 𝑋̅ with its own distribution. This is called a sampling

distribution.

The distribution of 𝑋̅ is different from the distribution of the population X.

The mean of the sampling distribution is $\mu_{\bar{X}}$

The standard deviation of the sampling distribution is $\sigma_{\bar{X}}$, known as the standard error.

- Sampling mean is equal to population mean: $\mu_{\bar{X}} = \mu$

- The standard error is less than standard deviation: $\sigma_{\bar{X}} < \sigma$

- $V(\bar{X}) = \sigma_{\bar{X}}^2 = \frac{\sigma^2}{n}$

- $\sqrt{V(\bar{X})} = \sigma_{\bar{X}} = \frac{\sigma}{\sqrt{n}}$

Central Limit Theorem:

If repeated samples of size n > 30 are taken from X, the sampling distribution of $\bar{X}$ will be approximately normal. The larger n is, the more accurate this approximation is.

If X is normally distributed, $\bar{X}$ will always be normally distributed.

Standardising $\bar{X}$:

$Z = \frac{\bar{X} - \mu_{\bar{X}}}{\sigma_{\bar{X}}} = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$
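The standardisation of the sample mean can be sketched as follows. The population values (μ = 50, σ = 10) and the sample size n = 25 are hypothetical, and the calculation assumes the sample mean is (approximately) normal.

```python
from math import sqrt
from statistics import NormalDist

def standard_error(sigma, n):
    """Standard deviation of the sampling distribution of the mean."""
    return sigma / sqrt(n)

def prob_mean_above(x_bar, mu, sigma, n):
    """P(sample mean > x_bar), assuming the sample mean is normal."""
    z = (x_bar - mu) / standard_error(sigma, n)
    return 1 - NormalDist().cdf(z)

# Hypothetical population: mu = 50, sigma = 10, samples of size 25.
se = standard_error(10, 25)
p_above_54 = prob_mean_above(54, 50, 10, 25)
print(se, p_above_54)
```

With these numbers the standard error is 2, so a sample mean of 54 is two standard errors above μ even though it is only 0.4 population standard deviations above it.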

Lecture Ten:

X is a binomial random variable where p is the probability of success and q = 1-p

In general; population proportion:

- $\mu = np$

- $\sigma^2 = npq$

Population proportion refers to the number of times a specific outcome occurs within a population, X, relative to the population size N: $p = \frac{X}{N}$

Sample proportion refers to the number of times a specific outcome occurs within a sample, X, relative to the sample size n: $\hat{p} = \frac{X}{n}$

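In general; sampling proportion:

- $\mu_{\hat{p}} = E\left(\frac{X}{n}\right) = p$

- $\sigma_{\hat{p}}^2 = V\left(\frac{X}{n}\right) = \frac{pq}{n}$

We can approximate the distribution of $\hat{p} = \frac{X}{n}$ by a normal distribution with a mean p and variance pq/n: $\hat{p} \approx N\left(p, \frac{pq}{n}\right)$, with a standard error of $\sqrt{\frac{pq}{n}}$, if $n\hat{p}$ and $n\hat{q}$ are both ≥ 5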


Lecture Eleven:

Sample statistics are known functions of sample data.

Before a sample is drawn from a population, a sample statistic is a random variable.

Once the sample is drawn, the statistic becomes a constant and is no longer random.

Random variables have probability distributions.

The distribution of a statistic is called a sampling distribution.

Exact sampling distributions depend upon the distribution of the population from which the sample is drawn, i.e. a normal population will produce a normal sampling distribution. However, if the sample size is > 30, we can use the CLT to approximate normality.

An estimator is a sample statistic which is constructed to have specific properties

Eg. to estimate the centre of a distribution:

Estimator: $\hat{c} = \arg\min_c \sum_{i=1}^{n}(X_i - c)^2$, i.e. $\hat{c} = \bar{X}$

Eg. to minimise the sum of absolute deviations:

Estimator: $\hat{c} = \arg\min_c \sum |X_i - c|$, i.e. $\hat{c} = md$ (the median)

Principles of Estimation:

Unbiasedness: $E(\hat{\theta}) = \theta$; the estimator is right on average, in repeated samples

Consistency: Probability of estimator being wrong goes to zero as sample size gets big; $V(\bar{X}) = \frac{\sigma^2}{n} \to 0$ as $n \to \infty$


We can construct point estimators to make a specific guess of the parameter value, or interval estimators to guess a range of values in which the parameter may lie.

Sample statistics such as mean, median, variance etc. are all examples of point estimates of population parameters.

Confidence Intervals:

Rather than finding the probability content of a given interval, confidence intervals find the interval

on the basis of sample data with a given probability content. These intervals can then be used to

guess the location of population parameters.

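If $\bar{X} \sim N(\mu, \sigma_{\bar{X}}^2)$ then for given constants L and U, $\Pr(L \le \bar{X} \le U) = \Pr(z_L \le Z \le z_U)$

Additionally; $\Pr(z_L \le Z \le z_U) = \Pr(\bar{X} - z_U \sigma_{\bar{X}} \le \mu \le \bar{X} - z_L \sigma_{\bar{X}}) = p$ where $z_L$, $z_U$, $\mu$ are constant

This gives us the confidence interval: $[\bar{X} - z_U \sigma_{\bar{X}},\ \bar{X} - z_L \sigma_{\bar{X}}]$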

This means that in repeated samples of size n, the probability of a randomly chosen interval covering the true population mean, μ, is p.

The interval is random because $\bar{X}$ varies from sample to sample; μ is not random.

The probability, p, is the confidence level of the interval, $p = 1 - \alpha$

In constructing a confidence interval for μ we would set $z_L = -z_{\alpha/2}$ and $z_U = z_{\alpha/2}$

Interpretation of the confidence interval is that we are x% confident the interval covers the mean.

The confidence interval estimator is $\bar{X} \pm z_{\alpha/2}\left(\frac{\sigma}{\sqrt{n}}\right)$

- As the level of confidence increases, the z score becomes more extreme and the interval

widens

- As the population standard deviation increases, the standard error increases and the interval

widens

- As the sample size increases, the standard error decreases so the interval narrows
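The three effects listed above can be seen numerically. This is a sketch under the assumption that σ is known; the sample mean of 100, σ = 15 and the sample sizes are hypothetical, and `NormalDist().inv_cdf` stands in for the z table.

```python
from math import sqrt
from statistics import NormalDist

def z_interval(x_bar, sigma, n, confidence=0.95):
    """Return (lower, upper) for x_bar ± z_{α/2} · σ/√n."""
    alpha = 1 - confidence
    z = NormalDist().inv_cdf(1 - alpha / 2)  # upper-tail critical value
    margin = z * sigma / sqrt(n)
    return x_bar - margin, x_bar + margin

# Hypothetical numbers: sample mean 100, known sigma 15.
low_95, high_95 = z_interval(100, 15, 36)
low_99, high_99 = z_interval(100, 15, 36, confidence=0.99)  # more confidence
low_big_n, high_big_n = z_interval(100, 15, 144)            # larger sample

print((low_95, high_95), (low_99, high_99), (low_big_n, high_big_n))
```

Raising the confidence level widens the interval, and quadrupling the sample size halves its width.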

Lecture Twelve:

If we know neither μ nor σ², we replace σ² with s² and build our confidence intervals using t-values; $t = \frac{\bar{X} - \mu}{s/\sqrt{n}}$, using n − 1 degrees of freedom

If the table does not give the degrees of freedom you want, approximate, and write a note of why,

explaining the approximation used.

Confidence interval estimator: $\bar{X} \pm t_{\alpha/2,\,df}\left(\frac{s}{\sqrt{n}}\right)$; this can only be used if $\bar{X} \sim N$


- As the level of confidence increases, the t score becomes more extreme so the interval

widens

- As the sample standard deviation increases, the standard error increases so the interval

widens

- As the sample size increases, the interval narrows

Comparison of z and t values:

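Interval estimators in general:

Let $\hat{\theta}$ be the estimator of a parameter $\theta$, with the standard error of $\hat{\theta}$ being $s_{\hat{\theta}}$

A (1 − α)100% confidence interval for $\theta$ is $[\hat{\theta} - c_{\alpha/2}\, s_{\hat{\theta}},\ \hat{\theta} + c_{1-\alpha/2}\, s_{\hat{\theta}}]$ where $c_{1-\alpha/2}$ cuts off an upper tail probability of α/2.

To find a confidence interval estimate of the proportion we use; $\hat{p} \pm z_{\alpha/2}\sqrt{\frac{\hat{p}\hat{q}}{n}}$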

- As the level of confidence increases, the z scores become more extreme, widening the

interval

- As 𝑝̂ and 𝑞̂ approach 0.5, the standard error increases so the interval widens

- As the sample size increases, the standard error decreases, so the interval narrows

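Margin of error is half the width of an interval estimate, which is equal to $z_{\alpha/2}\left(\frac{\sigma}{\sqrt{n}}\right)$

Any specified maximum allowable margin of error is called the error bound, B.

$B = z_{\alpha/2}\left(\frac{\sigma}{\sqrt{n}}\right) \to B^2 = z_{\alpha/2}^2\,\frac{\sigma^2}{n}$

To find the sample size needed for a specified error bound we calculate; $n = z_{\alpha/2}^2\,\frac{\sigma^2}{B^2} = \left(\frac{z_{\alpha/2}\,\sigma}{B}\right)^2$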


For proportions, error bound; $B = z_{\alpha/2}\sqrt{\frac{\hat{p}\hat{q}}{n}}$; however to find n, we set $\hat{p} = 0.5$, which gives a conservative, wide interval estimate, and then solve the equation $n = \left(\frac{z_{\alpha/2}\sqrt{\hat{p}\hat{q}}}{B}\right)^2$
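The two sample-size formulas can be sketched as below. The error bounds, σ and confidence level are hypothetical, and rounding up to the next whole observation is an assumption of this example (it keeps the margin of error at or below B).

```python
from math import ceil, sqrt
from statistics import NormalDist

def z_upper(confidence):
    """Critical value z_{α/2} for a given confidence level."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)

def n_for_mean(sigma, bound, confidence=0.95):
    """n = (z_{α/2} · σ / B)^2, rounded up."""
    return ceil((z_upper(confidence) * sigma / bound) ** 2)

def n_for_proportion(bound, confidence=0.95, p_hat=0.5):
    """n = (z_{α/2} · sqrt(p̂ q̂) / B)^2, rounded up; p̂ = 0.5 is conservative."""
    return ceil((z_upper(confidence) * sqrt(p_hat * (1 - p_hat)) / bound) ** 2)

# Hypothetical targets: mean within 2 units (sigma = 15),
# proportion within 3 percentage points.
n_mean = n_for_mean(sigma=15, bound=2)
n_prop = n_for_proportion(bound=0.03)
print(n_mean, n_prop)  # 217 1068
```

Any other value of p̂ gives a smaller p̂q̂ than 0.25, so the p̂ = 0.5 choice can only overstate the required sample size.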

Lecture Thirteen:

- Estimators are statistics that are random variables before the sample is drawn

- We use estimators to guess unknown parameter values

- A point estimator gives a single guess of a parameter

- A confidence interval gives a range of parameter values that are consistent with observed

sample data

The null hypothesis is usually an assertion about a specific value of the parameter and always has the

‘=’ sign.

The null is assumed true unless the evidence in the data supports the notion that it is not true.

The alternative hypothesis is the maintained hypothesis, where the truth lies if the null is not true.

To reject the null hypothesis, statistically significant evidence must be found.

A Type I error occurs when we reject H0 when H0 is true.

A Type II error occurs when we do not reject H0 when H0 is false.

Values of Z that are evidence in support of H0 are in the acceptance region. All other values of Z are

in the rejection region.

Level of significance is the probability of the test statistic falling into the rejection region given H0 is

true, it is α.

The values of the test statistic that lie on the boundary between the acceptance and rejection

regions are called critical values.


Method:

1. State null and alternative hypotheses

2. Nominate test statistic (z, t etc.) and state its distribution under the null

3. State the decision rule

4. Calculate the test statistic

5. Interpret

Eg. At 5% level of significance, there is sufficient evidence in this sample to reject the null hypothesis

that the average width of paper produced by this machine has not changed.
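The five-step method can be sketched for a two-tailed z test. The paper-width numbers below (null mean 21.0, sample mean 21.1, known σ = 0.25, n = 25) are invented for the illustration and do not come from the lecture.

```python
from math import sqrt
from statistics import NormalDist

def two_tailed_z_test(x_bar, mu_0, sigma, n, alpha=0.05):
    """Return (z, critical value, reject?) for H0: mu = mu_0 vs HA: mu != mu_0."""
    # Step 2: under H0 the test statistic Z is standard normal.
    # Step 3: decision rule, reject H0 if |z| > z_{alpha/2}.
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    # Step 4: calculate the test statistic.
    z = (x_bar - mu_0) / (sigma / sqrt(n))
    return z, z_crit, abs(z) > z_crit

# Step 1 (hypothetical): H0: mu = 21.0, HA: mu != 21.0.
z, z_crit, reject = two_tailed_z_test(x_bar=21.1, mu_0=21.0, sigma=0.25, n=25)

# Step 5: interpret.
verdict = "sufficient" if reject else "insufficient"
print(f"z = {z:.3f}, critical value = {z_crit:.3f}: {verdict} evidence to reject H0")
```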

Lecture Fourteen:

Testing hypotheses about proportions:

For confidence intervals, p is unknown so we find standard error by using 𝑝̂

Because of this, we have two options when hypothesis testing:

1. Replace p with 𝑝̂

2. Replace p with 𝑝0

We tend to use option 2.

One-tailed tests:

If the hypothesis involves < or > then we have a one-tailed test. The method is the same; however, the shape of the rejection region is different.

With α = 0.05 in a one-tailed test in the lower tail, the critical value is $-z_{\alpha} = -z_{0.05} = -1.645$

Using p-value with one-tailed test:

The p-value is the marginal level of significance. This means any level of significance larger than the p-value will put the calculated test statistic in the rejection region, and any level of significance smaller than the p-value will put the calculated test statistic in the acceptance region.

The p-value gives the probability of observing a statistic at least as extreme as the observed value of the test statistic, given that the null hypothesis is true.

For z-statistics:

- For one-tailed tests in the upper tail, the p-value is P(Z>z|H0 is true)

- For one-tailed tests in the lower tail, the p-value is P(Z<z|H0 is true)

- For a two-tailed test, if z is positive, the p-value is 2P(Z>z|H0 is true) and if it is negative the p-value is 2P(Z<z|H0 is true); this can also be written as the p-value being 2P(Z>|z| | H0 is true)
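The three p-value cases for z statistics can be written as one small function. This is a sketch: the function name `p_value` and the `tail` labels are made up for the example, and the normal cdf from the standard library replaces table look-ups.

```python
from statistics import NormalDist

def p_value(z, tail):
    """p-value of an observed z statistic; tail is 'upper', 'lower' or 'two'."""
    phi = NormalDist().cdf  # standard normal cdf, P(Z < z)
    if tail == "upper":
        return 1 - phi(z)             # P(Z > z | H0 true)
    if tail == "lower":
        return phi(z)                 # P(Z < z | H0 true)
    if tail == "two":
        return 2 * (1 - phi(abs(z)))  # 2 P(Z > |z| | H0 true)
    raise ValueError("tail must be 'upper', 'lower' or 'two'")

p_upper = p_value(1.645, "upper")
p_lower = p_value(-1.645, "lower")
p_two = p_value(1.96, "two")
print(p_upper, p_lower, p_two)
```

All three values are close to 0.05, matching the critical values ±1.645 (one-tailed) and ±1.96 (two-tailed) at the 5% level of significance.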


The more extreme the p-value, the more strongly the data point towards one of the hypotheses (null or alternative). A very small p-value suggests the data are unlikely to have come from the population described in the null, and a big p-value means a statistic like the one observed is quite likely to occur if the null is true

Power of a test:

Let β be the P(Type II error | H0 is false) = P(Accepting H0 | H0 is false)

Therefore β is the probability of making an incorrect decision when H0 is false.

The correct decision when H0 is false is to reject it, the probability of making the correct decision is

P(Rejecting H0 | H0 is false) = 1 – β

1 – β is called the power of the test, and among tests of a given size, we want the one with the most power, as this gives the greatest probability of making the correct decision.

There is however a trade-off between size and power. If we drive size to 0 so we never make a Type I

error by always accepting the null, we never reject a false null so our power is 0.

Similarly, we can have a power of unity by always rejecting the null, but then we always incorrectly

reject true nulls so our size goes to unity.

Lecture Fifteen:

Working with two populations:

Independent samples: two samples with observations which are unrelated to each other

- A member of one sample cannot be a member in the other

- The samples can have different sizes

- Samples from one population have no effect or influence on the sample from the other

population

- If two random variables are independent, COV[X,Y]=0


Difference between sample means:

If $X \sim N$ or n is large, $\bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right)$

If X1 and X2 are both random variables and independent samples are taken from each population,

the sample means will be independent random variables;

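- $E[\bar{X}_1 - \bar{X}_2] = \mu_1 - \mu_2$

- $V[\bar{X}_1 - \bar{X}_2] = \frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}$

- $(\bar{X}_1 - \bar{X}_2) \sim N\left[(\mu_1 - \mu_2),\ \left(\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}\right)\right]$

Then we can construct interval estimates of $(\mu_1 - \mu_2)$ by using;

$(\bar{X}_1 - \bar{X}_2) \pm z_{\alpha/2}\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}$ and we can test hypotheses about $(\mu_1 - \mu_2)$ by using;

$z = \frac{(\bar{X}_1 - \bar{X}_2) - (\mu_1 - \mu_2)}{\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}}$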
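A sketch of the interval estimate and test statistic for a difference between two means, assuming both population standard deviations are known. All the input numbers and the function name `diff_means` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def diff_means(x1_bar, x2_bar, sigma1, sigma2, n1, n2,
               null_diff=0.0, confidence=0.95):
    """Return (standard error, interval, z) for the difference mu1 - mu2."""
    se = sqrt(sigma1 ** 2 / n1 + sigma2 ** 2 / n2)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = x1_bar - x2_bar
    interval = (diff - z_crit * se, diff + z_crit * se)
    z = (diff - null_diff) / se  # null_diff is (mu1 - mu2) under H0
    return se, interval, z

# Hypothetical independent samples from two populations.
se, interval, z = diff_means(x1_bar=82, x2_bar=78, sigma1=6, sigma2=8,
                             n1=36, n2=64)
print(se, interval, z)
```

In this example the 95% interval excludes 0 and |z| exceeds 1.96, so the interval estimate and the two-tailed test of H0: μ1 − μ2 = 0 lead to the same conclusion.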

Difference between proportions:

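If np ≥ 5 and nq ≥ 5, $\hat{p} \sim N\left(E[\hat{p}], \frac{pq}{n}\right)$

If we have independent samples;

- $E[\hat{p}_1 - \hat{p}_2] = p_1 - p_2$

- $V[\hat{p}_1 - \hat{p}_2] = \frac{p_1 q_1}{n_1} + \frac{p_2 q_2}{n_2}$

If $n_1\hat{p}_1 \ge 5$ and $n_2\hat{p}_2 \ge 5$ then; $(\hat{p}_1 - \hat{p}_2) \sim N\left[(p_1 - p_2),\ \left(\frac{p_1 q_1}{n_1} + \frac{p_2 q_2}{n_2}\right)\right]$

Then we can construct interval estimates of $(p_1 - p_2)$ using; $(\hat{p}_1 - \hat{p}_2) \pm z_{\alpha/2}\sqrt{\frac{\hat{p}_1\hat{q}_1}{n_1} + \frac{\hat{p}_2\hat{q}_2}{n_2}}$ and test hypotheses using $z = \frac{(\hat{p}_1 - \hat{p}_2) - (p_1 - p_2)}{\sqrt{\frac{\hat{p}_1\hat{q}_1}{n_1} + \frac{\hat{p}_2\hat{q}_2}{n_2}}}$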

Lecture Sixteen: Nominal Univariate and Bivariate tests

Observed frequency is the number of times a particular observation occurred relative to the total

number of observations

Expected frequency is the number of times we would expect that observation to occur if the null

hypothesis were true

For nominal data we use the chi-squared distribution: we sum together the squared differences between the observed and expected frequencies of k outcomes relative to their expected frequencies: $\chi^2 = \sum \frac{(o-e)^2}{e}$

If the observed values are close to expected, the test statistic will be small

With univariate data with k outcomes, the test statistic has degrees of freedom = k-1


The critical value for hypothesis testing will be $\chi^2_{\alpha,k-1}$

We reject H0 if $\chi^2 > \chi^2_{\alpha,k-1}$
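A sketch of the univariate chi-squared calculation. The observed counts are invented, the null is that the three outcomes are equally likely, and the critical value 5.991 is the usual table value for α = 0.05 with 2 degrees of freedom, typed in by hand rather than computed.

```python
def chi_squared(observed, expected):
    """Sum of (o - e)^2 / e over all outcomes."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical counts for k = 3 outcomes, 60 observations in total.
observed = [18, 22, 20]
expected = [20, 20, 20]   # what H0 (equally likely outcomes) predicts

stat = chi_squared(observed, expected)
df = len(observed) - 1    # k - 1 degrees of freedom
critical = 5.991          # table value for alpha = 0.05, df = 2 (assumed input)
reject = stat > critical
print(stat, df, reject)
```

Because the observed counts are close to the expected ones, the statistic is small and the null is not rejected.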

Interpretation: We conclude there is/is not sufficient evidence in this sample to reject the null

hypothesis in favour of the alternative hypothesis that the distribution of variable is different from

the distribution of other variable at the x% level of significance.

Testing for normality:

We can use the previous method to test whether a univariate distribution fits the shape we would expect if it matched the hypothesised distribution, where we have assumed the data came from a normally distributed population; this is a goodness of fit test

Here the H0: 𝑋~𝑁(𝜇, 𝜎 2 ) and HA: X is not distributed as hypothesised in the null

Testing for independence:

We continue to use the previous method, however as this is bivariate data, we use degrees of freedom = (r-1)(c-1) i.e. (the number of rows – 1) times (the number of columns – 1). For example, with four rows and three columns we would have df = (4-1)(3-1) = 3×2 = 6

Here, H0 is that the two variables are independent and HA is that the two variables are not

independent

If H0 is true, then P(A) = P(A|B); that is, if the null were true, the probability of an outcome of one variable would equal its probability conditional on an outcome of the other

This means we can calculate the expected frequencies of the data, then we can calculate $\chi^2 = \sum \frac{(o-e)^2}{e}$ and compare it to the critical value. We reject H0 if $\chi^2 > \chi^2_{\alpha,(r-1)(c-1)}$
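For a test of independence, the expected frequency of each cell under H0 is (row total × column total) / grand total. The sketch below applies this to a made-up 2×2 table; the critical value 3.841 is the table value for α = 0.05 with 1 degree of freedom, entered by hand.

```python
def expected_frequencies(table):
    """Expected counts under independence: row total * column total / n."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    return [[r * c / total for c in col_totals] for r in row_totals]

def chi_squared_independence(table):
    """Return (test statistic, degrees of freedom) for a contingency table."""
    expected = expected_frequencies(table)
    stat = sum((o - e) ** 2 / e
               for obs_row, exp_row in zip(table, expected)
               for o, e in zip(obs_row, exp_row))
    df = (len(table) - 1) * (len(table[0]) - 1)  # (r - 1)(c - 1)
    return stat, df

# Hypothetical 2x2 table of observed counts.
table = [[30, 20],
         [20, 30]]
expected = expected_frequencies(table)
stat, df = chi_squared_independence(table)
critical = 3.841  # table value for alpha = 0.05, df = 1 (assumed input)
reject = stat > critical
print(expected, stat, df, reject)
```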

Lecture Seventeen: Linear regression models

*finding a linear relationship does not prove causation

Covariance: $COV(X, Y) = \frac{1}{n-1}\sum (x_i - \bar{x})(y_i - \bar{y})$

Correlation: $r = \frac{COV(X, Y)}{s_X s_Y}$

Correlation can be between, [-1, 1] with -1 being a perfectly linear, negative relationship, 1 being a

perfectly linear, positive relationship and 0 being no linear relationship at all.

When X and Y are linearly related, 𝑦 = 𝛽0 + 𝛽1 𝑥1 + 𝜀 where 𝜀 is random error

This term shows that each observation of Y has a random distribution of values for each given value of X. If E[ε|x] = 0, then $E[y|x] = \beta_0 + \beta_1 x_1$

We assume errors (ε) are random and normally distributed with a mean of zero, so we can construct estimates of $\beta_0$ and $\beta_1$ using sample data. Therefore $\hat{y} = \hat{\beta}_0 + \hat{\beta}_1 x_1$ and the residual, e, is $y - \hat{y}$


We want the residual for each observation to be as small as possible so the estimated line is more

likely to fit the real one so we aim to minimise the sum of the squared residuals, ∑ 𝑒 2 .

Ordinary Least Squares Method:

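$\sum e^2 = \sum (y - \hat{y})^2 = \sum (y - \hat{\beta}_0 - \hat{\beta}_1 x)^2$

To find the values of $\hat{\beta}_0$ and $\hat{\beta}_1$ that will minimise $\sum e^2$ we differentiate $\sum e^2$ with respect to each coefficient and set the results equal to zero.

$\frac{\partial \sum e^2}{\partial \hat{\beta}_0} = -2\sum (y - \hat{\beta}_0 - \hat{\beta}_1 x) = 0 \to \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$

$\frac{\partial \sum e^2}{\partial \hat{\beta}_1} = -2\sum x(y - \hat{\beta}_0 - \hat{\beta}_1 x) = 0 \to \hat{\beta}_1 = \frac{\sum xy - n\bar{x}\bar{y}}{\sum x^2 - n\bar{x}^2} = \frac{SS_{xy}}{SS_x}$

Where $SS_{xy} = \sum xy - \frac{1}{n}(\sum x)(\sum y)$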

OLS estimators 𝛽̂0 and 𝛽̂1 give point estimates of 𝛽0 and 𝛽1 , this method will find the best line of fit

possible for the data.

Properties of the least squares line:

1. OLS is the line of best fit for the data

2. It implies the sum of the residuals is zero

3. It must pass through the means of x and y as these points satisfy the equation 𝛽̂0 = 𝑦̅ − 𝛽̂1 𝑥̅
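The OLS formulas and the properties listed above can be verified on a small data set. The five (x, y) pairs and the function name `ols` are hypothetical, chosen so the estimates come out as round numbers.

```python
def ols(xs, ys):
    """Return (b0, b1): intercept and slope from the SS_xy / SS_x formulas."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    ss_xy = sum(x * y for x, y in zip(xs, ys)) - n * x_bar * y_bar
    ss_x = sum(x * x for x in xs) - n * x_bar ** 2
    b1 = ss_xy / ss_x
    b0 = y_bar - b1 * x_bar
    return b0, b1

# Hypothetical data.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

b0, b1 = ols(xs, ys)
residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)

print(b0, b1)                 # approximately 2.2 and 0.6
print(sum(residuals))         # approximately 0 (property 2)
print(b0 + b1 * x_bar, y_bar) # the line passes through the means (property 3)
```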

Lecture Eighteen: Interpreting linear regressions

The estimated intercept coefficient 𝛽̂0 is interpreted as; when the independent variable is equal to 0,

the dependent variable is estimated to be 𝛽̂0 on average.

The estimated slope coefficient $\hat{\beta}_1$ is interpreted as; for each one-unit increase in the independent variable, the dependent variable increases (or decreases) by $\hat{\beta}_1$ on average.

The standard error of the estimate:

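With the assumption $\varepsilon|x \sim N(0, \sigma_\varepsilon^2)$ we estimate the value of $\sigma_\varepsilon$ as; $s_\varepsilon = \sqrt{\frac{\sum e^2}{n-2}} = \sqrt{\frac{\sum (y - \hat{y})^2}{n-2}}$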

The better the fit of a regression line, the smaller the scatter of observations around the line and

hence the smaller the standard error of the estimate.

Goodness of fit:

The deviation of y from its mean is comprised of two deviations, the explained and unexplained.

R2 measures the proportion of variation in the dependent variable which can be explained by the

variation in the independent variable.


Lecture Nineteen:

Regression assumptions:

- A linear relationship exists between x and y in the population and the data can be modelled as $y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$, $i = 1, 2, 3, \dots, n$

- 𝐸[𝜀𝑖 |𝑥𝑖 ] = 0 →𝐸[𝑦𝑖 |𝑥𝑖 ] = 𝛽0 + 𝛽1 𝑥𝑖

- 𝑉[𝜀𝑖 |𝑥𝑖 ] = 𝜎𝜀2

- 𝐶𝑜𝑣[𝜀𝑖 , 𝜀𝑗 ] = 0, 𝑖 ≠ 𝑗

Sampling distributions of OLS estimators:

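Given the assumptions and CLT, $\hat{\beta}_0 \sim N(\beta_0, \sigma_{\hat{\beta}_0}^2)$ and $\hat{\beta}_1 \sim N(\beta_1, \sigma_{\hat{\beta}_1}^2)$

From this, we can see that $\frac{\hat{\beta}_0 - \beta_0}{\sigma_{\hat{\beta}_0}} \sim N(0, 1)$ and $\frac{\hat{\beta}_1 - \beta_1}{\sigma_{\hat{\beta}_1}} \sim N(0, 1)$

Since $\sigma_{\hat{\beta}_0}^2$ and $\sigma_{\hat{\beta}_1}^2$ are unknown (they depend on the unknown $\sigma_\varepsilon^2$), they are estimated by replacing $\sigma_\varepsilon^2$ with $s_\varepsilon^2 = \frac{1}{n-2}\sum (y_i - \hat{\beta}_0 - \hat{\beta}_1 x_i)^2$, which gives $s_{\hat{\beta}_0}^2$ and $s_{\hat{\beta}_1}^2$.

For this, we then use $\frac{\hat{\beta}_0 - \beta_0}{s_{\hat{\beta}_0}} \sim t_{n-2}$ and $\frac{\hat{\beta}_1 - \beta_1}{s_{\hat{\beta}_1}} \sim t_{n-2}$

$\sigma_{\hat{\beta}_1}^2 = \frac{\sigma_\varepsilon^2}{\sum (x_i - \bar{x})^2}$

- bigger when $\sigma_\varepsilon^2$ gets bigger i.e. the data gets noisier

- smaller as n increases

- smaller the greater the variability in x

The smaller $\sigma_{\hat{\beta}_1}^2$ is, the more precise inferences about $\beta_1$ are.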

Confidence intervals:

$\hat{\beta}_1 \pm t_{\alpha/2,\,n-2}\, s_{\hat{\beta}_1}$; however, if we can use the CLT, $\hat{\beta}_1 \pm z_{\alpha/2}\, s_{\hat{\beta}_1}$

Hypothesis testing:

To test a null of the form H0: βj = βj* against an alternative, the test statistic is $\frac{\hat{\beta}_j - \beta_j^*}{s_{\hat{\beta}_j}}$, with either the $t_{n-2}$ or z distribution

In testing the hypothesis, we substitute βj with the value βj* stated in the null hypothesis
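A sketch of the t statistic for the slope, using the estimated variance formula with $s_\varepsilon^2$ in place of $\sigma_\varepsilon^2$. The data are hypothetical, and the critical value 3.182 is the table value of t for α/2 = 0.025 with n − 2 = 3 degrees of freedom, entered by hand.

```python
from math import sqrt

def slope_t_statistic(xs, ys, beta1_null=0.0):
    """Return (t, s_b1) for H0: beta1 = beta1_null in a simple regression."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    ss_x = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / ss_x
    b0 = y_bar - b1 * x_bar
    sse = sum((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))
    s_eps = sqrt(sse / (n - 2))   # standard error of the estimate
    s_b1 = s_eps / sqrt(ss_x)     # estimated standard error of the slope
    return (b1 - beta1_null) / s_b1, s_b1

# Hypothetical data.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]

t_stat, s_b1 = slope_t_statistic(xs, ys)
t_critical = 3.182  # table value: alpha/2 = 0.025, df = n - 2 = 3 (assumed input)
reject = abs(t_stat) > t_critical
print(t_stat, s_b1, reject)
```

With only five observations the slope estimate of 0.6 is not significantly different from zero at the 5% level, which illustrates how a small n inflates the standard error of the slope.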

Lecture Twenty: Prediction in regression

Predicting Y for a given X

The estimated regression gives us $\hat{y}$, which is our predicted/estimated value of y, E[y|x]

To do this we substitute the x value of interest into our OLS estimate. It measures the y value on average.

Extrapolation: finding a y value for given x outside of the range. The further from the range, the less

accurate our prediction because while our model may be a good fit for our data, the unknown areas

may not fit as well, or not even be linear.


Confidence intervals for expected y values:

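$\hat{y}_g \pm t_{\alpha/2,\,n-2} \times se(\hat{y}_g)$, $se(\hat{y}_g) = s_\varepsilon\sqrt{\frac{1}{n} + \frac{(x_g - \bar{x})^2}{SS_x}}$, $SS_{xy} = \sum xy - \frac{1}{n}(\sum x)(\sum y)$, $s_\varepsilon = \sqrt{\frac{\sum e^2}{n-2}}$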

This gives the range of values which would cover the average (dependent variable) with a given

independent variable at an x% level of confidence.

To find an interval for a specific y value, we create a prediction interval estimate;

$\hat{y}_g \pm t_{\alpha/2,\,n-2} \times se(y_g - \hat{y}_g)$, $se(y_g - \hat{y}_g) = s_\varepsilon\sqrt{1 + \frac{1}{n} + \frac{(x_g - \bar{x})^2}{SS_x}}$

This gives the range of values we would expect to cover a single (dependent variable) with a given

independent variable at an x% level of confidence.
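The difference between the two intervals can be seen by computing both half-widths for the same x value. The data are hypothetical, and `t_crit` = 3.182 is the table value for a 95% interval with n − 2 = 3 degrees of freedom, supplied by hand.

```python
from math import sqrt

def prediction_half_widths(xs, ys, x_g, t_crit):
    """Return (y_hat_g, CI half-width for E[y|x_g], PI half-width for y_g)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    ss_x = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / ss_x
    b0 = y_bar - b1 * x_bar
    s_eps = sqrt(sum((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys)) / (n - 2))
    leverage = 1 / n + (x_g - x_bar) ** 2 / ss_x
    se_mean = s_eps * sqrt(leverage)       # for the average y at x_g
    se_single = s_eps * sqrt(1 + leverage) # for one new y at x_g
    return b0 + b1 * x_g, t_crit * se_mean, t_crit * se_single

# Hypothetical data.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
t_crit = 3.182  # table value: alpha/2 = 0.025, df = 3 (assumed input)

y_hat_mid, ci_mid, pi_mid = prediction_half_widths(xs, ys, x_g=3, t_crit=t_crit)
y_hat_far, ci_far, pi_far = prediction_half_widths(xs, ys, x_g=6, t_crit=t_crit)
print(y_hat_mid, ci_mid, pi_mid)
print(y_hat_far, ci_far, pi_far)
```

The prediction interval is always wider than the confidence interval, and both widen as x_g moves away from the mean of x, which is the numerical face of the warning about extrapolation.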

Measuring proportionate change:

Proportionate change is (y1-y2)/y2 with percentage change being this multiplied by 100

From calculus, we can determine that proportionate change can be defined using loge, ln

This means we can use ln(𝑦̂) = 𝛽̂0 + 𝛽̂1 𝑥1 to represent a measure of proportionate change in y for a

unit change in x with 100𝛽̂1 being a measure of percentage change in y for a unit change in x.

Similarly, 𝑦̂ = 𝛽̂0 + 𝛽̂1 ln(𝑥1 ) is a measure of change in y for a proportionate change in x with

𝛽̂1 /100 being a measure of change in y for a percentage change in x.

From this, we can see that ln(𝑦̂) = 𝛽̂0 + 𝛽̂1 ln(𝑥1 ) represents a proportionate change in y for a

proportionate change in x which measures elasticity.

When |elasticity| < 1 it is inelastic, with |elasticity| > 1 being elastic.

Lecture Twenty-one:

Many relationships theorised may be functions of more than one independent variable so we use

multiple regression as a technique to test these theories.

We can either add another independent variable as having its own effect on the dependent variable,

or we can test the relationship of two independent variables’ effects on the dependent variable.

For hypothesis testing, we take 1 degree of freedom for each independent variable, i.e. in single

variable regression we had n-2 degrees of freedom because there was only one independent

variable. In general, degrees of freedom for slope coefficients in regression analysis is n-k-1 where k

is the number of independent variables.

OLS assumes no linear relationships between independent variables. If there is a relationship between two or more independent variables in a regression, then we have multicollinearity. If the relationship is perfect, OLS estimates cannot be calculated, and if the relationships are imperfect, inferences based on OLS estimates will be imprecise.

We test for multicollinearity by checking the correlation coefficients between each pair of

independent variables.


Lecture Twenty-two: Dummy variables

Dummy variables are binary values incorporated into a regression as either a separate term, or an

effect on another independent variable.

𝑦̂ = 𝛽̂0 + 𝛽̂1 𝑥𝑖 + 𝛽̂2 𝑧, z=0 or 1

If z=0 there is no effect on the regression, showing the dummy variable does not affect the

dependent variable, however if it is 1 then 𝛽̂2 will be the extra amount added to the dependent

variable, meaning the intercept of the estimated regression will be (𝛽̂0 + 𝛽̂2 ).

Hypothesis testing:

If we want to know whether the effect of the dummy variable is statistically significant or not at x% level of significance, we test H0: β2 = 0 using a test statistic of $\frac{\hat{\beta}_2 - \beta_2}{s_{\hat{\beta}_2}}$, rejecting if the test statistic is < $-t_{\alpha/2,\,n-k-1}$ or > $t_{\alpha/2,\,n-k-1}$

Interpretation: This will conclude that there is/is not sufficient evidence in the sample to suggest

that the dummy variable has an effect on the dependent variable at an x% level of significance.
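The test above can be sketched numerically. Everything here is an assumption for illustration: the data are invented, the helper names are hypothetical, the standard error is taken as the square root of s² times the matching diagonal element of (X'X)⁻¹ (the usual OLS formula, not stated in these notes), and the critical value 2.365 is the two-tailed 5% value for 7 degrees of freedom read from a standard t table.

```python
from math import sqrt

def solve(a, b):
    """Solve the linear system a x = b by Gauss-Jordan elimination with pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix (copy)
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def ols_with_se(rows, y):
    """Return OLS coefficients, their standard errors and n - k - 1."""
    n, p = len(rows), len(rows[0])  # p = k + 1 columns, including the constant
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)
    resid = [yi - sum(bj * rj for bj, rj in zip(beta, r)) for r, yi in zip(rows, y)]
    df = n - p                                # n - k - 1
    s2 = sum(e * e for e in resid) / df       # estimated error variance
    se = []
    for j in range(p):
        unit = [1.0 if i == j else 0.0 for i in range(p)]
        se.append(sqrt(s2 * solve(xtx, unit)[j]))  # j-th diagonal of (X'X)^-1
    return beta, se, df

# Made-up sample: n = 10 observations, k = 2 independent variables (x and z)
x = [1, 2, 3, 4, 5, 1, 2, 3, 4, 5]
z = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
y = [5.1, 7.8, 11.1, 14.0, 16.9, 10.2, 12.9, 16.0, 18.8, 22.1]

rows = [[1, xi, zi] for xi, zi in zip(x, z)]
beta, se, df = ols_with_se(rows, y)

t_stat = (beta[2] - 0) / se[2]   # H0: beta2 = 0
t_crit = 2.365                   # assumed: t table, alpha/2 = 0.025, df = 7
reject = t_stat < -t_crit or t_stat > t_crit
print(f"df = {df}, t = {t_stat:.2f}, reject H0: {reject}")
```

With this sample the test statistic lies far beyond the critical value, so H0 would be rejected at the 5% level.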

𝑦̂ = 𝛽̂0 + 𝛽̂1 𝑥𝑖 + 𝛽̂2 𝑥𝑖 𝑧

This measures whether or not the dummy variable has an effect on the slope of a regression. If z=0 the interaction term drops out and the slope of the estimated regression is 𝛽̂1. If z=1, 𝛽̂2 is the extra amount added to the dependent variable per unit increase in xi, so the slope of the estimated regression becomes (𝛽̂1 + 𝛽̂2).

We can also test a dummy variable’s effect on slope and intercept by adding the two together, or

test numerous variables by adding more 𝛽̂s.
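As a worked illustration of combining the two, the model below includes both a dummy term and an interaction term. The label 𝛽̂3 for the interaction coefficient is an assumption made here; the notes do not name it.

```latex
\begin{align*}
\hat{y} &= \hat\beta_0 + \hat\beta_1 x_i + \hat\beta_2 z + \hat\beta_3 x_i z \\
z = 0:\quad \hat{y} &= \hat\beta_0 + \hat\beta_1 x_i \\
z = 1:\quad \hat{y} &= (\hat\beta_0 + \hat\beta_2) + (\hat\beta_1 + \hat\beta_3)\, x_i
\end{align*}
```

Under this labelling, 𝛽̂2 shifts the intercept and 𝛽̂3 shifts the slope, and each can be tested separately with its own t statistic.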
