
International Conference on Spoken Language Processing, pp. 2011-2014, ISCA, Pittsburgh, PA, USA, 2006

Call Analysis with Classification Using Speech and Non-Speech Features

Yun-Cheng Ju, Ye-Yi Wang, and Alex Acero

Microsoft Corporation

One Microsoft Way, Redmond, WA 98052, USA

{yuncj, yeyiwang, alexac}@microsoft.com

Abstract

This paper reports our recent development of a highly reliable call analysis technique that makes novel use of automatic speech recognition (ASR), speech utterance classification, and non-speech features. The main ideas include the use of the N-Gram filler model to improve the ASR accuracy on important words in a message, the integration of the recognized utterance with non-speech features such as utterance length, and the use of an utterance classification technique to interpret the message and extract additional information. Experimental evaluation shows that the use of the utterance length, the recognized text, and the classifier’s confidence measure reduces the classification error rate to 2.5% on the test sets.

Index Terms: Call Analysis, Answering Machine Detection, ASR, N-Gram Filler, Utterance Classification

1. Introduction

Various outbound telephony applications require call analysis to identify the presence of a live person at the other end. A typical scenario is the outbound call management (OCM) system in an outbound call center (typically a telemarketing center), where agents can increase their efficiency by attending only to the calls identified as answered by live persons. Another emerging use of OCM systems is in outbound telephony notification applications where, for example, an airline can notify its customers of flight delays or changes. Robust call analysis has been one of the top requests from Microsoft Speech Server customers.

Prior to the work presented in this paper, most work in this area has focused on two components: a tone detector for busy signals, non-existing numbers, modems, or fax machines, and a voice discriminator for human voices. Tone detection [1] can be done accurately for all three types of tones (Special Information Tones (SIT), Cadence Tones, and Modem/Fax Tones). Voice discrimination, however, is more difficult. A typical “fast voice” approach [2] uses a zero-crossing and energy-peak based speech detector together with the silence and energy timing information present in the signal. It has been observed that people usually answer the phone by saying a short “Hello” (or an equivalent greeting in other languages) and then wait for the other party to respond, so there is an energy burst followed by a longer period of silence. A recorded voice (answering machine), on the other hand, tends to produce speech signals with a normal sequence of energy and silence periods.

With the advent of new technology, many of the traditional audible tones have been augmented by synthesized and recorded human speech. For example, the “non-existing phone number” tone has largely been replaced by recorded speech. This trend has made traditional tone detectors increasingly less effective. In addition, phone screening technology, fueled by the increasing number of unsolicited telemarketing calls, poses new challenges for voice discriminators, which must let legitimate automated calls (e.g., a notification service) complete.

Recently, a few ASR-based approaches have been proposed to improve the voice discrimination part of the problem [4]. However, high word error rates have limited their success.

In this paper, we focus our efforts on the voice discriminator and present an improved technique over that described in [2] to overcome the hurdles in using ASR. We use the N-Gram based filler model [3], built from limited training sentences, to dramatically improve word accuracy for the information-bearing words in users’ utterances. We use speech utterance classification to overcome the fragility of the keyword spotting approach by analyzing whole utterances and performing concept recognition based on features learned automatically from training sentences. In addition, we use non-speech features, mostly the utterance length, to form a prior that further improves the classification accuracy and provides a safe fallback for failed recognitions. Finally, our speech utterance classifier’s capability of extracting critical information (e.g., “press 2” in “… if you are not a telemarketer, press 2 to complete the call”) enables legitimate applications to pass phone screening or other automated systems.

The rest of the paper is organized as follows. Section 2 briefly reviews the N-Gram based filler model technique and describes the recognition language model. Section 3 summarizes the utterance verification technique and our classification model. Section 4 describes the integration of the classification with non-speech features and evaluates the proposed methods. Section 5 concludes the paper.

2. N-Gram Based Filler Model

The integrated technique is based on our earlier work on the N-Gram based filler model [3], which allows us to reduce both the recognition error rate and the training corpus size. In this section, we briefly review our model and the related interpolated approach.

2.1. Interpolated N-Gram Model

It is well known that a statistical language model (SLM) increases the robustness of speech recognition. It is also well known that an SLM can be implemented as a Context Free Grammar (CFG) [5].

There are several approaches in using SLM for ASR. A though the word accuracy rate is unimpressively at 50%, all of

general-domain N-Gram (typically used for desktop dictation) the information bearing words, as occurred in the training

can be used without development effort, but its performance is sentence, were correctly recognized.

mediocre. A domain-specific LM (completely trained from

in-domain training data) works best, but it sometimes may not 3. Speech Utterance Classification

be practical due to the requirement of a large amount of

domain-specific training data. A domain-adapted LM, on the As perfect speech recognition of either live person or

one hand, works better than the general-domain LM and answering machine is unrealistic, using speech utterance

requires fewer training sentences. However, the size of an classification significantly improves the robustness over

adapted LM, which is no smaller than the general-domain N- techniques based on keyword spotting approaches [6,7]. In

Gram, is prohibitive for dialog turn specific language this section, we first briefly summarize the use of maximum

modeling on a speech server. entropy (Maxent) classifier [8] and then describe the heuristic

approach we used to integrate some non-speech features.

We proposed an interpolated approach where a domain-

specific core LM is trained completely from a limited set of

3.1. Maximum Entropy Classifier

training sentences. At the run time, a general domain garbage

model N-Gram Filler (typically a trimmed down version of Given an acoustic signal A , the task of speech utterance

the dictation grammar) is attached through the unigram back-

classification is to find the destination class Cˆ from a fixed

off state for improved robustness. The interpolation is

achieved by discounting some unigram counts and reserving set C that maximizes the conditional probability P (C | A) :

them for unseen garbage words. The size of the resulting LM Cˆ = arg max P (C | A)

is small (typically a few Kilo bytes) due to the small size of the C ∈C

training sentences required. The N-Gram Filler is relatively

= arg max ∑ P (C | W , A) P (W | A)

large (8 MB in our system) but poses no harm to the speech C ∈C W

server because it is shared among all dialog turns and different (1)

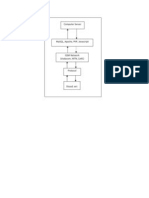

applications. Figure 1 depicts the binary CFG trained from a ≈ arg max ∑ P (C |W ) P (W | A)

C ∈C W

single training sentence “press <digit> to complete …”. In

⎛ ⎞

addition to the three special states (<S>, </S>, and unigram ≈ arg max P ⎜⎜⎜C | arg max P(W | A)⎟⎟⎟

backoff state), states with one word (e.g. “press”) are bigram C ∈C ⎝ W ⎠

states and states with two words (e.g. “press <digit>”) are Here W represents a possible word sequence that is uttered in

trigram states.

A . We made a practical Viterbi approximation by adopting a

two-pass approach, in which an ASR engine obtains the best

hypothesis of W from A in the first pass; and a classifier

takes W as input and identifies its destination class. The text

classifier models the conditional distribution P (C | W ) and

picks the destination according to Eq. (1). The Mexent

classifier models this distribution as

1 ⎛ ⎞

P (C |W ) = exp ⎜⎜ ∑ λi fi (W ,C )⎟⎟⎟ (2)

Z λ (W ) ⎜

⎝ f ∈F

i ⎠⎟

Where F is a set that contains important features (functions

of a destination class and an observation) for classification,

and Z λ (W ) = ∑C exp (∑ f ∈F λi fi (W ,C ))

i

normalizes the

Figure 1: Interpolated N-gram Model. (Transitions

w/o labels are back-off transitions) distribution. We include binary unigram/bigram features in F:

⎧⎪ 1 when u ∈ W ∧ C = c

2.2. Accuracy Improvement for Information-Bearing fu ,c (W ,C ) = ⎪⎨ (3)

⎪⎪ 0 otherwise

Words ⎪⎩

For the concept recognition application described above where u is a word or a word bigram, c is a destination

and other speech utterance classification applications, class.

improvement of word accuracy may be too costly and In Eq. (2), λi ’s are the weights for feature fi , which are

unnecessary. The preliminary experiments showed that our optimized from training data.

interpolated N-gram model can effectively recognize

important (i.e., information bearing) words correctly without Finally, we define the confidence measure as the posterior

acquiring massive training sentences. We believe the ratio:

improvement in word accuracy for information bearing words P (C | W )

is the key to lower the development cost of speech Conf (C | W ) = (4)

P(C ' | W )

applications.

For example, the LM depicted in Figure 1 recognizes the where C ' is the class that has the highest posterior probability

utterance “In order to complete the call, please press 2 now” as among those different from C.

“feel the to complete a call Steve press 2 know”. Even
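To make Eqs. (2)-(4) concrete, the following is a minimal Python sketch of a Maxent posterior over binary unigram/bigram features and of the posterior-ratio confidence. It is an illustration only, not the system described in the paper: the class labels, the feature weights in `WEIGHTS`, and the function names are all hypothetical, whereas in the paper the weights are optimized from training data.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical weights lambda_{u,c}, keyed by (n-gram u, class c).
# The values are invented for illustration; the paper learns them from data.
WEIGHTS: Dict[Tuple[str, str], float] = {
    ("hello", "live"): 2.0,
    ("leave", "recorded"): 1.5,
    ("message", "recorded"): 1.5,
    ("leave your", "recorded"): 1.0,
}
CLASSES: List[str] = ["live", "recorded"]  # hypothetical class labels


def ngrams(words: List[str]) -> Set[str]:
    """Return the set of unigrams and bigrams that occur in W."""
    bigrams = {" ".join(pair) for pair in zip(words, words[1:])}
    return set(words) | bigrams


def posterior(words: List[str]) -> Dict[str, float]:
    """Eq. (2): P(C | W), using the binary features of Eq. (3)."""
    present = ngrams(words)
    # A feature f_{u,c} fires (value 1) when u is in W and the class is c,
    # so the score of class c is the sum of the weights of firing features.
    scores = {
        c: sum(w for (u, cls), w in WEIGHTS.items() if cls == c and u in present)
        for c in CLASSES
    }
    z = sum(math.exp(s) for s in scores.values())  # normalizer Z_lambda(W)
    return {c: math.exp(s) / z for c, s in scores.items()}


def classify(words: List[str]) -> Tuple[str, float]:
    """Return the best class and its confidence, the ratio of Eq. (4)."""
    post = posterior(words)
    ranked = sorted(post, key=post.get, reverse=True)
    best, runner_up = ranked[0], ranked[1]
    return best, post[best] / post[runner_up]


if __name__ == "__main__":
    print(classify("please leave your message".split()))
```

With only two classes the confidence reduces to the odds of the winning class, so a threshold on it acts as a threshold on the posterior itself.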

3.2. Incorporating Non-Speech Features

There are many useful non-speech features we can obtain from ASR (e.g., the length of the utterance and the initial silence). In fact, they have long been used for this application. It is important to find an efficient and effective way to incorporate those features for better performance. In addition, these features provide practical backup strategies when the ASR occasionally fails. One such feature is utterance length. We have observed that the responses of live persons are mostly shorter than answering machine messages.

One possible way of incorporating those features is to treat them as features in a different dimension and to form joint features. However, this approach requires more training data. We took a simpler, heuristic two-pass approach. When the response is short, we assume it is from a live person unless there is strong evidence otherwise from the word-level classifier, and vice versa for long responses. The algorithm is illustrated in Figure 2. The “Very Short”, “Very Long”, and “Relatively Short” thresholds are set to 1, 4, and 2.5 seconds, as observed from the training set.

Figure 2: Call Classifier using both utterance length and recognized text

As will be shown in the results section, this simple heuristic significantly improved the performance by recovering the classification errors from poorly recognized sentences.

4. Experiments & Results

We built a prototype system on a pre-release version of Microsoft Speech Server 2007 to initiate outbound calls. The training samples consist of 1331 connected calls to residential numbers across the United States (Table 1).

| Category | Sub-category | Calls |
|---|---|---|
| Live Person (508) | Hello | 420 |
| | Other Greetings | 88 |
| Recorded Message (823) | Answering Machine | 809 |
| | Failed / Invalid Numbers | 7 |
| | No Solicitation Screening | 7 |

Table 1. Breakdown of the training set in each category

We have two test sets. Test set #1 (Res) is from the 2629 successfully reached residential numbers different from those in the training set; test set #2 (Mix) is from the connected calls to 2135 mixed residential and business numbers. The second test set contains more variety and is more challenging. There was no overlap among the three data sets.

The evaluation is conducted for two tasks. The first task is to distinguish a live person from a recorded message. The other task is to subdivide the recorded messages into one of three categories (Answering Machine, Failed Calls, and No Solicitation Screening) and to extract the action necessary to complete the call in the case of phone screening.

From the training samples, we created a training set of 90 sentences/phrases with a total of 1061 words (mostly for recorded messages) for training both the recognition grammar and the call classifier.

4.1. Detection of Live Person

In the first experiment, we investigated the effect of using both speech and non-speech features for the detection of live person vs. recorded message as described in Section 3.2. Table 2 shows the breakdown statistics of the test sets according to the utterance duration (D) or the text classifier’s output (T).

| Truth | Classification | Res. | Mix. | Both |
|---|---|---|---|---|
| Live Person | D: Very Short | 955 | 713 | 1668 |
| | D: Short | 76 | 298 | 374 |
| | D: Long* | 6 | 35 | 41 |
| | D: Very Long* | 1 | 3 | 4 |
| | T: No Recognition | 334 | 335 | 669 |
| | T: Live | 603 | 494 | 1097 |
| | T: Recorded* | 101 | 220 | 321 |
| | Total | 1038 | 1049 | 2087 |
| Recorded Message | D: Very Short* | 2 | 0 | 2 |
| | D: Short* | 113 | 62 | 175 |
| | D: Long | 209 | 109 | 318 |
| | D: Very Long | 1267 | 915 | 2182 |
| | T: No Recognition* | 44 | 27 | 71 |
| | T: Live* | 32 | 23 | 55 |
| | T: Recorded | 1515 | 1036 | 2551 |
| | Total | 1591 | 1086 | 2677 |

Table 2. Breakdown of classification results on the test sets. The rows marked with (*) represent misclassifications if only the duration or only the text classification is used. Our proposed method improves the cases in the shaded area (the middle-range D: Short and D: Long rows).

Using the recognized utterance alone for call classification yields the worst result. The overall error rate is around 9.4% if we treat the cases of no recognition as live persons ((321 + 71 + 55) / (2087 + 2677)). This is even less accurate than using only the utterance length, where the error rate is 4.7% ((41 + 4 + 2 + 175) / (2087 + 2677)). More training samples might be needed to reduce the recognition errors and thereby the classification error rate.

In our proposed approach, we use the utterance length as the first cut and then use the call classification technique for the cases in the middle range (shaded areas in Table 2).

Table 3 shows that our approach is very effective for short utterances. Only the shaded areas are errors. We believe this is because short messages are more precise (e.g., “Please leave your message”) and are also easier to recognize and classify correctly.

The error rates are summarized in Table 4. We found that we had reached some small business receptionists and their reserved phone numbers in the second (Mixed) test set, for which there is a slight mismatch with our training set. However, the combined overall error rate (2.5%) is very appropriate for most telephony applications.

| Truth | Classifier’s outcome | Res. | Mix. | Both |
|---|---|---|---|---|
| Short Live | Very Confident is Machine* | 5 | 41 | 46 |
| | Otherwise | 71 | 257 | 328 |
| Long Live | Very Confident is Human | 1 | 9 | 10 |
| | Otherwise* | 5 | 26 | 31 |
| Short Recorded | Very Confident is Machine | 92 | 52 | 144 |
| | Otherwise* | 21 | 10 | 31 |
| Long Recorded | Very Confident is Human* | 3 | 4 | 7 |
| | Otherwise | 206 | 105 | 311 |

Table 3. Classification results from our proposed approach. The shaded rows, marked here with (*), represent errors.

| | Res. | Mix. | Both |
|---|---|---|---|
| Utterance Classification Only | 6.7% | 12.6% | 9.4% |
| Duration Only | 4.6% | 4.7% | 4.7% |
| Proposed Approach | 1.4% | 3.9% | 2.5% |

Table 4. Overall classification error rates.

4.2. Sub-Classification of Recorded Messages

One advantage of our ASR/speech classification approach is its capability of understanding the message, which lets it keep up with new telephony/speech technology such as the use of recorded speech in the telephony infrastructure and in phone screening/auto-attendant systems.

In this experiment, we demonstrate the performance of the proposed approach in classifying the recorded messages into sub-classes and extracting the actions needed to pass the “No Solicitation” screening systems. We built a 3-way utterance classifier with the same training sentences described earlier. Table 5 shows the classification results on the recorded messages in the test sets.

Res. Mix. Both

M F N M F N M F N

Machine (M) 1564 4 1017 3 2581 7

Failed (F) 1 11 11 51 12 62

No Solici. (N) 2 9 4 2 13

Table 5. Confusion matrix for classifying the recorded message into three sub-categories. Numbers in the shaded blocks are errors.

Six of the 11 misclassified “Failed Call” messages in the second (Mixed) test set were not generated by the carrier, and key phrases like “unassigned number”, “call again later for further information”, “non-working”, and “temporarily out of service” were not covered in our limited training sentences. The rest of the errors were caused by misrecognition. We believe more training data can further improve our performance. In addition, the actions needed to pass the No Solicitation screening system in the 13 cases were all correctly extracted.

5. Summary and Conclusions

The use of ASR to improve call analysis has started to attract more attention as a way of keeping up with the trend of replacing audible tones with recorded messages and with the popularity of phone screening/auto-attendant systems. Yet most practices have not overcome the obstacle of high recognition word error rates. We have extended our earlier work on the N-Gram filler model and used the interpolated SLM to reduce the required amount of training sentences. This achieves significantly higher accuracy for the information-bearing words observed in the training set and contributed to high accuracy in call analysis.

In addition to speech content features, non-speech features are also integrated in the classification framework. Strong classification results are obtained on both call analysis and the new recorded message sub-classification task.

Our integrated approach follows common sense and the facts we observed from the sample calls. Non-speech features, specifically the utterance length, are used in the first stage of classification. Very short utterances tend to come from live persons and very long utterances come from machines. We only perform utterance classification for the relatively short and relatively long utterances, with different priors. Only highly confident short answering machine messages are considered as coming from answering machines, and vice versa for highly confident long live person utterances. In this way, a significant number of the recognition and classification errors are avoided.

We have also shown that the N-Gram based SLM interpolation approach worked well in the task of sub-classification of the recorded messages, a typical speech utterance classification + slot filling application. We believe our work opens a new opportunity for two automated telephony systems to interact.

We are currently evaluating the performance of the proposed approach in a bilingual/multi-lingual area without the use of a bilingual speech recognizer. Preliminary experiments have shown promising results for call analysis.

6. Acknowledgements

We thank Dong Yu and Li Deng in our group for useful discussions, and David Ollason and Craig Fisher in the Microsoft Speech Server group for suggesting the research topic.

7. References

[1] L. J. Roybal, “Signal-Recognition Arrangement Using Cadence Tables,” United States Patent 5,719,932.

[2] S. C. Leech, “Fast Voice Energy Detection Algorithm for the Merlin II Auto-Dialer Call Classifier Release 2 (ADCC-M R2),” MT XG1 W60000, 1990.

[3] Dong Yu, Yun Cheng Ju, Ye-Yi Wang, and Alex Acero, “N_Gram Based Filler Model for Robust Grammar Authoring,” Proc. ICASSP, 2006.

[4] Sharmistha Sarkar Das, Norman Chan, Danny Wages, and John H. L. Hansen, “Application of Automatic Speech Recognition in Call Classification,” Proc. ICASSP, 2002.

[5] G. Riccardi, R. Pieraccini, and E. Bocchieri, “Stochastic Automata for Language Modeling,” Computer Speech and Language, Vol. 10, pp. 265-293, 1996.

[6] H.-K. J. Kuo et al., “Discriminative Training for Call Classification and Routing,” Proc. ICSLP, 2002.

[7] B. Carpenter and J. Chu-Carroll, “Natural Language Call Routing: A Robust, Self-organizing Approach,” Proc. ICSLP, 1998.

[8] A. L. Berger, S. A. Della Pietra, and V. J. Della Pietra, “A Maximum Entropy Approach to Natural Language Processing,” Computational Linguistics, Vol. 22, 1996.
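As a supplementary illustration, the two-stage decision procedure of Section 3.2 (Figure 2) might be sketched in Python as follows. This is a reading of the prose description, not the authors' code. The duration thresholds of 1, 2.5, and 4 seconds come from the paper, but the "very confident" cut-off `CONF_THRESHOLD`, the handling of durations that fall exactly on a threshold, the class labels, and the function name are assumptions made for this example.

```python
from typing import Optional

VERY_SHORT_S = 1.0        # "Very Short" threshold from Section 3.2
RELATIVELY_SHORT_S = 2.5  # boundary between "Short" and "Long"
VERY_LONG_S = 4.0         # "Very Long" threshold from Section 3.2
CONF_THRESHOLD = 10.0     # hypothetical: the paper does not state this value


def classify_call(duration_s: float,
                  text_class: Optional[str] = None,
                  confidence: float = 0.0) -> str:
    """Return "live" or "recorded" for one answered call.

    text_class is the text classifier's output ("live" or "recorded"),
    or None when recognition failed; confidence is the ratio of Eq. (4).
    """
    # First stage: the extremes are decided by utterance length alone.
    if duration_s <= VERY_SHORT_S:
        return "live"
    if duration_s >= VERY_LONG_S:
        return "recorded"

    # Second stage: the middle range uses the length as a prior and lets
    # the text classifier override it only when it is very confident.
    very_confident = text_class is not None and confidence >= CONF_THRESHOLD
    if duration_s < RELATIVELY_SHORT_S:
        # Short response: assume a live person unless strongly contradicted.
        if very_confident and text_class == "recorded":
            return "recorded"
        return "live"
    # Long response: assume a recording unless strongly contradicted.
    if very_confident and text_class == "live":
        return "live"
    return "recorded"


if __name__ == "__main__":
    print(classify_call(2.0, "recorded", 50.0))  # short but confidently a machine
    print(classify_call(3.0, None))              # failed recognition falls back to length
```

Because a failed recognition simply leaves the length-based prior in place, the procedure always returns a decision. Counting the error rows of Table 3 together with the Very Short recorded and Very Long live cases of Table 2 gives 121 errors in 4764 calls, which is consistent with the 2.5% reported in Table 4.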
