Optimizacion Sin Restricciones PDF

Uploaded by Adrian Villegas Barrientos

26 Stochastic Process Optimization using Aspen Plus®

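TABLE 2.2

Gradient-Based Optimization Methods

| Step | Steepest Descent | Conjugate Gradient | Newton's Method |
|---|---|---|---|
| Initial direction | $S^0 = -\nabla f(x^0)$ | $S^0 = -\nabla f(x^0)$ | $S^0 = -[H(x^0)]^{-1} \nabla f(x^0)$ |
| Initial step size | Solve $(S^0)^T \nabla f(x^0 + \alpha^0 S^0) = 0$ for $\alpha^0$ | $\alpha^0 = -\dfrac{\nabla^T f(x^0)\, S^0}{(S^0)^T H(x^0)\, S^0}$ | $\alpha^0 = 1$ |
| Subsequent direction | $S^{k+1} = -\nabla f(x^{k+1})$ | $S^{k+1} = -\nabla f(x^{k+1}) + S^k \dfrac{\nabla^T f(x^{k+1})\, \nabla f(x^{k+1})}{\nabla^T f(x^k)\, \nabla f(x^k)}$ | $S^{k+1} = -[H(x^{k+1})]^{-1} \nabla f(x^{k+1})$ |
| Subsequent step size | Solve $(S^{k+1})^T \nabla f(x^{k+1} + \alpha^{k+1} S^{k+1}) = 0$ for $\alpha^{k+1}$ | $\alpha^{k+1} = -\dfrac{\nabla^T f(x^{k+1})\, S^{k+1}}{(S^{k+1})^T H(x^{k+1})\, S^{k+1}}$ | $\alpha^{k+1} = 1$ |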
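The update rules in Table 2.2 can be tried on a small problem. The following is a minimal sketch, not the book's implementation: the quadratic test function $f(x) = \tfrac{1}{2} x^T A x - b^T x$, the matrix `A`, the vector `b`, the starting point, and all function names are assumptions chosen for illustration. For a quadratic, the Hessian is the constant matrix `A`, and the line-search condition $(S^k)^T \nabla f(x^k + \alpha^k S^k) = 0$ has the closed-form solution $\alpha^k = -\nabla^T f(x^k) S^k / ((S^k)^T A S^k)$, so the same step-size formula serves both steepest descent and conjugate gradient here.

```python
# Hypothetical quadratic test problem: f(x) = 0.5 x^T A x - b^T x
A = [[4.0, 1.0], [1.0, 3.0]]  # Hessian H(x); constant for a quadratic
b = [1.0, 2.0]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def grad(x):
    """Gradient of the test function: A x - b."""
    return [axi - bi for axi, bi in zip(matvec(A, x), b)]


def solve2(H, r):
    """Solve the 2x2 system H s = r by Cramer's rule."""
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    return [(r[0] * H[1][1] - H[0][1] * r[1]) / det,
            (H[0][0] * r[1] - H[1][0] * r[0]) / det]


def minimize(x0, method, tol=1e-10, max_iter=500):
    """Return (x, iterations) using the update rules summarized in Table 2.2."""
    x = list(x0)
    g = grad(x)
    # Initial direction: -grad f, or -H^{-1} grad f for Newton's method.
    if method == "newton":
        S = solve2(A, [-gi for gi in g])
    else:
        S = [-gi for gi in g]
    for k in range(max_iter):
        if dot(g, g) ** 0.5 < tol:
            return x, k
        if method == "newton":
            alpha = 1.0
        else:
            # Exact step size for a quadratic (assumption of this sketch).
            alpha = -dot(g, S) / dot(S, matvec(A, S))
        x = [xi + alpha * si for xi, si in zip(x, S)]
        g_new = grad(x)
        if method == "newton":
            S = solve2(A, [-gi for gi in g_new])
        elif method == "conjugate_gradient":
            beta = dot(g_new, g_new) / dot(g, g)
            S = [-gn + beta * si for gn, si in zip(g_new, S)]
        else:  # steepest descent
            S = [-gn for gn in g_new]
        g = g_new
    return x, max_iter


x_start = [2.0, 1.0]  # arbitrary starting point
x_sd, it_sd = minimize(x_start, "steepest_descent")
x_cg, it_cg = minimize(x_start, "conjugate_gradient")
x_nt, it_nt = minimize(x_start, "newton")
for name, x, it in [("steepest descent", x_sd, it_sd),
                    ("conjugate gradient", x_cg, it_cg),
                    ("Newton", x_nt, it_nt)]:
    print(f"{name}: x = {x}, iterations = {it}")
```

All three runs should approach the same minimizer, the solution of $A x = b$, which for these assumed values is $x = (1/11, 7/11)$. On a general nonlinear function the Hessian changes with $x$, and the steepest-descent step size would come from a numerical line search rather than a closed form.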

$$
\begin{aligned}
\text{optimize} \quad & Z = z(x) \\
\text{s.t.} \quad & h(x) = 0
\end{aligned}
\tag{2.19}
$$

Here, the main concern is to obtain an optimal solution for $z(x)$ that also satisfies the set of equality constraints. Two strategies for doing so are presented here: the method of Lagrange multipliers and the generalized reduced gradient method.

2.5.1 Method of Lagrange Multipliers

In this method, the optimization problem presented in Equation 2.19 is reformulated to obtain an objective function that involves the original objective, $z(x)$, and the entire set of equality constraints, $h_i(x) = 0$, where $i = 1, 2, \ldots, m$. The resulting expression is known as the Lagrangian function and is expressed as follows:

$$
\text{optimize} \quad L = z(x) + \sum_{i=1}^{m} \lambda_i h_i(x) \tag{2.20}
$$
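As an illustration of how the Lagrangian is used, consider a small problem that is an assumption of this sketch and does not come from the text: minimize $z(x) = x_1^2 + x_2^2$ subject to $h(x) = x_1 + x_2 - 1 = 0$. Setting the partial derivatives of $L = z(x) + \lambda h(x)$ with respect to $x_1$, $x_2$, and $\lambda$ to zero (the standard first-order necessary conditions) gives a linear system in this case, which the hypothetical helper `solve_linear` below solves by Gaussian elimination.

```python
def solve_linear(M, rhs):
    """Solve M y = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [r] for row, r in zip(M, rhs)]
    for col in range(n):
        # Swap in the row with the largest pivot to avoid dividing by zero.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * y[c] for c in range(r + 1, n))
        y[r] = (aug[r][n] - s) / aug[r][r]
    return y


def z(x1, x2):
    """Assumed objective function."""
    return x1 ** 2 + x2 ** 2


def h(x1, x2):
    """Assumed equality constraint, written as h(x) = 0."""
    return x1 + x2 - 1.0


# Stationarity of L = z + lam * h for the assumed problem:
#   dL/dx1  = 2*x1 + lam    = 0
#   dL/dx2  = 2*x2 + lam    = 0
#   dL/dlam = x1 + x2 - 1   = 0
M = [[2.0, 0.0, 1.0],
     [0.0, 2.0, 1.0],
     [1.0, 1.0, 0.0]]
rhs = [0.0, 0.0, 1.0]

x1, x2, lam = solve_linear(M, rhs)
print("x =", (x1, x2), "lambda =", lam)
print("z(x) =", z(x1, x2), "h(x) =", h(x1, x2))
```

For a nonlinear objective or nonlinear constraints, the stationarity conditions are no longer linear and would need an iterative solver, for example Newton's method applied to the gradient of $L$.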

You might also like

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Evolve - L6 - Unit 1 Quiz - BDocument3 pagesEvolve - L6 - Unit 1 Quiz - BMarcos TadeuNo ratings yet

- What Caused Tower Malfunctions in The Last 50 Years-Henry KisterDocument22 pagesWhat Caused Tower Malfunctions in The Last 50 Years-Henry KisterrakeshNo ratings yet

- Application of Digital Twin TechnologyDocument41 pagesApplication of Digital Twin TechnologyHemn Rafiq TofiqNo ratings yet

- Updating the Chemical Engineering Plant Cost Index for the 21st CenturyDocument9 pagesUpdating the Chemical Engineering Plant Cost Index for the 21st CenturyChelsea SkinnerNo ratings yet

- SPT2013 BioExt AAM PDFDocument21 pagesSPT2013 BioExt AAM PDFAdrian Villegas BarrientosNo ratings yet

- The Quanti Cation of Citral in Lemongrass and Lemon Oils by Near-Infrared SpectrosDocument7 pagesThe Quanti Cation of Citral in Lemongrass and Lemon Oils by Near-Infrared SpectrosAdrian Villegas BarrientosNo ratings yet

- 10 10 15Document115 pages10 10 15Meena VelayuthamNo ratings yet

- Thermodynamic Properties, Equations of State, Methods Used To Describe and Predict Phase EquilibriaDocument20 pagesThermodynamic Properties, Equations of State, Methods Used To Describe and Predict Phase EquilibriaanisfathimaNo ratings yet

- 21EI44 - Linear Control Systems - SyllabusDocument4 pages21EI44 - Linear Control Systems - Syllabuskrushnasamy subramaniyanNo ratings yet

- Babok (Business Analysis Body of Knowledge)Document10 pagesBabok (Business Analysis Body of Knowledge)Keyur KhoontNo ratings yet

- FSC ProjectDocument9 pagesFSC ProjectAnonymous 91hJec40rHNo ratings yet

- MLOps Masterclass November 2023Document16 pagesMLOps Masterclass November 2023Ferie De La PeñaNo ratings yet

- Robot Modeling and Control: Graduate Course at Automatic Control Mikael NorrlöfDocument10 pagesRobot Modeling and Control: Graduate Course at Automatic Control Mikael NorrlöfMeryem MimiNo ratings yet

- Artificial Intelligence FoundationsDocument96 pagesArtificial Intelligence FoundationsGunjan SumanNo ratings yet

- Lecture 15 Steady-State Error For Unity Feedback SystemDocument28 pagesLecture 15 Steady-State Error For Unity Feedback SystemRammay Sb100% (1)

- Control System Engineering 2 MarksDocument18 pagesControl System Engineering 2 MarksSeenu CnuNo ratings yet

- Curriculum - Vitae - Format (1) Rafael NuñezDocument3 pagesCurriculum - Vitae - Format (1) Rafael NuñezRafael NuñezNo ratings yet

- AIChE - 2014 - Paper - 24A - Lambda Tuning Methods For Level and Other Integrating Processes-BDocument35 pagesAIChE - 2014 - Paper - 24A - Lambda Tuning Methods For Level and Other Integrating Processes-BscongiundiNo ratings yet

- Food Web - Wikipedia PDFDocument4 pagesFood Web - Wikipedia PDFNadja SoleilNo ratings yet

- 2151705 (2)Document3 pages2151705 (2)Maulik MulaniNo ratings yet

- Tutorial 4 Time ResponseDocument8 pagesTutorial 4 Time ResponseTam PhamNo ratings yet

- Artificialintelligencereport 130426220342 Phpapp01Document30 pagesArtificialintelligencereport 130426220342 Phpapp01AIVaibhav GhubadeNo ratings yet

- Thermodynamics of Irreversible Processes Lecture NotesDocument10 pagesThermodynamics of Irreversible Processes Lecture NotesNatty LopezNo ratings yet

- Importance of Programming For Industrial EngineeringDocument2 pagesImportance of Programming For Industrial EngineeringTati GarcíaNo ratings yet

- 36-Article Text-66-1-10-20230102Document10 pages36-Article Text-66-1-10-20230102M Luthfi Al MudzakiNo ratings yet

- SDLC OoseDocument36 pagesSDLC OoseAnsh AnuragNo ratings yet

- Chapter 3 - ContentsDocument8 pagesChapter 3 - ContentsSaiful MunirNo ratings yet

- Beyond ERP: Towards Intelligent Manufacturing Planning and ControlDocument49 pagesBeyond ERP: Towards Intelligent Manufacturing Planning and ControldedikiNo ratings yet

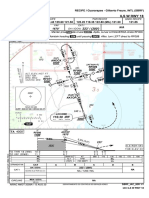

- ILS W RWY 18 Approach Chart for RECIFE / Guararapes - Gilberto Freyre, INTL (SBRFDocument1 pageILS W RWY 18 Approach Chart for RECIFE / Guararapes - Gilberto Freyre, INTL (SBRFRicardo PalermoNo ratings yet

- Systems Development Life Cycle (SDLC)Document45 pagesSystems Development Life Cycle (SDLC)javauaNo ratings yet

- ANN ControlDocument6 pagesANN ControlLissete VergaraNo ratings yet

- t8 - Behavioural ModellingDocument56 pagest8 - Behavioural ModellingNazeyra JamalNo ratings yet

- WameedMUCLecture 2021 9216267Document24 pagesWameedMUCLecture 2021 9216267Ayman OmarNo ratings yet

- M.Tech I Mid QPDocument2 pagesM.Tech I Mid QPsree bvritNo ratings yet