International Journal Of Advancement In Engineering Technology, Management and Applied

Science (IJAETMAS)

ISSN: 2349-3224 || www.ijaetmas.com || Volume 03 - Issue 09 || September - 2016 || PP. 115-122

Character Recognition using Image Processing

Sumit Sharma, Ritik Sharma

National Institute of Technology, Srinagar, India 190006

Seclore Technology Pvt Ltd. Mumbai India, 400072

Abstract

Optical Character Recognition (OCR) is the mechanical or electronic translation of images containing text into editable text. It is mostly used to convert handwritten documents (captured by a scanner or by other means) into text. Human beings recognize many objects in this manner; our eyes are the "optical mechanism." But while the brain "sees" the input, the ability to comprehend these signals varies from person to person according to many factors.

Digitization of text documents is often combined with optical character recognition (OCR). Recognizing a character is a routine and easy task for human beings, but building a machine or electronic device that recognizes characters is difficult. Character recognition is one of those things that humans do better than computers and other electronic devices.

Keywords: Character Recognition System, Camera Captured Document Images, Handheld Device,

Image Segmentation.

Introduction

An Optical Character Recognition system enables us to convert a PDF file or a file of scanned images directly into an editable computer file, which can then be edited in MS-Word or WordPad. Examples of issues that need to be dealt with in character recognition of heritage documents are:

Degradation of paper, which often results in high occurrence of noise in the digitized images,

or fragmented (broken) characters.

Characters are not machine-written. If they are manually set, this will result in, for instance,

varying space sizes between characters or (accidentally) touching characters.

An example of OCR applied to a scanned image taken from the web, with the recognized text shown as output, is given below:

Figure 1: Input image

Culpeper’s Midwife Exlarged.5 .lieonthoughtThn Infant drew in his Nouriflhment by his whole Body;

because it is rare and fpungy, as aSpunge fucks in Water o every Side; and so he thought it fucked

Blood, not only from the Mother’s Veins, but also from the Womb. Democrats and Epicurus, recorded

by Plutarch, that the Child fucked in the Nourishment by its Mouth. And also Hippocrates, Lib. de

Principiis, affirms, that the Child fucked both Nourishment and Brea:h by its Mouth from the Mo-ther

when le breathed,(thought in his other Treatises he feernsto deny

Figure 2: Output image after processing given Input image

A number of research works on mobile OCR systems have been reported. Laine et al. [7] developed a system for English capital letters only. First, the captured image is skew corrected by looking for a line having the highest number of consecutive white pixels and by maximizing a given alignment criterion. Then, the image is segmented using X-Y tree decomposition and recognized by measuring Manhattan-distance-based similarity over a set of centroid-to-boundary features. However, this work addresses only English capital letters, and the accuracy obtained is not satisfactory for real-life applications.

In the current work, a character recognition system is presented for recognizing English characters extracted from camera-captured text documents with embedded images or graphics, such as business card images.

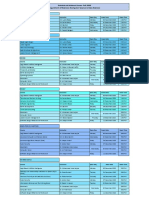

Process/ Methodology -

The process of OCR involves several steps including Image Scanning, Pre-Processing, Segmentation,

Feature Extraction, Post-Processing and Classification.

Image Scanning -> Pre-Processing -> Segmentation -> Feature Extraction -> Post-Processing -> Classification -> Editable Text

Flow chart for the entire process of OCR

Image Scanning: - An image scanner is a digital device used to scan images, pictures, printed text and objects and convert them to digital images. Image scanners are used in a variety of domestic and industrial applications such as design, reverse engineering, orthotics, gaming and testing. The most widely used type of scanner in offices and homes is the flatbed scanner. The process of scanning an image with the help of a scanner is known as Image Scanning. It provides the image of the handwritten text.

Pre-Processing: - Pre-Processing is required for coloured, binary or grey-level images containing text. Most OCR algorithms work on binary and grey-level images because computation is more difficult for coloured images. Images may contain a background, a watermark or other content different from text, making it difficult to extract the text from the scanned image. Pre-Processing helps to remove these difficulties. The result of Pre-Processing is a binary image containing text only. To achieve this, several steps are needed: first, image enhancement techniques to remove noise or correct the contrast in the image; second, thresholding (described below) to remove a background containing scenes, watermarks and/or noise; third, page segmentation to separate graphics from text; fourth, character segmentation to separate characters from each other; and, finally, morphological processing to enhance the characters in cases where thresholding and/or other pre-processing techniques eroded parts of the characters or added pixels to them. This sequence is widely used in character recognition implementations.
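The morphological step mentioned above can be illustrated with a small sketch. It assumes a binary image stored as a list of rows with 1 for ink and 0 for background, and uses a 3x3 closing (dilation followed by erosion) to rejoin a stroke broken by thresholding. The function names and the 3x3 neighbourhood are illustrative choices, not taken from the paper.

```python
def _neighbourhood(img, y, x):
    """Yield the 3x3 neighbourhood of (y, x); pixels outside the image count as 0."""
    h, w = len(img), len(img[0])
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            ny, nx = y + dy, x + dx
            yield img[ny][nx] if 0 <= ny < h and 0 <= nx < w else 0


def dilate(img):
    """A pixel becomes ink if any pixel in its 3x3 neighbourhood is ink."""
    return [[int(any(_neighbourhood(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def erode(img):
    """A pixel stays ink only if every pixel in its 3x3 neighbourhood is ink."""
    return [[int(all(_neighbourhood(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]


def close_gaps(img):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(img))


# A horizontal stroke with a one-pixel break in the middle.
stroke = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 1, 1, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
closed = close_gaps(stroke)
print(closed[2])  # [0, 1, 1, 1, 1, 1, 0]
```

Closing fills small breaks without thickening the stroke, but it can also merge characters that are only one pixel apart, so the neighbourhood size would need tuning for real scans.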

Thresholding: Thresholding is the process of converting a grayscale input image to a bi-level image by using an optimal threshold. Its purpose is to extract from an image those pixels which represent an object (either text or other line image data such as graphs or maps). Although the information is binary, the pixels represent a range of intensities. The objective of binarization is therefore to mark pixels that belong to true foreground regions with one intensity and pixels in background regions with another.

Figure 3: Thresholding Process

For a thresholding algorithm to be really effective, it should preserve logical and semantic content.

There are two types of thresholding algorithms:

1. Global thresholding algorithms

2. Local or adaptive thresholding algorithms

In global thresholding, a single threshold is used for all the image pixels. Global thresholding can be used when the pixel values of the components and those of the background are each fairly consistent over the entire image. In local (adaptive) thresholding, the threshold is instead computed for each pixel or region from its neighbourhood, which copes better with uneven illumination.
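For the global case, one common way to choose the single threshold is Otsu's method, which picks the grey level that maximizes the variance between the two resulting classes. The paper does not say which method it used, so the sketch below is only an illustration; it assumes 8-bit grey levels (0 to 255) and dark ink on a light background.

```python
def otsu_threshold(pixels):
    """Return the grey level t that maximizes between-class variance.

    `pixels` is a flat list of integers in 0..255.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg = 0    # pixels with grey level <= t
    sum_bg = 0  # sum of their grey levels
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue          # no pixels in the dark class yet
        w_fg = total - w_bg
        if w_fg == 0:
            break             # all pixels are in the dark class
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t


def binarize(image, threshold):
    """Mark pixels at or below the threshold as ink (1), the rest as background (0)."""
    return [[1 if p <= threshold else 0 for p in row] for row in image]


grey = [[10, 12, 200],
        [11, 210, 205]]
t = otsu_threshold([p for row in grey for p in row])
print(binarize(grey, t))  # [[1, 1, 0], [1, 0, 0]]
```

A single threshold like this works only when ink and background intensities are consistent across the page, which is the condition stated above.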

Segmentation: - Segmentation is a process that determines the elements of an image. In this process a digital image is partitioned into multiple segments (sets of pixels, also known as super-pixels). Image segmentation is typically used to locate objects and boundaries (lines, curves, etc.) in images. The most important task is to locate the regions of the document where data is printed and distinguish them from figures and graphics. Text segmentation is the isolation of characters or words. Many segmentation algorithms split words into isolated characters, which are then recognized individually. This segmentation is performed by isolating each connected component. The technique is easy to implement, but problems occur if characters touch or if characters are fragmented and consist of several parts. The problems in segmentation fall into several categories: extraction of touching and fragmented characters, distinguishing noise from text, and skew.
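Isolating connected components, as described above, can be sketched with a breadth-first flood fill. The example assumes a binary image as a list of rows (1 for ink) and 8-connectivity; the function name and the bounding-box output format are illustrative assumptions.

```python
from collections import deque


def connected_components(img):
    """Return bounding boxes (left, top, right, bottom) of 8-connected ink blobs,
    sorted from left to right."""
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    boxes = []
    for y in range(h):
        for x in range(w):
            if img[y][x] != 1 or seen[y][x]:
                continue
            # Start a new component and flood-fill it.
            queue = deque([(y, x)])
            seen[y][x] = True
            left, top, right, bottom = x, y, x, y
            while queue:
                cy, cx = queue.popleft()
                left, right = min(left, cx), max(right, cx)
                top, bottom = min(top, cy), max(bottom, cy)
                for ny in range(max(0, cy - 1), min(h, cy + 2)):
                    for nx in range(max(0, cx - 1), min(w, cx + 2)):
                        if img[ny][nx] == 1 and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            boxes.append((left, top, right, bottom))
    return sorted(boxes)


page = [
    [1, 1, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 0, 1, 1],
]
print(connected_components(page))  # [(0, 0, 1, 2), (3, 0, 4, 2)]
```

The weaknesses named in the text show up directly here: two touching characters come back as one box, and a fragmented character comes back as several.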

Feature Extraction: - Different characters have different features, and characters are recognized on the basis of these features. Feature extraction can thus be defined as the process of extracting differentiating features from the matrices of digitized characters. The literature describes a number of features on which OCR systems rely to recognize characters. According to C. Y. Suen (1986), the features of a character can be classified into two classes: global or statistical features, and structural or topological features. Global features are obtained from the arrangement of points constituting the character matrix. They are easier to detect than topological features and are less affected by noise or distortion. A number of techniques are used for feature extraction; some of these are: moments, zoning, projection histograms, n-tuples, crossings and distances.
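Two of the techniques listed above, projection histograms and zoning, can be sketched in a few lines. The example assumes a binary character matrix (1 for ink) at least as large as the zone grid; the function names and the 2x2 grid are illustrative choices.

```python
def projection_histograms(glyph):
    """Count ink pixels per row and per column of a binary character matrix."""
    rows = [sum(row) for row in glyph]
    cols = [sum(col) for col in zip(*glyph)]
    return rows, cols


def zoning(glyph, zones=2):
    """Split the glyph into a zones x zones grid and return the ink density
    of each zone, scanning the grid row by row."""
    h, w = len(glyph), len(glyph[0])
    features = []
    for zy in range(zones):
        for zx in range(zones):
            y0, y1 = zy * h // zones, (zy + 1) * h // zones
            x0, x1 = zx * w // zones, (zx + 1) * w // zones
            ink = sum(glyph[y][x] for y in range(y0, y1) for x in range(x0, x1))
            features.append(ink / ((y1 - y0) * (x1 - x0)))
    return features


# A 4x4 matrix roughly shaped like the letter "L".
letter_l = [
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 1],
]
print(projection_histograms(letter_l))  # ([1, 1, 1, 4], [4, 1, 1, 1])
print(zoning(letter_l))                 # [0.5, 0.0, 0.75, 0.5]
```

Both are global (statistical) features in the sense used above: they summarize where the ink lies rather than describing strokes or loops.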

Classification using K-nearest algorithm: - Classification determines the region of feature space in which an unknown pattern falls. The k-nearest neighbour algorithm (k-NN) is a method for classifying objects based on the closest training examples in the feature space. It is amongst the simplest of all machine learning algorithms: an object is classified by a majority vote of its neighbours, with the object being assigned to the class most common amongst its k nearest neighbours (k is a positive integer, typically small). If k = 1, then the object is simply assigned to the class of its nearest neighbour. Generally, we calculate the Euclidean distance between the test point and all the reference points, arrange the distances in ascending order and take the reference points corresponding to the k smallest distances. The test sample is then given the class label held by the majority of its k nearest (reference) neighbours.
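The procedure just described (Euclidean distances, ascending sort, majority vote over the k nearest) maps directly onto a short function. This is a minimal sketch; the reference vectors and labels below are made-up illustration data, not results from the paper.

```python
import math
from collections import Counter


def knn_classify(sample, references, k=3):
    """Classify `sample` by majority vote among its k nearest references.

    `references` is a list of (feature_vector, label) pairs.
    """
    # Sort reference points by Euclidean distance to the sample, ascending.
    by_distance = sorted(references, key=lambda ref: math.dist(sample, ref[0]))
    # Vote among the labels of the k closest points.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-dimensional feature vectors for two character classes.
references = [
    ((0.0, 0.0), "O"),
    ((0.1, 0.2), "O"),
    ((1.0, 1.0), "I"),
    ((0.9, 1.1), "I"),
    ((1.2, 0.8), "I"),
]
print(knn_classify((0.2, 0.1), references, k=3))  # O
print(knn_classify((1.0, 0.9), references, k=1))  # I
```

Every query compares against every reference point, so the cost grows with the size of the reference set; that is acceptable for small character sets but may need indexing for larger ones.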

Post-processing

OCR accuracy can be increased if the output is constrained by a lexicon, a list of words that are allowed to occur in a document. This might be, for example, all the words in the English language, or a more technical lexicon for a specific field. This technique can be problematic if the document contains words not in the lexicon, such as proper nouns. Tesseract uses its dictionary to influence the character segmentation step, for improved accuracy.

The output stream may be a plain text stream or file of characters, but more sophisticated OCR systems can preserve the original layout of the page and produce, for example, an annotated PDF that includes both the original image of the page and a searchable textual representation. "Near-neighbour analysis" can make use of co-occurrence frequencies to correct errors, by noting that certain words are often seen together. For example, "Washington, D.C." is generally far more common in English than "Washington DOC". Knowledge of the grammar of the language being scanned can also help determine whether a word is likely to be a verb or a noun, for example, allowing greater accuracy. The Levenshtein distance algorithm has also been used in OCR post-processing to further optimize results from an OCR API.
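Lexicon-constrained correction with the Levenshtein distance can be sketched as follows. The lexicon, the `max_distance` cut-off and the function names are assumptions made for illustration; the paper does not specify them.

```python
def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions and
    substitutions needed to turn string a into string b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def correct(word, lexicon, max_distance=2):
    """Replace `word` with the closest lexicon entry, or keep it unchanged
    when nothing is within `max_distance` edits."""
    best = min(lexicon, key=lambda entry: levenshtein(word, entry))
    return best if levenshtein(word, best) <= max_distance else word


lexicon = ["nourishment", "mother", "breath"]
print(levenshtein("kitten", "sitting"))   # 3
print(correct("nouriflhment", lexicon))   # nourishment
print(correct("Plutarch", lexicon))       # Plutarch
```

The distance cut-off is what protects words that are absent from the lexicon, such as proper nouns, from being forced onto an unrelated entry.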

Editable Text: - Editable Text here means any document or file which can be edited on a computer like any MS-Word or WordPad file. It contains the text that was supplied as an image at the input. It is the output of the OCR system, with an accuracy of up to 99%.

Applications: -

1. Licence plate recognition

2. Access control for vehicles

3. Car park management

4. Highway / border monitoring system for vehicles

5. Traffic and parking flow surveys

6. Vehicle monitoring at toll booths

7. Automatic recording of the text written on the vehicle surface

8. Vehicle monitoring through automated real-time alerts for unauthorized / barred / stolen vehicles

9. Container number identification

10. Industrial inspection

11. Document imaging

12. Printed invoices OCR implementations

Some implementations in description: -

1. Licence Plate Recognition System: -

Automatic License Plate Recognition (ALPR) is an important technique used in Intelligent Transportation Systems. It is an advanced machine vision technology that identifies vehicles by their license plates without direct human intervention. License plate recognition has many applications, for example automated parking attendants, petrol station forecourt surveillance, speed enforcement, security, customer identification enabling personalized service, highway electronic toll collection and traffic monitoring systems. License plates differ in shape, size and colour from country to country. In India, the most common plate background colour is yellow for commercial vehicles and white for private cars, with black as the foreground colour. Although India has a standard format for license plates, as described in fig. 1, it is not followed in practice, which makes license plate recognition quite difficult. An Indian license plate starts with a two-letter state code, followed by a two-digit numeral, a single letter and then four consecutive digits.
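The plate format stated above (two letters, two digits, one letter, four digits) can be checked after recognition with a regular expression. This is a sketch of that stated format only; real plates may deviate from it, as the text notes, and the sample plate strings are invented for illustration.

```python
import re

# Two-letter state code, two-digit numeral, single letter, four digits.
PLATE_PATTERN = re.compile(r"[A-Z]{2}\d{2}[A-Z]\d{4}")


def normalize_plate(raw):
    """Drop spaces, hyphens and other separators and upper-case the rest."""
    return re.sub(r"[^A-Za-z0-9]", "", raw).upper()


def is_valid_plate(raw):
    """Return True if the OCR output matches the stated plate format."""
    return PLATE_PATTERN.fullmatch(normalize_plate(raw)) is not None


print(is_valid_plate("MH 12 A 3456"))   # True
print(is_valid_plate("MH12 3456"))      # False (series letter missing)
print(normalize_plate("jk-01-b-0007"))  # JK01B0007
```

A check like this can flag OCR output for a second recognition pass or manual review when it does not fit the expected pattern.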

Figure 4: Input image

Process: Image Capture -> Image Restoration -> Licence Plate Detection -> Character Recognition (OCR) -> Verification -> Result Indication

Figure 5: Workflow for LPR (diagram labels: Licence Plate Recognition Appliance with camera, lighting device and OS; Licence Plate Recognition Engine; server with application software, camera control, communication, verification, retrieval, wanted car DB and collaboration with external systems)

2. Printed invoices OCR recognition: - Tests consisted of recognizing two values: the invoice number and the date. The invoice number was printed in a fixed area, while the date might be printed in one of a few areas (each document contained only one date, in a fixed format). During page processing, each of these date areas was recognized, and all of them were then searched for a string in the proper format. The first string found was taken as the date. The number of processed documents was 1000, and all invoice numbers were recognized correctly. Twenty dates were not recognized, but these were partly or completely obscured by extra handwritten notes or stamps. The next test consisted of layout recognition. Invoices contain elements such as tables, images and stamps. The layout was properly recognized in 50% of documents; 10% of documents had small defects such as a missing line in a table. In 40% of documents the layout recognition was weak: some had more pages than the original, some images and stamps were located in different places, and some tables were incomplete.
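The date search described above (recognize each candidate area, then take the first string in the expected format) can be sketched as below. The paper does not state the date format, so `DD.MM.YYYY` is assumed here purely for illustration, as are the sample area texts.

```python
import re

# Assumed fixed date format: DD.MM.YYYY (hypothetical; not given in the paper).
DATE_PATTERN = re.compile(r"\b\d{2}\.\d{2}\.\d{4}\b")


def find_date(area_texts):
    """Return the first date-shaped string found in the recognized areas,
    scanning the areas in order, or None if no area contains one."""
    for text in area_texts:
        match = DATE_PATTERN.search(text)
        if match:
            return match.group(0)
    return None


# OCR output of the candidate date areas of one invoice (made-up data).
areas = ["Invoice no. 4471", "Date: 05.09.2016 paid", "12.01.2015"]
print(find_date(areas))          # 05.09.2016
print(find_date(["illegible"]))  # None
```

Returning None for an unreadable document corresponds to the unrecognized dates reported in the test, where notes or stamps covered the printed text.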

Conclusion

This paper describes an OCR system for offline handwritten character recognition. Such systems can yield excellent results. The paper discusses handwritten character recognition in detail, covering the various concepts involved, with the aim of encouraging further advances in the area. Recognition accuracy depends directly on the nature and quality of the material to be read. Pre-processing techniques applied to document images as an initial step in character recognition systems were presented. Feature extraction is the most important step of optical character recognition. It can be used with existing OCR methods, especially for English text. The system has the advantage of scalability: although it is configured to read a predefined set of document formats, currently English documents, it can be configured to recognize new types. Future research aims at new applications such as online character recognition on mobile devices, extraction of text from video images, extraction of information from security documents and processing of historical documents. Recognition is often followed by a post-processing stage. We expect that with post-processing the accuracy will be even higher, so that the system could be implemented directly on mobile devices. Implementing the presented system with post-processing on mobile devices is also part of our future work.

References

1. Himanshu S. Mazumdar, Leena Rawal, "A Learning Algorithm for Self Organized Multilayered Neural Network", CSI Communication, pp. 5-6 (May 1996).

2. Leena P. Rawal, Himanshu S. Mazumdar, "A DSP based Low Precision Algorithm for Neural Network using Dynamic Neuron Activation Function", CSI Communication, pp. 15-18 (April 1996).

3. Leena P. Rawal, Himanshu S. Mazumdar, "A Neural Network Tool Box using C++", CSI Communication, pp. 22-25 (April 1995).

4. Hecht-Nielsen, "Theory of the back propagation neural network", in Proc. Int. Joint Conf. Neural Networks, vol. 1, pp. 593-611. New York: IEEE Press, June 1989.

5. Lippmann R.P., "An introduction to computing with Neural Nets", IEEE ASSP Magazine, pp. 4-22, April 1987.

6. Rumelhart and J.L. McClelland, Parallel Distributed Processing: Explorations in the Microstructure of Cognition. Cambridge MA: MIT Press, vol. 1, 1986.

7. N. Arica and F. Yarman-Vural, "An Overview of Character Recognition Focused on Offline Handwriting", IEEE Transactions on Systems, Man, and Cybernetics, Part C: Applications and Reviews, vol. 31, no. 2, pp. 216-233, 2001.

8. Maciej K. Godniak, Technologia Radio Frequency Identification w zastosowaniach komercyjnych, Roczniki Informatyki Stosowanej Wydziału Informatyki Politechniki Szczecińskiej nr 6 (2004), pp. 441-445.

9. Marcin Marciniak, Rozpoznawanie na dużą skalę, http://www.informationstandard.pl/artykuly/343061_0/Rozpoznawanie.na.duza.skale.html

10. Rynek usług zarządzania dokumentami w Polsce, http://www.egospodarka.pl/20606,Rynek-uslug-zarzadzania-dokumentami-wPolsce,1,20,2.html

11. OCR – Rozpoznawanie pisma i tekstu (ID resources), http://www.finereader.pl/wprowadzenie/technologia-ocr

12. RecoStar Professional – Character Recognition (OpenText resources), http://www.captaris-dt.com/product/recostar-professional/en/

13. Document Capture – Data Capture - Kofax (Kofax resources), http://www.kofax.com/

14. C. C. Tappert, "Cursive Script Recognition by Elastic Matching", IBM Journal of Research and Development, vol. 26, no. 6, pp. 765-771, 1982.

15. Rastislav Lukac and Konstantinos N. Plataniotis, Color Image Processing.

16. Gonzalez, Woods & Eddins, Digital Image Processing Using Matlab.

17. A. F. Mollah, S. Basu, N. Das, R. Sarkar, M. Nasipuri, M. Kundu, "A Fast Skew Correction Technique for Camera Captured Business Card Images", Proc. of IEEE INDICON-2009, pp. 629-632, 18-20 December, Gandhinagar, Gujarat.

18. J. Bernsen, "Dynamic thresholding of grey-level images", Proc. Eighth Int'l Conf. on Pattern Recognition, pp. 1251-1255, Paris, 1986.
