Association for Computing Machinery

US Public Policy Council (USACM)

usacm.acm.org

facebook.com/usacm

twitter.com/usacm

January 12, 2017

Statement on Algorithmic Transparency and Accountability

Computer algorithms are widely employed throughout our economy and society to make decisions that have far-reaching impacts, including in education, access to credit, healthcare, and employment. [1] The ubiquity of algorithms in our everyday lives is an important reason to focus on addressing challenges associated with the design and technical aspects of algorithms and on preventing bias from the outset.

An algorithm is a self-contained step-by-step set of operations that computers and other 'smart' devices carry out to perform calculation, data processing, and automated reasoning tasks. Increasingly, algorithms implement institutional decision-making based on analytics, which involves the discovery, interpretation, and communication of meaningful patterns in data. Especially valuable in areas rich with recorded information, analytics relies on the simultaneous application of statistics, computer programming, and operations research to quantify performance.

There is also growing evidence that some algorithms and analytics can be opaque, making it impossible to determine when their outputs may be biased or erroneous.

Computational models can be distorted as a result of biases contained in their input data and/or their algorithms. Decisions made by predictive algorithms can be opaque because of many factors, including technical (the algorithm may not lend itself to easy explanation), economic (the cost of providing transparency may be excessive, including the compromise of trade secrets), and social (revealing input may violate privacy expectations). Even well-engineered computer systems can result in unexplained outcomes or errors, either because they contain bugs or because the conditions of their use change, invalidating assumptions on which the original analytics were based.

The use of algorithms for automated decision-making about individuals can result in harmful discrimination. Policymakers should hold institutions using analytics to the same standards as institutions where humans have traditionally made decisions, and developers should plan and architect analytical systems to adhere to those standards when algorithms are used to make automated decisions or as input to decisions made by people.

This set of principles, consistent with the ACM Code of Ethics, is intended to support the benefits of algorithmic decision-making while addressing these concerns. These principles should be addressed during every phase of system development and deployment to the extent necessary to minimize potential harms while realizing the benefits of algorithmic decision-making.

[1] Federal Trade Commission. “Big Data: A Tool for Inclusion or Exclusion? Understanding the Issues.” January 2016. https://www.ftc.gov/reports/big-data-tool-inclusion-or-exclusion-understanding-issues-ftc-report.

ACM US Public Policy Council

1701 Pennsylvania Ave NW, Suite 300

Washington, DC 20006

+1-202-355-1291 x13040

acmpo@acm.org

Principles for Algorithmic Transparency and Accountability

1. Awareness: Owners, designers, builders, users, and other stakeholders of analytic systems should be aware of the possible biases involved in their design, implementation, and use and the potential harm that biases can cause to individuals and society.

2. Access and redress: Regulators should encourage the adoption of mechanisms that enable questioning and redress for individuals and groups that are adversely affected by algorithmically informed decisions.

3. Accountability: Institutions should be held responsible for decisions made by the algorithms that they use, even if it is not feasible to explain in detail how the algorithms produce their results.

4. Explanation: Systems and institutions that use algorithmic decision-making are encouraged to produce explanations regarding both the procedures followed by the algorithm and the specific decisions that are made. This is particularly important in public policy contexts.

5. Data Provenance: A description of the way in which the training data was collected should be maintained by the builders of the algorithms, accompanied by an exploration of the potential biases induced by the human or algorithmic data-gathering process. Public scrutiny of the data provides maximum opportunity for corrections. However, concerns over privacy, protecting trade secrets, or revelation of analytics that might allow malicious actors to game the system can justify restricting access to qualified and authorized individuals.

6. Auditability: Models, algorithms, data, and decisions should be recorded so that they can be audited in cases where harm is suspected.

7. Validation and Testing: Institutions should use rigorous methods to validate their models and document those methods and results. In particular, they should routinely perform tests to determine whether the model generates discriminatory harm. Institutions are encouraged to make the results of such tests public.
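As an illustration only, principles 6 and 7 could be combined in a routine check along the following lines: decisions are recorded in a log, and the log is then tested for unequal outcomes across groups. The statement does not prescribe any particular record format or fairness metric. The `DecisionRecord` schema, the group labels, the sample data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb used in US employment guidance) are all assumptions made for this sketch.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRecord:
    """One logged decision. Hypothetical schema, not defined by the statement."""
    model_version: str  # which model produced the decision
    group: str          # group label retained only for auditing
    approved: bool      # outcome of the automated decision


def selection_rates(records):
    """Return the fraction of approved decisions for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for record in records:
        totals[record.group] += 1
        approvals[record.group] += int(record.approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so no disparity to measure
    return min(rates.values()) / highest


# Made-up audit log: group A is approved 8 times out of 10, group B 5 out of 10.
log = (
    [DecisionRecord("v1", "A", True)] * 8
    + [DecisionRecord("v1", "A", False)] * 2
    + [DecisionRecord("v1", "B", True)] * 5
    + [DecisionRecord("v1", "B", False)] * 5
)

THRESHOLD = 0.8  # assumed cut-off; an institution would choose and justify its own
ratio = disparate_impact_ratio(log)
flagged = ratio < THRESHOLD
print(f"rates={selection_rates(log)} ratio={ratio:.3f} flagged={flagged}")
```

A single ratio like this is a screening signal rather than proof of discriminatory harm. A flagged result would normally prompt the closer review, explanation, and redress steps described in the other principles.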

Page 2 of 2

You might also like

- Shri Mahakal Bhairav Sadhana (Mantras,Kavach,Stotra,Shabar Mantra) (श्रीमहाकाल भैरव मंत्र साधना)Document25 pagesShri Mahakal Bhairav Sadhana (Mantras,Kavach,Stotra,Shabar Mantra) (श्रीमहाकाल भैरव मंत्र साधना)Gurudev Shri Raj Verma ji83% (29)

- Corruption and PeacekeepingDocument72 pagesCorruption and PeacekeepingdhavalnmodiNo ratings yet

- Schwartz 1994 - Are There Universal Aspects in The Content of Human ValuesDocument27 pagesSchwartz 1994 - Are There Universal Aspects in The Content of Human ValuesdhavalnmodiNo ratings yet

- Cooperation On Artificial Intelligence: EclarationDocument8 pagesCooperation On Artificial Intelligence: EclarationdhavalnmodiNo ratings yet

- Lords Report Highlights UK's AI Strengths and RisksDocument183 pagesLords Report Highlights UK's AI Strengths and RisksAstroNirav Savaliya100% (1)

- Army - fm3 19x30 - Physical SecurityDocument315 pagesArmy - fm3 19x30 - Physical SecurityMeowmix100% (2)

- ILO IOM Terms of ReferenceDocument5 pagesILO IOM Terms of ReferencedhavalnmodiNo ratings yet

- UN Leaflet On LGBT Human RightsDocument2 pagesUN Leaflet On LGBT Human RightsLGBT Asylum NewsNo ratings yet

- Asia Pacific Trans Health BlueprintDocument172 pagesAsia Pacific Trans Health BlueprintBeMbewNo ratings yet

- UNHCR Guidance Note On Claims For Refugee Status Under The 1951 Convention Relating To Sexual Orientation and Gender IdentityDocument18 pagesUNHCR Guidance Note On Claims For Refugee Status Under The 1951 Convention Relating To Sexual Orientation and Gender IdentityLGBT Asylum NewsNo ratings yet

- Dantec - Perception of Technology Among HomelessDocument10 pagesDantec - Perception of Technology Among HomelessdhavalnmodiNo ratings yet

- Battlefield Singularity November 2017Document74 pagesBattlefield Singularity November 2017dhavalnmodiNo ratings yet

- Bartone - Dimensions of Psychological STDocument7 pagesBartone - Dimensions of Psychological STdhavalnmodiNo ratings yet

- Post Colonial ComputingDocument10 pagesPost Colonial ComputingdhavalnmodiNo ratings yet

- Revamping UN Peacekeeping For The 21 CenturyDocument79 pagesRevamping UN Peacekeeping For The 21 CenturydhavalnmodiNo ratings yet

- Cooperation On Artificial Intelligence: EclarationDocument8 pagesCooperation On Artificial Intelligence: EclarationdhavalnmodiNo ratings yet

- Smart Peacekeeping IPIDocument36 pagesSmart Peacekeeping IPIdhavalnmodiNo ratings yet

- Grounded TheoryDocument12 pagesGrounded TheoryKimberly BacorroNo ratings yet

- Joint Declaration Eu Legislative Priorities 2018 19 enDocument2 pagesJoint Declaration Eu Legislative Priorities 2018 19 endhavalnmodiNo ratings yet

- Challenge of Moral Values in DesignDocument17 pagesChallenge of Moral Values in DesigndhavalnmodiNo ratings yet

- Fast Facts March 2018 en 0Document1 pageFast Facts March 2018 en 0dhavalnmodiNo ratings yet

- LGBTQ at UNDocument13 pagesLGBTQ at UNdhavalnmodiNo ratings yet

- Asia Pacific Trans Health BlueprintDocument172 pagesAsia Pacific Trans Health BlueprintBeMbewNo ratings yet

- Japan AI ReportDocument26 pagesJapan AI ReportdhavalnmodiNo ratings yet

- Capstone Doctrine ENGDocument53 pagesCapstone Doctrine ENGemi_lia_07No ratings yet

- Battlefield Singularity November 2017Document74 pagesBattlefield Singularity November 2017dhavalnmodiNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5784)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Openscape Cordless Ip v2Document98 pagesOpenscape Cordless Ip v2babydongdong22No ratings yet

- Arun &associatesDocument12 pagesArun &associatesSujaa SaravanakhumaarNo ratings yet

- Hive Nano 2 Hub User GuideDocument6 pagesHive Nano 2 Hub User GuideLuis Armando Campos BarbaNo ratings yet

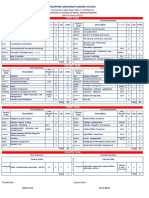

- Philippine Merchant Marine School: First YearDocument5 pagesPhilippine Merchant Marine School: First YearCris Mhar Alejandro100% (1)

- Brochure Imaxeon Salient DualDocument4 pagesBrochure Imaxeon Salient DualQuyet LeNo ratings yet

- Niq Logistics PropozalsDocument20 pagesNiq Logistics PropozalsPelumi Gabriel obafayeNo ratings yet

- E560 23ba23 DSDocument4 pagesE560 23ba23 DSJoao SantosNo ratings yet

- Model QuestionDocument3 pagesModel QuestionParthasarothi SikderNo ratings yet

- Comparision Between Nokia and AppleDocument3 pagesComparision Between Nokia and AppleSushan SthaNo ratings yet

- Project SpecificationDocument8 pagesProject SpecificationYong ChengNo ratings yet

- KSC2016 - Recurrent Neural NetworksDocument66 pagesKSC2016 - Recurrent Neural NetworksshafiahmedbdNo ratings yet

- CISSP VocabularyDocument4 pagesCISSP Vocabularyyared BerhanuNo ratings yet

- HP Laserjet Pro MFP M127 Series: Handle The Essentials With One Affordable, Networked MFPDocument2 pagesHP Laserjet Pro MFP M127 Series: Handle The Essentials With One Affordable, Networked MFPAndrew MarshallNo ratings yet

- System Diagnostics - S7-400 CPU DiagnosticsDocument2 pagesSystem Diagnostics - S7-400 CPU DiagnosticsЮрий КазимиркоNo ratings yet

- Quiz Questions on Internet ConceptsDocument3 pagesQuiz Questions on Internet Conceptsjohn100% (1)

- DC G240 eDocument14 pagesDC G240 eHany Mohamed100% (11)

- CT DatasheetDocument5 pagesCT DatasheetGold JoshuaNo ratings yet

- Nasscom Member List-2017Document25 pagesNasscom Member List-2017Rohit AggarwalNo ratings yet

- 90852A+ Aoyue Hot Air Station en PDFDocument10 pages90852A+ Aoyue Hot Air Station en PDFMatthieuNo ratings yet

- Adcmxl1021-1 - Sensor Analogic 1 Eixos FFT - Ideia de Fazer Do Acell Analog Um Digital Usando Adxl1002Document42 pagesAdcmxl1021-1 - Sensor Analogic 1 Eixos FFT - Ideia de Fazer Do Acell Analog Um Digital Usando Adxl1002FabioDangerNo ratings yet

- Engine Controls/Fuel - I.8L (LUW, LWE) 9-591Document1 pageEngine Controls/Fuel - I.8L (LUW, LWE) 9-591flash_24014910No ratings yet

- 2021 & Beyond: Prevention Through DesignDocument9 pages2021 & Beyond: Prevention Through DesignAgung Ariefat LubisNo ratings yet

- Guidelines PHD Admission 20153Document8 pagesGuidelines PHD Admission 20153manish_chaturvedi_6No ratings yet

- Online Library Management System for W/ro Siheen Polytechnic CollegeDocument2 pagesOnline Library Management System for W/ro Siheen Polytechnic CollegeMekuriaw MezgebuNo ratings yet

- Syg5283thb 380c-8 Camion ConcretoDocument378 pagesSyg5283thb 380c-8 Camion ConcretoAlex MazaNo ratings yet

- Tan Emily Sooy Ee 2012 PDFDocument323 pagesTan Emily Sooy Ee 2012 PDFMuxudiinNo ratings yet

- Vivado Basic TutorialDocument30 pagesVivado Basic Tutorial8885684828No ratings yet

- Duplicate Cleaner Pro log analysisDocument3 pagesDuplicate Cleaner Pro log analysisNestor RodriguezNo ratings yet

- Steam - Receipt For Key SubscriptionDocument1 pageSteam - Receipt For Key SubscriptionLuis Felipe BuenoNo ratings yet

- TriBand Trisector - SSC-760220020 - 2.2m AntennaDocument7 pagesTriBand Trisector - SSC-760220020 - 2.2m AntennaborisNo ratings yet