Myanmar - Open Letter To Mark Zuckerberg

Uploaded by The Guardian

5 April 2018

Yangon, Myanmar

Dear Mark,

We listened to your Vox interview with great interest and were glad to hear of your personal concern and engagement with the situation in Myanmar.

As representatives of Myanmar civil society organizations and the people who raised the Facebook Messenger threat to your team’s attention, we were surprised to hear you use this case to praise the effectiveness of your ‘systems’ in the context of Myanmar. From where we stand, this case exemplifies the very opposite of effective moderation: it reveals an over-reliance on third parties, a lack of a proper mechanism for emergency escalation, a reticence to engage local stakeholders around systemic solutions and a lack of transparency.

Far from being an isolated incident, this case further epitomizes the kind of issues that have been rife on Facebook in Myanmar for more than four years now and the inadequate response of the Facebook team. It is therefore instructive to examine this Facebook Messenger incident in more detail, particularly given your personal engagement with the case.

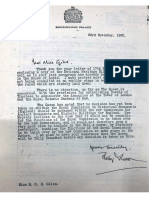

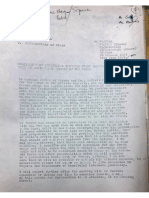

The messages (pictured and translated below) were clear examples of your tools being used to incite real harm. Far from being stopped, they spread in an unprecedented way, reaching country-wide and causing widespread fear and at least three violent incidents in the process. The fact that there was no bloodshed is testament to our community’s resilience and to the wonderful work of peacebuilding and interfaith organisations. This resilience, however, is eroding daily as our community continues to be exposed to virulent hate speech and vicious rumours, which Facebook is still not adequately addressing.

Over-reliance on third parties

In your interview, you refer to your detection ‘systems’. We believe your system, in this case, was us - and we were far from systematic. We identified the messages and escalated them to your team via email on Saturday the 9th September, Myanmar time. By then, the messages had already been circulating widely for three days.

The Messenger platform (at least in Myanmar) does not provide a reporting function, which would have enabled concerned individuals to flag the messages to you. Though these dangerous messages were deliberately pushed to large numbers of people - many people who received them say they did not personally know the sender - your team did not seem to have picked up on the pattern. For all of your data, it would seem that it was our personal connection with senior members of your team which led to the issue being dealt with.

Lack of a proper mechanism for emergency escalation

Though we are grateful to hear that the case was brought to your personal attention, Mark, it is hard for us to regard this escalation as successful. It took over four days from when the messages started circulating for the escalation to reach you, with thousands, if not hundreds of thousands, being reached in the meantime.

This is not quick enough and highlights inherent flaws in your ability to respond to emergencies. Your reporting tools, for one, do not provide options for users to flag content as priority. As far as we know, there are no Burmese-speaking Facebook staff to whom Myanmar monitors can directly raise such cases. We were lucky to have a confident English speaker who was connected enough to escalate the issue. This is not a viable or sustainable system, and is one which will inherently be subject to delays.

Reticence to engage local stakeholders around systemic solutions

These are not new problems. As well as regular contact and escalations to your team, we have held formal briefings on these challenges during Facebook visits to Myanmar. By and large though, our engagement has been limited to your policy team. We are facing major challenges which would warrant the involvement of your product, engineering and data teams. So far, these direct engagements have not taken place and our offers to input into the development of systemic solutions have gone unanswered.

Presumably your data team should be able to trace the original sources of flagged messages and posts and identify repeat offenders, using these insights to inform your moderation and sanctioning. Your engineering team should be able to detect duplicate posts and ensure that identified hate content gets comprehensively removed from your platform. We’ve not seen this materialise yet.

Lack of transparency

Seven months after the case mentioned, we have yet to hear from Facebook on the details of what happened and what measures your team has taken to better respond to such cases in the future. We are also yet to hear back on many of the issues we raised and suggestions we provided in a subsequent briefing in December.

The risk of Facebook content sparking open violence is arguably nowhere higher right now than in Myanmar. We appreciate that progress is an iterative process and that it will require more than this letter for Facebook to fix these issues.

If you are serious about making Facebook better, however, we urge you to invest more into moderation - particularly in countries, such as Myanmar, where Facebook has rapidly come to play a dominant role in how information is accessed and communicated; we urge you to be more intent and proactive in engaging local groups, such as ours, who are invested in finding solutions; and - perhaps most importantly - we urge you to be more transparent about your processes, progress and the performance of your interventions, so as to enable us to work more effectively together.

We hope this will be the start of a solution-driven conversation and remain committed to working with you and your team towards making Facebook a better and safer place for Myanmar (and other) users.

With our best regards,
