Web Caching

based on:

Web Caching, Geoff Huston

Web Caching and Zipf-like Distributions: Evidence and Implications, L. Breslau, P. Cao, L. Fan, G. Phillips, S. Shenker

On the scale and performance of cooperative Web proxy caching, A. Wolman, G. Voelker, N. Sharma, N. Cardwell, A. Karlin, H. Levy

Web Caching Page 1 of 24

Hypertext Transfer Protocol (HTTP)

based on an end-to-end model (the network is a passive element)

Pros:

The server can modify the content;

The server can track all content requests;

The server can differentiate between different clients;

The server can authenticate the client.

Cons:

Popular servers are under continuous high stress

high number of simultaneously opened connections

high load on the surrounding network

The network may not be efficiently utilized

Why Caching in the Network?

reduce latency by avoiding slow links between client and origin server

low bandwidth links

congested links

reduce traffic on links

spread load of overloaded origin servers to caches

Caching points

content provider (server caching)

ISP

more than 70% of ISP traffic is Web based;

repeated requests account for about 50% of the traffic;

ISPs are interested in reducing transmission costs;

provide high quality of service to the end users.

end client

Caching Challenges

Cache consistency

identify if an object is stale or fresh

Dynamic content

caches should not store outputs of CGI scripts (Active Cache?)

Hit counts and personalization

Privacy-concerned users

how do you get a user to point to a cache?

Access control

legal and security restrictions

Large multimedia files

Web cache access pattern - (common thoughts)

the distribution of page requests follows a Zipf-like distribution: the relative probability of a request for the i-th most popular page is proportional to $1/i^{\alpha}$, with $\alpha \le 1$

for an infinite sized cache, the hit-ratio grows in a log-like fashion as a function of the client population and of the number of requests

the hit-ratio of a web cache grows in a log-like fashion as a function of cache size

the probability that a document will be referenced k requests after it was last referenced is roughly proportional to $1/k$ (temporal locality)

Web cache access pattern - (P. Cao Observations)

the distribution of web requests follows a Zipf-like distribution with $\alpha$ between 0.64 and 0.83 (fig. 1)

the 10/90 rule does not apply to web accesses (70% of accesses go to about 25%-40% of the documents) (fig. 2)

low statistical correlation between the frequency with which a document is accessed and its size (fig. 3)

low statistical correlation between a document's access frequency and its average modifications per request.

the distribution of requests to servers does not follow a Zipf law; there is no single server contributing to the most popular pages (fig. 5)

Implications of Zipf-like behavior

Model:

A cache that receives a stream of requests

N = total number of pages in the universe

$P_N(i)$ = probability that, given the arrival of a page request, it is made for page i ($i = 1, \dots, N$)

Pages ranked in order of their popularity, page i is the i-th most popular

Zipf-like distribution for the arrivals:

$$P_N(i) = \frac{\Omega}{i^{\alpha}}, \qquad \Omega = \left( \sum_{i=1}^{N} \frac{1}{i^{\alpha}} \right)^{-1}$$

no pages are invalidated in the cache.
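
The model on this slide can be tried out numerically. The following is a minimal sketch, assuming the probability of the i-th most popular page is 1/i^alpha normalised over all N pages; the function name and the sample values (1000 pages, alpha = 0.8) are illustrative assumptions, not taken from the slides.

```python
def zipf_probabilities(n_pages: int, alpha: float) -> list[float]:
    """Return [P_N(1), ..., P_N(n_pages)] for a Zipf-like distribution."""
    # Unnormalised weight of the i-th most popular page is 1 / i**alpha.
    weights = [1.0 / (i ** alpha) for i in range(1, n_pages + 1)]
    # Normalising constant so that the probabilities sum to 1.
    omega = 1.0 / sum(weights)
    return [omega * w for w in weights]


# Illustrative values only: 1000 pages, alpha = 0.8.
probs = zipf_probabilities(n_pages=1000, alpha=0.8)
print(f"sum of probabilities: {sum(probs):.6f}")
print(f"P(1) / P(10) = {probs[0] / probs[9]:.3f}")
```

The ratio between two ranks depends only on alpha, which is why a smaller alpha flattens the popularity curve and spreads requests over more pages.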

Implications of Zipf-like behavior

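infinite cache, finite request stream:

$$H(R) \approx \begin{cases} \Omega \log(\Omega R), & \alpha = 1 \\ \dfrac{\Omega}{1-\alpha}\,(\Omega R)^{\frac{1}{\alpha}-1}, & 0 < \alpha < 1 \end{cases}$$

finite cache, infinite request stream:

$$H(C) \approx \begin{cases} \Omega \log C, & \alpha = 1 \\ \dfrac{\Omega}{1-\alpha}\,C^{1-\alpha}, & 0 < \alpha < 1 \end{cases}$$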

page request interarrival time:

$$d(k) \approx \frac{1}{k \log N} \left[ \left( 1 - \frac{1}{N \log N} \right)^{k} - \left( 1 - \frac{1}{\log N} \right)^{k} \right]$$

H(R) = hit ratio

d(k) = probability that the next request for page i comes after k-1 requests for pages other than i
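
The finite-cache case can be checked by direct summation. This is a rough sketch under the assumption that, with an infinite request stream and no invalidation, the cache ends up holding exactly the C most popular pages, so its hit ratio is the sum of their request probabilities. The function name and the sample values are illustrative assumptions.

```python
def finite_cache_hit_ratio(cache_size: int, n_pages: int, alpha: float) -> float:
    """Hit ratio of a cache that always holds the cache_size most popular pages."""
    weights = [1.0 / (i ** alpha) for i in range(1, n_pages + 1)]
    omega = 1.0 / sum(weights)  # normalising constant of the Zipf-like distribution
    # Probability mass of the cache_size most popular pages.
    return omega * sum(weights[:cache_size])


# Illustrative values only: a universe of 100000 pages with alpha = 1.
n_pages, alpha = 100_000, 1.0
for cache_size in (100, 1_000, 10_000):
    h = finite_cache_hit_ratio(cache_size, n_pages, alpha)
    print(f"C = {cache_size:>6}: H(C) = {h:.3f}")
```

For alpha = 1, each tenfold increase in cache size adds roughly the same amount to the hit ratio, which is the log-like growth described on the slide.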

Cache Replacement Algorithms

(fig. 9)

GD-Size:

performs best for small sizes of cache.

Perfect-LFU

performs comparably with GD-Size in hit-ratio and much better in byte hit-ratio for large cache sizes.

drawback: increased complexity

In-Cache-LFU

performs the worst
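
To make the comparison more concrete, here is a small sketch of GD-Size (GreedyDual-Size) as it is commonly described: every cached object carries a priority H = L + cost/size, the object with the lowest H is evicted, and L is raised to the evicted priority. The class and method names are hypothetical, the cost is fixed at 1 by default (which favours hit ratio), and the linear scan for the victim is a simplification; this is not code from the cited papers.

```python
class GDSizeCache:
    """Sketch of GreedyDual-Size replacement with a byte capacity."""

    def __init__(self, capacity_bytes: int):
        self.capacity = capacity_bytes
        self.used = 0
        self.inflation = 0.0   # the running value L
        self.entries = {}      # url -> (priority H, size in bytes)

    def access(self, url: str, size: int, cost: float = 1.0) -> bool:
        """Record a request; return True on a hit and False on a miss."""
        if url in self.entries:
            _, stored_size = self.entries[url]
            # A hit restores the object's priority relative to the current L.
            self.entries[url] = (self.inflation + cost / stored_size, stored_size)
            return True
        if size > self.capacity:
            return False  # the object can never fit, so it is not cached
        while self.used + size > self.capacity:
            # Evict the entry with the lowest priority and raise L to it.
            victim = min(self.entries, key=lambda u: self.entries[u][0])
            priority, victim_size = self.entries.pop(victim)
            self.inflation = priority
            self.used -= victim_size
        self.entries[url] = (self.inflation + cost / size, size)
        self.used += size
        return False
```

With cost fixed at 1, large objects get low priorities and are evicted first, which is consistent with GD-Size doing well on hit ratio for small caches while giving up byte hit ratio.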

Web document sharing and proxy caching

What is the best performance one could achieve with perfect cooperative caching?

For what range of client populations can cooperative caching work effectively?

Does the way in which clients are assigned to caches matter?

What cache hit rates are necessary to achieve worthwhile decreases in document access latency?

For what range of client populations can cooperative caching work effectively?

[Figure: Request Hit Rate (%) vs. Population (0 to 30000); curves: Ideal (UW), Cacheable (UW), Ideal (MS), Cacheable (MS)]

for smaller populations, hit rate increases rapidly with population (cooperative caching can be used effectively)

for larger populations, cooperative caching is unlikely to bring benefits

Conclusion:

use cooperative caching to adapt to proxy assignments made for political or geographical reasons.

Object Latency vs. Population

[Figure: Object Latency (ms) vs. Population (0 to 20000); curves: Mean and Median for No Cache, Cacheable, Ideal]

Latency stays unchanged when population increases

Caching will have little effect on mean and median latency beyond very small client populations (?!)

Byte Hit Rate vs. Population

[Figure: Byte Hit Rate (%) vs. Population (0 to 20000); curves: Ideal, Cacheable]

same knee at about 2500 clients

shared documents are smaller

Bandwidth vs. population

[Figure: Mean Bandwidth (Mbits/s) vs. Population (0 to 20000); curves: No Cache, Cacheable, Ideal]

while caching reduces bandwidth consumption, there is no benefit to increased client population.

Proxies and organizations

[Figure, two panels: Hit Rate (%) for the 15 Largest Organizations (x-axis labels 978, 735, 480, 425, 392, 337, 251, 246, 239, 228, 222, 219, 213, 200, 192). Panel 1 series: Ideal Cooperative, Ideal Local, Cacheable Cooperative, Cacheable Local. Panel 2 series: UW, Random]

there is some locality in organizational membership, but the impact is not significant

Impact of larger population size

[Figure: Hit Rate (%), Cooperative vs. Local, for UW Ideal, UW Cacheable, MS Ideal, MS Cacheable]

unpopular documents are universally unpopular => a miss in one large population group is unlikely to be a hit in another group.

Performance of large scale proxy caching - model

N = clients in population

n = total number of documents (approx. 3.2 billion)

$p_i$ = fraction of all requests that are for the i-th most popular document ($p_i \propto 1/i^{\alpha}$, $\alpha = 0.8$)

exponential distribution of the requests done by clients with parameter $\lambda N$, where $\lambda$ = 590 reqs/day = average client request rate

exponential distribution of time for document change, with parameter $\mu$ (unpopular documents $\mu_u$ = 1/186 days, popular documents $\mu_p$ = 1/14 days)

$p_c$ = 0.6 = probability that the documents are cacheable

E(S) = 7.7KB = avg. document size

E(L) = 1.9 sec = last-byte latency to server

Hit rate, latency and bandwidth

[Figure: Cacheable Hit Rate (%) vs. Population (log scale, 10^2 to 10^8); curves: Slow (14 days, 186 days), Mid Slow (1 day, 186 days), Mid Fast (1 hour, 85 days), Fast (5 mins, 85 days)]

Hit rate = f(Population) has three areas:

1. the request rate is too low to dominate the rate of change for unpopular documents

2. marks a significant increase in the hit rate of the unpopular documents

3. the request rate is high enough to cope with the document modifications

Latency: $lat_{req} = (1 - H_N) \cdot E(L) + H_N \cdot lat_{hit}$

Bandwidth: $B_N = H_N \cdot E(S)$
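
The latency and bandwidth expressions on this slide are easy to evaluate by hand or in code. The sketch below assumes they read as a hit-rate-weighted average of miss latency E(L) and hit latency, and as hit rate times mean document size (taken here to mean the bytes served from the cache per request). The function names, the 50% hit rate and the 0.1 s hit latency are hypothetical; E(L) = 1.9 sec and E(S) = 7.7KB come from the model slide.

```python
def mean_request_latency(hit_rate: float, miss_latency_s: float, hit_latency_s: float) -> float:
    """Expected latency per request: misses pay E(L), hits pay the cache latency."""
    return (1.0 - hit_rate) * miss_latency_s + hit_rate * hit_latency_s


def cached_kb_per_request(hit_rate: float, mean_doc_size_kb: float) -> float:
    """Average number of KB per request that are served by the cache."""
    return hit_rate * mean_doc_size_kb


# E(L) and E(S) from the model; hit rate and hit latency are made-up inputs.
latency = mean_request_latency(hit_rate=0.5, miss_latency_s=1.9, hit_latency_s=0.1)
saved = cached_kb_per_request(hit_rate=0.5, mean_doc_size_kb=7.7)
print(f"mean latency: {latency:.2f} s, cached per request: {saved:.2f} KB")
```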

Document rate of change

[Figure, two panels: Cacheable Hit Rate (%) vs. Change Interval (log scale, 1 min to 180 days); curves: mu_p, mu_u; marked points A, B, C]

Proxy cache hit rate is very sensitive to the change rates of both popular and unpopular documents; the time scales at which the hit rate is sensitive decrease as the size of the population increases.

Comparing cooperative caching schemes

Three types of cache schemes: hierarchical, hash-based and summary caches;

Conclusions:

the achievable latency benefits are already obtained with city-level cooperative caching;

a flat cooperative scheme is no longer effective once the served area grows beyond a certain limit

in a hierarchical caching scheme the higher levels might be a bottleneck in serving the requests.

Hierarchical Caching (Squid):

a hierarchy of k levels

a client request is forwarded up in the hierarchy until a cache hit occurs.

a copy of the requested object is then stored in all caches of the request path
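
The lookup described on this slide can be sketched in a few lines. This is an illustration only, not Squid's implementation: each cache level is modelled as a plain dictionary, and the function name and the `fetch_from_origin` callback are hypothetical.

```python
def hierarchical_lookup(url, caches, fetch_from_origin):
    """Look up url in a k-level hierarchy.

    caches is ordered from the level closest to the client up to the top level.
    Returns (body, level), where level == len(caches) means the origin server.
    """
    for level, cache in enumerate(caches):
        if url in cache:
            body, hit_level = cache[url], level
            break
    else:
        # No level had the object, so the request reaches the origin server.
        body, hit_level = fetch_from_origin(url), len(caches)
    # Store a copy in every cache on the request path below the hit.
    for cache in caches[:hit_level]:
        cache[url] = body
    return body, hit_level


levels = [{}, {}, {}]
print(hierarchical_lookup("/index.html", levels, lambda u: "<page " + u + ">"))
print(hierarchical_lookup("/index.html", levels, lambda u: "<page " + u + ">"))
```

The first call travels through all three levels to the origin and leaves a copy at each level; the second is answered by the lowest level.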

Hash-based caching system:

assumes a total of m caches cumulatively serving a population of size N

upon a request for a URL, the client determines the cache in charge and forwards the request to it.

if the cache has the URL, the page is returned to the client; otherwise the request is forwarded to the server
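
One way a client could determine the cache in charge is to hash the URL and take the result modulo m. The sketch below assumes that simple scheme (a real deployment might use a different mapping), models each cache as a dictionary, and additionally assumes the responsible cache keeps a copy after a miss, which the slide does not state. All names are hypothetical.

```python
import hashlib


def cache_for_url(url: str, m: int) -> int:
    """Map a URL to one of m caches with a stable hash (assumed scheme: hash mod m)."""
    digest = hashlib.sha256(url.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % m


def hashed_request(url, caches, fetch_from_origin):
    """Send the request to the cache in charge; return (body, was_hit)."""
    cache = caches[cache_for_url(url, len(caches))]
    if url in cache:
        return cache[url], True
    body = fetch_from_origin(url)   # miss: the request goes on to the server
    cache[url] = body               # assumption: the cache in charge stores the reply
    return body, False
```

Because every client computes the same mapping, each URL lives in at most one cache, so no storage is spent on duplicates across the m caches.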

Directory based system (Summary cache)

a total of m caches cumulatively serving a population of size N

the population is partitioned into subpopulations of size N/m and each subpopulation is served by a single cache

each cache maintains a directory that summarizes the documents stored at each of the other caches in the system

when a client request cannot be served by a local cache, it is randomly forwarded to a cache which is registered to have the document. If no cache has the document, the request is forwarded to the original server
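
The request flow on this slide can be sketched as follows. The directory is modelled as an exact set of URLs per peer, which is a simplification (a practical summary would be a compact, possibly inexact structure). The class, the `advertise_to` update step and the choice to keep a local copy after a remote hit are hypothetical additions for illustration.

```python
import random


class CacheNode:
    """One proxy cache plus its directory of what the other caches hold."""

    def __init__(self, name):
        self.name = name
        self.store = {}       # url -> document body
        self.directory = {}   # peer name -> set of URLs that peer advertised

    def advertise_to(self, peers):
        """Push a summary of this cache's contents to every peer (assumed update step)."""
        for peer in peers:
            peer.directory[self.name] = set(self.store)


def handle_request(url, local, peers, fetch_from_origin, rng=random):
    """Serve url for a client of `local`; return (body, name of the source)."""
    if url in local.store:
        return local.store[url], local.name
    # Peers that the local directory registers as holding the document.
    candidates = [p for p in peers if url in local.directory.get(p.name, ())]
    if candidates:
        peer = rng.choice(candidates)        # forward to a random registered cache
        if url in peer.store:                # the directory may be out of date
            local.store[url] = peer.store[url]
            return local.store[url], peer.name
    body = fetch_from_origin(url)            # no cache has it: go to the origin server
    local.store[url] = body
    return body, "origin"
```

A stale directory entry costs one wasted forward before falling back to the origin server, which is the price paid for not querying every peer on each miss.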

Web Caching Page 24 of 24

You might also like

- Caching Arch TonDocument15 pagesCaching Arch TonAsk Bulls BearNo ratings yet

- G7 Amazon DynamoDBDocument22 pagesG7 Amazon DynamoDBRAGUPATHI MNo ratings yet

- Keynote: The Disrupting Effects of SAP HANA and In-Memory DatabasesDocument35 pagesKeynote: The Disrupting Effects of SAP HANA and In-Memory DatabasesVENKATESHNo ratings yet

- Cloudflare CDN Whitepaper 19Q4Document9 pagesCloudflare CDN Whitepaper 19Q4Domingos BernardoNo ratings yet

- Taylor MGMT Science10Document23 pagesTaylor MGMT Science10Yen Chin Mok100% (4)

- RDS - Deep Dive On Amazon Relational Database Service (RDS)Document34 pagesRDS - Deep Dive On Amazon Relational Database Service (RDS)Stephen EfangeNo ratings yet

- Speed Up Your Internet Access Using Squid's Refresh PatternsDocument5 pagesSpeed Up Your Internet Access Using Squid's Refresh PatternsSamuel AlloteyNo ratings yet

- General Template - V7000 OC-Y5LFXNJDocument5 pagesGeneral Template - V7000 OC-Y5LFXNJLuis RojasNo ratings yet

- IBM FlashSystem Sales FoundationDocument4 pagesIBM FlashSystem Sales FoundationlimNo ratings yet

- Mathematical Modelling Approach in Web Cache Scheme: Hapiza@tmsk - Uitm.edu - My Norlaila@tmsk - Uitm.edu - MyDocument5 pagesMathematical Modelling Approach in Web Cache Scheme: Hapiza@tmsk - Uitm.edu - My Norlaila@tmsk - Uitm.edu - Mytanwir01No ratings yet

- Cluster Based Content Caching Driven by Popularity PredictionDocument10 pagesCluster Based Content Caching Driven by Popularity PredictionDiego DutraNo ratings yet

- Web App Lecture 5 Responsive DesignDocument40 pagesWeb App Lecture 5 Responsive DesignijasNo ratings yet

- Lec 05Document10 pagesLec 05youmnaxxeidNo ratings yet

- Performance Report For:: Executive SummaryDocument23 pagesPerformance Report For:: Executive SummaryJerome Rivera CastroNo ratings yet

- VSP Tech Presentation CustomerDocument26 pagesVSP Tech Presentation CustomerWeimin ChenNo ratings yet

- Building Modern Applications On AWSDocument66 pagesBuilding Modern Applications On AWSVivek GuptaNo ratings yet

- Slow Drain Detection in CiscoDocument61 pagesSlow Drain Detection in Ciscosalimbsc4203No ratings yet

- Web Caching Easy UnderstandDocument12 pagesWeb Caching Easy Understandapi-3707780No ratings yet

- Caching TechniquesDocument2 pagesCaching TechniquesSe SathyaNo ratings yet

- GTmetrix Report WWW - Girlsaskguys.com 20180514T233804 WY4pDxRZ FullDocument59 pagesGTmetrix Report WWW - Girlsaskguys.com 20180514T233804 WY4pDxRZ FullAdiumvirNo ratings yet

- Locality Characteristics of Web Streams Revisited (SPECTS 2005)Document28 pagesLocality Characteristics of Web Streams Revisited (SPECTS 2005)AsicsNewNo ratings yet

- Cohesity With Cisco UCS v1.3Document104 pagesCohesity With Cisco UCS v1.3Jesper Würfel100% (1)

- Drupalcon Munich 2012 - 1Document30 pagesDrupalcon Munich 2012 - 1roberto guzmanNo ratings yet

- Web Caching AlgDocument4 pagesWeb Caching AlgramachandraNo ratings yet

- CacheMARA Cluster Q2 2018 0Document2 pagesCacheMARA Cluster Q2 2018 0Igor KutsienyoNo ratings yet

- CachingDocument12 pagesCachingrohanparmar1162No ratings yet

- 3q01 GraDocument5 pages3q01 GragrisdipNo ratings yet

- System Design Template by TopcatDocument2 pagesSystem Design Template by TopcatSachin KushwahaNo ratings yet

- Using TLB Speculation To Overcome Page Splintering in Virtual MachinesDocument12 pagesUsing TLB Speculation To Overcome Page Splintering in Virtual MachinesMegan PavaroneNo ratings yet

- Symantec FileStore N8300 Cheat SheetDocument2 pagesSymantec FileStore N8300 Cheat SheetaryamahzarNo ratings yet

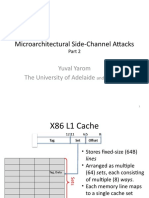

- Microarchitectural Side-Channel Attacks Part 2: Cache AttacksDocument36 pagesMicroarchitectural Side-Channel Attacks Part 2: Cache AttacksKafoorNo ratings yet

- Php_Final_materialDocument68 pagesPhp_Final_materialsingal1006No ratings yet

- Cost-Aware Proxy Caching Algorithm Outperforms LRUDocument15 pagesCost-Aware Proxy Caching Algorithm Outperforms LRUsumitrajput786No ratings yet

- Bandwidth Skimming: A Technique For Cost-Effective Video-on-DemandDocument10 pagesBandwidth Skimming: A Technique For Cost-Effective Video-on-DemandrayyiNo ratings yet

- MinIO Vs HDFS MapReduce Performance ComparisonDocument12 pagesMinIO Vs HDFS MapReduce Performance Comparisonkinan_kazuki104100% (1)

- Aveva - Wonderware - Historian PresentationDocument68 pagesAveva - Wonderware - Historian PresentationefNo ratings yet

- Proxy For: Multimedia Caching Mechanism Quality Adaptive Streaming Applications in The InternetDocument10 pagesProxy For: Multimedia Caching Mechanism Quality Adaptive Streaming Applications in The InternetDeepak SukhijaNo ratings yet

- Compute Caches: NtroductionDocument12 pagesCompute Caches: NtroductionGoblenNo ratings yet

- QConLondon2014 AdrianCockcroft MigratingtoMicroservicesDocument38 pagesQConLondon2014 AdrianCockcroft MigratingtoMicroservicesMNo ratings yet

- Cisco Slow Drain PDFDocument61 pagesCisco Slow Drain PDFAbhishekNo ratings yet

- Heavy Keeper An Accurate Algorithm For Finding Top-K Elephant Flows.Document3 pagesHeavy Keeper An Accurate Algorithm For Finding Top-K Elephant Flows.siva saiNo ratings yet

- Azure Subscription and Service Limits, Quotas, and ConstraintsDocument54 pagesAzure Subscription and Service Limits, Quotas, and ConstraintsSorinNo ratings yet

- USX Deep DiveDocument23 pagesUSX Deep Diveehtisham1No ratings yet

- Design of Parallel Algorithm'S: Faculty Guide: Group MembersDocument49 pagesDesign of Parallel Algorithm'S: Faculty Guide: Group MembersShweta MaddhesiyaNo ratings yet

- GTmetrix Report WWW - Girlsaskguys.com 20180514T234603 WVC1wC2M FullDocument85 pagesGTmetrix Report WWW - Girlsaskguys.com 20180514T234603 WVC1wC2M FullAdiumvirNo ratings yet

- Getting Started With Real-Time Analytics With Kafka and Spark in Microsoft Azure - Joe Plumb.Document44 pagesGetting Started With Real-Time Analytics With Kafka and Spark in Microsoft Azure - Joe Plumb.Samudra BandaranayakeNo ratings yet

- Hitachi-HDS Technology UpdateDocument25 pagesHitachi-HDS Technology Updatekiennguyenh156No ratings yet

- Handle Large Messages in Apache KafkaDocument59 pagesHandle Large Messages in Apache KafkaBùi Văn KiênNo ratings yet

- Azure SQL Database & SQL DW: Data Platform AirliftDocument46 pagesAzure SQL Database & SQL DW: Data Platform AirliftRajaNo ratings yet

- Performance Checklist 1.3Document13 pagesPerformance Checklist 1.3Slobodan DžakićNo ratings yet

- Guide To Content Delivery NetworksDocument2 pagesGuide To Content Delivery NetworkstonalbadassNo ratings yet

- 1067 Ddos-Protection-Networks DS May2018 PDFDocument14 pages1067 Ddos-Protection-Networks DS May2018 PDFMCTCOLTDNo ratings yet

- GTmetrix Report WWW - Mmo Champion - Com 20180514T234510 3OMhA9aV FullDocument33 pagesGTmetrix Report WWW - Mmo Champion - Com 20180514T234510 3OMhA9aV FullAdiumvirNo ratings yet

- Caching TechniquesDocument4 pagesCaching TechniquesKoneri DamodaramNo ratings yet

- Disaster Recovery BSS Data CenterDocument53 pagesDisaster Recovery BSS Data CenterDeepak BhandariNo ratings yet

- Web Cache ThesisDocument8 pagesWeb Cache Thesisjenniferlordmanchester100% (1)

- HTTP 2.0 - TokyoDocument47 pagesHTTP 2.0 - TokyoDouglas SousaNo ratings yet

- Cassandra at TwitterDocument64 pagesCassandra at TwitterChris GoffinetNo ratings yet

- Berkeley Data Analytics Stack (BDAS) Overview: Ion Stoica UC BerkeleyDocument28 pagesBerkeley Data Analytics Stack (BDAS) Overview: Ion Stoica UC BerkeleysurenNo ratings yet

- Strategic Management and Entrepreneurship: Friends or Foes?Document14 pagesStrategic Management and Entrepreneurship: Friends or Foes?perumak3949No ratings yet

- Adkhar Book PDFDocument158 pagesAdkhar Book PDFshahid852hk100% (1)

- Christ in IslamDocument50 pagesChrist in Islamhttp://www.toursofpeace.com/100% (1)

- Job VacanciesDocument2 pagesJob VacanciesKabir J AbubakarNo ratings yet

- An Intelligent Technique For Controlling Web PrefeDocument8 pagesAn Intelligent Technique For Controlling Web PrefeKabir J AbubakarNo ratings yet

- Hybrid Approach For Improvement of Web PageDocument5 pagesHybrid Approach For Improvement of Web PageKabir J AbubakarNo ratings yet

- Real-Time Detection Tracking and Monitoring of Automatically Discovered Events in Social Media 2Document7 pagesReal-Time Detection Tracking and Monitoring of Automatically Discovered Events in Social Media 2Kabir J AbubakarNo ratings yet

- Web PrefetchingDocument182 pagesWeb PrefetchingKabir J AbubakarNo ratings yet

- RNSTE Management NotesDocument71 pagesRNSTE Management Notesantoshdyade100% (1)

- 2 A Survey of Web CachingDocument27 pages2 A Survey of Web CachingKabir J AbubakarNo ratings yet

- A Study on Web Prefetching TechniquesDocument8 pagesA Study on Web Prefetching TechniquesKabir J AbubakarNo ratings yet

- Development of An Internet of Things ArchitectureDocument153 pagesDevelopment of An Internet of Things ArchitectureKabir J AbubakarNo ratings yet

- High Performance P2P Web CachingDocument21 pagesHigh Performance P2P Web CachingJared Friedman100% (9)

- A semantic web approach for dealing with university coursesDocument166 pagesA semantic web approach for dealing with university coursesKabir J AbubakarNo ratings yet

- Jamb Brochure 2018Document1,032 pagesJamb Brochure 2018Kabir J AbubakarNo ratings yet

- Data Mining of Protein DatabasesDocument71 pagesData Mining of Protein DatabasesKabir J AbubakarNo ratings yet

- 1 A Study On Web Caching Architectures and Performance PDFDocument5 pages1 A Study On Web Caching Architectures and Performance PDFKabir J AbubakarNo ratings yet

- A Comparative Study of Content Management Systems Joomla Drupal and Wordpress PDFDocument8 pagesA Comparative Study of Content Management Systems Joomla Drupal and Wordpress PDFKabir J AbubakarNo ratings yet

- A Novel Approach For Web Pre-Fetching and CachingDocument8 pagesA Novel Approach For Web Pre-Fetching and CachingKabir J AbubakarNo ratings yet

- Performance and Cost Effectiveness of Caching in Mobile 2015Document9 pagesPerformance and Cost Effectiveness of Caching in Mobile 2015Kabir J AbubakarNo ratings yet

- WWW Caching-Trends and TechniquesDocument8 pagesWWW Caching-Trends and TechniquesKabir J AbubakarNo ratings yet

- Web CachingDocument30 pagesWeb CachingKabir J AbubakarNo ratings yet

- Boiko WP UnderstandingContentManagement PDFDocument13 pagesBoiko WP UnderstandingContentManagement PDFKabir J AbubakarNo ratings yet

- 6 Design and Performance Analysis of The Web Caching Algorithm For Performance Improvement of The Response SpeedDocument2 pages6 Design and Performance Analysis of The Web Caching Algorithm For Performance Improvement of The Response SpeedKabir J AbubakarNo ratings yet

- Design and Implementation of an Ordering System Using UML, Formal Specification and JavaDocument11 pagesDesign and Implementation of an Ordering System Using UML, Formal Specification and JavaDexter KamalNo ratings yet

- A Framework For Web Content Management System OperDocument9 pagesA Framework For Web Content Management System OperKabir J AbubakarNo ratings yet

- Computer ArchitectureDocument12 pagesComputer Architectureproxytech100% (1)

- 07+ +Flowchart+and+DFDDocument12 pages07+ +Flowchart+and+DFDChuchu SintayehuNo ratings yet

- Project Report On Rich Internet Application For Automatic College Timetable Generation (24th March 2008)Document112 pagesProject Report On Rich Internet Application For Automatic College Timetable Generation (24th March 2008)Anitha Ganesh100% (1)

- Nework Lectue Note BusDocument30 pagesNework Lectue Note BusHAILE KEBEDENo ratings yet

- Websense-V850 v5000g4 Qs PosterDocument2 pagesWebsense-V850 v5000g4 Qs PosterAnonymous 1zmdB7JNo ratings yet

- Comcast Declares War On TorDocument45 pagesComcast Declares War On TorHarry MoleNo ratings yet

- LTRT-57601 CPE SIP Troubleshooting GuideDocument80 pagesLTRT-57601 CPE SIP Troubleshooting GuideIoana CîlniceanuNo ratings yet

- Install GuideDocument189 pagesInstall GuideAdnan RajkotwalaNo ratings yet

- ActivateDocument7 pagesActivateekocu2121No ratings yet

- UI Performance Analysis - An Approach Using JMeter and JProfilerDocument19 pagesUI Performance Analysis - An Approach Using JMeter and JProfileraustinfru0% (1)

- MSF Analysis PtesDocument39 pagesMSF Analysis PtesJon RossiterNo ratings yet

- Microsoft CAS - Top 20 USe Cases - Sept2019 PDFDocument18 pagesMicrosoft CAS - Top 20 USe Cases - Sept2019 PDFasdrubalmartinsNo ratings yet

- Butterfly - Security Project - 1.0Document125 pagesButterfly - Security Project - 1.0Wilson Ganazhapa100% (1)

- Asterisk SecretDocument46 pagesAsterisk SecretyosuaalvinNo ratings yet

- Epscpe100 QSG Gfk-3012cDocument26 pagesEpscpe100 QSG Gfk-3012cJulio Cesar Perez NavarroNo ratings yet

- Oracle® Business Intelligence Publisher: Release Notes Release 10.1.3.4.2Document42 pagesOracle® Business Intelligence Publisher: Release Notes Release 10.1.3.4.2Terry UretaNo ratings yet

- CVDocument4,270 pagesCVAryan Nhemaphuki100% (1)

- WIPC302 WIPC302 WIPC302 WIPC302: Wireless N IP CameraDocument51 pagesWIPC302 WIPC302 WIPC302 WIPC302: Wireless N IP Camerafb888121No ratings yet

- Core Impact User Guide 18.2Document434 pagesCore Impact User Guide 18.2Naveen KumarNo ratings yet

- 21.4.7 Lab - Certificate Authority StoresDocument7 pages21.4.7 Lab - Certificate Authority StoresLISS MADELEN TRAVEZ ANGUETANo ratings yet

- Linux Admin Course DetailsDocument3 pagesLinux Admin Course Detailsrajashekar addepalliNo ratings yet

- Summary: Freelance Senior Analyst-Developer and Project ManagerDocument23 pagesSummary: Freelance Senior Analyst-Developer and Project ManagerashaNo ratings yet

- Using FireBase, FireDac, DataSnap, Rest, Wifi, and FireMonkeyDocument54 pagesUsing FireBase, FireDac, DataSnap, Rest, Wifi, and FireMonkeyMarceloMoreiraCunhaNo ratings yet

- Zimbra AdminDocument208 pagesZimbra AdminVarun AkashNo ratings yet

- Sophos Web Appliance: Advanced Web Protection Made SimpleDocument2 pagesSophos Web Appliance: Advanced Web Protection Made SimpleEmile AnatohNo ratings yet

- MDN 1312DGDocument86 pagesMDN 1312DGLuis Enrique Carmona GutierrezNo ratings yet

- All You Need To Know About Bluecoat ProxyDocument107 pagesAll You Need To Know About Bluecoat ProxyJennifer Nieves100% (2)

- 3GPP EPS AAA InterfacesDocument136 pages3GPP EPS AAA Interfacesmail2smkNo ratings yet

- Squid Configuration InstallationDocument5 pagesSquid Configuration InstallationHamzaNo ratings yet

- Routerbricks: Scaling Software Routers With Modern ServersDocument51 pagesRouterbricks: Scaling Software Routers With Modern ServersCristi TohaneanNo ratings yet

- Dcm4chee Oviyam2 and Weasis Deployed in Windows Environment - Pea Ip AgentDocument23 pagesDcm4chee Oviyam2 and Weasis Deployed in Windows Environment - Pea Ip AgentMANUEL ARMANDO LOPEZ PEREZNo ratings yet

- Reverse Engineering Android App to Find Security VulnerabilitiesDocument7 pagesReverse Engineering Android App to Find Security VulnerabilitiesMinh Nhat TranNo ratings yet

- 3.1-1 Introduction To Server Computing SASMADocument11 pages3.1-1 Introduction To Server Computing SASMACris VidalNo ratings yet