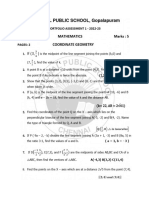

One Days Workshop on BIG DATA WITH HADOOP, HDFS & MAPREDUCE (Hands on Training)

One Day Workshop

on

BIG DATA WITH HADOOP, HDFS & MAPREDUCE

(Hands on Training)

Organized By

Department of Computer Science and Engineering,

K.Ramakrishnan College of Technology, Trichy

4th March 2017

Resource Person

Mr.D.Kesavaraja M.E , MBA, (PhD) , MISTE

Assistant Professor,

Department of Computer Science and Engineering,

Dr.Sivanthi Aditanar College of Engineering

Tiruchendur - 628215

ABOUT THE WORKSHOP

This workshop helps students and faculty gain hands-on experience in solving Big Data problems with Hadoop. Hadoop is an open-source set of tools, built on a Java-based programming framework and distributed under the Apache License. The main objective of Hadoop is to support running applications on Big Data.

TOPICS TO BE DISCUSSED

Hadoop and its Characteristics

Architecture of Hadoop and HDFS

Hadoop Installation

HDFS Demo

Java Programming in HDFS

Map Reduce Demo

Advantages & Limitations of Hadoop

Job Opportunities in Big Data & Hadoop

Presented By D.Kesavaraja www.k7cloud.in 1|Page

Hands on - Live Experiments

E-Resources , Forums and Groups.

Discussion and Clarifications

Knowing is not enough

We must apply

Willing is not enough

We must do

For more details, visit www.k7cloud.in and http://k7training.blogspot.in

*************

Set Up a Single-Node Apache Hadoop Cluster on CentOS 7

Aim :

To learn the procedure for setting up a single-node Hadoop cluster.

Introduction :

Apache Hadoop is an open-source framework built for distributed Big Data storage and processing across computer clusters. The project is based on the following components:

1. Hadoop Common: contains the Java libraries and utilities needed by the other Hadoop modules.

2. HDFS (Hadoop Distributed File System): a Java-based scalable file system distributed across multiple nodes.

3. MapReduce: a YARN-based framework for parallel big data processing.

4. Hadoop YARN: a framework for cluster resource management.

Procedure :

Step 1: Install Java on CentOS 7

1. Before installing Java, log in as root or as a user with root privileges and set your machine hostname with the following command.

# hostnamectl set-hostname master

Set Hostname in CentOS 7

Also, add a new record in hosts file with your own machine FQDN to point to your

system IP Address.

# vi /etc/hosts

Add the below line:

192.168.1.41 master.hadoop.lan

Set Hostname in /etc/hosts File

Replace the above hostname and FQDN records with your own settings.

2. Next, go to the Oracle Java download page and download the latest version of the Java SE Development Kit 8 to your system with the curl command:

# curl -LO -H "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u92-b14/jdk-8u92-linux-x64.rpm

Download Java SE Development Kit 8

3. After the Java binary download finishes, install the package by issuing the below

command:

# rpm -Uvh jdk-8u92-linux-x64.rpm

Install Java in CentOS 7

Step 2: Install Hadoop Framework in CentOS 7

4. Next, create a new user account without root privileges, which we'll use for the Hadoop installation path and working environment. The new account's home directory will be /opt/hadoop.

# useradd -d /opt/hadoop hadoop

# passwd hadoop

5. Next, visit the Apache Hadoop page to get the link for the latest stable version and download the archive to your system.

# curl -O http://apache.javapipe.com/hadoop/common/hadoop-2.7.2/hadoop-2.7.2.tar.gz

Download Hadoop Package

6. Extract the archive, then copy the directory contents to the hadoop account's home path. Also, make sure you change the ownership of the copied files accordingly.

# tar xfz hadoop-2.7.2.tar.gz

# cp -rf hadoop-2.7.2/* /opt/hadoop/

# chown -R hadoop:hadoop /opt/hadoop/

Extract-and Set Permissions on Hadoop

7. Next, log in as the hadoop user and configure the Hadoop and Java environment variables on your system by editing the .bash_profile file.

# su - hadoop

$ vi .bash_profile

Append the following lines at the end of the file:

## JAVA env variables

export JAVA_HOME=/usr/java/default

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/jre/lib:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

## HADOOP env variables

export HADOOP_HOME=/opt/hadoop

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Configure Hadoop and Java Environment Variables

8. Now, initialize the environment variables and check their status by issuing the

below commands:

$ source .bash_profile

$ echo $HADOOP_HOME

$ echo $JAVA_HOME

Initialize Linux Environment Variables

9. Finally, configure SSH key-based authentication for the hadoop account by running the commands below (replace the hostname or FQDN in the ssh-copy-id command accordingly).

Also, leave the passphrase field blank so that you can log in automatically via SSH.

$ ssh-keygen -t rsa

$ ssh-copy-id master.hadoop.lan

Configure SSH Key Based Authentication

Step 3: Configure Hadoop in CentOS 7

10. Now it's time to set up the Hadoop cluster on a single node in pseudo-distributed mode by editing its configuration files.

The Hadoop configuration files are located in $HADOOP_HOME/etc/hadoop/. In this tutorial, $HADOOP_HOME is the hadoop account's home directory (/opt/hadoop/).

Once you're logged in as the hadoop user, you can start editing the following configuration files.

The first file to edit is core-site.xml. This file contains information about the port number used by the Hadoop instance, the memory allocated for the file system, the memory limit for the data store, and the size of the read/write buffers.

$ vi etc/hadoop/core-site.xml

Add the following property between the <configuration> ... </configuration> tags. Use localhost or your machine FQDN for the Hadoop instance.

<property>

<name>fs.defaultFS</name>

<value>hdfs://master.hadoop.lan:9000/</value>

</property>
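The fs.defaultFS value is an ordinary URI: the scheme selects the file system type, and the host and port say where the NameNode listens. The following is a minimal sketch, using only java.net.URI from the JDK and no Hadoop classes, that splits the value into those parts. The class name DefaultFsUri and its describe method are hypothetical helpers introduced for illustration.

```java
import java.net.URI;

// Hypothetical helper, not part of Hadoop: splits an fs.defaultFS value
// into the parts a client needs in order to reach the NameNode.
class DefaultFsUri {
    static String describe(String defaultFs) {
        URI uri = URI.create(defaultFs);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort();
    }

    public static void main(String[] args) {
        // Value taken from the core-site.xml property above.
        System.out.println(describe("hdfs://master.hadoop.lan:9000/"));
    }
}
```

Running the sketch prints scheme=hdfs host=master.hadoop.lan port=9000, which is a quick way to check a value for typos before starting the daemons.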

Configure Hadoop Cluster

11. Next, open and edit the hdfs-site.xml file. This file contains information about the replication factor, the namenode path, and the datanode path on the local file system.

$ vi etc/hadoop/hdfs-site.xml

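Add the following properties between the <configuration> ... </configuration> tags. In this guide we'll use the /opt/volume/ directory to store our Hadoop file system.

Replace the dfs.data.dir and dfs.name.dir values accordingly. In Hadoop 2.x these names are deprecated aliases of dfs.datanode.data.dir and dfs.namenode.name.dir; both forms are accepted.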

<property>

<name>dfs.data.dir</name>

<value>file:///opt/volume/datanode</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///opt/volume/namenode</value>

</property>

Configure Hadoop Storage

12. Because we've specified /opt/volume/ as our Hadoop file system storage, we need to create those two directories (datanode and namenode) from the root account and give ownership of them to the hadoop account by executing the commands below.

$ su root

# mkdir -p /opt/volume/namenode

# mkdir -p /opt/volume/datanode

# chown -R hadoop:hadoop /opt/volume/

# ls -al /opt/ #Verify permissions

# exit #Exit root account to turn back to hadoop user

Configure Hadoop System Storage

13. Next, create the mapred-site.xml file to specify that we are using the YARN MapReduce framework.

$ vi etc/hadoop/mapred-site.xml

Add the following excerpt to mapred-site.xml file:

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

Set Yarn MapReduce Framework

14. Now, edit yarn-site.xml file with the below statements enclosed

between <configuration> ... </configuration> tags:

$ vi etc/hadoop/yarn-site.xml

Add the following excerpt to yarn-site.xml file:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

Add Yarn Configuration

15. Finally, set Java home variable for Hadoop environment by editing the below line

from hadoop-env.sh file.

$ vi etc/hadoop/hadoop-env.sh

Edit the following line to point to your Java system path.

export JAVA_HOME=/usr/java/default/

Set Java Home Variable for Hadoop

16. Also, replace the localhost value from slaves file to point to your machine

hostname set up at the beginning of this tutorial.

$ vi etc/hadoop/slaves

Step 4: Format Hadoop Namenode

17. Once the Hadoop single-node cluster has been set up, it's time to initialize the HDFS file system by formatting the /opt/volume/namenode storage directory with the following command:

$ hdfs namenode -format

Format Hadoop Namenode

Hadoop Namenode Formatting Process

Step 5: Start and Test Hadoop Cluster

18. The Hadoop commands are located in $HADOOP_HOME/sbin directory. In

order to start Hadoop services run the below commands on your console:

$ start-dfs.sh

$ start-yarn.sh

Check the services status with the following command.

$ jps

Start and Test Hadoop Cluster

Alternatively, you can view a list of all open sockets for Apache Hadoop on your

system using the ss command.

$ ss -tul

$ ss -tuln # Numerical output

Check Apache Hadoop Sockets

19. To test the Hadoop file system, create a directory in HDFS and copy a file from the local file system to HDFS storage (insert data into HDFS).

$ hdfs dfs -mkdir /my_storage

$ hdfs dfs -put LICENSE.txt /my_storage

Check Hadoop Filesystem Cluster

To view a file content or list a directory inside HDFS file system issue the below

commands:

$ hdfs dfs -cat /my_storage/LICENSE.txt

$ hdfs dfs -ls /my_storage/

List Hadoop Filesystem Content

Check Hadoop Filesystem Directory

To retrieve data from HDFS to our local file system use the below command:

$ hdfs dfs -get /my_storage/ ./

Copy Hadoop Filesystem Data to Local System

Get the full list of HDFS command options by issuing:

$ hdfs dfs -help

Step 6: Browse Hadoop Services

20. To access Hadoop services from a remote browser, visit the following links (replace the IP address or FQDN accordingly). Also, make sure the ports below are open in your system firewall.

For Hadoop Overview of NameNode service.

http://192.168.1.41:50070

Access Hadoop Services

For Hadoop file system browsing (Directory Browse).

http://192.168.1.41:50070/explorer.html

Hadoop Filesystem Directory Browsing

For Cluster and Apps Information (ResourceManager).

http://192.168.1.41:8088

Hadoop Cluster Applications

For NodeManager Information.

http://192.168.1.41:8042

Hadoop NodeManager
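If one of the pages above does not load, it helps to first check whether the port is reachable at all. The following is a minimal sketch, using only java.net from the JDK, that attempts a TCP connection to each web UI port mentioned in this step (50070, 8088, 8042). The class name PortCheck and the 2-second timeout are assumptions made for illustration, and the default host is the example address used in this guide.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: reports whether a TCP port accepts connections.
class PortCheck {
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Refused, timed out, or host not resolvable.
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass your own host as the first argument; the default is the
        // example address from this guide.
        String host = args.length > 0 ? args[0] : "192.168.1.41";
        int[] ports = {50070, 8088, 8042}; // NameNode, ResourceManager, NodeManager UIs
        for (int port : ports) {
            System.out.println(host + ":" + port + " open=" + isOpen(host, port, 2000));
        }
    }
}
```

A port reported as closed while jps shows the daemon running usually points to the firewall rather than to Hadoop itself.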

Step 7: Manage Hadoop Services

21. To stop all hadoop instances run the below commands:

$ stop-yarn.sh

$ stop-dfs.sh

Stop Hadoop Services

22. To start the Hadoop daemons automatically at boot, log in as root, open the /etc/rc.local file for editing, and add the lines below:

$ su - root

# vi /etc/rc.local

Add this excerpt to the rc.local file.

su - hadoop -c "/opt/hadoop/sbin/start-dfs.sh"

su - hadoop -c "/opt/hadoop/sbin/start-yarn.sh"

exit 0

Enable Hadoop Services at System-Boot

Then, add executable permissions to the rc.local file, and enable, start, and check the service status by issuing the commands below as root:

# chmod +x /etc/rc.d/rc.local

# systemctl enable rc-local

# systemctl start rc-local

# systemctl status rc-local

Enable and Check Hadoop Services

That's it! The next time you reboot your machine, the Hadoop services will start automatically.

Using the FileSystem API to Read and Write Data to HDFS

Reading data from and writing data to the Hadoop Distributed File System (HDFS) can be done in many ways. Let us start by using the FileSystem API to create and write to a file in HDFS, followed by an application that reads a file from HDFS and writes it back to the local file system.

Step 1: Once you have downloaded a test dataset, write an application that reads a file from the local file system and writes its contents to the Hadoop Distributed File System.

import java.io.BufferedInputStream;
import java.io.FileInputStream;
import java.io.InputStream;
import java.io.OutputStream;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class HdfsWriter extends Configured implements Tool {

    public static final String FS_PARAM_NAME = "fs.defaultFS";

    public int run(String[] args) throws Exception {
        if (args.length < 2) {
            System.err.println("HdfsWriter [local input path] [hdfs output path]");
            return 1;
        }
        String localInputPath = args[0];
        Path outputPath = new Path(args[1]);

        Configuration conf = getConf();
        System.out.println("configured filesystem = " + conf.get(FS_PARAM_NAME));
        FileSystem fs = FileSystem.get(conf);

        // Refuse to overwrite an existing HDFS file.
        if (fs.exists(outputPath)) {
            System.err.println("output path exists");
            return 1;
        }

        OutputStream os = fs.create(outputPath);
        InputStream is = new BufferedInputStream(new FileInputStream(localInputPath));
        // This copyBytes overload closes both streams when the copy finishes.
        IOUtils.copyBytes(is, os, conf);
        return 0;
    }

    public static void main(String[] args) throws Exception {
        int returnCode = ToolRunner.run(new HdfsWriter(), args);
        System.exit(returnCode);
    }
}
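The HdfsWriter program above delegates the actual transfer to IOUtils.copyBytes. Conceptually that step is a buffered read/write loop between two streams. The following is a minimal sketch of such a loop using only java.io, so it runs without Hadoop on the classpath. It is an illustration of the idea, not Hadoop's implementation; the class name StreamCopySketch and the buffer size are assumptions.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Hypothetical stand-in for the copy step; uses only java.io, not Hadoop.
class StreamCopySketch {
    // Copies all bytes from in to out through a fixed-size buffer and
    // closes both streams afterwards, even if the copy fails.
    static long copy(InputStream in, OutputStream out, int bufferSize) {
        long total = 0;
        try (InputStream source = in; OutputStream sink = out) {
            byte[] buffer = new byte[bufferSize];
            int read;
            while ((read = source.read(buffer)) != -1) {
                sink.write(buffer, 0, read);
                total += read;
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        byte[] data = "hello hdfs".getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long copied = copy(new ByteArrayInputStream(data), out, 4);
        System.out.println(copied + " bytes copied: "
                + new String(out.toByteArray(), StandardCharsets.UTF_8));
    }
}
```

In the real program the source is a FileInputStream on the local file and the sink is the stream returned by fs.create, but the loop has the same shape.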

Step 2: Export the JAR file and run the code from the terminal to write a sample file to HDFS.

[root@localhost student]# vi HdfsWriter.java

[root@localhost student]# /usr/java/jdk1.8.0_91/bin/javac HdfsWriter.java

[root@localhost student]# /usr/java/jdk1.8.0_91/bin/jar cvfe HdfsWriter.jar HdfsWriter HdfsWriter.class

[root@localhost student]# hadoop jar HdfsWriter.jar a.txt kkk.txt

configured filesystem = hdfs://localhost:9000/

[root@localhost student]# hadoop jar HdfsReader.jar /user/root/kkk.txt kesava.txt

configured filesystem = hdfs://localhost:9000/

Step 3: Verify that the file was written to HDFS and check its contents. The relative path kkk.txt used in Step 2 resolves to the home directory of the user who ran the job, here /user/root.

[root@localhost student]# hadoop fs -cat /user/root/kkk.txt

Step 4: Next, we write an application to read the file we just created in Hadoop

Distributed File System and write its contents back to the local file system.

import java.io.BufferedOutputStream;
import java.io.FileOutputStream;
import java.io.InputStream;
import java.io.OutputStream;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class HdfsReader extends Configured implements Tool {

    public static final String FS_PARAM_NAME = "fs.defaultFS";

    public int run(String[] args) throws Exception {
        if (args.length < 2) {
            System.err.println("HdfsReader [hdfs input path] [local output path]");
            return 1;
        }
        Path inputPath = new Path(args[0]);
        String localOutputPath = args[1];

        Configuration conf = getConf();
        System.out.println("configured filesystem = " + conf.get(FS_PARAM_NAME));
        FileSystem fs = FileSystem.get(conf);

        InputStream is = fs.open(inputPath);
        OutputStream os = new BufferedOutputStream(new FileOutputStream(localOutputPath));
        // This copyBytes overload closes both streams when the copy finishes.
        IOUtils.copyBytes(is, os, conf);
        return 0;
    }

    public static void main(String[] args) throws Exception {
        int returnCode = ToolRunner.run(new HdfsReader(), args);
        System.exit(returnCode);
    }
}

Step 5: Export the JAR file and run the code from the terminal to read the file from HDFS and write it to the local file system.

[root@localhost student]# vi HdfsReader.java

[root@localhost student]# /usr/java/jdk1.8.0_91/bin/javac HdfsReader.java

[root@localhost student]# /usr/java/jdk1.8.0_91/bin/jar cvfe HdfsReader.jar HdfsReader HdfsReader.class

[root@localhost student]# hadoop jar HdfsReader.jar /user/root/kkk.txt sample.txt

configured filesystem = hdfs://localhost:9000/

Step 6: Verify that the file was written back to the local file system.

[root@localhost student]# cat sample.txt

Hadoop - MapReduce

MapReduce is a framework for writing applications that process huge amounts of data in parallel on large clusters of commodity hardware in a reliable manner.

What is MapReduce?

MapReduce is a processing technique and a programming model for distributed computing, implemented in Hadoop in Java. The MapReduce algorithm contains two important tasks, namely Map and Reduce. Map takes a set of data and converts it into another set of data, where individual elements are broken down into tuples (key/value pairs). The Reduce task then takes the output from a map as its input and combines those data tuples into a smaller set of tuples. As the order of the words in the name MapReduce implies, the reduce task is always performed after the map task.

The major advantage of MapReduce is that it is easy to scale data processing over

multiple computing nodes. Under the MapReduce model, the data processing

primitives are called mappers and reducers. Decomposing a data processing

application into mappers and reducers is sometimes nontrivial. But, once we write an

application in the MapReduce form, scaling the application to run over hundreds,

thousands, or even tens of thousands of machines in a cluster is merely a

configuration change. This simple scalability is what has attracted many programmers

to use the MapReduce model.

The Algorithm

• Generally, the MapReduce paradigm is based on sending the computation to where the data resides.

• A MapReduce program executes in three stages, namely the map stage, the shuffle stage, and the reduce stage.

  o Map stage: The mapper's job is to process the input data. Generally the input data is in the form of a file or directory and is stored in the Hadoop file system (HDFS). The input file is passed to the mapper function line by line. The mapper processes the data and creates several small chunks of data.

  o Reduce stage: This stage is the combination of the shuffle stage and the reduce stage. The reducer's job is to process the data that comes from the mapper. After processing, it produces a new set of output, which is stored in HDFS.

• During a MapReduce job, Hadoop sends the map and reduce tasks to the appropriate servers in the cluster.

• The framework manages all the details of data passing, such as issuing tasks, verifying task completion, and copying data around the cluster between the nodes.

• Most of the computing takes place on nodes with the data on local disks, which reduces network traffic.

• After completion of the given tasks, the cluster collects and reduces the data to form an appropriate result and sends it back to the Hadoop server.
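The stages described above can be sketched in a single process, without a cluster. The following is a minimal illustration in plain Java (no Hadoop classes) that counts words: map emits (word, 1) pairs for each line, shuffle groups the values by key, and reduce sums each group. The class name StagesSketch and its method names are hypothetical, and a real Hadoop job distributes these steps across nodes rather than running them in one loop.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Single-process illustration of the three stages; no Hadoop classes involved.
class StagesSketch {
    // Map stage: one input line in, a list of (word, 1) pairs out.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                pairs.add(new SimpleEntry<>(word, 1));
            }
        }
        return pairs;
    }

    // Shuffle stage: group all values that share a key; keys end up sorted.
    static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce stage: combine the values of one key into a single result.
    static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    // Runs map over every line, then shuffle, then reduce per key.
    static Map<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> mapped = new ArrayList<>();
        for (String line : lines) {
            mapped.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(mapped).entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        System.out.println(run(Arrays.asList("big data", "big cluster")));
    }
}
```

For the two sample lines the sketch prints {big=2, cluster=1, data=1}. In Hadoop the grouping done here by shuffle is performed by the framework between the map and reduce tasks.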

Inputs and Outputs (Java Perspective)

The MapReduce framework operates on <key, value> pairs; that is, the framework views the input to the job as a set of <key, value> pairs and produces a set of <key, value> pairs as the output of the job, conceivably of different types.

The key and value classes must be serializable by the framework and hence need to implement the Writable interface. Additionally, the key classes have to implement the WritableComparable interface to facilitate sorting by the framework. Input and output types of a MapReduce job:

(Input) <k1, v1> -> map -> <k2, v2> -> reduce -> <k3, v3> (Output)

          Input             Output

Map       <k1, v1>          list(<k2, v2>)

Reduce    <k2, list(v2)>    list(<k3, v3>)
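To make the serialization and sorting requirement concrete, the following is a minimal sketch of a key type that can write itself to a byte stream, read itself back, and compare itself with another key. It deliberately does not implement Hadoop's real WritableComparable interface, so it compiles without Hadoop; it only mirrors the method names write, readFields, and compareTo. The class YearKey and its roundTrip helper are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical key type that mirrors the shape of a WritableComparable
// without depending on Hadoop.
class YearKey implements Comparable<YearKey> {
    private int year;

    YearKey() { }                       // no-arg constructor, filled by readFields

    YearKey(int year) { this.year = year; }

    int getYear() { return year; }

    // Serialize the fields in a fixed order.
    void write(DataOutput out) throws IOException {
        out.writeInt(year);
    }

    // Deserialize the fields in the same order they were written.
    void readFields(DataInput in) throws IOException {
        year = in.readInt();
    }

    // Ordering used when keys are sorted.
    @Override
    public int compareTo(YearKey other) {
        return Integer.compare(year, other.year);
    }

    // Serializes a key to bytes and reads it back into a fresh instance.
    static YearKey roundTrip(YearKey key) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            key.write(new DataOutputStream(bytes));
            YearKey copy = new YearKey();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        YearKey copy = roundTrip(new YearKey(1985));
        System.out.println(copy.getYear());
        System.out.println(new YearKey(1979).compareTo(copy) < 0);
    }
}
```

The sketch prints 1985 and then true. In a real job the built-in types such as Text and IntWritable already provide this behaviour, so a custom key class is only needed for composite keys.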

Terminology

• PayLoad - Applications implement the Map and the Reduce functions, and form the core of the job.

• Mapper - Maps the input key/value pairs to a set of intermediate key/value pairs.

• NameNode - Node that manages the Hadoop Distributed File System (HDFS).

• DataNode - Node where the data resides before any processing takes place.

• MasterNode - Node where the JobTracker runs and which accepts job requests from clients.

• SlaveNode - Node where the Map and Reduce programs run.

• JobTracker - Schedules jobs and tracks the jobs assigned to the TaskTracker.

• TaskTracker - Tracks the task and reports status to the JobTracker.

• Job - An execution of a Mapper and a Reducer across a dataset.

• Task - An execution of a Mapper or a Reducer on a slice of data.

• Task Attempt - A particular instance of an attempt to execute a task on a SlaveNode.

Example Scenario

Given below is the data regarding the electrical consumption of an organization. It

contains the monthly electrical consumption and the annual average for various

years.

Year  Jan  Feb  Mar  Apr  May  Jun  Jul  Aug  Sep  Oct  Nov  Dec  Avg

1979   23   23    2   43   24   25   26   26   26   26   25   26   25

1980   26   27   28   28   28   30   31   31   31   30   30   30   29

1981   31   32   32   32   33   34   35   36   36   34   34   34   34

1984   39   38   39   39   39   41   42   43   40   39   38   38   40

1985   38   39   39   39   39   41   41   41   00   40   39   39   45

If the above data is given as input, we have to write applications to process it and produce results such as the year of maximum usage, the year of minimum usage, and so on. This is easy for programmers when the number of records is small: they simply write the logic to produce the required output and pass the data to the application.
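For a handful of records, that logic fits in one small single-machine program. The following is a minimal sketch in plain Java that reads rows in the format of the table above, takes the last column as the annual average, and reports the years with the highest and lowest average. The class name UsageExtremes is hypothetical, and the sketch assumes every row has at least a year and one value separated by whitespace.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical single-machine version of the "year of maximum/minimum usage" task.
class UsageExtremes {
    // Returns {yearOfMax, yearOfMin}, judged by the last column (annual average).
    static int[] extremes(List<String> rows) {
        int maxYear = -1;
        int minYear = -1;
        int max = Integer.MIN_VALUE;
        int min = Integer.MAX_VALUE;
        for (String row : rows) {
            String[] cols = row.trim().split("\\s+");
            int year = Integer.parseInt(cols[0]);
            int avg = Integer.parseInt(cols[cols.length - 1]);
            if (avg > max) {
                max = avg;
                maxYear = year;
            }
            if (avg < min) {
                min = avg;
                minYear = year;
            }
        }
        return new int[] {maxYear, minYear};
    }

    public static void main(String[] args) {
        List<String> rows = Arrays.asList(
                "1979 23 23 2 43 24 25 26 26 26 26 25 26 25",
                "1980 26 27 28 28 28 30 31 31 31 30 30 30 29",
                "1981 31 32 32 32 33 34 35 36 36 34 34 34 34",
                "1984 39 38 39 39 39 41 42 43 40 39 38 38 40",
                "1985 38 39 39 39 39 41 41 41 00 40 39 39 45");
        int[] result = extremes(rows);
        System.out.println("max=" + result[0] + " min=" + result[1]);
    }
}
```

On the sample table this prints max=1985 min=1979. The approach works only while all rows fit on one machine.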

But think of the data representing the electrical consumption of all the large-scale industries of a particular state since its formation.

When we write applications to process such bulk data:

• They will take a lot of time to execute.

• There will be heavy network traffic when we move data from the source to the network server, and so on.

To solve these problems, we have the MapReduce framework.

Input Data

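The above data is saved as sample.txt and given as input. The input file looks as shown below. The mapper in the example program splits each line on tab characters, so the columns in the file must be tab-separated.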

1979 23 23 2 43 24 25 26 26 26 26 25 26 25

1980 26 27 28 28 28 30 31 31 31 30 30 30 29

1981 31 32 32 32 33 34 35 36 36 34 34 34 34

1984 39 38 39 39 39 41 42 43 40 39 38 38 40

1985 38 39 39 39 39 41 41 41 00 40 39 39 45

Example Program

Given below is the program that processes the sample data using the MapReduce framework.

package hadoop;

import java.util.*;
import java.io.IOException;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.conf.*;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapred.*;
import org.apache.hadoop.util.*;

public class ProcessUnits
{
    // Mapper class
    public static class E_EMapper extends MapReduceBase implements
        Mapper<LongWritable,   /* Input key type */
               Text,           /* Input value type */
               Text,           /* Output key type */
               IntWritable>    /* Output value type */
    {
        // Map function: emits (year, annual average) for each input line
        public void map(LongWritable key, Text value,
                        OutputCollector<Text, IntWritable> output,
                        Reporter reporter) throws IOException
        {
            String line = value.toString();
            String lasttoken = null;
            StringTokenizer s = new StringTokenizer(line, "\t");
            String year = s.nextToken();          // first column is the year

            while (s.hasMoreTokens())
            {
                lasttoken = s.nextToken();        // last column is the average
            }

            int avgprice = Integer.parseInt(lasttoken);
            output.collect(new Text(year), new IntWritable(avgprice));
        }
    }

    // Reducer class
    public static class E_EReduce extends MapReduceBase implements
        Reducer<Text, IntWritable, Text, IntWritable>
    {
        // Reduce function: keeps only the averages above the threshold
        public void reduce(Text key, Iterator<IntWritable> values,
                           OutputCollector<Text, IntWritable> output,
                           Reporter reporter) throws IOException
        {
            int maxavg = 30;
            int val = Integer.MIN_VALUE;

            while (values.hasNext())
            {
                if ((val = values.next().get()) > maxavg)
                {
                    output.collect(key, new IntWritable(val));
                }
            }
        }
    }

    // Main function
    public static void main(String args[]) throws Exception
    {
        JobConf conf = new JobConf(ProcessUnits.class);

        conf.setJobName("max_eletricityunits");
        conf.setOutputKeyClass(Text.class);
        conf.setOutputValueClass(IntWritable.class);
        conf.setMapperClass(E_EMapper.class);
        conf.setCombinerClass(E_EReduce.class);
        conf.setReducerClass(E_EReduce.class);
        conf.setInputFormat(TextInputFormat.class);
        conf.setOutputFormat(TextOutputFormat.class);

        FileInputFormat.setInputPaths(conf, new Path(args[0]));
        FileOutputFormat.setOutputPath(conf, new Path(args[1]));

        JobClient.runJob(conf);
    }
}

Save the above program as ProcessUnits.java. The compilation and execution of

the program is explained below.

Compilation and Execution of Process Units Program

Let us assume we are in the home directory of a Hadoop user (e.g. /home/hadoop).

Follow the steps given below to compile and execute the above program.

Step 1

The following command is to create a directory to store the compiled java classes.

$ mkdir units

Step 2

Download Hadoop-core-1.2.1.jar, which is used to compile and execute the

MapReduce program. Visit the following

link http://mvnrepository.com/artifact/org.apache.hadoop/hadoop-core/1.2.1 to

download the jar. Let us assume the downloaded folder is /home/hadoop/.

Step 3

The following commands are used for compiling the ProcessUnits.java program

and creating a jar for the program.

$ javac -classpath hadoop-core-1.2.1.jar -d units ProcessUnits.java

$ jar -cvf units.jar -C units/ .

Step 4

The following command is used to create an input directory in HDFS.

$HADOOP_HOME/bin/hadoop fs -mkdir input_dir

Step 5

The following command is used to copy the input file named sample.txt into the input directory of HDFS.

$HADOOP_HOME/bin/hadoop fs -put /home/hadoop/sample.txt input_dir

Step 6

The following command is used to verify the files in the input directory.

$HADOOP_HOME/bin/hadoop fs -ls input_dir/

Step 7

The following command is used to run the ProcessUnits application, taking the input files from the input directory.

$HADOOP_HOME/bin/hadoop jar units.jar hadoop.ProcessUnits input_dir output_dir

Wait for a while until the job finishes. After execution, as shown below, the output will contain the number of input splits, the number of map tasks, the number of reducer tasks, etc.

INFO mapreduce.Job: Job job_1414748220717_0002 completed successfully

14/10/31 06:02:52 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=61

FILE: Number of bytes written=279400

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=546

HDFS: Number of bytes written=40

HDFS: Number of read operations=9

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=146137

Total time spent by all reduces in occupied slots (ms)=441

Total time spent by all map tasks (ms)=14613

Total time spent by all reduce tasks (ms)=44120

Total vcore-seconds taken by all map tasks=146137

Total vcore-seconds taken by all reduce tasks=44120

Total megabyte-seconds taken by all map tasks=149644288

Total megabyte-seconds taken by all reduce tasks=45178880

Map-Reduce Framework

Map input records=5

Map output records=5

Map output bytes=45

Map output materialized bytes=67

Input split bytes=208

Combine input records=5

Combine output records=5

Reduce input groups=5

Reduce shuffle bytes=6

Reduce input records=5

Reduce output records=5

Spilled Records=10

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=948

CPU time spent (ms)=5160

Physical memory (bytes) snapshot=47749120

Virtual memory (bytes) snapshot=2899349504

Total committed heap usage (bytes)=277684224

File Output Format Counters

Bytes Written=40

Step 8

The following command is used to verify the resultant files in the output folder.

$HADOOP_HOME/bin/hadoop fs -ls output_dir/

Step 9

The following command is used to see the output in the part-00000 file. This file is generated by the MapReduce job and stored in HDFS.

$HADOOP_HOME/bin/hadoop fs -cat output_dir/part-00000

Below is the output generated by the MapReduce program.

1981 34

1984 40

1985 45
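Each line of the output holds a key and a value separated by whitespace (a tab with the default text output format). As a rough sketch of how such lines could be read back for analysis, the plain-Java example below parses them into a sorted map. The class name PartFileReader and the method parse are hypothetical, and the sample text is simply the three lines shown above.

```java
import java.util.Map;
import java.util.TreeMap;

class PartFileReader {
    // Parses "key<whitespace>value" lines into a map sorted by key.
    static Map<String, Integer> parse(String contents) {
        Map<String, Integer> result = new TreeMap<>();
        for (String line : contents.split("\n")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue;
            }
            String[] parts = trimmed.split("\\s+");
            if (parts.length != 2) {
                throw new IllegalArgumentException("Unexpected line: " + line);
            }
            result.put(parts[0], Integer.parseInt(parts[1]));
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample text matching the output listed above
        Map<String, Integer> rows = parse("1981\t34\n1984\t40\n1985\t45\n");
        for (Map.Entry<String, Integer> row : rows.entrySet()) {
            System.out.println(row.getKey() + " -> " + row.getValue());
        }
    }
}
```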

Step 10

The following command is used to copy the output folder from HDFS to the local file

system for analyzing.

$HADOOP_HOME/bin/hadoop fs -get output_dir /home/hadoop

Important Commands

All Hadoop commands are invoked by

the $HADOOP_HOME/bin/hadoop command. Running the Hadoop script without

any arguments prints the description for all commands.

Usage : hadoop [--config confdir] COMMAND

The following table lists the options available and their description.

Options Description

namenode -format Formats the DFS filesystem.

secondarynamenode Runs the DFS secondary namenode.

namenode Runs the DFS namenode.

datanode Runs a DFS datanode.

dfsadmin Runs a DFS admin client.

mradmin Runs a Map-Reduce admin client.

fsck Runs a DFS filesystem checking utility.

fs Runs a generic filesystem user client.

balancer Runs a cluster balancing utility.

oiv Applies the offline fsimage viewer to an fsimage.

fetchdt Fetches a delegation token from the NameNode.

jobtracker Runs the MapReduce job Tracker node.

pipes Runs a Pipes job.

tasktracker Runs a MapReduce task Tracker node.

historyserver Runs job history servers as a standalone daemon.

job Manipulates the MapReduce jobs.

queue Gets information regarding JobQueues.

version Prints the version.

jar <jar> Runs a jar file.

distcp <srcurl> <desturl> Copies files or directories recursively.

distcp2 <srcurl> <desturl> DistCp version 2.

archive -archiveName NAME -p <parent path> <src>* <dest> Creates a hadoop archive.

classpath Prints the class path needed to get the Hadoop jar and the required libraries.

daemonlog Gets/sets the log level for each daemon.

How to Interact with MapReduce Jobs

Usage: hadoop job [GENERIC_OPTIONS]

The following are the Generic Options available in a Hadoop job.

GENERIC_OPTIONS Description

-submit <job-file> Submits the job.

-status <job-id> Prints the map and reduce completion percentage and all job counters.

-counter <job-id> <group-name> <countername> Prints the counter value.

-kill <job-id> Kills the job.

-events <job-id> <fromevent-#> <#-of-events> Prints the events' details received by jobtracker for the given range.

-history [all] <jobOutputDir> Prints job details, failed and killed tip details. More details about the job such as successful tasks and task attempts made for each task can be viewed by specifying the [all] option.

-list [all] -list all displays all jobs. -list displays only jobs which are yet to complete.

-kill-task <task-id> Kills the task. Killed tasks are NOT counted against failed attempts.

-fail-task <task-id> Fails the task. Failed tasks are counted against failed attempts.

-set-priority <job-id> <priority> Changes the priority of the job. Allowed priority values are VERY_HIGH, HIGH, NORMAL, LOW, VERY_LOW.

To see the status of job

$ $HADOOP_HOME/bin/hadoop job -status <JOB-ID>

e.g.

$ $HADOOP_HOME/bin/hadoop job -status job_201310191043_0004

To see the history of job output-dir

$ $HADOOP_HOME/bin/hadoop job -history <DIR-NAME>

e.g.

$ $HADOOP_HOME/bin/hadoop job -history /user/expert/output

To kill the job

$ $HADOOP_HOME/bin/hadoop job -kill <JOB-ID>

e.g.

$ $HADOOP_HOME/bin/hadoop job -kill job_201310191043_0004
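The job IDs used in these commands, such as job_201310191043_0004, have three parts separated by underscores: the literal prefix job, an identifier for the JobTracker instance, and a sequence number for the job. The plain-Java sketch below splits an ID into those parts, which can be handy when scripting around the commands above. The class name JobIdParts and its fields are hypothetical; the sketch does not use Hadoop's own classes.

```java
class JobIdParts {
    final String trackerId; // middle part of the ID, identifies the JobTracker instance
    final int sequence;     // running number of the job, e.g. 4 for "0004"

    JobIdParts(String trackerId, int sequence) {
        this.trackerId = trackerId;
        this.sequence = sequence;
    }

    // Splits an ID of the form job_<trackerId>_<sequence>.
    static JobIdParts parse(String jobId) {
        String[] parts = jobId.split("_");
        if (parts.length != 3 || !parts[0].equals("job")) {
            throw new IllegalArgumentException("Not a job ID: " + jobId);
        }
        return new JobIdParts(parts[1], Integer.parseInt(parts[2]));
    }

    public static void main(String[] args) {
        JobIdParts id = parse("job_201310191043_0004");
        System.out.println("tracker id: " + id.trackerId);
        System.out.println("sequence:   " + id.sequence);
    }
}
```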

MAP REDUCE

[student@localhost ~]$ su

Password:

[root@localhost student]# su - hadoop

Last login: Wed Aug 31 10:14:26 IST 2016 on pts/1

[hadoop@localhost ~]$ mkdir mapreduce

[hadoop@localhost ~]$ cd mapreduce

[hadoop@localhost mapreduce]$ vi WordCountMapper.java

import java.io.IOException;
import java.util.StringTokenizer;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapreduce.Mapper;

public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable>
{
    private final static IntWritable one = new IntWritable(1);
    private Text word = new Text();

    // Emits (word, 1) for every whitespace-separated token in the input line
    public void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException
    {
        String line = value.toString();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens())
        {
            word.set(tokenizer.nextToken());
            context.write(word, one);
        }
    }
}
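To see what the mapper emits without starting a cluster, the tokenizing step can be tried in plain Java. The sketch below mirrors the loop in map(), but collects the (word, 1) pairs in a list instead of writing them to a Hadoop Context. The class name MapStepSketch and the method mapLine are hypothetical names for illustration.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

class MapStepSketch {
    // Mirrors the body of WordCountMapper.map(): one (word, 1) pair per token.
    static List<Map.Entry<String, Integer>> mapLine(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            pairs.add(new SimpleEntry<String, Integer>(tokenizer.nextToken(), 1));
        }
        return pairs;
    }

    public static void main(String[] args) {
        // Repeated words are emitted once per occurrence; summing happens later
        for (Map.Entry<String, Integer> pair : mapLine("big data big cluster")) {
            System.out.println(pair.getKey() + "\t" + pair.getValue());
        }
    }
}
```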

[hadoop@localhost mapreduce]$ vi WordCountReducer.java

import java.io.IOException;
import org.apache.hadoop.io.*;
import org.apache.hadoop.mapreduce.Reducer;

public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable>
{
    // Reduce method: receives a word and all the counts emitted for it by the mappers
    public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException
    {
        int sum = 0;
        // Iterates through all the values available for a key, adds them together,
        // and writes the key with the sum of its values as the final result
        for (IntWritable value : values)
        {
            sum += value.get();
        }
        context.write(key, new IntWritable(sum));
    }
}
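The summing step of the reducer can likewise be tried on its own. In the plain-Java sketch below, a list of integers stands in for the Iterable<IntWritable> that Hadoop passes to reduce(); the class name ReduceStepSketch is hypothetical and no Hadoop classes are used.

```java
import java.util.Arrays;
import java.util.List;

class ReduceStepSketch {
    // Mirrors WordCountReducer.reduce(): adds up all values that share one key.
    // The key is not needed for the sum; it is kept to match the reducer's signature.
    static int reduce(String key, Iterable<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    public static void main(String[] args) {
        // Three occurrences of the same word arrive as three 1s
        List<Integer> ones = Arrays.asList(1, 1, 1);
        System.out.println("big\t" + reduce("big", ones));
    }
}
```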

[hadoop@localhost mapreduce]$ vi WordCount.java

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.conf.Configured;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.Tool;
import org.apache.hadoop.util.ToolRunner;

public class WordCount extends Configured implements Tool
{
    public int run(String[] args) throws Exception
    {
        // Getting the configuration object and setting the job name
        // (on Hadoop 2.x this constructor is deprecated in favour of Job.getInstance(conf, name))
        Configuration conf = getConf();
        Job job = new Job(conf, "Word Count hadoop-0.20");

        // Setting the class names
        job.setJarByClass(WordCount.class);
        job.setMapperClass(WordCountMapper.class);
        job.setReducerClass(WordCountReducer.class);

        // Setting the output data type classes
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // To accept the HDFS input and output directories at run time
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Exit code 0 on success, 1 on failure
        return job.waitForCompletion(true) ? 0 : 1;
    }

    public static void main(String[] args) throws Exception
    {
        int res = ToolRunner.run(new Configuration(), new WordCount(), args);
        System.exit(res);
    }
}
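Before submitting the job, it can help to know what result to expect for a small input. The following plain-Java sketch is not the Hadoop job itself; it only imitates the overall flow on one machine, using a TreeMap to stand in for the grouping and sorting by key that happens between the map and reduce phases. The class name LocalWordCount and the method count are hypothetical.

```java
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

class LocalWordCount {
    // Counts words across all lines; the TreeMap keeps the keys sorted,
    // similar to the key order seen in a reducer's output file.
    static Map<String, Integer> count(String... lines) {
        Map<String, Integer> totals = new TreeMap<>();
        for (String line : lines) {
            StringTokenizer tokenizer = new StringTokenizer(line);
            while (tokenizer.hasMoreTokens()) {
                // Adds 1 to the running total for this word
                totals.merge(tokenizer.nextToken(), 1, Integer::sum);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        Map<String, Integer> totals = count("big data", "big cluster");
        for (Map.Entry<String, Integer> entry : totals.entrySet()) {
            System.out.println(entry.getKey() + "\t" + entry.getValue());
        }
    }
}
```

Comparing this local result with the part file produced by the cluster run is a quick way to confirm that the mapper and reducer behave as intended on a small sample.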
