Jan Kremer Consulting Services (JKCS)

DATA CENTER DESIGN

White Paper

JAN KREMER

CONSULTING SERVICES

Data Center Design White Paper Page 1


TABLE OF CONTENTS

1. INTRODUCTION
1.1. DOCUMENT OUTLINE
2. GENERAL DESIGN PRINCIPLES
2.1. INTRODUCTION
2.2. GREEN DATACENTERS
2.3. VIRTUALIZED DATA CENTERS
2.4. MANAGED SERVICES
2.5. SECURITY
2.6. ITIL BASED MANAGEMENT AND SERVICES
2.7. SERVICE ORIENTED ARCHITECTURE (SOA)
2.8. BUSINESS CONTINUITY AND DISASTER RECOVERY
3. DATA CENTER DESIGN EXAMPLE
3.1. INTRODUCTION
3.2. CCTV AND ACCESS CONTROL
3.2.1 Introduction
3.2.2 Physical Access Restrictions details
3.2.3 Door Control Systems
3.2.4 Server Area Protection
3.2.5 Closed-Circuit Television Coverage
3.2.6 Access Policies and Procedures
3.2.7 ISO 27001
3.2.8 CCTV
3.2.9 Access Control

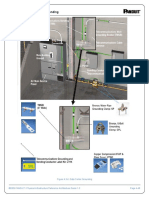

3.3. CABLING
3.3.1 Introduction
3.3.2 How to Label
3.3.3 Verification
3.3.4 Network Cabling Infrastructure
3.3.5 Implementation of Pods
3.3.6 Top of Rack (ToR) Model
3.3.7 End of Row (EoR) Model
3.3.8 Point of Distribution (POD)
3.4. FIRE DETECTION AND SUPPRESSION
3.4.1 Introduction
3.4.2 Detailed Information
3.5. HVAC
3.5.1 Introduction
3.5.2 Details
3.6. LIGHTING
3.6.1 Introduction
3.6.2 Occupancy Sensor Application
3.6.3 Lighting Capacity
3.7. MONITORING AND MANAGEMENT
3.7.1 Introduction
3.7.2 Details
3.8. POWER


3.8.1 Introduction
3.8.2 Power Design Includes
3.8.3 Details
3.9. RACKS
3.9.1 Introduction
3.9.2 Details
3.10. RAISED FLOOR
3.10.1 Introduction
3.10.2 Summary
3.11. RF SHIELDING
3.11.1 Introduction
3.11.2 Details
3.12. WATER DETECTION
3.12.1 Introduction
3.12.2 Details
3.12.3 TraceTek from Tyco Thermal Controls
3.13. LABELING
3.13.1 Introduction
3.13.2 Features
3.13.3 Benefits
3.13.4 Provides


1. INTRODUCTION

This white paper provides an overview of data center design principles and a sample data center design.

1.1. Document Outline

Chapter 1 provides an introduction and outline of this document.

Chapter 2 provides an overview of general data center design principles.

Chapter 3 provides a sample data center design, including sample diagrams for some of its components.


2. General Design Principles

2.1. Introduction

Knowing what the client needs is the essence of good data center design, and the general infrastructure that a data center includes provides the basic starting principles.

From there, the design must concentrate on the data center's exact scope. How many layers of infrastructure should the data center include? Will it be a server environment only, or will it provide one or many managed services capabilities? How does the main data center relate to the disaster recovery data center in terms of scope, capabilities, and service levels? And what tier level is required?

Tier levels summary.

Tier I: Basic Site Infrastructure

A Tier I basic data center has non-redundant capacity components and a single, non-redundant distribution path serving the site's computer equipment.

Tier II: Redundant Capacity Components Site Infrastructure

A Tier II data center has redundant capacity components and a single, non-redundant distribution path serving the site's computer equipment.

Tier III: Concurrently Maintainable Site Infrastructure

A concurrently maintainable data center has redundant capacity components and multiple distribution paths serving the site's computer equipment. Generally, only one distribution path serves the computer equipment at any time.

Tier IV: Fault Tolerant Site Infrastructure

A fault tolerant data center has redundant capacity systems and multiple distribution paths simultaneously serving the site's computer equipment.


2.2. Green Datacenters

Data center cooling is where the greatest energy-efficiency improvements can be made, and cooling a data center efficiently is impossible without a proper floor plan and air-conditioning design. The fundamental rule in energy-efficient cooling is to keep hot air and cold air separate. The hot-aisle/cold-aisle, raised-floor design has been the cooling standard for many years, yet surprisingly few data centers implement this principle fully or correctly.

Hot aisle/cold aisle is a data center floor plan in which rows of cabinets are configured with their air intakes facing the middle of the cold aisle. The cold aisles have perforated floor tiles through which the computer room air-conditioning (CRAC) units blow cold air up from beneath the raised floor.

The servers blow their hot exhaust air out the back of the cabinets into the hot aisles. The hot air is then drawn back into the CRAC units to be cooled and redistributed through the cold aisles.
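To make the layout rule concrete, the following is a minimal Python sketch, assuming rows are numbered from north to south and each row is described only by the direction its air intakes face. The names `row_orientations` and `aisle_types` and the "north"/"south" convention are hypothetical. The point is that alternating row orientation yields alternating cold and hot aisles, while rows that all face the same way blow exhaust straight into the next row's intakes.

```python
from typing import List


def row_orientations(num_rows: int) -> List[str]:
    """Direction each row's air intakes face, rows numbered north to south.

    Even-indexed rows face south and odd-indexed rows face north, so rows 0
    and 1 face each other (sharing a cold aisle), rows 1 and 2 are back to
    back (sharing a hot aisle), and so on.
    """
    return ["south" if i % 2 == 0 else "north" for i in range(num_rows)]


def aisle_types(orientations: List[str]) -> List[str]:
    """Classify the aisle between each pair of adjacent rows."""
    aisles = []
    for north_row, south_row in zip(orientations, orientations[1:]):
        if north_row == "south" and south_row == "north":
            aisles.append("cold")   # both rows draw intake air from this aisle
        elif north_row == "north" and south_row == "south":
            aisles.append("hot")    # both rows exhaust into this aisle
        else:
            aisles.append("mixed")  # one row's exhaust feeds the next row's intakes
    return aisles


print(aisle_types(row_orientations(4)))
print(aisle_types(["south"] * 4))
```

With alternating rows the aisles come out cold, hot, cold; four rows that all face south produce only "mixed" aisles, which is exactly the recirculation the hot-aisle/cold-aisle design is meant to avoid.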

As computing demands skyrocket, servers in data centers proliferate. The equation is now rapidly spinning out of control as environmental concerns and cost-efficiency are overwhelmed by server sprawl. Excessive energy consumption from servers running hot leads to high cooling costs, overuse of fossil fuels, pollution, depletion of natural resources, and the release of harmful CO2 as waste. For every kilowatt of energy consumed by a server, roughly another kilowatt must be expended to cool that machine. By the end of 2008, the power costs of a server had exceeded the cost of the server itself. The number of servers can be reduced by implementing a Virtualized Data Center. Using less equipment to do more goes to the heart of being LEAN & GREEN. Consolidating and virtualizing storage, using efficient computing practices, and applying power-saving tactics are the route to environmental efficiency and reduced cost.
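The rule of thumb above (roughly one kilowatt of cooling per kilowatt of server load) can be turned into a back-of-the-envelope estimate of what consolidation saves. This Python sketch is illustrative only: the server counts, wattages, and 10:1 consolidation ratio are hypothetical figures, not measurements from this paper.

```python
HOURS_PER_YEAR = 8760


def annual_facility_kwh(servers: int, watts_per_server: float,
                        cooling_ratio: float = 1.0) -> float:
    """Annual energy in kWh for the servers plus the cooling they require.

    cooling_ratio is the kW of cooling spent per kW of IT load; the
    "roughly another kilowatt" rule of thumb corresponds to 1.0.
    """
    it_kw = servers * watts_per_server / 1000
    return it_kw * (1 + cooling_ratio) * HOURS_PER_YEAR


# Hypothetical before/after: 100 standalone servers at 400 W each,
# consolidated 10:1 onto 10 virtualization hosts drawing 600 W each.
before = annual_facility_kwh(100, 400)
after = annual_facility_kwh(10, 600)
print(f"before: {before:,.0f} kWh/yr, after: {after:,.0f} kWh/yr, "
      f"saved: {1 - after / before:.0%}")
```

With these assumed figures the script prints `before: 700,800 kWh/yr, after: 105,120 kWh/yr, saved: 85%`. A `cooling_ratio` of 1.0 means total power is twice the IT load, which would correspond to a PUE (total facility power divided by IT power) of about 2.0 if cooling were the only overhead.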

2.3. Virtualized Data Centers

Today's IT organizations are dealing with the consequences of exploding IT infrastructure growth and complexity. While computing resources continue to increase in power, organizations are unable to fully utilize them in single-application deployments and cannot easily change computing resource assignments when application or business requirements change. At the root of the problem is uncontrolled server sprawl: servers provisioned to support a single application each.

Organizations that implemented hardware virtualization have unwittingly created a new problem: OS sprawl. While hardware remains a considerable cost component, software and management continue to be the largest cost considerations. The daily management and operations functions are daunting, and once business continuity requirements are added, the costs and complexity become overwhelming. Moreover, few tools provide the management and automation needed to ease the burden on IT departments. To address these critical challenges, IT organizations have to find ways to accomplish the following:

Improve the flexibility of computing resource assignment

Decrease complexity to improve manageability of systems

Automate routine tasks

Reduce overall management costs through efficiency

Provide cost-effective data availability and recovery

Increase the return from their infrastructure investment by better utilizing resources


Server virtualization, which enables several applications to run independently on a single physical server, is an important first step toward achieving a virtualized environment. But only by combining server virtualization with storage virtualization can enterprises realize the full benefits of virtualization.

Consolidating resources through data center virtualization techniques can improve

the return on IT investments, boost IT productivity, increase system reliability and

availability, and ultimately enhance the ability of IT to meet the needs of the

business.
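Consolidating single-application servers onto fewer virtualization hosts is, at its core, a packing problem. As an illustration only, the sketch below uses first-fit decreasing, a standard bin-packing heuristic swapped in here (the paper does not prescribe a placement method). The VM names, loads, and host capacity are hypothetical, and real placement would also weigh memory, I/O, and failover headroom.

```python
from typing import Dict, List


def first_fit_decreasing(vm_loads: Dict[str, float],
                         host_capacity: float) -> List[List[str]]:
    """Place VMs on hosts, largest first, each into the first host with room.

    vm_loads maps a VM name to its expected load, in the same unit as
    host_capacity (for example CPU cores).
    """
    hosts: List[List[str]] = []
    free: List[float] = []
    for vm, load in sorted(vm_loads.items(), key=lambda kv: kv[1], reverse=True):
        if load > host_capacity:
            raise ValueError(f"{vm} does not fit on any single host")
        for i, remaining in enumerate(free):
            if load <= remaining:
                hosts[i].append(vm)
                free[i] -= load
                break
        else:
            # No existing host had room: provision another one.
            hosts.append([vm])
            free.append(host_capacity - load)
    return hosts


# Hypothetical average loads (cores) of eight single-application servers.
servers = {"mail": 3, "web": 2, "db": 6, "file": 1,
           "print": 1, "crm": 4, "erp": 5, "dns": 1}
placement = first_fit_decreasing(servers, host_capacity=8)
for n, vms in enumerate(placement, start=1):
    print(f"host {n}: {vms}")
```

With these sample loads (23 cores of demand in total), the eight standalone servers fit on three 8-core hosts, which is also the minimum possible for that demand.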

Microsoft offers server virtualization technology within its Windows Server 2008 operating system platform. Windows Server 2008 Hyper-V is a built-in operating system technology that hosts virtual machines on the Windows Server 2008 platform using server hardware virtualization. It provides a scalable and secure platform for supporting enterprise server virtualization infrastructures. Hyper-V uses Type 1 (hypervisor-based) virtualization, in which the hypervisor runs directly on the hardware, thereby enabling direct access to difficult-to-virtualize processor calls.


2.4. Managed Services

Managed Services is a proven and successful business model around the world, and market dynamics are driving companies toward it.

Managed Services refers to the outsourcing of IT computing and/or network infrastructure, operating systems, and/or applications to a third party. The Managed Services provider assumes responsibility for the entire set of IT processes and computing/communication capabilities provided to the customer.

The provider handles the architecting, deployment, 24x7x365 monitoring, and proactive management of these IT environments, which typically must be always available and always secure. Services can include applications, hardware, software, networks, and so on.

Companies find it advantageous to outsource services that provide key functions such as security, business continuity, disaster recovery, data integrity, and high availability, so they can instead focus internal IT resources on core activities and processes.


Companies are facing the fundamental challenge of dealing with increasing IT complexity and cost, along with the need to deliver value from their technology investments. IT departments are absorbed by the administrative, operational, and maintenance aspects of day-to-day IT management, rather than focusing on IT activities that drive revenue generation and competitive advantage.

The issues they face are:

Downtime: the business needs always-on reliability.

Security: security threats are expensive and constantly changing.

Keeping pace: too much focus on administrative problems vs. business problems.

Compliance and business regulations: increasing governance regulations and storage requirements.


2.5. Security

The growing number of data center locations, often combined with the geographical dispersion of IT administrators, increases the importance of a sound security strategy. To work effectively, the strategy should establish guidelines and responsibilities to protect the information assets of a company.

Physical security

Public: areas that all employees can access

Controlled: areas that can and must be locked when unattended

Very controlled: areas where access is restricted to registered or authorized users

The question for many IT managers is how to supplement the physical security strategy. The answer is to give authorized personnel secure remote access to, and control of, data center servers and devices, no matter where they or the devices are located.

Data Center physical security includes components such as:

CCTV System with central control room monitors and video recording units

Data Center Access Control System with role based access control for the

different zones and rooms within the Data Center including biometrics

fingerprint scanners (employees only)

Visitor temporary card issuance system for Data Center access for

visitors

Employee Access Card Issuance system with Digital Camera (capturing digital photo for card surface) and Biometrics Fingerprint Scanner (fingerprint minutiae stored on the card's contactless chip for 1:1 verification at access points). Additional biometric systems, such as iris and facial recognition, are also supported.

Outside CCTV cameras for Data Center perimeter security management

The security systems can use the existing IP network for both access control requests and CCTV. This reduces the cost and complexity of adding separate physical lines. Additionally, it allows remote monitoring and management from any facility.
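The zone model and role-based access control described above can be sketched in a few lines of Python. This is a minimal, hypothetical policy: the role names, the clearance table, and the rule that every zone above Public also requires a successful fingerprint check are assumptions for illustration, not the configuration of any particular access control product.

```python
from dataclasses import dataclass
from enum import IntEnum


class Zone(IntEnum):
    PUBLIC = 1           # areas that all employees can access
    CONTROLLED = 2       # areas that must be locked when unattended
    VERY_CONTROLLED = 3  # restricted to registered or authorized users


# Hypothetical mapping from role to the highest zone that role may enter.
ROLE_CLEARANCE = {
    "visitor": Zone.PUBLIC,
    "employee": Zone.CONTROLLED,
    "dc_operator": Zone.VERY_CONTROLLED,
}


@dataclass
class BadgeSwipe:
    role: str
    card_valid: bool         # card is issued, unexpired, and not revoked
    fingerprint_match: bool  # outcome of the 1:1 check against the card template


def may_enter(swipe: BadgeSwipe, zone: Zone) -> bool:
    """Grant entry only if the card, the role clearance, and biometrics all pass."""
    if not swipe.card_valid:
        return False
    clearance = ROLE_CLEARANCE.get(swipe.role)
    if clearance is None or clearance < zone:
        return False
    # Assumed policy: every zone above PUBLIC also needs a fingerprint match.
    if zone > Zone.PUBLIC and not swipe.fingerprint_match:
        return False
    return True


print(may_enter(BadgeSwipe("employee", True, True), Zone.CONTROLLED))
print(may_enter(BadgeSwipe("employee", True, True), Zone.VERY_CONTROLLED))
```

Ordering the zones as an `IntEnum` keeps the clearance check to a single comparison; adding a new room only requires assigning it one of the three zones.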

Logical security

Logical security strategy requires the IT manager to identify and authenticate users.

User IDs need to be established to identify the person connecting to the system.

Logical security includes defining and protecting resources. What resources can users

access when they have been authenticated?
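Logical security as described above has three steps: identify the user by user ID, authenticate that user, and then decide which resources the user may access. The following Python sketch shows those steps using only the standard library (`hashlib.pbkdf2_hmac` for salted password hashing and `hmac.compare_digest` for the comparison). The `Directory` class, its methods, and the iteration count are illustrative assumptions; a production system would rely on an established directory or identity service.

```python
import hashlib
import hmac
import os
from typing import Dict, Iterable, Optional, Set, Tuple

ITERATIONS = 100_000  # illustrative work factor only


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, PBKDF2-SHA256 digest); a random salt is created if none is given."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


class Directory:
    """Hypothetical user store covering identification, authentication, authorization."""

    def __init__(self) -> None:
        self._credentials: Dict[str, Tuple[bytes, bytes]] = {}
        self._grants: Dict[str, Set[str]] = {}

    def add_user(self, user_id: str, password: str, resources: Iterable[str]) -> None:
        # Identification: the user ID is the key for everything else.
        self._credentials[user_id] = hash_password(password)
        self._grants[user_id] = set(resources)

    def authenticate(self, user_id: str, password: str) -> bool:
        # Authentication: recompute the digest with the stored salt and
        # compare in constant time.
        record = self._credentials.get(user_id)
        if record is None:
            return False
        salt, expected = record
        _, candidate = hash_password(password, salt)
        return hmac.compare_digest(candidate, expected)

    def authorize(self, user_id: str, password: str, resource: str) -> bool:
        # Authorization: only authenticated users, and only for granted resources.
        if not self.authenticate(user_id, password):
            return False
        return resource in self._grants.get(user_id, set())
```

Storing only a salted hash means the directory never holds the password itself, and keeping grants separate from credentials mirrors the paper's split between authenticating a user and defining which resources that user may reach.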


Physical and Logical Security Convergence

"CEOs and boards don't really think about security; they think about risk. With too

many security discussions, they kind of glaze over the issue, but when you're talking

with executive management and explaining things to them in terms of risk to the

business, that really gets the business leaders thinking about integration and

convergence of physical security and IT security in the right way."

Practice Leader, Global IT Services Provider

Convergence of logical and physical security brings significant benefits, particularly in the areas where the two can interconnect to the greatest positive effect. To make this convergence happen, security management must be integrated with existing business processes for managing facilities, personnel, and IT systems. This requires clear organizational ownership of critical management processes such as:

Enterprise Security Policy

User provisioning and asset management

Security monitoring and auditing

Incident response

Business Continuity Planning

One simple example of this convergence is the use of a smartcard-based identity card both for physical access control and for authenticating the cardholder to computers and data. This smartcard-based ID card uses a combi-chip: a single chip that supports both a contact interface (logical security: computer authentication with biometrics-based identity verification) and a contactless proximity interface (physical security: access control using the same biometrics as the contact interface).
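The operational benefit of convergence shows up in user provisioning: one identity record drives both door access and computer logon, so a single revocation closes both. The Python sketch below is hypothetical (class and field names are invented for illustration) and assumes the biometric 1:1 match has already been performed by the card reader, so it is not modeled here.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Identity:
    card_id: str
    doors: Set[str] = field(default_factory=set)    # physical access rights
    systems: Set[str] = field(default_factory=set)  # logical access rights
    active: bool = True


class ConvergedIdentityStore:
    """One record per smartcard drives both door and logon decisions."""

    def __init__(self) -> None:
        self._by_card: Dict[str, Identity] = {}

    def enroll(self, identity: Identity) -> None:
        self._by_card[identity.card_id] = identity

    def revoke(self, card_id: str) -> None:
        # A single de-provisioning step removes physical and logical access together.
        self._by_card[card_id].active = False

    def open_door(self, card_id: str, door: str) -> bool:
        ident = self._by_card.get(card_id)
        return ident is not None and ident.active and door in ident.doors

    def log_on(self, card_id: str, system: str) -> bool:
        ident = self._by_card.get(card_id)
        return ident is not None and ident.active and system in ident.systems


store = ConvergedIdentityStore()
store.enroll(Identity("CARD-001", doors={"computer-room"}, systems={"monitoring-console"}))
store.revoke("CARD-001")
print(store.open_door("CARD-001", "computer-room"), store.log_on("CARD-001", "monitoring-console"))
```

In separate physical and IT systems, the same departure would need two de-provisioning actions, and forgetting one leaves a door or an account open.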


2.6. ITIL based Management and Services

The IT Infrastructure Library (ITIL), a set of best practices addressing the delivery of high-quality, cost-effective IT services, includes best practice guidelines for multiple IT operations activities. Release Management and Change Management are two activities within ITIL's IT Service Management (ITSM) disciplines that offer guidance for deploying changes to IT services. Both Release and Change Management recommend pre-deployment testing, and best practice guidance suggests that improving these processes also benefits ITSM Incident, Problem, and Availability Management.
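The recommendation that changes be tested before deployment can be enforced mechanically by allowing only certain state transitions on a change record. The states and transitions below are a simplified, hypothetical lifecycle chosen for illustration; ITIL describes the process, and each organization defines its own workflow states.

```python
from enum import Enum, auto
from typing import Dict, Set


class ChangeState(Enum):
    RAISED = auto()
    AUTHORIZED = auto()
    TESTED = auto()
    DEPLOYED = auto()
    REVIEWED = auto()


# Allowed transitions: deployment is reachable only from TESTED.
TRANSITIONS: Dict[ChangeState, Set[ChangeState]] = {
    ChangeState.RAISED: {ChangeState.AUTHORIZED},
    ChangeState.AUTHORIZED: {ChangeState.TESTED},
    ChangeState.TESTED: {ChangeState.DEPLOYED},
    ChangeState.DEPLOYED: {ChangeState.REVIEWED},
    ChangeState.REVIEWED: set(),
}


class ChangeRecord:
    def __init__(self, summary: str) -> None:
        self.summary = summary
        self.state = ChangeState.RAISED
        self.history = [ChangeState.RAISED]

    def advance(self, new_state: ChangeState) -> None:
        """Move to new_state, refusing any transition the lifecycle does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.name} to {new_state.name}")
        self.state = new_state
        self.history.append(new_state)


rfc = ChangeRecord("Replace UPS battery string")
rfc.advance(ChangeState.AUTHORIZED)
try:
    rfc.advance(ChangeState.DEPLOYED)  # skipping the test step is rejected
except ValueError as err:
    print(err)
```

Keeping the allowed transitions in a table rather than in scattered `if` statements makes the policy easy to audit and to adjust.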

Benefits of ITIL deployment

The key benefits of implementing ITIL:

Improved IT and business alignment

Improved productivity

Adoption of best practice

Implementation of ITIL can be costly, so where can an organization expect to recover

those costs?


Here is a list of some of the benefits:

ITIL has become the de facto best practice for running IT. The widespread adoption of ITIL within an industry provides guidance on what works and what doesn't.

ITIL brings with it a common vocabulary, something that has been lacking in the IT world.

Improved financial management of IT and a better match between IT services and the needs of the overall organization.

Improved relationship between IT and the organization for which it provides services.

Improved utilization of the IT infrastructure.

Improved utilization of IT personnel.

Improved reputation of IT within the organization that IT serves.


2.7. Service Oriented Architecture (SOA)

There are many definitions for Service-Oriented Architecture in current use. The

most widely accepted definition is that SOA is a set of architectural principles that

help build modular systems based on services or units of IT functionality.

These services, at either the business or the technical level, are offered by one party, the service provider, and consumed by another. This idea of a well-defined contract that is fulfilled by a provider and used by a consuming party is central to SOA principles. Providers and consumers can reside in the same organization or in separate ones, even in separate companies.
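The provider/consumer contract at the heart of SOA can be illustrated in-process with a Python `typing.Protocol`: the protocol plays the role of the contract, two classes fulfil it, and the consumer depends only on the contract. The inventory service and its method are hypothetical; in a real SOA the contract would typically be a network interface (for example a WSDL-described web service or an HTTP API) rather than a Python class.

```python
from typing import Dict, Protocol


class InventoryService(Protocol):
    """The contract: what any provider must offer and any consumer may rely on."""

    def stock_level(self, sku: str) -> int: ...


class WarehouseProvider:
    """One provider fulfilling the contract from its own stock records."""

    def __init__(self, levels: Dict[str, int]) -> None:
        self._levels = dict(levels)

    def stock_level(self, sku: str) -> int:
        return self._levels.get(sku, 0)


class PartnerProvider:
    """A second, interchangeable provider, e.g. operated by another company."""

    def __init__(self, fixed_level: int) -> None:
        self._fixed_level = fixed_level

    def stock_level(self, sku: str) -> int:
        return self._fixed_level


def can_fulfil(service: InventoryService, sku: str, quantity: int) -> bool:
    """A consumer that depends only on the contract, not on any provider class."""
    return service.stock_level(sku) >= quantity


print(can_fulfil(WarehouseProvider({"rack-42U": 5}), "rack-42U", 3))
print(can_fulfil(PartnerProvider(10), "rack-42U", 3))
```

Neither provider inherits from `InventoryService`; matching the contract's shape is enough, which reflects how SOA lets providers be swapped without changing consumers.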

Much like the Internet before it, SOA is sweeping through companies and industries,

upending the competitive order. Thanks to SOA, companies are fast commissioning

new products and services, at lower cost and with less labor, often with the

technology assets they have right in hand. Most important, SOA is helping to put IT

squarely where it belongs: in the hands of the business executive, under whose

direction it can create the most value.


2.8. Business Continuity and Disaster Recovery

IT managers today must be ready for the unexpected, especially in consideration of

new industry and government rules concerning data protection and disaster recovery.

Disaster recovery initiatives, of course, have been around for some time; however, it

is only recently that several new technologies have emerged that are changing the

way we think about disaster recovery and business continuity planning.

These technologies focus on WAN optimization, traffic redirection, data replication,

and secure remote access. Together, they represent a new methodology for

organizations seeking to consolidate cost and equipment, reduce management time,

and ensure applications are always available when disaster strikes.

The recovery time objective (RTO) is the maximum allowable downtime after an outage for recovering systems, applications, and functions (see Figure below). RTO provides the basis for developing cost-effective recovery strategies and for determining when and how to implement these recovery strategies during a disaster situation.
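Because the RTO is the maximum allowable downtime, it acts as a filter when choosing among recovery strategies: any strategy whose expected recovery time exceeds the RTO is ruled out, and the cost-effective choice is the cheapest of those that remain. The Python sketch below shows that reasoning; the strategy names, recovery times, and costs are hypothetical placeholders, not benchmarks.

```python
from typing import NamedTuple, Optional, Sequence


class Strategy(NamedTuple):
    name: str
    recovery_hours: float  # expected time to restore service after an outage
    annual_cost: float     # hypothetical yearly cost of maintaining the strategy


def cheapest_meeting_rto(strategies: Sequence[Strategy],
                         rto_hours: float) -> Optional[Strategy]:
    """Return the lowest-cost strategy that recovers within the RTO, or None."""
    feasible = [s for s in strategies if s.recovery_hours <= rto_hours]
    return min(feasible, key=lambda s: s.annual_cost, default=None)


# Hypothetical options; real figures would come from the organization's own analysis.
OPTIONS = [
    Strategy("tape restore", recovery_hours=72, annual_cost=20_000),
    Strategy("warm standby", recovery_hours=8, annual_cost=60_000),
    Strategy("replication with automated failover", recovery_hours=1, annual_cost=150_000),
]

for rto in (96, 12, 2, 0.5):
    choice = cheapest_meeting_rto(OPTIONS, rto)
    print(f"RTO {rto} h -> {choice.name if choice else 'no strategy meets this RTO'}")
```

Tightening the RTO steadily pushes the choice toward more expensive strategies, and below one hour none of these sample options qualifies; that trade-off is what makes the RTO the basis for cost-effective recovery planning.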

Business Continuity Planning

The results from both a 2004 IDC study and a current study highlight a continuing trend among companies looking to reduce overall downtime and increase overall availability. Through business continuity planning, average monthly downtime dropped by more than 53% over a four-year period, from 20.4 hours in 2003 to an expected 9.5 hours in 2007. This corresponds to a shift in availability from 97.2% to 98.7% over the same period. Viewed in terms of business impact, adding nearly 11 hours of uptime per month amounts to 132 hours annually, or 5.5 24-hour days.
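The figures above are easier to check once it is clear that they are monthly downtime values. The Python sketch below reproduces them, assuming an average month of 730 hours (8,760 hours per year divided by 12).

```python
HOURS_PER_MONTH = 8760 / 12  # 730 hours in an average month


def availability(downtime_hours: float, period_hours: float = HOURS_PER_MONTH) -> float:
    """Fraction of the period during which systems were up."""
    return 1 - downtime_hours / period_hours


# Monthly downtime figures quoted in the text (2003 and expected 2007).
downtime_2003, downtime_2007 = 20.4, 9.5

reduction = (downtime_2003 - downtime_2007) / downtime_2003
gain = (downtime_2003 - downtime_2007) * 12  # extra uptime hours per year

print(f"downtime reduction: {reduction:.1%}")
print(f"availability: {availability(downtime_2003):.1%} -> {availability(downtime_2007):.1%}")
print(f"uptime gained per year: {gain:.1f} h ({gain / 24:.2f} days)")
```

It reports a 53.4% reduction and availability moving from 97.2% to 98.7%. The exact annual gain is 10.9 hours x 12 = 130.8 hours (about 5.45 days); the 132 hours and 5.5 days quoted above come from rounding the monthly gain up to 11 hours.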

This additional time could represent a significant amount of lost revenue if your company were not able to meet these higher availability requirements. Additionally, as you look to increase the availability of your IT environments and business processes, you will need to integrate more advanced means of achieving these results. Reaching these high-availability goals will likely require greater levels of expertise, automation, and, ultimately, capital investment.

Disaster Recovery Planning

A Disaster Recovery Plan covers the data, hardware and software critical for a

business to restart operations in the event of a natural or human-caused disaster. It

should also include plans for coping with the unexpected or sudden loss of key

personnel. The analysis phase in the development of a BCP (Business Continuity Plan) manual consists of an impact analysis, a threat analysis, and impact scenarios, resulting in documented BCP requirements.


3. Data Center Design Example

3.1. Introduction

This section provides Data Center Design examples for the components listed below. It represents the second deliverable for this project, a low-level design for the main components of the Data Center, such as:

General Design

o Floor Plan

o Final layout for the Communications Room and Power Distribution Room

o Labeling and Mapping

o Shielding

Power System Design

o Final Design for the Generator Sets

o Final Floor Plans for the Generator Sets room

o Final Design for the UPS systems

o Overhead power cabling, since water piping runs under the raised floor

Cooling/AC high level design

o Basic design for using water chillers

o Models of chillers recommended

o Water piping under raised floor

Detailed Cabling Design based on TIA-942 and TIA-568-A/B

Detailed design for a Data Center Monitoring System

Detailed design for Fire Protection and Detection based on FM-200

Detailed design for Water Leakage detection and monitoring of the whole room

Overall Design Summary

Knowing what the client needs is the essence of good data center design, and the general infrastructure that a data center includes provides the basic starting principles; from there, the design must concentrate on the data center's exact scope.

The TIA-942 standard provides several requirements and recommendations for

cabling management. The data center must be designed with separate racks and

pathways for each media type, and power and communications cables must be placed

in separate ducts.
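The two TIA-942 rules summarized above lend themselves to an automated check of a cable schedule. The Python sketch below encodes only the rules as this paper states them (one media type per pathway; power and communications cables in separate ducts). The `CableRun` fields and identifiers are hypothetical, and the check is no substitute for the standard itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class CableRun:
    cable_id: str
    kind: str     # "power" or "communications"
    media: str    # e.g. "copper", "fiber", "ac-power"
    pathway: str  # tray, duct, or conduit identifier


def pathway_violations(runs: List[CableRun]) -> List[str]:
    """Report pathways that mix media types or mix power with communications."""
    media_by_path: Dict[str, Set[str]] = {}
    kinds_by_path: Dict[str, Set[str]] = {}
    for run in runs:
        media_by_path.setdefault(run.pathway, set()).add(run.media)
        kinds_by_path.setdefault(run.pathway, set()).add(run.kind)

    problems: List[str] = []
    for path in sorted(media_by_path):
        if len(kinds_by_path[path]) > 1:
            problems.append(f"{path}: power and communications cables share a duct")
        if len(media_by_path[path]) > 1:
            problems.append(f"{path}: mixes media types {sorted(media_by_path[path])}")
    return problems


schedule = [
    CableRun("C1", "communications", "copper", "TRAY-A"),
    CableRun("C2", "communications", "copper", "TRAY-A"),
    CableRun("F1", "communications", "fiber", "TRAY-B"),
    CableRun("P1", "power", "ac-power", "DUCT-1"),
    CableRun("C3", "communications", "copper", "DUCT-1"),  # deliberate mistake
]
for problem in pathway_violations(schedule):
    print(problem)
```

The first four runs pass; the copper run routed through the power duct triggers both rules, since it puts communications cable next to power and a second media type into the same pathway.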


Where possible, the design must meet the Tier IV requirements defined by the Uptime Institute. Where restrictions of the existing building do not allow certain components to meet Tier IV, those components must meet Tier III. See a quick overview of Tier III and Tier IV below.

Tier III: Concurrently Maintainable Site Infrastructure

- A concurrently maintainable data center has redundant capacity components and multiple distribution paths serving the site's computer equipment. Generally, only one distribution path serves the computer equipment at any time.

- Each and every capacity component and element of the distribution paths can be removed from service on a planned basis without causing any of the computer equipment to be shut down.

- Annual site-caused IT downtime (actual field data): 1.6 hours

- Representative site availability: 99.98%

Tier IV: Fault Tolerant Site Infrastructure

- A fault tolerant data center has redundant capacity systems and multiple distribution paths simultaneously serving the site's computer equipment.

- A single worst-case failure of any capacity system, capacity component, or distribution element will not impact the computer equipment.

- Annual site-caused IT downtime (actual field data): 0.8 hours

- Representative site availability: 99.99%
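Availability percentages and annual downtime hours are two views of the same quantity over an 8,760-hour year. The Python sketch below converts between them to show that the field-data downtime figures are consistent with the representative availability figures quoted for each tier; only the numbers from the text are used.

```python
HOURS_PER_YEAR = 8760


def allowed_annual_downtime(availability: float) -> float:
    """Hours of downtime per year permitted by an availability fraction."""
    return (1 - availability) * HOURS_PER_YEAR


def availability_from_downtime(downtime_hours: float) -> float:
    """Availability fraction implied by a given number of downtime hours per year."""
    return 1 - downtime_hours / HOURS_PER_YEAR


# (tier, representative availability, annual site-caused downtime) from the text.
TIERS = [("Tier III", 0.9998, 1.6), ("Tier IV", 0.9999, 0.8)]

for tier, target, downtime in TIERS:
    print(f"{tier}: {target:.2%} allows {allowed_annual_downtime(target):.2f} h/yr; "
          f"{downtime} h/yr of downtime is {availability_from_downtime(downtime):.3%}")
```

A 99.98% target allows about 1.75 hours of downtime per year and 99.99% about 0.88 hours, so the reported 1.6 and 0.8 hours (roughly 99.982% and 99.991%) fall within those budgets.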


3.2. CCTV and Access Control

3.2.1 Introduction

All elements of the Data Center physical security deliverables must be installed and tested, including:

- CCTV system within the Data Center (Computer Room) with central control room monitors and video recording units.

- Datacenter Access Control System with role-based access control for the different zones and rooms within the Datacenter, including biometric fingerprint scanners (employees only).

- Visitor temporary card issuance system for Data Center access by visitors (optional).

- Employee Access Card issuance system with a digital camera (capturing a digital photo for the card surface) and a biometric fingerprint scanner (fingerprint minutiae stored on the card's contactless chip for 1:1 verification at access points).

- Outside CCTV (around the inside building entrance door(s) to the Computer Room) and cameras for the Datacenter perimeter (outside the Generator Set/UPS building) for security management.

The security systems will use the existing IP network for both access control requests and CCTV. This reduces the cost and complexity of adding separate physical lines. It also allows remote monitoring and management.

3.2.2 Physical Access Restrictions Details

The most fundamental way of physically protecting the items housed in a Datacenter is to control who can enter, and which location(s) of the Data Center each person can enter. Door locks, access control systems, fencing and lockable server cabinets each prevent unauthorized personnel from entering and obtaining sensitive information.

Access control must also ensure that the person entering really is the person authorized to enter the Datacenter and its sub-locations. Smartcard access control systems with biometrics enforce controlled access. They also allow a central monitoring system to know at all times who is where.


3.2.3 Door Control Systems

A Datacenter providing managed services has several levels of access control security, such as:

- Level 1: Main access to the Datacenter facility

o This includes all personnel allowed access to the Datacenter, including Operators, Engineers, Management and Administration.

- Level 2: Access to the different Computer Rooms (Computer Room areas such as the Communications Room and Power Distribution Room). Each Computer Room area that serves a different function should have its own access control.

- Level 3: Access to racks/cabinets and rooms that contain secure hardware and software, such as:

o Systems containing Certification Authority hardware and software

o Smartcard Key Management Authority (KMA) hardware and software

o Key Generation and Key Distribution hardware and software, including HSMs

Access control should be established using contactless smartcards. The chip (suggested capacity 16-32Kb) stores the cardholder information needed for access control to the different Datacenter security levels:

- Name, phone, position, and company organization group

- Security access level

- Biometrics, including a digital photo and two fingerprint minutiae templates

Access control doors must have a contactless smartcard reader with a fingerprint scanner. The card reader at each location performs the required check. When cardholders need to access the Datacenter or any higher-level security room, they hold their card close to the reader, and the system logs:

- Date and time of access (and of leaving)

- Name, etc.

The system then validates the fingerprint scanned by the reader against the minutiae stored on the card. If they match, it validates the allowed security level and then either opens the door or rejects access.
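The reader sequence described above can be sketched in Python as follows: log the attempt, verify the fingerprint against the on-card minutiae, then check the security level. This is a hypothetical model, not a vendor API. Fingerprint matching is reduced to a placeholder comparison. The sketch also assumes the three access levels are hierarchical, so a card cleared for a higher level also opens lower-level doors.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import IntEnum


class Level(IntEnum):
    FACILITY = 1       # Level 1: main access to the Datacenter facility
    COMPUTER_ROOM = 2  # Level 2: computer room areas
    SECURE = 3         # Level 3: CA, KMA and key generation racks/rooms


@dataclass
class Card:
    name: str
    level: Level
    minutiae: str  # hypothetical stand-in for the fingerprint minutiae on the chip


@dataclass
class Door:
    door_id: str
    required_level: Level


def fingerprint_matches(stored: str, scanned: str) -> bool:
    # Placeholder: a real reader performs 1:1 minutiae matching against a score threshold
    return stored == scanned


def request_access(card: Card, scanned: str, door: Door, log: list) -> bool:
    """Grant access only if the fingerprint matches and the card level is sufficient."""
    granted = fingerprint_matches(card.minutiae, scanned) and card.level >= door.required_level
    # Every attempt is logged with date/time and name, whether granted or rejected
    log.append((datetime.now(), card.name, door.door_id, "GRANTED" if granted else "REJECTED"))
    return granted


if __name__ == "__main__":
    audit_log = []
    engineer = Card("J. Smith", Level.COMPUTER_ROOM, "tmpl-42")
    print(request_access(engineer, "tmpl-42", Door("CR-1", Level.COMPUTER_ROOM), audit_log))  # True
    print(request_access(engineer, "tmpl-42", Door("HSM-1", Level.SECURE), audit_log))        # False
```

Every attempt lands in the log, whether granted or rejected. The same records can serve the security audits and time sheets mentioned below.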

Every secure area, including the data center exit, requires the employee (or visitor holding a temporary pass) to present the card to a reader in the exit area to open the door and leave. The resulting logs can also be used for:

- Security audits

- Time management: maintaining a log of when employees were present (automated time sheets)


3.2.4 Server Area Protection

3.2.4.1 Cages

Although most Datacenters have hard-walled rooms, a specific server area is sometimes surrounded with wire mesh fencing. This is called a cage. Such fencing is most commonly used to subdivide a large computer room area (with raised floors) and add physical security for selected servers and networking devices. Cages can even be created in a direct one-to-one relationship with your server zoning, such as zones for:

- Web servers protected by a DMZ, including firewall(s) and Intrusion Detection Systems (IDS)

- Separate zones for application and database servers

- Separate zones for security-sensitive servers, such as:

o Certification Authority

o Key Management Authority

o Key Generation Systems for security cards and other PKI functions

- Network and systems management servers, such as HP OpenView and CiscoWorks

Each cage can then have its own access control, with a security level matching the server group and its functions.

3.2.4.2 Locking Cabinets

An additional level of physical security is to ensure that all server, network device, HSM and network management system racks (cabinets) are lockable. These cabinets must be kept locked, with proper management control over their keys. Access to these keys must therefore be clearly defined, and the key-handling procedure must be tested in practice, especially for exceptional emergency conditions.

3.2.5 Closed-Circuit Television Coverage

Card reader logs can track who enters and leaves the Datacenter. For real-time surveillance of who enters your server environment, however, installing closed-circuit television is strongly recommended. Cameras should be placed at strategic locations outside and inside the Datacenter. They should be monitored by security personnel and recorded on an audio/video recording system. All these physical access control systems should be integrated with and complement each other.


3.2.6 Access Policies and Procedures

Each Datacenter needs a proper access policy that defines who is allowed to enter each of the defined security levels, and under what circumstances. This is usually done by job classification, which must cover every person who may need to be in these secure areas. A visitor access policy must also be defined. For example, the policy could state that a visitor (even one holding a temporary entry badge) may never enter, leave or walk around the premises without the presence of an authorized employee.

3.2.7 ISO 27001

We recommend implementing an overall security policy based on ISO 27001.

Information security is a business requirement for every organization today. These requirements are driven either by business needs or by regulation. Many organizations find it difficult to derive a framework for defining the requirements. ISO 27001, the Information Security Management System standard, provides a framework from which an organization can start its information security management initiative.

There are several reasons why an organization should implement the ISO 27001 standard, the primary one being business demand. ISO 27001 certification confirms that certain levels of protection are in place to protect the information and data handled.

ISO 27001 presents the requirements to implement and operate an Information Security Management System (ISMS). Below is an interpretation of the major requirements and deliverables of each phase of the ISMS implementation method established by ISO 27001.

Our methodology for assessing and managing information risks, and for developing information security policies and procedures, will be based on the ISO 27001:2005 international standard and best practices.


Phases involved in implementing ISO 27001

There are different ways of implementing ISO 27001, and the exact phases that apply to one organization may not apply to another. The following phases are a high-level overview and will be covered throughout the project phases. A unique implementation method might be produced for each organization, depending on its structure and goals.

1. Define the scope and boundaries of the ISMS.

2. Identify the organization's information security policies and procedures.

3. Define the risk assessment methodology and the criteria for accepting risks.

4. Identify information assets and assess the business impact of a loss of confidentiality, integrity or availability of those assets.

5. Identify and evaluate the risks:

o Identify threats and vulnerabilities related to the assets.

o Evaluate the impact and likelihood of these threats and vulnerabilities, and the controls currently in place.

o Estimate the level of risk based on the risk assessment methodology.

o Determine whether risks are acceptable or need treatment, based on the risk acceptance criteria.

6. Identify the options for treating the risks: accept, avoid, transfer, or reduce them by applying additional controls.

7. Select the ISO 27001 controls applicable for mitigating the identified risks.

8. Define how to measure the effectiveness of the selected controls or groups of controls, and how to calculate the residual risks.

9. Document the Statement of Applicability.

10. Prepare the risk treatment plan.

11. Implement and document the risk treatment plan. Perform security awareness training for the ISMS users.

12. Conduct an internal audit of the implemented ISMS to measure its effectiveness and, if needed, perform corrective and preventive actions.
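Steps 3 and 5 leave the risk assessment methodology to the organization. One common choice scores impact and likelihood on a 1-5 scale and multiplies them, as in the minimal Python sketch below. The scales, the acceptance threshold of 6 and the example risks are all hypothetical. ISO 27001 does not mandate this formula.

```python
from dataclasses import dataclass

ACCEPTANCE_THRESHOLD = 6  # hypothetical risk acceptance criterion (step 3)


@dataclass
class Risk:
    asset: str
    threat: str
    impact: int      # 1 (negligible) .. 5 (severe)
    likelihood: int  # 1 (rare) .. 5 (almost certain)

    def __post_init__(self):
        for value in (self.impact, self.likelihood):
            if not 1 <= value <= 5:
                raise ValueError("impact and likelihood must be between 1 and 5")

    @property
    def level(self) -> int:
        # Risk level = impact x likelihood (simple multiplicative matrix)
        return self.impact * self.likelihood


def evaluate(risks):
    """Split risks into accepted ones and ones needing treatment, worst first."""
    accepted = [r for r in risks if r.level <= ACCEPTANCE_THRESHOLD]
    to_treat = sorted((r for r in risks if r.level > ACCEPTANCE_THRESHOLD),
                      key=lambda r: r.level, reverse=True)
    return accepted, to_treat


if __name__ == "__main__":
    register = [
        Risk("HSM", "physical theft", impact=5, likelihood=2),
        Risk("CCTV recorder", "disk failure", impact=2, likelihood=3),
        Risk("Web servers", "denial of service", impact=4, likelihood=4),
    ]
    accepted, to_treat = evaluate(register)
    for r in to_treat:
        print(f"treat: {r.asset} ({r.threat}) level {r.level}")
```

The risks returned in `to_treat` would then go through step 6 (accept, avoid, transfer or reduce).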


3.2.8 CCTV

The CCTV implementation should be based on IP CCTV solutions that use existing or new network cabling, with the CCTV cameras configured as standard IP network devices.

In addition, Power over Ethernet (PoE) could be used to power the cameras.

For the computer room, CCTV cameras should be installed, as a minimum:

- In each corner of the main computer room

- Monitoring the entrance of the Communications Room

- Monitoring the door between the UPS room and the computer room

- Monitoring the entrance door to the computer room

- Monitoring the hallway to the computer room

- In the middle of the computer room on each side


3.2.9 Access Control

System Overview

- Fingerprint-based access control readers for entering and leaving the Computer Room, as a minimum

- Manual access desk with a sign-in/sign-out register in the corridor leading to the Computer Room entrance door

- Manual check-in and check-out using the register should be performed

- Visitor process:

o Visitors should NEVER be given access to the computer room without guidance by an authorized employee throughout their presence in the computer room

o Visitors must sign out when they leave

- Maintenance Engineer process:

o The engineer must sign in at the entrance desk

o The engineer is given a temporary maintenance and support access card

o The engineer uses the card to enter the computer room. Their presence in the room is then logged in the room presence system, so Security knows at all times who is in the computer room in case of a fire emergency, etc.

o The engineer uses the same card to exit the computer room, which clears the record of them being in the room for emergency purposes

o A special engineering card may be required to access the communications room, which requires a higher access control authority
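The room presence logic in the maintenance engineer process can be sketched as a small Python class. Badging in records presence, badging out with the same card clears it, and Security can list who is inside during a fire emergency. The class and method names are hypothetical. A real access control system would also persist these events and handle missed badge-outs.

```python
from datetime import datetime


class PresenceRegister:
    """Tracks who is currently inside one room from badge-in/badge-out events."""

    def __init__(self, room: str):
        self.room = room
        self._inside: dict[str, datetime] = {}  # card holder -> time of entry

    def badge_in(self, holder: str, when: datetime) -> None:
        self._inside[holder] = when

    def badge_out(self, holder: str) -> None:
        # Exiting with the same card clears the presence record
        self._inside.pop(holder, None)

    def occupants(self) -> list[str]:
        """Roll call for an emergency evacuation, e.g. a fire alarm."""
        return sorted(self._inside)


if __name__ == "__main__":
    room = PresenceRegister("Computer Room")
    room.badge_in("Engineer A", datetime(2024, 1, 8, 9, 0))
    room.badge_in("Operator B", datetime(2024, 1, 8, 9, 5))
    room.badge_out("Engineer A")
    print(room.occupants())  # ['Operator B']
```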

3.2.9.1 Access Control Levels

Only the limited staff who need to be present in the Computer Room, plus senior management, should hold an access card with the authority to enter the computer room. The Computer Room must be designated a high access control zone, so that normal personnel can never use their existing ID cards to enter it.


3.3. Cabling

3.3.1 Introduction

Basic principles of a network cabling infrastructure include:

- Creating a network cabling infrastructure

- Points of distribution

- Avoiding spaghetti

- Labeling and color coding

- Verification

3.3.1.1 Creating the Infrastructure

The connectivity requirements follow from the connection requirements of the devices. The most important element of the cabling infrastructure is VERY SIMPLE: labeling, and documenting that labeling in detail according to the TIA-606-A standard. Cabling must be based on the TIA-942, TIA-568-A and TIA-568-B standards, as well as the TIA-606-A labeling and documentation standard.

3.3.1.2 Points of Distribution

A Point of Distribution (POD) is a rack of devices that manages a number of RLUs. The following pages explain how this relates to the TIA-942 standard.

3.3.1.3 Avoiding Spaghetti

Cabling installations must always:

- Calculate proper cable lengths

- Apply standard labeling and document it in the TIA-606-A database

- Route cables according to the documented design

- Avoid messy cable routing

3.3.1.4 Labeling and Color Coding

Every component of the Data Center infrastructure is to be labeled with its own independent identifier, consistent with the overall scheme. For tracking the fiber, the most important elements of the labeling system are buildings, telecommunications rooms, fiber panels, port numbers, pedestal labels and, of course, the fiber itself.


These individual identifiers can be combined to create an overall, accurate picture of the cabling plant. Test reports will use a combination of these pieces to completely identify any piece of the cabling plant, where it is connected and the pathway it follows. This requires that every piece of equipment be labeled.

Fiber cable should be labeled on the outside jacket of the cable. Fiber panels should be labeled on the outside of the box. Individual modules or ports inside a fiber panel should be clearly labeled. Documentation located inside the fiber panel should clearly identify which fiber strands are connected to which bulkhead. Under no circumstances should a technician need to open the installer's side of an LIU to determine the identifier for a bulkhead or which fiber is attached to that bulkhead.

3.3.1.5 Reading a Name

A name is constructed by combining the pertinent labels from the appropriate infrastructure elements. These names will be used in documentation to track each component of the infrastructure. Below is an example of a single mode fiber label.

For composite fiber cables, the identifier would be shown as below.


The order of the termination points in the label is decided alphanumerically, not by physical location.

Numeric identifiers for cables and cable strands are used solely to distinguish a cable from other cables that share the same characteristics. A cable should only be identified as 0047-1A/0193-1A, FMM2 if a 0047-1A/0193-1A, FMM1 already exists.
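Two rules above are mechanical enough to automate: endpoints are ordered alphanumerically, and a higher cable number is used only once the lower one exists. The minimal Python sketch below applies both. It assumes zero-padded four-digit building numbers, so that a plain string sort matches alphanumeric order. The function names are illustrative.

```python
def cable_label(end1: str, end2: str, fiber_type: str, cable_no: int) -> str:
    """Build a label such as '0047-1A/0193-1A, FMM1'.

    end1/end2 are 'BBBB-RR' endpoints (building-telecommunications room);
    they are ordered alphanumerically, never by physical location.
    """
    if fiber_type not in ("MM", "SM", "CM"):
        raise ValueError(f"unknown fiber type: {fiber_type!r}")
    first, second = sorted((end1, end2))
    return f"{first}/{second}, F{fiber_type}{cable_no}"


def next_cable_no(existing: set[str], end1: str, end2: str, fiber_type: str) -> int:
    """Lowest unused cable number: FMM2 is only issued once FMM1 exists."""
    n = 1
    while cable_label(end1, end2, fiber_type, n) in existing:
        n += 1
    return n


if __name__ == "__main__":
    installed = {"0047-1A/0193-1A, FMM1"}
    n = next_cable_no(installed, "0193-1A", "0047-1A", "MM")
    print(cable_label("0193-1A", "0047-1A", "MM", n))  # 0047-1A/0193-1A, FMM2
```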

3.3.1.6 Examples

Fiber examples:

0047-1A/0193-1A, FMM1

Cable terminates in Building 047, Telecommunications Room 1A

Cable terminates in Building 193, Telecommunications Room 1A

This is the first multimode cable connecting these rooms in these buildings

0047-1A/0193-1A, FSM1.1

Cable terminates in Building 047, Telecommunications Room 1A

Cable terminates in Building 193, Telecommunications Room 1A

This is the first strand in the first single mode cable connecting these rooms in these

buildings

0047-1A/0193-1A, FCM1

Cable terminates in Building 047, Telecommunications Room 1A


Cable terminates in Building 193, Telecommunications Room 1A

This is the first fiber composite cable connecting these rooms in these buildings

0047-1A/0193-1A, FCM1.SM1

Cable terminates in Building 047, Telecommunications Room 1A

Cable terminates in Building 193, Telecommunications Room 1A

This is the first strand of single mode fiber in the first composite cable connecting

these rooms in these buildings

Hardware examples:

0047-1A-1FPL1

Fiber panel is located in Building 047, Telecommunications Room 1A

Fiber panel is mounted in rack number 1.

This is the first fiber panel, in the first rack, in Telco Room 1A

0047-1A-WFPL1.1/1

Fiber panel is located in Building 047, Telecommunications Room 1A

Fiber panel is mounted on the wall.

This is the first bulkhead position in the first module of this fiber panel

PCB001-WFPL1.2/4

Fiber panel is located in Pathway Cabinet #1

Fiber panel is mounted on the wall.

This is the fourth bulkhead position in the second module in this fiber panel.
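The fiber cable examples above can be decoded with a regular expression. This Python sketch is derived only from the examples in this section: FMM/FSM/FCM cables, with an optional strand suffix such as .1 or .SM1. The pattern and field names are assumptions. Hardware labels such as 0047-1A-1FPL1 are deliberately out of scope.

```python
import re
from dataclasses import dataclass
from typing import Optional

FIBER_TYPES = {"MM": "multimode", "SM": "single mode", "CM": "composite"}

# Pattern inferred from the examples in this section only (an assumption)
LABEL_RE = re.compile(
    r"^(?P<b1>\d{4})-(?P<r1>[0-9A-Z]+)/(?P<b2>\d{4})-(?P<r2>[0-9A-Z]+), "
    r"F(?P<ftype>MM|SM|CM)(?P<cable>\d+)"
    r"(?:\.(?P<stype>MM|SM)?(?P<strand>\d+))?$"
)


@dataclass
class FiberLabel:
    end_a: tuple[str, str]      # (building, telecommunications room)
    end_b: tuple[str, str]
    fiber_type: str             # cable classification
    cable_no: int
    strand_type: Optional[str]  # only set for strands of a composite cable
    strand_no: Optional[int]    # None when the label names the whole cable


def parse_fiber_label(text: str) -> FiberLabel:
    m = LABEL_RE.match(text.strip())
    if m is None:
        raise ValueError(f"not a fiber cable label: {text!r}")
    stype, strand = m.group("stype"), m.group("strand")
    return FiberLabel(
        end_a=(m.group("b1"), m.group("r1")),
        end_b=(m.group("b2"), m.group("r2")),
        fiber_type=FIBER_TYPES[m.group("ftype")],
        cable_no=int(m.group("cable")),
        strand_type=FIBER_TYPES[stype] if stype else None,
        strand_no=int(strand) if strand else None,
    )


if __name__ == "__main__":
    for label in ("0047-1A/0193-1A, FMM1", "0047-1A/0193-1A, FSM1.1",
                  "0047-1A/0193-1A, FCM1.SM1"):
        print(parse_fiber_label(label))
```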

3.3.1.7 The Standard in Implementation

Implementing a new labeling scheme is going to be a long, multi-step process. The first and most important step is to make sure that all new installations are labeled in accordance with the new scheme, as laid out above.


3.3.2 How to Label

3.3.2.1 Fiber Optic cable

1) The fiber optic cable should be labeled on the outside jacket of the cable, within 8 inches of the breakout point for the individual strands. This label follows the conventions outlined above; a typical label is 0147-1A/0147-3A, FSM1.

2) When deciding which end of the fiber to denote first in the label, put the lower alphanumeric identifier first. For example, 0147-1A/0347-1A, FSM1 would be proper and 0347-1A/0147-1A, FSM1 would not.

3) Individual fiber strands should be inserted into any fiber panel following the standard color code for fiber, with blue first, and so on. The color code should be followed so that it can be read from left to right and from top to bottom for each module, as viewed from the front of the fiber panel. In the documentation, strand numbers begin at 1 and ascend in keeping with the color code, i.e. blue=1, orange=2, green=3, and so on.

Blue-Orange-Green-Brown-Slate-White-Red-Black-Yellow-Violet-Rose-Aqua
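The strand-number-to-color rule above (blue=1, orange=2, ...) is a simple lookup, sketched here in Python. The helper names are illustrative. Strands beyond 12 are out of scope for this sketch.

```python
# Standard 12-color fiber sequence as listed above; strand n uses color n
FIBER_COLORS = ("Blue", "Orange", "Green", "Brown", "Slate", "White",
                "Red", "Black", "Yellow", "Violet", "Rose", "Aqua")


def strand_color(strand_no: int) -> str:
    """Color for strand 1-12 (blue=1, orange=2, green=3, ...)."""
    if not 1 <= strand_no <= len(FIBER_COLORS):
        raise ValueError("this sketch only covers strands 1-12")
    return FIBER_COLORS[strand_no - 1]


def strand_number(color: str) -> int:
    """Inverse lookup, case-insensitive: 'green' -> 3."""
    return FIBER_COLORS.index(color.strip().capitalize()) + 1


if __name__ == "__main__":
    # Order in which a 6-port module is filled, left to right
    print([strand_color(n) for n in range(1, 7)])
```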

3.3.2.2 A Fiber Panel

Outside

1) A fiber panel should be assigned an independent identifier and be labeled with it in the upper right-hand corner of the front of the LIU. Appropriate identifiers include FPL1, FPL2, and so on.

2) A fiber panel should carry a list of all fiber cables held in the box itself. Often this will be just one fiber cable, but it could be many more. The list should be preceded by an introduction such as 'This FPL holds:' to prevent confusion between the fiber name and the recorded name of the fiber panel. The list should be in the upper left-hand corner of the fiber panel.

3) If both ends of a particular fiber cable terminate in the same room, the name of that cable on the front of the fiber panel should be followed by an additional label. This label specifies the rack and fiber panel numbers at both ends of the cable. For example, 0019-2A/0019-2A, FMM1 followed by WFPL6/1FPL1 indicates that one end of the cable terminates in a wall-mounted fiber panel labeled FPL6, and the other in a rack-mounted fiber panel labeled FPL1 in rack 1. This additional label is not part of the cable name for record purposes; it exists solely to assist technicians in the field.

Inside

1) Fibers should be installed in each module of a fiber panel from left to right and top to bottom, as you look at the face of the bulkheads, in accordance with the standard color code for fiber installation.

2) Each fiber termination should be labeled on the boot with a number that corresponds to its position in the color code of the cable. Numbers begin at 1 and ascend, with duplicate numbers used for different types of fiber strands in one cable. For example, a composite fiber cable will have multiple strands designated 1, corresponding to the first MM strand and the first SM strand. Numbers do not restart for different binder groups, only for different classifications of fiber.

3) Each bulkhead will have an independent identifier. In a fiber panel that has been subdivided into modules, label the modules with numbers beginning with 1 and ascending. The individual bulkheads need not be labeled; they are identified by numbers that begin with 1 and are read from left to right or top to bottom, according to the orientation of the module. In fiber panels that have not been subdivided, each individual bulkhead must be identified with a number. If the fiber panel does not come preprinted, the installer is responsible for labeling the bulkheads.


4) A documentation page will be supplied inside the panel, marked with which fiber strand matches which bulkhead. The installer may create a simple spreadsheet similar to the one below. In this case, the labeling should make clear the identity of each bulkhead and the fiber strand connected to it. At this time, copies of this spreadsheet should be sent to Network Services.

Fiber Panel # 0047-1A-WFPL1

Module/Port   Fiber Identifier
1/1           0047-1A/0149-3A, FMM1.1
1/2           0047-1A/0149-3A, FMM1.2
1/3           0047-1A/0149-3A, FMM1.3
1/4           0047-1A/0149-3A, FMM1.4
1/5           0047-1A/0149-3A, FMM1.5
1/6           0047-1A/0149-3A, FMM1.6
2/1           0047-1A/0149-3A, FMM1.7
2/2           0047-1A/0149-3A, FMM1.8
2/3           0047-1A/0149-3A, FMM1.9
2/4           0047-1A/0149-3A, FMM1.10
2/5           0047-1A/0149-3A, FMM1.11
2/6           0047-1A/0149-3A, FMM1.12

This is the first wall-mounted fiber panel in Telco Room 1A of Building #0047. Bulkhead #1 holds the first strand of the first fiber cable between Telco Room 1A of Building #0047 and Telco Room 3A of Building #0149.

5) At no time should the labeling inside a fiber panel require a technician or engineer to open the installer's side of the fiber panel to retrieve labeling information. Bulkhead or module positions should either be apparent from a grid worksheet or be labeled explicitly by the installer.
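The documentation sheet in item 4 can be generated rather than typed by hand. This Python sketch follows the example panel: six bulkheads per module, filled left to right in color-code order, with a single cable per panel. The function name and parameters are illustrative.

```python
def panel_sheet(panel_id: str, cable: str, strands: int, ports_per_module: int = 6) -> str:
    """Render a 'Module/Port -> Fiber Identifier' sheet for one cable in one panel."""
    rows = [f"Fiber Panel # {panel_id}", f"{'Module/Port':<12} Fiber Identifier"]
    for strand in range(1, strands + 1):
        module = (strand - 1) // ports_per_module + 1  # modules numbered from 1
        port = (strand - 1) % ports_per_module + 1     # bulkheads numbered from 1 within a module
        position = f"{module}/{port}"
        rows.append(f"{position:<12} {cable}.{strand}")
    return "\n".join(rows)


if __name__ == "__main__":
    print(panel_sheet("0047-1A-WFPL1", "0047-1A/0149-3A, FMM1", strands=12))
```

Generating the sheet from the same inputs used for labeling keeps the panel documentation and the copy sent to Network Services consistent.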

3.3.2.3 A Communications Cabinet

- Communications cabinets are to be labeled in the standard form PCB###. For example, cabinet #4 would be PCB004.

- Cabinets should be labeled outside, on the most visible side.

- Cabinets should be labeled inside as well. The inside label is applied to the interior of the fiber-side door with the locking assembly.


3.3.2.4 A Telecommunications Room

Telecommunications rooms should be labeled with the floor they are on and a letter designation, to prevent confusion with other Telco rooms on the same floor. For example, 1A designates the first-floor telecommunications room with the letter designation A.

Unless previously labeled, Telco rooms should be labeled on the interior of the doorjamb near the property decal. Final labeling should consist of a plastic sign on the outside door of the Telecommunications Room. This sign should state the use of the room as a Telecommunications Room and display the appropriate identifier for that specific room, for example Telecommunications Room 1A.

3.3.2.5 A Telecommunications Rack

Telecommunications racks should be numbered beginning with 1 and ascending as more racks are added to the room. The rack should be clearly labeled along its top crossbar. For the purposes of this labeling standard, a telecommunications rack is any structure capable of holding telecommunications terminations and electronic hardware. This includes, but is not limited to, 7 ft free-standing racks, free-standing enclosures, 3-4 ft wall-mounted fixed racks, and wall-mounted enclosures.

3.3.2.6 Conduit

An installed conduit should be labeled with its point of origin, its point of termination, and a unique identifier that differentiates it from other conduits sharing the same pathway. This label follows the same guidelines as discussed above. For example, 0147-1A/0347-1A, PCO1 would designate the first conduit running between Building 147, Telecommunications Room 1A and Building 347, Telecommunications Room 1A.

Labels should be affixed to both ends of the conduit, within 6 inches of the termination at each end.
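The hardware identifiers in this section follow fixed patterns. The Python sketch below provides helpers for cabinet (PCB###), fiber panel and conduit labels, using the panel examples given earlier (0047-1A-1FPL1, PCB001-WFPL1). It assumes conduit endpoints are ordered the same way as fiber cable endpoints. The helper names are illustrative.

```python
from typing import Union


def cabinet_label(number: int) -> str:
    """Communications cabinet label: cabinet #4 -> 'PCB004'."""
    if not 1 <= number <= 999:
        raise ValueError("PCB### supports cabinet numbers 1-999")
    return f"PCB{number:03d}"


def panel_label(location: str, mount: Union[int, str], panel_no: int) -> str:
    """Fiber panel label; mount is a rack number or 'W' for wall-mounted.

    e.g. ('0047-1A', 1, 1) -> '0047-1A-1FPL1', ('PCB001', 'W', 1) -> 'PCB001-WFPL1'
    """
    return f"{location}-{mount}FPL{panel_no}"


def conduit_label(end1: str, end2: str, conduit_no: int) -> str:
    """Conduit label, e.g. '0147-1A/0347-1A, PCO1' (endpoints sorted alphanumerically)."""
    first, second = sorted((end1, end2))
    return f"{first}/{second}, PCO{conduit_no}"


if __name__ == "__main__":
    print(cabinet_label(4))
    print(panel_label(cabinet_label(1), "W", 1))
    print(conduit_label("0347-1A", "0147-1A", 1))
```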

3.3.3 Verification

During implementation, each and every patch panel port MUST be verified and certified by the installer as part of the installation contract. Cable testing equipment and additional tools must be used to ensure proper cabling installation.

3.3.4 Network Cabling Infrastructure

The recommended network cabling structure is based on overhead cable trays, which reduce cabling spaghetti under the raised floor. This also prevents unnecessary obstructions to the cold air flow under the raised floor and avoids complications with the water detection cable. Because chilled water piping runs under the raised floor, we also recommend running power cabling in separate overhead trays.


When deploying large volumes of servers inside the data center, it is extremely important that the design footprint is scalable.

However, access models vary between networks and can often be extremely complex to design. The integrated network topologies discussed in this white paper take a modular, platform-based approach in order to scale up or down as required within a cabinet or room.

It is assumed that all compute resources incorporate resilient network, power and storage resources. This assumption translates to multiple LAN, SAN and power connections within the physical layer infrastructure. One way to simplify the design and simultaneously incorporate a scalable layout is to divide the raised floor space into modular, easily duplicated sub-areas.

The logical architecture is divided into three discrete layers, and the physical infrastructure is designed and divided into manageable sub-areas called Pods. A typical data center is thus divided into multiple zones, with Pods distributed throughout the room. Core and aggregation layer switches are located in each zone for redundancy, and access layer switches are located in each Pod to support the compute resources within the Pod.

3.3.5 Implementation of Pods


3.3.6 Top of Rack (ToR) Model

The defining characteristic of a ToR model is an access layer switch in each server cabinet, so the physical layer solution must be designed to support the switching hardware and access-layer connections. One cabling benefit of deploying access layer switches in each server cabinet is the ability to link to the aggregation layer using long-reach small form factor fiber connectivity. Fiber eliminates the reach and pathway challenges of copper connectivity, allowing greater flexibility in selecting the physical location of network equipment.

The figure below shows a typical logical ToR network topology. It illustrates the various redundant links and the distribution of connectivity between access and aggregation switches. This example uses the Cisco Nexus 7010 for the aggregation layer and a Cisco Catalyst 4948 for the access layer. The Cisco Catalyst 4948 provides 10GbE links routed out of the cabinet back to the aggregation layer, and 1GbE links for server access connections within the cabinet.

Once the logical topology has been defined, the next step is to map a physical layer solution directly to that topology. With a ToR model, it is important to understand the number of network connections needed for each server resource. The basic rule is that any server deployment requiring more than 48 links needs an additional access layer switch in each cabinet to support the higher link volume. For example, if thirty (30) 1 RU servers that each require three copper and two fiber connections are deployed within a 45 RU cabinet, an additional access layer switch is needed for each cabinet. The figure below shows a typical rear view of a ToR design, including cabinet connectivity requirements at the aggregation and access layers.
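The 48-link rule above amounts to a ceiling division, as the minimal Python sketch below shows. It assumes that only the copper server links terminate on the 48-port ToR access switch, with fiber links (for example to the SAN) cabled separately. Under that assumption, the example's 30 servers with three copper links each need 90 ports, i.e. two access switches per cabinet, which matches the "additional access layer switch" above. The function name is illustrative.

```python
import math

PORTS_PER_ACCESS_SWITCH = 48  # the 48-link threshold from the rule above


def access_switches_per_cabinet(servers: int, copper_links_per_server: int,
                                ports_per_switch: int = PORTS_PER_ACCESS_SWITCH) -> int:
    """ToR access switches needed for one cabinet's server links.

    Assumption: only copper server links land on the ToR switch; fiber links
    (e.g. to the SAN) are cabled separately and are not counted here.
    """
    links = servers * copper_links_per_server
    return max(1, math.ceil(links / ports_per_switch))


if __name__ == "__main__":
    # Example from the text: thirty 1 RU servers with three copper links each
    print(access_switches_per_cabinet(30, 3))  # 90 links -> 2 switches
```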


3.3.7 End of Row (EoR) Model

In an EoR model, server cabinets contain patch fields but not access switches. In this model, the total number of servers per cabinet and I/Os per server determine the number of switches used in each Pod, which in turn drives the physical layer design decisions.

The typical EoR Pod contains two Cisco Nexus or Cisco Catalyst switches for redundancy. The length of each row within the Pod is determined by the density of the network switching equipment, as well as by the distance from the server to the switch.

For example, suppose each server cabinet in the row uses 48 connections and the switch has a capacity of 336 connections. The row can then support up to seven server cabinets (336 / 48 = 7) with complete network redundancy, as long as all seven cabinets are within the maximum cable length to the switching equipment.
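The row sizing above combines a port limit with a cable-reach limit. In this Python sketch, both limits are checked together. It assumes each cabinet's connections are mirrored to both switches of the redundant pair, so a single switch's port count bounds the row. The cabinet distances and the 90 m cable budget in the usage example are hypothetical values, not figures from this document.

```python
def eor_row_capacity(switch_ports: int, connections_per_cabinet: int,
                     cabinet_distances_m: list[float], max_cable_m: float) -> int:
    """Cabinets that one redundant EoR switch pair can serve in a row.

    The port limit is switch_ports // connections_per_cabinet; only cabinets
    within max_cable_m of the switching equipment are counted as servable.
    """
    port_limit = switch_ports // connections_per_cabinet
    within_reach = sum(1 for d in cabinet_distances_m if d <= max_cable_m)
    return min(port_limit, within_reach)


if __name__ == "__main__":
    # Hypothetical row: ten cabinets, 0.6 m apart, with a 90 m cable budget
    distances = [0.6 * i for i in range(1, 11)]
    print(eor_row_capacity(336, 48, distances, max_cable_m=90.0))  # 7
```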

Top View of EoR Cabinet


3.3.8 Point of Distribution (POD)

One way to simplify the design and simultaneously incorporate a scalable layout is to divide the raised floor space into modular, easily duplicated sub-areas. The figure below illustrates the modular building blocks used to design scalability into the network architecture at both OSI Layers 1 and 2. The logical architecture is divided into three discrete layers, and the physical infrastructure is designed and divided into manageable sub-areas called Pods.

This example shows a typical data center with two zones and 20 Pods distributed throughout the room. Core and aggregation layer switches are located in each zone for redundancy, and access layer switches are located in each Pod to support the compute resources within the Pod.


3.4. Fire detection and suppression

3.4.1 Introduction

Several steps must be taken to avoid fires, such as:

- No smoking

- No combustible materials

- Always check the HVAC reheat coils

- Check the sprinkler/FM200 fire suppression system frequently

- Preserve the data center cocoon: maintain the secure data center perimeter

- Ensure a disaster response plan is in place in case the worst happens

- Provide easy access to fire extinguishers

The first line of fire defense and containment is the building structure itself. The rooms and storage rooms of the data center must be isolated by fire-resistant walls. The floor and ceiling must be constructed of noncombustible or limited-combustible material. In addition, the HVAC system must be dedicated to the data center only.

3.4.1.1 Fire Detection Systems

The early warning fire detection system must have the following features:

Must be a heat detection type system

Installed and maintained in accordance with NFPA 72E (NFPA 2001)

Each installation should be engineered for the specific area it must protect

Some detection must be provided under the raised floor

Because data centers are noisy, visual alerts must be provided

3.4.1.2 Fire Suppression Systems

FM200 is the recommended suppression system currently available. FM200 uses the gas heptafluoropropane (HFC-227ea), which disperses quickly around the equipment. It works by removing heat energy from the fire to the point where the combustion reaction cannot be sustained.

It acts quickly, is safe for people, does not damage hardware or electrical circuits, and requires no post-discharge cleanup. With FM200, a data center can be back in business almost immediately after a fire.

The data center will be protected by a gaseous fire suppression system using FM200. FM200 works by physically cooling the fire at a molecular level and is safe for use around operating electronic devices and in human-occupied areas. Fire detection in the data center will use cross-zoned photoelectric and ionization spot detectors. Additionally, High Sensitivity Smoke Detection (HSSD) will be used for the earliest possible detection of combustion. The fire detection system will be integrated into the IP network. This allows the existing infrastructure to be used instead of running dedicated lines, and enables remote monitoring and control. The remainder of the data center will be protected to local code standards using hand-held fire extinguishers as applicable.
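Cross-zoning means that a single detection zone can raise an alarm but cannot release the agent on its own; release requires confirmation from a second, independent zone. The sketch below assumes the photoelectric and ionization detectors form two separate zones. The zone names, detector IDs, and three-state model are hypothetical simplifications. A real releasing panel adds pre-discharge delays, abort handling, and circuit supervision, none of which is shown here.

```python
from enum import Enum


class PanelState(Enum):
    NORMAL = "normal"
    ALARM = "alarm"        # one zone in alarm: sound alarms, do not discharge
    RELEASE = "release"    # two or more zones in alarm: start the release sequence


def evaluate(active_detectors: dict[str, set[str]]) -> PanelState:
    """Decide the panel state from the detectors currently in alarm.

    active_detectors maps a zone name (for example a photoelectric loop and an
    ionization loop) to the IDs of that zone's detectors that are in alarm.
    However many detectors trip within one zone, the result is only ALARM;
    RELEASE requires a second zone to confirm.
    """
    zones_in_alarm = [zone for zone, ids in active_detectors.items() if ids]
    if len(zones_in_alarm) >= 2:
        return PanelState.RELEASE
    if len(zones_in_alarm) == 1:
        return PanelState.ALARM
    return PanelState.NORMAL


print(evaluate({"photoelectric": {"P-03", "P-04"}, "ionization": set()}))  # PanelState.ALARM
print(evaluate({"photoelectric": {"P-03"}, "ionization": {"I-07"}}))       # PanelState.RELEASE
```

Requiring two different zones, rather than two detectors anywhere, limits spurious discharges caused by a single faulty detector or loop.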

3.4.1.3 Manual Fire Suppression

Manual means of fire suppression must always be on hand in case the automatic systems fail. The following backup systems must be available:

Portable Fire Extinguishers

o Portable extinguishers must be placed at strategic locations throughout the data center. They should be unobstructed and clearly marked. Tile lifters must also be placed at all locations so that portable fire extinguishers can be used under the raised floor when needed.

Manual Pull Stations

o Manual pull stations must be installed at strategic points in the data center

room. In areas where gas suppression systems are used, there must be a

means of manual abort.


3.4.2 Detailed Information

The Chemetron Fire Systems Gamma Series systems are automatic suppression systems that use the FM-200 chemical agent and consist of four basic components and their associated accessories:

FM-200 Components

Control Panels

Detection and Alarm Devices

Completer Kits

3.4.2.1 Features

The FM-200 components consist of agent containers, container supports (racks),

and discharge nozzles.

The control panel is the brain of the system and is used to monitor the detection devices and accessories.

The detection and alarm devices and accessories are the external devices that act as the eyes and voice of the system, giving audible or visual signals.

The completer kits consist of warning signs, hoses, connection fittings, pressure

gauges or solenoid valves, and the actuator required to operate the cylinder valve.

The system and its components are agency-tested for total flooding applications and should be used in accordance with the guidelines of National Fire Protection Association standard NFPA 2001. A total flooding application can be defined as injecting FM-200 into an enclosure or volume that has the structural integrity to retain the agent during and after discharge.

The design of such a system requires that the FM-200 chemical agent be discharged

from its container within 10 seconds and be thoroughly mixed throughout the

protected volume, reaching a minimum concentration level of 6.25%, but not

exceeding 9% in normally occupied spaces.
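The discharge and concentration limits above can be expressed as a simple design check. This is a minimal sketch: the function name and the idea of returning a list of findings are illustrative assumptions, and the numeric limits are the ones stated in this paper. It does not substitute for NFPA 2001 or the supplier's listing.

```python
def check_total_flooding_design(discharge_time_s: float,
                                design_concentration_pct: float,
                                normally_occupied: bool) -> list[str]:
    """Return violations of the limits stated in the text (an empty list means OK).

    Hypothetical helper using the paper's figures: 10 s maximum discharge time,
    6.25 % minimum concentration, 9 % maximum in normally occupied spaces.
    """
    findings = []
    if discharge_time_s > 10.0:
        findings.append(f"discharge time {discharge_time_s} s exceeds 10 s")
    if design_concentration_pct < 6.25:
        findings.append(f"concentration {design_concentration_pct}% is below 6.25%")
    if normally_occupied and design_concentration_pct > 9.0:
        findings.append(
            f"concentration {design_concentration_pct}% exceeds 9% for an occupied space")
    return findings


print(check_total_flooding_design(9.0, 7.44, normally_occupied=True))   # []
print(len(check_total_flooding_design(12.0, 9.5, normally_occupied=True)))  # 2
```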

FM-200 is a halocarbon agent accepted as an alternative to Halon for total flooding fire suppression systems. After receiving the fire signal, the system discharges the full FM-200 charge from the cylinders within 10 seconds, filling the space uniformly at the design concentration. The agent is then retained at its design concentration in the space for a period called the 'hold time' to extinguish the fire.


After the hold time, once the fire is extinguished, exhaust fans remove the agent from the space before any inspection is performed. The system is designed in accordance with NFPA 2001, "Standard on Clean Agent Fire Extinguishing Systems."

FM-200 system design includes determining the agent quantity, the piping layout, and the pressure drop through the piping and accessories, as well as fixing the locations and number of discharge nozzles to distribute the agent uniformly throughout the space. It also includes determining the fill density of the agent cylinders needed to overcome the system pressure drop, from which the number of cylinders follows.

Of these, the agent quantity required for total flooding of the space is determined independently, based on the design concentration necessary for the type of fire to be extinguished, the hold time needed to extinguish the fire, an additional quantity to compensate for leakage, and similar factors.
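NFPA 2001 expresses the total flooding quantity for halocarbon agents as W = (V / S) × C / (100 − C). Here V is the protected volume, C is the design concentration in percent by volume, and S is the specific vapor volume of the agent at the minimum anticipated temperature, S = k1 + k2·T. The sketch below uses the commonly published SI constants for HFC-227ea (k1 = 0.1269, k2 = 0.000513, T in °C, S in m³/kg); verify them against the edition of NFPA 2001 and the supplier data that apply to the project. The leakage allowance is a hypothetical stand-in for the leakage quantity mentioned above. Hold time is not modeled.

```python
def specific_vapor_volume(temp_c: float,
                          k1: float = 0.1269,
                          k2: float = 0.000513) -> float:
    """Specific vapor volume S (m^3/kg) of HFC-227ea at temp_c, S = k1 + k2 * T."""
    return k1 + k2 * temp_c


def agent_mass_kg(volume_m3: float,
                  concentration_pct: float,
                  min_temp_c: float,
                  leakage_allowance: float = 0.0) -> float:
    """Total flooding quantity W = (V / S) * C / (100 - C), plus a leakage margin.

    leakage_allowance is a hypothetical fractional surcharge (0.05 means +5 %)
    standing in for the leakage quantity; it is not an NFPA 2001 parameter.
    """
    s = specific_vapor_volume(min_temp_c)
    w = (volume_m3 / s) * concentration_pct / (100.0 - concentration_pct)
    return w * (1.0 + leakage_allowance)


# Hypothetical 500 m^3 room at 20 degC with a 7 % design concentration.
print(f"{agent_mass_kg(500.0, 7.0, 20.0):.1f} kg")  # 274.4 kg
```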

Preliminary pipe sizing, pipe routing, and nozzle locations are worked out by the owner or the engineer in coordination with the other facilities in the space. The design is then finalized by the agent supplier's authorized system designer, using pressure-drop software for two-phase flow of the agent.

To overcome the system pressure drop and establish the required pressure at the nozzles, the authorized designer determines the agent fill density in the cylinders. The designer then finalizes the number of cylinders based on that fill density and the supplier's standard cylinder size.
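Once the agent mass and fill density are fixed, the cylinder count is a rounding-up step. In this sketch the fill density of 1000 kg/m³ and the 0.0675 m³ cylinder are hypothetical inputs, not supplier data; in practice both come from the authorized designer's pressure-drop calculation and the supplier's catalog.

```python
import math


def cylinder_plan(agent_kg: float,
                  fill_density_kg_m3: float,
                  cylinder_volume_m3: float) -> tuple[int, float]:
    """Return (number of cylinders, agent charge per cylinder in kg).

    fill_density_kg_m3 is the maximum fill density chosen to overcome the
    piping pressure drop; cylinder_volume_m3 is the standard cylinder size.
    """
    capacity_per_cylinder = fill_density_kg_m3 * cylinder_volume_m3
    count = math.ceil(agent_kg / capacity_per_cylinder)
    # Spread the agent evenly so each cylinder stays at or below the chosen fill density.
    return count, agent_kg / count


count, charge = cylinder_plan(274.4, fill_density_kg_m3=1000.0, cylinder_volume_m3=0.0675)
print(f"{count} cylinders x {charge:.2f} kg")  # 5 cylinders x 54.88 kg
```

Filling every cylinder equally, rather than filling four to capacity and the fifth partially, is one design choice among several and is assumed here for simplicity.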

The areas to be protected are identified from the fire risk analysis of the plant and the applicable codes (such as NFPA). The requirements are driven by the functional criticality of the protected system, the potential loss, the fire insurance premium, and similar factors.

The following design information is based on a typical case of protecting a power station with an FM-200 total suppression system. Design code: NFPA 2001, "Standard on Clean Agent Fire Extinguishing Systems," is the governing code for designing the system, and NFPA 72, "National Fire Alarm Code," governs the fire alarm system, which is an important part of the clean agent total suppression system.


Agent concentration: Since the FM-200 agent is the most expensive item in the system, careful analysis is required before fixing the design concentration and the total quantity of agent.

Various guidelines are available for the design concentration of the agent, such as:

Based on 120% of the cup burner value verified by listing/approval tests, the minimum design concentration (% v/v) of FM-200 is 7% (refer to Table 4-7.5, "Weight and Storage Volume Equivalent Data for New Technology Halocarbon Gaseous Alternatives," in the SFPE Handbook of Fire Protection Engineering).

The same design concentration of 7% is accepted by Factory Mutual (FM).

Underwriters Laboratories (UL), however, recommends a design concentration of 7.44%.

To satisfy both FM and UL, it seems prudent to use a design concentration of 7.44% by volume. The FM-200 supplier's authorized agent normally recommends 7% as the design concentration, based on their experience with the type of fire anticipated in the protected areas. Increasing the agent concentration from 7% to 7.44% increases the cost of the agent. If possible, the recommendation of the AHJ (Authority Having Jurisdiction) should be obtained before fixing the agent design concentration.
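The cost impact of moving from 7% to 7.44% can be estimated before any supplier quotation. Assuming the NFPA 2001 total flooding relation W = (V / S) × C / (100 − C), with the room volume and design temperature unchanged, the agent mass scales with C / (100 − C) alone. This sketch is illustrative: the function name is hypothetical, and agent cost is assumed to scale linearly with mass, which may not hold when the extra agent forces an additional cylinder.

```python
def agent_quantity_ratio(new_pct: float, old_pct: float) -> float:
    """Ratio of agent mass at new_pct to agent mass at old_pct.

    From W = (V / S) * C / (100 - C), V and S cancel for the same room and
    temperature, leaving only the concentration terms.
    """
    return (new_pct / (100.0 - new_pct)) / (old_pct / (100.0 - old_pct))


ratio = agent_quantity_ratio(7.44, 7.0)
print(f"{(ratio - 1) * 100:.1f}% more agent")  # 6.8% more agent
```

In other words, meeting the UL figure instead of the FM figure requires roughly 7% more agent for the same room.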

The maximum FM-200 concentration is limited by NFPA 2001 because of the toxicological and physical effects on people.

The recommended FM-200 installation will include two large gas containers placed on the right wall next to one of the main pillars, and approximately 300 nozzles distributed over the Computer Room floor space as well as the Communications Room.

Integration with HP OpenView is achieved through the Chemetron detection and alarm devices, which are viewed and monitored in HP OpenView as SNMP devices.


3.5. HVAC

3.5.1 Introduction

HVAC and other environmental controls are essential for a data center. Computer hardware requires a balanced and appropriate environment for continuous system operation.

Temperatures and relative humidity levels outside the specified operating ranges, or extreme swings in conditions, can lead to unreliable components or system failures. Control of these environmental factors also affects electrostatic discharge and the corrosion of system components.

This introduction covers:

Reasons for control

Temperature Requirements

Relative Humidity

Electrostatic Discharge

3.5.1.1 Reasons for control

Computer rooms require precise and adaptable temperature control for the following reasons:

Need for cooling

o Data centers have a dense heat load

Cooling is needed where required

o The heat load varies across the area of equipment placement

Precise cooling is needed

o Data center cooling requires a higher sensible heat ratio than office areas: precision systems deliver 85 to 100% sensible cooling, while normal comfort systems deliver much less