SynthAssist: An Audio Synthesizer Programmed with Vocal Imitation

Mark Cartwright
Northwestern University, EECS Department
mcartwright@u.northwestern.edu

Bryan Pardo
Northwestern University, EECS Department
pardo@northwestern.edu

ABSTRACT

While programming an audio synthesizer can be difficult, if

a user has a general idea of the sound they are trying to

program, they may be able to imitate it with their voice. In

this technical demonstration, we demonstrate SynthAssist, a

system that allows the user to program an audio synthesizer

using vocal imitation and interactive feedback. This system

treats synthesizer programming as an audio information retrieval task. To account for the limitations of the human

voice, it compares vocal imitations to synthesizer sounds by

using both absolute and relative temporal shapes of relevant

audio features, and it refines the query and feature weights

using relevance feedback.

Categories and Subject Descriptors

H.5.5. [Sound and Music Computing]: Systems; H.5.2.

[User Interfaces]: Interaction styles; H.5.1. [Multimedia

Information Systems]: Audio input/output

General Terms

Human Factors

Keywords

Audio; music; synthesis; vocal imitation

1. INTRODUCTION

As software-based audio synthesizers have become more advanced, their interfaces have become more complex and therefore harder to use. Many synthesizer interfaces have one hundred or more controls (e.g. Apple's ES2 synthesizer has 125 controls), many of which are difficult to understand without significant synthesis experience. The search space of even simple synthesizers (about 15 controls) is complex enough that it is difficult for novices to actualize their ideas. Even for experienced users, the tedium of these interfaces takes them out of their creative flow state, hampering productivity. Some manufacturers address this problem by having many, many presets (e.g. Native Instruments Kore Browser). However, searching through a vast number of presets can be a task as daunting as using a complex synthesizer.

In this technical demonstration, we demonstrate SynthAssist [1], a system that interactively helps the user find their desired sound (synthesizer patch) in the space of sounds generated by an audio synthesizer. The goal is to make synthesizers more accessible, letting users focus on high-level goals (e.g. "sound brassy") instead of low-level controls (e.g. "What does the LFO1Asym knob do?"). SynthAssist accomplishes this by allowing the user to communicate their desired sound with a vocal imitation of the sound, a method of communication shown to be effective in a recent study [2]. The inspiration for SynthAssist was how one might interact with a professional music producer or sound designer: imitate the desired outcome vocally (e.g. "make a sound that goes like <sound effect made vocally>"), have the producer design a few options based on the example, and give them evaluative feedback (e.g. "that doesn't sound as good as the previous example").

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage, and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the owner/author(s). Copyright is held by the author/owner(s).

MM'14, November 3-7, 2014, Orlando, Florida, USA.

ACM 978-1-4503-3063-3/14/11.

http://dx.doi.org/10.1145/2647868.2654880.

2. SYNTHASSIST SYSTEM

With SynthAssist, a user can quickly and easily search through thousands of synthesizer sounds to find the desired option. The interaction for a session in SynthAssist is as follows:

1. To communicate their desired audio concept, the user provides one or more input examples by either recording a new sound (e.g. vocalizing an example) or choosing a prerecorded sound.

2. The user rates how close each example is to the target sound.

3. Based on the ratings, SynthAssist estimates what the target sound must be like.

4. SynthAssist generates suggestions from the synthesizer that are similar to the estimated target sound.

5. The user rates how close the suggestions are to the desired target sound.

6. If a suggestion is good enough, return the synthesizer parameters. Otherwise, repeat Steps 3 through 6.
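The six steps above can be sketched as a simple loop. This is a minimal illustration, not the authors' implementation: the rating-weighted mean used to estimate the target, the Euclidean distance over fixed-length feature vectors, and every name here (`search_session`, `estimate_target`, `suggest`, and the `rate` callback that stands in for the user) are assumptions made for the example. The real system compares time series and refines feature weights rather than averaging static vectors.

```python
import math


def distance(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def estimate_target(rated):
    """Step 3 (assumed form): rating-weighted mean of the rated feature vectors."""
    weights = [rating for _, rating in rated]
    if sum(weights) == 0:
        # No positive ratings yet: fall back to a plain average.
        weights = [1.0] * len(rated)
    total = sum(weights)
    dims = len(rated[0][0])
    return [
        sum(features[i] * w for (features, _), w in zip(rated, weights)) / total
        for i in range(dims)
    ]


def suggest(database, target, seen, k=3):
    """Step 4: the k not-yet-rated patches whose features lie closest to the target."""
    candidates = [patch for patch in database if patch["id"] not in seen]
    return sorted(candidates, key=lambda p: distance(p["features"], target))[:k]


def search_session(database, examples, rate, good_enough=0.95, max_rounds=20):
    """Steps 1-6. `rate(features)` is a hypothetical stand-in for a user rating in [0, 1]."""
    rated = [(features, rate(features)) for features in examples]  # Steps 1-2
    seen = set()
    for _ in range(max_rounds):
        target = estimate_target(rated)                 # Step 3
        suggestions = suggest(database, target, seen)   # Step 4
        if not suggestions:
            break                                       # database exhausted
        for patch in suggestions:
            score = rate(patch["features"])             # Step 5
            if score >= good_enough:                    # Step 6
                return patch["params"]
            rated.append((patch["features"], score))
            seen.add(patch["id"])
    return None
```

Each round folds the new ratings back into the target estimate, so later suggestions are drawn from a region of the database that reflects all feedback given so far.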

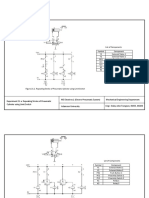

Figure 1 shows a screenshot of SynthAssist. Each suggestion is represented by one of the colored circles. When

a user clicks or moves a suggestion, the synthesizer sound

TOP RESULTS

ZOOM SLIDER

PREVIOUS

RATING

RESULT

TO RATE

Figure 1: Screenshot of SynthAssist

vocalization(s)

extract

features

refine

query

example(s)

rate

results

NO

performed by the user

performed by the machine

update

feature

weights

search

database

find

sound?

results

database

YES

return

synth

paramaters

Figure 2: System flow of SynthAssist.

changes. Users rate how similar suggestions are to their target by moving them closer to or farther from the center,

hub, circle. If a suggestion is irrelevant, the user can inform SynthAssist and remove it from the screen by double

clicking on it. Dragging a suggestion to the center of the

circle indicates indicates this is the desired sound and terminates the interaction. The overall system architecture is

shown in Figure 2.

In the SynthAssist system, we want to support vocal imitation queries. Our approach is described in [1]. While the

human voice has a limited timbral range, it can be very expressive with respect to how it changes in time. For example,

your voice may not be able to exactly imitate your favorite

Moog bass sound, but you may be able to imitate how the

sound changes over the course of a note (e.g. pitch, loudness, brightness, noisiness, etc.). When comparing sounds

in SynthAssist, we focus on these changes over the course of

a note.
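To make "changes over the course of a note" concrete, the sketch below computes two frame-wise contours from a synthetic decaying tone: root-mean-square amplitude as a stand-in for loudness, and zero-crossing rate as a crude stand-in for noisiness. The choice of features, the frame sizes, the test signal, and all function names are assumptions for illustration only; the approach actually used by SynthAssist is the one described in [1].

```python
import math


def frames(signal, size=256, hop=128):
    """Split a list of samples into overlapping fixed-size frames."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]


def rms(frame):
    """Root-mean-square amplitude of one frame (loudness proxy)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of adjacent sample pairs that change sign (noisiness proxy)."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (len(frame) - 1)


def feature_contours(signal, size=256, hop=128):
    """One time series per feature, with one value per frame."""
    fs = frames(signal, size, hop)
    return {
        "loudness": [rms(f) for f in fs],
        "noisiness": [zero_crossing_rate(f) for f in fs],
    }


# Hypothetical test note: one second of a 220 Hz sine with an exponential decay.
sample_rate = 8000
note = [
    math.exp(-3.0 * n / sample_rate) * math.sin(2 * math.pi * 220 * n / sample_rate)
    for n in range(sample_rate)
]
contours = feature_contours(note)
print(len(contours["loudness"]), "frames")
```

For this test note the loudness contour falls steadily while the noisiness contour stays roughly flat. A voice may not match the absolute values of such contours, but it can often reproduce their shape.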

In this demonstration, 10,000 parameter combinations and

their resulting sounds have been sampled from an audio synthesizer. Absolute and relative time series of several audio features are extracted from both the query and sampled

sounds. The time series of the query are compared to those

of the sampled sounds using dynamic time warping. The

distance measure and query are refined using a relevance

feedback mechanism for time series.
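A minimal sketch of the comparison described above, under stated assumptions: the "relative" version of a time series is taken here to be its standardized (zero-mean, unit-variance) form, the per-feature weights are fixed rather than learned from relevance feedback, and all names (`dtw_distance`, `standardize`, `weighted_distance`, `rank`) are hypothetical. It uses the textbook dynamic time warping recurrence with an absolute-difference local cost, which is not necessarily the variant used in SynthAssist.

```python
import math


def standardize(series):
    """Assumed 'relative' form: zero mean, unit variance (constant series map to zeros)."""
    mean = sum(series) / len(series)
    std = math.sqrt(sum((x - mean) ** 2 for x in series) / len(series))
    return [0.0 if std == 0 else (x - mean) / std for x in series]


def dtw_distance(a, b):
    """Dynamic time warping cost between two 1-D series, O(len(a) * len(b))."""
    inf = float("inf")
    prev = [0.0] + [inf] * len(b)  # row 0 of the cumulative cost table
    for x in a:
        curr = [inf] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            # Local cost plus the cheapest of the three allowed predecessor cells.
            curr[j] = abs(x - y) + min(prev[j], curr[j - 1], prev[j - 1])
        prev = curr
    return prev[len(b)]


def weighted_distance(query, candidate, weights):
    """Weighted sum of DTW costs over absolute and relative versions of each feature."""
    total = 0.0
    for name, w in weights.items():
        q, c = query[name], candidate[name]
        total += w["absolute"] * dtw_distance(q, c)
        total += w["relative"] * dtw_distance(standardize(q), standardize(c))
    return total


def rank(query, database, weights):
    """Database items ordered from most to least similar to the query."""
    return sorted(
        database, key=lambda item: weighted_distance(query, item["features"], weights)
    )


# Hypothetical data: a quiet rising vocal contour and two louder synthesizer sounds.
query = {"loudness": [0.1, 0.2, 0.3]}
database = [
    {"name": "falling", "features": {"loudness": [3.0, 2.0, 1.0]}},
    {"name": "rising", "features": {"loudness": [1.0, 1.0, 2.0, 2.0, 3.0, 3.0]}},
]
weights = {"loudness": {"absolute": 0.0, "relative": 1.0}}
ranked = rank(query, database, weights)
print([item["name"] for item in ranked])
```

With only the relative weight set, the quiet vocal contour matches the louder, slower synthesizer sound that has the same shape. This is one way to read the motivation for comparing relative as well as absolute time series.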

3. THE DEMONSTRATION

In the demonstration of SynthAssist, a computer will be

set up running the SynthAssist software. Users will be able

to record their vocal imitations and use them as input to the

system to interactively program a synthesizer sound. Users

can interact with the system either by itself or in a musical

context, programming a sound for an existing keyboard line

in a song. In both modes, the user can use a piano keyboard

to play the synthesizer.

A video of an example search session and an explanation of the system can be found at http://music.eecs.northwestern.edu/videos/SynthAssist.mov.

4. ACKNOWLEDGMENTS

This work was supported by NSF Grant Nos. IIS-1116384 and DGE-0824162.

5. REFERENCES

[1] M. Cartwright and B. Pardo. SynthAssist: Querying an audio synthesizer by vocal imitation. In Conference on New Interfaces for Musical Expression, London, 2014.

[2] G. Lemaitre and D. Rocchesso. On the effectiveness of vocal imitations and verbal descriptions of sounds. The Journal of the Acoustical Society of America, 135(2):862-873, 2014.
