MAINFRAME

Technical Brief:

Cascaded FICON in a Brocade Environment

Cascaded FICON introduces the open systems SAN concept of Inter-Switch

Links (ISLs). IBM now supports the flow of traffic from the processor through

two FICON directors connected via an ISL and on to peripheral devices

such as disk and tape. This paper discusses the benefits and some technical

aspects of cascaded FICON in a Brocade environment.

CONTENTS

Introduction
The Evolution from ESCON to FICON Cascading
    What is Cascaded FICON?
    High Availability (HA), Disaster Recovery (DR), and Business Continuity (BC)
Benefits of FICON Cascading
    Optimizing Use of Storage Resources
    Cascaded FICON Performance
Buffer-to-Buffer Credit Management
    About BB Credits
        Packet Flow and Credits
        Buffer-to-Buffer Flow Control
    Implications of Asset Deployment
        Configuring BB Credit Allocations on FICON Directors
        BB Credit Exhaustion and Frame Pacing Delay
        What is the difference between frame pacing and frame latency?
        What can you do to eliminate or circumvent frame pacing delay?
        How can you make improvements?
        Dynamic Allocation of BB Credits
Technical Discussion of FICON Cascading
    Fabric Addressing Support
    High Integrity Enterprise Fabrics
    Managing Cascaded FICON Environments and ISLs: Link Balancing and Aggregation
Best Practices for FICON Cascaded Link Management
    Terms and Definitions
    Frame-level Trunking Implementation
    Brocade M-Series Director Open Trunking
        Use of Data Rate Statistics by Open Trunking
        Rerouting Decision Making
        Checks on the Cost Function
        Periodic Rerouting
        Algorithms to Gather Data
        Summary of Open Trunking Parameters
        Fabric Tuning Using Open Trunking
        Open Trunking Enhancements
        Open Trunking Summary
    Controlling FICON Cascaded Links in More Demanding Environments
        Preferred Path on M-Series FICON Switches
        Prohibit Paths
    Traffic Isolation Zones on B-Series FICON Switches
        TI Zones Best Practices
Summary
Appendix: Fibre Channel Class 4 Class of Service (CoS)

INTRODUCTION

Prior to the introduction of support for cascaded FICON director connectivity on IBM zSeries mainframes in

January 2003, only a single level of FICON directors was supported for connectivity between a processor

and peripheral devices. Cascaded FICON introduced the open systems Storage Area Network (SAN) concept

of Inter-Switch Links (ISLs). IBM now supports the flow of traffic from the processor through two FICON

directors connected via an ISL to peripheral devices such as disk and tape.

This paper starts with a brief discussion of cascaded FICON, its applications, and the benefits of a cascaded

FICON architecture. The next section provides a technical discussion of buffer-to-buffer credits (BB credits),

open exchanges, and performance. The final section describes management of a cascaded FICON

architecture, including ISL trunking and the Traffic Isolation capabilities unique to Brocade.

FICON, like most technological advancements, evolved from the limitations of its predecessor, the IBM

Enterprise Systems Connection (ESCON) protocol, a successful storage network protocol for mainframe

systems considered the parent of the modern SAN. IBM Fiber Connection (FICON) was initially developed to

address the limitations of the ESCON protocol. In particular, FICON addresses ESCON addressing,

bandwidth, and distance limitations. FICON has evolved rapidly since the initial FICON bridge mode (FCV)

implementations came to the data center, from FCV to single director FICON Native (FC) implementations,

to configurations that intermix FICON (FC) and open systems Fibre Channel (FCP), and now to

cascaded fabrics of FICON directors. FICON support of cascaded directors has been available

on the IBM zSeries since 2003 and is supported on the System z processors as well.

Cascaded FICON allows a FICON Native (FC) channel or a FICON CTC channel to connect a zSeries/System z

server to another similar server or peripheral device such as disk, tape library, or printer via two Brocade

FICON directors or switches. A FICON channel in FICON Native mode connects one or more processor

images to an FC link, which connects to the first FICON director, then dynamically through the first director

to one or more ports, and from there to a second cascaded FICON director. From the second director there

are Fibre Channel links to FICON Control Unit (CU) ports on attached devices. These FICON directors can be

geographically separate, providing greater flexibility and fiber cost savings. All FICON directors connected

together in a cascaded FICON architecture must be from the same vendor (such as Brocade). Initial support

by IBM is limited to a single hop between cascaded FICON directors; however, the directors can be

configured in a hub-star architecture with up to 24 directors in the fabric.

NOTE: In this paper the term "switch" is used to reference a Brocade hardware platform (switch, director, or

backbone) unless otherwise indicated.

Cascaded FICON allows Brocade customers tremendous flexibility and the potential for fabric cost savings

in their FICON architectures. It is extremely important for business continuity/disaster recovery

implementations. Customers looking at these types of implementations can realize significant potential

savings in their fiber infrastructure costs and channel adapters by reducing the number of channels for

connecting two geographically separate sites with high availability FICON connectivity at increased

distances.

Brocade (via the acquisitions of CNT/Inrange and McDATA) has a long and distinguished history of working

closely with IBM in the mainframe environment. This history includes manufacture of IBM's 9032 line of

ESCON directors, the CD/9000 ESCON directors, the FICON bridge cards, and the first FICON Native (FC)

directors with the McDATA ED-5000 and Inrange FC/9000. Brocade's second generation of FICON

directors, the legacy McDATA Intrepid 6064, Brocade M6140, Brocade 24000, and Brocade Mi10K, are the

foundation of many FICON storage networks. Brocade continues to lead the way in cascaded FICON with the

Brocade 48000 Director and the Brocade DCX Backbone.

THE EVOLUTION FROM ESCON TO FICON CASCADING

In 1990 the ESCON channel architecture was introduced as the way to address the limitations of parallel

(bus and tag) architectures. ESCON provided noticeable, measurable improvements in distance capabilities,

switching topologies and, most importantly, response time and service time performance. By the end of the

1990s, ESCON's strengths over parallel channels had become its weaknesses. FICON evolved in the late

1990s to address the technical limitations of ESCON in bandwidth, distances, and channel/device

addressing with the following features:

Increased number of concurrent connections

Increased distance

Increased channel device addressing support

Increased link bandwidth

Increased distance to data droop effect

Greater exploitation of priority I/O queuing

Initially, the FICON (FC-SB-2) architecture did not allow the connection of multiple FICON directors. (Neither

did ESCON, except when static connections of chained ESCON directors were used to extend ESCON

distances.) Both ESCON and FICON defined a single byte for the link address, the link address being the

port address on the director. This changed in January 2003. Now it is possible to have two-director

configurations and separate geographic sites. This is done by adding the domain field of the Fibre Channel

destination ID to the link address to specify the exit director and the link address on that director.
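The addressing change can be illustrated with a minimal sketch. It assumes, for illustration only, that the 1-byte link address is the port address carried in the area byte of the 24-bit Fibre Channel destination ID, and that the cascaded 2-byte link address is the domain byte followed by that port byte. The function names and the example values (domain 0x61, port 0x04) are hypothetical.

```python
from typing import Optional, Tuple


def split_destination_id(d_id: int) -> Tuple[int, int, int]:
    """Split a 24-bit Fibre Channel destination ID into (domain, area, port) bytes."""
    if not 0 <= d_id <= 0xFFFFFF:
        raise ValueError("destination ID must fit in 24 bits")
    return (d_id >> 16) & 0xFF, (d_id >> 8) & 0xFF, d_id & 0xFF


def link_address(port: int, domain: Optional[int] = None) -> str:
    """Format a link address as hex digits.

    Without a domain this is the original 1-byte form (port only).
    With a domain it is the 2-byte cascaded form: domain byte, then port byte.
    """
    for value in (port, domain):
        if value is not None and not 0 <= value <= 0xFF:
            raise ValueError("domain and port must each fit in one byte")
    if domain is None:
        return format(port, "02X")
    return format(domain, "02X") + format(port, "02X")


# Hypothetical destination: domain 0x61, port address 0x04 (assumed to sit in the area byte).
domain, area, _ = split_destination_id(0x610400)
single_byte = link_address(area)          # non-cascaded: port only
two_byte = link_address(area, domain)     # cascaded: exit director + port
print(single_byte, two_byte)
```

The 1-byte form can only name a port on the director the channel is attached to; the extra domain byte is what lets the channel name a port on a different director.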

What is Cascaded FICON?

Cascaded FICON refers to an implementation of FICON in which one or more FICON channel paths are

defined over two FICON switches connected to each other using an Inter-Switch Link (ISL). The processor

interface is connected to one switch, while the storage interface is connected to the other. This

configuration is supported for both disk and tape, with multiple processors, disk subsystems, and tape

subsystems sharing the ISLs between the directors. Multiple ISLs between the directors are also supported.

Cascading between a director and a switch, for example from a Brocade 48000 director to a Brocade 5000,

is also supported.

There are hardware and software requirements specific to cascaded FICON:

The FICON directors themselves must be from the same vendor (that is, both should be from Brocade)

The mainframes must be zSeries machines or System z processors: z800, z890, z900, z990, z9 BC, or z9

EC. Cascaded FICON requires 64-bit architecture to support the 2-byte addressing scheme. Cascaded

FICON is not supported on 9672 G5/G6 mainframes.

z/OS version 1.4 or greater, and/or z/OS version 1.3 with required PTFs/MCLs to support 2-byte link

addressing (DRV3g and MCL (J11206) or later)

The high integrity fabric feature for the FICON switch must be installed on all switches involved in the

cascaded architecture. For Brocade M-Series directors or switches, this is known as SANtegrity Binding,

and it requires M-EOS firmware version 4.0 or later. For the Brocade 5000 Switch and 24000 and

48000 Directors, this requires Secure Fabric OS (SFOS).

High Availability (HA), Disaster Recovery (DR), and Business Continuity (BC)

The greater bandwidth and distance capabilities of FICON over ESCON are starting to make it an

essential and cost-effective component in HA/DR/BC solutions, the primary reason mainframe installations

are adopting cascaded FICON architectures. Since September 11, 2001, more and more companies are bringing

DR/BC in-house ("insourcing") and building the mainframe component of their new DR/BC

data centers using FICON rather than ESCON. Until IBM released cascaded FICON, the FICON architecture

was limited to a single domain due to the single-byte addressing limitations inherited from ESCON. FICON

cascading allows the end user to have a greater maximum distance between sites (up to an unrepeated

distance of 36 km at 2 Gbit/sec bandwidth). For details, see Tables 1 and 2.

Following September 11, 2001, industry participants met with government agencies, including the United

States Securities and Exchange Commission (SEC), the Federal Reserve, the New York State Banking

Department, and the Office of the Comptroller of the Currency. These meetings were held specifically to

formulate and analyze the lessons learned from the events of September 11, 2001. These agencies

released an interagency white paper, and the SEC released its own paper, on best practices to strengthen

the IT resilience of the US financial system. These events underlined how critical it is for an enterprise to be

prepared for disaster, even more so for large enterprise mainframe customers. Disaster recovery is no longer

limited to problems such as fires or a small flood. Companies now need to consider and plan for the

possibility of the destruction of their entire data center and the people that work in it. A great many articles,

books and other publications have discussed the IT lessons learned from September 11, 2001:

To manage business continuity, it is critical to maintain geographical separation of facilities and

resources. Any resource that cannot be replaced from external sources within the Recovery Time

Objective (RTO) should be available within the enterprise. It is also preferable to have these resources

(buildings, hardware, software, data, and staff) in multiple locations. Cascaded FICON gives the

geographical separation required; ESCON does not.

The most successful DR/BC implementations are often based on as much automation as possible,

since key staff and skills may no longer be present after a disaster strikes.

Financial, government, military, and other enterprises now have critical RTOs measured in seconds and

minutes and not days and hours. For these end users it has become increasingly necessary to

implement an insourced DR solution. This means that the facilities and equipment needed for the

HA/DR/BC solution are owned by the enterprise itself. In addition, cascaded FICON allows for

considerable cost savings compared with ESCON.

A regional disaster could cause multiple organizations to declare disasters and initiate recovery actions

simultaneously. This is highly likely to severely stress the capacity of business recovery services

(outsourced) in the vicinity of the regional disaster. Business continuity service companies typically

work on a "first come, first served" basis. So when a regional disaster occurs, these outsourcing

facilities can fill up quickly and be overwhelmed. Also, a company's contract with the BC/DR outsourcer

may stipulate that the customer has the use of the facility only for a limited time (for example, 45 days).

This may spur companies with BC/DR outsourcing contracts to a) consider changing outsourcing firms,

b) renegotiate an existing contract, or c) study the requirements and feasibility for insourcing their

BC/DR and creating their own DR site. Depending on an organization's RTO and Recovery Point

Objective (RPO), option c) may be the best alternative.

The recovery site must have adequate hardware and the hardware at the recovery site must be

compatible with the hardware at the primary site. Organizations must plan for their recovery site to

have a) sufficient server processing capacity, b) sufficient storage capacity, and c) sufficient networking

and storage networking capacity to enable all business critical applications to be run from the recovery

site. The installed server capacity at the recovery site may be used to meet day-to-day needs (assuming

BC/DR is insourced). Fallback capacity may be provided via several means, including workload

prioritization (test, development, production, and data warehouse).

Fallback capacity may also be provided via a capacity upgrade scheme based on changing a license

agreement versus installing additional capacity. IBM System z and zSeries servers have the Capacity

Backup Option (CBU). Unfortunately in the open systems world, this feature is not common. Many

organizations will take a calculated risk with open systems and not purchase two duplicate servers (one

for production at the primary data center and a second for the DR data center). Therefore, open

systems DR planning must account for this possibility and pose the question, "What can I lose?"

A robust BC/DR solution must be based on as much automation as possible. It is too risky to assume

that key personnel with critical skills will be available to restore IT services. Regional disasters impact

personal lives as well. Personal crises and the need to take care of families, friends, and loved ones will

take a priority for IT workers. Also, key personnel may not be able to travel and will be unable to get to

the recovery site. Mainframe installations are increasingly looking to automate switching resources

from one site to another. One way to do this in a mainframe environment is with a cascaded FICON

Geographically Dispersed Parallel Sysplex (GDPS).

If an organization is to maintain business continuity, it is critical to maintain sufficient geographical

separation of facilities, resources, and personnel. If a resource cannot be replaced from external

sources within the RTO, it needs to be available internally and in multiple locations. This statement

holds true for hardware resources, employees, data, and even buildings. An organization also needs to

have a secondary disaster recovery plan. Companies that successfully recover to their designated

secondary site after losing their entire primary data center quickly come to the realization that all of

their data is now in one location. If disaster events continue or if there is not sufficient geographic

separation and a recovery site is also incapacitated, there is no further recourse (no secondary plan)

for most organizations.

What about the companies that initially recover to a third-party site with contractual agreements calling

for them to vacate the facility within a specified time period? What happens when you do not have a

primary site to go back to? The prospect of further regional disasters necessitates asking the question,

"What is our secondary disaster recovery plan?"

This has led many companies to seriously consider implementing a three-site BC/DR strategy. What

this strategy entails is two sites within the same geographic vicinity to facilitate high availability and a

third, remote site for disaster recovery. The major objection to a three-site strategy is

telecommunication costs, but as with any major decision, a proper risk vs. cost analysis should be

performed.

Asynchronous remote mirroring becomes a more attractive option to organizations insourcing BC/DR

and/or increasing the distance between sites. While synchronous remote mirroring is popular, many

organizations are starting to give serious consideration to greater distances between sites and to a

strategy of asynchronous remote mirroring to allow further separation between their primary and

secondary sites.

HA/DR/BC implementations including GDPS, remote Direct Access Storage Device (DASD) mirroring,

electronic tape/virtual tape vaulting, and remote DR sites are all facilitated by cascaded FICON.

BENEFITS OF FICON CASCADING

Cascaded FICON delivers to the mainframe space many of the same benefits as open systems SANs. It

allows for simpler infrastructure management, decreased infrastructure cost of ownership, and higher data

availability. This higher data availability is important in delivering a more robust enterprise DR strategy.

Further benefits are realized when the ISLs connect switches in two or more locations and/or are extended

over long distances. Figure 1 shows a non-cascaded two-site environment.

Figure 1. Two sites in a non-cascaded FICON environment

In Figure 1, all hosts have access to all of the disk and tape subsystems at both locations. The host

channels at one location are extended to the Brocade 48000 or Brocade DCX (FICON) platforms at the

other location to allow for cross-site storage access. If each line represents two FICON channels, then this

configuration would need a total of 16 extended links; and these links would be utilized only to the extent

that the host has activity to the remote devices.

The most obvious benefit of cascaded versus non-cascaded is the reduction in the number of links across

the Wide Area Network (WAN). Figure 2 shows a cascaded, two-site FICON environment.

In this configuration, if each line represents two channels, only 4 extended links are required. Since FICON

is a packet-switched protocol (versus the circuit-switched ESCON protocol), multiple devices can share the

ISLs, and multiple I/Os can be processed across the ISLs at the same time. This allows for a reduction in the

number of links between sites and for more efficient utilization of the links in place. In addition, ISLs

can be added as the environment grows and traffic patterns dictate.
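The link counts quoted above can be reproduced with a short calculation. The figures themselves are not reproduced here, so the number of drawn lines between sites (8 in the non-cascaded case, 2 in the cascaded case) is an assumption inferred from the stated totals of 16 and 4 extended links at two channels per line.

```python
CHANNELS_PER_LINE = 2  # stated in the text: each drawn line represents two channels


def extended_links(lines_between_sites: int,
                   channels_per_line: int = CHANNELS_PER_LINE) -> int:
    """Number of extended (cross-site) links for a given number of drawn lines."""
    return lines_between_sites * channels_per_line


# Assumed line counts, inferred from the totals given in the text.
non_cascaded = extended_links(8)   # host channels extended directly to the other site
cascaded = extended_links(2)       # ISLs shared by all hosts and devices
reduction = 1 - cascaded / non_cascaded

print(f"non-cascaded: {non_cascaded} links, cascaded: {cascaded} links, "
      f"reduction: {reduction:.0%}")
```

Under these assumptions the cascaded design needs a quarter of the cross-site links, which is where the fiber and port savings discussed in this section come from.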

This is the key way in which a cascaded FICON implementation can reduce the cost of the enterprise

architecture. In Figure 2, the cabling schema for both intersite and intrasite has been simplified. Fewer

intrasite cables translate into decreased cabling hardware and management costs. It also reduces the

number of FICON adapters, director ports, and host channel card ports required, thus decreasing the

connectivity cost for mainframes and storage devices as well. In Figure 2, the sharing of links between the

two sites reduces the number of physical channels between sites, thereby lowering the cost by

consolidating channels and the number of director ports. The faster the channel speeds between sites, the

better the intersite cost savings from this consolidation. So, with 4 Gbit/sec and 10 Gbit/sec FICON

available, this option becomes even more attractive.

Another benefit to this approach, especially over long distances, is that the Brocade FICON director typically

has many more buffer credits per port than do the processor and the disk or tape subsystem cards. More

buffer credits allow for a link to be extended to greater distances without significantly impacting response

times to the host.
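Why more buffer credits permit longer links can be seen from a rough estimate of how many full-size frames must be in flight to keep a link busy for one round trip. The sketch below is a simplification, not a vendor sizing rule. It assumes about 5 microseconds of propagation delay per kilometer of fiber, full-size 2148-byte frames, 8b/10b encoding (10 bits on the wire per byte), and line rates of 1.0625, 2.125, and 4.25 Gbaud for 1, 2, and 4 Gbit/sec links. It ignores inter-frame idles and port processing time, and real workloads with smaller frames need more credits than this estimate.

```python
import math

PROPAGATION_US_PER_KM = 5.0   # assumed one-way delay in fiber, microseconds per km
FULL_FRAME_BYTES = 2148       # assumed full-size Fibre Channel frame, delimiters included
WIRE_BITS_PER_BYTE = 10       # 8b/10b encoding


def frame_time_us(line_rate_gbaud: float, frame_bytes: int = FULL_FRAME_BYTES) -> float:
    """Time to serialize one frame onto the link, in microseconds."""
    return frame_bytes * WIRE_BITS_PER_BYTE / (line_rate_gbaud * 1000.0)


def credits_to_fill_link(distance_km: float, line_rate_gbaud: float,
                         frame_bytes: int = FULL_FRAME_BYTES) -> int:
    """Estimate the BB credits needed to keep transmitting for one round trip.

    A credit is consumed when a frame is sent and returned only after the
    receiver's acknowledgment travels back, so the sender needs enough credits
    to cover the round-trip time.
    """
    round_trip_us = 2 * distance_km * PROPAGATION_US_PER_KM
    return math.ceil(round_trip_us / frame_time_us(line_rate_gbaud, frame_bytes))


for label, gbaud in (("1 Gbit/sec", 1.0625), ("2 Gbit/sec", 2.125), ("4 Gbit/sec", 4.25)):
    print(label, credits_to_fill_link(36, gbaud))
```

The estimate scales linearly with both distance and link speed, so a port with few credits becomes the limiting factor as either one grows.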

Figure 2. Two sites in a cascaded FICON environment

Optimizing Use of Storage Resources

ESCON limits the number of terabytes (TB) that a customer can realistically have in a single DASD array,

because of device addressing limitations. Because a frame cannot be filled to capacity, additional frames need to

be purchased, wasting capacity. For example, running Mod 3 volumes in an ESCON environment typically

leads to running out of available addresses between 3.3 and 3.5 TB. This is significant because it requires

more disk array footprints at each site, and:

The technology of DASD arrays places a limit on the number of CU ports inside, and there is a limit of

8 links per LCU. These 8 links can only perform so fast.

This also limits the I/O density (I/Os per GB per second) into and out of the frame, placing a cap on the

amount of disk space the frame can support and still supply reasonable I/O response times.

Cascaded FICON lets customers fully utilize their old disk arrays, so that they do not have to throttle

back I/O loads and can make the most efficient use of technologies such as Parallel Access Volumes (PAVs).

Additionally, a cascaded FICON environment requires fewer fiber adapters on storage devices and

mainframes.

Cascaded FICON allows for Total Cost of Ownership (TCO) savings in an installation's mainframe

tape/virtual tape environment. FICON platforms such as the Brocade 48000 and DCX are "5 nines"

(99.999% availability) devices. The typical enterprise-class tape drive is only 2 or 3 nines at best due to all of the moving

mechanical parts. A FICON port on a Brocade DCX (or any FICON enterprise-class platform) typically costs

twice as much as a FICON port on a Brocade 5000 FICON switch. (The FICON switch is not a "5 nines"

device, while the FICON director is.) However, it may not make sense to connect 3 nines tape drives to 5

nines directors, when the best reliability achieved is that of the lowest common denominator (the tape

drive). Depending on your exact configuration, it can make more financial sense to connect tape drives to

Brocade 5000 FICON switches cascaded to a Brocade DCX (FICON), thus saving the more expensive

director ports for host and/or DASD connectivity.
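The "lowest common denominator" argument can be made concrete with a small availability calculation. This is a sketch under simplifying assumptions: N nines means an availability of 1 - 10^-N, a year has 365 days, and the director and tape drive fail independently and are both required for the path to work (a series arrangement). The function names are illustrative.

```python
import math

MINUTES_PER_YEAR = 365 * 24 * 60


def availability_from_nines(nines: int) -> float:
    """Availability implied by N nines, e.g. 5 -> 0.99999."""
    return 1 - 10 ** -nines


def downtime_minutes_per_year(availability: float) -> float:
    """Expected unavailable minutes per year at a given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


def series_availability(availabilities) -> float:
    """Availability of a path that needs every component (assumes independent failures)."""
    return math.prod(availabilities)


director = availability_from_nines(5)
tape_drive = availability_from_nines(3)
path = series_availability([director, tape_drive])

print(f"director downtime:   {downtime_minutes_per_year(director):.1f} min/year")
print(f"tape drive downtime: {downtime_minutes_per_year(tape_drive):.1f} min/year")
print(f"path availability:   {path:.6f}")
```

Under these assumptions the path is never more available than the tape drive, so the extra availability of the director port contributes almost nothing to the tape path.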

Cascaded FICON Performance

Seven main factors affect the performance of a cascaded FICON director configuration (IBM white paper on

Cascaded FICON director performance considerations, Cronin and Bassener):

1. The number of ISLs between the two cascaded FICON directors and the routing of traffic across ISLs

2. The number of FICON/FICON Express channels whose traffic is being routed across the ISLs

3. The ISL link speed

4. Contention for director ports associated with the ISLs

5. The nature of the I/O workload (I/O rates, block sizes, use of data chaining, and read/write ratio)

6. The distances of the paths between the components of the configuration (the FICON channel links from processor(s) to the first director, the ISLs between directors, and the links from the second director to the storage control unit ports)

7. The number of switch port buffer-to-buffer credits

The last factor, the number of buffer-to-buffer credits and the management of those credits,

is typically the one examined most carefully, and the one that is most often misunderstood.

BUFFER-TO-BUFFER CREDIT MANAGEMENT

The introduction of the FICON I/O protocol to the mainframe I/O subsystem provided the ability to process

data rapidly and efficiently. And as a result of two main changes that FICON made to the mainframe

channel I/O infrastructure, the requirements for a new Resource Measurement Facility (RMF) record came

into being. The first change was that unlike ESCON, FICON uses buffer credits to account for packet

delivery. The second change was the introduction of FICON cascading, which was not possible with ESCON.

Buffer-to-buffer credits (BB credits) and their management in a FICON environment are often

misunderstood. Buffer-to-buffer credit management does have an impact on performance over

distances in cascaded FICON environments. At present, there is no good way to track BB credits being used.

At initial configuration, BB credits are allocated but not managed. As a result, the typical FICON shop

assigns a large number of BB credits for long-distance traffic. Just as assigning too many aliases to a base

address in managing dynamic PAVs can lead to configuration issues due to addressing constraints,

assigning too many BB credits can lead to director configuration issues, which can require outages to

resolve. Mechanisms for detecting BB credit starvation in a FICON environment are extremely limited.

This section reviews the concept of BB credits, including current schema for allocating them. It then

discusses the only way to detect BB credit starvation on FICON directors, including the concept of frame

pacing delay. Finally a mechanism to count BB credits used is outlined, and then another theoretical

drawing board concept is described: dynamic allocation of BB credits on an individual I/O basis similar to

the new HyperPAVs concept for DASD.


About BB Credits

This section is an overview of BB credits; for a more detailed discussion, consult Robert Kembel's

Fibre Channel Consultant series.

Packet Flow and Credits

The fundamental objective of flow control is to prevent a transmitter from overrunning a receiver by allowing

the receiver to pace the transmitter, managing each I/O as a unique instance. At extended distances,

pacing signal delays can result in degraded performance. Buffer-to-buffer credit flow control is used to

transmit frames from the transmitter to the receiver and pacing signals back from the receiver to the

transmitter. The basic information carrier in the FC protocol is the frame. Other than ordered sets, which are

used for communication of low-level link conditions, all information is contained in the frames. A good

analogy to a frame is an envelope: When you send a letter via the United States Postal Service (USPS), the

letter is encapsulated in an envelope. When sending data via a FICON network, the data is encapsulated

in a frame (although service times in a FICON network are better than those of the USPS).

To prevent a target device (either host or storage) from being sent more frames than it has buffer

memory to store (overrun), the FC architecture provides a flow control mechanism based on a system

of credits. Each credit represents the ability of the receiver to accept a frame. Simply stated, a

transmitter cannot send more frames to a receiver than the receiver can store in its buffer memory.

Once the transmitter exhausts the frame count of the receiver, it must wait for the receiver to credit

back frames to the transmitter. A good analogy is a pre-paid calling card: there are a certain number

of minutes, and you can talk until there is no more time on the card.

Flow control exists at both the physical and logical level. The physical level is called buffer-to-buffer

flow control and manages the flow of frames between transmitters and receivers. The logical level

is called end-to-end flow control and it manages the flow of a logical operation between two end

nodes. It is important to note that a single end-to-end operation may have made multiple transmitter-to-receiver pair hops (end-to-end frame transmissions) to reach its destination. However, the presence

of intervening directors and/or ISLs is transparent to end-to-end flow control. Buffer-to-buffer flow

control is the more crucial subject in a cascaded FICON environment.

Buffer-to-Buffer Flow Control

Buffer-to-buffer flow control is flow control between two optically adjacent ports in the I/O path (that is,

transmission control over individual network links). Each FC port has dedicated sets of hardware buffers

for send and receive operations. These buffers are more commonly known as BB credits.

The number of available BB credits defines the maximum amount of data that can be transmitted prior

to an acknowledgment from the receiver. BB credits are physical memory resources incorporated in the

Application Specific Integrated Circuit (ASIC) that manages the port. It is important to note that these

memory resources are limited. Moreover, the cost of the ASICs increases as a function of the size of the

memory resource. One important aspect of Fibre Channel is that adjacent nodes do not have to have the

same number of credits. Rather, adjacent ports communicate with each other during Fabric LOGIn (FLOGI)

and Port LOGIn (PLOGI) to determine the number of credits available for the send and receive ports on each

node.


A BB credit can transport a 2,112-byte frame of data. The FICON FC-SB-2 and FC-SB-3 ULPs use 64 bytes

of this frame for addressing and control, leaving 2 K available for z/OS data. In the event that a 2 Gbit/sec

transmitter is sending full 2,112-byte frames, 1 credit is required for every 1 km of fiber between the sender

and receiver. Unfortunately, z/OS disk workloads rarely produce full frames. For a 4 K transfer, the average

frame size is 819 bytes. Therefore, 5 credits would be required per km of distance as a result of the

decreased average frame size. It is important to note that increasing the fiber speed increases the number

of credits required to support a given distance. In other words, every time the link speed doubles, the number

of required BB credits doubles to avoid transmission delays for a specified distance.
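The relationship between distance, link speed, and frame size can be sketched as a small calculation. This is a rough estimate under stated assumptions, not a vendor sizing formula: it assumes about 5 microseconds of propagation delay per km of fiber, roughly 100 MB/sec of throughput per 1 Gbit/sec of link speed, and 36 bytes of framing overhead per frame. The function name and constants are illustrative and do not come from any Brocade or IBM tool.

```python
import math

# Assumptions for illustration only (not taken from this brief):
PROPAGATION_US_PER_KM = 5.0       # one-way delay of light in fiber, per km
FRAME_OVERHEAD_BYTES = 36         # delimiters, header, and CRC around the payload
BYTES_PER_US_PER_GBIT = 100.0     # about 100 MB/sec per 1 Gbit/sec of link speed


def bb_credits_required(distance_km, link_gbit, payload_bytes=2112):
    """Estimate the credits needed to keep a link of the given length busy.

    A credit is held from the moment a frame is sent until its R_RDY
    returns, so the link stays full only if enough frames can be in
    flight to cover one round trip.
    """
    round_trip_us = 2 * distance_km * PROPAGATION_US_PER_KM
    bytes_per_us = link_gbit * BYTES_PER_US_PER_GBIT
    frame_time_us = (payload_bytes + FRAME_OVERHEAD_BYTES) / bytes_per_us
    return math.ceil(round_trip_us / frame_time_us)
```

With these assumptions, a 2 Gbit/sec link carrying full frames needs about one credit per km, and doubling the link speed or halving the average frame size roughly doubles the estimate. Published sizing tables may use slightly different constants, so treat the output as a starting point rather than a configuration value.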

BB credits are used by Class 2 and Class 3 service and rely on the receiver sending back receiver-readies

(R_RDY) to the transmitter. As was previously discussed, node pairs communicate their number of credits

available during FLOGI/PLOGI. This value is used by the transmitter to track the consumption of receive

buffers and pace transmissions if necessary. FICON directors track the available BB credits in the following

manner:

Before any data frames are sent, the transmitter sets a counter equal to the BB credit value

communicated by its receiver during FLOGI.

For each data frame sent by the transmitter, the counter is decremented by one.

Upon receipt of a data frame, the receiver sends a status frame (R_RDY) to the transmitter, indicating

that the data frame was received and that the buffer is ready to receive another data frame.

For each R_RDY received by the transmitter, the counter is incremented by one.

As long as the transmitter count is a non-zero value, the transmitter is free to continue sending data. This

mechanism allows for the transmitter to have a maximum number of data frames in transit equal to the

value of BB credit, with an inspection of the transmitter counter indicating the number of receive buffers.
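The counting scheme described above can be sketched as a small class. This is a minimal model of the count-down variant only; the class and method names are hypothetical and do not correspond to any director firmware interface.

```python
class BBCreditTransmitter:
    """Toy model of a transmitter tracking buffer-to-buffer credits."""

    def __init__(self, advertised_credits):
        # The value advertised by the receiver at login must be non-zero.
        if advertised_credits <= 0:
            raise ValueError("advertised BB credit value must be non-zero")
        self.advertised = advertised_credits
        self.available = advertised_credits

    def can_send(self):
        # The transmitter may send as long as the counter is non-zero.
        return self.available > 0

    def send_frame(self):
        # Each data frame sent consumes one receive buffer at the far end.
        if not self.can_send():
            raise RuntimeError("no BB credits left: wait for an R_RDY")
        self.available -= 1

    def receive_r_rdy(self):
        # Each R_RDY signals that one receive buffer has been freed.
        if self.available >= self.advertised:
            raise RuntimeError("R_RDY received with no frame outstanding")
        self.available += 1
```

A transmitter created with two credits can send two frames back to back, must then stop, and can resume as soon as one R_RDY arrives. The time spent stopped is what shows up as delay on long links with too few credits.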

The flow of frame transmission between adjacent ports is regulated by the receiving port's presentation

of R_RDYs; in other words, BB credit flow control has no end-to-end component. Each R_RDY received returns

one credit to the sender. The initial value of the BB credit count must be non-zero. The rate of frame

transmission is regulated by the receiving port based on the availability of buffers to hold received frames.

It should be noted that the FC-FS specification allows the transmitter to be initialized at zero, or at the value

of the BB credit count and either count up or down on frame transmit. Different switch vendors can handle

this using either method, and the counting would be handled accordingly.

Implications of Asset Deployment

There are four implications of asset deployment to consider when planning BB-credit allocations:

For write-intensive applications across an ISL (tape and disk replication) the BB credit value advertised

by the E_Port on the target gates performance. In other words, the number of BB credits on the target

cascaded FICON director is the major factor.

For read-intensive applications across an ISL (regular transactions) the BB credit value advertised

by the E_Port on the host gates performance. In other words, the number of BB credits at the local

location is the major factor.

Two ports do not negotiate BB credits down to the lowest common value. A receiver simply advertises

BB credits to a linked transmitter.

The depletion of BB credits at any point between an initiator and a target will gate overall throughput.


Configuring BB Credit Allocations on FICON Directors

There have been two FICON switch architectures for BB credit allocation. The first, which was prevalent on

early FICON directors such as the Inrange/CNT FC9000 and McDATA 6064, had a range of BB credits that

could be assigned to each individual port. Each port on a port card had a range of BB credits (for example 4

through 120) that could be assigned to it during the switch configuration process. Simple rules of thumb on

a table/matrix were used to determine the number of BB credits to use. Unfortunately, these tables did not

consider workload characteristics or z/OS particulars. Since changing the BB credit allocation was an offline

operation, most installations would calculate what they needed, set the allocation, and (assuming it was

correct) not look at it again. Best practice was typically to maximize BB credits used on ports being used for

distance traffic, since each port could theoretically be set to the maximum available BB credits without

penalizing other ports on the port card. Some installations would even maximize the BB credit allocation

on short-distance ports, so they would not have to worry about it. However, this could cause other kinds of

problems in recovery scenarios.

The second FICON switch architecture, on the market today in products from Brocade and Cisco, has a pool

of available BB credits for each port card in the director. Each port on the port card has a maximum setting.

However, since there is a large pool of BB credits that must be shared among all ports on a port card, there

must be better allocation planning. It is no longer enough to simply use distance rules of thumb. Workload

characteristics of traffic need to be better understood. Also, as 4 Gbit/sec FICON Express4 becomes

prevalent and 8 Gbit/sec FICON Express8 follows, intra-data-center distances become something to

consider when deciding how to allocate the pool of available BB credits. It no longer is enough to say that

a port is internal to the data center or campus and assign it the minimum number of credits. This pooled

architecture and the careful capacity planning it necessitates make it more critical than ever to have a way to

track actual BB credit usage in a cascaded FICON environment.

What follows is a discussion of what happens when you exhaust available BB credits and the concept of

frame pacing delay.

BB Credit Exhaustion and Frame Pacing Delay

Similar to the ESCON directors that preceded them, FICON switches have a feature called Control Unit Port

(CUP). Among the many functions of the CUP feature is an ability to provide host control functions such as

blocking and unblocking ports, safe switching, and in-band host communication functions such as port

monitoring and error reporting. Enabling CUP on FICON switches, while also enabling RMF 74 subtype 7

(RMF 74-7) records for the z/OS system, yields a new RMF report called the FICON Director Activity

Report. Data is collected for each RMF interval if FCD is specified in the ERBRMFnn parmlib member.

RMF formats one of these reports per interval for each FICON switch that has CUP enabled and the

parmlib option specified. This RMF report contains meaningful data on FICON I/O performance, in particular

frame pacing delay. Note that frame pacing delay is the only available indication of a BB credit

starvation issue on a given port.

Frame pacing delay has been around since FC SAN was first implemented in the late 1990s by our open

systems friends. But until the increased use of cascaded FICON, its relevance in the mainframe space has

been completely overlooked. If frame pacing delay is occurring, then the buffer credits have reached zero

on a port for an interval of 2.5 microseconds and no more data can be transmitted until a credit has been

added back to the buffer credit pool for that port. Frame pacing delay causes unpredictable performance

delays. These delays generally result in longer FICON connect time and/or longer PEND times that show up

on the volumes attached to these links. Note that only when using switched FICON and only when CUP is

enabled on the FICON switching device(s) can RMF provide the report that provides frame pacing delay

information. Only the RMF 74-7 FICON Director Activity Report provides FICON frame pacing delay

information. You cannot get this information from any other source today.


Figure 3. Sample FICON Director Activity report (RMF 74-7)

The fourth column from the left in Figure 3 is the column where frame pacing delay is reported. Any number

other than 0 (zero) in this column is an indication of frame pacing delay occurring. If there is a non-zero

number it reflects the number of times that I/O was delayed for 2.5 microseconds or longer due to buffer

credits falling to zero. Figure 3 shows an optimal situation, zeros down the entire column indicating that

enough buffer credits are always available to transfer FICON frames.

Figure 4. Frame pacing delay indications in RMF 74-7 record

But in Figure 4, you can see that on the FICON Director Activity Report for switch ID 6E, an M6140 director,

there were at least three instances when port 4, a cascaded link, suffered frame pacing delays during this

RMF reporting interval. This would have resulted in unpredictable performance across this cascaded link

during this period of time. The next few sections provide answers to questions that arise in this discussion.
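Checking the frame pacing delay column can be automated once the report has been parsed. The sketch below assumes the report rows have already been extracted into dictionaries; the key names `port` and `frame_pacing_delay` are hypothetical and are not RMF field names, and the sample values in the usage note are invented for illustration.

```python
def ports_with_frame_pacing_delay(report_rows):
    """Return (port, count) pairs for ports reporting frame pacing delay.

    Any non-zero count means the port ran out of buffer credits at least
    once during the interval. Results are sorted worst first.
    """
    flagged = [
        (row["port"], row["frame_pacing_delay"])
        for row in report_rows
        if row["frame_pacing_delay"] > 0
    ]
    return sorted(flagged, key=lambda item: item[1], reverse=True)
```

For example, rows for ports 4, 5, and 9 with counts of 3, 0, and 7 would yield ports 9 and 4, in that order, as candidates for a larger BB credit allocation.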


What is the difference between frame pacing and frame latency?

Frame pacing is an FC4 application data exchange measurement and/or throttling mechanism. It uses

buffer credits to provide a flow control mechanism for FICON to assure delivery of data across the FICON

fabric. When all buffer credits for a port are exhausted, a frame pacing delay can occur. Frame latency, on

the other hand, is a frame delivery measurement, similar to measuring frame friction. Each element that

handles the frame contributes to this latency measurement (CHPID port, switch/director, storage port

adapter, link distance, and so on). Frame latency is the average amount of time it takes to deliver a frame

from the source port to the destination port.

What can you do to eliminate or circumvent frame pacing delay?

If a long-distance link is running out of buffer credits, then it might be possible to enable additional buffer

credits for that link in an attempt to provide an adequate pool of buffer credits for the frames being

delivered over that link. But the number of buffer credits required to handle specific workloads across

distance is surprising, as shown in Table 1.

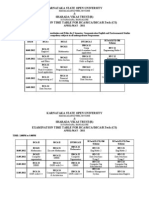

Table 1. Frame size, link speed, and distance determine buffer credit requirements

Buffer credits required to 50 km, by frame payload and link speed:

| Frame payload % | Frame payload bytes | 1 Gbit/sec | 2 Gbit/sec | 4 Gbit/sec | 8 Gbit/sec | 10 Gbit/sec |
|---|---|---|---|---|---|---|
| 100% | 2112 | 25 | 49 | 98 | 196 | 290 |
| 75% | 1584 | 33 | 65 | 130 | 259 | 383 |
| 50% | 1056 | 48 | 96 | 191 | 381 | 563 |
| 25% | 528 | 91 | 181 | 362 | 723 | 1069 |
| 10% | 211 | 197 | 393 | 785 | 1569 | 2318 |
| 5% | 106 | 321 | 641 | 1281 | 2561 | 3784 |
| 1% | 21 | 656 | 1312 | 2624 | 5248 | 7755 |

Keep in mind that tape workloads generally have larger payloads in a FICON frame, while DASD workloads

might have much smaller frame payloads. Some say the average payload size for DASD is often about 800

to 1500 bytes. By using the FICON Director Activity reports for your enterprise, you can gain an

understanding of your own average read and write frame sizes on a port-by-port basis.

To help you, columns five and six of the FICON Director Activity report in Figure 3 show the average read

frame size and the average write frame size for the frame traffic on each port. These columns are useful

when you are trying to figure out how many buffer credits will be needed for a long-distance link or possibly

to solve a local frame pacing delay issue.
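One way to turn an observed average frame size into a planning number is to look it up in Table 1. The sketch below is illustrative only: it picks the nearest tabulated payload size at or below the observed average (the conservative choice, since smaller frames need more credits) and scales linearly with distance, which is an assumption rather than something the table states. The function name is hypothetical.

```python
import math

# Table 1: payload bytes -> {link speed in Gbit/sec: credits required to 50 km}
TABLE_1 = {
    2112: {1: 25, 2: 49, 4: 98, 8: 196, 10: 290},
    1584: {1: 33, 2: 65, 4: 130, 8: 259, 10: 383},
    1056: {1: 48, 2: 96, 4: 191, 8: 381, 10: 563},
    528: {1: 91, 2: 181, 4: 362, 8: 723, 10: 1069},
    211: {1: 197, 2: 393, 4: 785, 8: 1569, 10: 2318},
    106: {1: 321, 2: 641, 4: 1281, 8: 2561, 10: 3784},
    21: {1: 656, 2: 1312, 4: 2624, 8: 5248, 10: 7755},
}


def credits_from_table(avg_frame_bytes, link_gbit, distance_km=50):
    """Estimate credits from Table 1 for an observed average frame size."""
    # Use the largest tabulated payload that does not exceed the average;
    # fall back to the smallest row for very small frames.
    candidates = [payload for payload in TABLE_1 if payload <= avg_frame_bytes]
    payload = max(candidates) if candidates else min(TABLE_1)
    credits_50km = TABLE_1[payload][link_gbit]
    # Assumed: the requirement scales linearly with distance.
    return math.ceil(credits_50km * distance_km / 50)
```

A port whose average frame size falls between two rows is sized from the smaller-payload row, so the estimate errs toward more credits rather than fewer.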


How can you make improvements?

Even with the new FICON directors and the ability to assign BB credits to each port from a pool of available

credits on each port card, it is still not easy. The best hope for end users is to make a correct allocation

and then monitor the RMF 74-7 report for frame pacing delay to indicate that they are out of BB credits.

They can then make the necessary adjustments to the BB credit allocations to crucial ports, such as the ISL

ports on either end of a cascaded link. However, any adjustments made will merely be a guesstimate,

since the exact number being used is not indicated. A helpful analogy is a car without a fuel gauge in which

you have to rely on EPA MPG estimates to calculate how many miles you could drive on a full tank of gas.

This estimate would not reflect driving characteristics, and in the end, the only accurate indication that the

gas tank is empty is a coughing engine that stops running.

Individual ports track BB credit availability, as was discussed earlier, and the mechanism by which this

occurs was described. So it is a matter of creating a reporting mechanism. This is similar to a situation with

monitoring open exchanges, discussed in a paper by Dr. H. Pat Artis, who made a sound case for why open

exchange management is crucial in a FICON environment. He proved the correlation between

response/service time skyrocketing and open exchange saturation, demonstrated how channel busy

and bus busy metrics are not correlated to response/service time, and recommended a range of open

exchanges to use for managing a FICON environment. Since RMF does not report open exchange counts,

he derived a formula using z/OS response time metrics to calculate open exchanges. Commercial software

such as MXG and RMF Magic use this to help users better manage their FICON environments.

Similar to open exchanges, the data needed to calculate BB credit usage is currently available in RMF, and

all that is needed are some mathematical calculations. As an area of future exploration, the RMF 74-7

record (FICON Director Activity report) could be updated with these two additional fields and the appropriate

interfaces added between the FICON switches and CUP code. Switch management software could also be

enhanced to include these two valuable metrics.

Dynamic Allocation of BB Credits

The technique used in BB credit allocation is very similar to the early technique used in managing PAV

aliases. The simple approach used was called static assignment. With static assignment, the storage

subsystem utility was used to statically assign alias addresses to base addresses. While a generous static

assignment policy could help to ensure sufficient performance for a base address, it resulted in ineffective

utilization of the alias addresses (since nobody knew what the optimal number of aliases was for a given

base), which put pressure on the 64 K device address limit. Users would tend to assign an equal number of

addresses to each base, often taking a very conservative approach, resulting in PAV alias overallocation.

An effort to address this was undertaken by IBM with WorkLoad Manager (WLM) support for dynamic alias

assignment. WLM was allowed to dynamically reassign aliases from a pool to base addresses to meet

workload goals. Since this can be somewhat lethargic, users of dynamic PAVs still tend to overconfigure

aliases and are pushing the 64 K device address limitation. Users face what you could call a PAV

performance paradox: they need the performance of PAVs, tend to overconfigure alias addresses, and are

close to exhausting the z/OS device addressing limit.

Perhaps a similar dynamic allocation of BB credits, in particular for new FICON switch architectures having

pools of assignable credits on each port card, would be a very beneficial enhancement for end users.

Perhaps an interface between the FICON directors and WLM could be developed to allow WLM to

dynamically assign BB credits. At the same time, since Quality of Service (QoS) is an emerging topic

for FICON, an interface could be developed between the FICON switches and WLM for functionality with

dynamic channel path management and priority I/O queuing to enable true end-to-end QoS.

In October 2006, IBM announced HyperPAVs for the DS8000 storage subsystem family to address the PAV

performance paradox. HyperPAVs increase the agility of the alias assignment algorithm. The primary

difference from traditional PAV alias management is that aliases are dynamically assigned to

individual I/Os by the z/OS I/O Supervisor (IOS) rather than being statically or dynamically assigned to


a base address by WLM. The RMF 78-3 (I/O queuing) record has also been expanded. A similar

feature/functionality and interface between FICON switches and the z/OS IOS would be the ultimate in

BB credit allocation: true dynamic allocation of BB credits on an individual I/O basis.

This section has reviewed flow control, the basics of BB credit theory, frame pacing delay, and current BB credit

allocation methods, and has presented some proposals for a) counting BB credit usage and b) enhancing how

BB credits are allocated and managed.

TECHNICAL DISCUSSION OF FICON CASCADING

As stated earlier, cascaded FICON is limited to zSeries and System z processors only with the hardware and

software requirements outlined earlier.

In Figure 2, note that a cascaded FICON switch configuration involves at least three FC links:

Between the FICON channel card on the mainframe (known as an N_Port) and the FICON director's FC

adapter card (which is considered an F_Port)

Between the two FICON directors via E_Ports (the link between E_Ports on the switches is an inter-switch link)

Between the F_Port on the second FICON director and a FICON adapter card in the control unit port (N_Port) of the storage device

The physical paths are the actual FC links connected by the FICON switches providing the physical

transmission path between a channel and a control unit. Note that the links between the cascaded FICON

switches may be multiple ISLs, both for redundancy and to ensure adequate I/O bandwidth.

Fabric Addressing Support

Single-byte addressing refers to the link address definition in the Input-Output Configuration Program

(IOCP). Two-byte addressing (cascading) allows IOCP to specify link addresses for any number of domains by

including the domain address with the link address. This allows the FICON configuration to create

definitions in IOCP that span more than one switch.

Figure 5 shows that the FC-FS 24-bit FC port address identifier is divided into three fields:

Domain

Area

AL Port

In a cascaded FICON environment, 16 bits of the 24-bit address must be defined for the zSeries server to

access a FICON CU. The FICON switches provide the remaining byte used to make up the full 3-byte FC port

address of the CU being accessed. The AL_Port (arbitrated loop) value is not used in FICON and is set to a

constant value. The zSeries domain and area fields are referred to as the F_Port's port address field.

It is a 2-byte value, and when defining access to a CU attached to this port using the zSeries Hardware

Configuration Definition (HCD) or IOCP, the port address is referred to as the link address. Figure 5 further

illustrates this, and Figure 6 is an example of a cascaded FICON IOCP gen.
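The address layout described above can be sketched in a few lines. The helper names are hypothetical, and the address used in the usage note is an invented example rather than a value taken from the figures.

```python
def split_fc_address(fc_address):
    """Split a 24-bit FC port address into (domain, area, al_port)."""
    if not 0 <= fc_address <= 0xFFFFFF:
        raise ValueError("FC port address must fit in 24 bits")
    domain = (fc_address >> 16) & 0xFF   # high byte: switch domain
    area = (fc_address >> 8) & 0xFF      # middle byte: port area
    al_port = fc_address & 0xFF          # low byte: not used by FICON
    return domain, area, al_port


def two_byte_link_address(fc_address):
    """Return the 2-byte link address (domain plus area) as hex text."""
    domain, area, _ = split_fc_address(fc_address)
    return f"{domain:02X}{area:02X}"
```

For an address of 0x6E0400, the domain is 0x6E, the area is 0x04, and the 2-byte link address that would be coded for the control unit is 6E04. The switch supplies the remaining low byte.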


Figure 5. Fabric addressing support (a)

Figure 6. Fabric addressing support (b)


The connections between the two directors are established through the Exchange of Link Parameters (ELP).

The switches pause for a FLOGI, and assuming that the device is another switch, they initiate an ELP

exchange. This results in the formation of the ISL connection(s).

In a cascaded FICON configuration, three additional steps occur beyond the normal FICON switched point-to-point communication initialization. A much more detailed discussion of the entire FICON initialization

procedure can be found in Chapter 3 of the IBM Redbook, FICON Native Implementation and Reference

Guide, pp 23-43.

The three basic steps are:

1. If a 2-byte link address is found in the CU macro in IOCDS, a Query Security Attribute (QSA) command

is sent by the host to check with the fabric controller on the directors if the directors have the high

integrity fabric features installed.

2. The director responds to the QSA.

3. If it is an affirmative response, indicating that a high integrity fabric is present (fabric binding and

insistent domain ID), the login continues. If not, login stops and the ISLs are treated as invalid (not a

good thing).
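The three steps above amount to a simple decision, sketched below. This is a simplified model for illustration, not the channel's actual logic; the function and parameter names are hypothetical.

```python
def cascaded_login_continues(link_address_bytes, fabric_binding, insistent_domain_id):
    """Model the outcome of the QSA check during cascaded FICON login."""
    if link_address_bytes == 1:
        # Single-byte link address: the additional QSA steps do not apply.
        return True
    if link_address_bytes != 2:
        raise ValueError("link address must be 1 or 2 bytes")
    # Steps 1 and 2: the host sends a QSA and the director responds.
    high_integrity_fabric = fabric_binding and insistent_domain_id
    # Step 3: login continues only on an affirmative response.
    return high_integrity_fabric
```

In this model, a 2-byte link address with either feature missing stops the login, which matches the case in which the ISLs are treated as invalid.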

Figure 7. Sample IOCP coding for FICON cascaded switch configuration


High Integrity Enterprise Fabrics

Data integrity is paramount in a mainframe or any data center environment. End-to-end data integrity must

be maintained throughout a cascaded FICON environment to ensure that any changes made to the data

stream are always detected and that the data is always delivered to the correct end point. Brocade M-Series

FICON directors in a cascaded environment use a software feature known as SANtegrity to achieve this. The

SANtegrity feature key must be installed and operational in the Brocade Enterprise Fabric Connectivity

Manager (EFCM). Brocade 24000 and 48000 FICON directors and the Brocade 5000 FICON switch use

Secure Fabric OS.

What does high integrity fabric architecture and support entail?

Support of Insistent Domain IDs. This means that a FICON switch will not be allowed to automatically

change its address when a duplicate switch address is added to the enterprise fabric. Intentional

manual operator action is required to change a FICON director's address. Insistent Domain IDs prohibit

the use of dynamic Domain IDs, ensuring that predictable Domain IDs are being enforced in the fabric.

For example, suppose a FICON director has this feature enabled, and a new FICON director is

connected to it via an ISL in an effort to build a cascaded FICON fabric. If this new FICON director

attempts to join the fabric with a domain ID that is already in use, the new director is segmented into a

separate fabric. It also makes certain that duplicate Domain IDs are not used in the same fabric.

Fabric Binding. Fabric binding enables companies to allow only FICON switches that are configured to

support high-integrity fabrics to be added to the FICON SAN. For example, a Brocade M-Series FICON

director without an activated SANtegrity feature key cannot connect to an M-Series FICON

fabric/director with an activated SANtegrity feature key. The FICON directors that you wish to connect

to the fabric must be added to the fabric membership list of the directors already in the fabric. This

membership list is composed of each acceptable FICON director's World Wide Name (WWN) and

Domain ID. Using the Domain ID ensures that there will be no address conflicts, that is, duplicate

domain IDs when the fabrics are merged. The two connected FICON directors then exchange their

membership lists. This membership list is a Switch Fabric Internal Link Service (SW_ILS) function, which

ensures a consistent and unified behavior across all potential fabric access points.
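The combined effect of fabric binding and insistent Domain IDs can be sketched as an admission check. The data structure and function below are hypothetical simplifications; real directors exchange membership lists through SW_ILS rather than through a local function call, and the WWNs in the usage note are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FabricMember:
    wwn: str         # World Wide Name of the director
    domain_id: int   # insistent Domain ID configured on the director


def may_join_fabric(membership_list, candidate, domain_ids_in_use):
    """Return (allowed, reason) for a director trying to join the fabric."""
    # Fabric binding: only directors on the membership list may connect.
    if candidate not in membership_list:
        return False, "not in fabric membership list"
    # Insistent Domain ID: a duplicate is never renumbered automatically.
    if candidate.domain_id in domain_ids_in_use:
        return False, "duplicate Domain ID, director is segmented"
    return True, "joined"
```

A director whose WWN and Domain ID pair is on the list and whose Domain ID is unused joins the fabric. A director with a duplicate Domain ID is kept out instead of being assigned a new address.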

Managing Cascaded FICON Environments and ISLs: Link Balancing and Aggregation

Even in over-provisioned storage networks, there may be hot spots of congestion, with some paths

running at their limit while others go relatively unused. In other words, the storage network may be a

performance bottleneck even if it has sufficient capacity to deliver all I/O without constraint. This typically

happens when a network does not have the intelligence to load balance across all available paths. The

unused paths may still be of some value for redundancy, but not for performance. Brocade has several

options for supporting more evenly balanced cascaded FICON networks.

NOTE: The FICON and SAN FC protocol (the FC-SW standard) utilizes path routing services that are based on

the industry-standard Fabric Shortest Path First (FSPF) algorithm of that FC protocol. This is not the CHPID

path; it is the connections between FICON switching devices (which cause a network to be created) that will

utilize FSPF.

FSPF allows a fabric (created when CHPIDs and storage ports are connected through one or more FICON

switching devices) composed of more than one switching device (also called a storage network) to

automatically determine the shortest route from each switch to any other switch. FSPF selects what it

considers to be the most efficient path to follow when moving frames through a FICON fabric. FSPF

identifies all the possible routes (multiple path connections) through the fabric and then manages initial

route selection as well as sub-second path rerouting in the event of a link or node failure.


The Brocade 5000 (FICON), Brocade 24000 and 48000 (FICON) Directors, and the Brocade DCX (FICON)

Backbone support source-port route balancing via FSPF. This is known as Dynamic Load Sharing (DLS) and

is part of the base FOS as long as fabric and E_Port functions are present. FSPF makes calculations based

on the topology of a FICON network and determines the cost between end points. In many cascaded FICON

topologies, there is more than one equal-cost path across ISLs. Which path to use can be controlled on a

per-port basis from the source switch. By default, FSPF attempts to spread connections from different ports

across available paths at the source-port level. FSPF can re-allocate routes whenever in-order delivery can

still be assured (DLS). This may happen when a fabric rebuild occurs, when device cables are moved, or

when ports are brought online after being disabled. DLS does a best effort job of distributing I/O by

balancing source port routes.

However, some ports may still carry more traffic than others, and DLS cannot predict which ISLs will be

hot when it sets up routes since they must be allocated before I/O begins. Also, since traffic patterns tend

to change over time, no matter how routes were distributed initially, it would still be possible for hot spots to

appear later. Changing the route allocation randomly at runtime could cause out-of-order delivery, which is

undesirable in mainframe environments. Balancing the number of routes allocated to a given path is not

the same as balancing I/O, and so DLS does not do a perfect job of balancing traffic. DLS is useful, and

since it is free and works automatically, it is frequently used. However, DLS does not solve or prevent most

performance problems, so there is a need for more evenly balanced methods, such as trunking.
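The difference between balancing routes and balancing I/O can be shown with a small model. Round-robin assignment is used here purely as an assumption for illustration; it is not a description of how DLS actually chooses routes, and the port numbers and traffic figures in the usage note are invented.

```python
from collections import defaultdict


def assign_routes(source_ports, isls):
    """Spread source ports evenly across equal-cost ISLs (round-robin)."""
    return {port: isls[i % len(isls)] for i, port in enumerate(source_ports)}


def load_per_isl(routes, traffic_mb_per_sec):
    """Sum the traffic each ISL carries once I/O actually starts."""
    load = defaultdict(float)
    for port, isl in routes.items():
        load[isl] += traffic_mb_per_sec.get(port, 0.0)
    return dict(load)
```

With four source ports and two ISLs, each ISL receives two routes. If ports 0 and 2 each drive far more traffic than ports 1 and 3, one ISL ends up carrying almost all of the load even though the route counts are equal, which is the hot spot behavior described above.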

On Brocade M-Series FICON switches, FSPF works automatically by maintaining a link state database that

keeps track of the links on all switches in the FICON fabric and also associates a cost with each link in the

fabric. Although the link state database is kept on all FICON switches in the fabric, it is maintained and

synchronized on a fabric-wide basis. Therefore, every switch knows what every other switch knows about

connections of host, storage, and switch ports in the fabric. Then FSPF associates a cost with each ISL

between switching devices in the FICON fabric and ultimately chooses the lowest-cost path from a host

source port, between switches, to a destination storage port. And it does this in both directions, so it would

also choose the lowest-cost path from a storage source port, between switches, to a destination host port.
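
A minimal sketch of lowest-cost path selection over a link state database follows, using Dijkstra's algorithm. The database layout, the domain names, and the cost values are assumptions for this example and do not reflect the internal data structures of any switch.

```python
import heapq

def lowest_cost(links, source, destination):
    """Return the lowest total cost between two domains, or None if unreachable.

    links: list of (domain_a, domain_b, cost); each link is usable both ways.
    """
    adjacency = {}
    for a, b, cost in links:
        adjacency.setdefault(a, []).append((b, cost))
        adjacency.setdefault(b, []).append((a, cost))

    best = {source: 0}
    heap = [(0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == destination:
            return cost
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry
        for neighbor, link_cost in adjacency.get(node, []):
            new_cost = cost + link_cost
            if new_cost < best.get(neighbor, float("inf")):
                best[neighbor] = new_cost
                heapq.heappush(heap, (new_cost, neighbor))
    return None

# Two cascaded switches joined by two ISLs with different (hypothetical) costs.
links = [("domain_1", "domain_2", 500), ("domain_1", "domain_2", 250)]

host_to_storage = lowest_cost(links, "domain_1", "domain_2")
storage_to_host = lowest_cost(links, "domain_2", "domain_1")
print(host_to_storage, storage_to_host)  # 250 250
```

The same computation is run from each end, which mirrors the point above that the lowest-cost path is chosen in both directions.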

The process works as follows. FSPF is invoked at PLOGI. At initial power on of the mainframe complex and

after the fabric build and security processes have been fulfilled, individual ports supported by the fabric

begin their initial PLOGI process. As each port (CHPID and storage port) logs into a cascaded FICON fabric,

FSPF assigns that port (whether it will ever need to use a cascaded link or not) to route I/O over a specific

cascaded link. Once all ports have logged in to the fabric, I/O processing can begin. If any port is taken

offline and then put back online, it will go through PLOGI again and the same or a different cascaded link

might be assigned to it.

There is one problem with FSPF routing: it is static. FSPF decisions are made in the absence of data

workflow, and they may prove to be inappropriate for the real-world patterns of data access between mainframe

and storage ports. Since FSPF cannot know what I/O activity will occur across any specific link, it is only

concerned with providing network connectivity. It has only a very shallow concern about performance:

the number of hops (which for FICON is 1, so that metric is always equal) and the speed of each cascaded link

(which can differ, so that cascaded links are assigned lower or higher costs).
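
To make the speed-based costing concrete, the sketch below assigns each cascaded link a cost that is inversely proportional to its speed. The reference value of 1000 for a 1 Gbit/sec link and the inverse-proportional rule are assumptions for illustration only; the actual cost values are set by the switch firmware and are not given in this brief.

```python
REFERENCE_COST = 1000  # assumed cost of a 1 Gbit/sec link (illustrative value)

def link_cost(speed_gbit):
    """Faster links receive a lower cost under this assumed rule."""
    return REFERENCE_COST / speed_gbit

def path_cost(link_speeds_gbit):
    """Total cost of a path is the sum of its link costs."""
    return sum(link_cost(speed) for speed in link_speeds_gbit)

# A single-hop cascaded path over a 2 Gbit/sec ISL versus a 4 Gbit/sec ISL.
cost_2g = path_cost([2])
cost_4g = path_cost([4])
print(cost_2g, cost_4g)  # 500.0 250.0
```

With one hop on every path, link speed is the only thing that distinguishes one cascaded link from another in this model. Utilization and buffer credit shortages do not enter the calculation at all.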

FSPF static routing can result in some cascaded links being over-congested (due to a shortage of buffer

credits and/or high utilization of bandwidth) and other cascaded links being under-utilized. FSPF does not

take this into account as its only real function is to ensure that connectivity has been established. Although

mainframe end users have long exploited the MVS and z/OS ability to provide automatic CHPID I/O load-balancing mechanisms, there is no automatic load-balancing mechanism built into the FC-SW-2 or FC-SW-3 protocol when cascaded links are used.

So on one hand a FICON cascaded fabric allows you to have tremendous flexibility and ultra high availability

in the I/O architecture. You can typically enjoy decreased storage and infrastructure costs, expanded

infrastructure consolidation options, ease of total infrastructure management, thousands more device

addresses, access to additional storage control units per path, optimized use of all of your storage

resources, and higher data availability. Also, higher data availability in a cascaded FICON zSeries or z9

environment implies better, more robust DR/BC solutions for your enterprise. So from that point of view,

FICON cascading has many positive benefits.

But on the other hand a plain, FSPF-governed, unmanaged FICON cascaded environment injects

unpredictability into enterprise mainframe operations where predictability has always ruled. So you must

take back control of your FICON cascaded environment to restore predictability to mainframe operations

and stable, reliable, and predictable I/O performance to applications.

All vendors provide the following:

Some form of cascaded link trunking

A choice of link speeds for the deployment of cascaded links

A means of influencing FSPF by configuring a preferred path (cascaded link) between the FICON

switches on a port-by-port basis

A means to prevent a frame in a FICON switching device from transferring from a source port to a

blocked destination port, including a cascaded link port

But what do these mechanisms mean to you and how do you decide what to use to control your

environment to obtain the results you want? First you have to know what you want to accomplish. Often you

want to have the system automatically take care of itself and to adjust to changing conditions for

management simplicity and elasticity of the I/O system in general to respond to situational workloads and

unusual events. For other enterprises, it might be rigid control over the environment even if it means more

work in managing the environment and less elasticity in meeting shifts in I/O workload hour to hour, day to

day. So choosing the correct management strategy means that you must have a general understanding of

each of the cascaded link control mechanisms, so that you can wisely plan your environment.

The next section presents best practices in FICON cascaded link management.

BEST PRACTICES FOR FICON CASCADED LINK MANAGEMENT

The best recommendation to start with is to avoid managing FICON cascaded links manually! By doing so

you will circumvent much tedious work that is prone to error and is always static in nature. Instead,

implement FICON cascaded path management, which automatically responds to changing I/O workloads

and provides a simple, labor-free but elegant solution to a complex management problem. This simplified

management scheme can be deployed through a combination of using the free, automatic FSPF process

and enabling a form of ISL trunking on each switching device in the FICON fabric.

This section explores ISL trunking in greater detail. Brocade offers several trunking options for the Brocade

5000, 24000, 48000, and DCX platforms; Brocade M-Series FICON directors offer a software-based

trunking feature known as Open Trunking.

Terms and Definitions

Backpressure. A condition in which a frame is ready to be sent out of a port but there is no transmit BB

credit available for it to be sent as a result of flow control from the receiving device.

Bandwidth. The maximum transfer bit-rate that a link is capable of sustaining; also referenced in this

document as capacity.

Domain. A unique FC identifier assigned to each switch in a fabric; a common part of the FC addresses

assigned to devices attached to a given switch.

Fabric Shortest Path First (FSPF). A standard protocol executed by each switch in a fabric, by which the

shortest paths to every destination domain are computed and output to a table that gives the transmit ISLs

allowed when sending to each domain. Each such transmit ISL is on a shortest path to the domain, and

FSPF allows any one of them to be used.

Flow. FC frame traffic arriving in a switch on a specific receive port that is destined for a device in a

specific destination FC domain elsewhere in the fabric. All frames for the same domain arriving on the

receive port are said to be in the same flow.

Oversubscription. A condition that occurs when an attempt is made to use more resources than are

available, for example when two devices could source data at 1 Gbit/sec and their traffic is routed

through one 1 Gbit/sec ISL, the ISL is oversubscribed.
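
The oversubscription example in the definition above can be expressed as a ratio of offered source bandwidth to ISL capacity. The function name `oversubscription_ratio` is introduced here for illustration.

```python
def oversubscription_ratio(source_rates_gbit, isl_capacity_gbit):
    """Ratio of the bandwidth the sources could offer to what the ISL can carry."""
    return sum(source_rates_gbit) / isl_capacity_gbit

# Two devices that can each source 1 Gbit/sec, routed through one 1 Gbit/sec ISL.
ratio = oversubscription_ratio([1, 1], 1)
oversubscribed = ratio > 1

print(ratio, oversubscribed)  # 2.0 True
```

A ratio above 1 means only that congestion is possible. Whether it occurs depends on how much of their rated bandwidth the devices actually use at the same time.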

Frame-level Trunking Implementation

Trunking allows traffic to be evenly balanced across ISLs while preserving in-order delivery. Brocade offers

hardware (ASIC)-based, frame-level trunking and exchange-level trunking on the Brocade 5000, 24000,

48000, and DCX platforms. The frame-level method balances I/O such that each successive frame may go

down a different physical ISL, and the receiving switch ensures that the frames are forwarded onward in

their original order. Figure 8 shows a frame-level trunk between two FICON switches. For this to work there

must be high intelligence in both the transmitting and receiving switches.

At the software level, switches must be able to auto-detect that forming a trunk group is possible, program

the group into hardware, display and manage the group of links as a single logical entity, calculate the

optimal link costs, and manage low-level parameters such as buffer-to-buffer credits and Virtual Channels

optimally. Management software must represent the trunk group properly. For the trunking feature to have

broad appeal, this must be as user-transparent as possible.

At the hardware level, the switches on both sides of the trunk must be able to handle the division and

reassembly of several multi-gigabit I/O streams at wire speed, without dropping a single frame or delivering

even one frame out of order. To add to the challenge, there are often differences in cable length between

different ISLs. Within a trunk group, this creates a skew between the amounts of time each link takes to

deliver frames. This means that the receiving ASIC will almost always receive frames out of order and must

be able to calculate and compensate for the skew to re-order the stream properly.
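
The reordering requirement can be pictured with a software analogy. The sketch below assumes each frame carries a sequence number and holds back frames that arrive early. This is an illustration of the in-order forwarding requirement only: the ASIC compensates for link skew in hardware, and its actual mechanism is not described in this brief.

```python
import heapq

def reorder(arrivals):
    """Yield payloads in original order from frames that arrived out of order.

    arrivals: iterable of (sequence_number, payload), numbered from 0.
    The sequence numbers are an assumption of this sketch.
    """
    pending = []   # min-heap of frames waiting for their turn
    next_seq = 0   # sequence number that must be forwarded next
    for seq, payload in arrivals:
        heapq.heappush(pending, (seq, payload))
        # Forward every frame that is now in order.
        while pending and pending[0][0] == next_seq:
            yield heapq.heappop(pending)[1]
            next_seq += 1

# Frames striped over two ISLs of different length arrive interleaved.
arrivals = [(1, "f1"), (0, "f0"), (3, "f3"), (2, "f2")]
forwarded = list(reorder(arrivals))
print(forwarded)  # ['f0', 'f1', 'f2', 'f3']
```

The larger the skew between links, the more frames must be held while waiting for the late one, which is one way to see why there is a limit to the skew a trunk can tolerate.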

There are limitations to the amount of skew that an ASIC can tolerate, but these limits are high enough that

they do not generally apply. The real-world applicability of the limitation is that it is not possible to configure

one link in a trunk to go clockwise around a large dark-fiber ring, while another link goes counterclockwise.

As long as the differences in cable length are measured in a few tens of meters or less, there will not be an

issue. If the differences are larger than this, a trunk group cannot form. Instead, the switch creates two

separate ISLs and uses either DLS or DPS to balance them.

Figure 8. Frame-level trunking concept

The main advantage of Brocade frame-level trunking is that it provides optimal performance: a trunk group

using this method truly aggregates the bandwidth of its members. The feature also increases availability by

allowing non-disruptive addition of members to a trunk group, and minimizing the impact of failures.

However, frame-level trunking does have some limitations. On the Brocade 5000, Brocade 24000 (with 16-port, 4 Gbit/sec blades) and 48000 Directors, and the DCX, it is possible to configure multiple groups of up

to eight 4 Gbit/sec links each. The effect is the creation of balanced 32 Gbit/sec pipes (64 Gbit/sec full-duplex). When connecting a Brocade 48000 or other 4 Gbit/sec switch to a 2 Gbit/sec switch, a lowest

common denominator approach is used, meaning that the trunk group is limited to 4x 2 Gbit/sec instead

of 8x 4 Gbit/sec.
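
The trunk capacity figures above follow from simple arithmetic. In the sketch below, the member limits and link speeds are taken from the text; the dictionary layout and function names are assumptions for illustration.

```python
def negotiated_trunk(side_a, side_b):
    """Lowest common denominator: the smaller member limit and the slower speed."""
    members = min(side_a["max_members"], side_b["max_members"])
    speed_gbit = min(side_a["speed_gbit"], side_b["speed_gbit"])
    return members, speed_gbit

def trunk_capacity_gbit(members, speed_gbit):
    """Aggregate one-way bandwidth of a trunk group."""
    return members * speed_gbit

four_gig_switch = {"max_members": 8, "speed_gbit": 4}
two_gig_switch = {"max_members": 4, "speed_gbit": 2}

# 4 Gbit/sec switch to 4 Gbit/sec switch: 8 x 4 Gbit/sec.
same_speed = trunk_capacity_gbit(*negotiated_trunk(four_gig_switch, four_gig_switch))

# 4 Gbit/sec switch to 2 Gbit/sec switch: 4 x 2 Gbit/sec.
mixed_speed = trunk_capacity_gbit(*negotiated_trunk(four_gig_switch, two_gig_switch))

print(same_speed, same_speed * 2)  # 32 64 (one-way, full-duplex)
print(mixed_speed)                 # 8
```

Connecting to the slower switch therefore reduces the trunk from 32 Gbit/sec to 8 Gbit/sec in each direction.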

Frame-level trunking requires that all ports in a given trunk must reside within an ASIC port-group on each

end of the link. While a frame-level trunk group outperforms either DLS or DPS solutions, using links only

within port groups limits configuration options. The solution is to combine frame-level trunking with one of

the other methods, as illustrated in Figure 8, which shows frame-level trunking operating within port groups,

and DLS operating between trunks. On the Brocade 48000 and DCX, trunking port groups are built on