
Module 09


PowerPoint to accompany

Introduction to MATLAB for Engineers, Third Edition

William J. Palm III

Chapter 7

Statistics, Probability, and Interpolation

(Module 9)

Copyright 2010. The McGraw-Hill Companies, Inc. This work is only for nonprofit use by instructors in courses for which this textbook has been adopted. Any other use without publisher's consent is unlawful.

Breaking Strength of Thread

Thread breaking strength (in N) for 20 tests:

92, 94, 93, 96, 93, 94, 95, 96, 91, 93, 95, 95, 95, 92, 93, 94, 91, 94, 92, 93

The six possible outcomes are: 91, 92, 93, 94, 95, 96.

We can use the histogram function to plot the histogram (next figure).

% Thread breaking strength data for 20 tests.
y = [92,94,93,96,93,94,95,96,91,93,...
     95,95,95,92,93,94,91,94,92,93];
% The six possible outcomes are 91,92,93,94,95,96.
x = 91:96;
histogram(y)
ylabel('Absolute Frequency')
xlabel('Thread Strength (N)')
title('Absolute Frequency Histogram for 20 Tests')

This creates the next figure.

Histograms for 20 tests of thread strength. Figure 7.11, page 297

Absolute frequency histogram for 100 thread tests.

Figure 7.12. This was created by the program on page 298.

How do you think this compares to 20 tests?

Use of the bar function for relative frequency histograms

(page 299)

Absolute frequency histograms show the number of samples that fall in each bin.

Relative frequency histograms show the fraction of samples that fall in each bin.

% Relative frequency histogram.
tests = 100;
y = [13,15,22,19,17,14]/tests;
x = 91:96;
bar(x,y),ylabel('Relative Frequency'),...
    xlabel('Thread Strength (N)'),...
    title('Relative Frequency Histogram for 100 Tests')

This creates the next figure.


Relative frequency histogram for 100 thread tests.

Figure 7.13

Histogram functions Table 7.11

The hist function should be replaced by the histogram function


| Command | Description |
| --- | --- |
| bar(x,y) | Creates a bar chart of y versus x, where x is the list of bin centres. |
| histogram(y) | Aggregates the data in the vector y into an automatically chosen number of bins. |
| histogram(y,n) | Aggregates the data in the vector y into n bins evenly spaced between the minimum and maximum values in y. |
| histogram(y,x) | Aggregates the data in the vector y into bins whose edges are specified by the vector x. The bin widths are the distances between the edges. This behaviour is different to hist and bar, which take bin centres. |
| [z,edges] = histcounts(y) | Returns two vectors z and edges that contain the frequency count and the bin edges. This uses the same algorithm as histogram, but without producing the plot. |
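As a minimal sketch of the edges-versus-centres distinction in the table, the 20 thread tests can be counted with histcounts and then plotted with bar. The half-integer edges (90.5 to 96.5) and the names counts, centres and rel_freq are assumptions chosen for illustration; they do not come from the slides.

```matlab
% Thread breaking strength data for 20 tests (from the earlier slide).
y = [92,94,93,96,93,94,95,96,91,93,...
     95,95,95,92,93,94,91,94,92,93];

% Assumed bin edges: half-integers, so that each integer outcome
% 91..96 sits in the middle of its own bin.
edges = 90.5:1:96.5;

% Count the samples in each bin without drawing a plot.
counts = histcounts(y, edges);

% bar expects bin centres, so convert the edges to centres.
centres = edges(1:end-1) + diff(edges)/2;

% Relative frequency = fraction of the samples in each bin.
rel_freq = counts / numel(y);

bar(centres, rel_freq)
ylabel('Relative Frequency')
xlabel('Thread Strength (N)')
```

Passing explicit edges keeps the binning independent of the automatic bin selection that histogram(y) would otherwise apply.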

The Data Statistics tool. Figure 7.14 on page 301

Scaled Frequency Histogram (pages 301-303)

Scaled frequency histograms show how likely each value is: the bar heights are scaled so that the total area under the histogram is 1, which makes the histogram an estimate of the probability density function (pdf).

They are important because histograms with different bin widths can be compared directly.

% Absolute frequency data.
y_abs = [1,0,0,0,2,4,5,4,8,11,12,10,9,8,7,5,4,4,3,1,1,0,1];
binwidth = 0.5;
% Compute scaled frequency data.
area = binwidth*sum(y_abs);
y_scaled = y_abs/area;
% Define the bins.
bins = 64:binwidth:75;
% Plot the scaled histogram.
bar(bins,y_scaled),...
    ylabel('Scaled Frequency'),xlabel('Height (in.)')


This creates the next figure.

More? See page 300.

Scaled histogram of height data. Figure 7.21, page 302
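The scaling used for the height data can be checked numerically. The sketch below repeats the scaling step and confirms that the total area is 1; it then sums the area of the bars centred between 67 and 69 in., a range picked only for illustration. The names total_area, in_range and fraction are hypothetical.

```matlab
% Absolute frequency data and bin definition from the height example.
y_abs = [1,0,0,0,2,4,5,4,8,11,12,10,9,8,7,5,4,4,3,1,1,0,1];
binwidth = 0.5;
bins = 64:binwidth:75;

% Scale so that the total area under the bars equals 1.
y_scaled = y_abs / (binwidth*sum(y_abs));

% Area of a bar = height * width; summing all bars should give 1.
total_area = binwidth*sum(y_scaled);

% Illustrative range: bars whose centres lie between 67 and 69 in.
in_range = (bins >= 67) & (bins <= 69);
fraction = binwidth*sum(y_scaled(in_range));

fprintf('Total area = %.4f, fraction in range = %.4f\n', ...
        total_area, fraction);
```

Because areas rather than heights carry the probability, the same check works unchanged if the bin width is altered.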


Scaled histogram of height data for very many

measurements.

Once we have enough samples, we can specify a model for the population's distribution (the line).


The basic shape of the normal distribution curve.

Figure 7.22, page 304


More? See pages 303-304.

The effect on the normal distribution curve of increasing $\sigma$. For this case $\mu = 10$, and the three curves correspond to $\sigma = 1$, $\sigma = 2$, and $\sigma = 3$.
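Curves of this kind can be reproduced with a short script. This is a sketch that assumes the standard normal pdf, p(x) = exp(-(x - mu)^2/(2 sigma^2)) / (sigma sqrt(2 pi)); the plotting range 0 to 20 and all variable names are chosen for illustration.

```matlab
mu = 10;                      % mean used in the figure
sigmas = [1 2 3];             % the three standard deviations
x = linspace(0, 20, 2001);    % assumed plotting range

% One row of p per value of sigma.
p = zeros(numel(sigmas), numel(x));
for k = 1:numel(sigmas)
    s = sigmas(k);
    p(k,:) = exp(-(x - mu).^2 / (2*s^2)) / (s*sqrt(2*pi));
end

plot(x, p.')
xlabel('x'), ylabel('p(x)')
legend('\sigma = 1', '\sigma = 2', '\sigma = 3')

% Each curve encloses (almost) unit area on this range, so a wider
% curve must also be a lower one.
areas = trapz(x, p, 2);
peaks = max(p, [], 2);
```

The peak height is 1/(sigma sqrt(2 pi)), which is why the curve flattens as sigma grows.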


Probability interpretation of the $\mu \pm \sigma$ limits.

Probability interpretation of the $\mu \pm 2\sigma$ limits.


More? See pages 431-432.
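The probabilities associated with these limits can be evaluated with MATLAB's erf function. The sketch below uses the standard result for a normal distribution, P(a <= x <= b) = (1/2)[erf((b - mu)/(sigma sqrt(2))) - erf((a - mu)/(sigma sqrt(2)))]; the values mu = 10 and sigma = 2 and the helper name normal_prob are assumptions for illustration.

```matlab
mu = 10;      % assumed mean
sigma = 2;    % assumed standard deviation

% Probability that a normally distributed x lies between a and b.
normal_prob = @(a, b) 0.5*(erf((b - mu)/(sigma*sqrt(2))) ...
                         - erf((a - mu)/(sigma*sqrt(2))));

p_1sigma = normal_prob(mu - sigma,   mu + sigma);    % about 0.683
p_2sigma = normal_prob(mu - 2*sigma, mu + 2*sigma);  % about 0.954

fprintf('P(mu +/- sigma)   = %.4f\n', p_1sigma);
fprintf('P(mu +/- 2 sigma) = %.4f\n', p_2sigma);
```

The two results do not depend on the particular mu and sigma chosen, because the limits are expressed in multiples of sigma.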

The probability density function (pdf) is defined as:

From discrete data (a histogram), it can be computed:

Note that $N\,\Delta x$ = area under an absolute frequency plot, where $N$ is the total number of samples and $\Delta x$ is the bin width.

The probability that the random variable x is no less than a and no greater than b is written as $P(a \le x \le b)$.

For a normal distribution, it can be computed as follows:

$$P(a \le x \le b) = \frac{1}{2}\left[\operatorname{erf}\left(\frac{b-\mu}{\sigma\sqrt{2}}\right) - \operatorname{erf}\left(\frac{a-\mu}{\sigma\sqrt{2}}\right)\right] \qquad (7.23)$$

See pages 305-306.

Sample Statistics vs Population Statistics

A population is every single instance of something, e.g.:

- all the people in Australia
- every bolt manufactured in a factory

Measuring the properties of the entire population is expensive and often impractical.

A realisation is a single event or instance (one person or bolt).

A sample is a subset of a population (a collection of realisations), which is affordable and practical to measure. If it is a representative sample, the sample statistics will provide useful information about the population statistics: you can infer the population statistics and make decisions based on that information.

To be representative, the sample must be:

- sufficiently large
- randomly selected from the population

Representative:

- 20% of the people in Australia
- 5% of bolts from 10 batches

Unrepresentative:

- 0.01% of the people in Australia
- All of Toowoomba to represent all of Australia
- All bolts from one batch
- 1 bolt per week

Measuring how Good Statistics Are

A confidence interval is a measure of the accuracy of a sample statistic.

It provides a range of values.

You expect the population statistic to lie within that range.

The standard definition is a 95% confidence interval, which means that about 95% of intervals constructed in this way from repeated samples would contain the population statistic.

The width of the range increases as the required confidence level increases.

The width of the range decreases as the sample size increases.

Look at functions such as normfit to see how to obtain these values in MATLAB.
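A confidence interval for a sample mean can be obtained with normfit, which is part of the Statistics and Machine Learning Toolbox (assumed to be installed here). The sketch below draws two artificial samples from a population with mean 5 and standard deviation 3; those values, the sample sizes and all variable names are assumptions for illustration.

```matlab
rng(0);                                % repeatable random numbers

% Two samples from the same (assumed) normal population.
small_sample = 5 + 3*randn(50, 1);
large_sample = 5 + 3*randn(5000, 1);

% normfit(x, alpha) returns estimates and 100*(1 - alpha)% confidence
% intervals; alpha = 0.05 gives 95% intervals.
[mu_small, ~, ci_small] = normfit(small_sample, 0.05);
[mu_large, ~, ci_large] = normfit(large_sample, 0.05);

width_small = ci_small(2) - ci_small(1);
width_large = ci_large(2) - ci_large(1);

fprintf('n = 50:   mean %.3f, 95%% CI width %.3f\n', mu_small, width_small);
fprintf('n = 5000: mean %.3f, 95%% CI width %.3f\n', mu_large, width_large);
```

The interval from the larger sample is much narrower, which illustrates how the width of the range shrinks as the sample size grows.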

Measuring Confidence Intervals

Common sample statistics that are measured are the mean

and standard deviation.

Because each sample is different, the sample statistic varies.

Therefore the sample statistic has a distribution (ergo a pdf).

Irrespective of the population distribution, it is expected that

the sample statistic has a normal distribution.

For instance, the sample pdf might be well-represented by a

binomial distribution (the population pdf has a binomial

distribution).


However, the sample mean is expected to have a normal

distribution.

The width of this distribution (the standard deviation of the sample

mean) decreases as the number of samples increases (The

Central Limit Theorem).

As your number of samples increases, your accuracy improves.

A consequence of this is that 5 samples each containing 10

realisations could be more accurate than 1 sample containing 100

realisations.
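The narrowing of the sample-mean distribution can be seen in a small simulation. This sketch draws from a uniform (clearly non-normal) population and reads "number of samples" as the number of realisations averaged into each sample mean; that reading, the sizes used and the variable names are assumptions, not taken from the slides.

```matlab
rng(0);                       % repeatable random numbers
num_means = 2000;             % how many sample means to collect
sizes = [10 100 1000];        % realisations averaged in each mean

spread = zeros(size(sizes));
for k = 1:numel(sizes)
    n = sizes(k);
    % Each column is one sample of n uniform realisations on [0, 1].
    sample_means = mean(rand(n, num_means), 1);
    spread(k) = std(sample_means);
end

% A uniform [0, 1] population has standard deviation sqrt(1/12), so
% the sample mean should have standard deviation sqrt(1/12)/sqrt(n).
expected = sqrt(1/12) ./ sqrt(sizes);

disp([sizes; spread; expected])
```

Calling histogram(sample_means) inside the loop would also show the bell shape emerging even though the population itself is uniform.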

Sums and Differences of Random Variables (page 307)

It can be proved that the mean of the sum (or difference) of two independent normally distributed random variables equals the sum (or difference) of their means, but the variance is always the sum of the two variances. That is, if x and y are normally distributed with means $\mu_x$ and $\mu_y$, and variances $\sigma_x^2$ and $\sigma_y^2$, and if $u = x + y$ and $v = x - y$, then

$$\mu_u = \mu_x + \mu_y \qquad (7.24)$$

$$\mu_v = \mu_x - \mu_y \qquad (7.25)$$

$$\sigma_u^2 = \sigma_v^2 = \sigma_x^2 + \sigma_y^2 \qquad (7.26)$$

Random number functions Table 7.31

| Command | Description |
| --- | --- |
| rand | Generates a single uniformly distributed random number between 0 and 1. |
| rand(n) | Generates an n × n matrix containing uniformly distributed random numbers between 0 and 1. |
| rand(m,n) | Generates an m × n matrix containing uniformly distributed random numbers between 0 and 1. |
| randn | Generates a single normally distributed random number having a mean of 0 and a standard deviation of 1. |
| randn(n) | Generates an n × n matrix containing normally distributed random numbers having a mean of 0 and a standard deviation of 1. |
| randn(m,n) | Generates an m × n matrix containing normally distributed random numbers having a mean of 0 and a standard deviation of 1. |
| rng(s) | Sets the state (seed) of all random number generators to s. |
| rng(0) | Resets all random number generators to their initial state. |
| rng('shuffle') | Seeds all random number generators to a different state (determined by the current time) each time it is executed. |
| randperm(n) | Generates a random permutation of the integers from 1 to n. |
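The sum and difference rules (7.24) to (7.26) can be checked numerically with randn from the table. In this sketch the means (4 and 1), the standard deviations (2 and 3) and the sample count are assumptions for illustration; multiplying randn by a standard deviation and adding a mean shifts and scales the standard normal numbers.

```matlab
rng(0);                        % repeatable random numbers
N = 1e5;                       % number of random values (assumed)

% Two independent normal variables with assumed parameters.
x = 4 + 2*randn(1, N);         % mean 4, variance 4
y = 1 + 3*randn(1, N);         % mean 1, variance 9

u = x + y;                     % sum
v = x - y;                     % difference

% Predicted: mean(u) near 5, mean(v) near 3, both variances near 13.
fprintf('mean(u) = %.3f, var(u) = %.3f\n', mean(u), var(u));
fprintf('mean(v) = %.3f, var(v) = %.3f\n', mean(v), var(v));
```

Note that the variance of the difference is the sum, not the difference, of the two variances.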


The following session shows how to obtain the same

sequence every time rand is called.


>>rng(0)

>>rand

ans =

0.8147

>>rand

ans =

0.9058

>>rng(0)

>>rand

ans =

0.8147

>>rand

ans =

0.9058

You need not start with the initial state in order to generate the same sequence. To show this, continue the above session as follows.

>>s = rng;
>>rng(s)
>>rand
ans =
0.1270
>>rng(s)
>>rand
ans =
0.1270

The general formula for generating a uniformly distributed random number y in the interval [a, b] is

$$y = (b - a)x + a \qquad (7.31)$$

where x is a random number uniformly distributed in the interval [0, 1]. For example, to generate a vector y containing 1000 uniformly distributed random numbers in the interval [2, 10], you type y = 8*rand(1,1000) + 2.
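The interval formula can be checked quickly in MATLAB. The sketch below uses the same interval [2, 10] and 1000 numbers as the example; the seed and the variable names are assumptions added so that the result is repeatable.

```matlab
rng(0);                      % repeatable random numbers (assumed seed)
a = 2;                       % lower limit of the interval
b = 10;                      % upper limit of the interval

x = rand(1, 1000);           % uniform on [0, 1]
y = (b - a)*x + a;           % uniform on [a, b]

% Every value should lie inside [a, b], and the sample mean should be
% close to the midpoint (a + b)/2 = 6.
fprintf('min = %.3f, max = %.3f, mean = %.3f\n', min(y), max(y), mean(y));
```

With a = 2 and b = 10 the expression reduces to 8*rand(1,1000) + 2, the command given in the text.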


If x is a random number with a mean of 0 and a standard deviation of 1, use the following equation to generate a new random number y having a standard deviation of $\sigma$ and a mean of $\mu$.

$$y = \sigma x + \mu \qquad (7.32)$$

For example, to generate a vector y containing 2000 random numbers normally distributed with a mean of 5 and a standard deviation of 3, you type y = 3*randn(1,2000) + 5.

If y and x are linearly related, as

$$y = bx + c \qquad (7.33)$$

and if x is normally distributed with a mean $\mu_x$ and standard deviation $\sigma_x$, it can be shown that the mean and standard deviation of y are given by

$$\mu_y = b\mu_x + c \qquad (7.34)$$

$$\sigma_y = |b|\sigma_x \qquad (7.35)$$

More? See pages 310-311.
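Both results can be checked with a short script. The sketch below generates the 2000 numbers from the example and then applies a linear relation with b = -2 and c = 1; those two coefficients, the seed and the variable names are assumptions for illustration.

```matlab
rng(0);                          % repeatable random numbers (assumed seed)

% Normally distributed numbers with mean 5 and standard deviation 3.
x = 3*randn(1, 2000) + 5;

% Assumed linear relation y = b*x + c with a negative slope.
b = -2;
c = 1;
y = b*x + c;

% The mean follows b*mean(x) + c; the standard deviation scales by
% |b|, so the sign of the slope does not matter.
fprintf('mean(x) = %.3f, std(x) = %.3f\n', mean(x), std(x));
fprintf('mean(y) = %.3f, std(y) = %.3f\n', mean(y), std(y));
```

A negative b is used on purpose: it shows why the absolute value is needed in the standard deviation formula.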

7-27

7-28

You might also like

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- New Text DocumentDocument159 pagesNew Text DocumentMouliNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Development of New AC To DC Converters For EHVDocument5 pagesDevelopment of New AC To DC Converters For EHVMouliNo ratings yet

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- What Is Power Electronics?Document3 pagesWhat Is Power Electronics?MouliNo ratings yet

- 1 Output Controllability: MAE 280A 1 Maur Icio de OliveiraDocument10 pages1 Output Controllability: MAE 280A 1 Maur Icio de OliveiraMouliNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- State Space ProblemsDocument87 pagesState Space ProblemsMouli0% (1)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Trigger CircuitDocument9 pagesTrigger CircuitMouliNo ratings yet

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Full Spectrum Simulation Platform (Miniature Academic Version) For Power Electronics and Power SystemsDocument35 pagesFull Spectrum Simulation Platform (Miniature Academic Version) For Power Electronics and Power SystemsMouliNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Eigenvectors and DiagonalizationDocument10 pagesEigenvectors and DiagonalizationMouliNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- State-Space Representation - Wikipedia, The Free EncyclopediaDocument9 pagesState-Space Representation - Wikipedia, The Free EncyclopediaMouliNo ratings yet

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- 1 More On The Cayley-Hamilton Theorem: MAE 280A 1 Maur Icio de OliveiraDocument7 pages1 More On The Cayley-Hamilton Theorem: MAE 280A 1 Maur Icio de OliveiraMouliNo ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- 1 Zeros of LTI Systems: MAE 280A 1 Maur Icio de OliveiraDocument9 pages1 Zeros of LTI Systems: MAE 280A 1 Maur Icio de OliveiraMouliNo ratings yet

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- 1 Some Facts On Symmetric Matrices: MAE 280A 1 Maur Icio de OliveiraDocument9 pages1 Some Facts On Symmetric Matrices: MAE 280A 1 Maur Icio de OliveiraMouliNo ratings yet

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- 1 Minimal Realizations: Co o C C o 1 2 Co 3 C o 1 Co C oDocument6 pages1 Minimal Realizations: Co o C C o 1 2 Co 3 C o 1 Co C oMouliNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Jordan Canonical Form Generalized Modes Cayley-Hamilton TheoremDocument14 pagesJordan Canonical Form Generalized Modes Cayley-Hamilton TheoremMouliNo ratings yet

- Analysisof Aperiodicand Chaotic Motionsina LSRMversionpublieDocument5 pagesAnalysisof Aperiodicand Chaotic Motionsina LSRMversionpublieMouliNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- TeslaDocument17 pagesTeslaMouliNo ratings yet

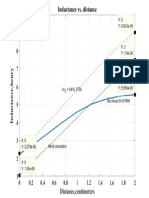

- Inductance VariationDocument1 pageInductance VariationMouliNo ratings yet

- HW2 SolDocument5 pagesHW2 SolMouliNo ratings yet

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- HT0500x FramelessTorqueMotors Datasheet R5Document4 pagesHT0500x FramelessTorqueMotors Datasheet R5MouliNo ratings yet

- HW1 SolDocument10 pagesHW1 SolMouliNo ratings yet

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- S.R.M and Drives: Mouli.TDocument24 pagesS.R.M and Drives: Mouli.TMouliNo ratings yet

- Allied Product Overview 20190304Document24 pagesAllied Product Overview 20190304MouliNo ratings yet

- Extremal TraceDocument16 pagesExtremal TraceMouliNo ratings yet

- IETE Template (Technical Review)Document6 pagesIETE Template (Technical Review)MouliNo ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- S.R.M and Drives: Mouli.TDocument24 pagesS.R.M and Drives: Mouli.TMouliNo ratings yet

- Weekly Report Template 1Document5 pagesWeekly Report Template 1MouliNo ratings yet

- Mosaic 4 Photocopiable PDFDocument4 pagesMosaic 4 Photocopiable PDFguerrasuarezNo ratings yet

- Chapter 11 Inheritance and PolymorphismDocument55 pagesChapter 11 Inheritance and PolymorphismSharif mahamod khalifNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Session 1-5 QMS-M&EDocument131 pagesSession 1-5 QMS-M&EOcir Ayaber100% (4)

- Ehr Software Pricing GuideDocument6 pagesEhr Software Pricing GuideChristopher A. KingNo ratings yet

- Introduction To Computer GraphicsDocument14 pagesIntroduction To Computer GraphicsSupriyo BaidyaNo ratings yet

- Microprocessor Viva QuestionsDocument2 pagesMicroprocessor Viva QuestionsPrabhakar PNo ratings yet

- NTH Term PDFDocument3 pagesNTH Term PDFCharmz JhoyNo ratings yet

- Analysis and Synthesis of Hand Clapping Sounds Based On Adaptive DictionaryDocument7 pagesAnalysis and Synthesis of Hand Clapping Sounds Based On Adaptive DictionarygeniunetNo ratings yet

- Apache Tutorials For BeginnersDocument23 pagesApache Tutorials For BeginnersManjunath BheemappaNo ratings yet

- Overview of Oracle Property Management ModuleDocument3 pagesOverview of Oracle Property Management ModuleMahesh Jain100% (1)

- Introduction of Oracle ADFDocument12 pagesIntroduction of Oracle ADFAmit SharmaNo ratings yet

- Content Strategy Toolkit 2Document10 pagesContent Strategy Toolkit 2msanrxlNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Analyzing Iterative AlgorithmDocument38 pagesAnalyzing Iterative AlgorithmNitish SolankiNo ratings yet

- Algorithms - Key Sizes and Parameters Report. 2013 RecommendationsDocument96 pagesAlgorithms - Key Sizes and Parameters Report. 2013 Recommendationsgogi777No ratings yet

- Qtiplot Manual enDocument390 pagesQtiplot Manual enjovita georgeNo ratings yet

- HSSLiVE XII Practical Exam Commerce Comp Accountancy Scheme Model QuestionsDocument18 pagesHSSLiVE XII Practical Exam Commerce Comp Accountancy Scheme Model QuestionsDrAshish VashisthaNo ratings yet

- Clarifying Dreyfus' Critique of GOFAI's Ontological Assumption - A FormalizationDocument13 pagesClarifying Dreyfus' Critique of GOFAI's Ontological Assumption - A FormalizationAaron ProsserNo ratings yet

- 1.interview Prep JMADocument9 pages1.interview Prep JMASneha SureshNo ratings yet

- Rose Rea Resume 082916Document1 pageRose Rea Resume 082916api-253065986No ratings yet

- How To Use The Touch Command - by The Linux Information Project (LINFO)Document2 pagesHow To Use The Touch Command - by The Linux Information Project (LINFO)Alemseged HabtamuNo ratings yet

- Exercises ChurchTuringDocument2 pagesExercises ChurchTuringAna SantosNo ratings yet

- 3000 Cards DeutscheDocument140 pages3000 Cards DeutscheDhavaljk0% (1)

- Oracle Fusion Cloud Modules - Data Sheet With DetailsDocument17 pagesOracle Fusion Cloud Modules - Data Sheet With DetailsKishore BellamNo ratings yet

- Visual CryptographyDocument31 pagesVisual Cryptographyskirancs_jc1194100% (1)

- Management Information Systems: Managing The Digital Firm: Fifteenth EditionDocument19 pagesManagement Information Systems: Managing The Digital Firm: Fifteenth EditionShaunak RawkeNo ratings yet

- Assignment 1 Released: Course OutlineDocument4 pagesAssignment 1 Released: Course OutlineSachin NainNo ratings yet

- Cango - Customer Service ProcessDocument7 pagesCango - Customer Service Processalka murarkaNo ratings yet

- Badis For MRP - Sap BlogsDocument13 pagesBadis For MRP - Sap BlogsDipak BanerjeeNo ratings yet

- ISO 50001 Correspondence Table For EguideDocument2 pagesISO 50001 Correspondence Table For EguideThiago Hagui Dos SantosNo ratings yet

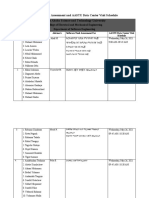

- Software Need Assessment and AASTU Data Center Visit ScheduleDocument9 pagesSoftware Need Assessment and AASTU Data Center Visit ScheduleDagmawiNo ratings yet