Intro RF p1g

Dr. Ariel Luzzatto

ANALOG TO DIGITAL CONVERSION

Quantization noise for equal level spacing

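Let a zero-average signal be quantized into $M$ equally spaced levels $A_j$ with spacing $a$, where $P = 2V$:

$$a = P/M = 2V/M, \qquad V = Ma/2, \qquad A = (M-1)a$$

[Figure: quantizer levels at $\pm a/2, \pm 3a/2, \ldots$, with each level $A_j$ covering the interval from $A_j - a/2$ to $A_j + a/2$.]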

Any signal $v$ in the range $A_j - a/2 \le v \le A_j + a/2$ will be quantized (approximated) as $v \approx A_j$, thus $v = A_j + \epsilon$, where $|\epsilon| \le a/2$. This process produces errors that introduce an additive quantization noise to the original signal. However, the quantization noise is limited to $\pm a/2$, regardless of the amplitude of the signal. If we assume that all the possible values of the error $\epsilon$ are equally likely to occur, namely the quantization error is uniformly distributed, then the probability density of $\epsilon$ will be $p(\epsilon) = 1/a$. Denoting by $E[x]$ the expected value of $x$, one gets

$$\mu = E[\epsilon] = \frac{1}{a}\int_{-a/2}^{a/2}\epsilon\,d\epsilon = 0, \qquad \sigma^2 = E[(\epsilon-\mu)^2] = E[\epsilon^2] = \frac{1}{a}\int_{-a/2}^{a/2}\epsilon^2\,d\epsilon = \left.\frac{\epsilon^3}{3a}\right|_{-a/2}^{a/2} = \frac{a^2}{12}$$

The peak signal is $V_{peak} = V = Ma/2$, and if the signal is considered sinusoidal, the rms voltage is $V_{rms} = V_{peak}/\sqrt{2} = Ma/(2\sqrt{2})$. Denoting the full-scale signal-to-noise ratio of the quantized signal by $SNR = V_{rms}^2/\sigma^2$, we get

$$SNR = \frac{V_{rms}^2}{\sigma^2} = \frac{M^2a^2/8}{a^2/12} = \frac{3}{2}M^2$$

If now the number of quantization levels is taken to be a power of two, namely $M = 2^b$, where $b$ is the number of bits used for the quantization, then

$$SNR|_{dB} = 10\log(3/2) + 10\log(2^{2b}) \approx 1.76 + 6b \ [dB]$$

It follows that each quantization bit improves the SNR by 6 dB.
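
The 1.76 + 6b rule can be checked numerically. The following is a minimal sketch, not part of the original notes: it assumes a mid-rise quantizer (levels at ±a/2, ±3a/2, ...), a full-scale sine, and an arbitrarily chosen irrational frequency ratio so that the samples sweep all phases. The function names are illustrative only.

```python
import math


def quantization_snr_db(bits, samples=100_000):
    """Empirical SNR of a full-scale sine quantized to M = 2**bits levels."""
    levels = 2 ** bits
    v_peak = 1.0                  # full-scale peak V
    a = 2.0 * v_peak / levels     # level spacing a = 2V / M
    signal_power = 0.0
    noise_power = 0.0
    for n in range(samples):
        # Assumed test tone: sqrt(2)/10 cycles per sample (irrational ratio).
        x = v_peak * math.sin(2.0 * math.pi * n * math.sqrt(2.0) / 10.0)
        # Mid-rise quantizer, clamped so that x = +V maps to the top level.
        index = min(max(math.floor(x / a), -levels // 2), levels // 2 - 1)
        xq = (index + 0.5) * a
        signal_power += x * x
        noise_power += (x - xq) ** 2
    return 10.0 * math.log10(signal_power / noise_power)


def theoretical_snr_db(bits):
    """SNR = (3/2) * 2**(2b), expressed in dB."""
    return 10.0 * math.log10(1.5) + 20.0 * bits * math.log10(2.0)


if __name__ == "__main__":
    for b in (6, 8, 10):
        print(b, round(quantization_snr_db(b), 2), round(theoretical_snr_db(b), 2))
```

The exact slope is 20 log10(2), about 6.02 dB per bit, which the notes round to 6 dB.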


When working with radio receivers, one would like to sample and quantize the received signals without letting the quantization noise further deteriorate the SNR, which is already limited by the noise figure (NF) of the receiver. However, from the computation above, it follows that achieving this goal by brute force would require increasing the number of bits b to a sometimes impractically large value. So one should find other ways of increasing the effective number of bits while leaving the physical number of bits reasonably limited. Let us see what figures we come up with if we just increase b.

Example: Assume that a radio has a baseband bandwidth of 9 kHz, and the receiver IMR3 (third-order intermodulation rejection) is specified to be 70 dB. The required signal-to-noise ratio at the minimum operating signal (at sensitivity) must be at least $SNR_O = 10$ dB. Assume that you allow a sensitivity degradation of 3 dB, namely, you allow the ADC to produce a quantization noise equal to the thermal noise at the sampling point. Approximately how many bits should the ADC have?

Solution

In order to achieve a total $SNR_O = 10$ dB, the ADC must provide $SNR_{ADC} = 13$ dB at the smallest working signal. Since the IMR3 requires us to handle two signals of equal amplitude, each 70 dB higher than the smallest, their voltages can add up to twice the amplitude of one, namely a peak 76 dB higher than the smallest signal. The ADC must then provide an overall $SNR_{ADC} = 13 + 76 = 89$ dB at the largest signal, which yields

$$89 \approx 1.76 + 6b \;\Rightarrow\; b \approx (89 - 1.76)/6 \approx 15 \text{ bits}$$
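
The arithmetic of this example can be restated as a short calculation. This is only a sketch of the steps above; the function and parameter names are hypothetical, and it uses the slightly more exact 6.02 dB per bit instead of 6 dB.

```python
import math


def required_adc_bits(snr_out_db, degradation_db, imr3_db):
    """Return (full-scale SNR in dB, fractional number of bits) for the example."""
    # Quantization noise equal to thermal noise costs `degradation_db`,
    # so the ADC alone must deliver snr_out_db + degradation_db.
    snr_adc_min_db = snr_out_db + degradation_db
    # Two equal-amplitude tones can add in voltage: 20*log10(2), about 6 dB.
    two_tone_peak_db = imr3_db + 20.0 * math.log10(2.0)
    snr_full_scale_db = snr_adc_min_db + two_tone_peak_db
    bits = (snr_full_scale_db - 1.76) / 6.02
    return snr_full_scale_db, bits


if __name__ == "__main__":
    snr_db, bits = required_adc_bits(10.0, 3.0, 70.0)
    print(round(snr_db, 1), math.ceil(bits))
```

Rounding the fractional result up gives the number of physical bits the ADC would need without over-sampling.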

The required number of bits may be reduced, while keeping the same $SNR_O$, by over-sampling the signal, namely, sampling it at a rate which is a multiple of the Nyquist rate. This keeps the same effective number of bits while using fewer physical bits. To understand how over-sampling works here, we need to refresh the concept of the sample mean.

Sample mean

Consider n statistically independent and identically distributed random variables $\{X_i\}$, $i = 1,\ldots,n$, each with mean $\mu_x$ and variance $\sigma_x^2$. Let us denote by sample mean the normalized sum Y

$$Y = \frac{1}{n}\sum_{i=1}^{n}X_i$$

Clearly, for a finite value of n, Y estimates in some respect the average of each variable $X_i$. In fact, the mean of Y yields

$$\mu_y = E[Y] = \frac{1}{n}\sum_{i=1}^{n}E[X_i] = E[X_i] = \mu_x .$$

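Using the above result we get

$$\sigma_y^2 = E[(Y-\mu_y)^2] = E\Big[\Big(\frac{1}{n}\sum_{i=1}^{n}X_i - \mu_y\Big)^2\Big] = E\Big[\Big(\frac{1}{n}\sum_{i=1}^{n}X_i\Big)^2 + \mu_y^2 - 2\mu_y\frac{1}{n}\sum_{i=1}^{n}X_i\Big]$$

$$= E\Big[\Big(\frac{1}{n}\sum_{i=1}^{n}X_i\Big)^2\Big] + E[\mu_y^2] - 2E\Big[\mu_y\frac{1}{n}\sum_{i=1}^{n}X_i\Big] = E\Big[\Big(\frac{1}{n}\sum_{i=1}^{n}X_i\Big)^2\Big] + \mu_y^2 - 2\mu_y\mu_x$$

$$= E\Big[\frac{1}{n^2}\sum_{i=1}^{n}\sum_{j=1}^{n}X_iX_j\Big] - \mu_y^2 = \frac{1}{n^2}\sum_{i=1}^{n}E[X_i^2] + \frac{1}{n^2}\sum_{i=1}^{n}\sum_{j\ne i}E[X_iX_j] - \mu_x^2$$

Recalling that $E[X_i^2] = \sigma_x^2 + \mu_x^2$, and that $E[X_iX_j] = E[X_i]E[X_j] = \mu_x^2$ for $i \ne j$ from independence, we get

$$\sigma_y^2 = \frac{1}{n}(\sigma_x^2+\mu_x^2) + \frac{1}{n^2}\sum_{i=1}^{n}\sum_{j\ne i}E[X_iX_j] - \mu_x^2 = \frac{1}{n}(\sigma_x^2+\mu_x^2) + \frac{1}{n^2}\mu_x^2\sum_{i=1}^{n}\sum_{j\ne i}1 - \mu_x^2$$

$$= \frac{1}{n}(\sigma_x^2+\mu_x^2) + \frac{1}{n^2}\mu_x^2\,n(n-1) - \mu_x^2 = \frac{1}{n}\sigma_x^2 + \frac{1}{n}\mu_x^2 + \mu_x^2 - \frac{1}{n}\mu_x^2 - \mu_x^2 = \frac{\sigma_x^2}{n}$$

Summarizing, we got $\sigma_y^2 = \sigma_x^2/n$, namely, as the number of samples grows, the estimate Y of $\mu_x$ becomes closer and closer to the exact average of X.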

Note that if X is the sum of a deterministic signal embedded in a zero-mean noise, then $\mu_x$ is just the average value of the deterministic signal over the sampling interval, and therefore Y is an estimate of that average.
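
The result that the variance of the sample mean is the single-sample variance divided by n can be illustrated with a small Monte Carlo experiment. This is a sketch under assumed conditions: the samples are drawn uniformly on [-1/2, 1/2], which mimics quantization noise with a = 1 and variance 1/12. The function name, trial count, and seed are arbitrary choices.

```python
import random
import statistics


def sample_mean_variance(n, trials=20_000, seed=1):
    """Empirical variance of Y = (1/n) * sum(X_i) for X_i uniform on [-0.5, 0.5]."""
    rng = random.Random(seed)
    means = []
    for _ in range(trials):
        xs = [rng.uniform(-0.5, 0.5) for _ in range(n)]
        means.append(sum(xs) / n)
    return statistics.pvariance(means)


if __name__ == "__main__":
    sigma_x_sq = 1.0 / 12.0  # variance of one uniform sample with a = 1
    for n in (1, 4, 16):
        print(n, sample_mean_variance(n), sigma_x_sq / n)
```

Each fourfold increase of n should cut the measured variance by about a factor of four, that is, by about 6 dB.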

Effect of over-sampling on ADC accuracy

The sample mean yields the following conclusion: if we over-sample a signal together with an added zero-mean noise as before, then as the number of samples increases (as the over-sampling becomes faster) the estimator Y approaches the average of the signal over the sampling interval. Note that, if the signal is constant over the sampling interval, then $\sigma_x^2$ is just the variance of the quantization noise, which will be larger if one has fewer bits, and thus more samples (a higher over-sampling) will be required to reduce it. Over-sampling K times can be viewed as spreading the quantization noise over a bandwidth K times wider than the Nyquist bandwidth.

From the relation $\sigma_y^2 = \sigma_x^2/n$ we see that the quantization noise power is reduced by 6 dB for each 4x over-sampling, namely when $n \to 4n$. In our previous example, if we used a baseband sampling rate of Nyquist x 16 = (2 x 9) x 16 ksamples/s = 288 ksamples/s, we could use only 13 bits for the ADC and obtain the same $SNR_O$. In fact, if the over-sampling could be high enough, one could obtain any equivalent SNR by using only one bit for the ADC.

Simple over-sampling, however, does not reduce the quantization noise fast enough, and leads to over-sampling rates that are not practical for some applications. For instance, if one wishes to use a one-bit quantizer and obtain 4-bit effective resolution, the over-sampling ratio required for the 3 additional effective bits would be $4^3 = 64$, which looks reasonable, but 16-bit resolution would require an over-sampling ratio of $4^{15}$, which is not realizable.
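
The trade-off between over-sampling ratio and effective bits can be tabulated directly. The sketch below only restates the rule that plain over-sampling buys one effective bit per factor of four; the function names are illustrative.

```python
import math


def oversampling_for_extra_bits(extra_bits):
    """Over-sampling ratio needed to gain `extra_bits` by plain averaging."""
    return 4 ** extra_bits


def bits_gained(oversampling_ratio):
    """Effective bits gained by plain over-sampling: 10*log10(K) dB at 6.02 dB/bit."""
    gain_db = 10.0 * math.log10(oversampling_ratio)
    return gain_db / (20.0 * math.log10(2.0))


if __name__ == "__main__":
    print(oversampling_for_extra_bits(3))    # one-bit quantizer to 4 effective bits
    print(oversampling_for_extra_bits(15))   # one-bit quantizer to 16 effective bits
    print(bits_gained(16))                   # the 288 ksamples/s case of the example
```

With a ratio of 16 the gain is two bits, which is how the 15-bit requirement of the earlier example drops to 13 bits.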

This obstacle can be overcome by re-shaping the quantization noise so that more power will fall

out of the Nyquist bandwidth during the over-sampling process. This process is carried out with

an ADC architecture known as Sigma-Delta.


First-order Sigma-delta ADC

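[Figure: first-order sigma-delta ADC block diagram. Labels: input f(t), summing junction (+/-), integrator with output $F_{n+1}$, comparator, latch clocked by Clk with one-bit output, and DAC producing the feedback signal S(t).]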

Referring to the figure, let f(t) be an analog signal of bandwidth much smaller than the acquisition frequency 1/T, and with $|f(t)| < K$.

Denoting the clock period by $\tau$, and the acquisition time by $T = N\tau$, $N \gg 1$, let $F_n$ be the value of the output of the integrator at the instant $t = n\tau$ at which the nth clock occurs.

Assuming that the comparator outputs a logic 1 if $F_n \ge 0$ or a logic 0 if $F_n < 0$, and that the DAC outputs +K or -K in correspondence to Q = 1 or Q = 0 respectively, we get

$$F_N - F_0 = \int_0^T [f(t) - S(t)]\,dt = \int_0^T \epsilon(t)\,dt, \qquad \epsilon(t) = f(t) - S(t)$$

where $\epsilon(t)$ is the quantization error at the output at any instant t, and $S_n$ is the 1-bit quantized (bipolar) output value at the same instant, with

$$S(t) = \sum_n S_n\,\chi_n(t), \qquad S_n = K\,\mathrm{sign}(F_n), \qquad \chi_n(t) = \begin{cases} 1, & n\tau \le t < (n+1)\tau \\ 0, & \text{else} \end{cases}$$

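Then we may write

$$F_N - F_0 = \int_0^T [f(t) - S(t)]\,dt = \sum_{n=0}^{N-1}\int_{n\tau}^{(n+1)\tau}[f(t) - S_n]\,dt = \sum_{n=0}^{N-1}\int_{n\tau}^{(n+1)\tau}f(t)\,dt - \sum_{n=0}^{N-1}S_n\int_{n\tau}^{(n+1)\tau}dt$$

$$= \int_0^T f(t)\,dt - \tau\sum_{n=0}^{N-1}S_n = \int_0^T f(t)\,dt - T\,\frac{1}{N}\sum_{n=0}^{N-1}S_n$$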

For any starting value, since $|f(t)| < K$, we note that the sequence $\{F_n\}$ is bounded, for if $F_n \ge 0$ then the input to the integrator will be negative, thus $F_{n+1} < F_n$, and similarly, if $F_n < 0$ the input to the integrator will be positive, thus $F_{n+1} > F_n$. Therefore, no matter where it started, $\{F_n\}$ will end up oscillating near zero with alternating sign. Since $|f(t)| < K$ and $F_n = |F_n|\,\mathrm{sign}(F_n)$, we get

$$|F_{n+1}| = \Big|F_n + \int_{n\tau}^{(n+1)\tau}[f(t) - S_n]\,dt\Big| = \Big||F_n|\,\mathrm{sign}(F_n) + \int_{n\tau}^{(n+1)\tau}f(t)\,dt - K\,\mathrm{sign}(F_n)\,\tau\Big|$$

$$= \Big|(|F_n| - K\tau)\,\mathrm{sign}(F_n) + \int_{n\tau}^{(n+1)\tau}f(t)\,dt\Big| < \big||F_n| - K\tau\big| + K\tau$$

This implies that $\{|F_n|\}$ decreases monotonically with n as long as $|F_n| > 2K\tau$. Indeed

$$|F_n| = 2K\tau + \xi^2 \;\Rightarrow\; |F_{n+1}| < |F_n| - K\tau + K\tau = 2K\tau + \xi^2 \;\Rightarrow\; |F_{n+1}| < |F_n|$$

Moreover, for any $|F_0| < \infty$, there always exists a value of $n \ge 0$ for which $|F_n| \le 2K\tau$, since

$$|F_{n+1} - F_n| = \Big|\int_{n\tau}^{(n+1)\tau}[f(t) - S_n]\,dt\Big| \le \int_{n\tau}^{(n+1)\tau}|f(t) - S_n|\,dt \le \int_{n\tau}^{(n+1)\tau}[\,|f(t)| + K\,]\,dt < 2K\tau$$

and therefore there is no possibility that $F_n$ will bypass the gap $[-2K\tau, 2K\tau]$. Once $F_n$ enters the range $|F_n| \le 2K\tau$, it remains bounded. Indeed

$$|F_n| \le 2K\tau \;\Rightarrow\; |F_{n+1}| < \big||F_n| - K\tau\big| + K\tau \le 2K\tau$$

Therefore, if we started with $F_0 = 0^+$, or after several clocks, $\{F_n\}$ remains bounded. In other words, for N large enough $F_N = O(2K\tau)$, thus, from the equation for $F_N$ we get

$$\int_0^T f(t)\,dt - T\,\frac{1}{N}\sum_{n=0}^{N-1}S_n = O(2K\tau) = O(2KT/N)$$

which, normalizing over the acquisition time, yields

$$\frac{1}{T}\int_0^T f(t)\,dt = \frac{1}{N}\sum_{n=0}^{N-1}S_n + O(2K/N) = K\Big[\frac{1}{N}\sum_{n=0}^{N-1}\mathrm{sign}(F_n) + O(2/N)\Big]$$

In other words, for any given acquisition interval $[t_0, t_0+T]$, the average value of f(t) is K times the average of the comparator signs, with a relative error of the order of magnitude of 2/N.

If N is taken to be a power of two, say $N = 2^m$, all we need to do in order to obtain the A/D conversion value is to attach an up/down counter to the clock, with the up/down control connected to the Q bit of the latch. Then, denoting by $\{Q_0, Q_1, \ldots, Q_{D-1}\}$ the output word of the counter, the output word of the A/D is taken to be its mth left-shift, which is the same as the value obtained by dividing the result by N. The figure shows an exemplary simulation of the convergence of the sigma-delta A/D converter as described, with K = 1, N = 128, $F_1 = 0.67$, and $f(t_0) = -0.41$. The approximation error is 2.8%, which is in good agreement with the order of magnitude 2/128 = 1.6%.

[Figure: output value versus clock number. Output value after 128 clocks: -0.3984]

If we increase N about ten times, namely N = 1024, then the output value becomes -0.40918, and the approximation error reduces to 0.2%, in close agreement with 2/1024 = 0.195%.
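
The loop described above is easy to simulate. The following is a minimal sketch, not the simulation used for the figure: it assumes a constant input, a clock period normalized to 1, and it interprets the quoted value 0.67 as the initial integrator state, so it is not expected to reproduce the quoted output values digit for digit. All names are hypothetical.

```python
def sigma_delta_average(f_const, n_clocks, k=1.0, f_start=0.67, tau=1.0):
    """First-order sigma-delta loop with a constant input, |f_const| < k.

    Returns K times the average of the comparator signs over n_clocks,
    which estimates the average of the input over the acquisition time.
    """
    integrator = f_start                 # assumed initial integrator value
    up_down_count = 0
    for _ in range(n_clocks):
        bit = 1 if integrator >= 0 else 0          # comparator and latch
        s_n = k if bit else -k                     # DAC output: +K or -K
        up_down_count += 1 if bit else -1          # up/down counter
        integrator += (f_const - s_n) * tau        # integrate f - S_n over one clock
    return k * up_down_count / n_clocks            # divide the count by N


if __name__ == "__main__":
    for n in (128, 1024):
        estimate = sigma_delta_average(-0.41, n)
        print(n, estimate, abs(estimate + 0.41))
```

Because the integrator stays within $\pm 2K\tau$, the error of the estimate falls off as 1/N, consistent with the order-of-magnitude figure 2/N used in the text.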

From the previous analysis we see that, at any clock shift k and any set $\{S_{n+k}\}$, as the sampling rate increases, the average of the bipolar values over a time T at the output tends to the limit

$$\mu_{S,k} \equiv \lim_{N\to\infty}\frac{1}{N}\sum_{n=0}^{N-1}S_{n+k} = \frac{1}{T}\int_{k\tau}^{k\tau+T}f(t)\,dt$$

and assuming that all the random processes we deal with are ergodic, then $\mu_{S,k} = E[S_{n+k}]$, where $E[\,\cdot\,]$ denotes the expected value.

Now, from the previous results, and setting $Y_k = \frac{1}{N}\sum_{n=0}^{N-1}S_{n+k}$, we get

$$E\Big[\Big(\int_{k\tau}^{k\tau+T}f(t)\,dt - T\,\frac{1}{N}\sum_{n=0}^{N-1}S_{n+k}\Big)^2\Big] = E[(F_{N+k} - F_k)^2] = E[(TY_k - T\mu_{S,k})^2]$$

Assuming that $\{F_n\}$ are statistically independent zero-mean random variables, we get

$$E[(F_{N+k} - F_k)^2] = E[F_{N+k}^2] + E[F_k^2] = 2E[F_n^2]$$

and since $|F_n| \le 2K\tau$, we estimate the rms value at the output of the integrator as $\sqrt{E[F_n^2]} \approx \sqrt{2}\,K\tau$, thus $E[F_n^2] = 2(K\tau)^2$, from which it follows that

$$E[(F_{N+k} - F_k)^2] = 2E[F_n^2] = (2K\tau)^2$$

Substituting the above results, we obtain $T^2E[(Y_k - \mu_{S,k})^2] = (2K\tau)^2$ and therefore

$$\sigma_Y^2 \equiv E[(Y_k - \mu_{S,k})^2] = \frac{(2K\tau)^2}{T^2} = \frac{4K^2}{N^2}$$

where we used the fact that $T = N\tau$. Also we note that $E[Y_k] = \frac{1}{N}\sum_{n=0}^{N-1}E[S_{n+k}] = \mu_{S,k}$.

If now we average N consecutive values $Y_k, Y_{k+1}, \ldots, Y_{k+N-1}$, which, as we show later, is equivalent to low-pass filtering the sequence $\{Y_k\}$, we obtain the sample mean estimator

$$Z_k = \frac{1}{N}\sum_{n=0}^{N-1}Y_{n+k}, \qquad E[Z_k] = E[Y_k] = E[S_{n+k}] = \mu_{S,k}$$

By the properties of the sample mean, the variance $\sigma_Z^2 \equiv E[(Z_k - \mu_{S,k})^2]$ satisfies

$$\sigma_Z^2 = \frac{\sigma_Y^2}{N} = \frac{4K^2}{N^3}$$

and $\sigma_Z^2$ is the quantization noise power if f(t) can be considered constant over a time period T.

For a full-scale signal of peak value $V_{peak} = K$, and if f(t) can be considered constant during the acquisition time, the peak signal-to-noise ratio obtained using the estimator Z is

$$SNR = \frac{V_{peak}^2}{\sigma_Z^2} = \frac{N^3}{4}$$

Now if the Nyquist rate is $B_s = 1/T$, and the sampling rate is $B_{os} = 1/\tau$, then

$$\frac{B_{os}}{B_s} = \frac{T}{\tau} = N$$

For instance, for 4x over-sampling we get N = 4, and then

$$SNR|_{dB} \approx 10\log(4^3/4) \approx 12 \text{ dB}$$

Since the SNR is proportional to $N^3$, there is a 9 dB improvement each time the sampling rate is doubled, as opposed to the case of simple over-sampling, where the SNR is proportional to N and there is an improvement of only 3 dB (6 dB for a 4x sampling rate increase).
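
The two scaling laws can be compared side by side. This sketch simply evaluates the expressions $N^3/4$ and N in decibels; the function names are illustrative.

```python
import math


def sigma_delta_snr_db(n):
    """Peak SNR of the first-order sigma-delta estimator: N**3 / 4, in dB."""
    return 10.0 * math.log10(n ** 3 / 4.0)


def plain_oversampling_gain_db(n):
    """SNR gain of plain over-sampling by N: proportional to N, in dB."""
    return 10.0 * math.log10(n)


if __name__ == "__main__":
    for n in (4, 8, 16, 64):
        print(n, round(sigma_delta_snr_db(n), 2), round(plain_oversampling_gain_db(n), 2))
```

Doubling N adds 30 log10(2), about 9 dB, in the first column and only about 3 dB in the second.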

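Now we show that averaging N samples $f(n\tau)$ of an arbitrary function f(t) of bandwidth $A = 2\pi(1/2\tau)$, where $T = N\tau$, is roughly equivalent to taking one sample of the same function f(t) after filtering it with a filter of bandwidth $B = 2\pi(1/2T)$. To see this, let us write $f(n\tau)$ with the help of its inverse Fourier transform, with $\hat f(\omega)$ denoting the Fourier transform of f(t):

$$\frac{1}{N}\sum_{n=0}^{N-1}f(n\tau + kT) = \frac{1}{N}\sum_{n=0}^{N-1}\frac{1}{2\pi}\int_{-A}^{A}\hat f(\omega)e^{j\omega n\tau}e^{j\omega kT}\,d\omega = \frac{1}{2\pi}\int_{-A}^{A}\hat f(\omega)e^{j\omega kT}\,\frac{1}{N}\sum_{n=0}^{N-1}e^{j\omega n\tau}\,d\omega$$

$$= \frac{1}{2\pi}\int_{-A}^{A}\hat f(\omega)e^{j\omega kT}\,\frac{1}{N}\,\frac{e^{j\omega N\tau} - 1}{e^{j\omega\tau} - 1}\,d\omega = \frac{1}{2\pi}\int_{-A}^{A}\hat f(\omega)e^{j\omega kT}\,\frac{e^{j\omega N\tau/2}}{e^{j\omega\tau/2}}\,\frac{\sin(\omega N\tau/2)}{N\sin(\omega\tau/2)}\,d\omega$$

$$\approx \frac{1}{2\pi}\int_{-A}^{A}\hat f(\omega)\,\frac{\sin(\omega T/2)}{\omega T/2}\,e^{j\omega(kT+T/2)}\,d\omega \approx \frac{1}{2\pi}\int_{-B}^{B}\hat f(\omega)e^{j\omega(kT+T/2)}\,d\omega \approx g(kT + T/2), \qquad \hat g(\omega) = \hat f(\omega)\,\frac{\sin(\omega T/2)}{\omega T/2}$$

where we used the fact that the filter response drops to $1/\sqrt{2}$ near the band edge B,

$$\frac{\sin(\omega T/2)}{\omega T/2} = \frac{1}{\sqrt{2}} \;\Rightarrow\; \frac{\omega T}{2} = \pi T f \approx 1.392 \;\Rightarrow\; f \approx 0.44\,\frac{1}{T} \approx \frac{1}{2T}$$

and also we approximated, in the range $|\omega| \le B$,

$$\sin(\omega\tau/2) \approx \omega\tau/2, \qquad e^{-j\omega\tau/2} \approx 1, \qquad \omega\tau/2 = \omega T/2N \le \pi/2N \ll 1, \qquad N \gg 1$$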

Therefore, the Sigma-Delta operation can be seen as if we spread the quantization noise over a bandwidth much larger than the signal bandwidth, reshape it so that most of the noise energy lies outside the signal band (in the higher portion of the spectrum), and then apply low-pass filtering at the signal bandwidth, thus leaving out more noise than would be possible by just over-sampling.
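
The low-pass behavior of the N-sample average can be checked numerically from the closed form of the geometric sum, $|\sin(\omega N\tau/2) / (N\sin(\omega\tau/2))|$. The sketch below is illustrative only; the function name and the values N = 128 and τ = 1 µs are assumptions chosen for the demonstration.

```python
import math


def averaging_gain(freq_hz, n, tau):
    """Magnitude response of averaging n samples spaced tau seconds apart."""
    half_phase = math.pi * freq_hz * tau          # omega * tau / 2
    denom = n * math.sin(half_phase)
    if abs(denom) < 1e-15:
        return 1.0                                # limit at f = 0 and at multiples of 1/tau
    return abs(math.sin(n * half_phase) / denom)


if __name__ == "__main__":
    n, tau = 128, 1e-6
    t_acq = n * tau                               # acquisition time T = N * tau
    for scale in (0.1, 0.443, 0.5, 1.0):
        print(scale, averaging_gain(scale / t_acq, n, tau))
```

The gain falls to about $1/\sqrt{2}$ at f ≈ 0.44/T and has its first null at f = 1/T, which is why the average behaves like a low-pass filter of bandwidth roughly 1/(2T).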


OSCILLATORS

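[Figure: oscillator model. Labels: input voltage $V_i(\omega)$ (+/-), non-linear current source G(V) with output current $I_0(\omega)$, feedback network $Z(\omega)$ with output voltage $V_0(\omega)$ (+/-).]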

Oscillator components

I) A voltage-controlled non-linear current source driven by a controlling input voltage $v_i(t) = V\cos(\omega_i t)$, where V can be assumed constant over time as compared to the angular frequency $\omega_i$.

Then $I_0(\omega) = G(V)V_i(\omega)$, where G(V) is a non-linear function of the peak amplitude V, monotonically decreasing in absolute value as V increases.

II) A trans-impedance resonant linear feedback network with transfer function $Z(\omega)$

$$Z(\omega) \equiv \frac{V_0(\omega)}{I_0(\omega)} = \frac{R}{1 + j2Q\,\Delta\omega/\omega_0}, \qquad \omega > 0$$

In general, the larger the value of Q, the better the oscillator noise performance.

$V_0(\omega)$ can be written in the form

$$V_0(\omega) = Z(\omega)I_0(\omega) = Z(\omega)\,G(V)\,V_i(\omega)$$

If G(V) can adjust itself so that $V_0(\omega) = V_i(\omega)$ for some value of $\omega$ and V, then the output of the feedback network can be connected to the input of the current source, and the output current $I_0(\omega)$ will continue to exist without the need of an external input signal $V_i(\omega)$, which means that oscillations occur.

In other words, an oscillation state must satisfy the complex-valued non-linear equation known as the Barkhausen criterion

$$Z(\omega)\,G(V) = 1 \;\iff\; \mathrm{Im}[Z(\omega)G(V)] = 0, \quad \mathrm{Re}[Z(\omega)G(V)] = 1$$


π-topology, Colpitts & common input equivalence

[Figure: three equivalent oscillator arrangements of the current source G(V) and the impedances $Z_1$, $Z_2$, $Z_3$: π-topology, Colpitts, and common input.]

- The non-linear transfer function G(V) determines the operating point of the oscillator.

- The critical value to design for is the peak oscillating voltage at the limiting port of the non-linear current source.

- The oscillating voltage amplitude at the limiting port determines the far-out phase noise of the oscillator (the noise floor).

- The quality factor of the transfer function $Z(\omega)$, including input and output loading (the loaded Q), determines the close-in phase noise.


Basic feedback network

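In the following, we assume high-Q behavior of the resonant circuit, therefore the transfer function of the network in the figure can be readily computed with the help of the zero-pole diagram approximation technique (left as an exercise)

[Figure: feedback network. Labels: input current $I_0(\omega)$, output voltage $V_0(\omega)$, capacitors $C_V$ and $C_I$.]

$$Z(\omega) \equiv \frac{V_0(\omega)}{I_0(\omega)} \approx -\frac{1}{\omega_0^2 C_I C_V r}\cdot\frac{1}{1 + j2Q_L\dfrac{\omega - \omega_0}{\omega_0}}, \qquad Q_L = \frac{\omega_0 L}{r}, \qquad \omega_0^2 = \frac{1}{L}\Big(\frac{1}{C_V} + \frac{1}{C_I}\Big)$$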

At $\omega = \omega_0$ the transfer function is real, thus the first condition of the Barkhausen criterion is satisfied, and since $Z(\omega_0) = -1/(\omega_0^2 C_I C_V r)$, if there exists a value of V that satisfies the non-linear equation

$$G(V) = -\omega_0^2 C_I C_V r$$

the second condition of the Barkhausen criterion is also satisfied, and therefore oscillations will occur and self-stabilize at that value.

- Note that the negative sign implies that we must use a phase-inverting active device.

- Since |G(V)| is a monotonically decreasing function, whenever V is smaller than the self-limiting value, the amplitude of the oscillations will continue to grow, which assures oscillator start-up following the presence of any small disturbance such as thermally generated noise.

Example: large-signal oscillations

[Figure: large-signal oscillator circuit. Labels: $+V_{cc}$, C, L, $C_b$, $v_{osc}(t)$, $C_e$, $I_{dc}$, $V_{bb}$ (+/-).]


Denoting the base-emitter oscillating voltage by $v_{osc}(t) = V_{dc} + V_{osc}\cos(\omega_0 t)$, assuming that neither collector saturation nor reverse junction breakdown occurs, that r represents all the resistive losses in the circuit, and given that $V_{osc} \gg 26$ mV, compute approximately $V_{osc}$.

Solution: The ac-equivalent circuit is shown below

[Figure: ac-equivalent circuit. Labels: L, current $I_{osc}\cos(\omega_0 t)$, voltage $V_{osc}\cos(\omega_0 t)$ (+), $C_e$, $C_b$.]

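Recall that for a bipolar transistor

$$i_C \approx i_E = I_{dc}\Big[1 + 2\sum_{n=1}^{\infty}\frac{I_n(x)}{I_0(x)}\cos(n\omega_0 t)\Big]$$

According to the voltage and current polarity given in the figure

$$I_{osc} = -2I_{dc}\,\frac{I_1(x)}{I_0(x)}, \qquad x = \frac{V_{osc}}{V_T}$$

At resonance

$$Z(\omega_0) = \frac{V_{osc}}{I_{osc}} = -\frac{1}{\omega_0^2 C_e C_b r}, \qquad \omega_0 = \sqrt{\frac{C_e + C_b}{L C_e C_b}}$$

and therefore

$$V_{osc} = \Big(-\frac{1}{\omega_0^2 C_e C_b r}\Big)\Big(-2I_{dc}\,\frac{I_1(x)}{I_0(x)}\Big)$$

Thus, we get a non-linear equation for x

$$x = \frac{I_{dc}/V_T}{\omega_0^2 C_e C_b r}\,2\,\frac{I_1(x)}{I_0(x)} = \frac{g_m}{\omega_0^2 C_e C_b r}\,2\,\frac{I_1(x)}{I_0(x)}$$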

For large values of x we may approximate $I_1(x)/I_0(x) \approx 1$ and therefore

$$V_{osc} \approx \frac{2I_{dc}}{\omega_0^2 C_e C_b r}, \qquad V_{osc} \gg 26 \text{ mV}$$
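
The non-linear equation for x can also be solved numerically instead of using the large-x approximation. The sketch below is one possible approach, not the method of the notes: it evaluates the modified Bessel functions by their power series (adequate for x up to roughly 30) and uses a plain fixed-point iteration. The name `loop_ratio` for the factor $(I_{dc}/V_T)/(\omega_0^2 C_e C_b r)$ is introduced here for illustration.

```python
import math


def bessel_i(order, x, terms=60):
    """Modified Bessel function of the first kind I_order(x), power series."""
    total = 0.0
    for k in range(terms):
        total += (x / 2.0) ** (2 * k + order) / (
            math.factorial(k) * math.factorial(k + order)
        )
    return total


def solve_drive_level(loop_ratio, iterations=200):
    """Solve x = 2 * loop_ratio * I1(x) / I0(x) by fixed-point iteration.

    Starts from the large-signal estimate x = 2 * loop_ratio and iterates
    downward; for loop_ratio <= 1 the iteration decays toward x = 0.
    """
    x = 2.0 * loop_ratio
    for _ in range(iterations):
        x = 2.0 * loop_ratio * bessel_i(1, x) / bessel_i(0, x)
    return x


if __name__ == "__main__":
    v_t = 26e-3
    for ratio in (0.5, 2.0, 10.0):
        x = solve_drive_level(ratio)
        print(ratio, x, x * v_t)   # x and the corresponding V_osc = x * V_T
```

For large values of the ratio the solution approaches x = 2 × loop_ratio, which is the approximation $V_{osc} \approx 2I_{dc}/(\omega_0^2 C_e C_b r)$ given above.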


Example: oscillator start-up

In the previous example, what is the smallest value of $I_{dc}$ for which oscillations will start?

Solution: At the limit where oscillations just start, the value of x will be small, thus, since $I_1(x)/I_0(x) \approx x/2$ for small x, we may write

$$x = \frac{I_{dc}/V_T}{\omega_0^2 C_e C_b r}\,2\,\frac{I_1(x)}{I_0(x)} \approx \frac{I_{dc}/V_T}{\omega_0^2 C_e C_b r}\,x, \qquad x \ll 1$$

Therefore, the requirement for oscillation start is

$$\frac{I_{dc}/V_T}{\omega_0^2 C_e C_b r} \ge 1 \;\Rightarrow\; I_{dc} \ge V_T\,\omega_0^2 C_e C_b r$$

Exercise:

Neglecting the ac loading of $R_b$, $R_c$ and $R_e$, assuming that the transistor base-emitter capacitance is 5 pF, and assuming that the base-emitter dc voltage is about 0.6 Vdc, compute approximately:

1) The oscillating frequency $f_0$

2) The peak oscillating base-emitter voltage $V_{osc}$ for r = 30 Ω

3) The smallest value of $V_{cc}$ for which oscillations will start

Circuit values:

$V_{cc}$ = 10 V

L = 130 nH

$C_e$ = 20 pF

$C_b$ = 15 pF

$R_b = R_c$ = 33 kΩ

$R_e$ = 5 kΩ

$h_{FE}$ = 300

[Figure: exercise oscillator circuit. Labels: $+V_{cc}$, $R_b$, $R_c$, $R_e$, C, C, $C_b$, $C_e$, $v_{osc}(t)$.]

Answers:

1) $f_0 \approx 139.6$ MHz

2) $V_{osc} \approx 186$ mV

3) $V_{cc}$ = 3.7 V
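
Part of the exercise can be checked with a few lines of arithmetic. The sketch below makes one labeled assumption: the 5 pF base-emitter capacitance is taken in parallel with $C_b$, which is consistent with the quoted $f_0$. It does not model the bias network, so the dc current is left as an input rather than derived from $V_{cc}$. All names are illustrative.

```python
import math

L_H = 130e-9              # inductor L
C_E = 20e-12              # Ce
C_B = 15e-12 + 5e-12      # assumption: Cb in parallel with the 5 pF base-emitter capacitance
R_LOSS = 30.0             # r, total resistive losses
V_T = 26e-3               # thermal voltage


def omega0_squared(l, ce, cb):
    """w0**2 = (Ce + Cb) / (L * Ce * Cb)."""
    return (ce + cb) / (l * ce * cb)


def resonance_hz(l, ce, cb):
    return math.sqrt(omega0_squared(l, ce, cb)) / (2.0 * math.pi)


def startup_current(l, ce, cb, r, v_t=V_T):
    """Smallest I_dc for start-up: V_T * w0**2 * Ce * Cb * r."""
    return v_t * omega0_squared(l, ce, cb) * ce * cb * r


def large_signal_vosc(i_dc, l, ce, cb, r):
    """Large-signal amplitude: 2 * I_dc / (w0**2 * Ce * Cb * r)."""
    return 2.0 * i_dc / (omega0_squared(l, ce, cb) * ce * cb * r)


if __name__ == "__main__":
    print(resonance_hz(L_H, C_E, C_B) / 1e6, "MHz")
    print(startup_current(L_H, C_E, C_B, R_LOSS) * 1e3, "mA")
    # Under this formula, the quoted 186 mV corresponds to I_dc of about 0.86 mA.
    print(large_signal_vosc(0.8585e-3, L_H, C_E, C_B, R_LOSS) * 1e3, "mV")
```

Converting the start-up current of about 0.24 mA into a minimum $V_{cc}$ requires the dc bias analysis of the circuit, which is not reproduced here.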

The simulation is shown below. The simulated amplitude is about 80% of the computed value, because the neglected ac loading of the resistors effectively increases r.

[Figure: simulated oscillation waveform. Markers: 7.3 ns, 290 mV.]

You might also like

- Lesson 4 Analog To Digital ConversionDocument27 pagesLesson 4 Analog To Digital ConversionChantaelNimerNo ratings yet

- Mathematical Modelling of Sampling ProcessDocument6 pagesMathematical Modelling of Sampling ProcessNishant SaxenaNo ratings yet

- Chemrite - 520 BADocument2 pagesChemrite - 520 BAghazanfar50% (2)

- Emcs - 607P 11-07-2014Document68 pagesEmcs - 607P 11-07-2014abdulbari.abNo ratings yet

- Digital Communication Vtu PaperDocument37 pagesDigital Communication Vtu PaperAnanth SettyNo ratings yet

- Lab 9: Timing Error and Frequency Error: 1 Review: Multi-Rate SimulationDocument15 pagesLab 9: Timing Error and Frequency Error: 1 Review: Multi-Rate Simulationhaiduong2010No ratings yet

- Unit-1 EeeDocument65 pagesUnit-1 EeeVinoth MuruganNo ratings yet

- Input Signal Amplitude (Between 2 Dashed Line) Quantized ValueDocument5 pagesInput Signal Amplitude (Between 2 Dashed Line) Quantized ValueFiras Abdel NourNo ratings yet

- Digital Modulation 1Document153 pagesDigital Modulation 1William Lopez JaramilloNo ratings yet

- Problemas Parte I Comunicaciones DigitalesDocument17 pagesProblemas Parte I Comunicaciones DigitalesNano GomeshNo ratings yet

- Digital Signal Processing NotesDocument18 pagesDigital Signal Processing NotesDanial ZamanNo ratings yet

- Digital Signal Processing: b, where (a, b) can be (t t x πDocument5 pagesDigital Signal Processing: b, where (a, b) can be (t t x πSugan MohanNo ratings yet

- ECE 410 Digital Signal Processing D. Munson University of IllinoisDocument10 pagesECE 410 Digital Signal Processing D. Munson University of IllinoisFreddy PesantezNo ratings yet

- DegitalDocument7 pagesDegitalRICKNo ratings yet

- Topic20 Sampling TheoremDocument6 pagesTopic20 Sampling TheoremManikanta KrishnamurthyNo ratings yet

- DSP-1 (Intro) (S)Document77 pagesDSP-1 (Intro) (S)karthik0433No ratings yet

- EE 3200, Part 13, Miscellaneous TopicsDocument7 pagesEE 3200, Part 13, Miscellaneous TopicsMuhammad Raza RafiqNo ratings yet

- Lecture #5, Fall 2003: ECE 6602 Digital CommunicationsDocument12 pagesLecture #5, Fall 2003: ECE 6602 Digital Communicationsshakr123No ratings yet

- Frequency Domain StatisticsDocument12 pagesFrequency Domain StatisticsThiago LechnerNo ratings yet

- Noise in ModulationDocument64 pagesNoise in ModulationDebojyoti KarmakarNo ratings yet

- Chap 5Document12 pagesChap 5Ayman Younis0% (1)

- 1 IEOR 4700: Notes On Brownian Motion: 1.1 Normal DistributionDocument11 pages1 IEOR 4700: Notes On Brownian Motion: 1.1 Normal DistributionshrutigarodiaNo ratings yet

- 1 Synchronization and Frequency Estimation Errors: 1.1 Doppler EffectsDocument15 pages1 Synchronization and Frequency Estimation Errors: 1.1 Doppler EffectsRajib MukherjeeNo ratings yet

- LezDocument13 pagesLezfgarufiNo ratings yet

- Hw6 Solution MAE 107 UclaDocument24 pagesHw6 Solution MAE 107 UclaMelanie Lakosta LiraNo ratings yet

- End Sem Solution 2020Document16 pagesEnd Sem Solution 2020Sri ENo ratings yet

- Tutorial 8Document4 pagesTutorial 8Sharath PawarNo ratings yet

- PS403 - Digital Signal Processing: III. DSP - Digital Fourier Series and TransformsDocument37 pagesPS403 - Digital Signal Processing: III. DSP - Digital Fourier Series and TransformsLANRENo ratings yet

- DigComm Fall09-Chapter4Document81 pagesDigComm Fall09-Chapter4rachrajNo ratings yet

- Data Transmission ExercisesDocument23 pagesData Transmission ExercisesSubash PandeyNo ratings yet

- HTVT 2Document153 pagesHTVT 2Vo Phong PhuNo ratings yet

- Digital Signal Processing - 1Document77 pagesDigital Signal Processing - 1Sakib_SL100% (1)

- Eecs 554 hw2Document3 pagesEecs 554 hw2Fengxing ZhuNo ratings yet

- Ejercicios de Comunicacion de DatosDocument7 pagesEjercicios de Comunicacion de DatosGaston SuarezNo ratings yet

- 1 Coherent and Incoherent Modulation in OFDM: 1.1 Review of Differential ModulationDocument15 pages1 Coherent and Incoherent Modulation in OFDM: 1.1 Review of Differential ModulationRajib MukherjeeNo ratings yet

- ELEN443 Project II BPSK ModulationDocument18 pagesELEN443 Project II BPSK ModulationZiad El SamadNo ratings yet

- Lab # 7: Sampling: ObjectiveDocument10 pagesLab # 7: Sampling: ObjectiveIbad Ali KhanNo ratings yet

- 183 EEE311Lesson2 PDFDocument35 pages183 EEE311Lesson2 PDFMasud SarkerNo ratings yet

- Optimal Reception of Digital SignalsDocument40 pagesOptimal Reception of Digital SignalsKaustuv Mishra100% (1)

- DPCMDocument12 pagesDPCMYENDUVA YOGESWARINo ratings yet

- Frequency Offset Reduction Methods in OFDM: Behrouz Maham Dr. Said Nader-EsfahaniDocument59 pagesFrequency Offset Reduction Methods in OFDM: Behrouz Maham Dr. Said Nader-EsfahaniomjikumarpandeyNo ratings yet

- CTFT DTFT DFTDocument6 pagesCTFT DTFT DFTZülal İlayda ÖzcanNo ratings yet

- Sampling and ReconstructionDocument40 pagesSampling and ReconstructionHuynh BachNo ratings yet

- Chap 5 120722024550 Phpapp02Document12 pagesChap 5 120722024550 Phpapp02Jowi Vega0% (1)

- Lecture 4 DPCMDocument9 pagesLecture 4 DPCMFiras Abdel NourNo ratings yet

- Questions: Practice SetDocument6 pagesQuestions: Practice SetAseel AlmamariNo ratings yet

- Digital Communication Ece 304 Assignment-1Document9 pagesDigital Communication Ece 304 Assignment-1ramjee26No ratings yet

- Fourier MathcadDocument11 pagesFourier MathcadAlberto OlveraNo ratings yet

- The Hong Kong Polytechnic University Department of Electronic and Information EngineeringDocument6 pagesThe Hong Kong Polytechnic University Department of Electronic and Information EngineeringSandeep YadavNo ratings yet

- 1.0 Complex Sinusoids: 1.1 The DTFTDocument7 pages1.0 Complex Sinusoids: 1.1 The DTFTmokorieboyNo ratings yet

- Continous Time Signal Vs Discrete Time SignalDocument9 pagesContinous Time Signal Vs Discrete Time SignalFairoz UsuNo ratings yet

- Introduction To Digital CommunicationsDocument44 pagesIntroduction To Digital CommunicationsKvnsumeshChandraNo ratings yet

- DSP Manual CompleteDocument52 pagesDSP Manual CompletevivekNo ratings yet

- Electronics 3 Checkbook: The Checkbooks SeriesFrom EverandElectronics 3 Checkbook: The Checkbooks SeriesRating: 5 out of 5 stars5/5 (1)

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet

- Ten-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesFrom EverandTen-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesNo ratings yet

- Compound Words, Blends and Phrasal Words: Hamza Shahid 40252193020Document12 pagesCompound Words, Blends and Phrasal Words: Hamza Shahid 40252193020Hamza ShahidNo ratings yet

- PPT. 21st Century 23-24-1st ClassDocument40 pagesPPT. 21st Century 23-24-1st ClassLove AncajasNo ratings yet

- MDM4U Formula Sheet New 2021Document2 pagesMDM4U Formula Sheet New 2021Andrew StewardsonNo ratings yet

- Linear Algebra in Economic AnalysisDocument118 pagesLinear Algebra in Economic AnalysisSwapnil DattaNo ratings yet

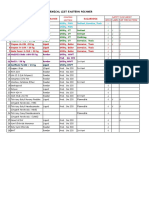

- Chemical List Eastern PolymerDocument1 pageChemical List Eastern PolymerIan MardiansyahNo ratings yet

- Biosafety Cabinets ATCDocument13 pagesBiosafety Cabinets ATCShivaniNo ratings yet

- Basic Electronics - JFET - TutorialspointDocument6 pagesBasic Electronics - JFET - Tutorialspointgunasekaran k100% (1)

- Tut 1Document2 pagesTut 1me21b105No ratings yet

- Introduction in Anesthesie, Critical Care and Pain ManagementDocument30 pagesIntroduction in Anesthesie, Critical Care and Pain ManagementSuvini GunasekaraNo ratings yet

- 02 Objective and Interpretive WorldviewsDocument26 pages02 Objective and Interpretive WorldviewsHazim RazamanNo ratings yet

- Commented List of The Lower Oligocene Fish Fauna From The Coza Valley (Marginal Folds Nappe, Eastern Carpathians, Romania)Document9 pagesCommented List of The Lower Oligocene Fish Fauna From The Coza Valley (Marginal Folds Nappe, Eastern Carpathians, Romania)Marian BordeianuNo ratings yet

- Theories and Causes of CrimeDocument15 pagesTheories and Causes of CrimeMartin OtienoNo ratings yet

- Open Source GMNSDocument124 pagesOpen Source GMNSHossain RakibNo ratings yet

- Datasheet Laminated (Stopsol + Clear)Document2 pagesDatasheet Laminated (Stopsol + Clear)stretfordend92No ratings yet

- Growel Guide To Fish Ponds Construction & ManagementDocument56 pagesGrowel Guide To Fish Ponds Construction & ManagementGrowel Agrovet Private Limited.100% (1)

- Joint WinnersJoint Winners of Hugo and Nebula Awards of Hugo and Nebula AwardsDocument1 pageJoint WinnersJoint Winners of Hugo and Nebula Awards of Hugo and Nebula AwardsLynnNo ratings yet

- Industrial Noise Control Manual PDFDocument357 pagesIndustrial Noise Control Manual PDFAnonymous cV8LSvyI100% (3)

- Engineered Rivers in Arid Lands Searching For Sustainability in Theory and Practice PDFDocument14 pagesEngineered Rivers in Arid Lands Searching For Sustainability in Theory and Practice PDFGuowei LiNo ratings yet

- Posthuman Critical Theory Rosi Braidotti in Posthuman GlossaryDocument4 pagesPosthuman Critical Theory Rosi Braidotti in Posthuman GlossaryLuis Herrero SierraNo ratings yet

- Corporate Social Responsibility and SustainabilityDocument15 pagesCorporate Social Responsibility and Sustainabilitybello adetoun moreNo ratings yet

- Boston University African Studies Center The International Journal of African Historical StudiesDocument6 pagesBoston University African Studies Center The International Journal of African Historical StudiesOswald MtokaleNo ratings yet

- Fecal Microbiota TransplantationDocument4 pagesFecal Microbiota TransplantationABCDNo ratings yet

- тестовик SAMPLE TESTS 2022-2023Document64 pagesтестовик SAMPLE TESTS 2022-2023Алена ДимоваNo ratings yet

- CNC TURNING MachineDocument14 pagesCNC TURNING MachineFaiz AhmedNo ratings yet

- Geography Chapter4Document21 pagesGeography Chapter4davedtruth2No ratings yet

- 2018 LLA Evaluation Forms - Final As of Sept 20, 2018Document25 pages2018 LLA Evaluation Forms - Final As of Sept 20, 2018nida dela cruz100% (1)

- A Survey On Deep Learning For Named Entity RecognitionDocument20 pagesA Survey On Deep Learning For Named Entity RecognitionAhmad FajarNo ratings yet

- Water PotentialDocument5 pagesWater PotentialTyrese BaxterNo ratings yet

- Contextual IntelligenceDocument10 pagesContextual IntelligenceTanya GargNo ratings yet