SAGE-AU Conference, Gold Coast, Australia, July 3-7 2000

UNIX RESOURCE MANAGERS

Capacity Planning and Performance Issues

Neil J. Gunther

Performance Dynamics Consulting

Castro Valley, California USA

http://www.perfdynamics.com/

ABSTRACT

The latest generation of UNIX includes facilities for automatically managing system resources such as

processors, disks, and memory. Traditional UNIX time-share schedulers attempt to be fair to all users by

employing a round-robin policy for allocating CPU time. Unfortunately, a loophole exists whereby the

scheduler can be biased in favor of a greedy user running many short CPU-demand processes. This

loophole is not a defect but an intrinsic property of the round-robin scheduler that ensures responsiveness to

the brief CPU demands associated with multiple interactive users. The new generation of UNIX constrains

the scheduler to be equitable to all users regardless of the number of processes each may be running. This

"fair-share" scheduling draws on the concept of pro rating resource "shares" across users and groups and

then dynamically adjusting CPU usage to meet those share proportions. This simple administrative notion

of statically allocating shares, however, belies the potential consequences for performance as measured by

user response time and service level targets. We examine several simple share allocation scenarios and the

surprising performance consequences with a view to establishing some guidelines for allocating shares.

INTRODUCTION

Capacity planning is becoming an increasingly important consideration for UNIX

system administrators as multiple workloads are consolidated onto single large servers,

and the procurement of those servers takes on the exponential growth of internet-based

business.

The latest implementations of commercial UNIX to offer mainframe-style

capacity management on enterprise servers include: AIX Workload Manager (WLM),

HP-UX Process Resource Manager (PRM), Solaris Resource Manager (SRM), SGI and

Compaq. The ability to manage server capacity is achieved by making significant

modifications to the standard UNIX operating system so that processes are inherently tied

to specific users. Those users, in turn, are granted only a certain fraction of system

resources. Resource usage is monitored and compared with each user's grant to ensure

that the assigned entitlement constraints are met.

Shared system resources that can be managed in this way include: processors,

memory, and mass storage. Prima facie, this appears to be exactly what is needed for a

UNIX system administrator to manage server capacity. State of the art UNIX resource

management, however, is only equivalent to that which was provided on mainframes

more than a decade ago, but it had to start somewhere. The respective UNIX operations

manuals spell out the virtues of each vendor's implementation, so the purpose of this

paper is to apprise the sysadm of their limitations for meeting certain performance

requirements.

2000 Performance Dynamics Consulting. All Rights Reserved. No part of this document may be reproduced in any

way without the prior written permission of the author. Permission is granted to SAGE-AU to publish this article for the

Conference in July 2000.

For example, an important resource management option is the ability to set

entitlement caps when actual resource consumption is tied to other criteria such as

performance targets and financial budgets. Another requirement is the ability to manage

capacity across multiple systems, e.g., shared-disk and NUMA clusters. As UNIX system

administrators become more familiar with the new automated resource management

facilities, it also becomes important that they learn some of the relevant capacity planning

methodologies that have already been established for mainframes.

In that spirit, we begin by clearing up some of the confusion that has surrounded

the motivation and the terminology behind the new technology. The common theme

across each of the commercial implementations is the introduction of a fair-share

scheduler. After reviewing the alternative scheduler, we examine some of the less

obvious potential performance pitfalls. Finally, we present some capacity planning

guidelines for migrating to automated UNIX resource management.

LOOPHOLES IN TIME

UNIX is fundamentally a time-share (TS) operating system [Ritchie & Thompson

1978] aimed at providing responsive service to multiple interactive users. Put simply, the

goal of generic UNIX is to create the illusion for each user that they are the only one

accessing system resources. This illusion is controlled by the UNIX time-share scheduler.

A time-share scheduler attempts to give all active processes equal access to

processing resources by employing a round-robin scheduling policy for allocating

processor time. Put simply, each process is placed in a run-queue and when it is serviced,

it gets a fixed amount of time running on the processor. This fixed time is called a

service quantum (commonly set to 10 milliseconds).

Processes that demand less than the service quantum generally complete

processor service without being interrupted. However, a process that exceeds its service

quantum has its processing interrupted and is returned toward the back of the run-queue

to await further processing. A natural consequence of this scheduling policy is that

processes with shorter processing demands are favored over longer ones because the

longer demands are always preempted when their service quantum expires.
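
The bias toward short demands can be illustrated with a toy simulation. The sketch below is not a kernel scheduler: the function name `round_robin`, the process labels, and the demand values are hypothetical, and context-switch overhead is ignored. It only assumes the 10 millisecond quantum mentioned above.

```python
from collections import deque

def round_robin(demands_ms, quantum_ms=10):
    """Return the completion time (ms) of each process under round-robin.

    demands_ms maps a hypothetical process name to its total CPU demand.
    """
    queue = deque(demands_ms.items())    # run-queue in arrival order
    clock = 0
    finished = {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum_ms, remaining)  # run for at most one quantum
        clock += run
        remaining -= run
        if remaining > 0:
            queue.append((name, remaining))  # preempted: back of the queue
        else:
            finished[name] = clock
    return finished

# One long job queued ahead of two short ones (illustrative values).
times = round_robin({"long": 50, "short1": 5, "short2": 5})
print(times)
```

In this example the two short jobs finish at 15 ms and 20 ms even though they arrived behind the long job, which does not finish until 60 ms. Served strictly in arrival order, the short jobs would have waited until 55 ms and 60 ms.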

Herein lies a loophole. The UNIX scheduler is biased intrinsically in favor of a

greedy user who runs many short-demand processes. This loophole is not a defect, rather

it is a property of the round-robin scheduler that implements time-sharing which, in turn,

guarantees responsiveness to the short processing demands associated with multiple

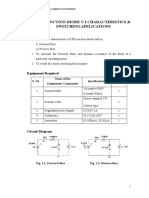

interactive users. Figure 1 shows this effect explicitly.

In Fig. 1, two users are distinguished as owning heavy and light processes in

terms of their respective processing demands. Below 10 processes, the heavy user sees a

better transaction throughput than the light user. Beyond 10 processes, the throughput of

the light user begins to dominate that of the heavy user, in spite of the fact that both users are

adding more processes into the system!

[Figure 1: plot titled "Time-Share Scheduling" showing the throughput curves Xlight and Xheavy; horizontal axis from 0 to 30, vertical axis from 0 to 0.7.]

Fig. 1. Generic UNIX intrinsically favors shorter service-demand processes.

Note that the UNIX scheduler schedules processes, not users. Under a time-share

scheduler there is no way for users to assert that they would like a more equitable

allocation of processing resources to defeat greedy consumption by other users. The new

generation of UNIX addresses this loophole by implementing a fair-share (FS) scheduler

[Kay & Lauder 1988] that provides equitable processing to all users regardless of the number of

processes each may be running.

WHAT IS FAIR?

To define "fairness", the FS-based scheduler has a control parameter that allows

the sysadm to pro rate literal "shares" across users and groups of users. The FS scheduler

then dynamically adjusts processor usage to match those share proportions. Similarly,

shares can also be allocated to control memory and disk I/O resource consumption. For

the remainder of this paper, we'll confine the discussion to processor consumption

because it is easiest to understand and has the most dynamic consequences.

In the context of computer resources, the word fair is meant to imply equity, not

equality in resource consumption. In other words, the FS scheduler is equitable but not

egalitarian. This distinction becomes clearer if we consider shares in a publicly owned

corporation. Executives usually hold more equity in a company than other employees.

Accordingly, the executive shareholders are entitled to a greater percentage of company

profits. And so it is under the FS scheduler. The more equity you hold in terms of the

number of allocated resource shares, the greater the percentage of server resources you

are entitled to at runtime.

The actual number of FS shares you own is statically allocated by the UNIX

sysadm. This share allocation scheme seems straightforward. However, under the FS

scheduler there are some significant differences from the way corporate shares work.

Although the number of shares allocated to you is fixed, the proportion of resources you

receive may vary dynamically because your entitlement is calculated as a function of the

total number of active shares, not the total pool of shares.

CAPPING IT ALL OFF

Suppose you are entitled to receive 10% of processing resources by virtue of

being allocated 10 out of a possible 100 system-wide processor shares. Further suppose

you are the only user on the system. Should you be entitled to access 100% of the

processing resources? Most UNIX system administrators believe it makes sense (and is

fair) to use all of the resources rather than have a 90% idle server.

But how can you access 100% of the processing resources if you only have 10

shares? You can if the FS scheduler only uses active shares to calculate your entitlement.

As the only user active on the system, owning 10 shares out of 10 active shares is

tantamount to a 100% processing entitlement.
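
The arithmetic behind this can be written down in a few lines. This is a minimal sketch of the active-share calculation described above, not any vendor's code; the function name `entitlements` and the user labels are hypothetical.

```python
def entitlements(shares, active):
    """Percentage entitlement of each active user, based on active shares only."""
    active_total = sum(shares[user] for user in active)
    return {user: 100.0 * shares[user] / active_total for user in active}

# Hypothetical allocation: 'you' hold 10 of the 100 shares in the pool.
shares = {"you": 10, "others": 90}

print(entitlements(shares, active=["you", "others"]))  # everyone active
print(entitlements(shares, active=["you"]))            # only you active
```

With everyone active the result is 10% and 90%; with only `you` active, the same 10 shares yield 100%.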

This natural inclination to make use of otherwise idle processing resources really

rests on two assumptions:

1. You are not being charged for the consumption of processing resources. If your

manager only has a budget to pay for a maximum of 10% processing on a shared

server, then it would be fiscally undesirable to exceed that limit.

2. You are unconcerned about service targets. It's a law of nature that users

complain about perceived changes in response time. If there are operational

periods where response time is significantly better than at other times, those

periods will define the future service target.

One logical consequence of such dynamic resource allocation is the inevitable loss of

control over resource consumption altogether, in which case the sysadm may as well

spare the effort of migrating to an FS scheduler in the first place. If the enterprise is one

where chargeback and service targets are important then your entitlement may need to be

clamped at 10%. This is achieved through an additional capping parameter. Not all FS

implementations offer this control parameter.
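
One way to picture the capping parameter is as an upper bound applied after the active-share calculation. The sketch below is an assumption about how such a control behaves, not a description of any product; `capped_entitlement` and its arguments are hypothetical names.

```python
def capped_entitlement(my_shares, active_shares, pool_shares, capped):
    """Entitlement (%) from active shares, optionally clamped to the pool fraction."""
    dynamic = 100.0 * my_shares / active_shares  # uncapped: grows as others go idle
    if not capped:
        return dynamic
    cap = 100.0 * my_shares / pool_shares        # static allocation, e.g. 10 of 100
    return min(dynamic, cap)

# Sole active user holding 10 of the 100 pool shares.
print(capped_entitlement(10, 10, 100, capped=False))
print(capped_entitlement(10, 10, 100, capped=True))
```

Without the cap, the sole active user is entitled to 100% of the processor; with it, the entitlement stays at 10% and the remaining capacity is left idle.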

The constraints of chargeback and service level objectives have not been a part of

traditional UNIX system administration, but are becoming more important with the

advent of application consolidation, and the administration of large-scale server

configurations (e.g., those used in major e-commerce websites). The capping option

can be very important for enterprise UNIX capacity planning.

CONSPICUOUS CONSUMPTION

Let's revisit the earlier example of a heavy user and light user, but this time

running their processor-bound jobs under an FS scheduler with the heavy user awarded a

90% processor allocation and the light user allocated only 10% of the processor. Contrary

to the TS scheduling effect, the light user should not be able to dominate the heavy user

by running more short-demand processes.

In fact, Figure 2 bears this out. The heavy user continues to maintain a higher

transaction throughput because they have a 90% allocation of CPU resources. Even as the

number of processes is dramatically increased, the light user cannot dominate the heavy

user because they are only receiving 10% of CPU resources.

[Figure 2: plot titled "Fair-share Thruputs" showing the throughput curves Xlight and Xheavy; horizontal axis from 0 to 30, vertical axis from 0 to 0.5.]

Fig. 2. The FS scheduler does not favor shorter CPU-demand processes.

The effect is easier to interpret if we look at comparative utilization. Under the FS

scheduler the utilization of each user climbs to match their respective cpu entitlements at

about 10 processes.

[Figure 3: plot titled "Comparative Utilization" showing the curves %UTSlight, %UTSheavy, %UFSlight, %UFSheavy and UFStotal; horizontal axis from 0 to 30, vertical axis from 0 to 120.]

Fig. 3. Comparative CPU utilization for two users with processes running under the time-share and fair-share schedulers.

Contrast this with a generic TS scheduler, and we see the heavy user reaches a

maximum utilization of about 80% and then falls off as cpu capacity is usurped by the

light user as it continues to consume cpu above 10%. Under either scheduling policy, the

dashed line shows that total processor utilization reaches 100%, as expected.

NICE GUYS FINISH LAST

The reader may be wondering why we haven't said anything about two well-known

aspects of UNIX process control: priorities and nice values. Couldn't the same results be

achieved under a TS scheduler together with a good choice of nice values? The answer is

no, and here's why.

Solaris SRM Fair Share Algorithm

User Level Scheduling

Every 4000 milliseconds, accumulate the CPU usage per user.

Parameters: Delta = 4 sec; decayUsage

    for (i = 0; i < USERS; i++) {
        usage[i] *= decayUsage;   /* forget part of the past usage */
        usage[i] += cost[i];      /* add the cost accrued since the last update */
        cost[i] = 0;
    }

Process Level Scheduling

Parameters: priDecay = real number in the range [0..1]

Every 1000 milliseconds, decay ALL process priority values.

    for (k = 0; k < PROCS; k++) {
        sharepri[k] *= priDecay;
    }

    priDecay = a * p_nice[k] + b;

Every 10 milliseconds (or tick), alter the active process priorities per user.

    for (i = 0; i < USERS; i++) {
        sharepri[i] += usage[i] * p_active[i];
    }

It's true that the TS scheduler has a sense of different priorities, but these

priorities relate only to processes, and not users. The TS scheduler employs an internal

priority scheme (there are several variants in generic UNIX; see Chapter 7 in McDougall

et al. 1999 for a brief overview) to adjust the position of processes waiting in the

run-queue so that it can meet the goal of creating the illusion for each user that they are

the only one accessing system resources.

In standard UNIX, using the nice command to renice processes (by assigning a

value in the range -19 to +19) merely acts as a bias for the TS scheduler when it

repositions processes in the run-queue. This scanning and adjustment of the run-queue

occurs about once every 10 milliseconds (or 1 processor tick). Once again, in standard

UNIX, these internal priority adjustments apply only to processes and how well they are

meeting the time-share goal. There is no association between processes and the users that

own them. Hence, standard UNIX is susceptible to the loophole mentioned earlier in this

paper.

The FS scheduler, in contrast, has two levels of scheduling: process and user. (See

table) The process level scheduling is essentially the same as that in standard UNIX and

nice values can be applied there as well. The user level scheduler is the major new

component and the relationship between these two pieces is presented in the form of

(highly simplified) pseudo-code in the following table (for Solaris SRM).

Notice that whereas the process-level scheduling still occurs 100 times a second,

the user-level scheduling adjustments (through the cpu.usage parameter) are made on

a time-scale 400 times longer, or once every 4 seconds. In addition, once a second, the

process-level priority adjustments that were made in the previous second begin to be

forgotten. This is necessary to avoid starving a process of further processor service

simply because it exceeded its share in some prior service period [Bettison et al. 1991].
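
The "forgetting" described here is a geometric decay. The sketch below applies the once-per-second `sharepri[k] *= priDecay` step from the pseudo-code to a single process; the starting value of 80 and the decay factor of 0.5 are made-up illustration values, not Solaris SRM defaults.

```python
def decay_priority(sharepri, pri_decay, seconds):
    """Apply sharepri *= priDecay once per second and record each value."""
    trace = []
    for _ in range(seconds):
        sharepri *= pri_decay
        trace.append(sharepri)
    return trace

# A process that accumulated a large priority penalty, then stopped running.
trace = decay_priority(sharepri=80.0, pri_decay=0.5, seconds=4)
print(trace)
```

With these values the penalty halves every second (40, 20, 10, 5), so a process that overran its share is not held back indefinitely.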

PERFORMANCE PITFALLS

So far, we've outlined how the FS scheduler provides a key mechanism for

capacity management of processing resources. What about performance? Having the

ability to allocate resources under a scheduler that can match this allocation to actual

resource consumption still tells us nothing about the impact on user response times.

[Figure 5: plot titled "Fair-share Response Times" showing the response time curves Rlight and Rheavy; horizontal axis from 0 to 30, vertical axis from 0 to 140.]

Fig. 5. Response times of heavy and light users under fair-share.

Moreover, none of the current FS implementations seriously discuss the

performance impact of various parameter settings, yet this knowledge is vital for the

management of many commercial applications. To underscore the importance of this

point, compare the difference in response times between the TS scheduler in Figure 4 and

the FS scheduler shown in Figure 5.

Under TS we see that the light user always has the better response time than the

heavy user, while under FS where the light user only has a 10% cpu entitlement, the

opposite is true. This is correct FS behavior but consider the impact on the light user if

they were migrated off a generic UNIX server to a consolidated server running the FS

scheduler. That user would only get 10% of the processor and their corresponding

response time would be degraded by a factor of six. That would be like suddenly

going from a 56 Kbps modem to a 9600 baud modem!

PERFORMANCE UNDER STRESS

Next, we consider the performance impact on multiple users from groups of users

with larger CPU entitlements. Suppose there are three groups: a database group (DBMS),

a web server group (Web), and a third group (Users) containing three individual users:

usrA, usrB, and usrC.

Entitlement   DBMS   Web   usrA   usrB   usrC
Fig. 6a        60%   10%     6%     5%    19%
Fig. 6b         0%   25%    15%    13%    47%

Fig. 6a and 6b. Entitlements for DBMS=60, Web=10, Users=30 shares.

The group allocation of the 100 pool shares is: DBMS = 60 shares, Web = 10

shares, Users = 30 shares. The corresponding entitlements are shown in Figure 6 with the

users A, B, and C awarded 6, 5, and 19 cpu.shares respectively.

If all the users and groups are active on the system and running processor-bound

workloads (a worst case scenario), the processor utilization exactly matches the

entitlements of Figure 6a.

[Figure 7: chart titled "Relative Response Time Changes" for Case1 through Case5, with series usrA, usrB, usrC, Web and DBMS; vertical axis from 0.00 to 25.00.]

Fig. 7. Relative impact on users A and B as load is added to the system.

If the DBMS group becomes inactive, then without capping, the entitlements

would be dynamically adjusted to those shown in Figure 6b. These new fractions are not

intuitively obvious even for CPU-bound workloads and could present a surprise to a

sysadm.
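
The adjusted fractions follow from renormalizing over the active shares only. The sketch below reproduces the calculation for the share allocation given above; flattening the Users group into its three members is a simplifying assumption, and the function name `entitlements` is hypothetical.

```python
# Shares from the text: DBMS = 60, Web = 10, Users = 30 (split 6, 5, 19).
group_shares = {"DBMS": 60, "Web": 10, "usrA": 6, "usrB": 5, "usrC": 19}

def entitlements(shares, inactive=()):
    """Entitlement (%) of each active holder, renormalized over active shares."""
    active = {name: s for name, s in shares.items() if name not in inactive}
    total = sum(active.values())
    return {name: 100.0 * s / total for name, s in active.items()}

all_active = entitlements(group_shares)
dbms_idle = entitlements(group_shares, inactive=("DBMS",))
print(all_active)
print(dbms_idle)
```

With DBMS idle, only 40 shares are active, so Web rises from 10% to 25% and usrA from 6% to 15%. The computed values for usrB and usrC are 12.5% and 47.5%, which Figure 6b shows as 13% and 47%.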

More surprising still is what happens to response times. The relative response

times cannot be calculated purely from the static share or entitlement allocations and

must be derived from a performance model. Figure 7 shows the effect on the average

response times seen by users A and B as other users and groups (see Fig. 6) become

active on the system. We see immediately that by the time all groups become active, the

response times of A and B have increased (degraded) by a factor of 10.

This is a worst-case scenario because it assumes that all work is CPU-bound. But

worst-case analysis is precisely what needs to be done in the initial stages of any capacity

planning exercise.

All of the capacity scenarios presented in this paper were created using PDQ

[Gunther 1998] and have been corroborated with TeamQuest Model [TeamQuest 1998].

In general, none of the current UNIX resource management products offer a way to look

ahead at the performance impact of various share allocations. More sophisticated tools

like PDQ and Model are needed.

MIGRATION TIPS and TOOLS

It should be clear by now that to bridge the gap between allocating capacity and

the respective potential performance consequences, some kind of performance analysis

capability is important for automated resource management. As the next table shows,

none of the top vendors provide such tools.

What size share pool should be used? The total pool of shares to be allocated on a

server is an arbitrary number. One might consider assigning a pool of 10,000 shares so as

to accurately express entitlements like 1/3 as a percentage with two decimal

places, i.e., 33.33%. It turns out that the FS scheduler is only accurate to within a few

percent. Since it's easier to work with simple percentages, a good rule of thumb is to set

the total pool of shares to be 100. In the above example, an entitlement of one third

would simply be equal to 33%.

O/S       Manager   Tools
AIX       WLM       SMIT, RSI
HP-UX     PRM       prmanalyze, Glance
Solaris   SRM       srmstat, liminfo, limadm, limreport

When contemplating the move to enterprise UNIX resource management, you

will need to reflect on how you are going to set the FS parameters. These vary by product

but some general guidelines can be elucidated. In particular:

- Start collecting appropriate consumption measurements on your current TS system. Collect process data to measure CPU and memory usage. This can be translated directly into a first estimate for respective entitlements.

- Allocate shares or entitlements to your loved ones (mainframe-speak), i.e., your most favored users, first.

- Allocate shares based on the maximum response times seen under TS. This approach should provide some wiggle room to meet service targets.

- Turn the capping option on (if available) when service targets are of primary importance.

- If service targets are not being met after going over to an FS scheduler, move the least favored users and resource groups onto another server or invoke processor domains (if available).

Use modeling tools such as PDQ [PDQ 1999] and TeamQuest Model to make

more quantitative determinations of how each combination of likely share allocations

pushes on the performance envelope of the server.
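
The first guideline in the list above, turning measured consumption into a first estimate of entitlements, amounts to a normalization. The sketch below assumes per-workload CPU seconds have already been collected; the workload names and figures are invented for illustration, and `shares_from_usage` is a hypothetical helper.

```python
def shares_from_usage(cpu_seconds, pool=100):
    """Scale measured CPU seconds per workload to integer shares out of `pool`."""
    total = sum(cpu_seconds.values())
    return {name: round(pool * secs / total) for name, secs in cpu_seconds.items()}

# Hypothetical measurements collected on the current TS system.
measured = {"dbms": 5400, "web": 900, "users": 2700}
first_estimate = shares_from_usage(measured)
print(first_estimate)
```

Because each value is rounded independently, the shares may not always sum exactly to the pool size. That is harmless here, since entitlements depend only on share proportions.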

COMMERCIAL COMPARISONS

The following table summarizes the key feature differences between the three

leading commercial UNIX resource managers.

MVS has been included at the end of the table for the sake of completeness. All of

the commercial UNIX resource managers are implemented as fair-share schedulers.

IBM/AIX, Compaq and HP developed their own implementations while SGI and Sun use

a modified variant of the SHARE II product [Bettison et al. 1991] licensed from Aurema

Pty Ltd. Neither SGI SHARE II nor Solaris SRM offers resource capping [Gunther 1999].

Although each product has tools that are intended to help administer resource

allocation, they tend to be somewhat ad hoc rather than tightly integrated with the

operating system. This should improve with later releases.

O/S       Manager    FS    Capping   Parameters
AIX       WLM        Yes   Yes       Targets/Limits
Compaq    Tru64      Yes   No        Shares
HP-UX     PRM        Yes   Yes       Entitlements
IRIX      SHARE II   Yes   No        Shares
Solaris   SRM        Yes   No        Shares
MVS       WLM-CM     Yes   Yes       Service classes
MVS       WLM-GM     No    N/A       Service goals

Both AIX [Brown 1999] and Solaris [McDougall et al. 1999] tend to present their

management guidelines using the terminology of shares. But shares are really the

underlying mechanism by which usage comparisons are made. It would be preferable to

discuss system administration in terms of entitlements (which are percentages) because it

is easier for a sysadm to match entitlement percentages against the measured utilization

percentages of the respective subsystems.

MVS Workload Manager in Compatibility Mode (WLM-CM) is the vestige of

the original OS/390 System Resource Manager (also called SRM) first implemented a

decade ago [Samson 1997] by IBM. It is this level of functionality that the enterprise

UNIX vendors have introduced. The newer and more complex MVS Workload Manager in Goal Mode

(WLM-GM) was only introduced by IBM a few years ago and it is likely to be several

more years before automated UNIX resource managers reach that level of maturity.

SUMMARY

Commercial UNIX is starting to emulate the kind of resource management

facilities that have become commonplace on mainframes. Some of the latest UNIX

implementations to offer capacity management on enterprise servers include: AIX

Workload Manager (WLM), HP-UX Process Resource Manager (PRM), and Solaris

Resource Manager (SRM). Nevertheless, the UNIX technology is still a decade behind

that found on current mainframes; a key distinction being the ability to define specific

service goals for mainframe workloads.

The latter is accomplished by having the scheduling mechanism monitor the wait-time

for each service group in the run-queue, whereas the FS scheduler only monitors

the busy-time (CPU usage) consumed when each user or group is in service. This

limitation makes it difficult to allocate resources in such a way as to meet service targets, an

important requirement for enterprise UNIX running commercial applications.

Until service goal specifications are engineered into UNIX, the sysadm must pay

careful attention to the allocation of shares and entitlements. Better tools of the type

discussed in this paper would go a long way toward making the sysadm's job easier.

REFERENCES

Bettison, A., et al., 1991. "SHARE II: A User Administration and Resource Control System for UNIX," Proceedings of LISA '91.

Brown, D. H., and Assoc., 1999. "AIX 4.3.3 Workload Manager Enriches SMP Value,"

Gunther, N. J., 1998. The Practical Performance Analyst, McGraw-Hill.

Gunther, N. J., 1999. "Capacity Planning for Solaris SRM: All I Ever Wanted was My Unfair Advantage (And Why You Can't Get It)," Proc. CMG '99, Reno, Nevada.

Hewlett-Packard, 1999. "Application Consolidation with HP-UX Process Resource Manager," HP Performance and Availability Solutions Report.

Kay, J., and Lauder, P., 1988. "A Fair Share Scheduler," Comm. ACM, Vol. 31.

McDougall, R., et al., 1999. Sun Blueprint: Resource Management, Prentice-Hall.

PDQ, 1999. http://members.aol.com/Perfskool/pdq.tar.Z

Ritchie, D. M., and Thompson, K., 1978. "The UNIX Timesharing System," AT&T Bell Labs. Tech. Journal, Vol. 57.

Samson, S. L., 1997. MVS Performance Management, McGraw-Hill.

TeamQuest, 1998. TeamQuest Model End User Reference Manual.

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Periodicity in 3D Lab Report FormDocument2 pagesPeriodicity in 3D Lab Report FormZIX326100% (1)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Ziad Masri - Reality Unveiled The Hidden KeyDocument172 pagesZiad Masri - Reality Unveiled The Hidden KeyAivlys93% (15)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)
