Multi Linear Regression Handout 2x1

31-03-2016

Automation Lab

IIT Bombay

CL 202: Introduction to Data Analysis

Linear and Nonlinear Regression

Sachin C. Patawardhan and Mani Bhushan

Department of Chemical Engineering

I.I.T. Bombay

31-Mar-16

Regression


Outline

Mathematical Models in Chemical Engineering

Linear Regression Problem

Ordinary and Weighted Least Squares formulations through algebraic viewpoint and geometric interpretations

Ordinary and Weighted Least Squares formulations through probabilistic viewpoint

Ordinary Least Squares and Minimum Variance Estimation

Ordinary Least Squares and Maximum Likelihood Estimation

Confidence intervals for parameter estimates and hypothesis testing

Nonlinear regression problem: Nonlinear in parameter models and maximum likelihood parameter estimation

Examples of linear and nonlinear regression

Appendix: Ordinary Least Squares and Cramer-Rao Bound

Mathematical Models

Mathematical model: mathematical description of a real physical process

Used in all fields: biology, physiology, engineering, chemistry, biochemistry, physics, and economics

Deterministic models: each variable and parameter can be assigned a definite fixed number or a series of fixed numbers, for any given set of conditions.

Stochastic models: variables or parameters used to describe the input-output relationships and the structure of the elements (and the constraints) are not precisely known.

Elements of a Model

Independent inputs ($x$)

Output ($y$) (dependent variable)

Parameters ($\theta$)

Transformation operator ($T$): algebraic or differential

$$(x_1, \ldots, x_n) \;\longrightarrow\; \text{Mathematical Model } T(x_1, \ldots, x_n, \theta_1, \ldots, \theta_p) \;\longrightarrow\; y$$

Mathematical Models

Models are used for

Behavior prediction/analysis: understand the influence of the independent inputs to a system on the observed system output

System/process/material design: catalyst design, membrane design

Equipment design: sizing of processing equipment

Flow-sheeting: deciding flow of material and energy in a chemical plant

System/process operation: monitoring and control, safety and hazard analysis, abnormal behavior diagnosis

Models in Chemical Engineering

Models popularly used in chemical engineering

Transport phenomena based models: continuum equations describing the conservation of mass, momentum, and energy

Population balance models: residence time distributions (RTD) and other age distributions

Empirical models based on data fitting. Typical examples: polynomials used to fit empirical data, thermodynamic correlations, correlations based on dimensionless groups used in heat, mass and momentum transfer, transfer function models used in process control

Empirical Modeling

The exact expression relating the dependent and the independent variables may not be known.

The Weierstrass theorem states that any continuous function on a closed, bounded interval can be approximated by a polynomial function to an arbitrary degree of accuracy.

Invoking the Weierstrass theorem, the relationship between the dependent and independent variables is approximated as a polynomial.

The order of the polynomial used typically depends on the range of values over which the approximation is constructed.

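Empirical Modeling Examples

Temperature dependence of resistance: a separate polynomial in $T$ is fitted on each temperature range, e.g.

$$R = a + bT \quad \text{for } T \in [T_1, T_2]$$

with a different set of coefficients for $T \in [T_3, T_4]$.

Temperature dependence of $C_p$:

$$C_p = a + bT \quad \text{for } T \in [T_1, T_2]$$

and a polynomial that also includes a $T^2$ term for $T \in [T_3, T_4]$.

Boiling point of hydrocarbons in a homologous series as a function of the number of carbon atoms $n$:

$$T = a + bn + cn^2$$

or a cubic polynomial in $n$ (terms in $n$, $n^2$ and $n^3$).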

Empirical Modeling Examples

Temperature and pressure dependence of reaction yield: a model that is linear in $T$ and $P$ for $T \in [T_1, T_2]$, $P \in [P_1, P_2]$, and

$$Y = a + bT + cP + dT^2 + eP^2 + fPT \quad \text{for } T \in [T_3, T_4],\; P \in [P_3, P_4]$$

Dimensionless group based models in heat transfer:

$$Nu = \alpha \, Re^{\beta} \, Pr^{\gamma}$$

Reaction rate equations:

$$-r_A = k_0 \, e^{-E/RT} \, C_A^{\,n}$$

Simplified VLE model:

$$y = \frac{\alpha x}{1 + (\alpha - 1)\,x}$$

$x$: liquid mole fraction; $y$: vapor mole fraction; $\alpha$: relative volatility

Linear in Parameter Models

To begin with, we consider models that can be represented in the following abstract form. Defining $x = [x_1 \; x_2 \; \ldots \; x_m]^T$,

$$y = \theta_1 f_1(x) + \theta_2 f_2(x) + \ldots + \theta_p f_p(x)$$

Defining new variables $z_i = f_i(x)$, we can write

$$y = \theta_1 z_1 + \theta_2 z_2 + \ldots + \theta_p z_p$$

Sources of error

Measurement errors in the dependent variable ($y$)

Modeling or approximation errors

Let $v$ denote a combined error arising from errors in modeling and errors in the measurement of $y$, then

$$y = \theta_1 z_1 + \theta_2 z_2 + \ldots + \theta_p z_p + v$$

Linear Regression Problem

Defining $z = [z_1 \; z_2 \; \ldots \; z_p]^T$ and $\theta = [\theta_1 \; \theta_2 \; \ldots \; \theta_p]^T$,

$$y = \theta_1 z_1 + \theta_2 z_2 + \ldots + \theta_p z_p + v = z^T \theta + v$$

For the class of models considered so far, the dependent variable is a linear function of the model parameters.

Linear function definition: $g(\alpha x_1 + \beta x_2) = \alpha\, g(x_1) + \beta\, g(x_2)$

Given data sets $S_y = \{y_1, y_2, \ldots, y_n\}$ and $S_Z = \{z^{(1)}, z^{(2)}, \ldots, z^{(n)}\}$ generated from $n$ independent experiments, and model equations

$$y_i = (z^{(i)})^T \theta + v_i, \quad i = 1, 2, \ldots, n$$

estimate $\theta$ such that some scalar objective $\phi(v_1, v_2, \ldots, v_n)$ is minimized.

Linear Regression Problem

Choice of Objective Function

2-norm:

$$\phi(v_1, \ldots, v_n) = \sum_{i=1}^{n} v_i^2 \quad \text{or} \quad \phi(v_1, \ldots, v_n) = \sum_{i=1}^{n} w_i v_i^2, \quad \text{where } w_i > 0 \text{ for all } i$$

1-norm:

$$\phi(v_1, \ldots, v_n) = \sum_{i=1}^{n} |v_i| \quad \text{or} \quad \phi(v_1, \ldots, v_n) = \sum_{i=1}^{n} w_i |v_i|$$

$\infty$-norm:

$$\phi(v_1, \ldots, v_n) = \max_i |v_i|$$

In practice, the 2-norm based formulation is preferred over the other two choices because of

(a) Amenability to analytical treatment

(b) Ease of geometric interpretations

(c) Ease of interpretation from the viewpoint of probability and statistics

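Model Parameter Estimation

Consider estimation of parameters $\theta = (a, b)$ of a simple linear model of the form

$$y = a + bx + v = a z_1 + b z_2 + v, \qquad z = [f_1(x) \;\; f_2(x)]^T = [1 \;\; x]^T$$

from $S_y = \{y_1, y_2, \ldots, y_n\}$ and $S_Z = \{z^{(1)}, z^{(2)}, \ldots, z^{(n)}\}$. The model equations

$$y_i = a + b x_i + v_i, \quad i = 1, 2, \ldots, n$$

can be stacked as

$$\begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_i \\ \vdots \\ y_n \end{bmatrix} = \begin{bmatrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_i \\ \vdots & \vdots \\ 1 & x_n \end{bmatrix} \begin{bmatrix} a \\ b \end{bmatrix} + \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_i \\ \vdots \\ v_n \end{bmatrix}$$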

Model Parameter Estimation

Number of unknown variables = 2 (parameters) + n (errors)

Number of equations = n

Number of equations < number of unknowns

The system of linear equations has an infinite number of solutions.

To estimate the model parameters, we resort to optimization.

The necessary conditions for optimality provide 2 additional constraints so that the combined system of equations has a unique solution.

Model Parameter Estimation

Defining a scalar function $J(v_1, v_2, \ldots, v_n)$ where

$$v_i = y_i - a z_{1,i} - b z_{2,i} \quad \text{for } i = 1, 2, \ldots, n$$

the necessary conditions for optimality are

$$\frac{\partial J}{\partial a} = 0 \quad \text{and} \quad \frac{\partial J}{\partial b} = 0$$

Most commonly used scalar measures

Ordinary least squares: $J = \sum_{i=1}^{n} (v_i)^2$

Weighted least squares: $J = \sum_{i=1}^{n} w_i (v_i)^2$ ($w_i > 0$ for all $i$)

Quadratic objective function

(a) Leads to an analytical solution

(b) Has a nice geometric interpretation

(c) Facilitates interpretation and analysis through statistics

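Ordinary Least Squares

Using vector-matrix notation

$$Y = A\theta + V$$

$$J = V^T V = \sum_{i=1}^{n} v_i^2 = (Y - A\theta)^T (Y - A\theta)$$

Necessary condition for optimality becomes

$$\frac{\partial J}{\partial \theta} = \frac{\partial (V^T V)}{\partial \theta} = -2 A^T (Y - A\theta) = 0 \quad \Rightarrow \quad \hat{\theta}_{OLS} = (A^T A)^{-1} A^T Y$$

Rules of differentiation of a scalar function w.r.t. a vector

$$\frac{\partial (x^T B y)}{\partial x} = By, \qquad \frac{\partial (x^T B y)}{\partial y} = B^T x, \qquad \frac{\partial (x^T B x)}{\partial x} = 2Bx \;\text{ when } B \text{ is symmetric}$$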
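The closed-form OLS solution can be sketched in a few lines of NumPy. This is a minimal illustration, not part of the original slides: the data are hypothetical and noise-free, the helper name `ols_fit` is an assumption, and `np.linalg.lstsq` is used only as a cross-check.

```python
import numpy as np

def ols_fit(A, Y):
    """Solve the normal equations (A^T A) theta = A^T Y for theta."""
    return np.linalg.solve(A.T @ A, A.T @ Y)

# Hypothetical noise-free data lying exactly on the line y = 2 + 3x
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
Y = 2.0 + 3.0 * x
A = np.column_stack([np.ones_like(x), x])   # row i is z^(i) = [1, x_i]

theta_ols = ols_fit(A, Y)                           # normal-equation solution
theta_lstsq = np.linalg.lstsq(A, Y, rcond=None)[0]  # library least-squares solution
print(theta_ols, theta_lstsq)
```

Solving the normal equations with `np.linalg.solve` avoids forming the explicit inverse; for ill-conditioned `A`, a QR or SVD based routine such as `lstsq` is usually the safer choice.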

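Geometric Interpretations

$$\begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix} = \begin{bmatrix} 1 & x_1 \\ 1 & x_2 \\ \vdots & \vdots \\ 1 & x_n \end{bmatrix} \begin{bmatrix} a \\ b \end{bmatrix} + \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix} = a \begin{bmatrix} 1 \\ 1 \\ \vdots \\ 1 \end{bmatrix} + b \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{bmatrix} + \begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}$$

For any estimate $\hat{\theta}$, the vector $\hat{Y} = A\hat{\theta}$ lies in the column space of matrix $A$.

Assumption: true behavior $Y = A\theta^* + V$, where $\theta^*$ denotes the true parameters.

Defining $\hat{Y} = A\hat{\theta}$, we have $Y = \hat{Y} + \hat{V}$.

Estimated model residuals are

$$\hat{V} = Y - A\hat{\theta} = A(\theta^* - \hat{\theta}) + V$$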

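Geometric Interpretations

Necessary condition for optimality implies

$$A^T (Y - A\hat{\theta}) = A^T \hat{V} = 0 \quad \Rightarrow \quad \hat{\theta}^T A^T \hat{V} = \hat{Y}^T \hat{V} = 0$$

i.e. vector $\hat{V}$ is perpendicular to the column space of $A$.

$$\hat{Y} = A\hat{\theta} = A (A^T A)^{-1} A^T Y = HY$$

$\hat{Y}$: a projection of vector $Y$ on the column space of $A$.

$H = A (A^T A)^{-1} A^T$ is known as the hat (or projection) matrix.

Note: $H$ is an idempotent matrix, i.e. $H^2 = H$.

$$\hat{V} = Y - \hat{Y} = \left[ I - A (A^T A)^{-1} A^T \right] Y = (I - H)\, Y$$

Vector $Y$ is split into two orthogonal components

$\hat{Y} = HY$: lying in the column space of $A$

$\hat{V} = (I - H)Y$: orthogonal to the column space of $A$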
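The projection properties can be checked numerically. The following is a small sketch on hypothetical measurements (the numbers in `x` and `Y` are made up for illustration): it builds the hat matrix and computes the two orthogonal components.

```python
import numpy as np

# Hypothetical measurements for a straight-line model y = a + b x + v
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
Y = np.array([1.1, 1.9, 3.2, 3.8, 5.1])
A = np.column_stack([np.ones_like(x), x])

# Hat (projection) matrix H = A (A^T A)^{-1} A^T
H = A @ np.linalg.solve(A.T @ A, A.T)

Y_hat = H @ Y        # component lying in the column space of A
V_hat = Y - Y_hat    # residual, (I - H) Y

print("H idempotent:", np.allclose(H @ H, H))
print("A^T V_hat   :", A.T @ V_hat)
```

Up to rounding, `A.T @ V_hat` is the zero vector, which is the necessary condition for optimality restated as an orthogonality condition.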

Geometric Interpretations

[Figure: geometric interpretation of the least squares projection]

Ethanol-Water Example

Experimental data: density and weight percent of ethanol in ethanol-water mixture

[Table and figure: ethanol-water density data and the fitted model]

Ref.: Ogunnaike, B. A., Random Phenomenon, CRC Press, London, 2010

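Quadratic Polynomial Model

Consider the model for temperature and pressure dependence of reaction yield

$$Y = a + bT + cP + dT^2 + eP^2 + fPT$$

Data available: $(Y_1, T_1, P_1), (Y_2, T_2, P_2), \ldots, (Y_n, T_n, P_n)$

Corresponding model equations

$$Y_i = a + bT_i + cP_i + dT_i^2 + eP_i^2 + fP_iT_i + v_i \quad \text{for } i = 1, 2, \ldots, n$$

Defining

$$z^{(i)} = [1 \;\; T_i \;\; P_i \;\; T_i^2 \;\; P_i^2 \;\; P_iT_i]^T, \qquad \theta = [a \;\; b \;\; c \;\; d \;\; e \;\; f]^T$$

$$Y_i = (z^{(i)})^T \theta + v_i \quad \text{for } i = 1, 2, \ldots, n$$

$$\underbrace{\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix}}_{Y \;(n \times 1)} = \underbrace{\begin{bmatrix} 1 & T_1 & \cdots & P_1T_1 \\ 1 & T_2 & \cdots & P_2T_2 \\ \vdots & \vdots & & \vdots \\ 1 & T_n & \cdots & P_nT_n \end{bmatrix}}_{A \;(n \times 6)} \underbrace{\begin{bmatrix} a \\ b \\ \vdots \\ f \end{bmatrix}}_{\theta \;(6 \times 1)} + \underbrace{\begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}}_{V \;(n \times 1)}$$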
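A short sketch of how the $n \times 6$ matrix $A$ for the quadratic yield model might be assembled. The function name `quadratic_design_matrix`, the temperature and pressure ranges, and the coefficient values are all hypothetical, chosen only to exercise the construction on noise-free synthetic data.

```python
import numpy as np

def quadratic_design_matrix(T, P):
    """Rows are z^(i) = [1, T_i, P_i, T_i^2, P_i^2, P_i*T_i]."""
    T = np.asarray(T, dtype=float)
    P = np.asarray(P, dtype=float)
    return np.column_stack([np.ones_like(T), T, P, T**2, P**2, P * T])

# Hypothetical operating conditions and coefficients (a, b, c, d, e, f)
rng = np.random.default_rng(0)
T = rng.uniform(80.0, 100.0, size=20)
P = rng.uniform(1.0, 2.0, size=20)
theta_true = np.array([5.0, 0.5, -2.0, 0.01, 0.3, -0.05])

A = quadratic_design_matrix(T, P)
Y = A @ theta_true                                  # noise-free synthetic yields
theta_hat = np.linalg.lstsq(A, Y, rcond=None)[0]    # least-squares fit
print(A.shape, theta_hat)
```

The model is quadratic in $T$ and $P$ but linear in the parameters, so the same least-squares machinery applies once the columns of $A$ are built.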

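Generalization of OLS

Thus, consider estimation of the parameter vector $\theta$ of a general multi-linear model of the form

$$y = \theta_1 z_1 + \theta_2 z_2 + \ldots + \theta_p z_p + v$$

from $S_y = \{y_1, y_2, \ldots, y_n\}$ and $S_Z = \{z^{(1)}, z^{(2)}, \ldots, z^{(n)}\}$

$$\underbrace{\begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}}_{Y \;(n \times 1)} = \underbrace{\begin{bmatrix} z_1^{(1)} & z_2^{(1)} & \cdots & z_p^{(1)} \\ z_1^{(2)} & z_2^{(2)} & \cdots & z_p^{(2)} \\ \vdots & \vdots & & \vdots \\ z_1^{(n)} & z_2^{(n)} & \cdots & z_p^{(n)} \end{bmatrix}}_{A \;(n \times p)} \underbrace{\begin{bmatrix} \theta_1 \\ \theta_2 \\ \vdots \\ \theta_p \end{bmatrix}}_{\theta \;(p \times 1)} + \underbrace{\begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}}_{V \;(n \times 1)}$$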

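Weighted Least Squares

Defining the weighting matrix

$$W = \mathrm{diag}[w_1 \;\; w_2 \;\; \ldots \;\; w_n], \quad w_i > 0 \text{ for all } i$$

the multi-linear regression problem can be formulated as

$$\min_{\theta} \; J = \sum_{i=1}^{n} w_i v_i^2 = V^T W V \quad \text{subject to } Y = A\theta + V$$

Using the necessary condition for optimality

$$\frac{\partial J}{\partial \theta} = \frac{\partial (V^T W V)}{\partial \theta} = -2 A^T W (Y - A\theta) = 0 \quad \Rightarrow \quad \hat{\theta}_{WLS} = (A^T W A)^{-1} A^T W Y$$

Selecting $W = I_{n \times n}$ reduces WLS to OLS.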
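The WLS formula can be sketched as follows. The data, the weights and the helper name `wls_fit` are illustrative assumptions; the last observation is deliberately given a small weight to mimic a measurement that is trusted less.

```python
import numpy as np

def wls_fit(A, Y, w):
    """Weighted least squares: solve (A^T W A) theta = A^T W Y with W = diag(w)."""
    W = np.diag(w)
    return np.linalg.solve(A.T @ W @ A, A.T @ W @ Y)

# Hypothetical data: the last point is a poor measurement
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
Y = np.array([1.0, 3.1, 4.9, 7.0, 12.0])
A = np.column_stack([np.ones_like(x), x])

w_equal = np.ones_like(x)                        # W = I reduces WLS to OLS
w_down = np.array([1.0, 1.0, 1.0, 1.0, 0.01])    # down-weight the last point

theta_equal = wls_fit(A, Y, w_equal)
theta_down = wls_fit(A, Y, w_down)
theta_ols = np.linalg.lstsq(A, Y, rcond=None)[0]
print(theta_equal, theta_down)
```

With equal weights the result coincides with OLS; with the last point down-weighted the fitted slope moves back toward the trend of the first four points.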

Example: Multi-linear Regression

Laboratory experimental data on yield obtained from a catalytic process at various temperatures and pressures

[Table: reactor yield data]

Estimated Model

Fitted multi-linear model

$$\hat{Y} = 75.9 + 0.0757\,T + 3.21\,P$$

Ref.: Ogunnaike, B. A., Random Phenomenon, CRC Press, London, 2010

Example: Multi-linear Regression

Boiling points of a series of hydrocarbons

Ref.: Ogunnaike, B. A., Random Phenomenon, CRC Press, London, 2010

Candidate Models

Linear model: $T = a + bn$, fitted as $T = 39\,n - 170$

Quadratic model: $T = a + bn + cn^2$, fitted as $T = -3\,n^2 + 67\,n - 220$

[Figure: boiling point (°C) versus n, the number of carbon atoms, showing the data, the linear model and the quadratic model]

Unaddressed Issues

Model parameter estimates change if

the data set size $n$, matrix $A$ and vector $Y$ change

matrix $A$ is the same but only $Y$ changes (due to measurement errors)

$n$ is the same but a different set of input conditions, i.e. a different $A$ matrix, is chosen

How do we compare estimates generated through two independent sets of experiments?

Can we come up with confidence intervals for the true parameters?

Need for Statistical Approach

If we have multiple candidate models, how does one systematically select the most suitable model?

If the identified model is used for prediction, how do we quantify uncertainties in the model predictions?

A linear algebra/optimization based treatment of the model parameter estimation problem does not help in answering these questions systematically.

Remedy: formulate and solve the parameter estimation problem using the framework of probability and statistics.

Notations

Consider $n$ independent random variables $Y_1, Y_2, \ldots, Y_n$.

The data set is collected from $n$ independent experiments, one for each $Y_i$, i.e. it is a set of realizations of $Y_1, Y_2, \ldots, Y_n$: $S_y = \{y_1, y_2, \ldots, y_n\}$

Model for RV $Y_i$ relating the random error $V_i$: $Y_i = (z^{(i)})^T \theta^* + V_i$

Model relating realizations of RVs $Y_i$ and $V_i$: $y_i = (z^{(i)})^T \theta^* + v_i$

$\theta^*$: true parameter vector

Model for RV $Y_i$ relating the model residuals $\hat{V}_i$ and the parameter estimates $\hat{\boldsymbol{\theta}}$ (an RV): $Y_i = (z^{(i)})^T \hat{\boldsymbol{\theta}} + \hat{V}_i$

Model relating realizations of RVs $Y_i$, $\hat{\boldsymbol{\theta}}$ and $\hat{V}_i$: $y_i = (z^{(i)})^T \hat{\theta} + \hat{v}_i$

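Context Sensitive Notations

$$\underbrace{\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix}}_{\mathbf{Y} \;(n \times 1)} = \underbrace{\begin{bmatrix} z_1^{(1)} & z_2^{(1)} & \cdots & z_p^{(1)} \\ z_1^{(2)} & z_2^{(2)} & \cdots & z_p^{(2)} \\ \vdots & \vdots & & \vdots \\ z_1^{(n)} & z_2^{(n)} & \cdots & z_p^{(n)} \end{bmatrix}}_{A \;(n \times p)} \underbrace{\theta}_{(p \times 1)} + \underbrace{\begin{bmatrix} V_1 \\ V_2 \\ \vdots \\ V_n \end{bmatrix}}_{\mathbf{V} \;(n \times 1)}$$

Note: bold $\mathbf{Y}$ and bold $\mathbf{V}$ represent vectors of random variables.

$$\underbrace{\begin{bmatrix} y_1 \\ y_2 \\ \vdots \\ y_n \end{bmatrix}}_{Y \;(n \times 1)} = \underbrace{\begin{bmatrix} z_1^{(1)} & z_2^{(1)} & \cdots & z_p^{(1)} \\ z_1^{(2)} & z_2^{(2)} & \cdots & z_p^{(2)} \\ \vdots & \vdots & & \vdots \\ z_1^{(n)} & z_2^{(n)} & \cdots & z_p^{(n)} \end{bmatrix}}_{A \;(n \times p)} \underbrace{\theta}_{(p \times 1)} + \underbrace{\begin{bmatrix} v_1 \\ v_2 \\ \vdots \\ v_n \end{bmatrix}}_{V \;(n \times 1)}$$

Note: $Y$ and $V$ represent vectors of "realizations".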

Notations

Note

$\theta^*$: true parameter vector (fixed, NOT an RV)

$\hat{\boldsymbol{\theta}}$ (bold): parameter estimates (random variable vector)

$\hat{\theta}$ (ordinary): parameter estimates (a realization of $\hat{\boldsymbol{\theta}}$)

A major simplifying assumption: the set $S_Z = \{z^{(1)}, z^{(2)}, \ldots, z^{(n)}\}$ consists of perfectly known vectors, i.e. there are no errors in the measurements or knowledge of $z^{(i)}$, $i = 1, 2, \ldots, n$.

Regression Problem Formulation

Let us assume that the modeling error

$$V = Y - (\theta_1^* z_1 + \ldots + \theta_p^* z_p)$$

is a zero mean RV with variance $\sigma^2$, i.e. $E[V] = 0$ and $\mathrm{Var}[V] = \sigma^2$.

Note: At this stage NO assumption has been made about the form of $F_V(v)$.

It is assumed that $z$ is a deterministic vector and known exactly. Thus,

$$E[Y] = \theta_1^* z_1 + \ldots + \theta_p^* z_p$$

where $\theta^* = [\theta_1^*, \ldots, \theta_p^*]^T$ represents the true model parameters.

Regression Problem Formulation

Now consider a dataset

$$\{ (y_i, z_1^{(i)}, \ldots, z_p^{(i)}) : i = 1, 2, \ldots, n \}$$

generated from $n$ independent experiments and the corresponding model equations

$$Y_i = \theta_1^* z_1^{(i)} + \ldots + \theta_p^* z_p^{(i)} + V_i$$

It is further assumed that the errors

$$V_i = Y_i - (\theta_1^* z_1^{(i)} + \ldots + \theta_p^* z_p^{(i)}) \quad \text{for } i = 1, 2, \ldots, n$$

are independent and identically distributed.

Note: the RVs $Y_i = \theta_1^* z_1^{(i)} + \ldots + \theta_p^* z_p^{(i)} + V_i$ for $i = 1, 2, \ldots, n$ are independent but are NOT identically distributed, because their means differ from one experiment to the next.

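Regression Problem Formulation

Using the vector notation and collecting all model equations, we have

$$\underbrace{\begin{bmatrix} Y_1 \\ Y_2 \\ \vdots \\ Y_n \end{bmatrix}}_{\mathbf{Y} \;(n \times 1)} = \underbrace{\begin{bmatrix} z_1^{(1)} & \cdots & z_p^{(1)} \\ z_1^{(2)} & \cdots & z_p^{(2)} \\ \vdots & & \vdots \\ z_1^{(n)} & \cdots & z_p^{(n)} \end{bmatrix}}_{A \;(n \times p)} \underbrace{\begin{bmatrix} \theta_1^* \\ \vdots \\ \theta_p^* \end{bmatrix}}_{\theta^* \;(p \times 1)} + \underbrace{\begin{bmatrix} V_1 \\ V_2 \\ \vdots \\ V_n \end{bmatrix}}_{\mathbf{V} \;(n \times 1)}$$

or,

$$\mathbf{Y} = A\theta^* + \mathbf{V}$$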

Regression Problem Formulation

Since $E[V_i] = 0$, it implies that $E[\mathbf{V}] = 0_{n \times 1}$ and

$$E[\mathbf{Y}] = E[A\theta^* + \mathbf{V}] = A\theta^*$$

Let $R = \mathrm{Cov}(\mathbf{V}) = E[\mathbf{V}\mathbf{V}^T]$.

Since $V_i$, $i = 1, 2, \ldots, n$, are assumed to be IID and $\mathrm{var}(V_i) = \sigma^2$, it follows that

$$R = E[\mathbf{V}\mathbf{V}^T] = \sigma^2 I_n$$

Problem: estimate the "unknown constant $\theta^*$" from measurements $Y = A\theta^* + V$.

Ordinary Least Squares

The ordinary least squares (OLS) estimate of $\theta$ is obtained by minimizing the objective function

$$J = V^T V = (Y - A\theta)^T (Y - A\theta)$$

with respect to $\theta$.

Note: $V^T V = \sum_{i=1}^{n} v_i^2 = nS^2$ (i.e. $n \times$ sample variance)

Thus, OLS can be viewed as an estimator that minimizes the sample variance of the modeling errors

$$\hat{\theta}_{OLS} = (A^T A)^{-1} A^T Y$$

Is $\hat{\theta}_{OLS}$ an unbiased estimate of $\theta^*$?

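Ordinary Least Squares

Since $Y$ is a realization of RV $\mathbf{Y}$, it follows that $\hat{\theta}_{OLS}$ can be viewed as a realization of RV $\hat{\boldsymbol{\theta}}_{OLS}$, i.e.,

$$\hat{\boldsymbol{\theta}}_{OLS} = (A^T A)^{-1} A^T \mathbf{Y} = (A^T A)^{-1} A^T (A\theta^* + \mathbf{V})$$

Taking expectations on both sides

$$E[\hat{\boldsymbol{\theta}}_{OLS}] = (A^T A)^{-1} A^T E[\mathbf{Y}] = (A^T A)^{-1} A^T A\, \theta^* = \theta^*$$

Thus $\hat{\boldsymbol{\theta}}_{OLS}$ is an unbiased estimate of $\theta^*$.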

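Ordinary Least Squares

Defining matrix $L = (A^T A)^{-1} A^T$,

$$\hat{\boldsymbol{\theta}}_{OLS} = L\mathbf{Y} = \theta^* + L\mathbf{V}$$

$$\mathrm{Cov}(\hat{\boldsymbol{\theta}}_{OLS}) = E\left[ (\hat{\boldsymbol{\theta}}_{OLS} - \theta^*)(\hat{\boldsymbol{\theta}}_{OLS} - \theta^*)^T \right] = L\, E[\mathbf{V}\mathbf{V}^T]\, L^T = LRL^T = \sigma^2 LL^T = \sigma^2 (A^T A)^{-1}$$

Difficulty: $\sigma^2$ is not known in practice.

Remedy: estimate $\sigma^2$ from samples

$$\hat{\sigma}^2 = \frac{1}{n - p}\, \hat{V}^T \hat{V}, \quad \text{where } \hat{V} = Y - A\hat{\theta}_{OLS}$$

Estimate of $\mathrm{Cov}(\hat{\boldsymbol{\theta}}_{OLS})$: $\hat{\sigma}^2 (A^T A)^{-1}$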
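Unbiasedness and the covariance expression $\sigma^2 (A^T A)^{-1}$ can be illustrated with a small Monte Carlo experiment. This is a sketch under stated assumptions: a hypothetical straight-line model, Gaussian errors chosen for convenience (the two results themselves only need zero-mean, uncorrelated, equal-variance errors), and a fixed random seed.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical setup: y = 1 + 2x with i.i.d. zero-mean errors, sigma = 0.5
x = np.linspace(0.0, 10.0, 25)
A = np.column_stack([np.ones_like(x), x])
theta_true = np.array([1.0, 2.0])
sigma = 0.5

L = np.linalg.solve(A.T @ A, A.T)          # L = (A^T A)^{-1} A^T, shape (p, n)

n_trials = 20000
V = rng.normal(0.0, sigma, size=(n_trials, x.size))   # one error vector per row
Y = A @ theta_true + V                                 # one simulated data set per row
theta_hats = Y @ L.T                                   # one OLS estimate per row

mean_estimate = theta_hats.mean(axis=0)                # should be close to theta_true
cov_empirical = np.cov(theta_hats, rowvar=False)       # sample covariance of estimates
cov_theory = sigma**2 * np.linalg.inv(A.T @ A)         # sigma^2 (A^T A)^{-1}

print(mean_estimate)
print(cov_empirical)
print(cov_theory)
```

The empirical mean of the estimates approaches $\theta^*$ and their sample covariance approaches $\sigma^2 (A^T A)^{-1}$ as the number of simulated data sets grows.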

Minimum Variance Estimator

Given measurements $Y \in R^n$ and a model

$$Y = A\theta^* + V$$

where $A$ is a known $n \times p$ matrix and $V \in R^n$ is a vector of random variables such that

$$E[V] = 0_{n \times 1} \quad \text{and} \quad \mathrm{Cov}[V] = R$$

Note: Here $R$ is a symmetric and positive definite matrix.

Suppose we want to find an unbiased estimate, $\hat{\theta}$, of the unknown $\theta^* \in R^p$, such that

$$\mathrm{Cov}(\hat{\theta}) = E\left[ (\hat{\theta} - \theta^*)(\hat{\theta} - \theta^*)^T \right]$$

is as small as possible.

Minimum Variance Estimator

Taking clues from the OLS solution, let us propose a linear estimator of the form

$$\hat{\theta} = LY, \quad \text{where } L \text{ is a } (p \times n) \text{ matrix}$$

$$\mathrm{Cov}(\hat{\theta}) = E\left[ (\hat{\theta} - \theta^*)(\hat{\theta} - \theta^*)^T \right] = E\left[ (LY - \theta^*)(LY - \theta^*)^T \right]$$

Minimum variance parameter estimation problem: find matrix $L$ such that $\mathrm{Cov}(\hat{\theta})$ is as small as possible.

Minimum Variance Estimator

The unbiasedness requirement implies that

$$E[\hat{\theta} - \theta^*] = E[LY - \theta^*] = E[L(A\theta^* + V) - \theta^*] = 0$$

$$E[(LA - I)\theta^* + LV] = (LA - I)\theta^* + L\,E[V] = 0$$

Since $E[V] = 0$, the unbiasedness condition will hold for every $\theta^*$ if and only if we choose $L$ such that $LA = I$.

Minimum variance parameter estimation problem

$$\min_{L} \; J(L) = J(\mathrm{Cov}(\hat{\theta})) = J(\mathrm{Cov}(LY)) \quad \text{subject to } LA = I$$

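Minimum Variance Estimator

To formulate an optimization problem, we need to construct a scalar objective function. Consider the scalar function

$$J(\mathrm{Cov}(\hat{\theta})) = \frac{1}{2}\, \mathrm{tr}\, E\left[ (\hat{\theta} - \theta^*)(\hat{\theta} - \theta^*)^T \right] = \frac{1}{2} \left[ \mathrm{Var}(\hat{\theta}_1) + \mathrm{Var}(\hat{\theta}_2) + \ldots + \mathrm{Var}(\hat{\theta}_p) \right]$$

Thus, the problem of finding $L$ that minimizes a scalar function of $\mathrm{Cov}(\hat{\theta})$ can be formulated as

$$\min_{L} \; J(L) = \frac{1}{2}\, \mathrm{tr}\, E\left[ (LY - \theta^*)(LY - \theta^*)^T \right] \quad \text{subject to } LA = I$$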

Minimum Variance Estimator

This is equivalent to finding matrix $L$ such that

$$J = \frac{1}{2}\, \mathrm{tr}\, E\left[ (\hat{\theta} - \theta^*)(\hat{\theta} - \theta^*)^T \right] - \mathrm{tr}\left[ \Lambda (LA - I) \right]$$

is minimized w.r.t. $L$, where $\Lambda$ represents the matrix of Lagrange multipliers.

Since $LA = I$, it follows that

$$\hat{\theta} = LY = L(A\theta^* + V) = \theta^* + LV$$

Minimum Variance Estimator

Since $E[VV^T] = R$ and $\hat{\theta} - \theta^* = LV$, it follows that

$$E\left[ (\hat{\theta} - \theta^*)(\hat{\theta} - \theta^*)^T \right] = L\, E[VV^T]\, L^T = LRL^T$$

Thus, the optimization problem can be reformulated as minimizing the objective function

$$J = \frac{1}{2}\, \mathrm{tr}\left[ LRL^T \right] - \mathrm{tr}\left[ \Lambda (LA - I) \right]$$

with respect to $L$.

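Minimum Variance Estimator

Using the results

$$\frac{\partial\, \mathrm{tr}[BAC]}{\partial A} = B^T C^T \quad \text{and} \quad \frac{\partial\, \mathrm{tr}[ABA^T]}{\partial A} = A(B^T + B)$$

the necessary conditions for optimality are

$$\frac{\partial J}{\partial L} = LR - \Lambda^T A^T = [0], \qquad \frac{\partial J}{\partial \Lambda} = (I - LA)^T = [0]$$

Thus, we have

$$L = \Lambda^T A^T R^{-1} \quad \text{and} \quad I - LA = I - \Lambda^T A^T R^{-1} A = [0]$$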

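Minimum Variance Estimator

This implies

$$\Lambda^T = (A^T R^{-1} A)^{-1}$$

and it follows that

$$L = \Lambda^T A^T R^{-1} = (A^T R^{-1} A)^{-1} A^T R^{-1}$$

Thus the minimum variance estimator is

$$\hat{\theta}_{MV} = LY = (A^T R^{-1} A)^{-1} A^T R^{-1}\, Y$$

For the minimum variance estimator

$$\hat{\theta}_{MV} - \theta^* = (A^T R^{-1} A)^{-1} A^T R^{-1}\, V$$

which implies

$$\min \mathrm{Cov}(\hat{\theta}) = E\left[ (\hat{\theta}_{MV} - \theta^*)(\hat{\theta}_{MV} - \theta^*)^T \right] = (A^T R^{-1} A)^{-1}$$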

Gauss Markov Theorem

Comparing the minimum variance estimator

$$\hat{\theta}_{MV} = LY = (A^T R^{-1} A)^{-1} A^T R^{-1}\, Y$$

with the weighted least squares solution

$$\hat{\theta}_{WLS} = (A^T W A)^{-1} A^T W\, Y$$

indicates that selecting $W = R^{-1}$ yields the MV estimator.

Gauss-Markov theorem: the minimum variance unbiased linear estimator is identical to the weighted least squares estimator when the weighting matrix is selected as the inverse of the measurement error covariance matrix.
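The statement of the theorem can be illustrated by comparing the exact covariance $LRL^T$ of two linear unbiased estimators when the errors have unequal variances. The design matrix and the error variances below are hypothetical values chosen for the illustration.

```python
import numpy as np

# Hypothetical straight-line design with unequal error variances
x = np.linspace(0.0, 10.0, 20)
A = np.column_stack([np.ones_like(x), x])
r = np.linspace(0.1, 4.0, x.size)        # assumed error variances (diagonal of R)
R = np.diag(r)
R_inv = np.diag(1.0 / r)

# Two linear unbiased estimators theta_hat = L Y
L_ols = np.linalg.solve(A.T @ A, A.T)                    # W = I
L_mv = np.linalg.solve(A.T @ R_inv @ A, A.T @ R_inv)     # W = R^{-1}

# Exact covariance of a linear unbiased estimator: Cov = L R L^T
cov_ols = L_ols @ R @ L_ols.T
cov_mv = L_mv @ R @ L_mv.T

print("tr Cov (W = I)     :", np.trace(cov_ols))
print("tr Cov (W = R^{-1}):", np.trace(cov_mv))
```

Both choices satisfy $LA = I$, so both are unbiased, but the trace of the covariance is smaller for $W = R^{-1}$, where it equals the trace of $(A^T R^{-1} A)^{-1}$.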

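Regression: OLS as MV Estimator

Any other linear unbiased estimator of $\theta^*$, say $\tilde{\theta} = \tilde{L}Y$, where $\tilde{L} \neq L$, will have

$$\mathrm{tr}\left[ \mathrm{Cov}(\tilde{\theta}) \right] \geq \mathrm{tr}\left[ \mathrm{Cov}(\hat{\theta}_{MV}) \right]$$

Returning to the regression problem, where $R = \mathrm{Cov}(V) = \sigma^2 I$, it follows that the OLS estimator is the minimum variance estimator, i.e.

$$\hat{\theta}_{MV} = \left[ A^T (\sigma^2 I)^{-1} A \right]^{-1} A^T (\sigma^2 I)^{-1}\, Y = (A^T A)^{-1} A^T Y = \hat{\theta}_{OLS}$$

$$\mathrm{Cov}(\hat{\theta}_{MV}) = \sigma^2 (A^T A)^{-1} = \mathrm{Cov}(\hat{\theta}_{OLS})$$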

Insights

OLS is an unbiased parameter estimator. The variance of the errors in the parameter estimates can be reduced by increasing the sample size.

The OLS estimator can be viewed as an estimator that minimizes the sample variance of the model residuals and that, when the errors are uncorrelated with equal variance, yields the parameter estimates with the minimum possible variance (the most efficient linear unbiased estimator).

This is how far we can go without making any assumption about the distribution of the model residuals.

Need to Choose Distribution

For selecting a suitable black-box model that explains the data best from candidate models, we need to test the hypothesis of whether an estimated model coefficient is close to zero or not, i.e. whether the associated term in the model can be retained or neglected.

We need to generate confidence intervals for the true model parameters.

We need to use the estimated model for carrying out predictions.

Thus, we cannot proceed further unless we select a suitable distribution for the model residuals.

Example: Global Temperature Rise

Models developed using OLS for the global temperature deviation $Y$ as a function of the year $t$

Linear model: $Y = -8.187 + 4.168 \times 10^{-3}\, t + V$

Quadratic model: $Y = 114.4 - 0.123\, t + 3.305 \times 10^{-5}\, t^2 + V$

[Figure: global temperature deviation versus year (1850 to 2000), showing the data, the linear model and the quadratic model]

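Statistics

Linear model

$$\hat{\theta}_{OLS} = [-8.187 \;\;\; 4.168 \times 10^{-3}]^T, \qquad \hat{\sigma}^2 = 1.8208 \times 10^{-2}$$

$$(A^T A)^{-1} = \begin{bmatrix} 15.56 & -8.074 \times 10^{-3} \\ -8.074 \times 10^{-3} & 4.19 \times 10^{-6} \end{bmatrix}$$

Quadratic model

$$\hat{\theta}_{OLS} = [114.4 \;\;\; -0.123 \;\;\; 3.305 \times 10^{-5}]^T, \qquad \hat{\sigma}^2 = 1.582 \times 10^{-2}$$

$$(A^T A)^{-1} = \begin{bmatrix} 4.29 \times 10^{4} & -4.46 \times 10^{1} & 1.16 \times 10^{-2} \\ -4.46 \times 10^{1} & 4.63 \times 10^{-2} & -1.202 \times 10^{-5} \\ 1.16 \times 10^{-2} & -1.202 \times 10^{-5} & 3.118 \times 10^{-9} \end{bmatrix}$$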

Example: Global Temperature Rise

Normalized residual: $\tilde{v}_i = \hat{v}_i / \hat{\sigma}$, where $\hat{\sigma}$ is the sample std. dev.

[Figure: histograms of the normalized residuals (frequency versus normalized residual) for the linear model and for the quadratic model]

Choice of Distribution

Least squares (LS) estimation

Penalizes the square of the deviations from zero error (i.e. the mean)

Thus, it favors errors close to zero

Moreover, positive and negative errors of equal magnitude are equally penalized

Consequence: histograms of the model residuals are approximately bell shaped in most LS estimation problems

Thus, it is reasonable to assume that the model residuals have a Gaussian/normal distribution

This choice also follows from a generalized version of the Central Limit Theorem

Regression Problem Reformulation

Up till now, it is assumed that the errors

$$V_i = Y_i - (\theta_1^* z_1^{(i)} + \ldots + \theta_p^* z_p^{(i)}) \quad \text{for } i = 1, 2, \ldots, n$$

are independent and identically distributed. No distribution was specified for $V_i$.

Now we additionally assume that each $V_i$ is Gaussian, i.e.

$$V_i \sim N(0, \sigma^2) \quad \text{for } i = 1, 2, \ldots, n$$

or in other words

$$V \sim N(0_{n \times 1}, \sigma^2 I_n)$$

Gaussian Assumption: Visualization

[Figure: modeling error densities centered on the true regression line]

Ref.: Ogunnaike, B. A., Random Phenomenon, CRC Press, London, 2010

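Consequences of Gaussianity

Under the assumption that $V_i$, $i = 1, 2, \ldots, n$, are normal and i.i.d., we can construct the likelihood function for the unknown parameters $(\theta, \sigma)$ as follows

$$L(\theta, \sigma) = f_{V_1, V_2, \ldots, V_n}(v_1, v_2, \ldots, v_n \mid \theta, \sigma) = N_{V_1}(v_1 \mid \theta, \sigma)\, N_{V_2}(v_2 \mid \theta, \sigma) \cdots N_{V_n}(v_n \mid \theta, \sigma)$$

where

$$N_{V_i}(v_i \mid \theta, \sigma) = \frac{1}{\sigma \sqrt{2\pi}} \exp\left( -\frac{v_i^2}{2\sigma^2} \right), \qquad v_i = y_i - (\theta_1 z_1^{(i)} + \ldots + \theta_p z_p^{(i)})$$

$$\ln L = -\frac{n}{2} \ln(2\pi) - \frac{n}{2} \ln(\sigma^2) - \frac{1}{2\sigma^2} \sum_{i=1}^{n} v_i^2$$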

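Maximum Likelihood Estimation

Alternatively

$$L(\theta, \sigma) = f_V(V \mid \theta, \sigma) = \frac{1}{(2\pi)^{n/2} \sigma^{n}} \exp\left( -\frac{1}{2} V^T (\sigma^2 I)^{-1} V \right) = \frac{1}{(2\pi)^{n/2} \sigma^{n}} \exp\left( -\frac{1}{2\sigma^2} (Y - A\theta)^T (Y - A\theta) \right)$$

$$\ln L = -\frac{n}{2} \ln(2\pi) - \frac{n}{2} \ln(\sigma^2) - \frac{1}{2\sigma^2} (Y - A\theta)^T (Y - A\theta)$$

Necessary conditions for optimality in vector-matrix notation

$$\frac{\partial \ln L}{\partial \theta} = \frac{1}{\sigma^2} A^T (Y - A\theta) = 0$$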

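OLS as ML Estimator

Thus, the maximum likelihood point estimate of $\theta$ is

$$\hat{\theta}_{ML} = (A^T A)^{-1} A^T Y = \hat{\theta}_{OLS}$$

Let $\hat{\theta}_{ML}$ represent a realization of RV $\hat{\boldsymbol{\theta}}_{ML}$. Then, it follows that

$$E[\hat{\boldsymbol{\theta}}_{ML}] = \theta^* \quad \text{and} \quad \mathrm{Cov}(\hat{\boldsymbol{\theta}}_{ML}) = \sigma^2 (A^T A)^{-1} = \mathrm{Cov}(\hat{\boldsymbol{\theta}}_{OLS})$$

$$\hat{\boldsymbol{\theta}}_{ML} \sim N\left( \theta^*, \; \sigma^2 (A^T A)^{-1} \right)$$

Thus, if we assume that the modeling errors are i.i.d. samples from the Gaussian distribution, then the OLS estimator turns out to be identical to the Maximum Likelihood (ML) estimator.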
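The equivalence of the ML and OLS estimates can be checked numerically by evaluating the Gaussian log-likelihood and its gradient with respect to $\theta$. This is a minimal sketch: the data are synthetic, the function names are hypothetical, and $\sigma^2$ is held fixed at an assumed value because it does not affect where the maximum over $\theta$ lies.

```python
import numpy as np

def log_likelihood(theta, sigma2, A, Y):
    """Gaussian log-likelihood ln L(theta, sigma) for the model Y = A theta + V."""
    n = Y.size
    v = Y - A @ theta
    return -0.5 * n * np.log(2.0 * np.pi) - 0.5 * n * np.log(sigma2) - 0.5 * (v @ v) / sigma2

def grad_theta(theta, sigma2, A, Y):
    """Gradient of ln L with respect to theta: A^T (Y - A theta) / sigma^2."""
    return A.T @ (Y - A @ theta) / sigma2

# Synthetic data (hypothetical line y = 1 + 2x, Gaussian errors, fixed seed)
rng = np.random.default_rng(7)
x = np.linspace(0.0, 5.0, 30)
A = np.column_stack([np.ones_like(x), x])
Y = A @ np.array([1.0, 2.0]) + rng.normal(0.0, 0.5, x.size)
sigma2 = 0.25

theta_ols = np.linalg.solve(A.T @ A, A.T @ Y)
grad_at_ols = grad_theta(theta_ols, sigma2, A, Y)          # vanishes at the OLS estimate
ll_ols = log_likelihood(theta_ols, sigma2, A, Y)
ll_perturbed = log_likelihood(theta_ols + np.array([0.05, -0.02]), sigma2, A, Y)

print(grad_at_ols, ll_ols, ll_perturbed)
```

The gradient is zero (to rounding) at the OLS estimate, and any perturbation of $\theta$ away from it lowers the log-likelihood.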

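Consequences of Gaussianity

From the properties of Gaussian RVs it follows that

$$\hat{\boldsymbol{\theta}} = (A^T A)^{-1} A^T \mathbf{Y} = \theta^* + (A^T A)^{-1} A^T \mathbf{V} \qquad (\hat{\boldsymbol{\theta}}_{MV} = \hat{\boldsymbol{\theta}}_{OLS} = \hat{\boldsymbol{\theta}}_{ML})$$

is a Gaussian random vector with

$$E[\hat{\boldsymbol{\theta}}] = \theta^* \quad \text{and} \quad \mathrm{Cov}(\hat{\boldsymbol{\theta}}) = \sigma^2 (A^T A)^{-1}$$

Let us define matrix $P = (A^T A)^{-1}$, with $p_{ii}$ the $i$'th diagonal element of matrix $P$.

From the properties of multivariate Gaussian RVs, it follows that the marginal pdf of $\hat{\theta}_i$ is univariate normal

$$\hat{\theta}_i \sim N(\theta_i^*, \; \sigma^2 p_{ii})$$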

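Confidence Intervals on Parameters

Since $\hat{\theta}_i \sim N(\theta_i^*, \sigma^2 p_{ii})$, in principle, we can construct the confidence intervals on $\theta_i^*$ as

$$P\left[ -z_{\alpha/2} \leq \frac{\hat{\theta}_i - \theta_i^*}{\sqrt{p_{ii}\, \sigma^2}} \leq z_{\alpha/2} \right] = 1 - \alpha$$

Difficulty: $\sigma^2$ is unknown

Remedy: estimate $\sigma^2$ using the model residuals

$$\hat{\sigma}^2 = \frac{1}{n - p}\, \hat{V}^T \hat{V}$$

and use it to construct the CI.

Question: what is the distribution of $\dfrac{\hat{\theta}_i - \theta_i^*}{\sqrt{p_{ii}\, \hat{\sigma}^2}}$ ?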

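Confidence Intervals on Parameters

Consider

$$\frac{1}{\sigma^2} V^T V = \frac{1}{\sigma^2} \sum_{i=1}^{n} v_i^2 \sim \chi^2_n$$

With $V = \hat{V} + A(\hat{\theta} - \theta^*)$, and since $\hat{V}$ is orthogonal to the column space of $A$, it follows that $\hat{V}^T A (\hat{\theta} - \theta^*) = 0$ and

$$\frac{1}{\sigma^2} V^T V = \frac{1}{\sigma^2} \hat{V}^T \hat{V} + \frac{1}{\sigma^2} (\hat{\theta} - \theta^*)^T (A^T A) (\hat{\theta} - \theta^*)$$

Thus, from the properties of $\chi^2$ RVs, it follows that

$$\frac{1}{\sigma^2} (\hat{\theta} - \theta^*)^T (A^T A) (\hat{\theta} - \theta^*) \sim \chi^2_p \quad \text{and} \quad \frac{1}{\sigma^2} \hat{V}^T \hat{V} \sim \chi^2_{n-p}$$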

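Confidence Intervals on Parameters

Now

$$\hat{\sigma}^2 = \frac{1}{n - p}\, \hat{V}^T \hat{V} = \frac{1}{n - p} \sum_{i=1}^{n} \hat{v}_i^2, \quad \text{where } \hat{V} = Y - A\hat{\theta}$$

and

$$\frac{(n - p)\, \hat{\sigma}^2}{\sigma^2} = \frac{1}{\sigma^2} \sum_{i=1}^{n} \hat{v}_i^2 \sim \chi^2_{n-p}$$

Thus, it follows that

$$\frac{\hat{\theta}_i - \theta_i^*}{\hat{\sigma} \sqrt{p_{ii}}} = \frac{(\hat{\theta}_i - \theta_i^*) / (\sigma \sqrt{p_{ii}})}{\hat{\sigma} / \sigma} = \frac{Z}{\sqrt{\chi^2_{n-p} / (n - p)}} \sim T_{n-p}$$

Thus, the $(1 - \alpha)100\%$ confidence interval for $\theta_i^*$ is

$$P\left[ \hat{\theta}_i - t_{\alpha/2,\, n-p}\, \hat{\sigma} \sqrt{p_{ii}} \;\leq\; \theta_i^* \;\leq\; \hat{\theta}_i + t_{\alpha/2,\, n-p}\, \hat{\sigma} \sqrt{p_{ii}} \right] = 1 - \alpha$$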

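Example: Global Warming

Linear model

$$\hat{\theta}_{OLS} = [-8.187 \;\;\; 4.168 \times 10^{-3}]^T, \qquad \hat{\sigma}^2 = 1.8208 \times 10^{-2}$$

$$P = (A^T A)^{-1} = \begin{bmatrix} 15.56 & -8.074 \times 10^{-3} \\ -8.074 \times 10^{-3} & 4.19 \times 10^{-6} \end{bmatrix}$$

$$\frac{\hat{\theta}_2 - \theta_2^*}{\hat{\sigma} \sqrt{p_{22}}} = \frac{4.168 \times 10^{-3} - \theta_2^*}{2.763 \times 10^{-4}} \sim T_{140}$$

Thus, the 95% confidence interval for $\theta_2^*$ follows from

$$P\left[ -t_{\alpha/2,\,140} \leq \frac{4.168 \times 10^{-3} - \theta_2^*}{2.763 \times 10^{-4}} \leq t_{\alpha/2,\,140} \right] = 0.95, \qquad t_{\alpha/2,\,140} = 1.977$$

$$P\left[ 3.622 \times 10^{-3} \leq \theta_2^* \leq 4.714 \times 10^{-3} \right] = 0.95$$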
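The arithmetic behind the 95% interval for the slope can be reproduced as a quick check. The inputs below are the values quoted in the linear-model example (with the powers of ten as reconstructed there); the variable names are illustrative, and the critical value is taken from the example rather than computed from the t-distribution.

```python
import math

# Values quoted in the linear-model example
theta2_hat = 4.168e-3     # estimated slope
sigma2_hat = 1.8208e-2    # estimated error variance
p22 = 4.19e-6             # second diagonal element of P = (A^T A)^{-1}
t_crit = 1.977            # t_{alpha/2, 140} for alpha = 0.05, as given in the example

std_err = math.sqrt(sigma2_hat * p22)      # sigma_hat * sqrt(p22)
lower = theta2_hat - t_crit * std_err
upper = theta2_hat + t_crit * std_err

print(f"std. error = {std_err:.4e}")
print(f"95% CI     = [{lower:.4e}, {upper:.4e}]")
```

The standard error comes out close to the quoted $2.763 \times 10^{-4}$ and the interval limits agree with those quoted in the example to the displayed precision.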

Hypothesis Testing

While developing a black box model from data, we are often not clear about the terms to be included in the model. For example, for the global temperature data, should we develop a linear model or a quadratic model?

To measure the importance of the contribution of the $i$'th component of $\theta^*$ to

$$E[Y] = \theta_1^* z_1 + \ldots + \theta_i^* z_i + \ldots + \theta_p^* z_p$$

we can test the hypothesis

Null hypothesis $H_0: \theta_i^* = 0$

Alternate hypothesis $H_1: \theta_i^* \neq 0$

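Hypothesis Testing

If $H_0$ is true then,

$$\frac{\hat{\theta}_i - 0}{\hat{\sigma} \sqrt{p_{ii}}} \sim T_{n-p}$$

and, at level of significance $\alpha$, the test of $H_0$ is to

Reject $H_0$ if $\dfrac{|\hat{\theta}_i|}{\hat{\sigma} \sqrt{p_{ii}}} > t_{\alpha/2,\, n-p}$

Accept (i.e. fail to reject) $H_0$ otherwise

p value: let $k = \dfrac{|\hat{\theta}_i|}{\hat{\sigma} \sqrt{p_{ii}}}$ be the observed value of the test statistic. Then

$$p\text{ value} = 2\, P(T_{n-p} > k)$$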

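Example: Global Warming

Quadratic model

$$\hat{\theta}_{OLS} = [114.4 \;\;\; -0.123 \;\;\; 3.305 \times 10^{-5}]^T, \qquad \hat{\sigma}^2 = 1.582 \times 10^{-2}$$

$$P = (A^T A)^{-1} = \begin{bmatrix} 4.29 \times 10^{4} & -4.46 \times 10^{1} & 1.16 \times 10^{-2} \\ -4.46 \times 10^{1} & 4.63 \times 10^{-2} & -1.202 \times 10^{-5} \\ 1.16 \times 10^{-2} & -1.202 \times 10^{-5} & 3.118 \times 10^{-9} \end{bmatrix}$$

We are interested in finding whether inclusion of the quadratic term is contributing to the mean of $Y$

Null hypothesis $H_0: \theta_3^* = 0$

Alternate hypothesis $H_1: \theta_3^* \neq 0$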

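Hypothesis Testing

If $H_0$ is true then,

$$\frac{\hat{\theta}_3 - 0}{\hat{\sigma} \sqrt{p_{33}}} = \frac{\hat{\theta}_3}{\sqrt{1.582 \times 10^{-2} \times 3.118 \times 10^{-9}}} \sim T_{139}$$

Let the level of significance be $\alpha = 0.05$.

Since

$$\frac{|\hat{\theta}_3|}{\hat{\sigma} \sqrt{p_{33}}} = \frac{3.305 \times 10^{-5}}{\sqrt{1.582 \times 10^{-2} \times 3.118 \times 10^{-9}}} = 4.705 > t_{0.025,\,139} = 1.9772$$

we reject $H_0$.

Since $k = 4.705$, the p value is $2\, P(T_{139} > 4.705)$, which is far smaller than $\alpha$.

Thus, there is strong evidence that the quadratic term contributes to the correlation.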
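The test statistic for the quadratic coefficient can be recomputed from the quoted estimates as a check. The variable names are illustrative; the inputs are the values given in the example (with powers of ten as reconstructed there) and the critical value is the one quoted, not recomputed from the t-distribution.

```python
import math

# Values quoted in the quadratic-model example
theta3_hat = 3.305e-5     # estimated coefficient of t^2
sigma2_hat = 1.582e-2     # estimated error variance
p33 = 3.118e-9            # third diagonal element of P = (A^T A)^{-1}
t_crit = 1.9772           # t_{0.025, 139}, as given in the example

k = abs(theta3_hat) / math.sqrt(sigma2_hat * p33)   # observed test statistic
reject_h0 = k > t_crit

print(f"k = {k:.3f}, reject H0: {reject_h0}")
```

The computed statistic agrees with the quoted 4.705 to within rounding of the inputs, and it exceeds the critical value, so the null hypothesis is rejected.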

Mean Response

Consider the model

$$Y(z^{(i)}) = Y_i = (z^{(i)})^T \theta^* + V_i$$

Suppose we select $z^{(i)} = z_0$ and collect samples of $Y_0$. Since $V_i$ is an RV, we will get samples $y_{0,1}, y_{0,2}, \ldots, y_{0,m}$

$$y_{0,j} = z_0^T \theta^* + v_{0,j}$$

Question is, for fixed $z_0$, what is the mean of RV $Y(z_0)$?

$$\mu_{Y_0} = E[Y(z_0)] = z_0^T \theta^*$$

Since we do not know $\theta^*$, an estimate of $\mu_{Y_0}$ can be constructed using $\hat{\theta}$ as follows

$$\hat{\mu}_{Y_0} = z_0^T \hat{\theta}$$

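Mean Response

Since $\hat{\theta}$ is a random vector, $\hat{\mu}_{Y_0}$ is a random variable.

Is $\hat{\mu}_{Y_0}$ an unbiased estimate of $\mu_{Y_0}$?

$$E[\hat{\mu}_{Y_0}] = E[z_0^T \hat{\theta}] = z_0^T \theta^* = \mu_{Y_0}$$

Thus we have

$$\mathrm{Cov}(\hat{\mu}_{Y_0}) = E\left[ (\hat{\mu}_{Y_0} - \mu_{Y_0})^2 \right] = z_0^T\, \mathrm{Cov}(\hat{\theta})\, z_0 = \sigma^2 z_0^T (A^T A)^{-1} z_0$$

or

$$\sigma^2_{\hat{\mu}_{Y_0}} = \sigma^2 z_0^T P z_0$$

Since $\hat{\theta}$ is a Gaussian RV, it follows that

$$\hat{\mu}_{Y_0} \sim N\left( z_0^T \theta^*, \; \sigma^2 z_0^T P z_0 \right)$$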

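Mean Response

Since, in practice, we rarely know the true $\sigma^2$, an estimate of $\sigma^2_{\hat{\mu}_{Y_0}}$ can be computed as follows

$$\hat{\sigma}^2_{\hat{\mu}_{Y_0}} = \hat{\sigma}^2 z_0^T P z_0, \quad \text{where } \hat{\sigma}^2 = \frac{1}{n - p}\, \hat{V}^T \hat{V} \text{ and } \hat{V} = Y - A\hat{\theta}$$

$$\frac{\hat{\mu}_{Y_0} - \mu_{Y_0}}{\hat{\sigma} \sqrt{z_0^T P z_0}} = \frac{(\hat{\mu}_{Y_0} - \mu_{Y_0}) / (\sigma \sqrt{z_0^T P z_0})}{\hat{\sigma} / \sigma} = \frac{Z}{\sqrt{\chi^2_{n-p} / (n - p)}} \sim T_{n-p}$$

Thus, the $(1 - \alpha)100\%$ confidence interval on the true mean response is

$$P\left[ \hat{\mu}_{Y_0} - t_{\alpha/2,\, n-p}\, \hat{\sigma} \sqrt{z_0^T P z_0} \;\leq\; \mu_{Y_0} \;\leq\; \hat{\mu}_{Y_0} + t_{\alpha/2,\, n-p}\, \hat{\sigma} \sqrt{z_0^T P z_0} \right] = 1 - \alpha$$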

Future Response

Given the model at fixed $z^{(i)} = z_0$

$$Y(z_0) = Y_0 = z_0^T \theta^* + V_0$$

in some situations, we are interested in predicting $Y(z_0)$.

Since we do not know $\theta^*$, an estimate of $Y_0$ can be constructed using $\hat{\theta}$ as follows

$$\hat{Y}_0 = z_0^T \hat{\theta}$$

Apart from determining a single value to predict a response, we are interested in finding a prediction interval that, with a given degree of confidence, will contain the response.

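Future Response

Consider $Y_0 - \hat{Y}_0$.

Since $V \sim N(0, \sigma^2 I)$, it follows that

$$Y_0 \sim N(z_0^T \theta^*, \; \sigma^2) \quad \text{and} \quad \hat{Y}_0 \sim N(z_0^T \theta^*, \; \sigma^2 z_0^T P z_0)$$

$Y_0$ (i.e. the future response) is independent of the past data $\{ (Y_1, z^{(1)}), (Y_2, z^{(2)}), \ldots, (Y_n, z^{(n)}) \}$ used to obtain $\hat{\theta}$.

Thus, it follows that

$$Y_0 - \hat{Y}_0 \sim N\left( 0, \; \sigma^2 \left[ 1 + z_0^T P z_0 \right] \right)$$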

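Future Response

$$\frac{Y_0 - \hat{Y}_0}{\hat{\sigma} \sqrt{1 + z_0^T P z_0}} = \frac{(Y_0 - \hat{Y}_0) / (\sigma \sqrt{1 + z_0^T P z_0})}{\hat{\sigma} / \sigma} = \frac{Z}{\sqrt{\chi^2_{n-p} / (n - p)}} \sim T_{n-p}$$

Thus, for any $0 < \alpha < 1$ we have

$$P\left[ -t_{\alpha/2,\, n-p} \;\leq\; \frac{Y_0 - z_0^T \hat{\theta}}{\hat{\sigma} \sqrt{1 + z_0^T P z_0}} \;\leq\; t_{\alpha/2,\, n-p} \right] = 1 - \alpha$$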

Prediction Interval

A $100(1 - \alpha)\%$ prediction interval for the future response at $z_0$, i.e. $Y_0 = Y(z_0)$, then is:

$$z_0^T \hat{\theta} \;\pm\; t_{\alpha/2,\, n-p}\, \hat{\sigma} \sqrt{1 + z_0^T P z_0}$$

Recall: the $(1 - \alpha)100\%$ confidence interval on the mean response at $z_0$ (i.e. $\mu_{Y_0} = E[Y_0]$) is

$$\hat{\mu}_{Y_0} \;\pm\; t_{\alpha/2,\, n-p}\, \hat{\sigma} \sqrt{z_0^T P z_0}$$

CI and PI

Difference between confidence interval (CI) and

prediction interval (PI):

Confidence interval (CI) is on a fixed parameter

of interest (like E[Y0] )

Prediction interval (PI) is on a random variable

(like Y0 )

At any z0, the prediction interval on future

response is wider than the confidence interval on

the mean response.


Mileage Related to Engine Displacement

Consider the mileage (y, miles/gallon) and engine displacement (x, in³) data for various cars. An expert car engineer insists that the mileage is related to displacement as: Y = mx + c

Proposed Model: $Y = c + m x + V$

Estimated model parameters

$$\hat{\theta} = [\hat{c} \;\; \hat{m}]^T = [33.73 \;\; -0.047]^T, \qquad \hat{\sigma}^2 = 9.3941$$

$$P = (A^T A)^{-1} = \begin{bmatrix} 0.2219 & -6.69 \times 10^{-4} \\ -6.69 \times 10^{-4} & 2.347 \times 10^{-6} \end{bmatrix}$$

(Montgomery and Runger, 2003)
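The interval formulas for the mean response and the future response can be evaluated directly from the quantities quoted for this example. The sketch below is illustrative only: the slope is taken as negative (mileage falls as displacement grows), and the displacement `x0 = 300` and the critical value `t_crit = 2.093` are assumptions, since the number of data points is not stated here.

```python
import math

def quad_form(z, P):
    """Return z^T P z for a vector z and a square matrix P given as nested lists."""
    n = len(z)
    return sum(z[i] * P[i][j] * z[j] for i in range(n) for j in range(n))

def mean_and_prediction_intervals(z0, theta_hat, P, sigma2_hat, t_crit):
    """Point prediction plus half-widths of the CI (mean response) and PI (future response)."""
    y0_hat = sum(zi * ti for zi, ti in zip(z0, theta_hat))
    q = quad_form(z0, P)
    ci_half = t_crit * math.sqrt(sigma2_hat * q)          # mean response
    pi_half = t_crit * math.sqrt(sigma2_hat * (1.0 + q))  # future response
    return y0_hat, ci_half, pi_half

# Values quoted for the mileage example (slope sign assumed negative).
theta_hat = [33.73, -0.047]
P = [[0.2219, -6.69e-4], [-6.69e-4, 2.347e-6]]
sigma2_hat = 9.3941

t_crit = 2.093  # ASSUMPTION: t_{0.025, n-p} for a hypothetical n - p = 19
x0 = 300.0      # ASSUMPTION: displacement at which to predict

y0_hat, ci_half, pi_half = mean_and_prediction_intervals(
    [1.0, x0], theta_hat, P, sigma2_hat, t_crit
)
print(f"predicted mileage: {y0_hat:.2f}")
print(f"CI on mean response: +/- {ci_half:.2f}")
print(f"PI on future response: +/- {pi_half:.2f}")
```

The only difference between the two half-widths is the extra `1.0 +` under the square root, which is why the prediction interval is always the wider of the two.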

[Figure: Scatter, CI for Mean Response and Prediction Interval. Axes: x (engine displacement) vs. y (gasoline mileage). Legend: raw data; regression model; mean response: lower and upper; ind. pred.: lower and upper.]

Note: The PI is narrowest at $x_0 = \bar{x}$ and increases as we move away from $\bar{x}$.

Assessing Quality of Fit

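How do we assess whether the fitted model is able to adequately explain the response variable Y?

Variability Analysis

Given the data set $\{(y_i, z^{(i)}) : i = 1, 2, \ldots, n\}$, with fitted values $\hat{y}_i$, residuals $\hat{v}_i = y_i - \hat{y}_i$ and sample mean $\bar{y}$:

$$SS_Y = \sum_{i=1}^{n} (y_i - \bar{y})^2 = \sum_{i=1}^{n} \left[(y_i - \hat{y}_i) + (\hat{y}_i - \bar{y})\right]^2$$

$$SS_Y = \underbrace{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}_{SS_E \;(\text{Residuals})} + \underbrace{\sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2}_{SS_R \;(\text{Regression})} + 2\sum_{i=1}^{n} (y_i - \hat{y}_i)(\hat{y}_i - \bar{y})$$

where the cross term can be written as $2\sum_{i=1}^{n} \hat{v}_i (\hat{y}_i - \bar{y})$.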

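In OLS, the necessary condition for optimality implies that

$$A^T (Y - A\hat{\theta}) = 0 \;\;\Rightarrow\;\; (Y - A\hat{\theta})^T A = 0^T \;\;\Rightarrow\;\; (Y - A\hat{\theta})^T A\hat{\theta} = 0 \;\;\Rightarrow\;\; \sum_{i=1}^{n} \hat{v}_i \hat{y}_i = 0$$

where $\hat{y}_i$ are the fitted values and $\hat{v}_i = y_i - \hat{y}_i$ are the residuals.

Note: Models used in multilinear regression are typically of the form

$$Y_i = \theta_1 + \theta_2 z_2^{(i)} + \ldots + \theta_p z_p^{(i)} + V_i$$

i.e. $z_1^{(i)} = 1$ for $i = 1, 2, \ldots, n$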

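This implies that the first column of matrix A is $1_{n \times 1} = [1 \;\; 1 \;\; \ldots \;\; 1]^T$.

Thus, the necessary condition for optimality

$$A^T (Y - A\hat{\theta}) = A^T \hat{V} = 0$$

includes the constraint $(1_{n \times 1})^T \hat{V} = \sum_{i=1}^{n} \hat{v}_i = 0$. Together with $\sum_{i=1}^{n} \hat{v}_i \hat{y}_i = 0$, this makes the cross term in $SS_Y$ vanish:

$$\sum_{i=1}^{n} \hat{v}_i (\hat{y}_i - \bar{y}) = \sum_{i=1}^{n} \hat{v}_i \hat{y}_i - \bar{y} \sum_{i=1}^{n} \hat{v}_i = 0$$

$$\underbrace{SS_Y}_{\text{Total Variability}} = \underbrace{SS_E}_{\text{Variability left unexplained}} + \underbrace{SS_R}_{\text{Variability captured by regression model}}$$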
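The decomposition can be checked numerically. The following is a minimal sketch for a straight-line model with an intercept; the data values are hypothetical and chosen only for illustration.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = c + m*x; returns (c, m)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    m = sxy / sxx
    c = y_bar - m * x_bar
    return c, m

# Hypothetical data, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

c, m = fit_line(x, y)
y_hat = [c + m * xi for xi in x]
v_hat = [yi - fi for yi, fi in zip(y, y_hat)]   # residuals
y_bar = sum(y) / len(y)

ss_y = sum((yi - y_bar) ** 2 for yi in y)       # total variability
ss_e = sum(vi ** 2 for vi in v_hat)             # left unexplained
ss_r = sum((fi - y_bar) ** 2 for fi in y_hat)   # captured by the model

print("sum of residuals      :", sum(v_hat))
print("sum of v_hat * y_hat  :", sum(vi * fi for vi, fi in zip(v_hat, y_hat)))
print("SS_Y - (SS_E + SS_R)  :", ss_y - (ss_e + ss_r))
```

All three printed quantities should be zero up to floating-point round-off. The identity relies on the intercept column of ones in A; without it, the residuals need not sum to zero.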


Variability left unexplained is also the variability of the residuals and is denoted as $SS_{Res}$ or $SS_E$.

A good measure of the quality of the fit is

$$R^2 = \frac{SS_R}{SS_Y} = 1 - \frac{SS_E}{SS_Y} = \frac{\text{Variation in Y explained by regression}}{\text{Total observed variation in Y}}$$

$R^2$ is called the coefficient of determination (a direct measure of the quality of fit).

$R^2$ quantifies the proportion of the variability in the response variable explained by the input variables.

A good fit should result in a high $R^2$.

Note: $0 \le R^2 \le 1$

The coefficient of determination close to 1 indicates

that the model adequately captures the relevant

information contained in the data.

Conversely, a coefficient of determination close to 0

indicates a model that is inadequate to capture the

relevant information contained in the data.

In general, it is possible to improve R2 by introducing

additional parameters in a model. However, note that

the improved R2 can be, at times, misleading.


An alternate measure of model fit

$$R^2_{adj} = 1 - \frac{SS_E/(n-p)}{SS_Y/(n-1)}$$

$SS_E/(n-p)$: Residual mean square

$SS_Y/(n-1)$: This term remains constant regardless of the number of variables in the model

$R^2_{adj}$: penalizes a model that improves $R^2$ through inclusion of more parameters

Relatively high and comparable values of $R^2$ and $R^2_{adj}$ indicate that the variability in the data has been captured adequately without using an excessive number of parameters.
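Both measures follow directly from the sums of squares. The sketch below computes them from observed and fitted values; the four data points and the parameter count `p = 2` are hypothetical and serve only to show the arithmetic.

```python
def r_squared(y, y_hat, p):
    """Return (R^2, adjusted R^2) for observations y, fitted values y_hat, p parameters."""
    n = len(y)
    y_bar = sum(y) / n
    ss_y = sum((yi - y_bar) ** 2 for yi in y)               # total variability
    ss_e = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # residual variability
    r2 = 1.0 - ss_e / ss_y
    r2_adj = 1.0 - (ss_e / (n - p)) / (ss_y / (n - 1))
    return r2, r2_adj

# Hypothetical observations and fitted values from a two-parameter model.
y = [1.0, 2.0, 3.0, 4.0]
y_hat = [1.1, 1.9, 3.2, 3.8]

r2, r2_adj = r_squared(y, y_hat, p=2)
print(f"R^2 = {r2:.4f}, adjusted R^2 = {r2_adj:.4f}")
```

Here $SS_Y = 5$ and $SS_E = 0.1$, so $R^2 = 0.98$ while $R^2_{adj} = 0.97$; the gap widens as p approaches n.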

Variability Analysis: Examples

Gasoline mileage example: $SS_Y = 1237.54$, $SS_R = 955.72$, $R^2 = 0.77$

Global Warming example:

Linear Model: $SS_Y = 6.6934$, $SS_{Res} = 2.5491$, $R^2 = 0.6192$, $R^2_{adj} = 0.6164$

Quadratic Model: $SS_Y = 6.6934$, $SS_{Res} = 2.1989$, $R^2 = 0.6715$, $R^2_{adj} = 0.6668$

Note: $0 \le R^2_{adj} \le R^2 \le 1$ in these examples.

Example: Multi-linear Regression

Boiling points of a series of hydrocarbons

Ref.: Ogunnaike, B. A., Random Phenomena, CRC Press, London, 2010

Candidate Models

Linear Model: $T = a + b\,n$

Quadratic Model: $T = \theta_1 + \theta_2 n + \theta_3 n^2$

[Figure: Boiling Point (°C) vs. n, No. of Carbon Atoms. Legend: Data; Linear Model, T = 39*n - 170; Quadratic Model, T = -3*n² + 67*n - 220.]

Raw Model Residues

[Figure: Model Residue v(k) (°C) vs. n, No. of Carbon Atoms.]

Quadratic Model: $T = -218.1429 + 66.6667\,n - 3.0238\,n^2 + v$

$\hat{\sigma} = 6.3373$

Confidence Interval

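$$P = (A^T A)^{-1} = \begin{bmatrix} 1.9464 & -0.9107 & 0.0893 \\ -0.9107 & 0.5060 & -0.0536 \\ 0.0893 & -0.0536 & 0.0060 \end{bmatrix}$$

Thus, the $(1-\alpha)100\%$ confidence intervals for $\theta_i^*$ with $\alpha = 0.05$ and $t_{0.025,5} = 2.5706$ are

Parameter 1 : [-240.871, -195.415]

Parameter 2 : [55.079, 78.254]

Parameter 3 : [-4.2807, -1.7670]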
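These intervals are $\hat{\theta}_i \pm t_{\alpha/2,n-p}\,\hat{\sigma}\sqrt{p_{ii}}$. The sketch below recomputes them from the quoted estimates and the diagonal of P. It assumes that the value 6.3373 shown with the residual plot is $\hat{\sigma}$; that reading is an inference, since it is what reproduces the quoted intervals.

```python
import math

def confidence_intervals(theta_hat, p_diag, sigma_hat, t_crit):
    """Return a list of (lower, upper) bounds: theta_i +/- t * sigma * sqrt(p_ii)."""
    intervals = []
    for est, p_ii in zip(theta_hat, p_diag):
        half_width = t_crit * sigma_hat * math.sqrt(p_ii)
        intervals.append((est - half_width, est + half_width))
    return intervals

theta_hat = [-218.1429, 66.6667, -3.0238]  # quadratic model estimates
p_diag = [1.9464, 0.5060, 0.0060]          # diagonal of (A^T A)^-1, as printed
sigma_hat = 6.3373                         # ASSUMPTION: interpreted as sigma-hat
t_crit = 2.5706                            # t_{0.025, 5}

ci = confidence_intervals(theta_hat, p_diag, sigma_hat, t_crit)
for i, (lo, hi) in enumerate(ci, start=1):
    print(f"Parameter {i}: [{lo:.3f}, {hi:.3f}]")
```

The result for the third parameter differs from the quoted interval in the third decimal place because the printed $p_{33} = 0.0060$ is rounded.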

To measure the importance of the contribution of the 3rd component of $\theta$ to the quadratic model, we can test the hypothesis $\theta_3^* = 0$.

Hypothesis Testing

Null hypothesis $H_0: \theta_3^* = 0$

Alternate hypothesis $H_1: \theta_3^* \ne 0$

If $H_0$ is true then,

$$\frac{\hat{\theta}_3 - \theta_3^*}{\hat{\sigma}\sqrt{p_{33}}} = \frac{\hat{\theta}_3}{0.4889} \sim T_5$$

and, at level of significance $\alpha = 0.01$, the test of $H_0$ is to

Reject $H_0$ if $\left|\hat{\theta}_3 / 0.4889\right| > t_{0.005,5} = 4.0321$

Fail to reject $H_0$ otherwise

Test statistic: $k = \dfrac{|\hat{\theta}_3|}{0.4889} = \dfrac{|-3.0238|}{0.4889} = 6.1845$

Since $k > 4.0321$, the null hypothesis is rejected.

p value

$k = 6.1845$ is the observed value of the test statistic.

p value $= 2P(T_5 > 6.1845) = 0.0016$

Note: p value < level of significance ($\alpha = 0.01$)

Thus, there is strong evidence that the quadratic term contributes to the correlation between the boiling point and the carbon number.
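A two-sided p value such as the one above can be reproduced without statistical tables by integrating the Student t density numerically. The sketch below uses composite Simpson integration; the function names and the number of integration steps are illustrative choices, not part of the original notes.

```python
import math

def t_pdf(t, nu):
    """Probability density of the Student t distribution with nu degrees of freedom."""
    c = math.gamma((nu + 1) / 2.0) / (math.sqrt(nu * math.pi) * math.gamma(nu / 2.0))
    return c * (1.0 + t * t / nu) ** (-(nu + 1) / 2.0)

def two_sided_p_value(k, nu, steps=2000):
    """Return 2 * P(T_nu > |k|), using Simpson's rule on [0, |k|] (steps must be even)."""
    b = abs(k)
    if b == 0.0:
        return 1.0
    h = b / steps
    total = t_pdf(0.0, nu) + t_pdf(b, nu)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, nu)
    central = total * h / 3.0   # P(0 < T < |k|)
    return 1.0 - 2.0 * central  # by symmetry of the t density

p_value = two_sided_p_value(6.1845, 5)
print(f"p value = {p_value:.4f}")
```

As a sanity check, evaluating the same function at the critical value $t_{0.025,5} = 2.5706$ should return a p value of about 0.05.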

Coefficient of Determination $R^2$ and $R^2_{adj}$

Linear Model: $R^2 = 0.974$, $R^2_{adj} = 0.9698$

Quadratic Model: $R^2 = 0.997$, $R^2_{adj} = 0.9958$

Analysis of Residuals

[Figure: Normalized Model Residual v(k) vs. n, No. of Carbon Atoms, for the Linear Model and the Quadratic Model.]

Linear Model: normalized residuals show a pattern.

Quadratic Model: normalized residuals are randomly spread between +/- 2.

Example: Multi-linear Regression

Laboratory experimental data on Yield obtained from a catalytic process at various temperatures and pressures (n = 32)

Fitted multi-linear model

$$\hat{y} = 75.9 + 0.0757\,x_1 + 3.21\,x_2$$

Ref.: Ogunnaike, B. A., Random Phenomena, CRC Press, London, 2010

Raw Model Residues

[Figure: Model Residue v(k) vs. Sample No.]

$\hat{\theta} = [75.8660 \;\; 0.0757 \;\; 3.2120]^T$, $\hat{\sigma} = 0.9415$

Confidence Interval

$$P = (A^T A)^{-1} = \begin{bmatrix} 9.6437 & -0.0925 & -0.6500 \\ -0.0925 & 0.0010 & 0.0 \\ -0.6500 & 0.0 & 0.4000 \end{bmatrix}$$

Thus, the 95% confidence intervals for $\theta_i^*$ with $\alpha = 0.05$ and $t_{0.025,29} = 2.0452$ are

Parameter 1 : [69.89, 81.85]

Parameter 2 : [0.015, 0.137]

Parameter 3 : [1.994, 4.43]

To measure the importance of the contribution of the 2nd component of $\theta$ to the proposed model, we can test the hypothesis $\theta_2^* = 0$.

Hypothesis Testing

Null hypothesis $H_0: \theta_2^* = 0$

Alternate hypothesis $H_1: \theta_2^* \ne 0$

If $H_0$ is true then,

$$\frac{\hat{\theta}_2 - \theta_2^*}{\hat{\sigma}\sqrt{p_{22}}} = \frac{\hat{\theta}_2}{0.0298} \sim T_{29}$$

and, at level of significance $\alpha = 0.05$, the test of $H_0$ is to

Reject $H_0$ if $\left|\hat{\theta}_2 / 0.0298\right| > t_{0.025,29} = 2.0452$

Fail to reject $H_0$ otherwise

Test statistic: $k = \dfrac{|\hat{\theta}_2|}{0.0298} = \dfrac{0.0757}{0.0298} = 2.5439$

Since $k > 2.0452$, the null hypothesis is rejected.

p value

$k = 2.5439$ is the observed value of the test statistic.

p value $= 2P(T_{29} > 2.5439) = 0.0166$

Note: p value < level of significance ($\alpha = 0.05$)

At level of significance $\alpha = 0.01$, the test of $H_0$ is to

Reject $H_0$ if $\left|\hat{\theta}_2 / 0.0298\right| > t_{0.005,29} = 2.7564$

Fail to reject $H_0$ otherwise

Since $k < 2.7564$, we fail to reject the null hypothesis.

Note: p value > level of significance ($\alpha = 0.01$)
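The decision rule is the same at every significance level; only the critical value changes. A minimal sketch, using the numbers quoted for the yield example (the function name is illustrative):

```python
def t_test_decision(theta_hat_i, std_err, t_crit):
    """Two-sided test of H0: theta_i* = 0. Returns (test statistic k, reject H0?)."""
    k = abs(theta_hat_i) / std_err
    return k, k > t_crit

theta_hat_2 = 0.0757  # estimated coefficient of x1
std_err = 0.0298      # sigma_hat * sqrt(p_22), as quoted

k, reject_at_05 = t_test_decision(theta_hat_2, std_err, t_crit=2.0452)  # alpha = 0.05
_, reject_at_01 = t_test_decision(theta_hat_2, std_err, t_crit=2.7564)  # alpha = 0.01

print(f"k = {k:.4f}")
print("reject H0 at alpha = 0.05:", reject_at_05)
print("reject H0 at alpha = 0.01:", reject_at_01)
```

With the rounded inputs the statistic comes out near 2.54 rather than exactly 2.5439, but the two decisions are unchanged: the same data reject $H_0$ at the 5% level and fail to reject it at the 1% level.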


Nonlinear in Parameter Models

Reaction Rate Equations: $-R_A = k_0 \, e^{-E/RT} \, C_A^n$

Dimensionless group based models in heat and mass transfer:

$$Nu = \alpha \, Re^{\beta} \, Pr^{\gamma} \qquad Sh = \alpha \, Re^{\beta} \, Sc^{\gamma}$$

Simplified VLE Model: $Y = \dfrac{\alpha x}{1 + (\alpha - 1)x}$

Thermodynamic correlations

Redlich Kwong model: $P = \dfrac{RT}{V - b} - \dfrac{a}{\sqrt{T}\,V(V + b)}$

Van der Waals model: $P = \dfrac{RT}{V - b} - \dfrac{a}{V^2}$

Antoine Equation: $\ln P_v = A - \dfrac{B}{T + C}$

Nonlinear-in-Parameter Models

Abstract Model Form

Defining $x = [x_1 \;\; x_2 \;\; \ldots \;\; x_n]^T$ and $\theta = [\theta_1 \;\; \ldots \;\; \theta_m]^T$

$$Y = g(x, \theta^*) + \varepsilon$$

$\varepsilon$: Model residual, $\theta^*$: True parameters

Parameter Estimation

Ordinary least squares: $\hat{\theta}_{OLS} = \arg\min_{\theta} \sum_{i=1}^{n} (\varepsilon_i)^2$

Weighted least squares: $\hat{\theta}_{WLS} = \arg\min_{\theta} \sum_{i=1}^{n} w_i (\varepsilon_i)^2$ ($w_i > 0$ for all i)

The parameter estimation problem has to be solved using numerical optimization tools.

Regression Problem Formulation

Now consider a dataset

$$S_y = \{(y_i, x^{(i)}) : i = 1, 2, \ldots, n\}$$

generated from n independent experiments and model equations

$$Y_i = g(x^{(i)}, \theta^*) + \varepsilon_i \quad \text{for } i = 1, 2, \ldots, n$$

It is assumed that the random modeling errors $\varepsilon_i = Y_i - g(x^{(i)}, \theta^*)$ for $i = 1, 2, \ldots, n$ are independent and identically distributed.

It is further assumed that $\varepsilon_i \sim N(0, \sigma^2)$ for $i = 1, 2, \ldots, n$.

Consequences of Gaussianity

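Under the assumption that $\{\varepsilon_i : i = 1, 2, \ldots, n\}$ are normal and i.i.d., we can construct the likelihood function for the unknown parameters $\theta$ as follows

$$L(\theta) = f_{\varepsilon_1, \varepsilon_2, \ldots, \varepsilon_n}(e_1, e_2, \ldots, e_n \,|\, \theta) = N_1(e_1|\theta) \, N_2(e_2|\theta) \ldots N_n(e_n|\theta)$$

where

$$N_i(e_i|\theta) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{e_i^2}{2\sigma^2}\right), \qquad e_i = y_i - g(x^{(i)}, \theta)$$

$$\log L(\theta) = -\frac{n}{2}\log(2\pi) - \frac{n}{2}\log(\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^{n} e_i^2$$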

Maximum Likelihood Estimation

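$$\ln L(\theta) = -\frac{n}{2}\ln 2\pi - \frac{n}{2}\ln \sigma^2 - \frac{1}{2\sigma^2}\sum_{i=1}^{n}\left[y_i - g(x^{(i)}, \theta)\right]^2$$

This implies that

$$\max_{\theta} \, \ln L(\theta) \;\equiv\; \min_{\theta} \sum_{i=1}^{n}\left[y_i - g(x^{(i)}, \theta)\right]^2$$

Thus, the maximum likelihood point estimate of $\theta$ is

$$\hat{\theta}_{ML} = \arg\min_{\theta} \sum_{i=1}^{n}\left[y_i - g(x^{(i)}, \theta)\right]^2 = \hat{\theta}_{OLS}$$

Thus, under the Gaussian assumption, the OLS estimator turns out to be identical to the Maximum Likelihood (ML) estimator.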
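The equivalence can be illustrated numerically: for a fixed $\sigma$, the parameter value that maximizes the Gaussian log-likelihood on a grid is the same one that minimizes the sum of squared errors. The one-parameter model `exp(-theta * x)`, the data and the value of `sigma` below are all hypothetical.

```python
import math

def model(x, theta):
    """Hypothetical one-parameter nonlinear model g(x, theta) = exp(-theta * x)."""
    return math.exp(-theta * x)

def sse(theta, xs, ys):
    """Sum of squared model errors."""
    return sum((y - model(x, theta)) ** 2 for x, y in zip(xs, ys))

def log_likelihood(theta, xs, ys, sigma):
    """Gaussian log-likelihood for i.i.d. errors with known standard deviation sigma."""
    n = len(xs)
    return (-0.5 * n * math.log(2.0 * math.pi)
            - 0.5 * n * math.log(sigma ** 2)
            - sse(theta, xs, ys) / (2.0 * sigma ** 2))

# Hypothetical measurements, for illustration only.
xs = [0.5, 1.0, 1.5, 2.0, 3.0]
ys = [0.62, 0.35, 0.24, 0.13, 0.05]

grid = [0.5 + 0.01 * i for i in range(101)]  # candidate theta values in [0.5, 1.5]
theta_ml = max(grid, key=lambda th: log_likelihood(th, xs, ys, sigma=0.05))
theta_ols = min(grid, key=lambda th: sse(th, xs, ys))

print("ML estimate on grid :", theta_ml)
print("OLS estimate on grid:", theta_ols)
```

A grid search is used only to keep the sketch short; any optimizer applied to either objective would locate the same point, because the log-likelihood is a constant minus a positive multiple of the SSE.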


Gauss-Newton Method

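Consider a Taylor series based approximation in the neighborhood of a guess solution $\theta^{(k)}$:

$$g(x^{(i)}, \theta^{(k)} + \Delta\theta^{(k)}) \approx g(x^{(i)}, \theta^{(k)}) + \left[\frac{\partial g(x^{(i)}, \theta)}{\partial \theta}\right]^T_{\theta = \theta^{(k)}} \Delta\theta^{(k)}$$

For small $\Delta\theta^{(k)}$, the model equations can be approximated as

$$Y_i \approx g(x^{(i)}, \theta^{(k)}) + \left[\frac{\partial g(x^{(i)}, \theta)}{\partial \theta}\right]^T_{\theta = \theta^{(k)}} \Delta\theta^{(k)} + V_i \quad \text{for } i = 1, 2, \ldots, n$$

Defining $Y_i^{(k)} = Y_i - g(x^{(i)}, \theta^{(k)})$ and $z^{(i,k)} = \left[\dfrac{\partial g(x^{(i)}, \theta)}{\partial \theta}\right]_{\theta = \theta^{(k)}}$, we have

$$Y_i^{(k)} = \left(z^{(i,k)}\right)^T \Delta\theta^{(k)} + V_i^{(k)} \quad \text{for } i = 1, 2, \ldots, n$$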

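Stacking the model equations

$$Y^{(k)} = A^{(k)} \Delta\theta^{(k)} + V^{(k)}$$

where $Y^{(k)} = [y_1^{(k)} \; \ldots \; y_n^{(k)}]^T$, $A^{(k)} = [z^{(1,k)} \; \ldots \; z^{(n,k)}]^T$ and $V^{(k)} = [v_1^{(k)} \; \ldots \; v_n^{(k)}]^T$.

Under the assumption that $V^{(k)} \sim N(0, \sigma^2 I)$, the maximum likelihood estimate of $\Delta\theta^{(k)}$ is

$$\Delta\hat{\theta}^{(k)} = \left[(A^{(k)})^T A^{(k)}\right]^{-1} (A^{(k)})^T Y^{(k)}$$

Thus, starting from an initial guess $\theta^{(0)}$, we can generate a new guess for $\theta$ as $\theta^{(k+1)} = \theta^{(k)} + \Delta\hat{\theta}^{(k)}$ and continue the iterations till the following termination criterion is satisfied

$$\left|\Phi^{(k)} - \Phi^{(k+1)}\right| < \epsilon_{tol} \;\text{(tolerance)} \quad \text{where} \quad \Phi^{(k)} = \sum_{i=1}^{n}\left[y_i - g(x^{(i)}, \theta^{(k)})\right]^2$$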
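The iteration above can be sketched in a few lines for a two-parameter model, where the normal equations reduce to a 2x2 linear solve. The sketch uses the Michaelis-Menten form $g(C, \theta) = \theta_1 C / (\theta_2 + C)$ with noise-free synthetic data generated from assumed "true" parameters, so the concentrations and rates are hypothetical. A step-halving safeguard has been added as a precaution; it is not part of the method as stated in these notes.

```python
def model(c, theta):
    """g(C, theta) = theta1 * C / (theta2 + C)."""
    t1, t2 = theta
    return t1 * c / (t2 + c)

def jacobian_row(c, theta):
    """Row z^(i,k): partial derivatives of g with respect to theta1 and theta2."""
    t1, t2 = theta
    return (c / (t2 + c), -t1 * c / (t2 + c) ** 2)

def sse(theta, cs, rs):
    return sum((r - model(c, theta)) ** 2 for c, r in zip(cs, rs))

def gauss_newton(theta0, cs, rs, tol=1e-12, max_iter=50):
    theta = (float(theta0[0]), float(theta0[1]))
    phi = sse(theta, cs, rs)
    for _ in range(max_iter):
        # Accumulate A^T A and A^T Y for the linearized problem.
        s11 = s12 = s22 = b1 = b2 = 0.0
        for c, r in zip(cs, rs):
            z1, z2 = jacobian_row(c, theta)
            yk = r - model(c, theta)
            s11 += z1 * z1
            s12 += z1 * z2
            s22 += z2 * z2
            b1 += z1 * yk
            b2 += z2 * yk
        det = s11 * s22 - s12 * s12
        d1 = (s22 * b1 - s12 * b2) / det
        d2 = (s11 * b2 - s12 * b1) / det
        # Safeguard (an addition): halve the step while the SSE increases.
        step = 1.0
        new_theta = (theta[0] + d1, theta[1] + d2)
        new_phi = sse(new_theta, cs, rs)
        while new_phi > phi and step > 1e-8:
            step *= 0.5
            new_theta = (theta[0] + step * d1, theta[1] + step * d2)
            new_phi = sse(new_theta, cs, rs)
        converged = abs(phi - new_phi) < tol
        theta, phi = new_theta, new_phi
        if converged:
            break
    return theta

# Synthetic, noise-free data from assumed parameters (hypothetical concentrations).
true_theta = (212.68, 0.06412)
cs = [0.02, 0.06, 0.11, 0.22, 0.56, 1.10]
rs = [model(c, true_theta) for c in cs]

theta_hat = gauss_newton((195.8, 0.04841), cs, rs)
print("estimated parameters:", theta_hat)
```

Because the data are noise-free, the iteration should recover the generating parameters. With real measurements the same loop applies, but the quality of the initial guess matters more.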


Covariance of Parameter Estimate

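Let $\hat{\theta}^{(N)}$ represent the optimum solution obtained when the Gauss Newton method terminates.

From the properties of OLS, it follows that

$$\mathrm{Cov}\left(\Delta\hat{\theta}^{(N)}\right) = \sigma^2 \left[(A^{(N)})^T A^{(N)}\right]^{-1}$$

An estimate of $\sigma^2$ can be constructed as follows

$$\hat{\sigma}^2 = \frac{(\hat{V}^{(N)})^T \hat{V}^{(N)}}{n - p}$$

Since the optimum solution $\hat{\theta}^{(N)} = \theta^{(N)} + \Delta\hat{\theta}^{(N)}$ is only a translation of the RV $\Delta\hat{\theta}^{(N)}$, we can argue that $\mathrm{Cov}(\hat{\theta}^{(N)})$ is identical to $\mathrm{Cov}(\Delta\hat{\theta}^{(N)})$, i.e.

$$\mathrm{Cov}\left(\hat{\theta}^{(N)}\right) = \sigma^2 \left[(A^{(N)})^T A^{(N)}\right]^{-1}$$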

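Confidence Intervals on Parameters

Let us assume that the ML estimator is unbiased, i.e. $E[\hat{\theta}^{(N)}] = \theta^*$.

Defining $P^{(N)} = \left[(A^{(N)})^T A^{(N)}\right]^{-1}$, from the assumption of Gaussian distribution of $V^{(N)}$, it follows that

$$\hat{\theta}_i \sim N\left(\theta_i^*, \; \sigma^2 p_{ii}^{(N)}\right)$$

Since $(n-p)\,\hat{\sigma}^2 / \sigma^2 \sim \chi^2_{n-p}$, it follows that

$$\frac{\hat{\theta}_i - \theta_i^*}{\hat{\sigma}\sqrt{p_{ii}^{(N)}}} = \frac{(\hat{\theta}_i - \theta_i^*) / (\sigma\sqrt{p_{ii}^{(N)}})}{\hat{\sigma}/\sigma} = \frac{Z}{\sqrt{\chi^2_{n-p}/(n-p)}} \sim T_{n-p}$$

Thus, the $(1-\alpha)100\%$ confidence interval for $\theta_i^*$ is

$$\hat{\theta}_i - t_{\alpha/2,\,n-p}\,\hat{\sigma}\sqrt{p_{ii}^{(N)}} \;\le\; \theta_i^* \;\le\; \hat{\theta}_i + t_{\alpha/2,\,n-p}\,\hat{\sigma}\sqrt{p_{ii}^{(N)}}$$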

Linearizing Transformations

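In some special cases, a linear-in-parameter form can be derived using variable transformations

$$Nu = \alpha \, Re^{\beta} Pr^{\gamma} \;\;\Rightarrow\;\; \log Nu = \log \alpha + \beta \log Re + \gamma \log Pr + V$$

$$-r_A = k_0 \, e^{-E/RT} C_A^n \;\;\Rightarrow\;\; \log(-r_A) = \log(k_0) - \frac{E}{R}\frac{1}{T} + n \log(C_A) + V$$

Simplified VLE Model: Defining $\beta = 1/\alpha$,

$$\left(\frac{1}{y} - 1\right) = \beta \left(\frac{1}{x} - 1\right) + V$$

OLS/WLS methods developed for linear-in-parameter models can be used for estimating parameters of the transformed model.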
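As an illustration of a linearizing transformation, the sketch below fits a single-variable power law $y = \alpha x^{\beta}$ by ordinary least squares on $\ln y = \ln \alpha + \beta \ln x$. This is a simplified, hypothetical stand-in for the correlations above (one input instead of two), and the data are synthetic and noise-free.

```python
import math

def fit_power_law(xs, ys):
    """OLS on the log-transformed model ln y = ln(alpha) + beta * ln x."""
    u = [math.log(x) for x in xs]
    w = [math.log(y) for y in ys]
    n = len(u)
    u_bar = sum(u) / n
    w_bar = sum(w) / n
    beta = (sum((ui - u_bar) * (wi - w_bar) for ui, wi in zip(u, w))
            / sum((ui - u_bar) ** 2 for ui in u))
    alpha = math.exp(w_bar - beta * u_bar)  # undo the log transform of the intercept
    return alpha, beta

# Synthetic data from assumed parameters (hypothetical x values).
alpha_true, beta_true = 0.023, 0.83
xs = [2000.0, 5000.0, 10000.0, 20000.0, 40000.0]
ys = [alpha_true * x ** beta_true for x in xs]

alpha_hat, beta_hat = fit_power_law(xs, ys)
print(f"alpha = {alpha_hat:.6f}, beta = {beta_hat:.6f}")
```

With noise-free data the transformed fit recovers the generating parameters exactly. With noisy data it minimizes the errors in $\ln y$, not in $y$.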


Nonlinear in Parameter Models

Difficulty

The original model residual ($\varepsilon$) cannot be transformed. Moreover, solving for

$$\hat{\theta}_T = \arg\min_{\theta_T} \sum_{i=1}^{n} V_i^2$$

and recovering estimates $\hat{\theta}$ from the transformed $\hat{\theta}_T$ is NOT equivalent to estimating $\theta$ by solving for

$$\hat{\theta} = \arg\min_{\theta} \sum_{i=1}^{n} \varepsilon_i^2$$

Parameters estimated using the transformed model serve as a good initial guess for solving the nonlinear optimization problem.

A Fix using WLS

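By this approach, we try to approximate $\varepsilon_i^2 \approx w_i V_i^2$ and solve for

$$\hat{\theta}_{WLS} = \arg\min \sum_{i=1}^{n} w_i V_i^2$$

Note: $V_i$ is a complex function of $\varepsilon_i$. Let us denote it as $V_i = g(\varepsilon_i)$ for $i = 1, 2, \ldots, n$.

Using a Taylor series expansion in the neighborhood of $\varepsilon_i = 0$ (where $V_i = 0$, so that $g(0) = 0$):

$$V_i \approx g(0) + \left[\frac{\partial g}{\partial \varepsilon_i}\right]_{\varepsilon_i = 0} \varepsilon_i = \left[\frac{\partial g}{\partial \varepsilon_i}\right]_{\varepsilon_i = 0} \varepsilon_i \;\;\Rightarrow\;\; \varepsilon_i \approx \left[\frac{\partial g}{\partial \varepsilon_i}\right]^{-1}_{\varepsilon_i = 0} V_i$$

Choose $w_i = \left[\dfrac{\partial g}{\partial \varepsilon_i}\right]^{-2}_{\varepsilon_i = 0}$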

WLS Example

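Consider the model

$$Y = \hat{Y} + \varepsilon, \qquad \hat{Y} = \theta_1 \left[f_1(x)\right]^{\theta_2} \left[f_2(x)\right]^{\theta_3} \ldots \left[f_{p-1}(x)\right]^{\theta_p}$$

and the transformed model

$$\ln Y = \ln \theta_1 + \theta_2 \ln f_1(x) + \ldots + \theta_p \ln f_{p-1}(x) + V$$

Transformed parameter estimation problem

$$\hat{\theta}_T = \arg\min_{\theta_T} \sum_{i=1}^{n} V_i^2$$

$$V_i = \ln Y_i - \ln \hat{Y}_i = \ln Y_i - \ln(Y_i - \varepsilon_i), \qquad \left[\frac{\partial V_i}{\partial \varepsilon_i}\right]_{\varepsilon_i = 0,\, V_i = 0} = \frac{1}{Y_i}$$

$$V_i \approx \frac{\varepsilon_i}{y_i} \;\;\Rightarrow\;\; \varepsilon_i \approx y_i V_i$$

Choose $w_i = y_i^2$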

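Thus, we solve for

$$\hat{\theta}_{T,WLS} = \arg\min_{\theta_T} \sum_{i=1}^{n} y_i^2 V_i^2 = \arg\min_{\theta_T} \sum_{i=1}^{n} y_i^2 \left(\ln y_i - (z^{(i)})^T \theta_T\right)^2$$

Defining a diagonal matrix $W = \mathrm{diag}\left[y_1^2 \;\; y_2^2 \;\; \ldots \;\; y_n^2\right]$,

$$Y_T = \begin{bmatrix} \ln y_1 & \ln y_2 & \ldots & \ln y_n \end{bmatrix}^T \quad \text{and} \quad A = \begin{bmatrix} 1 & \ln f_1(x^{(1)}) & \ldots & \ln f_{p-1}(x^{(1)}) \\ 1 & \ln f_1(x^{(2)}) & \ldots & \ln f_{p-1}(x^{(2)}) \\ \vdots & \vdots & & \vdots \\ 1 & \ln f_1(x^{(n)}) & \ldots & \ln f_{p-1}(x^{(n)}) \end{bmatrix}$$

the WLS estimate is

$$\hat{\theta}_{T,WLS} = \left(A^T W A\right)^{-1} A^T W \, Y_T$$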

Example: Gilliland-Sherwood Correlation

Some of the earliest contributions to chemical engineering science

occurred in the form of correlations that shed light on mass and heat

transfer in liquids and gases. These correlations were usually

presented in the form of dimensionless numbers, combinations of

physical variables that enable general characterization of transport

and other phenomena in a manner that is independent of specific

physical dimensions of the equipment in question. One such correlation

regarding mass-transfer in falling liquid films is due to Gilliland and

Sherwood (1934). It relates the Sherwood number, Sh, (a dimensionless

number representing the ratio of convective to diffusive mass

transport) to two other dimensionless numbers: the Reynolds number,

Re, (a dimensionless number that gives a measure of the ratio of

inertial forces to viscous forces) and the Schmidt number, Sc (the

ratio of momentum diffusivity (viscosity) to mass diffusivity; it

represents the relative ease of molecular momentum and mass

transfer).

Ref.: Ogunnaike, B. A., Random Phenomena, CRC Press, London, 2010

A sample of data from the original large set (of almost 400 data points!) is shown in the table.

Postulated model: $Sh = \alpha \, Re^{\beta} \, Sc^{\gamma}$

Parameter estimates (original publication): $\alpha = 0.023$, $\beta = 0.830$, $\gamma = 0.440$

Parameters estimated using linearizing transformation and OLS: $\hat{\alpha}_{OLS} = 0.0371$, $\hat{\beta}_{OLS} = 0.777$, $\hat{\gamma}_{OLS} = 0.4553$

Parameters estimated using linearizing transformation and WLS: $\hat{\alpha}_{WLS} = 0.0372$, $\hat{\beta}_{WLS} = 0.7852$, $\hat{\gamma}_{WLS} = 0.4298$

Parameter Estimation

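Estimation using Gauss Newton Method

Note: It is important to give a good initial guess of the parameters to start the Gauss Newton method.

Iteration: Given guess $\theta^{(k)} = [\alpha^{(k)} \;\; \beta^{(k)} \;\; \gamma^{(k)}]^T$

$$y_i^{(k)} = y_i - g(x^{(i)}, \theta^{(k)}) = Sh_i - \alpha^{(k)} Re_i^{\beta^{(k)}} Sc_i^{\gamma^{(k)}} \quad \text{for } i = 1, 2, \ldots, n$$

$$z^{(i,k)} = \begin{bmatrix} Re_i^{\beta^{(k)}} Sc_i^{\gamma^{(k)}} \\ g(x^{(i)}, \theta^{(k)}) \ln Re_i \\ g(x^{(i)}, \theta^{(k)}) \ln Sc_i \end{bmatrix} \quad \text{for } i = 1, 2, \ldots, n$$

Initial Guess: Generated using the OLS solution, $\theta^{(0)} = [0.0371 \;\; 0.777 \;\; 0.4553]^T$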

Parameters estimated using Gauss-Newton method: $\hat{\alpha}_{GN} = 0.0338$, $\hat{\beta}_{GN} = 0.7885$, $\hat{\gamma}_{GN} = 0.4361$

$$\mathrm{Cov}(\hat{\theta}_{GN}) = 10^{-4} \times \begin{bmatrix} 1.186 & -3.734 & -0.679 \\ -3.734 & 11.95 & -0.276 \\ -0.679 & -0.276 & 36.707 \end{bmatrix}$$

95 % confidence intervals

$\alpha$ : $[1.06 \times 10^{-2}, \; 5.70 \times 10^{-2}]$

$\beta$ : $[7.15 \times 10^{-1}, \; 8.62 \times 10^{-1}]$

$\gamma$ : $[3.07 \times 10^{-1}, \; 5.65 \times 10^{-1}]$

Method              R2        R2 (adjusted)

Linearized (OLS)    0.9795    0.9768

Linearized (WLS)    0.9797    0.9770

Gauss Newton        0.9798    0.9772

Note: R2 calculated using the original model

Results of Regression

[Figure: $\ln(Sh/Sc^{\hat{\gamma}}) - \ln(Sh/Sc^{0.44})$ vs. ln(Re). Legend: Transformed Model (OLS); Transformed Model (WLS); Gauss Newton.]

Results of Regression


If we take the parameters in the original publication to be

reference/true values of the parameters, then, from

the figure we can conclude that

Estimates obtained using linearizing transformation and

OLS are far from the reference values

Estimates obtained using linearizing transformation and

WLS are relatively closer to the reference values

Estimates obtained through the Gauss Newton method

are closest to the reference values


Michaelis-Menten Model

Reaction rate data for an enzymatic reaction where the substrate has been treated with Puromycin at several concentrations

Proposed model: Michaelis-Menten kinetics

$$r = \frac{\theta_1 C}{\theta_2 + C}$$

Ref.: Myers, R. H. et al., Generalized Linear Models, Wiley, N.J., 2010

Parameter Estimation

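Linearizing Transformation:

$$\frac{1}{r} = \frac{1}{\theta_1} + \frac{\theta_2}{\theta_1}\frac{1}{C} + V$$

Parameters estimated using linearizing transformation and OLS: $\hat{\theta}_{1,OLS} = 195.8$ and $\hat{\theta}_{2,OLS} = 0.04841$

With $\varepsilon_i$ denoting the residual of the original model,

$$\frac{1}{r_i} = \frac{1}{\theta_1} + \frac{\theta_2}{\theta_1}\frac{1}{C_i} + V_i, \qquad V_i = \frac{1}{r_i} - \frac{1}{r_i - \varepsilon_i} = g(\varepsilon_i)$$

$$\left[\frac{\partial V_i}{\partial \varepsilon_i}\right]_{\varepsilon_i = 0} = -\frac{1}{r_i^2} \;\;\Rightarrow\;\; V_i \approx -\frac{\varepsilon_i}{r_i^2}$$

Choose $w_i = r_i^4$

Parameters estimated using linearizing transformation and WLS: $\hat{\theta}_{1,WLS} = 208.67$ and $\hat{\theta}_{2,WLS} = 0.05309$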
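The weighted fit of the linearized Michaelis-Menten model can be sketched as a weighted straight-line regression of $1/r$ on $1/C$ with weights $w_i = r_i^4$. The data below are synthetic and noise-free, generated from assumed parameters at hypothetical concentrations, so the example only demonstrates the mechanics.

```python
def weighted_line_fit(s, u, w):
    """Minimize sum_i w_i * (u_i - a - b*s_i)^2; returns (a, b)."""
    sw = sum(w)
    sws = sum(wi * si for wi, si in zip(w, s))
    swss = sum(wi * si * si for wi, si in zip(w, s))
    swu = sum(wi * ui for wi, ui in zip(w, u))
    swsu = sum(wi * si * ui for wi, si, ui in zip(w, s, u))
    b = (sw * swsu - sws * swu) / (sw * swss - sws * sws)
    a = (swu - b * sws) / sw
    return a, b

def fit_michaelis_menten_linearized(cs, rs, weighted):
    """Fit 1/r = 1/theta1 + (theta2/theta1) * (1/C); returns (theta1, theta2)."""
    s = [1.0 / c for c in cs]
    u = [1.0 / r for r in rs]
    w = [r ** 4 for r in rs] if weighted else [1.0] * len(rs)
    a, b = weighted_line_fit(s, u, w)
    return 1.0 / a, b / a  # theta1 = 1/intercept, theta2 = slope/intercept

# Synthetic, noise-free data from assumed parameters (hypothetical concentrations).
theta1_true, theta2_true = 212.68, 0.06412
cs = [0.02, 0.06, 0.11, 0.22, 0.56, 1.10]
rs = [theta1_true * c / (theta2_true + c) for c in cs]

theta_ols = fit_michaelis_menten_linearized(cs, rs, weighted=False)
theta_wls = fit_michaelis_menten_linearized(cs, rs, weighted=True)
print("OLS on transformed model:", theta_ols)
print("WLS on transformed model:", theta_wls)
```

On noise-free data both fits return the generating parameters. With measurement noise they differ, because unit weights overemphasize the low-rate points where $1/r$ is most sensitive to errors in r.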


Parameter Estimation

Initial guess for Gauss Newton: Estimates obtained using the linearizing transformation.

Parameters estimated using Gauss Newton method: $\hat{\theta}_{1,GN} = 212.68$ and $\hat{\theta}_{2,GN} = 0.06412$

$$\mathrm{Cov}(\hat{\theta}_{GN}) = \begin{bmatrix} 48.26 & 0.044 \\ 0.044 & 6.857 \times 10^{-5} \end{bmatrix}$$

95 % confidence intervals

$\theta_1$ : $[1.972 \times 10^{2}, \; 2.282 \times 10^{2}]$

$\theta_2$ : $[4.567 \times 10^{-2}, \; 8.257 \times 10^{-2}]$

Method              R2        R2 (adjusted)

Linearized (OLS)    0.9378    0.9315

Linearized (WLS)    0.9516    0.9468

Gauss Newton        0.9613    0.9574

Note: R2 calculated using the original model

The estimates obtained using the linearizing transformation and WLS are relatively closer to the estimates obtained using the Gauss Newton method.

Michaelis-Menten Model

[Figure: Michaelis-Menten Model: Puromycin Data Set. Axes: C, Concentration (ppm) vs. r, Reaction Rate. Legend: Experimental data; OLS using Linearized Model; Gauss-Newton using Nonlinear Model; WLS using Linearized Model.]

Summary

Revisiting least squares estimation through the probabilistic

viewpoint has a number of merits.

OLS is shown to be unbiased and most efficient (minimum

variance) estimator among the set of all linear unbiased

estimators

For Gaussian modelling errors, OLS turns out to be the

maximum likelihood estimator and the variance of the

parameter estimates attains the Cramer-Rao bound (see

Appendix). Thus, OLS is the minimum variance unbiased

estimator i.e. best amongst the set of all linear/nonlinear

unbiased estimators.


The probabilistic viewpoint facilitates estimation of

Confidence intervals for the model parameters

Bounds on predicted outputs

Probabilistic viewpoint provides a systematic method to

add/remove terms in heuristic models

The probabilistic viewpoint provides a systematic method to

select a most suitable model from a set of multiple

candidate models.


There are many issues in linear and nonlinear regression,

which remain untouched in these notes. For example,

what if the values of the independent variable x (or z)

itself are corrupted with random noise?

what if we want to update model parameters on-line,

i.e. when size of the data matrix increases with time as

we collect more and more data?

A list of useful references that discuss such issues in

detail is given on the next slide.


References

Strang, G., Linear Algebra and Its Applications, Harcourt Brace Jovanovich College Publishers, New York, 1988.

Ogunnaike, B. A., Random Phenomena, CRC Press, London, 2010.

Myers, R. H., Montgomery, D. C., Vining, G. G., Robinson, T. J., Generalized Linear Models with Applications in Engineering and the Sciences (2nd Ed.), Wiley, N. J., 2010.

Gourdin, A. and Boumahrat, M., Applied Numerical Methods, Prentice Hall India, New Delhi.

Crassidis, J. L. and Junkins, J. L., Optimal Estimation of Dynamic Systems (2nd Ed.), Chapman and Hall/CRC, 2012.


Tip of the Iceberg.

Model development using regression is a vast area and has been

pursued for more than the last 300 years. Through

these notes, we have only touched the tip of this

iceberg. Hope these notes give you some confidence to

explore the iceberg later when you need to do it in your

professional career!

Thank You!


Appendix: Cramer Rao Bound


Cramer-Rao Inequality

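Given statistical properties of the measurement errors, the Cramer-Rao inequality gives us a lower bound on the covariance of the errors between the estimated quantities and the true values.

Consider the conditional density $f_Y(Y \,|\, \theta)$.

The Cramer-Rao inequality for an arbitrary unbiased weighted least squares estimate $\hat{\theta}_W$ is given by

$$\mathrm{Cov}(\hat{\theta}_W) = E\left[(\hat{\theta}_W - \theta^*)(\hat{\theta}_W - \theta^*)^T\right] \ge F^{-1}$$

where the Fisher Information Matrix F is given as

$$F = E\left[\left(\frac{\partial \ln f_Y(Y|\theta)}{\partial \theta}\right)\left(\frac{\partial \ln f_Y(Y|\theta)}{\partial \theta}\right)^T\right] \quad \text{or} \quad F = -E\left[\frac{\partial^2 \ln f_Y(Y|\theta)}{\partial \theta \, \partial \theta^T}\right]$$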

Cramer Rao Bound

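Consider the problem of estimating the unknown $\theta^*$ from measurements

$$Y = A\theta^* + V$$

where the measurement errors have a Gaussian distribution, i.e. $V \sim N(0, R)$.

Now, if $\theta$ is given, then

$$E[Y|\theta] = A\theta, \qquad \mathrm{Cov}[Y|\theta] = \mathrm{Cov}[Y - A\theta] = \mathrm{Cov}[V] = R$$

This implies

$$f_Y(Y|\theta) = N(A\theta, R) \quad \text{or} \quad f_Y(Y|\theta) = \eta \exp\left[-\frac{1}{2}(Y - A\theta)^T R^{-1} (Y - A\theta)\right]$$

where $\eta$ is the normalizing constant.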

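With $\eta$ denoting the normalizing constant of the Gaussian density,

$$\ln f_Y(Y|\theta) = -\frac{1}{2}(Y - A\theta)^T R^{-1} (Y - A\theta) + \ln(\eta)$$

and the Fisher information matrix becomes

$$F = -E\left[\frac{\partial^2 \ln f_Y(Y|\theta)}{\partial \theta \, \partial \theta^T}\right] = A^T R^{-1} A$$

and the Cramer-Rao bound is given by

$$\mathrm{Cov}(\hat{\theta}_W) = E\left[(\hat{\theta}_W - \theta^*)(\hat{\theta}_W - \theta^*)^T\right] \ge \left(A^T R^{-1} A\right)^{-1}$$

For the minimum variance estimator

$$\hat{\theta}_{MV} - \theta^* = \left(A^T R^{-1} A\right)^{-1} A^T R^{-1} V$$

which implies

$$E\left[(\hat{\theta}_{MV} - \theta^*)(\hat{\theta}_{MV} - \theta^*)^T\right] = \left(A^T R^{-1} A\right)^{-1}$$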
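The two results above can be obtained in a few lines. The following worked steps are a sketch that uses only the Gaussian density $N(A\theta, R)$, the error model $Y = A\theta^* + V$ with $E[VV^T] = R$, and the symmetry of $R$.

```latex
\begin{align*}
\frac{\partial \ln f_Y(Y|\theta)}{\partial \theta}
  &= A^T R^{-1} (Y - A\theta) \\
\frac{\partial^2 \ln f_Y(Y|\theta)}{\partial \theta \, \partial \theta^T}
  &= -A^T R^{-1} A
  \quad\Rightarrow\quad
  F = -E\left[-A^T R^{-1} A\right] = A^T R^{-1} A \\
E\left[(\hat{\theta}_{MV} - \theta^*)(\hat{\theta}_{MV} - \theta^*)^T\right]
  &= \left(A^T R^{-1} A\right)^{-1} A^T R^{-1} \, E\left[V V^T\right] R^{-1} A \left(A^T R^{-1} A\right)^{-1} \\
  &= \left(A^T R^{-1} A\right)^{-1} \left(A^T R^{-1} A\right) \left(A^T R^{-1} A\right)^{-1}
   = \left(A^T R^{-1} A\right)^{-1}
\end{align*}
```

The Hessian does not depend on Y, so the expectation in F is trivial. The last line shows that the covariance of the minimum variance estimator equals $F^{-1}$, i.e. the bound is attained.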

Best Linear Unbiased Estimator

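Thus, the weighted least squares estimate obtained using the Gauss-Markov theorem is the most efficient possible estimate.

For the regression problem under consideration

$$\hat{\theta}_{MV} = \hat{\theta}_{OLS}, \qquad \hat{\theta}_{OLS} - \theta^* = \left(A^T A\right)^{-1} A^T V$$

and $R = \sigma^2 I$, which implies

$$E\left[(\hat{\theta}_{OLS} - \theta^*)(\hat{\theta}_{OLS} - \theta^*)^T\right] = \sigma^2 \left(A^T A\right)^{-1}$$

Thus, the assumption that model residuals have a Gaussian distribution implies that

$$\mathrm{Cov}(\hat{\theta}_{OLS}) = E\left[(\hat{\theta}_{OLS} - \theta^*)(\hat{\theta}_{OLS} - \theta^*)^T\right] = F^{-1}$$

and OLS is the most efficient estimator.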

You might also like

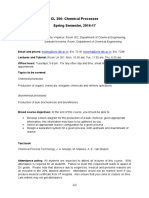

- CL 306: Chemical Processes Spring Semester, 2016-17: Madhu@che - Iitb.ac - in Noronha@che - Iitb.ac - inDocument2 pagesCL 306: Chemical Processes Spring Semester, 2016-17: Madhu@che - Iitb.ac - in Noronha@che - Iitb.ac - inAbhishek SardaNo ratings yet

- Tut2 SolnsDocument2 pagesTut2 SolnsAbhishek SardaNo ratings yet

- DiameterDocument3 pagesDiameterAbhishek SardaNo ratings yet

- Convective Mass Transfer PDFDocument14 pagesConvective Mass Transfer PDFAnonymous 4XZYsImTW5100% (1)

- Tut1 2016 QDocument5 pagesTut1 2016 QAbhishek SardaNo ratings yet

- Course Content CL305 2016 S1Document2 pagesCourse Content CL305 2016 S1Abhishek SardaNo ratings yet

- Experiment HT - 305: Plate Heat Exchanger: BackgroundDocument10 pagesExperiment HT - 305: Plate Heat Exchanger: BackgroundAbhishek SardaNo ratings yet

- Emulsion Coplymerization of Mma and BaDocument5 pagesEmulsion Coplymerization of Mma and BaAbhishek SardaNo ratings yet

- Chemical Engineering Thermodynamics (CL 253) Mid-Semester Examination (2012)Document1 pageChemical Engineering Thermodynamics (CL 253) Mid-Semester Examination (2012)Abhishek SardaNo ratings yet

- Distillation Separation TechniquesDocument12 pagesDistillation Separation TechniquesAbhishek SardaNo ratings yet

- ThermodynamicsDocument19 pagesThermodynamicsAbhishek SardaNo ratings yet