Integrated Intelligent Research (IIR)

International Journal of Data Mining Techniques and Applications

Volume: 04 Issue: 02 December 2015, Page No. 1- 4

ISSN: 2278-2419

A Survey on Analyzing and Processing Data Faster Based on Balanced Partitioning

Annie P. Kurian (1), V. Jeyabalaraja (2)

(1) Student, Dept. of Computer Science & Engg., Velammal Engg. College, Chennai, India

(2) Professor, Dept. of Computer Science & Engg., Velammal Engg. College, Chennai, India

Email: anniekurian13@gmail.com, jeyabalaraja@gmail.com

Abstract---Analyzing and processing big data is a challenging task because of its varied characteristics and its sheer volume. Given the enormous amount of data in today's world, the challenge is not only to store and manage the data but also to analyze it and retrieve the best result from it. In this paper, we study the different approaches available for big data analytics and assess the advantages and drawbacks of each based on metrics such as scalability, availability, efficiency, fault tolerance, real-time processing, supported data size and iterative task support. Existing approaches to range-partition queries are insufficient to provide accurate results quickly on big data. We therefore survey various partitioning techniques for structured data. The challenge in existing systems is the lack of a proper partitioning technique, so the system has to scan the entire data set to answer a query. Partitioning is performed because it provides availability, ease of maintenance and improved query performance to database users. A holistic study has been made of balanced range partitioning for structured data on the Hadoop ecosystem, i.e. HIVE, and of its impact on response time, which would eventually serve as the specification for testing its efficiency. From this survey of techniques for processing and analyzing vast structured datasets, we infer that balanced partitioning through HIVE on the Hadoop ecosystem would produce fast and adequate results compared to traditional databases.

Keywords---range aggregate, big data, HIVE, HDFS, Map Reduce.

I. INTRODUCTION

Due to the enormous amount of data in today's world, traditional systems find it difficult to handle such large volumes. Traditional approaches are meant for handling structured data only up to a certain size limit, beyond which processing becomes unmanageable and non-scalable. This is where big data came into existence, to handle huge amounts of data.

Big data is a broad term for data sets so large or complex that traditional data processing applications are inadequate. Challenges include analysis, capture, search, sharing, storage, transfer, visualization, and information privacy. Big data is characterized by its sheer Volume, Variety, Velocity and Veracity. It covers structured, semi-structured and unstructured data, whereas the traditional system is meant only for handling structured data. The range-aggregate query in the existing system uses row-oriented partitioning and supports only limited processing of queries. The main focus of big data is how efficiently and accurately the data are analyzed and retrieved. Today, the Map Reduce framework is used for processing OLAP and OLTP workloads. Hadoop is an open-source framework that allows big data to be stored and processed in a distributed environment across clusters of computers using simple programming models. HDFS is a distributed file system (DFS) which is highly fault tolerant, provides high-throughput access to application data and is well suited to large data sets. The programming model used in Hadoop is Map Reduce (MR), the data processing functionality that breaks the entire task into two parts, known as map and reduce.

In the traditional system, the handling of large datasets falls short of producing accurate and fast responses. Some of the features required to overcome the disadvantages of the existing system are:

- Analyzing and retrieving range-aggregate queries based on balanced partitioning, i.e. dividing big data into independent partitions and then generating a local estimation for each partition.

- Results that are retrieved faster and are accurate.

- Fault tolerance.

- Efficient response with minimum delay.

II. RELATED WORKS

A. Range queries in dynamic OLAP data cubes

Online Analytical Processing (OLAP) is a technology used to organize large business databases; an OLAP application works on a multidimensional database, which is also called a data cube. A range query applies an aggregation operation (e.g. SUM) over selected cells of an OLAP data cube and should provide accurate results when the data are updated. This paper mainly focuses on performing the aggregation operation with reduced time complexity. The algorithm used is the double relative prefix sum approach, which speeds up range queries from O(n^(d/2)) to O(n^(d/6)), where d is the number of dimensions and n is the number of distinct tuples in each dimension [5]. The pre-computed auxiliary information helps to execute ad-hoc queries. An ad-hoc query is used to obtain information only when the need arises. The prefix sum approaches are therefore suitable for data that is static or rarely updated. Research is ongoing on the update process, i.e. on providing simultaneous updates. Also, the time complexity of data updates has to be reduced for range-aggregate queries.

B. You can stop early with COLA: Online processing of aggregate queries in the cloud

COLA is used to answer online aggregation queries in the cloud, over single tables and joins of multiple tables, with accuracy. COLA supports incremental and continuous aggregation, which reduces the waiting time before an acceptable estimate is reached. It uses the Map Reduce model to support continuous computation of aggregations on joins. The techniques used in online aggregation (OLA) [8] are block-level sampling and two-phase stratified sampling. In block-level sampling, the data is split into blocks, and the blocks form the samples. Stratified sampling divides the population/data into separate groups, called strata; a probability sample is then drawn from each group. Efficiency in terms of speed is improved compared to the existing system. OLA provides early estimated results while the background computation is still running; the results are subsequently refined and the accuracy improves in succeeding stages. However, users cannot obtain an answer with satisfactory accuracy in the early stages, and the system cannot respond with acceptable accuracy within a desired time period.

C. HIVE - A petabyte scale data warehouse using Hadoop

With large data sets, traditional warehousing finds it difficult to process and handle the data. It is also expensive, does not scale, and produces inadequate results. In this paper, the authors explore the use of HIVE, a component of the Hadoop ecosystem. Hadoop is an open-source framework that allows big data to be stored and processed in a distributed environment across clusters of computers using simple programming models and the Map Reduce (MR) processing model. Map Reduce programs are hard to maintain, so the proposed system introduces a new framework called Hive on top of the Hadoop framework. Hive is a data warehouse infrastructure built on top of Hadoop for providing data summarization, query, and analysis. Hive is a database engine that supports SQL-like queries, called HiveQL queries, which are compiled into MR jobs [1]. Hive also contains a system catalog, the metastore, which holds schemas and semantics that become useful during data exploration. The HIVE ecosystem is well suited to processing data, and is used for querying and managing structured data on top of Hadoop.

D. Online aggregation for large Map Reduce jobs

This paper proposes a system model for online aggregation (OLA) over Map Reduce in large-scale distributed systems. Hyracks is a technique involving pipelining of data processing, which is faster than the staged processing of the Hadoop process model [7]. The master maintains a random block ordering. There are two intermediate sets of files: data files, which store the values, and metadata files, which store the timing information. To estimate aggregates, a Bayesian estimator uses correlation information between value and processing time. This approach destroys locality and hence introduces overhead. It is also suitable only for static data; updating files is difficult, and hence the result produced can be inaccurate.

E. Online Aggregation and Continuous Query support in Map Reduce

Map Reduce is a popular programming model in the Hadoop open-source framework. To address fault tolerance, the output of each MR task and job is materialized to disk, i.e. to HDFS, before it is consumed. The modified version of Map Reduce allows data to be pipelined between operators [3]. A pipeline is a set of data processing elements connected in series, where the output of one element is sent as the input of the next. This reduces completion time and improves system utilization. The modified MR supports online aggregation. The Hadoop Online Prototype (HOP) also supports continuous queries, in which programs are written for applications such as event monitoring and stream processing, while retaining fault tolerance.

The drawback is that the output of the map and reduce phases is materialized to stable storage before it is consumed by the next phase. Although users consume results immediately, the results they obtain are not appropriate and accurate. Also, continuous query processing is inefficient, because each new Map Reduce job does not have access to the computational state of the last analysis run, so the state must be recomputed from scratch.

F. Range Aggregation with Set Selection

Large datasets are partitioned in order to reduce the time needed to process the data and to provide output that is flexible and efficient. Range aggregation can use any operation such as COUNT, SUM, MIN or MAX. Partitioning can be done in different ways:

- Random partition

- Hash partition

- Range partition

In random partitioning, the data are partitioned randomly based on the size of the dataset. Hash partitioning uses a hash key value and a hash algorithm to distribute rows roughly equally across the different partitions. Range partitioning separates the data according to ranges of values of the partitioning key. In range partitioning, when an object resides in two different sets, the operation has to be performed separately on each set and the resulting sets are combined, based on the intersection operation, to produce the final output. This type of process is called a range partition set operation [9]. Random partitioning may be easy to implement, but some data or objects may depend on others to produce the result, so processing takes longer. The range partitioning method is therefore used, in which the data are partitioned based on certain criteria and processing and analysis are done simultaneously. It thus produces accurate results, consumes linear space and achieves nearly-optimal query time, since the partitions are processed in parallel.

G. HyperLogLog in practice: Algorithmic Engineering of a State of the art Cardinality Estimation Algorithm

HyperLogLog is an algorithm for the count-distinct problem, approximating the number of distinct elements in a multiset. Calculating the exact cardinality of a multiset requires an amount of memory proportional to the cardinality, which is impractical for very large datasets. This paper introduces an algorithm for the selection of range cardinality queries. The HyperLogLog++ algorithm is used for accurately estimating the cardinality of a multiset using constant memory [4]. HyperLogLog++ has multiple improvements over HyperLogLog, with a much lower error rate for smaller cardinalities. It serializes the hash bits to a byte array in each bucket as a cardinality estimate. HyperLogLog++ uses a 64-bit hash function instead of the 32-bit one in HyperLogLog in order to improve the data scale and the estimation accuracy in a big data environment. Hence this algorithm decreases memory usage, increases accuracy and reduces error.
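The register-based estimate described above can be illustrated with a minimal sketch of the basic HyperLogLog estimator using a 64-bit hash. This is not the HyperLogLog++ variant of [4] (it omits the bias correction and sparse representation), and the class name, the use of SHA-256 as the hash and the default of 2^12 registers are assumptions made only for illustration.

```python
import hashlib
import math


class HyperLogLog:
    """Basic HyperLogLog estimator with 2**p registers and a 64-bit hash."""

    def __init__(self, p=12):
        self.p = p
        self.m = 1 << p                      # number of registers
        self.registers = [0] * self.m
        # Standard bias-correction constant, valid for m >= 128.
        self.alpha = 0.7213 / (1 + 1.079 / self.m)

    def add(self, item):
        digest = hashlib.sha256(str(item).encode("utf-8")).digest()
        x = int.from_bytes(digest[:8], "big")        # 64-bit hash value
        idx = x >> (64 - self.p)                      # first p bits select a register
        rest = x & ((1 << (64 - self.p)) - 1)         # remaining 64 - p bits
        # Rank = 1-based position of the leftmost 1-bit in the remaining bits.
        rank = (64 - self.p) - rest.bit_length() + 1
        self.registers[idx] = max(self.registers[idx], rank)

    def count(self):
        z = sum(2.0 ** -r for r in self.registers)
        estimate = self.alpha * self.m * self.m / z
        zeros = self.registers.count(0)
        if estimate <= 2.5 * self.m and zeros:
            # Small-range correction: fall back to linear counting.
            estimate = self.m * math.log(self.m / zeros)
        return estimate


hll = HyperLogLog()
for user_id in range(50000):
    hll.add(user_id)
print(round(hll.count()))  # close to 50000; memory stays at 4096 registers
```

The memory used is fixed by the number of registers regardless of how many items are added, which is the property the survey highlights; the price is a relative error of roughly 1.04 / sqrt(m).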

Data Type

archiving

Structured

H. Fast data in the era of big data: Twitters real time

related query suggestion architecture

The big data as significant increase in data volume, and the

preferred tuples maybe located in different blocks or files in a

database. On the other hand, real time system aims to provide

appropriate results within seconds on massive data analysis.

This paper presents the architecture behinds Twitter real- time

related query suggestion and spelling correction service. It

describes two separate working systems that are built to solve

the problem. They are: 1) Using hadoop implementation,2)

Using in-memory processing engine [6]. A good related query

suggestion should provide Topicality and Temporality. Using

hadoop, Twitter has robust and production Hadoop cluster. It

uses Pig which is a scripting language to aggregate users

search session, compute term and co-occurrence statistics. Due

to this hadoop causes two bottlenecks that are log import and

latency problem. Then the in-memory processing stores query

co-occurrence and statistics, it stores the results in HDFS after

the process and also contains replication factor. It becomes

easier to fetch data from in- memory because it reduces the

time complexity, and therefore it improves the efficiency.

Availability

Storage

Data Size

Read/Write

limits

Transaction/

Processing

speed

Terabyte

1000 query/sec

Takes more time to process

and also not that accurate

and efficient

IV.

SYSTEM MODEL

Many organization and industries generate large amounts of

data in todays world which consist of both the structured and

unstructured data. Handling big data using the open- source

Hadoop framework would consist of using HIVE for analyzing

and processing structured data with the help of Map Reduce

function.

I. Effective use of Blocks- Level Sampling in Statistics

estimation

Due to the large datasets i.e. petabyte of datas, it becomes

hard to scan the whole data and retrieve the result and also it s

expensive. In order to overcome this, approximate statistics are

built based on sampling. Block level sampling is more

productive and efficient than the uniform-random sampling

over a large datasets, but accountable to significant errors if

used to create database statistics. In order to overcome it, two

approaches are used Histogram and Distinct- Value estimation.

Histogram uses two phase adaptive method in which sample

size is decided based on first phase sample, and this is

significantly faster than previous iterative methods.

Range-aggregate queries for structured data execute the

aggregate function depending on number of rows/columns

simultaneously in a given query ranges. The processing of

large amount of data in the traditional system is difficult, since

it processes only limited set of tuples and also takes a long time

to access and generate the accurate result. Therefore to

efficiently handle the query on big data, we would use the

range partition/ aggregation mechanism. The data are

partitioned in such a way that the searching and processing of

data becomes easier.

The 2-phase algorithm consists of the sort and Validate

mechanism, and from it the required histogram is merged. The

distinct value estimates appears as part of Histogram, because

in addition to the tuple counts in buckets, histogram also keeps

a count of the number of distinct value in each bucket [2]. This

gives a density measure, which is defined as the average

number of duplicates for the distinct value. The bucket weight

is returned as the estimated cardinality of query.

III.

Not a Fault tolerant system

Stores the data in rows and

columns

According to large data record field, the big data is partitioned

and then a local estimation sketch is generated for each

partition. When a user enters range-aggregate query according

to requirements, the system quickly try to fetch the respective

data from the respective partitions, instead of scanning the

whole data. This method tries to bring a holistic improvement

in handling structured data with accuracy, availability,

consistency and with fast response.

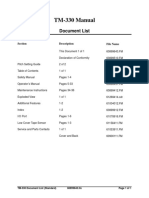

COMPARISON BETWEEN RDBMS AND BIG

DATA

A comparison is made between the traditional RDBMS and the

emerging technology i.e. big data, to show which is efficient in

handling the structured data. This shows that the big data has

various advantages when compared to RDBMS.

CRITERIA

Description

RDBMS

Used for

transactional

system,

reporting and

Structured,

Unstructured

and semistructured

Fault tolerant

Stores

in

Distributed

File System

(HDFS)

Petabyte

Millions

query/sec

Fast and

efficient

BIG DATA

Stores and

process data

in parallel

Fig1. Block Diagram
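The partition-then-summarize flow of the system model can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not the surveyed system: the function names are hypothetical, rows are simple (key, value) pairs, partition boundaries are chosen so that each range holds about the same number of rows, and the per-partition summary is an exact count and sum rather than the estimation sketch the survey refers to.

```python
from bisect import bisect_right


def balanced_boundaries(keys, num_partitions):
    """Pick split points so that each key range holds about the same number of rows."""
    ordered = sorted(keys)
    step = len(ordered) / num_partitions
    return [ordered[int(i * step)] for i in range(1, num_partitions)]


def build_partitions(rows, boundaries):
    """Route (key, value) rows to range partitions and keep a local summary for each."""
    parts = [{"rows": [], "count": 0, "sum": 0.0, "lo": None, "hi": None}
             for _ in range(len(boundaries) + 1)]
    for key, value in rows:
        part = parts[bisect_right(boundaries, key)]
        part["rows"].append((key, value))
        part["count"] += 1
        part["sum"] += value
        part["lo"] = key if part["lo"] is None else min(part["lo"], key)
        part["hi"] = key if part["hi"] is None else max(part["hi"], key)
    return parts


def range_sum(parts, lo, hi):
    """SUM(value) for lo <= key <= hi; returns (total, number of partitions scanned)."""
    total, scanned = 0.0, 0
    for part in parts:
        if part["count"] == 0 or part["hi"] < lo or part["lo"] > hi:
            continue                      # partition lies outside the range: skipped
        if lo <= part["lo"] and part["hi"] <= hi:
            total += part["sum"]          # fully covered: use the local summary
        else:
            scanned += 1                  # partially covered: scan only this partition
            total += sum(v for k, v in part["rows"] if lo <= k <= hi)
    return total, scanned


rows = [(k, 1.0) for k in range(1000)]
parts = build_partitions(rows, balanced_boundaries([k for k, _ in rows], 4))
print(range_sum(parts, 100, 899))  # (800.0, 2): only the two edge partitions are scanned
```

Partitions that fall entirely inside the query range are answered from their summaries, and partitions outside it are skipped, so the query avoids scanning the whole data set.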

V. PROBLEM SCENARIO

Since the traditional relational database finds it difficult to handle large amounts of data, the system is inadequate and non-scalable, and suffers from relative errors and increased time complexity. Here, an idea is introduced that uses balanced partitioning for range-aggregate queries, which were not that scalable or reliable with the traditional system. Partitioning is done in order to avoid the time spent scanning the whole data. A partition is a division of a logical database or its constituent elements into distinct independent parts. This is normally done for performance and accuracy reasons. The new approach would provide accurate results with reduced relative errors compared to the existing system. Thus the system aims to:

- Provide accurate results.

- Increase the processing speed of range-aggregate queries.

- Achieve scalability.

- Update data simultaneously.

- Reduce latency and errors.

Since our idea uses the range partitioning technique to process the data, it reduces the time required to access the data, and the results are retrieved faster. Map Reduce is the processing function in Hadoop that analyzes the datasets and stores the results in HDFS. The results are stored in a separate folder in order to reduce the time.
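The map and reduce steps mentioned above can be mimicked in memory to show how a per-range SUM is assembled. This is a plain Python sketch and not the Hadoop API; the function names are hypothetical, and fixed-width key ranges are used here only to keep the example short (a balanced scheme would choose the range boundaries from the data).

```python
from collections import defaultdict


def map_phase(rows, width):
    """Map: emit (range_id, value) for every (key, value) row."""
    for key, value in rows:
        yield key // width, value


def shuffle(pairs):
    """Shuffle: group the emitted values by range_id."""
    groups = defaultdict(list)
    for range_id, value in pairs:
        groups[range_id].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: aggregate each group independently (SUM here)."""
    return {range_id: sum(values) for range_id, values in groups.items()}


rows = [(0, 1), (5, 2), (10, 3), (19, 4), (20, 5)]
partial_sums = reduce_phase(shuffle(map_phase(rows, width=10)))
print(partial_sums)  # {0: 3, 1: 7, 2: 5}
```

Because each range is reduced independently, the groups can be processed in parallel, and the stored partial sums can later be combined to answer a range-aggregate query without rescanning the rows.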

VI. CONCLUSION

In this paper, we have reviewed the different techniques that are applicable to fast range-aggregate queries in a big data environment. The traditional system models are non-scalable and unmanageable, and their cost increases as the data grows. We would try to partition the data equally and provide a sample estimation for each partition, which would make query processing easier and more effective. Hive is a data warehouse infrastructure whose SQL-like query language is used for handling structured data. The system we analyzed would produce results with high efficiency and with lower time complexity for data updates. It would provide good performance with high scalability and accuracy. This approach would be a good technique for developing real-time answering methods for big data analysis and processing.

REFERENCES

[1] Ashish Thusoo, Joydeep Sen Sarma, and Namit Jain, "Hive - A Petabyte Scale Data Warehouse using Hadoop," Proc. 13th Int'l Conf. Extending Database Technology (EDBT '10), 2010.

[2] Chaudhuri S., Das G., and Srivastava U., "Effective use of block-level sampling in statistics estimation," in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2004, pp. 287-298.

[3] Condie T., Conway N., Alvaro P., Hellerstein J. M., Gerth J., Talbot J., Elmeleegy K., and Sears R., "Online aggregation and continuous query support in MapReduce," in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2010, pp. 1115-1118.

[4] Heule S., Nunkesser M., and Hall A., "HyperLogLog in practice: Algorithmic engineering of a state of the art cardinality estimation algorithm," in Proc. 16th Int. Conf. Extending Database Technol., 2013, pp. 683-692.

[5] Liang W., Wang H., and Orlowska M. E., "Range queries in dynamic OLAP data cubes," Data Knowl. Eng., vol. 34, no. 1, pp. 21-38, Jul. 2000.

[6] Mishne G., Dalton J., Li Z., Sharma A., and Lin J., "Fast data in the era of big data: Twitter's real-time related query suggestion architecture," in Proc. ACM SIGMOD Int. Conf. Manage. Data, 2013, pp. 1147-1158.

[7] Pansare N., Borkar V., Jermaine C., and Condie T., "Online aggregation for large MapReduce jobs," Proc. VLDB Endowment, vol. 4, no. 11, pp. 1135-1145, 2011.

[8] Shi Y., Meng X., Wang F., and Gan Y., "You can stop early with COLA: Online processing of aggregate queries in the cloud," in Proc. 21st ACM Int. Conf. Inf. Knowl. Manage., 2012, pp. 1223-1232.

[9] Yufei Tao, Cheng Sheng, Chin-Wan Chung, and Jong-Ryul Lee, "Range Aggregation with Set Selection," IEEE Transactions on Knowledge and Data Engineering, vol. 26, no. 5, May 2014.
