
Can Complex Systems Be Engineered? Lessons from Life

Anthony H. Dekker

Defence Science and Technology Organisation

DSTO Fern Hill

Department of Defence, Canberra ACT 2600, Australia

Email: dekker@ACM.org

Abstract. Recently there has been considerable debate as to whether Complex Systems can be engineered. Can engineering techniques be applied to Complex Systems, or are there fundamental attributes of Complex Systems that would prevent this? A first glance at the Complex Systems literature suggests a negative answer, but this is partly because complex systems theorists often look for unstable, chaotic, and interesting behaviour modes, while engineers look for stable, regular, and predictable modes. Looking more deeply at the Complex Systems literature, we suggest that the answer is yes, and draw out a number of design principles for engineering in the Complex Systems space. We illustrate these principles on the one hand by commonly studied complex systems such as the Game of Life, and on the other hand by real socio-technical systems. These design principles are particularly related to properties of the underlying network topology of the Complex System under consideration.

Keywords: Complex Systems, Systems Engineering, Software Safety, Network, Game of Life

1. INTRODUCTION

Is it possible to engineer Complex Systems? This question has prompted considerable debate (Wilson et al. 2007), although engineers have been working with Complex Systems for many years, and the problems that arise in today's Complex Systems differ from those of the past only in degree, not in kind. So-called Systems of Systems are Complex Systems which are particularly problematic because they:

- involve a network of stakeholders, rather than a rigid management hierarchy, so that problem resolution requires negotiation;
- require creative thinking when problems arise;
- need engineers to balance multiple conflicting objectives;
- and are in a constant state of flux.

Engineers have always faced these issues, of course, but as systems become larger and more complex, these issues become more significant.

In this paper, we will show how the theory of Complex Systems leads to a number of general approaches which help to tame system complexity. We will draw these examples firstly from that prototypical Complex System, Conway's Game of Life (Sarkar 2000), and secondly from a number of real-world examples.

2. COMPLEX SYSTEMS

Complex Systems are characterised by interactions between system components that produce emergent system properties. The study of Complex Systems involves several complementary viewpoints:

- The Social Viewpoint focuses on human aspects of systems (Heyer 2004, Checkland 1981). Many Complex Systems incorporate a human component.
- The Biological Viewpoint draws on the study of complex biological systems existing in nature, such as ecosystems and even individual organisms (Solé and Goodwin 2000). Biological and social systems both involve adaptivity as conditions change. As the philosopher Heraclitus pointed out 2500 years ago, change and adaptivity are ubiquitous, so that you cannot step twice into the same stream, since the stream is constantly changing (Copleston 1946). It is important to know the ways in which a system will adapt and the implications of its doing so.
- The Mathematical Viewpoint focuses on the topology of the underlying network of the system (Barabási 2002, Watts 2003), and on measurable system attributes. Much of the theory of Complex Systems arises from applying the mathematical viewpoint to physical and biological systems.
- The Engineering Viewpoint concentrates on building Complex Systems with desirable characteristics. This overlaps with the mathematical viewpoint when analysing and simulating systems, but also overlaps with the social viewpoint in areas such as process design.

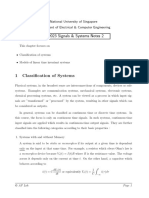

Figure 1 summarises these four viewpoints:

Figure 1: Four Viewpoints on Complex Systems (figure labels: Social, Biological, Mathematical, and Engineering Viewpoints around a central Complex System; Soft OR & Process Design; Adaptivity; Complex Systems Theory; Analysis & Simulation)

This paper will concentrate on the engineering viewpoint, which attempts to tame complexity rather than studying its fascinating characteristics. In Complex Systems Engineering, both the system being built and the organisation put together to build it are Complex Systems, and our comments will apply to systems of both kinds.

3. STATE TRANSITION FORESTS

Let us consider a Complex System in terms of the set $\{S_1, \ldots, S_N\}$ of all possible states (the number of states $N$ may be extremely large). As time progresses, states change according to a deterministic state transition operation $S_i \to S_j \to S_k \to \cdots$.

We can arrange the states into a State Transition Forest (STF) by enumerating states one by one and adding the chains of states they transition to. If a state transitions to a previously examined state in a different chain, the chains join to form a tree. If the state transitions to a previous state in the same chain, a back-link is formed. The back-link defines a cycle, and hence a periodic behaviour mode for the system as a whole.
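The enumeration just described can be sketched in Python. This is an illustrative sketch rather than the author's procedure: it assumes the states are numbered 0 to N−1 and that the transition operation is supplied as an ordinary function, and `find_cycles` is a hypothetical helper name. The input is the $x \mapsto x^2 \bmod 11$ system of Figure 2.

```python
from typing import Callable, Dict, List


def find_cycles(n_states: int, step: Callable[[int], int]) -> List[List[int]]:
    """Build a state transition forest implicitly and return its cycles.

    States 0..n_states-1 are enumerated one by one. Each new chain is
    followed until it reaches a state that has already been visited:
    if that state belongs to an earlier chain, the chains join into one
    tree; if it belongs to the current chain, a back-link has been found
    and the states from that point onwards form a cycle.
    """
    chain_of: Dict[int, int] = {}  # state -> start of the chain that first reached it
    cycles: List[List[int]] = []
    for start in range(n_states):
        if start in chain_of:
            continue  # already part of some tree
        walk: List[int] = []
        s = start
        while s not in chain_of:
            chain_of[s] = start
            walk.append(s)
            s = step(s)
        if chain_of[s] == start:
            # Back-link into the current chain: everything from s onwards is a cycle.
            cycles.append(walk[walk.index(s):])
    return cycles


cycles = find_cycles(11, lambda x: x * x % 11)
print(cycles)                         # [[0], [1], [4, 5, 3, 9]]
print(max(len(c) for c in cycles))    # 4
```

Each cycle marks one tree of the forest, so the three cycles returned here correspond to the one large tree (built around the cycle 4 → 5 → 3 → 9 → 4) and the two small ones.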

Figure 2 shows a simple illustration: the system with the numbers 0–10 as states, and transitions defined by $x \mapsto x^2 \bmod 11$. The state transition forest for this system contains one large tree and two small ones. Among the important features of this forest are the in-degrees of the states, that is, the number of incoming arrows. For example, state 4 has an in-degree of 2 (it is reached from both 2 and 9):

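Figure 2: State Transition Forest for $x \mapsto x^2 \bmod 11$ (transitions: 0 → 0; 1 → 1 and 10 → 1; the cycle 3 → 9 → 4 → 5 → 3, fed by 2 → 4, 6 → 3, 7 → 5, and 8 → 9)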

For each system, we can calculate the average of the non-zero in-degrees in the state transition forest, which we call $d_{in}$. For the example in Figure 2, $d_{in} = (1+2+2+2+2+2)/6 \approx 1.833$. When $d_{in} > 1$, the expected depth of the trees in the forest is approximately:

$$\frac{\log N}{\log d_{in}}$$

For the example in Figure 2, this gives $(\log 11)/(\log 1.833) \approx 4.0$, which in this case is precisely the depth of the largest tree (though in general it will not be exactly equal).

Since cycles of states are produced from back-links in the state transition forest, the expected value of the longest period is approximately the same as the expected tree depth $(\log N)/(\log d_{in})$. In Figure 2, the longest period is 4 (the cycle 3 → 9 → 4 → 5 → 3), which in fact matches this estimate (although again it will not be exactly equal in general).
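The in-degree statistics can be computed directly from the transition function. A minimal sketch, assuming the same integer-numbered states as before; the helper names are hypothetical. Because every state has exactly one outgoing arrow, $d_{in}$ is simply $N$ divided by the number of distinct successor states.

```python
import math
from collections import Counter
from typing import Callable


def in_degrees(n_states: int, step: Callable[[int], int]) -> Counter:
    """Count incoming arrows for every state that has at least one."""
    return Counter(step(s) for s in range(n_states))


def average_nonzero_indegree(n_states: int, step: Callable[[int], int]) -> float:
    """d_in: mean in-degree over the states whose in-degree is non-zero.

    The in-degrees always sum to n_states (one outgoing arrow per state),
    so this equals n_states / (number of distinct successor states).
    """
    indeg = in_degrees(n_states, step)
    return sum(indeg.values()) / len(indeg)


def depth_estimate(n_states: int, d_in: float) -> float:
    """Approximate expected tree depth log N / log d_in (meaningful only for d_in > 1)."""
    return math.log(n_states) / math.log(d_in)


def square_mod_11(x: int) -> int:
    return x * x % 11


d_in = average_nonzero_indegree(11, square_mod_11)
print(in_degrees(11, square_mod_11)[4])    # 2
print(round(d_in, 3))                      # 1.833
print(round(depth_estimate(11, d_in), 2))  # 3.96
```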

For systems where $d_{in}$ is close to 1, the expected length of the longest period is of the order of $\sqrt{N}$, by a version of the Birthday Paradox. A more specific result is provided by Wolfram et al. (1984). Summarising, the expected longest period for a system with $N$ states is therefore:

$$\text{expected longest period} \approx \begin{cases} \sqrt{N} & \text{if } d_{in} \approx 1 \\ \dfrac{\log N}{\log d_{in}} & \text{if } d_{in} > 1 \end{cases}$$

Systems with an average non-zero in-degree $d_{in} > 1$ are systems which erase old state information (so that the details of the "from" state are lost), and the higher $d_{in}$, the more rapidly old state information is erased.

Why is erasing old state information important? It contributes to system stability, with fewer or shorter system oscillations. Imagine an organisation with constantly changing equipment and procedures, which also temporarily outposts staff to other units. When outposted staff return, they bring back old state information in the form of out-of-date procedures. This can cause serious organisational problems. Organisational methods for addressing this include compulsory training, and specialised refresher training for staff returning from outposted positions. Distribution of key standards, requirements, and decisions is also important, and should be combined with mechanisms that force staff to return out-of-date copies.

In software systems, old state information is erased by, for example, setting blocks of memory to all zeros before they are reused. This helps to avoid obscure bugs.

4. THE GAME OF LIFE

The Game of Life (invented by John Conway in 1970) is one of the most famous Complex Systems (Sarkar 2000), and one of the easiest to describe. It is a cellular automaton where cells in a grid can be live (1) or dead (0), and all cells change state (in parallel) based on the number of live neighbours (horizontal, vertical, or diagonal), as in Table 1.

Table 1: Cell Transition Rules for the Game of Life

| Old Cell | Live Neighbours | New Cell | Explanation |
|---|---|---|---|
| 0 | 3 | 1 | Birth |
| 0 | 0–2, 4–8 | 0 | No change |
| 1 | 0, 1 | 0 | Death from isolation |
| 1 | 2, 3 | 1 | Survival |
| 1 | 4–8 | 0 | Death by overcrowding |

When studying finite grids for the Game of Life, the usual practice is to use a rectangle with opposite edges viewed as connected (topologically this corresponds to a torus). For an $m \times n$ grid, the number of different states or patterns is $N = 2^{mn}$.

We define the period of a pattern $p$ as:

- 0, if the pattern $p$ eventually dies out (becomes all zeros);
- 1, if the pattern $p$ becomes stable: $p \to \cdots \to q \to q$;
- $k \geq 2$, if the pattern $p$ becomes a $k$-loop: $p \to \cdots \to q_1 \to q_2 \to \cdots \to q_k \to q_1$.

On a finite grid, every pattern has a well-defined period.

For a particular size and shape of finite grid, we define the spectrum to be the distribution of periods for randomly chosen starting patterns. Figure 3 shows the spectrum for an $8 \times 8$ toroidal Game of Life, in which patterns with periods 0, 1, 2, 6, 32, 48, and 132 appear (periods 4, 8, 9, 16, and 20 are also possible, but rare). Notice that the maximum period of 132 is very small compared to the number of states $N = 2^{64}$, but has the same order of magnitude as the logarithm of the number of states. This is consistent with a state transition forest with $d_{in} > 1$, i.e. one erasing old state information.

Figure 3: Spectrum for the Game of Life on an 8×8 Toroidal Grid (histogram of frequency, 0–60%, against period, 0–140)
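To make the period definition and the notion of a spectrum concrete, here is a small sketch of the toroidal Game of Life. It is an illustration under stated assumptions, not the code used to produce Figure 3: grids are stored as tuples of 0/1 rows so that visited states can be kept in a dictionary, the helper names are hypothetical, and `spectrum` samples only a handful of random patterns (each cell live with probability 1/2), so its histogram will be far noisier than Figure 3.

```python
import random
from collections import Counter
from typing import Callable, Dict, Tuple

Grid = Tuple[Tuple[int, ...], ...]  # rows of 0/1 cells; hashable so states can be remembered


def life_step(grid: Grid) -> Grid:
    """One synchronous update of the Game of Life (Table 1) on a toroidal grid."""
    m, n = len(grid), len(grid[0])
    rows = []
    for i in range(m):
        row = []
        for j in range(n):
            live = sum(grid[(i + di) % m][(j + dj) % n]
                       for di in (-1, 0, 1) for dj in (-1, 0, 1)
                       if (di, dj) != (0, 0))
            if grid[i][j]:
                row.append(1 if live in (2, 3) else 0)  # survival; otherwise isolation/overcrowding
            else:
                row.append(1 if live == 3 else 0)       # birth; otherwise no change
        rows.append(tuple(row))
    return tuple(rows)


def period(grid: Grid, step: Callable[[Grid], Grid] = life_step) -> int:
    """Period as defined above: 0 if the pattern dies out, otherwise the cycle length."""
    first_seen: Dict[Grid, int] = {}
    t = 0
    while grid not in first_seen:
        first_seen[grid] = t
        grid = step(grid)
        t += 1
    # `grid` is now the first repeated state, so it lies on the final cycle.
    if not any(any(row) for row in grid):
        return 0
    return t - first_seen[grid]


def from_cells(m: int, n: int, cells) -> Grid:
    """Build an m x n grid with the given (row, col) cells live."""
    live = set(cells)
    return tuple(tuple(1 if (i, j) in live else 0 for j in range(n)) for i in range(m))


def spectrum(m: int, n: int, samples: int, seed: int = 0) -> Counter:
    """Distribution of periods over random starting patterns."""
    rng = random.Random(seed)
    return Counter(
        period(tuple(tuple(rng.randint(0, 1) for _ in range(n)) for _ in range(m)))
        for _ in range(samples))


blinker = from_cells(8, 8, [(3, 2), (3, 3), (3, 4)])
block = from_cells(8, 8, [(1, 1), (1, 2), (2, 1), (2, 2)])
glider = from_cells(8, 8, [(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)])
print(period(blinker), period(block), period(glider))  # 2 1 32
print(spectrum(8, 8, samples=20))
```

A glider moves one cell diagonally every four generations, so on an 8×8 torus it returns to its starting position after 32 generations, which is one of the periods listed above.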

The one-dimensional analogue of the Game of Life, sometimes called Rule 90 (Wolfram 2002), uses a ring of $n$ cells, with state changes defined by Table 2:

Table 2: Cell Transition Rules for One-Dimensional Game of Life

| Old Cell | Live Neighbours | New Cell | Explanation |
|---|---|---|---|
| 0 | 1 | 1 | Birth |
| 0 | 0, 2 | 0 | No change |
| 1 | 0 | 0 | Death from isolation |
| 1 | 1 | 1 | Survival |
| 1 | 2 | 0 | Death by overcrowding |

For rings of size $n$ where $n$ is a prime number not close to a power of 2, the most common period is $2^{(n-1)/2} - 1$. For example, random starting states on rings of size 11, 13, 19, and 23 give periods of $2^{(n-1)/2} - 1$ (i.e. periods of 31, 63, 511, and 2047) 99.9% of the time (for other ring sizes, the period is less). Since a ring of $n$ cells has $N = 2^n$ states, the period $2^{(n-1)/2} - 1$ is approximately:

$$\frac{1}{\sqrt{2}}\sqrt{N}$$

This is consistent with a state transition forest having $d_{in} \approx 1$, i.e. one not erasing old state information.
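The Rule 90 behaviour can be checked with an equally small sketch. Table 2 amounts to an exclusive-or of the two neighbours, which is how the hypothetical `rule90_step` implements it; `period` follows the definition given for the Game of Life. The sample sizes are arbitrary, so the printed frequencies are only indicative.

```python
import random
from collections import Counter
from typing import Dict, Tuple

Ring = Tuple[int, ...]


def rule90_step(cells: Ring) -> Ring:
    """Table 2 as a formula: a cell is live next step iff exactly one neighbour is live."""
    n = len(cells)
    return tuple(cells[(i - 1) % n] ^ cells[(i + 1) % n] for i in range(n))


def period(cells: Ring) -> int:
    """0 if the ring dies out, otherwise the length of the cycle it falls into."""
    first_seen: Dict[Ring, int] = {}
    t = 0
    while cells not in first_seen:
        first_seen[cells] = t
        cells = rule90_step(cells)
        t += 1
    return 0 if not any(cells) else t - first_seen[cells]


def period_counts(n: int, samples: int, seed: int = 0) -> Counter:
    """Periods observed from random starting rings of size n."""
    rng = random.Random(seed)
    return Counter(period(tuple(rng.randint(0, 1) for _ in range(n)))
                   for _ in range(samples))


single_cell = (1,) + (0,) * 10
print(period(single_cell))  # 31, i.e. 2**((11 - 1) // 2) - 1
for n in (11, 13, 19, 23):
    print(n, 2 ** ((n - 1) // 2) - 1, period_counts(n, samples=30).most_common(3))
```

A single live cell on a ring of 11 has period $31 = 2^5 - 1$, matching the figure quoted above.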

Disturbances propagate symmetrically in both directions around the ring, and reflect back from the other side. Each propagation changes the cells it passes, and this in turn alters the impact of the next propagation. Because old state information is not erased, the disturbance continues to move around the ring many times, giving an extremely large period.

In contrast, if we produce a version of the Game of Life where every cell is connected to all the others, it is easy to show that the period will always be at most 2, no matter which variation of Table 1 we use. This corresponds to a state transition forest with very large $d_{in}$ (e.g. the square root or cube root of $N$).
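The claim about the fully connected variant can be checked by brute force for small systems. The reasoning behind it: a live cell sees (total − 1) live neighbours and a dead cell sees the total, so after one step all formerly dead cells share one value and all formerly live cells share another, leaving at most four reachable states. The sketch below, with hypothetical helper names and an arbitrary choice of rule variations, enumerates every starting state of 10 cells and reports the largest period found.

```python
from itertools import product
from typing import Dict, FrozenSet, Tuple

State = Tuple[int, ...]


def fully_connected_step(cells: State, birth: FrozenSet[int], survive: FrozenSet[int]) -> State:
    """Every cell neighbours every other cell: a live cell sees total-1 live
    neighbours and a dead cell sees total live neighbours."""
    total = sum(cells)
    return tuple(int((total - 1) in survive) if c else int(total in birth) for c in cells)


def period(cells: State, birth: FrozenSet[int], survive: FrozenSet[int]) -> int:
    """0 if all cells die, otherwise the length of the eventual cycle."""
    first_seen: Dict[State, int] = {}
    t = 0
    while cells not in first_seen:
        first_seen[cells] = t
        cells = fully_connected_step(cells, birth, survive)
        t += 1
    return 0 if not any(cells) else t - first_seen[cells]


def max_period(n_cells: int, birth: FrozenSet[int], survive: FrozenSet[int]) -> int:
    """Exhaustively check every one of the 2**n_cells starting states."""
    return max(period(s, birth, survive) for s in product((0, 1), repeat=n_cells))


# Conway's thresholds (Table 1) and two arbitrary, hypothetical variations.
variants = [(frozenset({3}), frozenset({2, 3})),
            (frozenset({1}), frozenset({0})),
            (frozenset({2, 5}), frozenset({1, 4, 6}))]
for birth, survive in variants:
    print(sorted(birth), sorted(survive), max_period(10, birth, survive))
```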

The diameter of the underlying network is an important factor in these variations of the Game of Life. An $n \times n$ square (toroidal) Game of Life grid has diameter of about $n/2$, but the fully connected version has diameter 1 (since all cells are one link apart). Systems with low diameter tend to damp out propagated disturbances more quickly (since the disturbance returns after less time), and hence tend to have much higher values of $d_{in}$.
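The two diameters quoted above can be computed by breadth-first search. A minimal sketch, assuming the toroidal grids of Section 4 (each cell linked to its eight neighbours, with wrap-around) and a connected network; the function names are hypothetical.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, List


def diameter(nodes: List[Hashable], neighbours: Callable[[Hashable], Iterable[Hashable]]) -> int:
    """Longest shortest-path distance, by breadth-first search from every node.
    Assumes the network is connected."""
    best = 0
    for src in nodes:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in neighbours(u):
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        best = max(best, max(dist.values()))
    return best


def torus_grid(n: int):
    """n x n Game of Life grid with wrap-around: each cell links to its 8 neighbours."""
    nodes = [(i, j) for i in range(n) for j in range(n)]

    def neighbours(cell):
        i, j = cell
        return [((i + di) % n, (j + dj) % n)
                for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]
    return nodes, neighbours


def fully_connected(k: int):
    """k cells, each linked to every other cell."""
    nodes = list(range(k))
    return nodes, lambda u: [v for v in nodes if v != u]


print(diameter(*torus_grid(8)))        # 4
print(diameter(*fully_connected(64)))  # 1
```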

Sometimes networks are defined so that this relationship between diameter and $d_{in}$ does not hold, and it is $d_{in}$ that is the more significant in such cases. For example, in the random Boolean networks of Kauffman (1995), large diameters are associated with extremely high $d_{in}$ and hence periodic behaviour, while high connectivity is associated with no erasure of state information ($d_{in} \approx 1$) and hence a period of order $\sqrt{N}$.

We can summarise the different kinds of system behaviour demonstrated within these variations of the Game of Life by adapting Wolfram's empirical classification of cellular automata (Sarkar 2000). In our modified classification, it is the structure of the state transition forests that is significant. There are four classes of system:

- Class 1 (stable): all patterns have period 0 or 1.
- Class 2 (oscillatory): this extends class 1, in that all patterns have small periods (much smaller than $\log N$). This corresponds to state transition forests with very high $d_{in}$, and systems with underlying networks of very small diameter. The fully connected Game of Life is an example.
- Class 3 (chaotic): class 1 or 2 behaviour can occur, but some patterns have a very large period, of order $\sqrt{N}$. This corresponds to state transition forests with $d_{in} \approx 1$, and systems with underlying networks of very large diameter. The one-dimensional Game of Life is an example. In real-world systems, the period of order $\sqrt{N}$ will often be longer than the lifespan of the Universe, giving essentially random behaviour.
- Class 4 (complex): class 1 or 2 behaviour can occur, but also patterns with periods of order $\log N$, giving a characteristic spectrum like that in Figure 3. This corresponds to state transition forests with $d_{in} > 1$, but where $d_{in}$ is small compared to $N$. The underlying network of the system typically has a diameter of moderate size. The ordinary Game of Life is an example.

When we engineer Complex Systems, we would prefer their behaviour to be restricted to classes 1 and 2. We can do this by putting in place damping mechanisms to erase old state information, and by reducing the diameter of the system's underlying network, so that disturbances do not propagate for long periods of time.

Although the Game of Life is a famous example of complexity, aficionados do in fact engineer complicated Game of Life patterns to achieve non-trivial goals. This engineering is accomplished by:

(a) defining an ontology of stable or oscillatory sub-patterns, such as blocks, gliders, glider guns, eaters, spaceships, etc. [see Honour and Valerdi (2006) for applications of ontologies to Systems Engineering];
(b) conducting computer searches to find patterns with specified properties;
(c) assembling patterns by placing them adjacent to each other; and
(d) controlling interactions so that most adjacent patterns do not interact, and the ones that do interact do so in carefully specified ways, for example by exchanging gliders.
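Point (d) can be illustrated with a short experiment: two sub-patterns are assembled into one grid, and we check whether the combined pattern evolves exactly as the two parts would separately. This is a sketch under stated assumptions (a 12×12 torus, live cells stored as sets, hypothetical helper names); it is not a description of how Life aficionados actually search for or assemble patterns.

```python
from collections import Counter
from typing import FrozenSet, Tuple

Cell = Tuple[int, int]
SIZE = 12  # assumed torus size for this illustration


def step(live: FrozenSet[Cell], size: int = SIZE) -> FrozenSet[Cell]:
    """Game of Life (Table 1) on a size x size torus, with patterns stored as sets of live cells."""
    counts = Counter(((i + di) % size, (j + dj) % size)
                     for (i, j) in live
                     for di in (-1, 0, 1) for dj in (-1, 0, 1)
                     if (di, dj) != (0, 0))
    return frozenset(cell for cell, c in counts.items()
                     if c == 3 or (c == 2 and cell in live))


def evolve_independently(a: FrozenSet[Cell], b: FrozenSet[Cell], generations: int = 10) -> bool:
    """True if the assembled pattern a|b always equals the union of a and b run separately,
    i.e. the two sub-patterns never interact over the given number of generations."""
    together = a | b
    for _ in range(generations):
        together, a, b = step(together), step(a), step(b)
        if together != a | b:
            return False
    return True


block = frozenset({(1, 1), (1, 2), (2, 1), (2, 2)})
far_blinker = frozenset({(6, 5), (6, 6), (6, 7)})
near_blinker = frozenset({(1, 4), (2, 4), (3, 4)})
print(evolve_independently(block, far_blinker))   # True: the parts never touch
print(evolve_independently(block, near_blinker))  # False: the parts interact at once
```

Placing the blinker one gap away from the block already changes the outcome in the first generation, which is why assembled patterns rely on controlled spacing or on deliberate, well-specified interactions.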

In general, engineering Complex Systems requires controlling interactions. However, we must not eliminate so many interactions that the diameter of the underlying system network is increased. One way of reconciling these conflicting goals is to introduce hub components which interact with many different parts of the system, but which do so in very carefully specified ways. The bus inside a computer or microprocessor is an example of such a hub. Widely circulated documents, standards, ontologies, and models are also hubs, particularly when they are part of an easily accessible online information repository. However, they need to be carefully written so that different people will interpret them in the same way. If that is difficult, then processes need to be put in place to resolve ambiguities and widely disseminate the resolution.
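The effect of a hub on network diameter is easy to see in a toy model. The sketch assumes a hypothetical system of 20 components, each interacting only with its two neighbours in a ring, and then adds a single hub component linked to all of them; the names and sizes are illustrative only.

```python
from collections import deque
from typing import Dict, Hashable, Set

Graph = Dict[Hashable, Set[Hashable]]


def diameter(graph: Graph) -> int:
    """Longest shortest-path distance (assumes a connected, undirected graph)."""
    best = 0
    for src in graph:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in graph[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        best = max(best, max(dist.values()))
    return best


def ring(n: int) -> Graph:
    """n components, each interacting only with its two neighbours."""
    return {i: {(i - 1) % n, (i + 1) % n} for i in range(n)}


def add_hub(graph: Graph, hub: Hashable = "hub") -> Graph:
    """Return a copy of graph with one extra hub component linked to every existing component."""
    with_hub = {u: set(vs) | {hub} for u, vs in graph.items()}
    with_hub[hub] = set(graph)
    return with_hub


components = ring(20)
print(diameter(components))           # 10
print(diameter(add_hub(components)))  # 2
```

A single hub cuts the diameter from 10 to 2, but it also becomes a party to every interaction, which is why hubs must interact in carefully specified ways.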

Organisationally, a small underlying network diameter can also be obtained by moving at least partially from a hierarchical structure to an Edge Organisation (Alberts and Hayes 2003, 2006), and by holding regular cross-organisational meetings and workshops on issues of wide interest.

Many natural Complex Systems obtain a small diameter for the underlying network with a scale-free network topology (Barabási 2002). This is also possible for designed systems: in modern software packages, a low diameter can result from an approximately scale-free topology of module interconnectivity (Wen and Dromey 2006).

5. INTERACTION PROBLEMS

Safety expert Nancy Leveson points out that the most challenging problems in building complex systems today arise in the interfaces between components. In controlling interactions between system components, we wish to avoid two dangers:

- an excessively large number of interactions (as in software systems written in assembly language, or using shared-memory threads); or
- a large underlying network diameter, which may result in complex or chaotic behaviour, as described in the previous section.

However, there are other features of the underlying system network which are potentially dangerous. One of them occurs when two system components A and B both control a component C (the troublesome triangle):

Figure 4: The Troublesome Triangle (components A and B both control component C)

Failure to coordinate inputs from components A and B can result in incorrect behaviour by C, and possibly system failure. Where A and/or B have a human component, adaptation may reduce the effectiveness of the coordination. This can occur when people learn more efficient short-cut procedures which rely on assumptions which may not always be true (Leveson 2004).
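Troublesome triangles can be flagged mechanically once the control relationships are written down. A minimal sketch, assuming a hypothetical representation in which each controller lists the components it controls and coordination links are recorded as unordered pairs; it only finds candidate triangles, and deciding whether any existing coordination is adequate remains a matter of analysis.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List, Set, Tuple


def troublesome_triangles(controls: Dict[str, Set[str]],
                          coordinated: Set[FrozenSet[str]]) -> List[Tuple[str, str, str]]:
    """Return (A, B, C) for every pair of controllers A, B of the same component C
    that have no coordination link between them."""
    controllers_of: Dict[str, Set[str]] = {}
    for controller, targets in controls.items():
        for target in targets:
            controllers_of.setdefault(target, set()).add(controller)
    found = []
    for target, ctrls in controllers_of.items():
        for a, b in combinations(sorted(ctrls), 2):
            if frozenset((a, b)) not in coordinated:
                found.append((a, b, target))
    return sorted(found)


# Hypothetical control structure: A and B both control C; D alone controls E.
controls = {"A": {"C"}, "B": {"C"}, "D": {"E"}}
print(troublesome_triangles(controls, coordinated=set()))                    # [('A', 'B', 'C')]
print(troublesome_triangles(controls, coordinated={frozenset({"A", "B"})}))  # []
```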

One example involving this troublesome triangle was the tragic death of 71 people in a midair collision over Ueberlingen in 2002 (Nunes and Laursen 2004). A contributing factor to the collision was conflicting instructions to the pilots from air traffic control on the one hand, and the onboard Traffic Collision Avoidance System (TCAS) on the other. The overall system did not contain mechanisms for coordinating or resolving conflicts between air traffic control and TCAS. Figure 5 gives a simplified view of this system:

Figure 5: Troublesome Triangles for the Traffic Collision Avoidance System (TCAS) (figure labels: Pilot 1, Pilot 2, Air Traffic Controller, TCAS 1, TCAS 2, Inter-aircraft communication protocol, Collision)

Another example was the shooting down of two US Army Black Hawk helicopters by US Air Force F-15s in the No Fly Zone over Iraq in 1994. Among the contributing factors to this friendly fire incident was the fact that helicopters and F-15s in the same airspace were guided by different controllers on the same AWACS aircraft (Leveson et al. 2002). Consequently, three uncoordinated influence chains impacted on the unfortunate Black Hawk pilots, as shown in Figure 6:

Figure 6: Simplified Control Structure for No-Fly Zone Friendly Fire Incident (figure labels: Combined Task Force Commander, Air Component Commander, Land Component Commander, Airborne Cmd Element, Senior Director, No Fly Zone Controller, Enroute and Helo Controller, AWACS, Sensors, F-15 Pilots, Black Hawk Pilots, Shoot down)

Organisational examples of the troublesome triangle involve responsibilities for C shared between organisational units A and B. Managing such shared responsibilities requires effective information exchange and liaison mechanisms, including cross-posted staff, shared training, and opportunities to discuss issues of common interest.

6. SOFTWARE SYSTEMS

Some of the most complex systems ever designed by human beings have incorporated large software subsystems. Some of these systems have failed spectacularly, either in use, or by being abandoned partway through development (Brooks 1975; Fiadeiro 2007).

The Therac-25 X-ray therapy machine is one of the more serious examples. The Therac-25 fatally overdosed several patients, as a result of uncontrolled component interactions (assembly language and shared-memory concurrency were used) and a troublesome triangle in the control of the radiation beam (Leveson 1995). Figure 7 illustrates the main system components:

Figure 7: The Troublesome Triangle in the Therac-25 X-ray System (figure labels: Operator, Computer, Turntable, Radiation Beam, Patient; links labelled Controls, Starts, and Modifies)

Traditional software techniques for controlling interactions include:

- replacing goto commands by higher-level if, for, and while constructs;
- controlled exception-handling, as in Java (Gosling et al. 1996), which simplifies the system state on exceptional conditions (an example of erasing old state information);
- discouraging globally writable data, in favour of information hiding and modularisation (Pressman 1992);
- reducing sub-program side-effects (Storey 1996), or eliminating them completely as in functional programming languages (Reade 1989);
- using communicating processes (as in Unix), rather than shared-memory threads;
- type systems, assertions, and array bounds checks (as in Java), to control the way that data is accessed, and to provide safety checks;
- module interfaces which control and specify interactions, including the use of types, access control, and contracts (Fiadeiro 2007);
- well-structured operating systems, such as Unix, which provide a controlled environment for software to run in;
- static (compile-time) analysis of programs to identify at least some undesirable interactions; and
- user interfaces which clearly communicate a model of the system's current state, and its expected state following user action.
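As one concrete instance of the communicating-processes item above, the sketch below passes messages through a queue instead of sharing mutable data. It uses Python threads connected only by `queue.Queue` objects as a stand-in for separate Unix processes connected by pipes; the function names are hypothetical. The consumer's running total is private, so the only interaction between the two workers is through the queue.

```python
import queue
import threading
from typing import Iterable, Optional


def producer(out_box: "queue.Queue[Optional[int]]", items: Iterable[int]) -> None:
    for item in items:
        out_box.put(item)
    out_box.put(None)  # sentinel: no more messages


def consumer(in_box: "queue.Queue[Optional[int]]", result_box: "queue.Queue[int]") -> None:
    total = 0  # private state: no other thread reads or writes it
    while True:
        message = in_box.get()
        if message is None:
            break
        total += message
    result_box.put(total)


def run_pipeline(items: Iterable[int]) -> int:
    """Start a producer and a consumer that share nothing except two queues."""
    channel: "queue.Queue[Optional[int]]" = queue.Queue()
    results: "queue.Queue[int]" = queue.Queue()
    workers = [threading.Thread(target=producer, args=(channel, items)),
               threading.Thread(target=consumer, args=(channel, results))]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results.get()


print(run_pipeline(range(1, 101)))  # 5050
```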

All these methods are instances of the more general techniques of reducing the number of interactions, controlling the kinds of interactions, and making the interactions more predictable. These general techniques are applicable to all Complex Systems.

7. DISCUSSION

Yes, it is possible to engineer Complex Systems. Complex Systems Engineering is like Traditional Systems Engineering, only more so.

Engineering Complex Systems is, however, difficult. It demands even higher levels of creativity and willingness to collaborate than ordinary engineering does. This in turn requires explicit management commitment to growing the necessary staff, particularly in developing their people skills.

Unexpected things can occur in Complex Systems, and dealing with this requires a combination of planning ahead and responding to problems as they arise.

The boundaries of Complex Systems need to be drawn widely: if there is debate about the system boundary, then the broader definition is probably the right one. Intelligent risk management needs to be applied to potential problems near the system boundary.

The human components of Complex Systems need to be understood, and appropriate techniques (Heyer 2004) need to be applied to understand them.

An important aspect of Complex Systems Engineering is erasing old state information, and replacing it with new information. This includes continually updated online information repositories, and appropriate education and training for the human elements of the system. This applies to both the system being designed, and the system responsible for designing it.

The underlying network of the system should have a low diameter. One way of achieving this is to use hub components which tie the whole system together. Information repositories are hubs in this sense, and within organisational systems, workshops and meetings can fill the same role. The hubs require particular care to ensure that they are error-free.

It is also important to use traditional engineering approaches for reducing subcomponent interactions and making them predictable. These techniques include modularisation, interface specifications, and avoidance of troublesome triangles, in which components A and B both control C, but without adequate coordination. However, reducing interactions should not compromise the low diameter of the underlying system network.

Finally, it is important to understand the ways in which the system can adapt, and to be aware of the positive and negative impacts of such adaptivity.

8. ACKNOWLEDGEMENTS

The author is grateful to Ed Kazmierczak, Bernard Colbert, Anne-Marie Grisogono, Åse Jakobsson, Jon Rigter, and Tim Smith for discussions on Complex Systems Engineering.

9. REFERENCES

Alberts, D.S. and Hayes, R.E. (2003), Power to the Edge, CCRP Press, Washington. Available at www.dodccrp.org/files/Alberts_Power.pdf

Alberts, D.S. and Hayes, R.E. (2006), Understanding Command and Control, CCRP Press, Washington. Available online at www.dodccrp.org/files/Alberts_UC2.pdf

Barabási, A.-L. (2002), Linked, Perseus Publishing.

Blanchard, B.S. and Fabrycky, W.J. (1998), Systems Engineering and Analysis, 3rd edition, Prentice Hall.

Brooks, F.P. (1975), The Mythical Man-Month: Essays on Software Engineering, Addison-Wesley.

Checkland, P. (1981), Systems Thinking, Systems Practice, Wiley.

Copleston, F. (1946), A History of Philosophy, Volume 1: Greece and Rome, Continuum Books.

Dekker, A.H. (2007), Using Tree Rewiring to Study Edge Organisations for C2, Proceedings of SimTecT 2007.

Fiadeiro, J.L. (2007), Designing for Software's Social Complexity, Computer 40 (1), January, pp 34–39.

Gosling, J., Joy, B. and Steele, G. (1996), The Java Language Specification, Addison-Wesley.

Heyer, R. (2004), Understanding Soft Operations Research: The methods, their application and its future in the Defence setting, DSTO Report DSTO-GD-0411: www.dsto.defence.gov.au/publications/3451/DSTO-GD-0411.pdf

Honour, E. and Valerdi, R. (2006), Advancing an Ontology for Systems Engineering to Allow Consistent Measurement, 4th Conference on Systems Engineering Research, Los Angeles, April.

Kauffman, S.A. (1995), At Home in the Universe: The Search for the Laws of Self-Organization and Complexity, Oxford University Press.

Leveson, N. (1995), Safeware: System Safety and Computers, Addison-Wesley.

Leveson, N. (2000), Intent Specifications: An Approach to Building Human-Centered Specifications, IEEE Transactions on Software Engineering, 26 (1), January, pp 15–35. At sunnyday.mit.edu/papers/intenttse.pdf

Leveson, N. (2004), A New Accident Model for Engineering Safer Systems, Safety Science, 42 (4), April, pp 237–270. Available at sunnyday.mit.edu/accidents/safetysciencesingle.pdf

Leveson, N., Allen, P. and Storey, M.-A. (2002), The Analysis of a Friendly Fire Accident Using a Systems Model of Accidents, Proc. International Conference of the System Safety Society. Available online at sunnyday.mit.edu/accidents/issc-bl-2.pdf

Nunes, A. and Laursen, T. (2004), Identifying the Factors That Contributed to the Ueberlingen Midair Collision, Proc. 48th Annual Chapter Meeting of the Human Factors and Ergonomics Society, Sept 20–24, New Orleans.

Pressman, R. (1992), Software Engineering: A Practitioner's Approach, 3rd edition, McGraw-Hill.

Reade, C. (1989), Elements of Functional Programming, Addison-Wesley.

Sarkar, P. (2000), A Brief History of Cellular Automata, ACM Computing Surveys, 32 (1), March, pp 80–107.

Solé, R. and Goodwin, B. (2000), Signs of Life: How Complexity Pervades Biology, Basic Books.

Storey, N. (1996), Safety-Critical Computer Systems, Addison-Wesley.

Watts, D. (2003), Six Degrees: The Science of a Connected Age, Vintage.

Wen, L. and Dromey, R.G. (2006), Component Architecture and Scale-Free Networks, Australian Software Engineering Conference (ASWEC) Workshop on Complexity in ICT Systems and Projects.

Wilson, S., Boyd, C., and Smeaton, A. (2007), Beyond Traditional SE: Report on Panel at SETE 2006, SESA Newsletter, No. 42, March, pp 6–20.

Wolfram, S. (2002), A New Kind of Science, Wolfram Media, Champaign, IL.

Wolfram, S., Martin, O., and Odlyzko, A.M. (1984), Algebraic Properties of Cellular Automata, Communications in Mathematical Physics, 93, March, pp 219–258.

Anthony Dekker obtained his PhD in Computer Science from the University of Tasmania in 1991. After working as an academic for five years, he joined DSTO, where his interests include networks, agent-based simulation, complex systems, and organisational structures.


- Espacios ConexosDocument28 pagesEspacios ConexosLuisa Fernanda Monsalve SalamancaNo ratings yet

- Signals and Systems-Chen PDFDocument417 pagesSignals and Systems-Chen PDFMrdemagallanes100% (1)

- Economics of ChaosDocument32 pagesEconomics of ChaosJingyi ZhouNo ratings yet

- Applications of Dynamical SystemsDocument32 pagesApplications of Dynamical SystemsAl VlearNo ratings yet

- Curran Emmett 1969Document74 pagesCurran Emmett 1969Aijaz AhmedNo ratings yet

- Physically Oriented Modeling of Heterogeneous Systems: Peter SchwarzDocument12 pagesPhysically Oriented Modeling of Heterogeneous Systems: Peter SchwarzManasi PattarkineNo ratings yet

- Chaos and Its Computing Paradigm: Dwight KuoDocument3 pagesChaos and Its Computing Paradigm: Dwight KuoRicku LpNo ratings yet

- Unit I: System Definition and ComponentsDocument11 pagesUnit I: System Definition and ComponentsAndrew OnymousNo ratings yet

- Thinking SystemicallyDocument45 pagesThinking SystemicallykrainajackaNo ratings yet

- EE2023 Signals & Systems Notes 2Document11 pagesEE2023 Signals & Systems Notes 2FarwaNo ratings yet

- Analysis of Intelligent System Design by Neuro Adaptive ControlDocument11 pagesAnalysis of Intelligent System Design by Neuro Adaptive ControlIAEME PublicationNo ratings yet

- Models of Linear SystemsDocument42 pagesModels of Linear SystemsFloro BautistaNo ratings yet

- Boussineq PaperDocument28 pagesBoussineq PaperJesús MartínezNo ratings yet

- DCSDocument49 pagesDCSRakesh ShinganeNo ratings yet

- Write Your EssayDocument3 pagesWrite Your EssayLPomelo7No ratings yet

- Godot Engine Game Development in 24 HoursDocument664 pagesGodot Engine Game Development in 24 HoursLPomelo7100% (1)

- I Am Some Random PDFDocument1 pageI Am Some Random PDFLPomelo7No ratings yet

- Artificial Inteligence PDFDocument328 pagesArtificial Inteligence PDFLPomelo7No ratings yet

- RND TestingDocument49 pagesRND TestingLPomelo7No ratings yet

- Anchored Conversations Chatting in The Context of A DocumentDocument8 pagesAnchored Conversations Chatting in The Context of A DocumentLPomelo7No ratings yet

- Dead On Arrival - Survivor Profile Pack: Written & Developed by Aj FergusonDocument11 pagesDead On Arrival - Survivor Profile Pack: Written & Developed by Aj FergusonLPomelo7No ratings yet

- Dungeon Crawl Stone Soup ManualDocument43 pagesDungeon Crawl Stone Soup ManualLPomelo7No ratings yet

- Deluxe RulesDocument6 pagesDeluxe RulesLPomelo7No ratings yet

- Conversions Question 1Document13 pagesConversions Question 1rahul rastogiNo ratings yet

- Major Company Interview QuestionsDocument100 pagesMajor Company Interview Questionsapi-375775980% (5)

- Dynamic Binding in JavaDocument19 pagesDynamic Binding in JavaKISHOREMUTCHARLANo ratings yet

- Angular 2.0 Curriculum PDFDocument4 pagesAngular 2.0 Curriculum PDFAbhinashNo ratings yet

- Oxygene Is A Powerful General Purpose Programming LanguageDocument10 pagesOxygene Is A Powerful General Purpose Programming LanguageAshleeJoycePabloNo ratings yet

- Java PrsolutionsDocument30 pagesJava PrsolutionsvenkatnritNo ratings yet

- Presenti C#Document137 pagesPresenti C#Yacine MecibahNo ratings yet

- JPR 01Document4 pagesJPR 01api-3728136No ratings yet

- Ashraf FouadDocument125 pagesAshraf Fouadamit.thechosen1100% (7)

- Cs101 Final Term Solved McqsDocument48 pagesCs101 Final Term Solved McqsZeeshanKavish67% (6)

- Programming Fundamentals: Lecturer XXXDocument30 pagesProgramming Fundamentals: Lecturer XXXPeterNo ratings yet

- 1z0-808 DumpsDocument199 pages1z0-808 DumpsJagadeesh Bodeboina0% (1)

- Eclipse Junit Testing TutorialDocument10 pagesEclipse Junit Testing TutorialDeependra MandrawalNo ratings yet

- Java ExceptionDocument19 pagesJava ExceptionrajuvathariNo ratings yet

- Vdmtools: Vdmtools User Manual (VDM++)Document30 pagesVdmtools: Vdmtools User Manual (VDM++)maNo ratings yet

- Debug C ApplicationDocument29 pagesDebug C ApplicationGaurav SharmaNo ratings yet

- Unit 3 Test (CH 4 5 6) 11-6-2013 KEYDocument31 pagesUnit 3 Test (CH 4 5 6) 11-6-2013 KEYOsama MaherNo ratings yet

- Test I Model AnswerDocument6 pagesTest I Model AnswerkiraNo ratings yet

- Exception QuestDocument19 pagesException QuestSaurabh SharmaNo ratings yet

- Java NotesDocument129 pagesJava Notesrohini pawarNo ratings yet

- Python Unit 1Document18 pagesPython Unit 1Rtr. Venkata chetan Joint secretaryNo ratings yet

- Exception Handling FundamentalsDocument4 pagesException Handling FundamentalsRamakrishnan SrinivasanNo ratings yet

- NAME:Kshitij Jha SUBJECT:Mathematics INDEX NO.:038Document207 pagesNAME:Kshitij Jha SUBJECT:Mathematics INDEX NO.:038Kshitij JhaNo ratings yet

- Labsheet - 2 - Java - ProgrammingDocument4 pagesLabsheet - 2 - Java - Programmingsarah smithNo ratings yet

- Dlubal Software OverviewDocument84 pagesDlubal Software Overviewbluefish80100% (2)

- Nailing 1Z0-808 Free SampleDocument68 pagesNailing 1Z0-808 Free SamplesergiorochaNo ratings yet

- Exception HandlingDocument33 pagesException HandlingSuvenduNo ratings yet

- 06 JavaDocument93 pages06 JavaSajedah Al-hiloNo ratings yet

- Slide I-App. PGMDocument96 pagesSlide I-App. PGMRishikaa RamNo ratings yet

- Conditionals StatementsDocument4 pagesConditionals StatementsFrancene AlvarezNo ratings yet