Professional Documents

Culture Documents

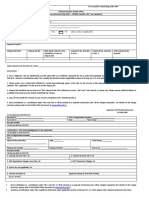

Text mining corpus creation and analysis in R

Uploaded by ratan203

Original title: tmcode text mining

Copyright: © All Rights Reserved

library(tm)

#Create Corpus - CHANGE PATH AS NEEDED

docs <- Corpus(DirSource("C:/Users/<YourPath>/Documents/TextMining"))

#Check details

inspect(docs)

#inspect a particular document

writeLines(as.character(docs[[30]]))

#Start preprocessing

toSpace <- content_transformer(function(x, pattern) gsub(pattern, " ", x))

docs <- tm_map(docs, toSpace, "-")

docs <- tm_map(docs, toSpace, ":")

#Curly quotes are assumed here; straight quotes are handled by removePunctuation below

docs <- tm_map(docs, toSpace, "’")

docs <- tm_map(docs, toSpace, "‘")

docs <- tm_map(docs, toSpace, " -")

#Good practice to check after each step.

writeLines(as.character(docs[[30]]))

#Remove punctuation - removePunctuation deletes the marks (it does not insert a space)

docs <- tm_map(docs, removePunctuation)

#Transform to lower case

docs <- tm_map(docs,content_transformer(tolower))

#Strip digits

docs <- tm_map(docs, removeNumbers)

#Remove stopwords from standard stopword list (How to check this? How to add your own?)

docs <- tm_map(docs, removeWords, stopwords("english"))
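
#One possible answer to the two questions above (a sketch on a toy corpus, not part of the original script)

#stopwords("english") returns a plain character vector, so it can be printed and extended

head(stopwords("english"), 10)

#myStopwords and demoDocs are hypothetical names; replace the example words with your own

myStopwords <- c("also", "say")

demoDocs <- VCorpus(VectorSource("we also say text mining"))

demoDocs <- tm_map(demoDocs, removeWords, c(stopwords("english"), myStopwords))

#the custom words are gone, words outside both lists remain

writeLines(as.character(demoDocs[[1]]))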

#Strip whitespace (cosmetic?)

docs <- tm_map(docs, stripWhitespace)

#inspect output

writeLines(as.character(docs[[30]]))

#Need SnowballC library for stemming

library(SnowballC)

#Stem document

docs <- tm_map(docs,stemDocument)

#some clean up

docs <- tm_map(docs, content_transformer(gsub),

pattern = "organiz", replacement = "organ")

docs <- tm_map(docs, content_transformer(gsub),

pattern = "organis", replacement = "organ")

docs <- tm_map(docs, content_transformer(gsub),

pattern = "andgovern", replacement = "govern")

docs <- tm_map(docs, content_transformer(gsub),

pattern = "inenterpris", replacement = "enterpris")

docs <- tm_map(docs, content_transformer(gsub),

pattern = "team-", replacement = "team")

#inspect

writeLines(as.character(docs[[30]]))

#Create document-term matrix

dtm <- DocumentTermMatrix(docs)

#inspect segment of document term matrix

inspect(dtm[1:2,1000:1005])

#collapse matrix by summing over columns - this gets total counts (over all docs) for each term

freq <- colSums(as.matrix(dtm))

#length should be total number of terms

length(freq)
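
#For a large corpus as.matrix(dtm) may not fit in memory. A possible alternative (a sketch,

#assuming the slam package, which tm imports, is installed) sums the sparse matrix directly

#demoDtm and demoFreq are hypothetical names used only for this toy illustration

demoDtm <- DocumentTermMatrix(VCorpus(VectorSource(c("alpha beta beta", "beta gamma"))))

demoFreq <- slam::col_sums(demoDtm)

#named vector of total counts per term, same values as colSums(as.matrix(demoDtm))

demoFreq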

#create sort order (desc)

ord <- order(freq,decreasing=TRUE)

#inspect most frequently occurring terms

freq[head(ord)]

#inspect least frequently occurring terms

freq[tail(ord)]

#remove very frequent and very rare words: keep terms 4 to 20 characters long

#that appear in at least 3 and at most 27 documents

dtmr <- DocumentTermMatrix(docs, control = list(wordLengths = c(4, 20),

bounds = list(global = c(3, 27))))

freqr <- colSums(as.matrix(dtmr))

#length should be total number of terms

length(freqr)

#create sort order (desc)

ordr <- order(freqr,decreasing=TRUE)

#inspect most frequently occurring terms

freqr[head(ordr)]

#inspect least frequently occurring terms

freqr[tail(ordr)]

#list most frequent terms. Lower bound specified as second argument

findFreqTerms(dtmr,lowfreq=80)

#correlations

findAssocs(dtmr,"project",0.6)

#terms in dtmr are stemmed, so query the stem "enterpris" rather than "enterprise"

findAssocs(dtmr,"enterpris",0.6)

findAssocs(dtmr,"system",0.6)

#histogram

wf <- data.frame(term = names(freqr), occurrences = freqr)

library(ggplot2)

#filter on the data frame's own column rather than the global freqr vector

p <- ggplot(subset(wf, occurrences > 100), aes(term, occurrences))

p <- p + geom_bar(stat="identity")

p <- p + theme(axis.text.x=element_text(angle=45, hjust=1))

p

#wordcloud

library(wordcloud)

#setting the same seed each time ensures consistent look across clouds

set.seed(42)

#limit words by specifying min frequency

wordcloud(names(freqr),freqr, min.freq=70)

#...add color

wordcloud(names(freqr),freqr,min.freq=70,colors=brewer.pal(6,"Dark2"))
