Professional Documents

Culture Documents

Jurnal - Gauss Newton

Uploaded by

Julham Efendi NasutionOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Jurnal - Gauss Newton

Uploaded by

Julham Efendi NasutionCopyright:

Available Formats

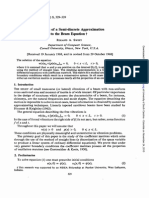

Computers Math. Applic. Vol. 25, No. 1, pp. 53-63, 1993

Printed in Great Britain. All rights reserved

0097-4943/93 $5.00 + 0.00

Copyright © 1992 Pergamon Press Ltd

A QUASI-GAUSS-NEWTON METHOD FOR SOLVING NON-LINEAR ALGEBRAIC EQUATIONS

H. WANG AND R. P. TEWARSON

Department of Applied Mathematics & Statistics, State University of New York

Stony Brook, New York 11794-3600, U.S.A.

(Received December 1991)

Abstract. One of the most popular algorithms for solving systems of nonlinear algebraic equations is the sequencing QR factorization implementation of the quasi-Newton method. We propose a significantly better algorithm and give computational results.

1. INTRODUCTION

Many important practical problems in science and engineering require the solution of systems of

nonlinear algebraic equations. The most widely used algorithm for this purpose is the sequencing QR factorization (SQRF) implementation of the quasi-Newton method [1]. We propose a

new algorithm that reduces the number of arithmetic operations significantly without losing the

advantages of SQRF, e.g., stability and the ability to monitor conditioning.

We consider the problem:

Given $F : \mathbb{R}^n \to \mathbb{R}^n$, find $x_* \in \mathbb{R}^n$ such that $F(x_*) = 0$.

We will now briefly outline Newton's method and then describe, in more detail, Broyden's method.

In the next section, we will show how this has naturally led to the SQRF method. Our method

is an enhancement of the SQRF method.

Newton's method is based on a first-order approximation $L_k$ to $F$. In a neighborhood of $x_k$, which is an estimate of the solution $x_*$,

$$L_k(x) = F(x_k) + J(x_k)(x - x_k)$$

is a good approximation to $F$, where $J(x_k)$ is the Jacobian matrix at $x_k$. Setting the above linear model to zero and solving for the Newton step $s_k$ presumably gives a better approximation $x_{k+1} = x_k + s_k$ to $x_*$. Thus, given an initial guess $x_0$, Newton's method attempts to improve $x_0$ by the iteration

$$x_{k+1} = x_k - J(x_k)^{-1} F(x_k), \qquad k = 0, 1, \ldots$$

The corresponding algorithm is:

1. Compute $F(x_k)$. If $\|F(x_k)\|$ is small, then stop. Otherwise, compute the Jacobian matrix $J(x_k)$.

2. Solve the linear system $J(x_k) s_k = -F(x_k)$ for $s_k$ and set $x_{k+1} = x_k + s_k$.
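The two steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the helper names (`solve_linear`, `fd_jacobian`, `newton`), the forward-difference step `h`, the tolerance, and the small test system `F_example` are all assumptions made for the example.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fd_jacobian(F, x, h=1e-7):
    """Forward-difference Jacobian: one evaluation of F per column, plus F(x)."""
    n = len(x)
    Fx = F(x)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        xh = x[:]
        xh[j] += h
        Fh = F(xh)
        for i in range(n):
            J[i][j] = (Fh[i] - Fx[i]) / h
    return J


def newton(F, x0, tol=1e-10, max_iter=50):
    x = x0[:]
    for _ in range(max_iter):
        Fx = F(x)
        if max(abs(v) for v in Fx) < tol:          # step 1: stop if F is small
            break
        s = solve_linear(fd_jacobian(F, x), [-v for v in Fx])  # step 2
        x = [xi + si for xi, si in zip(x, s)]
    return x


def F_example(x):
    # Hypothetical test system; (1, 2) is one of its roots.
    return [x[0] ** 2 + x[1] ** 2 - 5.0, x[0] * x[1] - 2.0]


root = newton(F_example, [0.8, 2.3])
```

Each call to `fd_jacobian` costs $n + 1$ evaluations of $F$, which is the $n^2 + n$ scalar evaluations counted in the text, and `solve_linear` is the $O(n^3)$ step.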

Newton's method is locally quadratically convergent. However, it has two disadvantages. First, $n^2 + n$ scalar function evaluations are required at each iteration to compute the finite difference approximation to $J(x_k)$ when the analytical Jacobian is not available. This can be prohibitively expensive. Second, $O(n^3)$ arithmetic operations are needed to solve the linear system for the Newton step at each iteration.

Research supported by NIH Grant 2 R01 DK17593.

Broyden's method [2] approximates Jacobian matrices rather than calculating them directly. Broyden derived an approximation $B_k$ to $J(x_k)$ such that the approximation $B_{k+1}$ to $J(x_{k+1})$ may be obtained from $B_k$ in $O(n^2)$ arithmetic operations per iteration while evaluating $F$ only at $x_k$ and $x_{k+1}$. To see the motivation for this method, consider a small neighbourhood around $x_{k+1}$. We have

$$F(x) \approx F(x_{k+1}) + J(x_{k+1})(x - x_{k+1}).$$

If $B_{k+1}$ is our next approximation to $J(x_{k+1})$, it seems reasonable that $B_{k+1}$ satisfy the equation

$$F(x_k) = F(x_{k+1}) + B_{k+1}(x_k - x_{k+1}).$$

If $s_k = x_{k+1} - x_k$, then we get the so-called quasi-Newton condition

$$B_{k+1} s_k = y_k = F(x_{k+1}) - F(x_k). \tag{1}$$

Except for the case $n = 1$, $B_{k+1}$ is not uniquely determined. Suppose we had an approximation $B_k$ to $J(x_k)$. Broyden reasoned that there is really no justification for having $B_{k+1}$ differ from $B_k$ on the orthogonal complement of $s_k$, since the only information about $F$ has been gained in the direction determined by $s_k$. This leads to the requirement

$$B_{k+1} z = B_k z, \quad \text{if } \langle z, s_k \rangle = 0. \tag{2}$$

Clearly, (1) and (2) uniquely determine $B_{k+1}$ from $B_k$ and

$$B_{k+1} = B_k + \frac{(y_k - B_k s_k) s_k^T}{s_k^T s_k}. \tag{3}$$

In fact, Broyden's update is the minimum change to $B_k$ subject to the quasi-Newton condition (see, e.g., [3]), and is called the least change secant approximation to the Jacobian. There are many other secant updates (see [4,5]).

This leads to the following iterative method of Broyden:

Given $x_0 \in \mathbb{R}^n$ and $B_0 \in \mathbb{R}^{n \times n}$, for $k = 0, 1, \ldots$:

Solve $B_k s_k = -F(x_k)$ for $s_k$. (4)

Then set

$$x_{k+1} = x_k + s_k, \qquad y_k = F(x_{k+1}) - F(x_k), \qquad B_{k+1} = B_k + \frac{(y_k - B_k s_k) s_k^T}{s_k^T s_k}.$$
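The iteration above can be sketched as follows. This is a minimal local version under stated assumptions: the dense solver, the stopping test on $\max_i |F_i|$, the test system `F_example`, and the choice of $B_0$ as the exact Jacobian at $x_0$ are illustrative and not taken from the paper.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def broyden(F, x0, B0, tol=1e-10, max_iter=100):
    n = len(x0)
    x = x0[:]
    B = [row[:] for row in B0]
    Fx = F(x)
    for _ in range(max_iter):
        if max(abs(v) for v in Fx) < tol:
            break
        s = solve_linear(B, [-v for v in Fx])          # equation (4)
        x = [xi + si for xi, si in zip(x, s)]
        F_new = F(x)                                   # the only new evaluation of F
        y = [fn - fo for fn, fo in zip(F_new, Fx)]
        Bs = [sum(B[i][j] * s[j] for j in range(n)) for i in range(n)]
        ss = sum(sj * sj for sj in s)
        for i in range(n):                             # rank-one update (3)
            for j in range(n):
                B[i][j] += (y[i] - Bs[i]) * s[j] / ss
        Fx = F_new
    return x


def F_example(x):
    # Hypothetical test system; (1, 2) is one of its roots.
    return [x[0] ** 2 + x[1] ** 2 - 5.0, x[0] * x[1] - 2.0]


x0 = [0.8, 2.3]
B0 = [[2 * x0[0], 2 * x0[1]], [x0[1], x0[0]]]          # exact Jacobian at x0
root = broyden(F_example, x0, B0)
```

Note that this sketch still solves (4) from scratch with an $O(n^3)$ elimination; the factorization updates discussed in the text are what remove that cost.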

It is clear that only $n$ scalar function evaluations are needed per iteration. But to implement Broyden's method, one has to solve the linear system (4) at each iteration. Noticing that $B_{k+1}$ is a rank-one modification of $B_k$, it is natural to ask whether there is a way to avoid the $O(n^3)$ operations needed to solve (4). The answer is yes. Originally, the Sherman-Morrison-Woodbury formula was used to get the inverse of a matrix after a rank-one modification:

$$(A + u v^T)^{-1} = A^{-1} - \frac{1}{\sigma} A^{-1} u v^T A^{-1}, \tag{5}$$

provided that $A$ is nonsingular and $\sigma = 1 + v^T A^{-1} u \neq 0$, where $u, v \in \mathbb{R}^n$.

Straightforward application of (5) gives

$$B_{k+1}^{-1} = B_k^{-1} + \frac{(s_k - B_k^{-1} y_k) s_k^T B_k^{-1}}{s_k^T B_k^{-1} y_k}, \tag{6}$$

which requires $O(n^2)$ operations, provided that $s_k^T B_k^{-1} y_k \neq 0$. The Broyden step $-B_k^{-1} F(x_k)$ can then be computed by a matrix-vector multiplication, requiring an additional $O(n^2)$ operations. Although this approach works well in most cases, it has the troublesome feature that ill-conditioning in $B_k$ is hard to detect.
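As a small check of update (6), the sketch below applies it to a hand-picked $2 \times 2$ example and compares the result with the inverse of the Broyden-updated matrix computed by hand. The function names and the numerical values are assumptions chosen for illustration.

```python
def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def vecmat(x, A):
    """Row vector x^T A."""
    return [sum(x[i] * A[i][j] for i in range(len(x))) for j in range(len(A[0]))]


def broyden_inverse_update(Binv, s, y):
    """Return B_{k+1}^{-1} from B_k^{-1} using formula (6), in O(n^2) operations."""
    Binv_y = matvec(Binv, y)
    sT_Binv = vecmat(s, Binv)
    denom = sum(si * v for si, v in zip(s, Binv_y))    # s^T B^{-1} y
    if denom == 0.0:
        raise ZeroDivisionError("s^T B^{-1} y = 0: formula (6) does not apply")
    n = len(s)
    return [[Binv[i][j] + (s[i] - Binv_y[i]) * sT_Binv[j] / denom
             for j in range(n)] for i in range(n)]


# B = [[2, 1], [1, 3]] has inverse (1/5) [[3, -1], [-1, 2]].
Binv = [[0.6, -0.2], [-0.2, 0.4]]
s = [1.0, 0.0]
y = [3.0, 1.0]
# Broyden's update (3) gives B_new = [[3, 1], [1, 3]], whose inverse is
# (1/8) [[3, -1], [-1, 3]].
Binv_new = broyden_inverse_update(Binv, s, y)
expected = [[0.375, -0.125], [-0.125, 0.375]]
```

Nothing in this update exposes the conditioning of $B_k$, which is the drawback noted in the text.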

In the next section, we describe the so-called QR factorization updating techniques due to Gill and Murray [6], which naturally lead to the SQRF method. We also give two Cholesky factorization updating algorithms that are needed for the proposed method in Section 3.


2. UPDATING MATRIX FACTORIZATIONS AND SQRF ALGORITHM

Suppose we have the factorization $QR = B \in \mathbb{R}^{n \times n}$ and want to compute the factorization

$$B + u v^T = Q_1 R_1,$$

where $u, v \in \mathbb{R}^n$ are given. Notice that

$$B + u v^T = Q(R + w v^T),$$

where $w = Q^T u$. First, $n - 1$ Givens rotations $J_k = J(k, k+1, \theta_k)$ for $k = n-1, \ldots, 1$ are applied such that

$$J_1^T \cdots J_{n-1}^T w = \alpha e_1, \quad \text{where } \alpha = \pm \|w\|_2.$$

The effect of these Givens rotations on $R$ is that

$$H = J_1^T \cdots J_{n-1}^T R$$

is upper Hessenberg. Consequently,

$$(J_1^T \cdots J_{n-1}^T)(R + w v^T) = H + \alpha e_1 v^T = H_1$$

is another upper Hessenberg matrix. Now, $n - 1$ Givens rotations $G_k = J(k, k+1, \phi_k)$ for $k = 1, \ldots, n-1$ can be used to make

$$G_{n-1}^T \cdots G_1^T H_1 = R_1$$

upper triangular. Combining the above four equations, we obtain the factorization

$$B + u v^T = Q_1 R_1,$$

where

$$Q_1 = Q J_{n-1} \cdots J_1 G_1 \cdots G_{n-1}. \tag{7}$$

The entire QR update process requires $13n^2$ flops (see [7] for the definition of a flop). The most expensive part is the accumulation of $Q J_{n-1} \cdots J_1 G_1 \cdots G_{n-1}$ into $Q_1$. The proposed algorithm in the next section does not require the accumulation of the $Q$'s.
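The two sweeps of Givens rotations described above can be sketched as follows. This is an illustrative dense implementation, not the Minpack routines: the function names, the storage of $Q$ by columns, and the example data are assumptions. The first sweep always produces $\alpha = +\|w\|_2$ here.

```python
import math


def givens(f, g):
    """Return (c, s) such that [[c, s], [-s, c]] maps (f, g) to (r, 0)."""
    r = math.hypot(f, g)
    return (1.0, 0.0) if r == 0.0 else (f / r, g / r)


def rotate(a, b, c, s):
    """Apply the plane rotation [[c, s], [-s, c]] to the pair of vectors (a, b)."""
    return ([c * x + s * y for x, y in zip(a, b)],
            [-s * x + c * y for x, y in zip(a, b)])


def qr_rank_one_update(Q, R, u, v):
    """Return (Q1, R1) with Q1 R1 = Q R + u v^T, R1 upper triangular."""
    n = len(u)
    Qt = [list(col) for col in zip(*Q)]        # rows of Qt are the columns of Q
    R = [row[:] for row in R]
    w = [sum(q * ui for q, ui in zip(col, u)) for col in Qt]   # w = Q^T u
    # Sweep 1: reduce w to alpha * e_1; R becomes upper Hessenberg.
    for k in range(n - 2, -1, -1):
        c, s = givens(w[k], w[k + 1])
        w[k], w[k + 1] = c * w[k] + s * w[k + 1], 0.0
        R[k], R[k + 1] = rotate(R[k], R[k + 1], c, s)
        Qt[k], Qt[k + 1] = rotate(Qt[k], Qt[k + 1], c, s)
    # H_1 = H + alpha e_1 v^T only changes the first row.
    R[0] = [r + w[0] * vj for r, vj in zip(R[0], v)]
    # Sweep 2: remove the subdiagonal of H_1.
    for k in range(n - 1):
        c, s = givens(R[k][k], R[k + 1][k])
        R[k], R[k + 1] = rotate(R[k], R[k + 1], c, s)
        R[k + 1][k] = 0.0
        Qt[k], Qt[k + 1] = rotate(Qt[k], Qt[k + 1], c, s)
    Q1 = [list(row) for row in zip(*Qt)]
    return Q1, R


Q0 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
R0 = [[2.0, 1.0, 0.0], [0.0, 3.0, 1.0], [0.0, 0.0, 4.0]]
u, v = [1.0, 2.0, 3.0], [1.0, 0.0, 1.0]
Q1, R1 = qr_rank_one_update(Q0, R0, u, v)
```

The lines that rotate `Qt` are the accumulation of $Q_1$ in (7); they are the part of the work that the method proposed in Section 3 avoids.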

Application of the above technique leads to the currently popular implementation of Broyden's method (the basic form of the quasi-Newton method without global strategies):

ALGORITHM 1. (Local quasi-Newton with SQRF algorithm).

1. Given $x_0 \in \mathbb{R}^n$ and $B_0 \in \mathbb{R}^{n \times n}$, calculate the QR decomposition $Q_0 R_0 = B_0$.

2. For $k = 0, 1, \ldots$

$$s_k = -R_k^{-1} Q_k^T F(x_k),$$

$$x_{k+1} = x_k + s_k,$$

$$y_k = F(x_{k+1}) - F(x_k).$$

Determine the QR factorization

$$Q_{k+1} R_{k+1} = Q_k R_k + \frac{(y_k - B_k s_k) s_k^T}{s_k^T s_k}.$$

This algorithm is fast and stable. A total of $14.5n^2$ arithmetic operations are associated with each iteration (excluding function evaluations). In a prudent algorithm, the condition number of $R_k$ should always be estimated before solving for $s_k$. This adds another $2.5n^2$ flops and therefore makes the total $17n^2$ flops.

There are other algorithms based on alternative QR factorization updating schemes (e.g., see [8]) which are a bit more economical than the preceding approach. For example, Moré et al.'s Minpack [1980] usually requires $11.5n^2$ flops per function call. A method of updating the QR factorization with $8n^2$ flops was reported by Gill et al. [9].

To our knowledge, whichever matrix factorization updating scheme is used, one thing is common to all the known quasi-Newton methods for solving nonlinear algebraic equations: $Q$ has to be formed explicitly at every iteration in order to solve

$$B_k s_k = Q_k R_k s_k = -F(x_k).$$

Since our goal is to avoid accumulating $Q$, at this point (for comparison) it is instructive to take a look at the quasi-Newton method for unconstrained minimization.

ASSUMPTION. $f : \mathbb{R}^n \to \mathbb{R}$ is twice differentiable and a positive definite secant approximation $H_k$ to $\nabla^2 f(x_k)$ at $x_k$ is available.

Then, the best Hessian update currently known is the BFGS update

$$H_{k+1} = H_k + \frac{y_k y_k^T}{y_k^T s_k} - \frac{H_k s_k s_k^T H_k}{s_k^T H_k s_k}, \quad \text{where } y_k^T s_k > 0, \tag{8}$$

which has the property of hereditary symmetry and positive definiteness.

The Newton step $s^N$ is obtained by solving

$$H_k s^N = -\nabla f(x_k).$$

It can be shown that if $L_k L_k^T$ is the Cholesky factorization of $H_k$, then

$$H_{k+1} = J_{k+1} J_{k+1}^T,$$

where

$$J_{k+1} = L_k + \frac{(y_k - L_k v_k) v_k^T}{v_k^T v_k}, \qquad v_k = \left( \frac{y_k^T s_k}{s_k^T H_k s_k} \right)^{1/2} L_k^T s_k.$$

Now, employing the QR factorization updating introduced at the beginning of this section, we can get the QR factorization $Q_{k+1} L_{k+1}^T$ of $J_{k+1}^T$ with $O(n^2)$ operations. Thus

$$H_{k+1} = J_{k+1} J_{k+1}^T = L_{k+1} Q_{k+1}^T Q_{k+1} L_{k+1}^T = L_{k+1} L_{k+1}^T,$$

and it is not necessary to accumulate $Q_{k+1}$.

We observe that it is the symmetric positive definiteness that ensures $H_{k+1} = J_{k+1} J_{k+1}^T$, where $J_{k+1}$ is a rank-one modification of $L_k$, and that the subsequent QR factorization updating of $J_{k+1}$ yields the Cholesky decomposition of $H_{k+1}$ without accumulating $Q_{k+1}$. In solving nonlinear equations, the properties of hereditary symmetry and positive definiteness do not exist.

As an alternative to the QR factorization approach, Gill et al. [9] proposed a cheaper algorithm which gets the Cholesky factorization $L_{k+1} D_{k+1} L_{k+1}^T$ of $H_{k+1}$ directly from $L_k D_k L_k^T$ for $H_k$ in only $2.5n^2$ flops.

Since the efficiency of our new method depends on these two algorithms, we briefly describe them now. Suppose $z \in \mathbb{R}^n$, $\alpha \in \mathbb{R}$, $B$ and $\bar{B}$ are symmetric positive definite, and

$$\bar{B} = B + \alpha z z^T.$$

Assuming that the Cholesky factors of $B$ are known, viz. $B = L D L^T$, we wish to determine the factors

$$\bar{B} = \bar{L} \bar{D} \bar{L}^T.$$

Observe that

$$\bar{B} = L(D + \alpha p p^T) L^T,$$

where $L p = z$ and $p$ is determined from $z$ by a forward substitution. If we form the factors

$$D + \alpha p p^T = \tilde{L} \tilde{D} \tilde{L}^T,$$

then

$$\bar{B} = L \tilde{L} \tilde{D} \tilde{L}^T L^T,$$

giving

$$\bar{L} = L \tilde{L} \quad \text{and} \quad \bar{D} = \tilde{D}.$$

Gill et al. [9] observed that the elements below the diagonal of the unit lower triangular matrix $\tilde{L}$ have the special form

$$\tilde{l}_{ij} = p_i \beta_j, \quad j < i.$$

The quantities $\tilde{D}$ and $\beta_1, \ldots, \beta_{n-1}$ can be determined from $D$, $\alpha$ and $p$ in $O(n)$ multiplications. By exploiting the special form, $L \tilde{L} = \bar{L}$ can be calculated in $n^2 + O(n)$ multiplications.

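ALGORITHM 2 [9].

If $z$ is a column vector, $\alpha$ is a positive number, and $L$ and $D$ are, respectively, unit lower triangular and positive diagonal matrices, then

$$\bar{L} \bar{D} \bar{L}^T = L D L^T + \alpha z z^T, \tag{9}$$

where the elements of the unit lower triangular $\bar{L}$ and the diagonal $\bar{D}$ can be calculated from the following recurrence relations:

1. Define $\alpha_1 = \alpha$ and $w^{(1)} = z$.

2. For $j = 1, 2, \ldots, n$, compute

$$p_j = w_j^{(j)}, \qquad \bar{d}_j = d_j + \alpha_j p_j^2, \qquad \beta_j = \frac{p_j \alpha_j}{\bar{d}_j}, \qquad \alpha_{j+1} = \frac{d_j \alpha_j}{\bar{d}_j},$$

and, for $r = j+1, \ldots, n$, compute

$$w_r^{(j+1)} = w_r^{(j)} - p_j l_{rj} \quad \text{and} \quad \bar{l}_{rj} = l_{rj} + \beta_j w_r^{(j+1)}.$$

The number of operations necessary to compute the modified factorization using the above algorithm is $n^2 + O(n)$ multiplications and $n^2 + O(n)$ additions.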
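The recurrences of Algorithm 2 translate almost line for line into code. The sketch below is an illustration under assumptions: the function names, the list-of-lists storage, and the example data are not from the paper, and `ldl_product` exists only to check the result.

```python
def ldl_rank_one_update(L, d, alpha, z):
    """Factors of L diag(d) L^T + alpha z z^T, for alpha > 0 (Algorithm 2)."""
    n = len(d)
    L = [row[:] for row in L]
    d = d[:]
    w = z[:]
    a = alpha                              # alpha_j
    for j in range(n):
        p = w[j]                           # p_j = w_j^{(j)}
        d_new = d[j] + a * p * p           # new diagonal entry
        beta = p * a / d_new               # beta_j
        a = d[j] * a / d_new               # alpha_{j+1}
        d[j] = d_new
        for r in range(j + 1, n):
            w[r] -= p * L[r][j]            # w_r^{(j+1)}
            L[r][j] += beta * w[r]         # new l_{rj}
    return L, d


def ldl_product(L, d):
    """Form L diag(d) L^T explicitly (for checking only)."""
    n = len(d)
    return [[sum(L[i][k] * d[k] * L[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]


L0 = [[1.0, 0.0, 0.0], [0.5, 1.0, 0.0], [0.25, 0.5, 1.0]]
d0 = [4.0, 3.0, 2.0]
z = [1.0, 2.0, 3.0]
alpha = 0.5
L1, d1 = ldl_rank_one_update(L0, d0, alpha, z)
```

The inner loop over `r` performs two multiplications per entry of the strictly lower triangle, which is where the $n^2 + O(n)$ count comes from.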

When $\alpha < 0$, much care needs to be taken. If $\alpha < 0$ and $\bar{B}$ is nearly singular, it is possible that rounding error could cause the diagonal elements $\bar{d}_j$ to become zero, arbitrarily small, or change sign, even when the modification may be known from theoretical analysis to give a positive definite factorization. Therefore, for $\alpha < 0$, Gill et al. [9] suggest the following algorithm, which ensures that the method will always yield a positive definite factorization even under extreme circumstances. The method requires $1.5n^2$ multiplications and $n + 1$ square roots.

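ALGORITHM 3 [9].

If the same conditions hold as in Algorithm 2, except that $\alpha < 0$, then

$$\bar{L} \bar{D} \bar{L}^T = L D L^T + \alpha z z^T, \tag{10}$$

where the elements of the unit lower triangular $\bar{L}$ and the diagonal $\bar{D}$ can be calculated from the following recurrence relations:

1. Solve $L p = z$.

2. Define

$$w^{(1)} = z, \qquad s_1 = \sum_{i=1}^{n} q_i \ \text{ with } q_i = \frac{p_i^2}{d_i}, \qquad \alpha_1 = \alpha, \qquad \sigma_1 = \frac{\alpha}{1 + (1 + \alpha s_1)^{1/2}}.$$

3. For $j = 1, 2, \ldots, n$, compute

$$q_j = \frac{p_j^2}{d_j}, \qquad \theta_j = 1 + \sigma_j q_j, \qquad s_{j+1} = s_j - q_j, \qquad \rho_j^2 = \theta_j^2 + \sigma_j^2 q_j s_{j+1},$$

$$\bar{d}_j = \rho_j^2 d_j, \qquad \beta_j = \frac{\alpha_j p_j}{\bar{d}_j}, \qquad \alpha_{j+1} = \frac{\alpha_j}{\rho_j^2}, \qquad \sigma_{j+1} = \frac{\sigma_j (1 + \rho_j)}{\rho_j (\theta_j + \rho_j)}.$$

For $r = j+1, j+2, \ldots, n$,

$$w_r^{(j+1)} = w_r^{(j)} - p_j l_{rj}, \qquad \bar{l}_{rj} = l_{rj} + \beta_j w_r^{(j+1)}.$$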
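A sketch of Algorithm 3 for the case $\alpha < 0$ follows. It assumes the square-root recurrence of Gill et al. [9] in the form $\rho_j^2 = \theta_j^2 + \sigma_j^2 q_j s_{j+1}$, $\bar{d}_j = \rho_j^2 d_j$; the function names and the example data are illustrative. The example is chosen so that $1 + \alpha\, z^T B^{-1} z > 0$, i.e. the modified matrix is still positive definite.

```python
import math


def ldl_rank_one_downdate(L, d, alpha, z):
    """Factors of L diag(d) L^T + alpha z z^T for alpha < 0 (Algorithm 3)."""
    n = len(d)
    L = [row[:] for row in L]
    d = d[:]
    # Step 1: forward substitution L p = z (L is unit lower triangular).
    p = z[:]
    for i in range(n):
        for j in range(i):
            p[i] -= L[i][j] * p[j]
    # Step 2: starting values.
    w = z[:]
    s = sum(pi * pi / di for pi, di in zip(p, d))
    a = alpha
    sigma = alpha / (1.0 + math.sqrt(1.0 + alpha * s))
    # Step 3: recurrences.
    for j in range(n):
        q = p[j] * p[j] / d[j]
        theta = 1.0 + sigma * q
        s -= q
        rho2 = theta * theta + sigma * sigma * q * s   # a sum of squares
        rho = math.sqrt(rho2)
        d_new = rho2 * d[j]
        beta = a * p[j] / d_new
        a = a / rho2
        sigma = sigma * (1.0 + rho) / (rho * (theta + rho))
        d[j] = d_new
        for r in range(j + 1, n):
            w[r] -= p[j] * L[r][j]
            L[r][j] += beta * w[r]
    return L, d


def ldl_product(L, d):
    """Form L diag(d) L^T explicitly (for checking only)."""
    n = len(d)
    return [[sum(L[i][k] * d[k] * L[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]


L0 = [[1.0, 0.0, 0.0], [0.5, 1.0, 0.0], [0.25, 0.5, 1.0]]
d0 = [4.0, 3.0, 2.0]
z = [1.0, 1.0, 1.0]
alpha = -0.5
L1, d1 = ldl_rank_one_downdate(L0, d0, alpha, z)
```

Because each new diagonal entry is $\rho_j^2 d_j$, it keeps the sign of $d_j$ as long as $s_{j+1}$ does not become significantly negative through rounding, which is the safeguard the text refers to.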

3. PROPOSED ALGORITHM

The problem of solving nonlinear equations

given $F : \mathbb{R}^n \to \mathbb{R}^n$, find $x_* \in \mathbb{R}^n$ such that $F(x_*) = 0$

is closely related to the following nonlinear least squares problem

$$\min_x f(x) = \tfrac{1}{2} F(x)^T F(x), \tag{11}$$

since $x_*$ solves (11).

The classical Gauss-Newton method locally approximates $F(x)$ by a linear function

$$F(x_k + p) \approx F(x_k) + J(x_k) p. \tag{12}$$

The iteration step $p$ from the current point is obtained by solving

$$\min_{p \in \mathbb{R}^n} \|F + J p\|, \tag{13}$$

which is equivalent to modelling the change in the nonlinear least squares objective $\tfrac{1}{2} F(x)^T F(x)$ by the quadratic function

$$g^T p + \tfrac{1}{2} p^T J^T J p, \tag{14}$$

where

$$g = \nabla \left( \tfrac{1}{2} F(x)^T F(x) \right) = J^T F. \tag{15}$$

The Gauss-Newton step $p^{GN}$ minimizes the quadratic function (14) and, therefore, satisfies the equation

$$J^T J p = -g, \tag{16}$$

which is also the normal equation for the linear least squares problem (13). $p^{GN}$ is identical to the Newton step $s^N$ for the original nonlinear equations problem when $J$ is nonsingular. In the above equations, if we replace the Jacobian matrix $J$ by its least change secant approximation $B$, then (16) becomes

$$B^T B p = -B^T F. \tag{17}$$

This formulation (quasi-Gauss-Newton) has the advantage of avoiding $Q$ when solving for the Newton step, since $B = QR$ leads to

$$R^T R p = -B^T F. \tag{18}$$

$Q$ does not appear in the above equation. We will show that if $B_{k+1}$ is a rank-one modification of $B_k$, then $B_{k+1}^T B_{k+1}$ is a symmetric rank-two modification of $B_k^T B_k$.


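LEMMA 1. If $\bar{B} = B + u v^T$, where $u, v \in \mathbb{R}^n$, $B \in \mathbb{R}^{n \times n}$, $\bar{u} = \bar{B}^T u$, $\tau = u^T u$, $z_1 = [\bar{u} + (1 - \tau/2) v]/\sqrt{2}$ and $z_2 = [\bar{u} - (1 + \tau/2) v]/\sqrt{2}$, then

$$\bar{B}^T \bar{B} = B^T B + z_1 z_1^T - z_2 z_2^T. \tag{19}$$

PROOF.

$$\bar{B}^T \bar{B} = (B + u v^T)^T (B + u v^T) = B^T B + B^T u v^T + v u^T B + v u^T u v^T = B^T B + B^T u v^T + (B^T u v^T)^T + \tau v v^T.$$

But

$$B^T u v^T = (\bar{B} - u v^T)^T u v^T = \bar{B}^T u v^T - v u^T u v^T = \bar{u} v^T - \tau v v^T.$$

Thus,

$$\bar{B}^T \bar{B} = B^T B + \bar{u} v^T - \tau v v^T + v \bar{u}^T - \tau v v^T + \tau v v^T = B^T B + \bar{u} v^T + v \bar{u}^T - \tau v v^T. \tag{20}$$

Now,

$$z_1 z_1^T - z_2 z_2^T = \tfrac{1}{2} \left[ \bar{u} \bar{u}^T + \left(1 - \tfrac{\tau}{2}\right)^2 v v^T + \left(1 - \tfrac{\tau}{2}\right) (\bar{u} v^T + v \bar{u}^T) \right] - \tfrac{1}{2} \left[ \bar{u} \bar{u}^T + \left(1 + \tfrac{\tau}{2}\right)^2 v v^T - \left(1 + \tfrac{\tau}{2}\right) (\bar{u} v^T + v \bar{u}^T) \right] = \bar{u} v^T + v \bar{u}^T - \tau v v^T, \tag{21}$$

and (19) follows from (20) and (21).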
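The identity (19) is easy to confirm numerically. The sketch below builds $z_1$ and $z_2$ as defined in Lemma 1 for an arbitrary small example; the function names and the data are assumptions chosen for illustration.

```python
import math


def gram(M):
    """Return M^T M for a square matrix stored as a list of rows."""
    n = len(M)
    return [[sum(M[k][i] * M[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def rank_two_vectors(B, u, v):
    """z1, z2 of Lemma 1 for B_bar = B + u v^T."""
    n = len(u)
    tau = sum(x * x for x in u)
    # u_bar = B_bar^T u = B^T u + tau * v
    u_bar = [sum(B[i][j] * u[i] for i in range(n)) + tau * v[j] for j in range(n)]
    r2 = math.sqrt(2.0)
    z1 = [(ub + (1.0 - tau / 2.0) * vj) / r2 for ub, vj in zip(u_bar, v)]
    z2 = [(ub - (1.0 + tau / 2.0) * vj) / r2 for ub, vj in zip(u_bar, v)]
    return z1, z2


B = [[2.0, 1.0, 0.0], [0.0, 3.0, 1.0], [1.0, 0.0, 4.0]]
u = [1.0, 2.0, 3.0]
v = [0.5, -1.0, 2.0]
n = 3

B_bar = [[B[i][j] + u[i] * v[j] for j in range(n)] for i in range(n)]
z1, z2 = rank_two_vectors(B, u, v)
lhs = gram(B_bar)                                     # B_bar^T B_bar
BtB = gram(B)
rhs = [[BtB[i][j] + z1[i] * z1[j] - z2[i] * z2[j] for j in range(n)]
       for i in range(n)]
max_diff = max(abs(lhs[i][j] - rhs[i][j]) for i in range(n) for j in range(n))
```

Since $u v^T = (c u)(v / c)^T$ for any $c \neq 0$, the pair $(u, v)$ is not unique, and the sizes of $z_1$ and $z_2$ depend on the scaling chosen; balancing the two factors keeps the rank-two correction from involving large cancelling terms.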

Therefore, if we have the Cholesky factors $L$, $D$ for $B^T B$, this lemma shows that $\bar{L}$, $\bar{D}$ for $\bar{B}^T \bar{B}$ can be obtained in $2.5n^2$ flops using the two algorithms in Section 2. Also notice that if $B = QR$, then $D = \mathrm{diag}(r_{11}^2, \ldots, r_{nn}^2)$ and $L = R^T D^{-1/2}$. Our implementation of the basic quasi-Newton method is:

ALGORITHM 4. (Local quasi-Gauss-Newton algorithm).

1. Given $x_0 \in \mathbb{R}^n$ and $B_0 \in \mathbb{R}^{n \times n}$, calculate the QR decomposition $Q_0 R_0 = B_0$ and define

$$D_0 = \mathrm{diag}(r_{11}^2, \ldots, r_{nn}^2), \qquad L_0 = R_0^T D_0^{-1/2}.$$

2. For $k = 0, 1, \ldots$

Solve $L_k D_k L_k^T s_k = -B_k^T F(x_k)$ for $s_k$.

$$x_{k+1} = x_k + s_k,$$

$$y_k = F(x_{k+1}) - F(x_k).$$

Set

$$B_{k+1} = B_k + \frac{(y_k - B_k s_k) s_k^T}{s_k^T s_k}$$

and get $L_{k+1}$, $D_{k+1}$.
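A compact sketch of Algorithm 4 is given below, with several simplifications that are assumptions of this example rather than the authors' implementation: $L_0$, $D_0$ are obtained by factoring $B_0^T B_0$ directly instead of from a QR decomposition; both rank-one terms of Lemma 1 are applied with the plain recurrence of Algorithm 2 (a careful implementation would use Algorithm 3 for the $-z_2 z_2^T$ term); and the rank-one update is split as $u = (y_k - B_k s_k)/\|s_k\|$, $v = s_k/\|s_k\|$. The test system and starting data are hypothetical.

```python
import math


def ldl_factor(A):
    """Unit lower triangular L and diagonal d with A = L diag(d) L^T."""
    n = len(A)
    L = [[float(i == j) for j in range(n)] for i in range(n)]
    d = [0.0] * n
    for j in range(n):
        d[j] = A[j][j] - sum(L[j][k] ** 2 * d[k] for k in range(j))
        for i in range(j + 1, n):
            L[i][j] = (A[i][j] - sum(L[i][k] * L[j][k] * d[k] for k in range(j))) / d[j]
    return L, d


def ldl_solve(L, d, b):
    """Solve L diag(d) L^T x = b."""
    n = len(b)
    x = b[:]
    for i in range(n):                                   # L y = b
        x[i] -= sum(L[i][j] * x[j] for j in range(i))
    x = [xi / di for xi, di in zip(x, d)]                # D z = y
    for i in reversed(range(n)):                         # L^T x = z
        x[i] -= sum(L[j][i] * x[j] for j in range(i + 1, n))
    return x


def ldl_update(L, d, alpha, z):
    """In-place update of L, d to the factors of L D L^T + alpha z z^T."""
    n = len(d)
    w, a = z[:], alpha
    for j in range(n):
        p = w[j]
        d_new = d[j] + a * p * p
        beta, a = p * a / d_new, d[j] * a / d_new
        d[j] = d_new
        for r in range(j + 1, n):
            w[r] -= p * L[r][j]
            L[r][j] += beta * w[r]


def qgn_local(F, x0, B0, tol=1e-10, max_iter=100):
    n = len(x0)
    x = x0[:]
    B = [row[:] for row in B0]
    BtB = [[sum(B[k][i] * B[k][j] for k in range(n)) for j in range(n)]
           for i in range(n)]
    L, d = ldl_factor(BtB)
    Fx = F(x)
    for _ in range(max_iter):
        if max(abs(f) for f in Fx) < tol:
            break
        g = [sum(B[i][j] * Fx[i] for i in range(n)) for j in range(n)]   # B^T F
        s = ldl_solve(L, d, [-gj for gj in g])
        x = [xi + si for xi, si in zip(x, s)]
        F_new = F(x)
        y = [fn - fo for fn, fo in zip(F_new, Fx)]
        Bs = [sum(B[i][j] * s[j] for j in range(n)) for i in range(n)]
        norm_s = math.sqrt(sum(sj * sj for sj in s))
        u = [(yi - bi) / norm_s for yi, bi in zip(y, Bs)]
        v = [sj / norm_s for sj in s]
        for i in range(n):                               # B_{k+1} = B_k + u v^T
            for j in range(n):
                B[i][j] += u[i] * v[j]
        tau = sum(ui * ui for ui in u)
        u_bar = [sum(B[i][j] * u[i] for i in range(n)) for j in range(n)]  # B_{k+1}^T u
        r2 = math.sqrt(2.0)
        z1 = [(ub + (1.0 - tau / 2.0) * vj) / r2 for ub, vj in zip(u_bar, v)]
        z2 = [(ub - (1.0 + tau / 2.0) * vj) / r2 for ub, vj in zip(u_bar, v)]
        ldl_update(L, d, 1.0, z1)                        # + z1 z1^T
        ldl_update(L, d, -1.0, z2)                       # - z2 z2^T
        Fx = F_new
    return x


def F_example(x):
    # Hypothetical test system; (1, 2) is one of its roots.
    return [x[0] ** 2 + x[1] ** 2 - 5.0, x[0] * x[1] - 2.0]


root = qgn_local(F_example, [0.8, 2.3], [[1.6, 4.6], [2.3, 0.8]])
```

No orthogonal factor appears anywhere in the loop: each iteration consists of matrix-vector products with $B_k$, two triangular solves, and two rank-one factor updates, all $O(n^2)$.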

We emphasize here that $B_{k+1}$ may be any rank-one update of $B_k$, not necessarily the Broyden update.

An operation count reveals that the initial iteration needs $2n^3/3 + O(n^2)$ flops, roughly the same as the SQRF update approach in Section 2. However, in subsequent iterations, it requires only $5.5n^2 + O(n)$ flops, compared with $14.5n^2 + O(n)$ for its QR counterpart. Thus, more than 50% of arithmetic operations are saved. Since the linear system (18) is already in a factored form, its condition number can be reliably estimated in $2.5n^2$ flops using an algorithm given by Cline, Moler, Stewart and Wilkinson [10]. Therefore, even if a conditioning estimate feature is added to the above algorithm, our total cost now, $8n^2$, still leads to an improvement of over 50%.
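As a quick check of the quoted savings, using only the leading-order flop counts stated in the text (lower-order terms dropped):

```latex
\frac{14.5\,n^2 - 5.5\,n^2}{14.5\,n^2} = \frac{9}{14.5} \approx 0.62,
\qquad
\frac{17\,n^2 - 8\,n^2}{17\,n^2} = \frac{9}{17} \approx 0.53 .
```

So the per-iteration saving is roughly 62% without condition estimation and roughly 53% with it, where $8n^2 = 5.5n^2 + 2.5n^2$ and $17n^2 = 14.5n^2 + 2.5n^2$.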

At first glance, it appears that there is a trade-off in terms of the condition number, since the condition number of the linear system (18) is the square of that in (4). However, this is not so, for the following reasons:

- For a well-posed problem of finding a root of a system of nonlinear equations, ill-conditioning usually does not occur near the solution, because (12) is a reasonably good approximation to the original nonlinear problem. Therefore, ill-conditioning in the linear system would indicate that the nonlinear problem is itself very sensitive to small changes in its data: the underlying problem is not well posed.

- Equation (17) is a positive definite symmetric system as long as $B_k$ is nonsingular. Wilkinson [11] has shown that Cholesky decomposition of such a system is completely stable and its backward error analysis is much more favorable than that of any other decomposition (including QR).

- Even if ill-conditioning does occur, it can (almost) always be detected and proper measures taken to alleviate it. For example, if $L D^{1/2}$ is singular or its estimated condition number is greater than $\epsilon^{-1/2}$, where $\epsilon$ is the machine epsilon, then, following Dennis and Schnabel [1], we perturb the quadratic model of the trust region to

$$m_k(x_k + s_k) = \tfrac{1}{2} F(x_k)^T F(x_k) + (B_k^T F(x_k))^T s_k + \tfrac{1}{2} s_k^T H_k s_k,$$

where

$$H_k = B_k^T B_k + (n \epsilon)^{1/2} \|B_k^T B_k\|_1 I.$$

Using the singular value decomposition, it is not difficult to show that the condition number of $H_k$ is about $\epsilon^{-1/2}$.

- If ill-conditioning is a consistent feature of the underlying problem, we can always switch to the SQRF updating method to avoid potential loss of accuracy due to squared conditioning. In fact, for really badly conditioned problems even the QR approach cannot help much.

- Generally, right before termination, the Jacobian matrix is recomputed with finite differences, which leads to a Newton step. Therefore, any possible loss of accuracy due to squared conditioning will be remedied by this Newton step.
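The claim about the condition number of the perturbed matrix $H_k$ can be made explicit with a short eigenvalue argument. This is a sketch, writing $A = B_k^T B_k$ with eigenvalues $\lambda_1 \ge \cdots \ge \lambda_n \ge 0$ and $\mu = (n\epsilon)^{1/2} \|A\|_1$ (the symbols $A$, $\lambda_i$ and $\mu$ are introduced here only for the derivation):

```latex
H_k = A + \mu I
\;\Longrightarrow\;
\kappa_2(H_k) = \frac{\lambda_1 + \mu}{\lambda_n + \mu}
\;\le\; 1 + \frac{\lambda_1}{\mu}
\;=\; 1 + \frac{\|A\|_2}{(n\epsilon)^{1/2}\,\|A\|_1}
\;\le\; 1 + (n\epsilon)^{-1/2},
```

where the last step uses $\|A\|_2 \le \|A\|_1$ for symmetric $A$. In the worst case $\lambda_n = 0$, and since $\|A\|_1 \le \sqrt{n}\,\|A\|_2$, the bound is attained up to a factor depending only on $n$, so $\kappa_2(H_k)$ is of order $\epsilon^{-1/2}$.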

The computational results show that ill-conditioning is not a problem.

Table 1. SQRF method versus proposed method.

Prob. No. | SQRF No. It. | SQRF Time (sec.) | QGN No. It. | QGN Time (sec.) | Improvement
21        |              | 4.73             |             | 2.66            | 78%
22        |              | 6.20             |             | 3.45            | 80%
26        |              | 4.21             |             | 2.43            | 73%
27, 28, 29 (entries in source order): Divergence, 2, Divergence, 4.21, 2.41, No convergence, 3.62, 75%
30        | 120          | 43.47            | 126         | 22.14           | 96%
31(1)     | 14           | 9.24             | 14          | 5.78            | 60%
31(2)     | 18           | 10.41            | 18          | 6.41            | 62%
31(3)     | 28           | 13.47            | 28          | 8.10            | 66%
31(4)     | 30           | 14.05            | 30          | 8.41            | 67%
31(5)     | 36           | 15.87            | 36          | 9.43            | 68%
31(6)     | 57           | 22.23            | 55          | 12.59           | 77%
31(7)     | 101          | 35.48            | 139         | 26.42           | 34%


Computational Results

The results of computational experiments are given in Table 1. We used $n = 100$ and ftol = 10-e. All test problems are taken from [12]. For easy reference, we use their order for problem numbers. Please note that Problem 31 has been modified as follows: the original constant coefficients 2, 5, 1 are changed to three parameters $w_1$, $w_2$, $w_3$, respectively. By varying $w_1$, $w_2$, $w_3$, additional problems with progressively worse scaling are generated. Since our main concern is with the efficiency of updating matrix factorizations rather than any particular global strategy, comparing local algorithms (no global strategies employed) suffices. Comparative results are given for Algorithm 4 (local quasi-Gauss-Newton (QGN)) and Algorithm 1 (local quasi-Newton with SQRF). The basic subroutines qrfac, qform, r1updt and r1mpyq for SQRF are taken from HYBRD of Minpack. Therefore, except for subroutine dogleg, Algorithm 1 is almost a local version of HYBRD. The initial points and Jacobians (calculated by finite differences) are the same for SQRF and QGN; the only difference is in the subsequent updates of the matrix factorizations.

The superior overall performance of QGN is obvious from Table 1. Notice that the SQRF approach failed for two problems, while QGN succeeded in getting a solution for one of them. The reason for this is not clear. Our preceding justification for QGN (for example, the more favorable backward error analysis) may play a part. The computations were done in double precision on a Sparc 2 using Fortran.
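The text does not define the "Improvement" column of Table 1. The figures appear to be consistent with the relative time saving measured against the QGN time, $(t_{SQRF} - t_{QGN})/t_{QGN}$; the sketch below checks that reading against every pair of times that is legible in the table. The formula is an inference from the numbers, not a definition given by the authors.

```python
# (SQRF time, QGN time, reported improvement in %) for every table entry
# where both times are legible.
rows = [
    (4.73, 2.66, 78),
    (6.20, 3.45, 80),
    (4.21, 2.43, 73),
    (4.21, 2.41, 75),
    (43.47, 22.14, 96),
    (9.24, 5.78, 60),
    (10.41, 6.41, 62),
    (13.47, 8.10, 66),
    (14.05, 8.41, 67),
    (15.87, 9.43, 68),
    (22.23, 12.59, 77),
    (35.48, 26.42, 34),
]


def improvement(t_sqrf, t_qgn):
    """Assumed definition: extra SQRF time as a percentage of the QGN time."""
    return 100.0 * (t_sqrf - t_qgn) / t_qgn


computed = [round(improvement(t_sqrf, t_qgn)) for t_sqrf, t_qgn, _ in rows]
reported = [pct for _, _, pct in rows]
```

Under this reading, an improvement of 96% means SQRF took almost twice as long as QGN on that problem.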

Global Algorithm

Global methods for solving nonlinear equations are generally based on applying global strategies, such as linesearch or trust region approaches, to the quadratic model in (14). The following algorithm incorporates our fast matrix factorization updating technique into the double dogleg strategy employed by Dennis and Schnabel [1].

ALGORITHM 5. (Quasi-Gauss-Newton employing double dogleg strategy).

1. Given $x_0$, calculate $F_0 = F(x_0)$, $B_0 = J(x_0)$, $g_0 = B_0^T F_0$ and the QR decomposition of $B_0$. Set $D_0 = \mathrm{diag}(r_{11}^2, \ldots, r_{nn}^2)$ and $L_0 = R_0^T D_0^{-1/2}$.

Iteration:

2. Compute a quadratic model for the trust region algorithm. Determine the Newton step $s_k^N = -(L_k D_k L_k^T)^{-1} g_k$, or a variation if $B_k$ is singular or ill-conditioned.

(a) If this is a restart, calculate the QR decomposition of $B_k$, and determine the Cholesky factorization $L_k D_k L_k^T = B_k^T B_k$ accordingly.

(b) Estimate the $l_1$ condition number of $L_k D_k^{1/2}$.

(c) If $L_k D_k^{1/2}$ is singular or ill-conditioned, set $H_k = L_k D_k L_k^T + \sqrt{n \epsilon} \, \|L_k D_k L_k^T\|_1 I$, calculate the Cholesky factorization of $H_k$, set $s_k^N \leftarrow -H_k^{-1} g_k$, where $g_k = B_k^T F_k$, and terminate Step 2.

(d) If $L_k D_k^{1/2}$ is well-conditioned, set $s_k^N \leftarrow -(L_k D_k L_k^T)^{-1} g_k$. Terminate Step 2.

3. Perform the dogleg minor iteration, possibly repeatedly. Find a step $s_k$ on the double dogleg curve such that $\|s_k\| = \delta_k$ and a satisfactory reduction in $f(x) = \tfrac{1}{2} F(x)^T F(x)$ is achieved. Start with the input trust region radius $\delta_k$, but increase or decrease it if necessary. Also, produce the trust radius $\delta_{k+1}$ for the next iteration.

4. If $B_k$ is being calculated by secant approximations and the algorithm has bogged down, reset $B_k$ to $J(x_k)$ using finite differences, recalculate $g_k$ and go back to Step 2.

5. $B_{k+1} \leftarrow B_k + (y_k - B_k s_k) s_k^T / s_k^T s_k$, $g_{k+1} = B_{k+1}^T F_{k+1}$; apply Lemma 1 and Algorithms 2 and 3 to determine the Cholesky factorization $L_{k+1} D_{k+1} L_{k+1}^T = B_{k+1}^T B_{k+1}$.

6. If the termination criterion has been satisfied, stop the algorithm and return the corresponding termination message. Otherwise, set $x_k \leftarrow x_{k+1}$, $F_k \leftarrow F_{k+1}$, $g_k \leftarrow g_{k+1}$, $H_k \leftarrow H_{k+1}$, $s_k^N \leftarrow s_{k+1}^N$, $L_k \leftarrow L_{k+1}$, $D_k \leftarrow D_{k+1}$, $\delta_k \leftarrow \delta_{k+1}$, and return to Step 2.

REMARKS.

For convergence of global algorithms, we refer to [13-15].

For clarity and simplicity, scaling of variables is not included in the above algorithm. However, any implementation of the algorithm should embed scaling in it, because more efficiency is usually gained. Within the framework of the algorithm, there may be some variations:

(1) Instead of determining $L_0$, $D_0$ from the QR decomposition of $B_0$, we may calculate $L_0$, $D_0$ directly using Gill and Murray's [16] modified Cholesky factorization algorithm. This results in $L_0 D_0 L_0^T = B_0^T B_0 + D$, where $D$ is a diagonal matrix with nonnegative diagonal elements that are zero if $B_0^T B_0$ is sufficiently positive definite, with a prescribed upper bound on its condition number. The advantage is guaranteed positive definiteness for the quadratic trust region model right at the first iteration.

(2) For Step 3, a linesearch strategy can be applied rather than the dogleg.

(3) In Step 5, Broyden's update is not the only choice. Rather, other rank-one modifications may be used. For example, to avoid singularity in $B_{k+1}$, Powell [17] suggests an update of the form

$$B_{k+1} = B_k + \theta_k \frac{(y_k - B_k s_k) s_k^T}{s_k^T s_k},$$

where $\theta_k$ is chosen such that $|\det B_{k+1}| \ge \alpha |\det B_k|$ for some $\alpha$ in $(0, 1)$; Powell uses $\alpha = 0.1$. For more details on choosing $\theta_k$, see Moré and Trangenstein [18].

(4) A switch mechanism can be installed in the algorithm such that when ill-conditioning is a consistent feature of the underlying problem it will automatically switch to SQRF.
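For variation (3), the determinant condition can be checked cheaply. By the matrix determinant lemma, the damped update satisfies $\det B_{k+1} = \det B_k \, [(1 - \theta_k) + \theta_k \gamma_k]$ with $\gamma_k = s_k^T B_k^{-1} y_k / s_k^T s_k$. The sketch below uses one common rule for $\theta_k$ that is usually attributed to Powell: take $\theta_k = 1$ when $|\gamma_k|$ is at least the bound, and otherwise choose $\theta_k$ so that the bracket equals $\pm$ the bound. The function names, the symbol `bound` for the constant in $(0, 1)$, and the exact form of the rule are assumptions of this example; see [18] for the authoritative treatment.

```python
def det_ratio(theta, gamma):
    """det(B_{k+1}) / det(B_k) for the damped update, via the determinant lemma."""
    return (1.0 - theta) + theta * gamma


def powell_theta(gamma, bound=0.1):
    """Damping factor theta_k such that |det B_{k+1}| >= bound * |det B_k|."""
    if abs(gamma) >= bound:
        return 1.0                          # the plain Broyden update is safe
    sign = 1.0 if gamma >= 0.0 else -1.0
    return (1.0 - sign * bound) / (1.0 - gamma)


gammas = [0.5, 0.05, 0.0, -0.05, -2.0]
ratios = [det_ratio(powell_theta(g), g) for g in gammas]
```

With $\theta_k = 1$ the update reduces to Broyden's update (3); the damping only takes effect when that update would bring $B_{k+1}$ close to singularity.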

Concluding Remarks

A quasi-Gauss-Newton type of method for solving systems of nonlinear algebraic equations is

proposed. By avoiding accumulating Q during the process of updating matrix factorizations, at

each iteration, the required number of arithmetic operations is reduced to about half the amount

necessary in the currently popular algorithms. Meanwhile, the desirable properties of stability

and monitoring of conditioning are preserved. Computational results clearly show the superiority

of our local algorithm over the popular SQRF.

REFERENCES

1. J.E. Dennis and R.B. Schnabel, Numerical Methods for Unconstrained Optimization and Nonlinear Equations, Prentice-Hall, Inc., Englewood Cliffs, New Jersey, (1983).

2. C.G. Broyden, A class of methods for solving nonlinear simultaneous equations, Math. Comp. 19, 577-593 (1965).

3. J.E. Dennis and J.J. Moré, Quasi-Newton methods, motivation and theory, SIAM Review 19, 46-89 (1977).

4. D.M. Gay and R.B. Schnabel, Solving systems of nonlinear equations by Broyden's method with projected updates, In Nonlinear Programming 3, (Edited by O. Mangasarian, R. Meyer and S. Robinson), pp. 245-281, Academic Press, New York, (1978).

5. R.P. Tewarson, A unified derivation of quasi-Newton methods for solving non-sparse and sparse nonlinear equations, Computing 21, 113-125 (1979).

6. P.E. Gill and W. Murray, Quasi-Newton methods for unconstrained optimization, J.I.M.A. 9, 91-108 (1972).

7. G.H. Golub and C.F. van Loan, Matrix Computations, Johns Hopkins Press, Baltimore, MD, (1987).

8. P.E. Gill, W. Murray and M.H. Wright, Numerical Linear Algebra and Optimization, Addison-Wesley Publishing Company, Redwood City, CA, (1991).

9. P.E. Gill, G.H. Golub, W. Murray and M.A. Saunders, Methods for modifying matrix factorizations, Math. Comp. 28, 505-535 (1974).

10. A.K. Cline, C.B. Moler, G.W. Stewart and J.H. Wilkinson, An estimate for the condition number of a matrix, SIAM J. Numer. Anal. 16, 368-375 (1979).

11. J.H. Wilkinson, A priori error analysis of algebraic processes, In Proc. International Congress Math., pp. 629-639, Izdat. Mir., Moscow, (1968).

12. J.J. Moré, B.S. Garbow and K.E. Hillstrom, Testing unconstrained optimization software, ACM Trans. Math. Software 7, 17-45 (1981).

13. M.J.D. Powell, Convergence properties of a class of minimization algorithms, In Nonlinear Programming 2, (Edited by O. Mangasarian, R. Meyer and S. Robinson), Academic Press, New York, (1975).

14. J.J. Moré and D.C. Sorensen, Computing a trust region step, SIAM J. Sci. Stat. Comput. 4, 553-572 (1983).

15. G.A. Shultz, R.B. Schnabel and R.H. Byrd, A family of trust-region-based algorithms for unconstrained minimization with strong global convergence properties, SIAM J. Numer. Anal. 22, 47-67 (1985).

16. P.E. Gill, W. Murray and M.H. Wright, Practical Optimization, Academic Press, London, (1981).

17. M.J.D. Powell, A hybrid method for nonlinear equations, In Numerical Methods for Nonlinear Algebraic Equations, (Edited by P. Rabinowitz), pp. 87-114, Gordon and Breach, London, (1970).

18. J.J. Moré and J.A. Trangenstein, On global convergence of Broyden's method, Math. Comp. 30, 523-540 (1976).

19. C.G. Broyden, J.E. Dennis and J.J. Moré, On the local and superlinear convergence of quasi-Newton methods, J.I.M.A. 12, 223-246 (1973).

20. J.E. Dennis and J.J. Moré, Characterization of superlinear convergence and its application to quasi-Newton methods, Math. Comp. 28, 549-560 (1974).

21. R. Fletcher, Practical Methods of Optimization, John Wiley and Sons, New York, (1980).

22. K.L. Hiebert, An evaluation of mathematical software that solves systems of nonlinear equations, ACM Trans. Math. Software 8, 5-20 (1982).

23. J.J. Stewart, The effects of rounding error on an algorithm for downdating a Cholesky factorization, J.I.M.A. 23, 203-213 (1979).

24. R.P. Tewarson, Use of smoothing and damping techniques in the solution of nonlinear equations, SIAM Rev. 19, 35-45 (1977).

25. J.H. Wilkinson, The Algebraic Eigenvalue Problem, Oxford University Press, London, (1965).


- The Finite-Difference Time-Domain (FDTD) Method - Part Iii: Numerical Techniques in ElectromagneticsDocument24 pagesThe Finite-Difference Time-Domain (FDTD) Method - Part Iii: Numerical Techniques in ElectromagneticsChinh Mai TienNo ratings yet

- Theorems of Sylvester and SchurDocument19 pagesTheorems of Sylvester and Schuranon020202No ratings yet

- 6 LQ, LQG, H, H Control System Design: 6.1 LQ: Linear Systems With Quadratic Performance Cri-TeriaDocument22 pages6 LQ, LQG, H, H Control System Design: 6.1 LQ: Linear Systems With Quadratic Performance Cri-TeriaAli AzanNo ratings yet

- Potts Ste I DLT AscheDocument15 pagesPotts Ste I DLT AscheistarhirNo ratings yet

- H2-Optimal Control - Lec8Document83 pagesH2-Optimal Control - Lec8stara123warNo ratings yet

- Erik Løw and Ragnar WintherDocument22 pagesErik Løw and Ragnar WintherLuis ValdezNo ratings yet

- Script 1011 WaveDocument10 pagesScript 1011 Wavetanvir04104No ratings yet

- HocsDocument5 pagesHocsyygorakindyyNo ratings yet

- On The Semilocal Convergence of A Fast Two-Step Newton MethodDocument10 pagesOn The Semilocal Convergence of A Fast Two-Step Newton MethodJose Miguel GomezNo ratings yet

- 1 General Solution To Wave Equation: One Dimensional WavesDocument35 pages1 General Solution To Wave Equation: One Dimensional WaveswenceslaoflorezNo ratings yet

- Ee263 Ps1 SolDocument11 pagesEe263 Ps1 SolMorokot AngelaNo ratings yet

- The DC Power Flow EquationsDocument22 pagesThe DC Power Flow EquationsPeter JumreNo ratings yet

- 1982-Date-Jimbo-Kashiwara-Miwa-Quasi-periodic Solutions of The Orthogonal KP-Publ - RIMSDocument10 pages1982-Date-Jimbo-Kashiwara-Miwa-Quasi-periodic Solutions of The Orthogonal KP-Publ - RIMSmm.mm.mabcmNo ratings yet

- N×P 2 2 2 HS TDocument5 pagesN×P 2 2 2 HS Thuevonomar05No ratings yet

- Advanced Problems and Solutions: Raymond E. WhitneyDocument6 pagesAdvanced Problems and Solutions: Raymond E. WhitneyCharlie PinedoNo ratings yet

- Táboas, P. (1990) - Periodic Solutions of A Planar Delay Equation.Document17 pagesTáboas, P. (1990) - Periodic Solutions of A Planar Delay Equation.Nolbert Yonel Morales TineoNo ratings yet

- Barlett MethodDocument15 pagesBarlett Methodking khanNo ratings yet

- Orthogonal Laurent PolynomialsDocument20 pagesOrthogonal Laurent PolynomialsLeonardo BossiNo ratings yet

- Complex Arithmetic Through CordicDocument11 pagesComplex Arithmetic Through CordicZhi ChenNo ratings yet

- 1 s2.0 S0898122100003400 MainDocument14 pages1 s2.0 S0898122100003400 Mainwaciy70505No ratings yet

- Unscented KF Using Agumeted State in The Presence of Additive NoiseDocument4 pagesUnscented KF Using Agumeted State in The Presence of Additive NoiseJang-Seong ParkNo ratings yet

- The M/M/1 Queue Is Bernoulli: Michael Keane and Neil O'ConnellDocument6 pagesThe M/M/1 Queue Is Bernoulli: Michael Keane and Neil O'Connellsai420No ratings yet

- 1 IEOR 4700: Notes On Brownian Motion: 1.1 Normal DistributionDocument11 pages1 IEOR 4700: Notes On Brownian Motion: 1.1 Normal DistributionshrutigarodiaNo ratings yet

- Hintermüller M. Semismooth Newton Methods and ApplicationsDocument72 pagesHintermüller M. Semismooth Newton Methods and ApplicationsパプリカNo ratings yet

- Approximation of Fractional CapacitorsDocument4 pagesApproximation of Fractional CapacitorsVigneshRamakrishnanNo ratings yet

- Chió's Trick For Linear Equations With Integer CoefficientsDocument3 pagesChió's Trick For Linear Equations With Integer CoefficientsNatkhatKhoopdiNo ratings yet

- The Runge-Kutta MethodDocument9 pagesThe Runge-Kutta MethodSlavka JadlovskaNo ratings yet

- New Results in The Calculation of Modulation ProductsDocument16 pagesNew Results in The Calculation of Modulation ProductsRia AlexNo ratings yet

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet
