Improveandinnovate - Thoughts and Ideas On Quality, Improvement and Innovation

http://improveandinnovate.wordpress.com/?goback=.gde_3151110_memb...

improveandinnovate

Thoughts and ideas on Quality, Improvement and Innovation

CSSBB Tutorial Series : Lesson 9

(http://improveandinnovate.wordpress.com/2014/07/18/cssbb-tutorial-serieslesson-9/)

July 18, 2014 | July 19, 2014 | Lean Six Sigma

Lesson 9 : Measure Phase Part 5 of 5

Topics Covered :

Process Capability Analysis

o Process Capability Indices: Cp / Cpk / Pp / Ppk

o Application to non-normal & attribute data

o Six Sigma Metrics: DPMO / PPM / RTY

Process Capability Analysis

The only man who behaved sensibly was my tailor: he took my measure anew every time he saw me, whilst all the rest went on with their old measurements and expected them to fit me.

George Bernard Shaw

Process Capability Analysis

Process Capability refers to the ability of a process to meet the customer's specifications. Depending on the process and the quality characteristic of interest, several methods are available for computing process capabilities.

Process Capabilities are generally expressed in terms of unitless numbers called Process Capability Indices or Process Performance Indices. These are ratios of the tolerance (i.e. the customer's specifications) to the process spread.

Key requirements for computing Process Capability are:

The process should be stable, i.e. the process operates within the Upper Control Limit and the Lower Control Limit. This means the corresponding control chart should be studied to assess process stability. (Will be discussed in a later lesson on Control Charts.)

The data follows a normal or near-normal distribution. If the data is not normal, the process capabilities can still be computed, but only after transforming the data.

Process Capability Indices ( Cp , Cpk)

Figure 32 (a and b) shows two processes with the same set of customer specifications (Lower Specification Limit, LSL, and Upper Specification Limit, USL). Which process has a better capability of meeting the customer's requirements?

(https://improveandinnovate.files.wordpress.com/2014/07/slide232.jpg)

Quite obviously, the process in Fig. 32(a). Part of the process in Fig. 32(b) is outside the customer's specification limits and hence will produce more non-conforming product than the process in Fig. 32(a). The only information one cannot obtain from the above figures is how much non-conforming product each process will produce. Such information can be obtained with the help of process capability analysis.

Process Capability Indices are helpful when comparing two processes. For example, vendor evaluation and rating can be done using Process Capability Indices.

Another advantage is that the Process Capability Indices provide a universal language that can be used to communicate process performance across industries & processes.

(UCL − LCL) is called the Process Width and is given by: UCL − LCL = 6σ. Hence,

Cp = (USL − LSL) / (UCL − LCL) = (USL − LSL) / 6σ

The concept of Cp is explained in Fig. 33.

(https://improveandinnovate.files.wordpress.com/2014/07/slide201.jpg)

ii) Cpk: The assumption while computing Cp using the earlier formula is that the process mean is centered between the customer's specification limits, which may not always be true. Fig. 34 shows two processes with the same Cp but with different capabilities. In such cases, the Cp fails to provide a correct estimate of the process performance.

(https://improveandinnovate.files.wordpress.com/2014/07/slide192.jpg)

Hence, we use a second process performance index called Cpk, which is given by:

Cpk = min[(USL − μ) / 3σ, (μ − LSL) / 3σ]

where μ is the process mean and σ is the process standard deviation.

(https://improveandinnovate.files.wordpress.com/2014/07/slide172.jpg)

Note: Cp vs. Cpk: While the Cp is a good indicator of the potential of the process to perform, the Cpk is a realistic measure of the ability of the process to meet the customer's specifications.

Typical Values of Cp & Cpk:

Cp = Cpk implies the process mean is centered between the specification limits (i.e. equal to the customer's target).

Cpk = 1 implies 99.73% of the process is within the customer's specification limits (refer Fig. 17 under the normal distribution curve). This means the process is just capable. This is also called a 3 sigma level process.

Cpk = 1.33 implies a 4 sigma level process. Quite often, customers specify this as the minimum requirement for their vendors.

Cpk = 1.67 implies a 5 sigma level process.

Cpk = 2 implies a 6 sigma level process.

Note: Refer to Six Sigma Metrics in the following sections for details on Process Sigma Level.

Example: A filling machine in a bottling process is expected to fill an average of 300 ml. Specifications for this process are:

LSL = 295 ml, USL = 305 ml

A sample of 72 bottles (24 subgroups of size n = 3) was taken from the process, and the control chart indicated the process was stable, with Lower Control Limit (LCL) = 298 and Upper Control Limit (UCL) = 306, and a mean of 302 ml. Compute Cp & Cpk for this process.

Solution:

Cp = (USL − LSL) / (UCL − LCL)

= (305 − 295) / (306 − 298)

= 10/8 = 1.25

(https://improveandinnovate.files.wordpress.com/2014/07/slide162.jpg)

Note: UCL − LCL = 6σ = 8; hence, 3σ = 4.

Cpk = min[(USL − μ) / 3σ, (μ − LSL) / 3σ] = min[(305 − 302) / 4, (302 − 295) / 4] = min[0.75, 1.75] = 0.75

The Cp indicates the process is capable. However, since the process is not centered, the Cpk is not equal to the Cp. The actual capability of the process is much lower than the Cp suggests!
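The arithmetic of this example can be checked with a few lines of Python. This is only a minimal sketch: the function name `cp_cpk` is made up for illustration, and sigma is taken from the text's simplification UCL − LCL = 6σ.

```python
def cp_cpk(lsl, usl, mean, sigma):
    """Return (Cp, Cpk) for a stable, roughly normal process."""
    cp = (usl - lsl) / (6 * sigma)
    # Cpk uses the distance from the mean to the closest specification limit.
    cpk = min(usl - mean, mean - lsl) / (3 * sigma)
    return cp, cpk


# Values from the bottling example above.
lcl, ucl = 298.0, 306.0
sigma = (ucl - lcl) / 6  # assumption taken from the text: UCL - LCL = 6 * sigma
cp, cpk = cp_cpk(lsl=295.0, usl=305.0, mean=302.0, sigma=sigma)
print(f"Cp = {cp:.2f}, Cpk = {cpk:.2f}")
```

With the example's numbers this gives Cp = 1.25 and Cpk = 0.75, which shows how far an off-center mean pulls the Cpk below the Cp.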

Cp vs. Cpk: The following set of figures (Fig. 36) shows how the Cp and Cpk are related.

(https://improveandinnovate.files.wordpress.com/2014/07/slide152.jpg)

Process Performance Indices ( Pp , Ppk, Cpm)

The Cp & Cpk discussed earlier are called short-term capability indices. This is because the estimates of variation (or standard deviation) are based on short-term samples. The short-term standard deviation used for Cp / Cpk calculations is estimated by:

σst = R(bar) / d2, where

R(bar) is the average range of subgroups in the X(bar)-R control chart and d2 is a constant that depends on the subgroup size (refer to X(bar)-R Control Charts in subsequent lessons).
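As a rough illustration of the R(bar)/d2 estimate, the sketch below computes the short-term standard deviation from a handful of subgroups. The subgroup readings are invented for the example, and the d2 values are the usual control chart constants for subgroup sizes 2 to 5.

```python
# d2 constants for subgroup sizes 2..5, as listed in standard control chart tables.
D2 = {2: 1.128, 3: 1.693, 4: 2.059, 5: 2.326}


def short_term_sigma(subgroups):
    """Estimate the short-term sigma as R(bar) / d2 from equal-sized subgroups."""
    n = len(subgroups[0])
    if any(len(group) != n for group in subgroups):
        raise ValueError("all subgroups must have the same size")
    ranges = [max(group) - min(group) for group in subgroups]
    r_bar = sum(ranges) / len(ranges)
    return r_bar / D2[n]


# Hypothetical fill volumes (ml), four subgroups of size 3.
subgroups = [
    [301.2, 302.5, 303.0],
    [300.8, 302.1, 301.9],
    [302.4, 303.3, 301.1],
    [301.5, 302.0, 303.6],
]
sigma_st = short_term_sigma(subgroups)
print(f"short-term sigma = {sigma_st:.3f}")
```

Only the within-subgroup ranges enter the estimate, which is why it reflects short-term variation and ignores drift between subgroups.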

On the other hand, the Process Performance Indices (Pp & Ppk) use the overall (long-term) standard deviation, computed by the formula:

σlt = √[ Σ (xi − x̄)² / (n − 1) ]

(https://improveandinnovate.files.wordpress.com/2014/07/slide142.jpg)

The Process Performance Indices (Pp, Ppk) were introduced by the Automotive Industry Action Group (AIAG).

The formulae for Pp and Ppk are very similar to those of Cp & Cpk, except that the standard deviation is computed using a different method, as discussed above. Hence,

Pp = (USL − LSL) / 6σlt

Ppk = min[(USL − μ) / 3σlt, (μ − LSL) / 3σlt]

where σlt is the overall (long-term) standard deviation.

Note: It is a common practice to report Pp and Ppk for processes that are not in control. Many experts consider the use of Pp and Ppk unnecessary, as it is meaningless to estimate the capability of a process that is not stable.
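A minimal sketch of the Pp / Ppk calculation, assuming the overall standard deviation is the ordinary sample standard deviation of all readings pooled together. The readings and the function name `pp_ppk` are hypothetical; the specification limits are borrowed from the bottling example.

```python
import statistics


def pp_ppk(data, lsl, usl):
    """Return (Pp, Ppk) using the overall (long-term) sample standard deviation."""
    mean = statistics.fmean(data)
    sigma_lt = statistics.stdev(data)  # sample standard deviation, n - 1 in the denominator
    pp = (usl - lsl) / (6 * sigma_lt)
    ppk = min(usl - mean, mean - lsl) / (3 * sigma_lt)
    return pp, ppk


# Hypothetical fill volumes (ml), all readings pooled regardless of subgroup.
data = [301.2, 302.5, 303.0, 300.8, 302.1, 301.9,
        302.4, 303.3, 301.1, 301.5, 302.0, 303.6]
pp, ppk = pp_ppk(data, lsl=295.0, usl=305.0)
print(f"Pp = {pp:.2f}, Ppk = {ppk:.2f}")
```

Because all readings are pooled, any shift between subgroups inflates the standard deviation, so Pp and Ppk are usually no larger than Cp and Cpk for the same data.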

Cpm: The Cpm is another important Capability Ratio, and is given by:

Cpm = (USL − LSL) / (6 √(σ² + (μ − T)²))

where T is the customer's target value.

Process Capability for Non Normal Data

As discussed earlier, the formulae for the process capability indices assume that the data comes from a normally distributed process. If one were to compute the capability of a process assuming normality when the data is actually not normal, the results could be in error.

Hence, it is important to first assess if the data is normally distributed. This can be done with methods such as probability plotting or, more easily, by hypothesis testing methods such as the Anderson-Darling test.

If the data is not normally distributed, one can try:

o Transforming the data to obtain a normal distribution, or

o Fitting other known distributions to the data, such as Exponential, Weibull, Lognormal etc. This can be done with standard statistical software packages such as MINITAB.

o If no known distribution fits the data, one should work with non-parametric methods, and the above capability indices will not be valid.

The Box Cox Transformation Method

One approach to making non-normal data resemble normal data is to use a transformation. Among the many methods available for transforming non-normal data to normal, the Box-Cox is one of the most popular. All transformation methods use transformation functions that convert a non-normal distribution to a normal distribution. The Box-Cox transformation function is defined as

Yt = Y^λ

where Yt is the transformed value of Y (the response variable) and λ is the transformation parameter. For λ = 0, the natural log of the data is taken instead of using the above formula. For example:

For λ = −1, the transformed value of Y will be 1/Y,

For λ = 2, the transformed value of Y will be Y²,

For λ = 1/2, the transformed value of Y will be √Y,

For λ = 0, the transformed value of Y will be ln(Y).
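The power transformation described above can be sketched in Python as follows. It follows the simple Y^λ form used in this lesson; many textbooks and libraries use the scaled form (Y^λ − 1)/λ instead, which rescales the values without changing the shape of the transformed distribution. The function name is made up for illustration.

```python
import math


def box_cox_simple(values, lam):
    """Apply the simple power form of the Box-Cox transformation.

    Uses ln(y) when lam is 0 and y ** lam otherwise, as described in the text.
    """
    if any(v <= 0 for v in values):
        raise ValueError("Box-Cox requires strictly positive data")
    if lam == 0:
        return [math.log(v) for v in values]
    return [v ** lam for v in values]


# Hypothetical readings chosen so the results are easy to check by hand.
readings = [1.0, 4.0, 9.0]
print(box_cox_simple(readings, 0.5))  # square roots
print(box_cox_simple(readings, -1))   # reciprocals
print(box_cox_simple(readings, 0))    # natural logs
```

Choosing λ itself is a separate step, usually done by software that searches for the value making the transformed data look most normal.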

Example: The following table (Fig. 37) shows data on the time taken to resolve customer complaints (TAT in hrs.). An Anderson-Darling Test using MINITAB indicates that the data is not normally distributed. Refer Fig. 38: Minitab Output. The p-value for the test is 0.032 (< α of 0.05).

(https://improveandinnovate.files.wordpress.com/2014/07/slide113.jpg)

Note:

i) 10 data points may not be a sufficiently large sample to establish normality.

ii) The concept of hypothesis testing and p-value is discussed in detail in subsequent lessons: The Analyze Phase.

The data was transformed using the Box-Cox Transformation method. Figure 39 is the Minitab Output for the Box-Cox plot. It shows the most likely value of λ, the transformation parameter. In this case, the value of λ = 0. This means the original data can be transformed using ln(y) as the transformation function. The transformed data is shown in the table in Figure 40.

A normality test of the transformed data, using the Anderson-Darling Test, indicates that the transformed data can be treated as normally distributed. The corresponding p-value is 0.079 (> α). Refer Figure 41.

(https://improveandinnovate.files.wordpress.com/2014/07/slide93.jpg)

(https://improveandinnovate.files.wordpress.com/2014/07/slide84.jpg)

(https://improveandinnovate.files.wordpress.com/2014/07/slide74.jpg)

Six Sigma Metrics & Capability Analysis for Aribute Data

The Six Sigma Methodology uses a set of metrics to measure process performance

that are similar to the Process Capability Indices , These metrics are oen used to

demonstrate the amount of improvement achieved by six sigma improvement teams

by estimating process capabilities before and aer the project . The Six Sigma

metrics can be used for variable as well as for aribute data ( count and proportions)

. Some of the commonly used metrics are :

Defects Per Million Opportunities ( DPMO)

Process Yields or PPM Defective

Rolled Throughput Yields ( RTY)

Each of the above results can be translated into a common metric called Process

Sigma Level Or Sigma Capability . These metrics are derived from the normal

probability distribution .

The sigma capability ( also called z value) is a metric expressed as a single number

that indicates defect rate of a process. This means , higher the sigma capability, the

lower will be the defect rate and vice- versa . For example , A 6 sigma level process

produces only 3.4 Defects in a Million Opportunities ( 3.4 DPMO ) , whereas a 3sigma

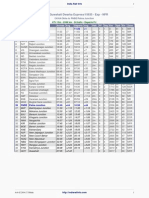

level produces 66,807 defects in a Million Opportunities. The following table (Fig.42 )

shows process sigma levels for various defect rates

(https://improveandinnovate.files.wordpress.com/2014/07/slide65.jpg)

Note: The above DPMO values include a 1.5σ shift to factor in the long-term process variability, a concept discussed later in the chapter.

Cpk & Process Sigma Level

Fig. 43 relates to the example discussed for computing Cp & Cpk. With reference to this figure, it is easy to correlate Cpk with Process Sigma Level.

With reference to the normal probability distribution, the sigma level of a process can be defined as the number of standard deviations that can be fitted between the process mean and the CLOSEST specification limit.

Applying this definition of process sigma level, we have:

The closest specification limit to the mean is the USL. Hence, the distance between the process mean and the CLOSEST specification limit is:

USL − μ = 305 − 302 = 3 (Refer Fig. 43)

Standard deviation σ = (UCL − LCL) / 6

= (306 − 298) / 6 = 8/6 = 1.33

Process sigma level (z value) = (USL − μ) / σ

= 3/1.33 = 2.25

One would now observe that Process Sigma Level = 3 × Cpk!
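The same numbers can be reproduced in a short Python sketch. The function name `sigma_level` is hypothetical, and sigma again relies on the text's assumption that UCL − LCL = 6σ.

```python
def sigma_level(lsl, usl, mean, sigma):
    """Number of standard deviations between the mean and the closest spec limit."""
    return min(usl - mean, mean - lsl) / sigma


sigma = (306.0 - 298.0) / 6  # assumption from the text: UCL - LCL = 6 * sigma
z = sigma_level(lsl=295.0, usl=305.0, mean=302.0, sigma=sigma)
cpk = z / 3                  # equivalently, process sigma level = 3 * Cpk
print(f"z = {z:.2f}, Cpk = {cpk:.2f}")
```

For the bottling example this gives z = 2.25 and Cpk = 0.75.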

Defects Per Million Opportunities ( DPMO)

The DPMO is a Six Sigma metric that is used when a team needs to monitor process performance with respect to defects.

Defect vs. Defective: A defect is a non-conformity in the product or process with respect to a single quality characteristic that does not affect the functioning of the product / process, whereas a defective is an entire unit that is unacceptable to the customer. For example, a dent or a scratch on the body of a car is one defect, whereas a defective car is one that fails to start.

An Opportunity is a Critical to Quality Characteristic (CTQ) specified by the customer. Thus, any failure to meet a CTQ requirement is termed a defect.

To compute DPMOs , consider the following example :

Example: A component has 4 defined opportunities / CTQs. 30 samples of the component are inspected for defects against the 4 CTQs. The total defect count is 44.

The Defects Per Opportunity (DPO) is given by:

DPO = Observed Defects / Total Possible Defects

= 44 / (4 × 30)

= 0.3667

Note: Each component provides 4 opportunities to produce a defect. Hence, Total Possible Defects = 4 × 30.

Hence, DPMO = DPO × 10^6

= 366,667

To translate this into a process sigma level, we can look up the DPMO & Yield conversion tables (refer to the standard tables in Six Sigma handbooks), which give a process sigma level of 1.84.

We can also use the following formula in MS Excel (here, defects/volume is the DPO):

NORMSINV(1 - defects/volume) + 1.5
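For readers working outside Excel, a rough Python equivalent is sketched below. It uses `statistics.NormalDist` from the standard library in place of NORMSINV; the function names are invented for this illustration.

```python
from statistics import NormalDist


def dpmo(defects, units, opportunities_per_unit):
    """Defects per million opportunities."""
    dpo = defects / (units * opportunities_per_unit)
    return dpo * 1_000_000


def sigma_level_from_dpmo(dpmo_value, shift=1.5):
    """Process sigma level, including the conventional 1.5 sigma shift."""
    yield_fraction = 1 - dpmo_value / 1_000_000
    return NormalDist().inv_cdf(yield_fraction) + shift


# The component example: 44 defects, 30 units, 4 CTQs per unit.
d = dpmo(defects=44, units=30, opportunities_per_unit=4)
level = sigma_level_from_dpmo(d)
print(f"DPMO = {d:.0f}, sigma level = {level:.2f}")
```

Setting `shift=0` returns the unshifted z value, which is useful when comparing against tables that do not include the 1.5σ shift.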

Proportion Defective ( Yield or PPM)

This metric is used when monitoring rejections in processes / products. Commonly used terms in the industry are Scrap %, First Time Thru, First Pass Yield, PPM etc.

Estimating Process Sigma Level in this case is fairly simple: convert the data to % yield = (1 − proportion defective) × 100.

Example: 92 out of 950 fasteners manufactured are defective.

Yield = (1 − 92/950) × 100

= 90.32%

From the DPMO & Yield conversion tables, this translates into a process sigma level of about 2.8.

We can also use the following formula in MS Excel:

NORMSINV(yield in fraction) + 1.5

Rolled Throughput Yield ( RTY)

This is an important Six Sigma metric and is useful when multiple processes are connected in series (Fig. 44).

(https://improveandinnovate.files.wordpress.com/2014/07/slide46.jpg)

Example: Consider the 4 processes shown in Fig. 44 above. Following are the yields of each of the processes:

o Process A: 97.5%

o Process B: 98%

o Process C: 99%

o Process D: 97.5%

The Rolled Throughput Yield is given as the product of all four yields, i.e.

RTY = (0.975 × 0.98 × 0.99 × 0.975) × 100%

= 92.23%

From the conversion tables, this translates into a process sigma level of 1.42 (without the 1.5σ shift; adding the shift, as in the earlier examples, gives about 2.92).

Note: The RTY is a very important metric because it establishes that even when each of the 4 processes performs at a 6 sigma level, the customer will receive an output that is lower than a 6 sigma level.
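The RTY calculation is simply a product of step yields, as the following sketch shows. The function name is made up for illustration, and the sigma level is reported both with and without the 1.5σ shift so that it can be compared against either kind of conversion table.

```python
from math import prod
from statistics import NormalDist


def rolled_throughput_yield(step_yields):
    """Multiply the yields (as fractions) of processes connected in series."""
    return prod(step_yields)


# Yields of processes A to D from the example.
rty = rolled_throughput_yield([0.975, 0.98, 0.99, 0.975])
z_unshifted = NormalDist().inv_cdf(rty)
z_shifted = z_unshifted + 1.5
print(f"RTY = {rty:.2%}, z = {z_unshifted:.2f} (unshifted), {z_shifted:.2f} (shifted)")

# Four steps, each at 3.4 DPMO, still give an overall yield below any single step.
six_sigma_step = 1 - 3.4e-6
print(rolled_throughput_yield([six_sigma_step] * 4) < six_sigma_step)
```

The last line illustrates the note above: chaining steps can only lower the overall yield, so the output of a series of 6 sigma processes falls short of a 6 sigma level.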

RTY & DPU: The RTY and Defects Per Unit (DPU) can be related through a special form of the Poisson distribution, as given below:

RTY = e^(−DPU)
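The relationship follows from the Poisson model for defect counts. As a brief sketch, assuming defects on a unit occur independently with a mean of DPU defects per unit (the symbol k for the number of defects on a unit is introduced here only for illustration), the yield is the probability of finding zero defects:

```latex
P(k) = \frac{e^{-DPU}\, DPU^{k}}{k!}, \qquad
RTY = P(0) = e^{-DPU}
\quad\Longrightarrow\quad
DPU = -\ln(RTY)
```

For instance, under this model an RTY of 92.23% would correspond to roughly 0.081 defects per unit.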

Short Term and Long Term Capability: The 1.5σ Shift

In the Six Sigma methodology, one comes across a term called the 1.5σ shift. This is an interesting theory that has been a subject of much debate. The inventors of the Six Sigma Methodology argued that in the long term a process is likely to show higher variation than in the short term. Hence, when computing process sigma levels using short-term data (samples), one can obtain only short-term process capabilities. To factor in the long-term variation, a process shift of 1.5σ from the mean is considered.

Example: If sample data indicates the process at 4.5σ, then the long-term sigma is considered as 4.5σ − 1.5σ = 3σ. This can be generalized as:

Long Term Sigma Level = Short Term Sigma Level − 1.5

Or

Zlt = Zst − 1.5
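The effect of the shift on the defect rate can be sketched as follows. The function name is hypothetical, and only the one-sided tail beyond the nearest specification limit is counted, which is the usual convention behind the 3.4 DPMO figure.

```python
from statistics import NormalDist


def long_term_dpmo(z_short_term, shift=1.5):
    """DPMO expected in the long term for a given short-term sigma level."""
    z_long_term = z_short_term - shift
    tail = 1 - NormalDist().cdf(z_long_term)  # one-sided tail area
    return tail * 1_000_000


for z_st in (3.0, 4.5, 6.0):
    print(f"Zst = {z_st}: Zlt = {z_st - 1.5}, DPMO = {long_term_dpmo(z_st):,.1f}")
```

A short-term level of 6 gives about 3.4 DPMO and a short-term level of 3 gives about 66,807 DPMO, matching the figures quoted earlier in the lesson.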

The table in Fig. 42 shows long-term sigma levels. We can add another column to show the corresponding short-term sigma levels. Refer Fig. 45.

(https://improveandinnovate.files.wordpress.com/2014/07/slide29.jpg)

Using the normal probability distribution concepts learnt earlier in the chapter, it is easy to establish that a long-term 6 sigma process actually produces only 0.002 DPMO. This is also called 2 parts per billion (2 ppb). This is equivalent to a short-term process sigma level of 7.5!

Suggested Reading :

1. Statistics For Management by Levin & Rubin

2. Juran's Quality Handbook

3. Introduction to Statistical Quality Control by D C Montgomery

CSSBB Tutorial Series : Lesson 8

(http://improveandinnovate.wordpress.com/2014/07/16/cssbb-tutorial-serieslesson-8/)

July 16, 2014 | July 17, 2014 | Lean Six Sigma, Uncategorized

Lesson 8 : The Measure Phase Part 4

Topics Covered

Measurement Systems

Measurement Methods & Gauges

Measurement System Analysis Gage R & R Studies

Metrology-Basics

Measurement Systems

The only man who behaved sensibly was my tailor: he took my measure anew every time he saw me, whilst all the rest went on with their old measurements and expected them to fit me.

George Bernard Shaw

Introduction

Validating measurement systems is vital to successful data collection and analysis. Quite often, Six Sigma teams end up making wrong decisions due to poor quality of data. Data accuracy and precision are a function of the operator or inspector responsible for collecting the data as well as the gauges (measuring instruments) used to collect the data. An inadequate Measurement System might adversely impact the process and (or) the improvement project in the following ways:

o Inaccurate data analysis might impact further decision making.

o The problem might get solved by simply fixing the measurement system, and the project might not be required at all.

o The team might tamper with the process in an attempt to reduce variation without actually realizing that the root cause(s) is elsewhere.

Measurement Methods & Gauges: Data collected with the help of any measuring instrument can be of two types: i) attribute or ii) variable. Various measurement methods and gauges are used depending on the process requirements and the quality characteristic to be measured. Some of the commonly used measurement methods are:

o Mechanical Systems: for example, vernier calipers, micrometers, ring gauges etc.

o Electronic Systems: for example, Co-ordinate Measuring Machines (CMMs)

o Optical Systems: for example, infrared thermometers

o Pneumatic Systems: for example, air calipers and air ring gauges

o Electron-based Systems: for example, hot cathode ionization gauge

Measurement Systems Analysis ( MSA)

A Measurement System Analysis is a designed experiment to identify the components of variation in the process. The objective of any MSA is to ensure that variation due to the measurement system is under control and does not adversely impact analysis of the observed process variation. The MSA is an important part of any Six Sigma improvement project.

The flow chart in Fig. 27 explains the concept of measurement system analysis.

(https://improveandinnovate.files.wordpress.com/2014/07/slide102.jpg)

Measurement System Analysis : Terminologies

Measurement System Variation and Error can be classified into two categories: Accuracy and Precision.

Accuracy: The ability of a measurement system to provide the correct results. Accuracy of a measurement system has three components:

o Bias: The absolute difference between the observed value and the true (actual) value.

o Linearity: A measure of the consistency of the accuracy of the gauge across the entire range of the measurement system.

o Stability: A measure of the accuracy of the system over a period of time.

Precision: Precision is a measure of the variation obtained from repeated readings with the same gauge. Precision has two components:

o Repeatability: Variation when one operator repeatedly measures the same unit with the same measuring equipment.

o Reproducibility: Variation when multiple operators measure the same unit with the same measuring equipment.

Discrimination: The ability of the measurement system to detect small changes in the value of the quality characteristic. As a rule of thumb, gauge selection should be such that it can detect at least 1/10th of the tolerance, i.e. (USL − LSL), specified by the customer.

Measurement System Analysis Types

Depending on the quality characteristic to be monitored, MSA can be classified into two types: i) Variable and ii) Attribute.

i) Variable MSA: The measurement system analysis used for variable data is called variable Gauge Repeatability and Reproducibility (Gauge R & R). It is typically used in a manufacturing environment for inspection and measuring tools such as micrometers, vernier calipers, height gauges etc. Two methods are commonly used in the variable MSA:

o Analysis of Variance (ANOVA) Method and

o X(bar)-R Method.

The ANOVA method is the more robust method, as we can compute the Operator*Part variation with it (refer Fig. 27). This is not possible with the X(bar)-R method. MSA computations can be quite cumbersome and hence require the use of software.

Note: Readers are advised to familiarize themselves with the ANOVA method (to be discussed later) for a good understanding of the variable MSA method.

Gauge R & R : Concept

The total observed variation in a process (σ²Total) can be represented as:

σ²Total = σ²Measurement Process + σ²Process

and the variation due to the measurement system can be represented as:

σ²Measurement Process = σ²gage = σ²repeatability + σ²reproducibility

Hence, the Gauge R&R, which is a % of measurement variation over the total variation, is given by:

(https://improveandinnovate.files.wordpress.com/2014/07/slide104.jpg)

Note: Typical GR&R studies are done with 10 parts, 3 operators and each operator taking two measurements (trials) per part.

Example: The following data (Fig. 28) relates to heights of aluminum fins (mm) produced by a fin mill, measured by a height gauge. 10 fins were drawn at random from the process. Three operators measured the heights of the 10 fins, twice, in random order. The objective was to compute Gauge R & R %.

(https://improveandinnovate.files.wordpress.com/2014/07/slide92.jpg)

Solution:

The Gauge R & R can be worked out easily with the help of Minitab Statistical Software, using the following commands:

Stat > Quality Tools > Gage Study > Gage R & R Study (Crossed). Following are the results (MINITAB output in Fig. 29):

(https://improveandinnovate.files.wordpress.com/2014/07/slide83.jpg)

Acceptance Criteria for Gauge R & R Study

1. For % Contribution = (σ²gage / σ²total) × 100, the following are the acceptance criteria:

If % Contribution is less than 1%: Measurement System is excellent

If % Contribution is between 1% and 10%: Measurement System is acceptable, but should be used with caution

If % Contribution is greater than 10%: Measurement System is unacceptable and needs to be replaced

Note: The corresponding value is circled in the MINITAB Output Table (Fig. 29)

2. For % Study Variation = Precision to Total Variation (P/TV) %, the following are the acceptance criteria:

% Study Variation is given by: (6 × Measurement SD) / (6 × Total SD) × 100

If % Study Variation is less than 10%: Measurement System is acceptable

If % Study Variation is between 10% and 30%: Measurement System is acceptable, but use with caution

If % Study Variation is greater than 30%: Measurement System is unacceptable and needs to be replaced

Note: The corresponding value is circled in the MINITAB Output Table (Fig. 29)

3. No. of Distinct Categories (NDC) is the number of different groups in the data that the measurement system can discern. This should be 5 or more. It is computed using the following formula:

NDC = 1.41 × (Standard Deviation of Parts) / (Standard Deviation of Gauge)

4. % Tolerance: Optionally, MINITAB will also return another important piece of information called SV/Tolerance, which is given by:

% Tolerance = (6 × Measurement SD) / (USL − LSL) × 100

where USL & LSL are the upper and lower specification limits provided by the customer.

The Operator*Part interaction can be shown separately by the ANOVA method. Minitab omits this from the table if the p-value for the Operator*Part interaction is > 0.25. In the example discussed, there is no significant Operator*Part interaction.
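The acceptance metrics above can be computed directly once the variance components are known. The sketch below is only illustrative: the variance components and tolerance are invented numbers, the function name is hypothetical, and truncating NDC to a whole number is an assumption about how the value is usually reported.

```python
import math


def gauge_rr_metrics(var_repeatability, var_reproducibility, var_part, tolerance=None):
    """Summarise a Gauge R & R study from its variance components."""
    var_gauge = var_repeatability + var_reproducibility
    var_total = var_gauge + var_part
    sd_gauge = math.sqrt(var_gauge)
    sd_part = math.sqrt(var_part)
    sd_total = math.sqrt(var_total)
    metrics = {
        "pct_contribution": 100 * var_gauge / var_total,
        # The factor 6 in numerator and denominator cancels out.
        "pct_study_variation": 100 * sd_gauge / sd_total,
        # Assumption: NDC is reported as a truncated whole number.
        "ndc": int(1.41 * sd_part / sd_gauge),
    }
    if tolerance is not None:
        metrics["pct_tolerance"] = 100 * 6 * sd_gauge / tolerance
    return metrics


# Hypothetical variance components and a hypothetical tolerance (USL - LSL).
result = gauge_rr_metrics(0.04, 0.01, 0.95, tolerance=10.0)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

With these made-up numbers the % Contribution is 5% and the % Study Variation is about 22%, so the system would fall in the "acceptable, use with caution" band on both criteria, with an NDC of 6.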

Gauge R & R : Graphical Results : Minitab Output

The Minitab Output also provides a graphical summary of the values shown in the tables earlier (Fig. 30).

The Components of Variation graph displays Repeatability, Reproducibility and Gauge R & R as a % of Total Variation.

An important part of the graphical summary is the X(bar) & Range chart. This concept will be discussed in detail in subsequent lessons: The Control Phase.

Note: For an acceptable MSA, one would expect most points in the X(bar) chart to be outside the control limits, indicating that the variation is primarily due to differences between the parts and not due to the measurement system. On the other hand, if most points on the X(bar) chart fall within the control limits, it indicates the variation is primarily due to the measurement system.

(https://improveandinnovate.files.wordpress.com/2014/07/slide55.jpg)

NOTE: All acceptance guidelines are as defined by the Automotive Industry Action Group (AIAG), MSA Reference Manual, 3rd edition. The AIAG was formed by the big three automakers, i.e. Ford, GM & Chrysler, in the year 1982. Readers are advised to refer to the latest AIAG manuals for any changes in the guidelines.

The AIAG method uses the following terminologies and definitions:

Gauge R & R, GRR = √(EV² + AV²)

(https://improveandinnovate.files.wordpress.com/2014/07/slide73.jpg)

% Gauge R & R = %GRR = (GRR/TV) × 100

EV = Equipment Variation = Repeatability

Hence, % EV = (EV/TV) × 100

AV = Appraiser Variation = Reproducibility

Hence, % AV = (AV/TV) × 100

Total Variation (TV) = √(GRR² + PV²)

(https://improveandinnovate.files.wordpress.com/2014/07/slide64.jpg)

where PV = Part-to-Part Variation.

The values of EV, AV and PV are computed using constants from the X(bar)-R Control Chart tables (can be found in any book on SPC).
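Once EV, AV and PV are available, the remaining AIAG quantities are simple to combine. The sketch below assumes the usual root-sum-of-squares relationships, GRR = √(EV² + AV²) and TV = √(GRR² + PV²); the input values and the function name are made up for illustration.

```python
import math


def aiag_summary(ev, av, pv):
    """Combine equipment, appraiser and part variation into AIAG percentages."""
    grr = math.hypot(ev, av)   # sqrt(EV^2 + AV^2)
    tv = math.hypot(grr, pv)   # sqrt(GRR^2 + PV^2)
    return {
        "GRR": grr,
        "TV": tv,
        "%EV": 100 * ev / tv,
        "%AV": 100 * av / tv,
        "%GRR": 100 * grr / tv,
        "%PV": 100 * pv / tv,
    }


# Hypothetical standard-deviation-scale inputs.
summary = aiag_summary(ev=0.3, av=0.4, pv=1.2)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Note that the percentages do not add up to 100, because the components combine as squares rather than linearly.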

Gauge R & R : X(bar) R Method :

This method is similar to the ANOVA method and can be performed easily with MINITAB. One can expect minor differences in the results because this method approximates the standard deviation with range values, using the control chart method. Refer Chapter 8 for detailed information on the X(bar)-R Control Chart. As discussed earlier, this method does not compute the Operator*Part interaction separately.

Measurement System Analysis: Attribute Data

MSA for attribute data (also called Attribute Gauge R & R) is used when the quality characteristic to be monitored is attribute in nature, for example, ratings or rankings, pass / fail in an inspection, accepting / rejecting an application form etc. Following are the steps for conducting an Attribute Gauge R & R:

1. Identify the sample, usually more than 30 items (some good, some bad and some borderline cases).

2. Have the items rated / graded first by an expert.

3. Select the inspectors who will be rating the items.

4. Pass the items in a random order to each inspector and record the ratings.

5. Repeat the process to obtain ratings for a second trial (in random order again).

Note: This method is also known as the kappa method.

Example : The Manager of a placement firm is concerned about the consistency of her executives in shortlisting resumes for various positions. The Manager would like to validate her concerns by using an Attribute Gauge R & R method. 20 resumes of applicants to an advertised position were identified by the manager (a mix of good fits, poor fits and borderline cases). Three executives were asked to grade the resumes (Accept or Reject) with reference to the Job Description. Each executive was given two trials, in random order. The resumes were also graded separately by the manager, who is regarded as the expert (reference). The results are displayed in Fig. 30.

(https://improveandinnovate.files.wordpress.com/2014/07/slide45.jpg)

Solution :

The following information can be gathered from the table :

o No. of times an executive agrees with herself : In Sl. No. 3 (shaded), Exec. A is not consistent across both trials for the same resume.

o No. of times an executive agrees with herself and also with the other executives : In Sl. No. 8 (shaded), all executives agree with themselves, but executive B does not agree with executives A & C.

o No. of times all executives agree with each other and also with the expert : In Sl. No. 20 (shaded), all executives agree with each other, but not with the expert. This count is the basis for the estimate of Attribute Gauge R & R %.

In the above example, we have 14 occasions (marked *) where all executives agreed (accept or reject) with each other and also with the expert. Hence, the Attribute Gauge R & R % is given as : (14/20) x 100 = 70 %. The target is 100%, with a lower acceptable limit of 80%.
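The counting rule used above (an item counts only when every trial of every appraiser matches the expert) can be sketched as follows. The data set is a small made-up one of 5 items, not the 20 resumes of Fig. 30, and the function name is hypothetical.

```python
def attribute_grr_percent(expert, appraisers):
    """Percentage of items on which all appraisers, in all trials, match the expert.

    expert     : list of reference ratings, one per item
    appraisers : dict mapping appraiser name -> list of trials,
                 each trial being a list of ratings, one per item
    """
    matches = 0
    for i, reference in enumerate(expert):
        # Collect every rating given to item i across appraisers and trials.
        ratings = {trial[i] for trials in appraisers.values() for trial in trials}
        if ratings == {reference}:
            matches += 1
    return 100.0 * matches / len(expert)

# Hypothetical ratings: "A" = Accept, "R" = Reject.
expert = ["A", "R", "A", "R", "A"]
appraisers = {
    "Exec A": [["A", "R", "A", "R", "A"], ["A", "R", "R", "R", "A"]],  # inconsistent on item 3
    "Exec B": [["A", "R", "A", "R", "A"], ["A", "R", "A", "R", "A"]],
    "Exec C": [["A", "R", "A", "A", "A"], ["A", "R", "A", "A", "A"]],  # disagrees with expert on item 4
}
pct = attribute_grr_percent(expert, appraisers)
print(f"Attribute Gauge R & R % = {pct:.0f}")
```

With 14 fully matching items out of 20, the same rule gives the 70% computed in the example.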

The same result can also be obtained with MINITAB using the following command :

Stat > Quality Tools > Attribute Agreement Analysis

Fig. 31 shows the Minitab output and Fig. 32 shows a graphical analysis of the appraisers' performance.

(https://improveandinnovate.files.wordpress.com/2014/07/slide35.jpg)

Conclusion :

Since the agreement is only 70%, the measurement system is not adequate. Figure 31 also shows the 95% confidence interval for the agreement % and the Fleiss' kappa & Cohen's kappa statistics. The corresponding p-values indicate whether or not to reject the null hypothesis.

Subsequent lessons will deal with confidence intervals, hypothesis testing and p-values.

Fig. 32 is a graphical analysis of the appraisers' performance. Appraisers A and B have only 85% agreement with the expert (standard), while the within-appraiser agreement is low for appraiser A (90%).

(https://improveandinnovate.files.wordpress.com/2014/07/slide28.jpg)

Metrology Basics

Metrology is defined as the science of measurement, embracing both experimental and theoretical determinations at any level of uncertainty in any field of science and technology (Source : The International Bureau of Weights and Measures).

Metrology deals with the following subjects :

o Development and establishment of units of measurement and traceability standards.

o Application of the science of measurement in various manufacturing processes, which includes selection of the right measuring instruments and their calibration.

o Compliance with regulatory standards such as weights and measures, safety of the consumers, etc.

Traceability : A key concept in metrology is traceability, which is the capability to verify the history, location, or application of an item by means of documented or recorded identification.

Traceability refers to an unbroken chain of comparisons relating an instrument's measurements to a known standard. Every country maintains its own metrology system, which defines various standards.

Calibration : Calibration is a comparison between two measurements : one of known magnitude or correctness, made or set with one device (known as the standard), and another made in as similar a way as possible with a second device. This is done to ensure an instrument's accuracy (bias, stability and linearity) during its useful life.

Calibration is a periodic activity and follows a predefined schedule that depends on the measuring instrument and external factors. Calibration details need to be recorded and are often a subject of quality audits.

CSSBB Tutorial Series : Lesson 7

(http://improveandinnovate.wordpress.com/2014/07/15/cssbb-tutorial-serieslesson-6-part-3/)

July 15, 2014 | July 17, 2014 | Lean Six Sigma

Lesson 7 : The Measure Phase, Part 3

Topics Covered :

o Probability Distributions : Discrete and Continuous

Probability Distributions

"The only man who behaved sensibly was my tailor: he took my measure anew every time he saw me, whilst all the rest went on with their old measurements and expected them to fit me."

George Bernard Shaw

I . Probability Distributions

A probability distribution is a statistical model that describes the characteristics of a population. Probability distributions can be used in Six Sigma for :

o Predicting probabilities of occurrence of future events

o Baselining process performance, i.e. understanding how a process is currently performing

o Comparing the performance of multiple vendors (processes), etc.

Probability distributions are primarily of two categories :

o Discrete Probability Distributions, and

o Continuous Probability Distributions

Probability Distributions : Terminology

o Random Variable : A variable is called random if it takes a numerical value determined by the outcome of an experiment. The value of the random variable will vary from trial to trial as the experiment is repeated. Random variables can be discrete or continuous. For example :

   o A coin is tossed several times. The number of heads, x, is a discrete random variable as it can take only the values 0, 1, 2, etc.

   o A process is run several times. The time taken to complete the process each time (called the cycle time) is an example of a continuous random variable, as it can take any positive value and not just integer values.

o Probability Density Function (pdf) : denoted by f(x). For a discrete random variable it is the probability of the random variable taking a value equal to x, i.e.

f(x) = P(X = x)

For a continuous random variable the probability of any single value is zero, and probabilities are obtained as areas under f(x).

o Cumulative Distribution Function (cdf) : denoted by F(x), it represents the probability that the random variable X takes a value less than or equal to x, i.e.

F(x) = P(X ≤ x)

o The expected value (or population mean) of a random variable indicates its average or central value. It is a summary value of the variable's distribution. Thus :

   o For a discrete random variable, the expected value is given by :

μ = E(X) = Σ xi p(xi)

   o For a continuous random variable, the expected value is given by :

μ = E(X) = ∫ x f(x) dx
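As a small illustration of these definitions for the discrete case, the sketch below computes the expected value and the cdf of the number of heads in two tosses of a fair coin. The distribution and the helper names are chosen only for illustration.

```python
def expected_value(pmf):
    """E(X) = sum of x * p(x) over all values x of a discrete random variable."""
    return sum(x * p for x, p in pmf.items())

def cdf(pmf, x):
    """F(x) = P(X <= x), the sum of p(value) for all values not exceeding x."""
    return sum(p for value, p in pmf.items() if value <= x)

# Number of heads in two tosses of a fair coin: P(0)=0.25, P(1)=0.50, P(2)=0.25.
heads_in_two_tosses = {0: 0.25, 1: 0.50, 2: 0.25}

mean_heads = expected_value(heads_in_two_tosses)
prob_at_most_one = cdf(heads_in_two_tosses, 1)
print(mean_heads, prob_at_most_one)
```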

Discrete Probability Distributions : Some situations call for discrete data, such as the no. of applications rejected, the no. of abandoned calls, etc. Such data can be represented by a discrete probability distribution, i.e. a probability distribution that can take only discrete values. The following are some commonly used discrete probability distribution functions :

1. Poisson

2. Binomial

3. Hypergeometric

1. A Poisson distribution describes the count of the number of events that occur in a certain time interval or space. For example, the number of customers arriving every hour, the number of calls received by a switchboard during a given time period, etc. The Poisson probability density function is given by :

P(x) = (e^(-λ) * λ^x) / x!

where

λ is the mean,

x is the Poisson distributed random variable, and

x!, called x factorial = x*(x-1)*(x-2)*...*1

Note :

i) The mean of the Poisson process is λ.

ii) The variance of the process is also λ, that is, σ² = λ, so that the standard deviation is σ = √λ.

iii) The Poisson distribution can be used when :

o The no. of possible occurrences is large

o The average no. of occurrences is constant

o The probability of the event is small

Example : A software averages 8 defects in 8000 lines of code. What is the probability of exactly 2 errors in 4000 lines of code ? What is the probability of less than 3 errors ?

Solution :

The average number of defects in 4000 lines of code (= λ) = 4.

o Probability of exactly 2 errors :

P(x=2) = (e^(-4) * 4^2)/2! = 0.1465

Use of Poisson Tables : An easier way to arrive at the solution is to refer to the Poisson Tables and read off the value relating to λ = 4 & x = 2.

This problem can also be easily solved with the help of MS Excel using the formula :

POISSON(2,4,0) = 0.146525

Note : The 0 at the end of the formula gives the probability density function. For the cumulative distribution function, the zero should be replaced with 1.

o Probability of less than 3 errors :

P(x<3) = P(x=0) + P(x=1) + P(x=2)

From the table in Appendix VII,

P(x<3) = 0.0183 + 0.0733 + 0.1465 = 0.2381

As seen earlier, this can be solved easily with MS Excel using the following formula :

P(x<3) = POISSON(2,4,1) = 0.2381

Note : The 1 at the end of the formula gives the cumulative distribution function.
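For readers without Excel or tables, the same two probabilities can be reproduced with a short Python sketch. The function names are illustrative; only the standard library is used.

```python
import math

def poisson_pmf(x, lam):
    """P(X = x) for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam ** x / math.factorial(x)

def poisson_cdf(x, lam):
    """P(X <= x), obtained by summing the pmf from 0 to x."""
    return sum(poisson_pmf(k, lam) for k in range(x + 1))

lam = 4  # average defects per 4000 lines of code, as in the example
p_exactly_2 = poisson_pmf(2, lam)    # corresponds to POISSON(2,4,0)
p_less_than_3 = poisson_cdf(2, lam)  # corresponds to POISSON(2,4,1)
print(round(p_exactly_2, 4), round(p_less_than_3, 4))
```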

2. A Binomial random variable describes the number of successes in a series of trials. For example, the number of students who pass in a class of 50. The binomial probability density function is given as :

P(x,n,p) = [n! / (x! (n-x)!)] * p^x * (1-p)^(n-x)

(https://improveandinnovate.files.wordpress.com/2014/07/slide211.jpg)

where P(x,n,p) is the probability of exactly x successes in n trials with a probability of success equal to p on each trial. The binomial distribution applies when :

1. the total number of trials is fixed;

2. there are only two possible outcomes for each trial : success and failure;

3. the outcomes of all the trials are statistically independent;

4. all the trials have an equal probability of success.

Note : The mean and variance of the binomial distribution are

μ = np and

σ² = np(1-p)

Example : The success rate of a Black Belt Certification test is 65%! In a class of 10 participants, what is the probability of exactly 7 participants clearing the test ? What is the probability of more than 8 participants clearing the test ?

Solution :

p = 0.65, n = 10. Substituting,

i) P(x=7) = 0.2522

Using the Binomial Tables : An easier way to arrive at the solution is to use the Binomial Table and read off the value relating to p = 0.65, n = 10 & x = 7.

Note : This can also be solved with the help of MS Excel using the formula

BINOMDIST(7,10,0.65,0) = 0.25222

ii) The cumulative binomial probability for x <= 8 is given by :

P(x<=8) = P(x=0) + P(x=1) + P(x=2) + ... + P(x=8)

Hence, the probability of more than 8 participants clearing the test is :

P(x>8) = 1 - P(x<=8)

= 1 - BINOMDIST(8,10,0.65,1) = 0.086
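The two results above can be cross-checked with a minimal Python sketch of the binomial formula. The function names are illustrative, and `math.comb` assumes Python 3.8 or later.

```python
import math

def binomial_pmf(x, n, p):
    """P(exactly x successes in n independent trials, success probability p)."""
    return math.comb(n, x) * p ** x * (1 - p) ** (n - x)

def binomial_cdf(x, n, p):
    """P(at most x successes), summing the pmf from 0 to x."""
    return sum(binomial_pmf(k, n, p) for k in range(x + 1))

n, p = 10, 0.65  # class size and pass rate from the example
p_exactly_7 = binomial_pmf(7, n, p)         # corresponds to BINOMDIST(7,10,0.65,0)
p_more_than_8 = 1 - binomial_cdf(8, n, p)   # corresponds to 1 - BINOMDIST(8,10,0.65,1)
print(round(p_exactly_7, 4), round(p_more_than_8, 3))
```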

3. Hypergeometric Distribution : The hypergeometric distribution is the counterpart of the binomial distribution for sampling without replacement from a finite population and is given by :

p(x,n,m,N) = [C(m,x) * C(N-m,n-x)] / C(N,n), where C(a,b) = a! / (b! (a-b)!)

(https://improveandinnovate.files.wordpress.com/2014/07/slide20.jpg)

where p(x,n,m,N) is the probability of exactly x successes in a sample of n drawn from a population of N containing m successes.

Note : Unlike the case of the binomial distribution, in a hypergeometric distribution :

o the trials are not independent and hence

o the probability of success changes from trial to trial

Example : A consignment of 25 parts contains 4 defectives. What is the probability that a sample of 8 drawn at random will contain 2 defectives ? What is the probability that the sample will contain less than 2 defectives ?

Solution :

n = 8, N = 25, m = 4

This problem can be solved with MS Excel using the formula HYPGEOMDIST() :

P(x=2) = HYPGEOMDIST(2,8,4,25) = 0.30

P(x<2) = P(x=0) + P(x=1)

= HYPGEOMDIST(0,8,4,25) + HYPGEOMDIST(1,8,4,25)

= 0.19 + 0.43 = 0.62

Note : The HYPGEOMDIST function does not have a cumulative form. Excel 2010 and later also provide HYPGEOM.DIST, which accepts a cumulative argument.
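A short Python sketch of the same calculation is given below. It uses the standard hypergeometric formula with combinations; the function name is illustrative and `math.comb` assumes Python 3.8 or later.

```python
import math

def hypergeometric_pmf(x, n, m, N):
    """P(exactly x successes in a sample of n drawn without replacement
    from a population of N that contains m successes)."""
    return math.comb(m, x) * math.comb(N - m, n - x) / math.comb(N, n)

n, m, N = 8, 4, 25  # sample size, defectives in lot, lot size from the example
p_exactly_2 = hypergeometric_pmf(2, n, m, N)
# No cumulative helper is needed: sum the individual terms for x = 0 and x = 1.
p_less_than_2 = sum(hypergeometric_pmf(k, n, m, N) for k in range(2))
print(round(p_exactly_2, 2), round(p_less_than_2, 2))
```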

Continuous Probability Distributions : Continuous random variables can take an infinite no. of values within finite or infinite ranges. Examples include : distance travelled, cycle time, etc.

The most commonly used continuous probability distributions are :

o Normal Distribution

o Lognormal Distribution

o Weibull Distribution

o Exponential Distribution

1. The Normal Probability Distribution : This is the most widely used probability distribution in statistical analysis. The probability density function of the normal distribution is given by :

f(x) = [1 / (σ √(2π))] * e^(-(x-μ)² / (2σ²))

(https://improveandinnovate.files.wordpress.com/2014/07/slide191.jpg)

where

x is the random variable such that -∞ < x < +∞

μ is the population mean

σ is the population standard deviation

π = 3.14159

e = 2.71828

The normal probability distribution has certain properties that are very useful in our understanding of the characteristics of the underlying process.

o The distribution is symmetric, i.e. its skewness is zero.

o For the normal probability distribution, the mean = median = mode.

o The curve extends from -∞ to +∞ along the x-axis.

o The width (spread) of the curve is a function of the standard deviation. The higher the standard deviation, the wider the curve, and vice versa. Refer to Fig. 18.

(https://improveandinnovate.files.wordpress.com/2014/07/slide181.jpg)

Fig. 18 : Normal Distribution Curve

o The areas under the curve represent the probabilities of the distribution for various values of the random variable. The mean (μ) divides the curve into two equal halves, i.e. 50% of the area under the curve is to the left of the mean and the other 50% is to the right of the mean. The area under the curve between any two finite limits can be obtained by integrating the density function between the two limits. Some important areas to remember are :

   o 68.27% of the area under the curve is within the +/- 1σ limit. This means 68.27% of the values of a normal random variable fall within the +/- 1σ limit. Similarly,

   o 95.45% of the area under the curve is within the +/- 2σ limit, and

   o 99.73% of the area under the curve is within the +/- 3σ limit.

Areas under the normal curve between any finite limits, such as the above, can be computed easily either with the help of tables or with statistical software. Refer to Fig. 19a, 19b & 19c.

(https://improveandinnovate.files.wordpress.com/2014/07/slide171.jpg)
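The areas within +/- 1σ, 2σ and 3σ can be checked with Python's standard library, as sketched below. `statistics.NormalDist` assumes Python 3.8 or later; the helper name is illustrative.

```python
from statistics import NormalDist

def area_within(k):
    """Area under the standard normal curve between -k and +k standard deviations."""
    z = NormalDist()  # mean 0, standard deviation 1
    return z.cdf(k) - z.cdf(-k)

# Percentage of values expected within +/- 1, 2 and 3 standard deviations.
areas = {k: 100 * area_within(k) for k in (1, 2, 3)}
for k, pct in areas.items():
    print(f"+/- {k} sigma: {pct:.2f}%")
```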

The Standard Normal Probability Distribution

The Standard Normal Probability Distribution is a normal probability distribution of a random variable (called z) with a mean of 0 and a standard deviation of 1. The random variable z is given by :

z = (x - μ) / σ

(https://improveandinnovate.files.wordpress.com/2014/07/slide161.jpg)

Thus, the z value can be seen as the number of standard deviations that a point x is from the mean μ.

Example : The cycle time of an assembly process is known to be normally distributed with a mean of 28 mins. and a standard deviation of 8 mins. i) What % of assemblies have a cycle time of less than 16 mins ? ii) What % of assemblies have a cycle time of more than 42 mins ? iii) What % of assemblies have a cycle time between 16 mins. and 42 mins ?

Solution :

(https://improveandinnovate.files.wordpress.com/2014/07/slide151.jpg)

Fig. 20 : Areas under the normal Curve

The areas under the normal curve that are of interest are marked in Figure 20. First, the x values need to be converted to their corresponding z-values.

i) z1 = (16-28)/8 = -1.5

Hence, P(x<16) = P(z<-1.5); the -ve sign indicates z is to the left of the mean.

From the standard normal table (refer to any book on statistics for the normal tables), the area under the curve for a z value of -1.5 = 0.0668.

Note : This value can also be obtained with MS Excel using the formula NORMSDIST(-1.5)

Conclusion : This means approx. 6.68% of the assemblies have a cycle time of less than 16 mins.

ii) z2 = (42-28)/8 = 1.75

Hence, P(x>42) = P(z>1.75)

From the standard normal table, the area under the curve for a z-value of 1.75 = 0.9599.

Note : This value can also be obtained with MS Excel using the formula NORMSDIST(1.75)

Conclusion : As indicated in the table in Appendix I, this value (0.9599) is the area under the normal curve from -∞ to z (= 1.75). Hence P(z>1.75) is the area to the right of z, which is equal to (1 - 0.9599) = 0.0401. This means approximately 4% of assemblies have a cycle time of more than 42 mins.

iii) The required area is the area between z1 & z2 (Refer Fig. 20). It can be easily computed as :

1 - (area to the left of z1 + area to the right of z2)

Hence, P(16<x<42) = P(-1.5<z<1.75)

= 1 - (0.0668 + 0.0401) = 0.8931

Conclusion : This means approximately 89% of assemblies have a cycle time between 16 mins. and 42 mins.
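The three answers can be verified without tables using the sketch below. It works directly with the cycle-time distribution (mean 28, standard deviation 8), so the conversion to z-values is done internally by `statistics.NormalDist` (Python 3.8 or later). Variable names are illustrative.

```python
from statistics import NormalDist

cycle_time = NormalDist(mu=28, sigma=8)  # minutes, as given in the example

p_less_than_16 = cycle_time.cdf(16)       # area to the left of z = -1.5
p_more_than_42 = 1 - cycle_time.cdf(42)   # area to the right of z = 1.75
p_between = cycle_time.cdf(42) - cycle_time.cdf(16)

print(f"P(x < 16)      = {p_less_than_16:.4f}")
print(f"P(x > 42)      = {p_more_than_42:.4f}")
print(f"P(16 < x < 42) = {p_between:.4f}")
```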

2. The Student's t Distribution : The t-distribution (or Student's t) is used in place of the normal distribution when the sample sizes are small and the population standard deviation is not known. The t distribution is a family of similar probability distributions. The shape of the distribution depends on what is known as the degrees of freedom, which is given by n-1, where n is the sample size. As the sample size increases, the t-distribution approaches the normal distribution. Fig. 21 shows typical t-distribution curves for 6 and 90 degrees of freedom. The t-distribution is discussed in detail in The Analyze Phase.

(https://improveandinnovate.files.wordpress.com/2014/07/slide141.jpg)

Fig. 21 : t-distribution

3. The F-Distribution : The F distribution is used in hypothesis testing for comparing two variances. Like the t distribution, the F distribution is actually a family of distributions. However, unlike the t-distribution, the F distribution is characterized by a pair of degrees of freedom, i.e. a numerator degrees of freedom and a denominator degrees of freedom. The random variable is the F ratio, which is a ratio of two variances. Fig. 22 shows four F distributions for various numerator and denominator degrees of freedom. Use of the F-distribution is discussed in detail in The Analyze Phase.

(https://improveandinnovate.files.wordpress.com/2014/07/slide132.jpg)

Fig. 22 : The F Distribution

4. The Exponential Distribution : The exponential distribution is commonly used in reliability engineering. It is used to model the time to failure of items with a constant failure rate, such as electronic & mechanical components, and in other applications such as wait times at customer service counters. The exponential distribution & the Poisson distribution are closely related : if the number of events occurring in a given interval follows a Poisson distribution with mean rate λ, then the time between successive events is exponentially distributed with mean 1/λ, and vice versa. Refer Fig. 23.

The exponential probability density function is given by :

f(x) = λ e^(-λx), x ≥ 0

(https://improveandinnovate.files.wordpress.com/2014/07/slide122.jpg)

The cumulative distribution function is given by :

F(x) = 1 - e^(-λx)

(https://improveandinnovate.files.wordpress.com/2014/07/slide25.jpg)

λ = constant failure rate, for example : number of failures / hr or no. of failures / cycle

Thus, λ can also be expressed as 1/θ,

where θ is the Mean Time Between Failures (MTBF)

The standard deviation of the exponential distribution is given by

σ = 1/λ = θ

(https://improveandinnovate.files.wordpress.com/2014/07/slide112.jpg)

(https://improveandinnovate.files.wordpress.com/2014/07/slide101.jpg)

Fig 23 : The exponential distribution

Example :

The average life of a component is 1000 hrs. It is known that the time to failure of the component is exponentially distributed. i) What is the probability that the component will last 800 hrs ? ii) What is the probability that the component will last at least 800 hrs ?

Solution :

Since the average life is 1000 hrs.,

λ = 1/1000 = 0.001

i) For a continuous random variable the probability of lasting exactly 800 hrs. is zero; the quantity computed here is the probability density at x = 800 :

f(800) = 0.001*e^(-0.001*800) = 0.000449

Note : The same value can be obtained with MS Excel by using the formula EXPONDIST(800,0.001,0)

ii) Probability of at least 800 hrs. = P(x > 800) = 1 - P(x <= 800)

= 1 - (1 - e^(-0.001*800))

= 0.4493

Note :

o This is a case of the cumulative distribution function.

o The same value can also be obtained with MS Excel using the formula 1 - EXPONDIST(800,0.001,1). The 1 at the end of the formula indicates a cumulative distribution function.
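Both values in this example follow directly from the exponential density λe^(-λx) and the cumulative form 1 - e^(-λx). A minimal Python sketch, with illustrative function names:

```python
import math

def exponential_pdf(x, rate):
    """Density of the exponential distribution at x for a constant failure rate."""
    return rate * math.exp(-rate * x)

def exponential_cdf(x, rate):
    """P(X <= x): probability of failure by time x."""
    return 1 - math.exp(-rate * x)

rate = 1 / 1000  # failures per hour, from an average life of 1000 hrs

density_at_800 = exponential_pdf(800, rate)          # corresponds to EXPONDIST(800,0.001,0)
p_at_least_800 = 1 - exponential_cdf(800, rate)      # corresponds to 1 - EXPONDIST(800,0.001,1)
print(f"{density_at_800:.6f}", f"{p_at_least_800:.4f}")
```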

5. Weibull Distribution : The Weibull Distribution is used to model the time to failure of products that have a varying failure rate. It is one of the most commonly used distributions in reliability engineering.

The three-parameter Weibull probability density function is given by :

f(x) = (β/θ) * ((x-δ)/θ)^(β-1) * e^(-((x-δ)/θ)^β), x ≥ δ

(https://improveandinnovate.files.wordpress.com/2014/07/slide91.jpg)

where

β is the shape parameter, β > 0

θ is the scale parameter, θ > 0, and

δ is the location parameter.

Figure 24 shows probability density functions of the Weibull distribution for θ = 1, δ = 0 and for several values of β.

(https://improveandinnovate.files.wordpress.com/2014/07/slide82.jpg)

Fig. 24 : The Weibull Distribution

o The shape parameter, β, gives the Weibull Distribution its flexibility. For example, at β = 1 the Weibull is identical to the exponential distribution. If β is between 3 and 4, the Weibull distribution approximates the normal distribution. Refer Fig. 24.

o The scale parameter, θ, determines the range of the distribution.

o The location parameter, δ, indicates the location of the distribution along the x-axis.

The cumulative distribution function of a Weibull random variable is given by :

F(x) = 1 - e^(-((x-δ)/θ)^β)

(https://improveandinnovate.files.wordpress.com/2014/07/slide72.jpg)

Example : A typical application of the Weibull Distribution is to describe the time to failure of electronic components. The time to failure of an electronic component in a Television Set is known to follow a Weibull Distribution with a shape parameter of 0.5, a scale parameter of 70 hrs. and a location parameter of 0. What is the probability of the component lasting at least 200 hrs ?

Solution :

Shape parameter = 0.5, scale parameter = 70 and location parameter = 0

P(at least 200 hrs.) = P(x > 200)

= 1 - P(x <= 200)

= 1 - (1 - e^(-(200/70)^0.5))

= 1 - 0.8155

= 0.1845

Note : The same value can be obtained with MS Excel using the formula :

1 - WEIBULL(200, 0.5, 70, 1)
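The calculation can be sketched in Python as below, assuming the standard three-parameter Weibull cumulative form F(x) = 1 - e^(-((x - location)/scale)^shape). The function and argument names are chosen here for illustration.

```python
import math

def weibull_cdf(x, shape, scale, location=0.0):
    """P(X <= x) for a three-parameter Weibull distribution.

    Returns 0 for x at or below the location parameter, where no failures occur.
    """
    if x <= location:
        return 0.0
    return 1 - math.exp(-(((x - location) / scale) ** shape))

# Parameters from the television component example.
p_fail_by_200 = weibull_cdf(200, shape=0.5, scale=70, location=0)
p_at_least_200 = 1 - p_fail_by_200
print(f"{p_fail_by_200:.4f}", f"{p_at_least_200:.4f}")
```

Setting the shape to 1 and the location to 0 in the same function gives 1 - e^(-x/scale), which is the exponential case.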

6. The Lognormal Distribution : The log-normal distribution is the single-tailed probability distribution of any random variable whose logarithm is normally distributed. If a data set is known to follow a lognormal distribution, transforming the data by taking a logarithm yields a data set that is normally distributed. While it is common to use the natural logarithm (denoted as ln), any base logarithm, such as base 10 or base 2, can also be used to yield a normal distribution. The lognormal distribution is commonly used to model the time to failure of mechanical components, where the failure is fatigue or stress related. Its probability density function is given by :

f(x) = [1 / (x σ √(2π))] * e^(-(ln x - μ)² / (2σ²)), x > 0

(https://improveandinnovate.files.wordpress.com/2014/07/slide63.jpg)

μ is called the location parameter, which is also the log mean. It is the mean of the transformed data. σ is called the scale parameter, which is also the log SD. It is the standard deviation of the transformed data. Fig. 25 shows lognormal distributions for several values of σ.

(https://improveandinnovate.files.wordpress.com/2014/07/slide54.jpg)

Fig. 25 : The Lognormal Distribution

Example : The time to failure of a mechanical component is known to follow a lognormal distribution. The following is the time to failure data in hours for 6 samples tested :

221, 365, 420, 310, 396, 289

i) What is the probability that a component will last up to 360 hrs. ? ii) What is the probability that a component will last more than 440 hrs ?

Solution :

The table below shows the data transformed using the natural logarithm. The transformed data have a mean (μ) of 5.7871 and a standard deviation (σ) of 0.2379.

(https://improveandinnovate.files.wordpress.com/2014/07/slide44.jpg)

i) The probability of a component lasting up to 360 hrs. is given by :

p(x <= 360) = p(ln x <= ln(360)) = p(ln x <= 5.8861)

z = (ln x - μ)/σ = (5.8861 - 5.7871)/0.2379 = 0.4161

From the normal dist. tables, p(z <= 0.4161) = 0.66

Note : The same value can be obtained in MS Excel using the formula :

NORMDIST(ln(360), 5.7871, 0.2379, 1)

= 0.6613

ii) p(x > 440) = 1 - p(x <= 440)

= 1 - p(ln x <= ln(440)) = 1 - p(ln x <= 6.0867)

z = (ln x - μ)/σ = (6.0867 - 5.7871)/0.2379 = 1.2596

From the normal dist. tables, p(z <= 1.2596) = 0.8961

Hence, the probability of a component lasting more than 440 hrs. is

1 - 0.8961 = 0.1039

Note : The same value can be obtained in MS Excel using the formula :

1 - NORMDIST(ln(440), 5.7871, 0.2379, 1)

= 0.1039
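The whole calculation, including the log transformation of the six data points, can be reproduced with the sketch below. It assumes, as the quoted figures indicate, that the log SD is the sample standard deviation (n-1 divisor) of the transformed data; variable names are illustrative.

```python
import math
from statistics import NormalDist, mean, stdev

hours_to_failure = [221, 365, 420, 310, 396, 289]

# Transform the data with the natural logarithm, then fit a normal distribution.
log_hours = [math.log(h) for h in hours_to_failure]
log_mean = mean(log_hours)   # location parameter (log mean)
log_sd = stdev(log_hours)    # scale parameter (log SD, sample standard deviation)
log_life = NormalDist(mu=log_mean, sigma=log_sd)

p_up_to_360 = log_life.cdf(math.log(360))
p_more_than_440 = 1 - log_life.cdf(math.log(440))

print(f"log mean = {log_mean:.4f}, log SD = {log_sd:.4f}")
print(f"P(x <= 360) = {p_up_to_360:.4f}")
print(f"P(x > 440)  = {p_more_than_440:.4f}")
```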

7. The Chi-Square (χ²) Distribution

If n random values z1, z2, ..., zn are drawn from a standard normal distribution, squared, and summed (z1² + z2² + ... + zn²), the resulting statistic is said to have a chi-squared distribution with n degrees of freedom. This is a one-parameter family of distributions, and the parameter, n, is the degrees of freedom of the distribution (Refer Fig. 26).

(https://improveandinnovate.files.wordpress.com/2014/07/slide34.jpg)

Fig. 26 : The Chi Square Distribution

The Chi-Square statistic is given by :

χ² = (n-1) s² / σ²

(https://improveandinnovate.files.wordpress.com/2014/07/slide26.jpg)

where,

s² = sample variance of a sample of size n

σ² = population variance

This statistic follows a chi-square distribution with n-1 degrees of freedom.

The Chi Square distribution has several applications in inferential statistics, such as interval estimates of variances and standard deviations, and hypothesis tests such as Goodness of Fit. Application of the Chi-Square distribution is discussed in The Analyze Phase.

8. Bivariate Distribution :

In all the probability distributions discussed so far, we have seen distributions relating to only one variable. These are called univariate distributions. If multiple variables need to be studied simultaneously, the resulting distribution is called a multivariate distribution. A bivariate distribution is a form of multivariate distribution with two variables. Bivariate data arises from populations in which two variables are associated with each observation. For example : A nutritionist may be interested in studying how a particular diet influences both the height and weight of children. The two variables of interest are height and weight. If the two variables are jointly normally distributed, we have a bivariate normal distribution.

Approximations to Probability Distributions

In many situations , one may nd it necessary to approximate the real probability

distribution with a simpler distribution. For example , It is known that an underlying

distribution is binomial but the analyst feels it would be easier to assume a normal

distribution and compute the probabilities . This can be done provided the data meets

certain conditions. Some of the commonly used approximations are described below

:

The Normal approximation to the Binomial : The normal distribution may be

used to approximate the binomial if :

np 5 and n(1-p) 5

The Poisson approximation to the Binomial : The Poisson distribution may be

used to approximate the binomial if :

n is large and p is small ( < 0.1) such that np < 5

In this case , , the mean of the Poisson distribution = np

53 of 96

The Normal approximation to the Poisson : The normal distribution may be

used to approximate the Poisson if :

7/19/2014 7:19 PM

improveandinnovate | Thoughts and ideas on Quality , Improvement and In...

http://improveandinnovate.wordpress.com/?goback=.gde_3151110_memb...

The mean > 10

In this case , the mean () and variance (2 ) of the normal distribution will be equal

to

The Binomial approximation to the Hypergeometric : The binomial distribution may be used to approximate the hypergeometric if :

n/N is small ( < 0.1)

In this case, the probability of success of the binomial distribution is p = m/N,

where m is the number of successes in a population of size N, and n is the sample size.
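The four approximations above can be checked numerically. The following is a minimal sketch using only the Python standard library; the parameter values (n, p, λ, N, m) are illustrative assumptions chosen to satisfy each rule of thumb, and the half-unit continuity correction used with the normal distribution is a common refinement that is not part of the rules stated above.

```python
import math
from statistics import NormalDist

def binom_pmf(k, n, p):
    """P(X = k) for a binomial distribution with n trials and success probability p."""
    return math.comb(n, k) * p**k * (1 - p)**(n - k)

def binom_cdf(k, n, p):
    """P(X <= k) for a binomial distribution."""
    return sum(binom_pmf(i, n, p) for i in range(k + 1))

def poisson_pmf(k, lam):
    """P(X = k) for a Poisson distribution with mean lam."""
    return math.exp(-lam) * lam**k / math.factorial(k)

def poisson_cdf(k, lam):
    """P(X <= k) for a Poisson distribution."""
    return sum(poisson_pmf(i, lam) for i in range(k + 1))

def hypergeom_pmf(k, N, m, n):
    """P(k successes) in a sample of n drawn without replacement
    from a population of N items containing m successes."""
    return math.comb(m, k) * math.comb(N - m, n - k) / math.comb(N, n)

# 1. Normal -> binomial: np = 30 and n(1-p) = 70, both >= 5 (assumed values)
n, p = 100, 0.3
exact_1 = binom_cdf(35, n, p)
normal_1 = NormalDist(mu=n * p, sigma=math.sqrt(n * p * (1 - p)))
approx_1 = normal_1.cdf(35.5)  # 35.5 applies the continuity correction

# 2. Poisson -> binomial: n large, p < 0.1, np = 2 < 5 (assumed values)
n, p = 200, 0.01
exact_2 = binom_pmf(3, n, p)
approx_2 = poisson_pmf(3, n * p)  # lambda = np

# 3. Normal -> Poisson: lambda = 25 > 10, so mu = sigma^2 = lambda (assumed value)
lam = 25
exact_3 = poisson_cdf(30, lam)
approx_3 = NormalDist(mu=lam, sigma=math.sqrt(lam)).cdf(30.5)

# 4. Binomial -> hypergeometric: n/N = 0.02 < 0.1, p = m/N (assumed values)
N, m, n = 1000, 50, 20
exact_4 = hypergeom_pmf(1, N, m, n)
approx_4 = binom_pmf(1, n, m / N)

results = [
    ("Normal ~ Binomial", exact_1, approx_1),
    ("Poisson ~ Binomial", exact_2, approx_2),
    ("Normal ~ Poisson", exact_3, approx_3),
    ("Binomial ~ Hypergeometric", exact_4, approx_4),
]
for label, exact, approx in results:
    print(f"{label:<28} exact={exact:.4f}  approx={approx:.4f}")
```

When the stated conditions hold, the exact and approximate probabilities should agree closely; repeating the run with values that violate a condition (for example a very small np in the first case) shows how the agreement degrades.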


CSSBB Tutorial Series : Lesson 6

(http://improveandinnovate.wordpress.com/2014/07/12/cssbb-tutorial-serieslesson-6/)

July 12, 2014July 19, 2014 Lean Six Sigma

Lesson 6 : The Measure Phase Part 2

Topics Covered :

Handling Data

o Sampling techniques

o Data collection

o Basic Statistics & Probability

The only man who behaved sensibly was my tailor: he took my measure anew every time he saw me, whilst all the rest went on with their old measurements and expected them to fit me.

George Bernard Shaw

I. Handling Data

Collecting and analyzing data is one of the key requirements in the Measure Phase .

The Six Sigma professional is expected to be an expert in data analysis. This section

will deal with some basic concepts of data management and statistics & probability.

Types of Data : Processes produce data of various types. The following chart (Fig. 1) shows data types with examples.

(https://improveandinnovate.files.wordpress.com/2014/07/slide13.jpg)

Fig. 1 : Data Types

Variable Data : Data that has units of measure ( ratio or interval type) . Example :

Temperature , Pressure. Variable Data is of two types

Continuous Data : Data that can take fractional values. Example : Height , Weight

, Time etc.

Discrete Data : Data that can take only integer values . Also called count data .

Example : No. of guests in a hotel , no. of calls received in a day etc.

Attribute Data : Data that is non-numeric and categorical. It is used to measure attributes of a product or process. Example : customer satisfaction ratings (good, satisfactory, poor), shades of colour in a fabric etc. Attribute Data can be of two types :

Nominal Data : Categorical data without a specific order is called nominal data. Example : red, blue, black, green etc.

Ordinal Data : Data that has ordered categories but no meaningful intervals between the measurements is called ordinal data. Example : Customer satisfaction ratings on a scale of 1-5 etc.

Note : The more continuous the data , the easier it is to analyse.

Levels of Measurement : Measurements are categorized into several levels. A particular level or scale of measurement will define how the data should be treated mathematically. Following are the scales of measurement :

o Nominal data have no order. They assign names / labels to various categories.

o Ordinal data have an order, but the interval between measurements is not meaningful.

o Interval data have meaningful intervals between measurements, but there is no true reference point (zero).

o Ratio data have the highest level of measurement. Ratios between measurements as well as intervals are meaningful because there is a true reference point (zero).
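The difference between the interval and ratio scales can be illustrated with temperature. The sketch below assumes two readings of 10 and 20 degrees Celsius (illustrative values only): Celsius is an interval scale because its zero is arbitrary, while Kelvin is a ratio scale because its zero is a true reference point.

```python
def celsius_to_kelvin(celsius):
    """Convert a Celsius reading to Kelvin, which has a true zero."""
    return celsius + 273.15

# Two assumed temperature readings in degrees Celsius
t1_c, t2_c = 10.0, 20.0
t1_k, t2_k = celsius_to_kelvin(t1_c), celsius_to_kelvin(t2_c)

# Differences (intervals) agree on both scales
diff_c = t2_c - t1_c
diff_k = t2_k - t1_k

# Ratios disagree: only the Kelvin ratio is physically meaningful
ratio_c = t2_c / t1_c
ratio_k = t2_k / t1_k

print(f"Interval: {diff_c:.2f} C vs {diff_k:.2f} K")
print(f"Ratio:    {ratio_c:.3f} (Celsius) vs {ratio_k:.3f} (Kelvin)")
```

The interval of 10 degrees is the same on both scales, but "20 is twice 10" holds only for the Celsius numbers, not for the underlying temperatures. This is why ratios should be computed only on ratio-scale data.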

Sampling Methods :

For large populations, the time and cost involved in gathering data may be prohibitive. Sampling makes it easier to study a limited amount of data and draw inferences about the underlying population.

Sampling is generally of two types :

1. Judgemental sampling or non-random sampling, where sampling is done based on one's expertise and opinion.

2. Random or probability sampling, where all items / data points in the population have an equal chance of being chosen. Statistical analysis can be done with this data and inferences can be made, as it is representative of the population.

Random Sampling : Methods


The following are the four commonly used methods of random sampling :

i) Simple Random Sampling : In this method each item in the population has equal probability of getting picked. This removes the element of bias in sampling. This is the simplest form of random sampling and can be used to estimate population parameters based on summary statistics. The easiest way to do a simple random sampling is with the help of random numbers. Using MS Excel, one can generate a table of random numbers and use it to select the sample.

ii) Systematic Sampling : In this method items are selected from the population at pre-defined intervals of time or space. For example : samples drawn from a process every 30 mins or every 5th component from the assembly line etc. The main disadvantage of such a sampling method is that the pre-defined interval may introduce bias, for instance if it coincides with a periodic pattern in the process. However, it requires less time and consumes fewer resources compared to the simple random sampling method.

iii) Stratified Sampling : This method involves dividing the original population into homogeneous groups (strata) and then drawing a sample at random from each group (stratum). For example : A market survey team would like to know how well a new brand of shampoo will be received. For this purpose, the team may first divide the population into various age groups (teens, middle aged and senior citizens etc.). Random sampling from each of the groups will ensure that each group is represented in the sample. This method helps in reducing sampling effort for populations with large variances.

iv) Cluster Sampling : This method is similar to Stratified Sampling. The difference is that stratified sampling is used when variation within groups is small, but variation between groups is large. The opposite is the case for cluster sampling, i.e. the groups are essentially similar to one another but the variation within each group is large. A random selection of whole groups (clusters) is then studied.
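The four random sampling methods can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the population of 1000 units, the field names `group` and `batch`, the sample sizes, and the random starting point used for systematic sampling are all assumptions made for the example.

```python
import random

random.seed(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: each unit has an age group (stratum) and a batch (cluster)
population = [
    {"id": i, "group": ("teen", "middle-aged", "senior")[i % 3], "batch": i // 100}
    for i in range(1000)
]

def simple_random_sample(items, k):
    """Every item has the same chance of being picked."""
    return random.sample(items, k)

def systematic_sample(items, step):
    """Pick every step-th item, beginning at a random offset (an assumption here)."""
    start = random.randrange(step)
    return items[start::step]

def stratified_sample(items, key, k_per_stratum):
    """Split items into strata by key, then sample randomly within each stratum."""
    strata = {}
    for item in items:
        strata.setdefault(item[key], []).append(item)
    sample = []
    for members in strata.values():
        sample.extend(random.sample(members, k_per_stratum))
    return sample

def cluster_sample(items, key, n_clusters):
    """Pick whole clusters at random and keep every item inside them."""
    clusters = {}
    for item in items:
        clusters.setdefault(item[key], []).append(item)
    chosen = random.sample(sorted(clusters), n_clusters)
    return [item for c in chosen for item in clusters[c]]

srs = simple_random_sample(population, 50)
sys_s = systematic_sample(population, 20)
strat = stratified_sample(population, "group", 10)
clus = cluster_sample(population, "batch", 2)

print(len(srs), len(sys_s), len(strat), len(clus))
```

Note the structural difference between the last two functions: stratified sampling draws a few items from every group, whereas cluster sampling draws every item from a few groups.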

Data Collection

A formal data collection process should be established by the Six Sigma team. This process will ensure that the data collected is NOT time or person dependent. In case the Six Sigma team has delegated the data collection activity to others, they should monitor such activities by :

Questioning collectors to check their understanding of the operational definitions.

Verifying collected data with the source, through sampling or ad hoc QC.

Ensuring that pre-defined procedures are followed during the data collection process.

The following are some important properties of data that one needs to ensure while

collecting data :

Data integrity : Is the data genuine ?

Data precision & accuracy : Using the right operational definitions & appropriate gauges for collecting data.

Data consistency : Comparing apples with apples. The Six Sigma team should ensure there are no changes in measurement systems, operational definitions, metrics etc. during the course of the project.

Time traceability : Data must be traceable to the time it was collected.

Teams may use different templates (formats) for data collection depending upon specific processes or organizational needs. A check sheet is a good example of a data collection format (refer to Fig. 2).

(https://improveandinnovate.files.wordpress.com/2014/07/slide23.jpg)

Fig. 2 : Check Sheet
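A check sheet is essentially a tally of how often each category is observed. As a rough sketch of the idea, and not a reproduction of the figure, the following assumes a hypothetical list of defect observations recorded during one shift and tallies them.

```python
from collections import Counter

# Hypothetical defect observations recorded during one shift
observations = ["scratch", "dent", "scratch", "misalignment", "scratch", "dent"]

# Tally the observations, as an operator would with tick marks on a check sheet
check_sheet = Counter(observations)

for defect, count in check_sheet.most_common():
    print(f"{defect:<14}{'|' * count}  ({count})")
```

Recording the shift, date and collector alongside each tally would support the time traceability requirement listed above.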

Other techniques of data collection include :

Data coding

Automating the data collection system

Both techniques help reduce human & gauge errors in the data.

II. Basic Statistics

Terminologies and their Definitions : The table in Fig. 3 shows commonly used terminologies in statistics and their definitions.

(https://improveandinnovate.files.wordpress.com/2014/07/slide32.jpg)

Summary Statistics : Summary statistics are single numbers that describe characteristics of a data set. Some of the most important summary statistics are :

1. Measures of central tendency : The three measures of central tendency are mean, median and mode.

2. Mean (arithmetic, weighted & geometric) : The arithmetic mean of a set of data is the average of all the values and is given by the formula

$$\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i \qquad \text{and} \qquad \mu = \frac{1}{N}\sum_{i=1}^{N} x_i$$

where $\bar{x}$ is the sample mean,

$\mu$ is the population mean,

n is the size of the sample and

N is the size of the population
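The three kinds of mean named above can be compared on small data sets. The sketch below uses made-up values and the standard textbook definitions of the weighted mean (sum of weight times value, divided by the sum of weights) and the geometric mean (n-th root of the product of n values); these definitions are assumptions of the example, since only the arithmetic mean is defined in the text.

```python
from statistics import fmean, geometric_mean

# Arithmetic mean: sum of the values divided by their count (assumed cycle times)
cycle_times = [4.0, 5.0, 6.0, 5.0]
arithmetic = fmean(cycle_times)

# Weighted mean: each value contributes in proportion to its weight (assumed data)
scores = [80.0, 90.0]
weights = [1.0, 3.0]
weighted = sum(w * x for w, x in zip(weights, scores)) / sum(weights)

# Geometric mean: n-th root of the product, suited to multiplicative factors
growth_factors = [2.0, 8.0]
geometric = geometric_mean(growth_factors)

print(f"arithmetic={arithmetic:.2f} weighted={weighted:.2f} geometric={geometric:.2f}")
```

The weighted mean is pulled towards the value with the larger weight, and the geometric mean of a set of unequal positive values is always smaller than their arithmetic mean.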


Median : The median is the middle value in a set of numbers when the numbers are arranged in ascending or descending order. If the data set contains an even number of observations, the median is the average of the two middle values. The advantage of the median over the mean is that extreme values in a data set do not affect the value of