Learning Rules of ANN

Uploaded by

bukyaravindar


2.1 The Process of Learning

2.1.1 Learning Tasks

The learning algorithm for a neural network depends on the learning task the network is to perform. Such learning tasks include:

Pattern association

Pattern recognition

Function approximation

Filtering

Beam forming

Identification and Control

2.2 Learning Methods

2.2.1 Supervised Learning

This is learning with a teacher.

The environment is unknown to the neural network.

Conceptually, the teacher has knowledge of the environment, represented by a set of input-output examples.

These input-output examples provide the samples for training.

Suppose we have a set of input signals (input vectors) from the environment and the teacher is capable of supplying the desired response to each of them; together these form a training set.

So we have an input matrix P and a desired-output matrix T as the training set.

For a particular input, the network will in general give an output that differs from the desired output given by the teacher.

So there is an error between the actual and the desired response.

This error is then used to correct the free parameters of the network.

The correction is repeated until the actual and the desired outputs agree (within a tolerance limit).

Thus, using the matrices P and T, we train the neural network. The network is then adapted to the environment.

If we now give an arbitrary input (vector) to the network, the network will supply the required response (vector).

2.2.2 Unsupervised Learning

In unsupervised learning there is no teacher to oversee the learning process.

This is self-organized learning.

It attempts to develop a network on the basis of the given sample data.

One popular and efficient approach is clustering.

Clustering is mode separation or class separation.

The objective is to design a mechanism that clusters the given sample data.

This can be achieved by computing a similarity measure between samples.

On many occasions the data fall into easily observable groups and the task is simple, but on some occasions this is not the case.

To perform unsupervised learning we may use a competitive learning rule.

For example, consider a neural network consisting of an input layer and a competitive layer.

What is used here is a task-independent measure of the quality of the representation that the network is required to learn.

The free parameters of the network are adapted, and finally optimized, on the basis of this task-independent measure.

Fundamentally, unsupervised learning algorithms (or laws) may be characterised by first-order differential equations.

These equations describe how the network's free parameters evolve (adjust) over time (or over iterations, in the discrete case).

Some measure of pattern associability (similarity) is used to guide the learning process.

Such an operation leads to network correlation, clustering, or competitive behavior.

2.3 Some Supervised / Unsupervised Learning Rules

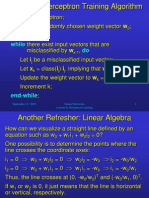

1. Perceptron learning rule

2. Widrow-Hoff learning rule

3. Delta learning rule

4. Hebbian learning

5. Competitive learning

1. Rosenblatt's perceptron learning rule

The learning signal is the difference between the desired and the actual response, so this learning is supervised. This type of learning can be applied only if the neuron response is binary (0 or 1) or bipolar ($-1$ or $+1$). The weight adjustment in this method is obtained as

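$$\Delta w_{kj}(n) = \eta\,[d_k - \operatorname{sgn}(v_k(n))]\,x_j$$

$$\varphi(v_k(n)) = \operatorname{sgn}(\mathbf{w}^T\mathbf{x}) = \begin{cases} +1 & \text{if } \mathbf{w}^T\mathbf{x} > 0 \\ -1 & \text{if } \mathbf{w}^T\mathbf{x} < 0 \end{cases}$$

$$w_{kj}(n+1) = w_{kj}(n) + \Delta w_{kj}(n)$$

[Figure: single neuron k with inputs $x_1, x_2, \ldots, x_m$, synaptic weights $w_{kj}$, net activity $v_k$, activation $\varphi(v_k)$, output $y_k$, desired response $d_k$, and error $e_k$]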

where $n = 1, 2, \ldots$ is the iteration number, $x_j$, $j = 1, 2, \ldots, m$, is the input, $\eta$ is the learning rate parameter, $v_k(n)$ is the net activity of neuron $k$, $\varphi(v_k(n)) = y_k(n)$ is the output of neuron $k$, $d_k$ is the desired response, $e_k(n)$ is the error between the desired response and the output of neuron $k$, and $\Delta w_{kj}(n)$ is the correction applied to the synaptic weight between neuron $k$ and input node $j = 1, 2, \ldots, m$. No weight correction is made when the actual response equals the desired response.

Example

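Consider a single perceptron with the set of input training vectors (samples) and initial weight vector

$$\mathbf{x}_1 = \begin{bmatrix} 1 \\ -2 \\ 0 \\ -1 \end{bmatrix},\quad \mathbf{x}_2 = \begin{bmatrix} 0 \\ 1.5 \\ -0.5 \\ -1 \end{bmatrix},\quad \mathbf{x}_3 = \begin{bmatrix} -1 \\ 1 \\ 0.5 \\ -1 \end{bmatrix};\quad \mathbf{w}(1) = \begin{bmatrix} 1 \\ -1 \\ 0 \\ 0.5 \end{bmatrix}$$

Let the learning rate parameter be $\eta = 0.1$. The teacher's desired responses for $\mathbf{x}_1, \mathbf{x}_2, \mathbf{x}_3$ are $d_1 = -1$, $d_2 = -1$, and $d_3 = 1$, respectively. Learning according to the perceptron learning rule progresses as follows: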

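Step 1. Input is $\mathbf{x}_1$ and the desired response is $d_1 = -1$.

$$v_k(1) = \mathbf{w}^T(1)\,\mathbf{x}_1 = [1,\ -1,\ 0,\ 0.5]\,[1,\ -2,\ 0,\ -1]^T = 2.5$$

Since $\operatorname{sgn}(v_k(1)) = +1 \ne d_1$, a correction is applied:

$$\mathbf{w}(2) = \mathbf{w}(1) + 0.1\,(-1 - 1)\,\mathbf{x}_1 = \begin{bmatrix} 1 \\ -1 \\ 0 \\ 0.5 \end{bmatrix} - 0.2 \begin{bmatrix} 1 \\ -2 \\ 0 \\ -1 \end{bmatrix} = \begin{bmatrix} 0.8 \\ -0.6 \\ 0 \\ 0.7 \end{bmatrix}$$

Step 2. Input is $\mathbf{x}_2$ and the desired response is $d_2 = -1$.

$$v_k(2) = \mathbf{w}^T(2)\,\mathbf{x}_2 = [0.8,\ -0.6,\ 0,\ 0.7]\,[0,\ 1.5,\ -0.5,\ -1]^T = -1.6$$

No correction is performed in this step because $d_2 = \operatorname{sgn}(v_k(2)) = -1$; hence $\mathbf{w}(3) = \mathbf{w}(2)$.

Step 3. Input is $\mathbf{x}_3$ and the desired response is $d_3 = 1$.

$$v_k(3) = \mathbf{w}^T(3)\,\mathbf{x}_3 = [0.8,\ -0.6,\ 0,\ 0.7]\,[-1,\ 1,\ 0.5,\ -1]^T = -2.1$$

$$\mathbf{w}(4) = \mathbf{w}(3) + 0.1\,(1 + 1)\,\mathbf{x}_3 = \begin{bmatrix} 0.8 \\ -0.6 \\ 0 \\ 0.7 \end{bmatrix} + 0.2 \begin{bmatrix} -1 \\ 1 \\ 0.5 \\ -1 \end{bmatrix} = \begin{bmatrix} 0.6 \\ -0.4 \\ 0.1 \\ 0.5 \end{bmatrix}$$

This completes one epoch of training. The training examples are now presented to the network again. As an exercise, you may do this and comment on the result obtained.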
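The epoch worked through above can be checked numerically. The following is a minimal sketch, not a definitive implementation: the names `sgn`, `perceptron_step`, and `history` are illustrative, the signs of the vector entries are an assumption chosen so that the computed activities match the magnitudes quoted in the three steps, and mapping a net activity of exactly zero to $+1$ is also an assumption.

```python
import numpy as np

def sgn(v):
    # Bipolar hard limiter; v == 0 is mapped to +1 here (an assumption).
    return 1.0 if v >= 0 else -1.0

def perceptron_step(w, x, d, eta):
    """Apply one perceptron update; return the new weights and the net activity."""
    v = float(w @ x)
    return w + eta * (d - sgn(v)) * x, v

# Training set and initial weights (signs assumed, see the lead-in).
X = np.array([[1.0, -2.0, 0.0, -1.0],
              [0.0, 1.5, -0.5, -1.0],
              [-1.0, 1.0, 0.5, -1.0]])
D = np.array([-1.0, -1.0, 1.0])
w = np.array([1.0, -1.0, 0.0, 0.5])
eta = 0.1

history = []
for x, d in zip(X, D):
    w, v = perceptron_step(w, x, d, eta)
    history.append((v, w.copy()))
    print(f"v = {v:+.2f}, w = {np.round(w, 3)}")
```

Running the loop a second time over the same samples gives the next epoch asked for in the exercise.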

2. Widrow-Hoff learning rule

Here the neurons are assumed to have linear activation functions, characterized by

$$y_k(n) = \varphi(v_k(n)) = v_k(n)$$

The correction to the weights at each time step $n$ is obtained as

$$\Delta w_{kj}(n) = \eta\, e_k(n)\, x_j(n)$$

$$w_{kj}(n+1) = w_{kj}(n) + \Delta w_{kj}(n)$$

Remarks:

This is learning with a teacher.

The output of neuron k must be directly accessible, so that the desired response can be supplied and the error signal measured.

The correction applied to a synaptic weight is proportional to the product of the error signal and the input signal.

$w_{kj}(n)$ and $w_{kj}(n+1)$ may be viewed as the past and present values of the synaptic weight $w_{kj}$. In computational terms we may write

$$w_{kj}(n) = z^{-1}[w_{kj}(n+1)]$$

where $z^{-1}$ is the unit delay operator, which represents a storage element. We see that error-correction learning is a closed-loop control system.
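A minimal sketch of the Widrow-Hoff update for a single linear neuron follows. The function name `lms_step`, the synthetic data, the target weights `w_true`, and the learning rate 0.05 are all hypothetical choices made for illustration; the variable `w` plays the role of the stored (delayed) weight that is fed back into the next update.

```python
import numpy as np

def lms_step(w, x, d, eta):
    """One Widrow-Hoff update: linear output, error, weight correction."""
    y = float(w @ x)      # linear neuron: y = v = w^T x
    e = d - y             # error signal supplied by the teacher
    return w + eta * e * x, e

# Hypothetical noise-free data generated by a known linear map.
rng = np.random.default_rng(0)
w_true = np.array([0.5, -1.0, 2.0])
X = rng.normal(size=(500, 3))
D = X @ w_true

w = np.zeros(3)           # stored weight, updated in a closed loop
for x, d in zip(X, D):
    w, e = lms_step(w, x, d, eta=0.05)

print(np.round(w, 3))
```

With noise-free targets and a small enough learning rate, the weights are expected to approach `w_true`; a larger learning rate can make the loop unstable.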

3. The delta learning rule

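The delta learning rule is also built around a single neuron and is valid only for continuous (differentiable) activation functions. It is obtained by minimizing a cost function, or performance index. Since we are interested in error-correction learning, this performance index can take the form

$$\mathcal{E}(\mathbf{w}) = \tfrac{1}{2}\,e_k^2 = \tfrac{1}{2}\,(d_k - y_k)^2 = \tfrac{1}{2}\,(d_k - \varphi(v_k))^2$$

where $\mathbf{w} = [w_{kj}]$. The cost function $\mathcal{E}(\mathbf{w})$ denotes the instantaneous error energy, which can be used to make the necessary changes in the synaptic weights. This is clearly error-correction learning. By the steepest descent algorithm, minimizing the error requires the weight changes to be in the direction of the negative gradient, so we take

$$\Delta\mathbf{w} = -\eta\,\nabla\mathcal{E}(\mathbf{w})$$

where the operator

$$\nabla = \left[\frac{\partial}{\partial w_{k1}},\ \frac{\partial}{\partial w_{k2}},\ \ldots,\ \frac{\partial}{\partial w_{km}}\right]^T$$

and

$$\nabla\mathcal{E}(\mathbf{w}) = \left[\frac{\partial\mathcal{E}}{\partial w_{k1}},\ \frac{\partial\mathcal{E}}{\partial w_{k2}},\ \ldots,\ \frac{\partial\mathcal{E}}{\partial w_{km}}\right]^T$$

for a particular k. Since $v_k = \sum_j w_{kj} x_j$, the chain rule gives the components of the gradient vector as

$$\frac{\partial\mathcal{E}(\mathbf{w})}{\partial w_{kj}} = -(d_k - \varphi(v_k))\,\varphi'(v_k)\,x_j$$

Since minimizing the error requires the changes in weight to be in the negative gradient direction, we have

$$\Delta w_{kj} = \eta\,(d_k - \varphi(v_k))\,\varphi'(v_k)\,x_j = \eta\,e_k\,\varphi'(v_k)\,x_j$$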
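The gradient expression behind the delta rule can be sanity-checked against a finite-difference approximation of the cost. The sketch below assumes a particular differentiable activation, $\varphi(v) = \tanh(v/2)$, and an arbitrary sample `(w, x, d)`; the function names and the step size `h` are illustrative choices, not part of the rule itself.

```python
import numpy as np

def phi(v):
    # Assumed activation: tanh(v/2), a continuous bipolar function.
    return np.tanh(v / 2.0)

def phi_prime(v):
    # Derivative of tanh(v/2) written in terms of the output.
    return 0.5 * (1.0 - phi(v) ** 2)

def cost(w, x, d):
    """Instantaneous error energy 0.5 * (d - phi(w^T x))^2."""
    return 0.5 * (d - phi(w @ x)) ** 2

def analytic_grad(w, x, d):
    """Gradient components -(d - phi(v)) * phi'(v) * x_j."""
    v = w @ x
    return -(d - phi(v)) * phi_prime(v) * x

def numeric_grad(w, x, d, h=1e-6):
    """Central-difference approximation of the same gradient."""
    g = np.zeros_like(w)
    for j in range(w.size):
        step = np.zeros_like(w)
        step[j] = h
        g[j] = (cost(w + step, x, d) - cost(w - step, x, d)) / (2.0 * h)
    return g

# Arbitrary illustrative sample.
w = np.array([1.0, -1.0, 0.0, 0.5])
x = np.array([1.0, -2.0, 0.0, -1.0])
d = -1.0
print(analytic_grad(w, x, d))
print(numeric_grad(w, x, d))
```

The weight correction of the delta rule is then the negative of this gradient scaled by the learning rate.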


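Example:

Consider the set of input training vectors and initial weight vector

$$\mathbf{x}_1 = \begin{bmatrix} 1 \\ -2 \\ 0 \\ -1 \end{bmatrix},\quad \mathbf{x}_2 = \begin{bmatrix} 0 \\ 1.5 \\ -0.5 \\ -1 \end{bmatrix},\quad \mathbf{x}_3 = \begin{bmatrix} -1 \\ 1 \\ 0.5 \\ -1 \end{bmatrix};\quad \mathbf{w}^1 = \begin{bmatrix} 1 \\ -1 \\ 0 \\ 0.5 \end{bmatrix}$$

Let the learning rate parameter be $\eta = 0.1$. The desired responses for the three inputs are $d_1 = -1$, $d_2 = -1$, and $d_3 = 1$, respectively.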

Let the continuous bipolar activation function be

$$\varphi(v_k) = \frac{1 - e^{-v_k}}{1 + e^{-v_k}}$$

$$\varphi'(v_k) = \frac{2e^{-v_k}}{(1 + e^{-v_k})^2} = \tfrac{1}{2}\,\bigl(1 - \varphi^2(v_k)\bigr)$$

Such an activation function is continuous and bipolar. Here the slope of the activation function is expressed in terms of the output signal of the neuron. For the given learning rate parameter, delta rule training can be summarized as follows:

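Step 1. We present the first input sample $\mathbf{x}_1$ and the initial weight vector $\mathbf{w}^1$, yielding

$$v_k^1 = (\mathbf{w}^1)^T\mathbf{x}_1 = 2.5$$

$$y_k^1 = \varphi(v_k^1) = 0.848$$

$$\varphi'(v_k^1) = \tfrac{1}{2}\,[1 - \varphi^2(v_k^1)] = 0.140$$

$$\mathbf{w}^2 = \mathbf{w}^1 + 0.1\,[d_1 - \varphi(v_k^1)]\,\varphi'(v_k^1)\,\mathbf{x}_1 = \begin{bmatrix} 0.974 \\ -0.948 \\ 0 \\ 0.526 \end{bmatrix}$$

Step 2. We present the second input sample $\mathbf{x}_2$ and the weight vector $\mathbf{w}^2$, yielding

$$v_k^2 = (\mathbf{w}^2)^T\mathbf{x}_2 = -1.948$$

$$y_k^2 = \varphi(v_k^2) = -0.75$$

$$\varphi'(v_k^2) = \tfrac{1}{2}\,[1 - \varphi^2(v_k^2)] = 0.218$$

$$\mathbf{w}^3 = \mathbf{w}^2 + 0.1\,[d_2 - \varphi(v_k^2)]\,\varphi'(v_k^2)\,\mathbf{x}_2 = \begin{bmatrix} 0.974 \\ -0.956 \\ 0.002 \\ 0.531 \end{bmatrix}$$

Step 3. We present the third input sample $\mathbf{x}_3$ and the weight vector $\mathbf{w}^3$, yielding

$$v_k^3 = (\mathbf{w}^3)^T\mathbf{x}_3 = -2.46$$

$$y_k^3 = \varphi(v_k^3) = -0.842$$

$$\varphi'(v_k^3) = \tfrac{1}{2}\,[1 - \varphi^2(v_k^3)] = 0.145$$

$$\mathbf{w}^4 = \mathbf{w}^3 + 0.1\,[d_3 - \varphi(v_k^3)]\,\varphi'(v_k^3)\,\mathbf{x}_3 = \begin{bmatrix} 0.947 \\ -0.929 \\ 0.016 \\ 0.505 \end{bmatrix}$$

Since the desired values are $\pm 1$ and the continuous activation never reaches them exactly, $d_k - \varphi(v_k)$ is nonzero and a correction is applied in every step. Since the algorithm has not converged after one epoch, the training samples should be presented again.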
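The three delta-rule steps above can be reproduced with a short script. This is a sketch under stated assumptions: the names `phi`, `delta_step`, and `trace` are illustrative, and the signs of the vector entries are assumed so that the computed activities agree in magnitude with the values quoted in the steps. Small differences in the third decimal place are rounding effects.

```python
import numpy as np

def phi(v):
    """Bipolar sigmoid (1 - e^-v) / (1 + e^-v)."""
    return (1.0 - np.exp(-v)) / (1.0 + np.exp(-v))

def delta_step(w, x, d, eta):
    """One delta-rule update; returns new weights, net activity, output, slope."""
    v = float(w @ x)
    y = phi(v)
    slope = 0.5 * (1.0 - y ** 2)          # phi'(v) expressed through the output
    w_new = w + eta * (d - y) * slope * x
    return w_new, v, y, slope

# Training set and initial weights (signs assumed, see the lead-in).
X = np.array([[1.0, -2.0, 0.0, -1.0],
              [0.0, 1.5, -0.5, -1.0],
              [-1.0, 1.0, 0.5, -1.0]])
D = np.array([-1.0, -1.0, 1.0])
w = np.array([1.0, -1.0, 0.0, 0.5])

trace = []
for x, d in zip(X, D):
    w, v, y, slope = delta_step(w, x, d, eta=0.1)
    trace.append((v, y, slope))
    print(f"v = {v:+.3f}, y = {y:+.3f}, slope = {slope:.3f}, w = {np.round(w, 3)}")
```

Wrapping the loop in an outer loop over epochs continues the training until the error is judged small enough.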


Example:

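Consider a single perceptron with the set of input training vectors (samples) and initial weight vector

$$\mathbf{x}_1 = \begin{bmatrix} 1 \\ -2 \\ 1.5 \\ 0 \end{bmatrix},\quad \mathbf{x}_2 = \begin{bmatrix} 1 \\ -0.5 \\ -2 \\ -1.5 \end{bmatrix},\quad \mathbf{x}_3 = \begin{bmatrix} 0 \\ 1 \\ -1 \\ 1.5 \end{bmatrix};\quad \mathbf{w}(1) = \begin{bmatrix} 1 \\ -1 \\ 0 \\ 0.5 \end{bmatrix}$$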

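Assume the learning rate $\eta = 0.5$ and the nonlinear activation to be the bipolar hyperbolic tangent function,

$$\varphi(v) = \tanh\!\left(\frac{av}{2}\right) = \frac{1 - e^{-av}}{1 + e^{-av}} = \frac{2}{1 + e^{-av}} - 1$$

This bipolar continuous activation function lies between $-1$ and $+1$, and approaches the bipolar hard limiter $\operatorname{sgn}(v)$ as $a \to \infty$.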
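The equivalent forms of the bipolar activation, $\tanh(av/2) = (1 - e^{-av})/(1 + e^{-av}) = 2/(1 + e^{-av}) - 1$, can be compared numerically. The sketch below is illustrative only; the grid of `v` values and the slope parameters `a` are arbitrary choices.

```python
import numpy as np

def phi(v, a=1.0):
    """Bipolar hyperbolic tangent activation tanh(a*v/2)."""
    return np.tanh(a * v / 2.0)

v = np.linspace(-4.0, 4.0, 9)
for a in (0.5, 1.0, 5.0):
    ratio_form = (1.0 - np.exp(-a * v)) / (1.0 + np.exp(-a * v))
    shifted_form = 2.0 / (1.0 + np.exp(-a * v)) - 1.0
    # Largest deviation between the three algebraic forms on the grid.
    print(a,
          np.max(np.abs(phi(v, a) - ratio_form)),
          np.max(np.abs(phi(v, a) - shifted_form)))
```

Increasing `a` steepens the curve around the origin, so that for large `a` the function behaves almost like a hard limiter.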


4. Hebbian learning

According to Donald Hebb, in his famous book The Organization of Behavior (1949):

"When an axon of cell A is near enough to excite a cell B and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both cells such that A's efficiency, as one of the cells firing B, is increased."

This statement is made in a neurobiological context. For more complex kinds of learning, almost every learning model that has been proposed involves both output activity and input activity in the learning rule. The essential idea is that the amount of synaptic change is a function of both pre-synaptic and post-synaptic activity. Hebbian learning is the oldest and most famous of all learning rules.

We may expand and rephrase Hebb's statement as a two-part rule:


If two neurons on either side of a synaptic connection are activated simultaneously (synchronously), then the strength of the synapse is selectively increased.

If two neurons on either side of a synaptic connection are activated asynchronously, then the strength of the synapse is selectively decreased.

Hebbian learning can be applied to neurons with binary or continuous activation functions. Putting the above mathematically:

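Consider a single neuron k. The net activity and the output of neuron k are obtained as

$$v_k(n) = \mathbf{w}^T(n)\,\mathbf{x}(n)$$

$$y_k(n) = \varphi(\mathbf{w}^T(n)\,\mathbf{x}(n))$$

The corresponding weight change is effected as

$$\Delta\mathbf{w}(n) = f(y_k(n), \mathbf{x}(n))$$

The function f can take a variety of different forms. One such form is

$$\Delta\mathbf{w}(n) = \eta\,y_k(n)\,\mathbf{x}(n)$$

$$\mathbf{w}(n+1) = \mathbf{w}(n) + \Delta\mathbf{w}(n)$$

or, component-wise,

$$\Delta w_{kj}(n) = \eta\,y_k(n)\,x_j(n)$$

$$w_{kj}(n+1) = w_{kj}(n) + \Delta w_{kj}(n)$$

where j denotes the neuron just before neuron k.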
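A minimal sketch of the simple Hebbian form, in which the weight change is the learning rate times the product of the output and the input, is given below. The function name `hebb_step`, the linear activation passed in the loop, and the numerical values are hypothetical; they are chosen only to show that repeated presentation of the same input keeps strengthening the weights, since no teacher or bound is involved.

```python
import numpy as np

def hebb_step(w, x, eta, phi=np.tanh):
    """One Hebbian update: delta_w = eta * y * x with y = phi(w^T x)."""
    y = phi(w @ x)
    return w + eta * y * x, y

# Hypothetical two-input neuron, same input presented repeatedly.
w = np.array([0.2, -0.1])
x = np.array([1.0, 1.0])
norms = []
for n in range(5):
    # A linear activation is used here purely for illustration.
    w, y = hebb_step(w, x, eta=0.5, phi=lambda v: v)
    norms.append(float(np.linalg.norm(w)))
    print(n + 1, np.round(w, 3), round(float(y), 3))
```

The unbounded growth visible in `norms` is one reason why modified forms of the rule, with averages or forgetting terms, are often considered.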

[Figure: pre-synaptic neuron j with output $x_j$, connected through the synaptic weight $w_{kj}$ to post-synaptic neuron k with output $y_k$]

$w_{kj}$ is the synaptic weight between the pre-synaptic and post-synaptic variables.

The following remarks about Hebbian learning are in order.

It is the most natural of all types of learning.

There is strong psychological evidence for Hebbian learning.

Hebbian learning (memory) takes place in the area of the brain called the hippocampus.

All types of learning (memory) can be classified as Hebbian, anti-Hebbian (both Hebbian in nature), or non-Hebbian (other types of supervised learning).

It is unsupervised learning, but the output of the post-synaptic neuron is available in a neurobiological sense, or you can compute it from the mathematical expressions you have formulated for your work.

This learning is local in nature, since only two neurons are involved, and it refers to short-term memory. As already mentioned, it takes place in the area of the brain called the hippocampus, as shown in the figure above, which connects the left and right sides of the memory. In due course it turns into long-term memory, if required.

In Alzheimer's disease, the hippocampus is one of the first regions of the brain to suffer damage; memory problems and disorientation appear among the first symptoms.

Another example of the function f is

$$\Delta w_{kj}(n) = \eta\,\bigl(x_j(n) - \bar{x}(n)\bigr)\,\bigl(y_k(n) - \bar{y}(n)\bigr)$$

where $\bar{x}(n)$ and $\bar{y}(n)$ are the time-dependent averages of the corresponding variables.
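The mean-removed form of the rule can be sketched as follows. This is an illustration under assumptions: the averages are kept per input component and updated as simple running means, and the names `covariance_step` and `running_mean` as well as all numerical values are hypothetical.

```python
import numpy as np

def covariance_step(w, x, y, x_bar, y_bar, eta):
    """delta_w_j = eta * (x_j - x_bar_j) * (y - y_bar)."""
    return w + eta * (x - x_bar) * (y - y_bar)

def running_mean(mean, value, n):
    """Update a running average after the n-th observation (n >= 1)."""
    return mean + (value - mean) / n

# Hypothetical state: averages accumulated so far, and one new observation.
w = np.zeros(2)
x_bar = np.array([1.0, 1.0])
y_bar = 1.0
x = np.array([2.0, 0.0])
y = 0.5

w = covariance_step(w, x, y, x_bar, y_bar, eta=0.1)
print(w)
```

Unlike the plain product form, the weight can now decrease: a synapse is weakened when the input is above its average while the output is below its average, and strengthened when both deviate in the same direction.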

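Exercise: A generalized Hebbian rule is described by

$$\Delta w_{kj}(n) = f(y_k(n), x_j(n)) = \alpha\,f(y_k(n))\,g(x_j(n)) - \beta\,w_{kj}(n)\,f(y_k(n))$$

where $\alpha$ and $\beta$ are positive constants and $f(\cdot)$ and $g(\cdot)$ are functions of their respective arguments.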

Obtain the following:

(i) a plot of $\Delta w_{kj}(n)$ against $w_{kj}(n)$

(ii) the balance point, where $\Delta w_{kj}(n) = 0$

(iii) the maximum depression, where $\Delta w_{kj}(n)$ is minimum

[Figure: plot of $\Delta w_{kj}(n)$ with the balance point and the point of maximum depression marked]

Method of steepest descent

Consider the cost function $\mathcal{E}(\mathbf{w})$ of some unknown weight vector $\mathbf{w}$. The function $\mathcal{E}(\mathbf{w})$ maps $\mathbf{w}$ into the real numbers; let it be continuously differentiable with respect to $\mathbf{w}$. The problem is to find the optimal weight vector $\mathbf{w}^*$ such that $\mathcal{E}(\mathbf{w}^*) \le \mathcal{E}(\mathbf{w})$. This is an unconstrained optimization problem, which can be stated as follows:

Minimize the cost function $\mathcal{E}(\mathbf{w})$ with respect to the weight vector $\mathbf{w}$.

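In this method the correction in weight is applied in the direction of steepest descent, that is, in the direction opposite to the gradient vector $\nabla\mathcal{E}(\mathbf{w})$, where

$$\nabla = \left[\frac{\partial}{\partial w_1},\ \frac{\partial}{\partial w_2},\ \ldots,\ \frac{\partial}{\partial w_m}\right]^T$$

$$\nabla\mathcal{E}(\mathbf{w}) = \left[\frac{\partial\mathcal{E}}{\partial w_1},\ \frac{\partial\mathcal{E}}{\partial w_2},\ \ldots,\ \frac{\partial\mathcal{E}}{\partial w_m}\right]^T$$

Now the weight correction is effected as

$$\mathbf{w}(n+1) = \mathbf{w}(n) - \eta\,\nabla\mathcal{E}(\mathbf{w})$$

$$\mathbf{w}(n+1) = \mathbf{w}(n) + \Delta\mathbf{w}(n)$$

$$\Delta\mathbf{w}(n) = -\eta\,\nabla\mathcal{E}(\mathbf{w})$$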

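Using the first-order Taylor series expansion around $\mathbf{w}(n)$ to approximate $\mathcal{E}(\mathbf{w}(n+1))$:

$$\begin{aligned}
\mathcal{E}(\mathbf{w}(n+1)) &\approx \mathcal{E}(\mathbf{w}(n)) + \bigl(\nabla\mathcal{E}(\mathbf{w}(n))\bigr)^T \Delta\mathbf{w}(n) \\
&= \mathcal{E}(\mathbf{w}(n)) - \eta\,\bigl(\nabla\mathcal{E}(\mathbf{w}(n))\bigr)^T \nabla\mathcal{E}(\mathbf{w}(n)) \\
&= \mathcal{E}(\mathbf{w}(n)) - \eta\,\lVert\nabla\mathcal{E}(\mathbf{w}(n))\rVert^2
\end{aligned}$$

Thus we see that, for a sufficiently small positive $\eta$, $\mathcal{E}(\mathbf{w}(n+1)) < \mathcal{E}(\mathbf{w}(n))$; i.e., the performance index decreases from one iteration to the next. For a suitable $\eta$ the algorithm then converges to the optimal solution $\mathbf{w}^*$. The convergence behavior depends on the learning rate parameter $\eta$. The following points are worth noting: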

When $\eta$ is small, the transient response of the algorithm is overdamped and the trajectory traced by $\mathbf{w}(n)$ follows a smooth but slow path in the w-plane.

When $\eta$ is large, the transient response of the algorithm is underdamped and the trajectory traced by $\mathbf{w}(n)$ follows a fast but oscillatory path in the w-plane.

When $\eta$ exceeds a critical value, the algorithm becomes unstable.
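The three regimes of the learning rate can be illustrated on a simple quadratic cost. The sketch below assumes a hypothetical cost $\mathcal{E}(\mathbf{w}) = \tfrac{1}{2}\mathbf{w}^T A\,\mathbf{w}$ with $A = \operatorname{diag}(1, 10)$, for which the critical learning rate is $2/10 = 0.2$; the matrix, starting point, and learning rates are illustrative choices only.

```python
import numpy as np

A = np.diag([1.0, 10.0])   # hypothetical quadratic cost E(w) = 0.5 * w^T A w

def cost(w):
    return 0.5 * w @ A @ w

def grad(w):
    return A @ w

def steepest_descent(w0, eta, steps=30):
    """Iterate w(n+1) = w(n) - eta * grad E(w(n)) and return the whole path."""
    path = [np.array(w0, dtype=float)]
    for _ in range(steps):
        path.append(path[-1] - eta * grad(path[-1]))
    return np.array(path)

for eta in (0.01, 0.15, 0.25):
    path = steepest_descent([1.0, 1.0], eta)
    print(f"eta = {eta}: final cost = {cost(path[-1]):.3e}, "
          f"second weight after 3 steps = {np.round(path[:4, 1], 3)}")
```

With the small rate the second weight shrinks monotonically, with the larger rate it alternates in sign while shrinking, and beyond the critical value it alternates while growing.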

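5. Competitive learning

Here we have p output neurons. The output of the winning neuron k is set equal to one, and the outputs of all the others are set equal to zero:

$$y_k = \begin{cases} 1 & \text{if } v_k > v_i \text{ for all } i,\ i \ne k \\ 0 & \text{otherwise} \end{cases}$$

The weights connected to neuron k are normalized as

$$\sum_j w_{kj} = 1 \quad \text{for all } k$$

The weight correction is effected as

$$\Delta w_{kj} = \begin{cases} \eta\,(x_j - w_{kj}) & \text{if neuron } k \text{ wins the competition} \\ 0 & \text{if neuron } k \text{ loses the competition} \end{cases}$$

[Figure: competitive layer with output neurons $O_1, \ldots, O_k, \ldots, O_p$]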

The rule has the overall effect of moving the synaptic weight vector of the winning neuron towards the input pattern $\mathbf{x}$. The final result is that the weight vector of the winning neuron k orients itself towards the input pattern $\mathbf{x}$.
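A minimal winner-take-all sketch of this rule follows. The function name `competitive_step`, the two-neuron weight matrix, the input, and the learning rate are hypothetical; ties are broken in favour of the lowest index, which is an assumption. The input is chosen with components summing to one, so that the winner's weights keep summing to one after the update.

```python
import numpy as np

def competitive_step(W, x, eta):
    """Update only the winning row of W; return new weights, outputs, winner."""
    v = W @ x                       # net activity of every output neuron
    k = int(np.argmax(v))           # winner (lowest index on ties, an assumption)
    y = np.zeros(W.shape[0])
    y[k] = 1.0                      # winner outputs one, all others zero
    W_new = W.copy()
    W_new[k] += eta * (x - W_new[k])
    return W_new, y, k

# Hypothetical layer: each row holds the weights of one output neuron.
W = np.array([[0.7, 0.3],
              [0.2, 0.8]])
x = np.array([0.9, 0.1])
W_new, y, k = competitive_step(W, x, eta=0.5)
print(k, y)
print(W_new)
```

Repeating the step over many inputs lets each neuron's weight vector drift towards the cluster of inputs it keeps winning.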

Example: Consider the delta learning rule and Hebb's rule, whose learning signals are given by

$$\Delta w_{ji} = \eta\,e_j\,x_i \quad \text{and} \quad \Delta w_{ji} = \eta\,y_j\,x_i$$

Distinguish between them.

Ans: (i) Both rules involve multiplication by the input $x_i$, as in the term $e_j x_i$.

(ii) The error of neuron j in the delta rule is replaced by the output of neuron j in Hebb's rule.

(iii) The delta rule requires a desired response, whereas Hebb's rule does not.

