FIOS: A Fair, Efficient Flash I/O Scheduler

Stan Park, Kai Shen

University of Rochester

Background

Flash is widely available as mass storage, e.g. SSD

$/GB still dropping, affordable high-performance I/O

Deployed in data centers as well as low-power platforms

Adoption continues to grow, but there is very little work on robust I/O scheduling for Flash

Synchronous writes are still a major factor in I/O bottlenecks

Flash: Characteristics & Challenges

No seek latency, low latency variance

I/O granularity: Flash page, 2–8 KB

Large erase granularity: Flash block, 64–256 pages

Architecture parallelism

Erase-before-write limitation

I/O asymmetry

Wide variation in performance across vendors!

Motivation

Disk is slow, so scheduling has largely been performance-oriented

Flash scheduling for high performance ALONE is easy (just use noop)

Now fairness can be a first-class concern

Fairness must account for unique Flash characteristics

Motivation: Prior Schedulers

Fairness-oriented Schedulers: Linux CFQ, SFQ(D), Argon

Lack Flash-awareness and appropriate anticipation support

Linux CFQ, SFQ(D): fail to recognize the need for anticipation

Argon: overly aggressive anticipation support

Flash I/O Scheduling: write bundling, write block preferential, and page-aligned request merging/splitting

Limited applicability to modern SSDs, performance-oriented

Motivation: Read-Write Interference

[Figure: probability density of I/O response time (in msecs) for reads on the Intel SSD, Vertex SSD, and CompactFlash. Top row: read (alone), where all respond quickly. Bottom row: read (with write).]

Fast read response is disrupted by interfering writes.

Motivation: Parallelism

[Figure: speedup over serial I/O versus number of concurrent I/O operations (1 to 64), for read I/O parallelism and write I/O parallelism. Panels: Intel SSD, Vertex SSD.]

SSDs can support varying levels of read and write parallelism.

Motivation: I/O Anticipation Support

Reduces potential seek cost for mechanical disks

...but largely negative performance effect on Flash

Flash has no seek latency: no need for anticipation?

No anticipation can result in unfairness: premature service change, read-write interference

Motivation: I/O Anticipation Support

[Figure: I/O slowdown ratio, showing read slowdown and write slowdown, for Linux CFQ without anticipation, SFQ(D) without anticipation, and full-quantum anticipation.]

Lack of anticipation can lead to unfairness; aggressive anticipation makes fairness costly.

FIOS: Policy

Fair timeslice management: Basis of fairness

Read-write interference management: Account for Flash I/O asymmetry

I/O parallelism: Recognize and exploit SSD internal parallelism while maintaining fairness

I/O anticipation: Prevent disruption to fairness mechanisms

FIOS: Timeslice Management

Equal timeslices: amount of time to access device

Non-contiguous usage

Multiple tasks can be serviced simultaneously

Collection of timeslices = epoch; Epoch ends when:

No task with a remaining timeslice issues a request, or

No task has a remaining timeslice
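
The epoch rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the FIOS kernel implementation: the class names, the millisecond unit, and the use of a pending-request counter to stand in for "issues a request" are all hypothetical, and the sketch ignores anticipation (a task with an empty queue is treated as not issuing).

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    remaining: float = 0.0  # device time left in the current epoch (ms, assumed unit)
    pending: int = 0        # requests currently queued by this task


@dataclass
class EpochScheduler:
    """Hypothetical model of equal timeslices grouped into epochs."""
    timeslice_ms: float
    tasks: dict = field(default_factory=dict)
    epoch: int = 0

    def add_task(self, name):
        # Every task starts the epoch with an equal timeslice.
        self.tasks[name] = Task(name, remaining=self.timeslice_ms)

    def submit(self, name, count=1):
        self.tasks[name].pending += count

    def charge(self, name, cost_ms):
        """Serve one request and deduct its cost from the task's timeslice.

        Usage need not be contiguous: a task can be charged at any point
        in the epoch as long as it still has timeslice left.
        """
        task = self.tasks[name]
        task.pending -= 1
        task.remaining = max(0.0, task.remaining - cost_ms)

    def epoch_should_end(self):
        with_slice = [t for t in self.tasks.values() if t.remaining > 0]
        # Rule: no task has a remaining timeslice.
        if not with_slice:
            return True
        # Rule: no task with a remaining timeslice issues a request
        # (approximated here as "has nothing pending").
        return all(t.pending == 0 for t in with_slice)

    def maybe_start_new_epoch(self):
        if not self.epoch_should_end():
            return False
        self.epoch += 1
        for t in self.tasks.values():
            t.remaining = self.timeslice_ms  # refill every timeslice
        return True
```

With two tasks where only one issues requests, the epoch ends as soon as the active task exhausts its timeslice, because the only task that still holds a timeslice has nothing to issue.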

FIOS: Interference Management

[Figure: probability density of I/O response time (in msecs) for read (with write) on the Intel SSD, Vertex SSD, and CompactFlash.]

Reads are faster than writes, so interference penalizes reads more

Preference for servicing reads

Delay writes until reads complete
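
A minimal sketch of the read-preference rule, assuming a simple two-queue dispatcher. The class and method names are hypothetical, and the sketch deliberately leaves out timeslices: on its own it could starve writers, which is why the slides pair read preference with timeslice management.

```python
from collections import deque


class ReadPreferenceQueue:
    """Hypothetical dispatcher: reads go first, writes wait for reads."""

    def __init__(self):
        self.reads = deque()
        self.writes = deque()
        self.reads_in_flight = 0

    def submit(self, kind, req):
        (self.reads if kind == "read" else self.writes).append(req)

    def next_request(self):
        """Return (kind, req) to dispatch, or None if nothing may be issued."""
        if self.reads:
            self.reads_in_flight += 1
            return ("read", self.reads.popleft())
        # Writes are delayed while any read is still outstanding on the device.
        if self.writes and self.reads_in_flight == 0:
            return ("write", self.writes.popleft())
        return None

    def complete_read(self):
        self.reads_in_flight -= 1
```

In this sketch a write that arrives before a read is still dispatched after it, and only once the read has completed.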

FIOS: I/O Parallelism

SSDs utilize multiple independent channels

Exploit internal parallelism when possible, minding timeslice and interference management

Parallel cost accounting: New problem in Flash scheduling

Linear cost model, using time to service a given request size

Probabilistic fair sharing: Share perceived device time usage among concurrent users/tasks

$$\text{Cost} = \frac{T_{\text{elapsed}}}{P_{\text{issuance}}}$$

where $T_{\text{elapsed}}$ is the request's elapsed time from its issuance to its completion, and $P_{\text{issuance}}$ is the number of outstanding requests (including the new request) at the issuance time
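
The probabilistic fair sharing cost (elapsed time divided by the number of requests outstanding at issuance) can be illustrated with a small accountant. This is a sketch, not the FIOS code: the class name, the request ids, and the caller-supplied timestamps are assumptions made for the example.

```python
class ParallelCostAccountant:
    """Hypothetical bookkeeping for Cost = T_elapsed / P_issuance."""

    def __init__(self):
        self.outstanding = 0
        self.issued = {}  # request id -> (task, issue time, P_issuance)
        self.usage = {}   # task -> accumulated cost

    def issue(self, req_id, task, now):
        # P_issuance counts outstanding requests including the new one.
        self.outstanding += 1
        self.issued[req_id] = (task, now, self.outstanding)

    def complete(self, req_id, now):
        task, t_issue, p_issuance = self.issued.pop(req_id)
        self.outstanding -= 1
        cost = (now - t_issue) / p_issuance
        self.usage[task] = self.usage.get(task, 0.0) + cost
        return cost
```

For example, if task A issues a request on an idle device and task B issues one immediately afterwards, and both complete 4 time units later, A is charged 4/1 = 4 and B is charged 4/2 = 2. The charge depends on what was outstanding at issuance, so any single request's share is approximate.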

FIOS: I/O Anticipation - When to anticipate?

Anticipatory I/O was originally used to improve performance on disk by handling deceptive idleness: wait for a desirable request.

Anticipatory I/O on Flash is used to preserve fairness.

Deceptive idleness may break:

timeslice management

interference management

FIOS: I/O Anticipation - How long to anticipate?

Must be much shorter than the typical idle period for disks

Relative anticipation cost is bounded by $\alpha$, where the idle period is

$$T_{\text{service}} \cdot \frac{\alpha}{1-\alpha}$$

where $T_{\text{service}}$ is the per-task exponentially-weighted moving average of per-request service time

(Default $\alpha$ = 0.5)

ex. I/O → anticipation → I/O → anticipation → I/O
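
A short sketch of how the anticipation period might be computed, assuming the bound takes the form T_service · α / (1 − α). With that form, idling for the full period before a request of average service time costs idle / (idle + service) = α of the total, and the default α = 0.5 gives an idle period equal to T_service. The class name and the EWMA smoothing weight are hypothetical; the slides do not state the weight.

```python
class AnticipationTimer:
    """Hypothetical per-task anticipation period from an EWMA of service time."""

    def __init__(self, alpha=0.5, ewma_weight=0.25):
        if not 0.0 < alpha < 1.0:
            raise ValueError("alpha must be in (0, 1)")
        self.alpha = alpha
        self.ewma_weight = ewma_weight  # assumed smoothing factor, not from the slides
        self.t_service = {}             # task -> EWMA of per-request service time

    def record_service(self, task, service_time):
        prev = self.t_service.get(task)
        if prev is None:
            self.t_service[task] = service_time
        else:
            w = self.ewma_weight
            self.t_service[task] = w * service_time + (1 - w) * prev

    def idle_period(self, task):
        # T_service * alpha / (1 - alpha): keeps relative idle cost at most alpha.
        return self.t_service[task] * self.alpha / (1.0 - self.alpha)
```

Because Flash service times are well under a millisecond on the SSDs shown earlier, the resulting idle period is far shorter than a disk-oriented anticipation window.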

Implementation Issues

Linux coarse tick timer → High resolution timer for I/O anticipation

Inconsistent synchronous write handling across file system and I/O layers

ext4 nanosecond timestamps lead to excessive metadata updates for write-intensive applications

Results: Read-Write Fairness

[Figure: I/O slowdown ratio (average read latency and average write latency) under raw device I/O, Linux CFQ, SFQ(D), Quanta, and FIOS, with the proportional-slowdown level marked. Panels: 4-reader 4-writer on Intel SSD; 4-reader 4-writer (with thinktime) on Intel SSD.]

Only FIOS provides fairness with good efficiency under differing I/O load conditions.

Results: Beyond Read-Write Fairness

[Figure: I/O slowdown ratio under raw device I/O, Linux CFQ, SFQ(D), Quanta, and FIOS, with the proportional-slowdown level marked. Panels: 4-reader 4-writer on Vertex SSD (mean read latency, mean write latency); 4KB-reader and 128KB-reader on Vertex SSD (mean latency of 4KB reads, mean latency of 128KB reads).]

FIOS achieves fairness not only with read-write asymmetry but also with requests of varying cost.

Results: SPECweb co-run TPC-C

[Figure: response time slowdown ratio for SPECweb and TPCC on Intel SSD under raw device I/O, Linux CFQ, SFQ(D), Quanta, and FIOS; a value of 28 is annotated on the plot, beyond the 0 to 12 axis range.]

FIOS exhibits the best fairness compared to the alternatives.

Results: FAWNDS (CMU, SOSP'09) on CompactFlash

[Figure: task slowdown ratio for FAWNDS hash gets and FAWNDS hash puts under raw device I/O, Linux CFQ, SFQ(D), Quanta, and FIOS, with the proportional-slowdown level marked.]

FIOS also applies to low-power Flash and provides efficient fairness.

Conclusion

Fairness and efficiency in Flash I/O scheduling

Fairness is a primary concern

New challenge for fairness AND high efficiency (parallelism)

I/O anticipation is ALSO important for fairness

I/O scheduler support must be robust in the face of varied performance and evolving hardware

Read/write fairness and BEYOND

May support other resource principals (VMs in cloud).

21 / 21

You might also like

- T=K ϕ I E E I R N= E K ϕ T=K I: Separately Excited DC Motor Series DC MotorDocument2 pagesT=K ϕ I E E I R N= E K ϕ T=K I: Separately Excited DC Motor Series DC Motormansoor.ahmed100No ratings yet

- Electrical Machines I: Week 13: Ward Leonard Speed Control and DC Motor BrakingDocument15 pagesElectrical Machines I: Week 13: Ward Leonard Speed Control and DC Motor Brakingmansoor.ahmed100No ratings yet

- 1Document18 pages1Mansoor AhmedNo ratings yet

- Notice: All The Images in This VTP LAB Are Used For Demonstration Only, You Will See Slightly Different Images in The Real CCNA ExamDocument14 pagesNotice: All The Images in This VTP LAB Are Used For Demonstration Only, You Will See Slightly Different Images in The Real CCNA Exammansoor.ahmed100No ratings yet

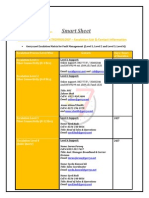

- Smart Sheet: GERRYS INFORMATION TECHNOLOGY - Escalation List & Contact InformationDocument1 pageSmart Sheet: GERRYS INFORMATION TECHNOLOGY - Escalation List & Contact Informationmansoor.ahmed100No ratings yet

- 3103 WorkbookDocument91 pages3103 Workbookmansoor.ahmed100No ratings yet

- PDC PmphandbookDocument0 pagesPDC PmphandbookAhmed FoudaNo ratings yet

- AtenolDocument6 pagesAtenolmansoor.ahmed100No ratings yet

- MS Electrical Engineering at DHA Suffa UniversityDocument34 pagesMS Electrical Engineering at DHA Suffa Universitymansoor.ahmed100No ratings yet

- Lab 3Document5 pagesLab 3mansoor.ahmed100No ratings yet

- Practice Problem 2Document2 pagesPractice Problem 2mansoor.ahmed100No ratings yet

- Practice Problem 2 - SolutionDocument14 pagesPractice Problem 2 - Solutionmansoor.ahmed100No ratings yet

- SUSE Linux 11 Administration WorkbookDocument153 pagesSUSE Linux 11 Administration Workbookmansoor.ahmed100No ratings yet

- LogitechDocument11 pagesLogitechmansoor.ahmed100No ratings yet

- Lotus Notes Client Version 8.5Document4 pagesLotus Notes Client Version 8.5mansoor.ahmed100No ratings yet

- Anomaly in Manet2Document14 pagesAnomaly in Manet2mansoor.ahmed100No ratings yet

- Research Assignment - DetailsDocument2 pagesResearch Assignment - Detailsmansoor.ahmed100No ratings yet

- H.M RashidDocument10 pagesH.M Rashidmansoor.ahmed100No ratings yet

- Mobile Ad-Hoc and Sensor NetworksDocument27 pagesMobile Ad-Hoc and Sensor Networksmansoor.ahmed100No ratings yet

- Extrusion Detection System: Problem StatementDocument3 pagesExtrusion Detection System: Problem Statementmansoor.ahmed100No ratings yet

- Extrusion Detection System: Problem StatementDocument3 pagesExtrusion Detection System: Problem Statementmansoor.ahmed100No ratings yet

- Paper Title (Use Style: Paper Title) : Subtitle As Needed (Paper Subtitle)Document3 pagesPaper Title (Use Style: Paper Title) : Subtitle As Needed (Paper Subtitle)mansoor.ahmed100No ratings yet

- Paper Title (Use Style: Paper Title) : Subtitle As Needed (Paper Subtitle)Document3 pagesPaper Title (Use Style: Paper Title) : Subtitle As Needed (Paper Subtitle)mansoor.ahmed100No ratings yet

- Extrusion Detection in Mobile Ad-hoc NetworksDocument8 pagesExtrusion Detection in Mobile Ad-hoc Networksmansoor.ahmed100No ratings yet

- Redhat Linux System Administration Rh133Document284 pagesRedhat Linux System Administration Rh133Adrian Bravo100% (1)

- A Study of Linux File System EvolutionDocument14 pagesA Study of Linux File System EvolutionVictor LyapunovNo ratings yet

- BlueSky Cloud File System Optimizes Enterprise Storage CostsDocument14 pagesBlueSky Cloud File System Optimizes Enterprise Storage Costsmansoor.ahmed100No ratings yet

- VrableDocument28 pagesVrablemansoor.ahmed100No ratings yet

- CMOS Digital Logic Circuits Chapter SummaryDocument56 pagesCMOS Digital Logic Circuits Chapter Summarymansoor.ahmed100No ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5782)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (72)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- HW3Document5 pagesHW3AlexonNo ratings yet

- N153Document5 pagesN153Yosef TesefahunNo ratings yet

- Pi - Ygw - 1.8.0.1 3Document97 pagesPi - Ygw - 1.8.0.1 3Arsa Arka GutomoNo ratings yet

- Docu92278 PowerPath For Windows 6.4 and Minor Releases Release NotesDocument24 pagesDocu92278 PowerPath For Windows 6.4 and Minor Releases Release NotesNAGARJUNA100% (1)

- How To Uninstall TuneUp UtilitiesDocument7 pagesHow To Uninstall TuneUp UtilitiesHewolf08No ratings yet

- Spesifikasi Netbook Gateway Lt21 WinxpDocument9 pagesSpesifikasi Netbook Gateway Lt21 WinxpKuro QuickNo ratings yet

- LM MODBUS USER INSTRUCTIONS PARAMETER READING AND WRITING GUIDEDocument2 pagesLM MODBUS USER INSTRUCTIONS PARAMETER READING AND WRITING GUIDEMarcos Luiz AlvesNo ratings yet

- Lecture 4Document40 pagesLecture 4latest moviesNo ratings yet

- CA Harvest Software Change Manager V14.0.2 Compatibility MatrixDocument3 pagesCA Harvest Software Change Manager V14.0.2 Compatibility Matrixalex pirelaNo ratings yet

- Redis Enterprise Overview: HA, DR, Performance at ScaleDocument35 pagesRedis Enterprise Overview: HA, DR, Performance at Scaleupendar reddy MalluNo ratings yet

- Introduction To Computer Administration: Mcgraw-Hill Technology EducationDocument33 pagesIntroduction To Computer Administration: Mcgraw-Hill Technology EducationZubairNo ratings yet

- PDSN Daily Surveillance Fault Rectification Ver 1.0Document5 pagesPDSN Daily Surveillance Fault Rectification Ver 1.0HM KattimaniNo ratings yet

- Creative Computing Janfeb78Document152 pagesCreative Computing Janfeb78khk007No ratings yet

- Ip Vshape Vmware With Eternus em enDocument97 pagesIp Vshape Vmware With Eternus em enYASO OMANo ratings yet

- Application Layer Functions and ProtocolsDocument24 pagesApplication Layer Functions and ProtocolsAshutosh SaraswatNo ratings yet

- Thinkpad P15 Gen 2: 20Yq002GidDocument2 pagesThinkpad P15 Gen 2: 20Yq002GidTEUKU RACHMATTRA ARVISANo ratings yet

- Internet of Things PrimeDocument3 pagesInternet of Things Primemohamed yasinNo ratings yet

- (123doc) - Nc2000-System-Operation-Manual-Vol4Document290 pages(123doc) - Nc2000-System-Operation-Manual-Vol4Mạnh Toàn NguyễnNo ratings yet

- HITACHI HDF-8040E 8.4" Digital Photo Frame With NXT Stereo Speaker Instructions ManualDocument50 pagesHITACHI HDF-8040E 8.4" Digital Photo Frame With NXT Stereo Speaker Instructions ManualCarlos PugaNo ratings yet

- GATE Questions - OS - CPU SchedulingDocument8 pagesGATE Questions - OS - CPU SchedulingdsmariNo ratings yet

- F15045Document38 pagesF15045tomNo ratings yet

- Patch Analyst 5 - ArcGIS 9Document2 pagesPatch Analyst 5 - ArcGIS 9Wahyu Sugiono100% (1)

- Ram RomDocument42 pagesRam Rommohit mishraNo ratings yet

- OMEGA Masternode Setup GuideDocument6 pagesOMEGA Masternode Setup Guideugurugur1982No ratings yet

- Carspot - Dealership Wordpress Classified React Native App: Preparation and InstallationDocument13 pagesCarspot - Dealership Wordpress Classified React Native App: Preparation and InstallationJin MingNo ratings yet

- Tsung - ManualDocument108 pagesTsung - ManualstrokenfilledNo ratings yet

- Quiz PrelimsDocument13 pagesQuiz PrelimsViswanathan SNo ratings yet

- P01 RTLinuxDocument26 pagesP01 RTLinuxSudheer Reddy100% (1)

- Printix's Print Solution For Microsoft Azure AD Now Available On Microsoft's AppSourceDocument3 pagesPrintix's Print Solution For Microsoft Azure AD Now Available On Microsoft's AppSourcePR.comNo ratings yet

- DS 2CD2043G0 I20190403aawrd03410072 - 20200522231311Document37 pagesDS 2CD2043G0 I20190403aawrd03410072 - 20200522231311revanth kumarNo ratings yet