
1. VALIDITY, RELIABILITY & PRACTICALITY

Prof. Jonathan Magdalena

2. QUALITIES OF MEASUREMENT DEVICES

Validity

Does it measure what it is supposed to measure?

Reliability

How consistent is the measurement?

Objectivity

Do independent scorers agree?

Practicality

Is it easy to construct, administer, score and interpret?

3. VALIDITY

Validity refers to whether or not a test measures what it intends to measure.

A test with high validity has items closely linked to the test's intended focus. A test with poor validity does not measure the content and competencies it ought to.

4. VALIDITY - Kinds of Validity

Content: related to objectives and their sampling.

Construct: referring to the theory underlying the target.

Criterion: related to concrete criteria in the real world. It can be concurrent or predictive.

Concurrent: correlating highly with another measure already validated.

Predictive: capable of anticipating some later measure.

Face: related to the test's overall appearance.

5. 1. CONTENT VALIDITY

Content validity refers to the connections between the test items and the subject-related tasks. The test should evaluate only the content related to the field of study in a manner sufficiently representative, relevant, and comprehensible.

6. 2. CONSTRUCT VALIDITY

It implies using the construct (concepts, ideas, notions) in accordance with the state of the art in the field. Construct validity seeks agreement between updated subject-matter theories and the specific measuring components of the test.

For example, a test of intelligence nowadays must include measures of multiple intelligences, rather than just logical-mathematical and linguistic ability measures.

7. 3. CRITERION-RELATED VALIDITY

Also referred to as instrumental validity, it is used to demonstrate the accuracy of a measure or procedure by comparing it with another process or method which has been demonstrated to be valid.

For example, imagine a hands-on driving test has been proved to be an accurate test of driving skills. A written test can then be validated using a criterion-related strategy in which its results are compared with those of the hands-on driving test.

8. 4. CONCURRENT VALIDITY

Concurrent validity uses statistical methods of correlation to other measures.

Examinees who are known to be either masters or non-masters on the content measured are identified before the test is administered. Once the tests have been scored, the relationship between the examinees' status as either masters or non-masters and their performance on the test (i.e., pass or fail) is estimated.
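One way to estimate the relationship described above is the phi coefficient, a correlation for two yes/no variables. The slides do not name a specific statistic, so the choice of phi, the function name `phi_coefficient`, and the data below are assumptions made for illustration only.

```python
from math import sqrt


def phi_coefficient(is_master, passed):
    """Phi coefficient between two binary variables given as lists of booleans."""
    pairs = list(zip(is_master, passed))
    a = sum(1 for m, p in pairs if m and p)          # masters who passed
    b = sum(1 for m, p in pairs if m and not p)      # masters who failed
    c = sum(1 for m, p in pairs if not m and p)      # non-masters who passed
    d = sum(1 for m, p in pairs if not m and not p)  # non-masters who failed
    denom = sqrt((a + b) * (c + d) * (a + c) * (b + d))
    if denom == 0:
        raise ValueError("phi is undefined when a row or column of the table is empty")
    return (a * d - b * c) / denom


# Hypothetical data: mastery status known beforehand, pass/fail from the new test.
is_master = [True, True, True, True, False, False, False, False]
passed = [True, True, True, False, False, False, False, True]

phi = phi_coefficient(is_master, passed)
print(f"phi = {phi:.2f}")
```

A value near 1 would suggest that passing the test goes together with known mastery; a value near 0 would suggest the test does not separate the two groups.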

9. 5. PREDICTIVE VALIDITY

Predictive validity estimates the relationship of test scores to an examinee's future performance as a master or non-master. Predictive validity considers the question, "How well does the test predict examinees' future status as masters or non-masters?"

For this type of validity, the correlation that is computed is based on the test results and the examinees' later performance. This type of validity is especially useful for test purposes such as selection or admissions.
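The correlation between test results and later performance can be sketched with a Pearson coefficient. The slides do not say which correlation is intended, so Pearson's r is an assumption here, as are the helper name `pearson_r` and the admission scores and later grades, which are made up.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical data: admission test scores and the same examinees' later grades.
admission_scores = [50, 60, 70, 80, 90]
later_grades = [2.1, 2.4, 3.2, 3.1, 3.9]

r = pearson_r(admission_scores, later_grades)
print(f"predictive validity coefficient r = {r:.2f}")
```

The same helper could be applied to any two sets of scores from the same examinees, for example a first and a second administration of one test.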

10. 6. FACE VALIDITY

Face validity is determined by a review of the items and not through the use of statistical analyses. Unlike content validity, face validity is not investigated through formal procedures. Instead, anyone who looks over the test, including examinees, may develop an informal opinion as to whether or not the test is measuring what it is supposed to measure.

11. QUALITIES OF MEASUREMENT DEVICES

Validity

Does it measure what it is supposed to measure?

Reliability

How consistent is the measurement?

Objectivity

Do independent scorers agree?

Practicality

Is it easy to construct, administer, score and interpret?

12. RELIABILITY

Reliability is the extent to which an experiment, test, or any measuring procedure shows the same result on repeated trials.

For researchers, four key types of reliability are:

13. RELIABILITY

Equivalency: related to the co-occurrence of two items.

Stability: related to time consistency.

Internal: related to the instruments.

Interrater: related to the examiners' criteria.

14. 1. EQUIVALENCY RELIABILITY

Equivalency reliability is the extent to which two items measure identical concepts at an identical level of difficulty. Equivalency reliability is determined by relating two sets of test scores to one another to highlight the degree of relationship or association.

15. 2. STABILITY RELIABILITY

Stability reliability (sometimes called test-retest reliability) is the agreement of measuring instruments over time. To determine stability, a measure or test is repeated on the same subjects at a future date. Results are compared and correlated with the initial test to give a measure of stability. Examples of instruments with high stability reliability include thermometers, compasses, and measuring cups.

16. 3. INTERNAL CONSISTENCY

Internal consistency is the extent to which tests or procedures assess the same characteristic, skill or quality. It is a measure of the precision between the measuring instruments used in a study. This type of reliability often helps researchers interpret data and predict the value of scores and the limits of the relationship among variables.
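A common index of internal consistency is Cronbach's alpha. The slides do not mention it, so treat the sketch below as one possible way to put a number on the idea rather than the method the lecture intends. The function name `cronbach_alpha` and the item scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a score table: one row per examinee, one column per item."""
    if len(scores) < 2 or len(scores[0]) < 2:
        raise ValueError("need at least 2 examinees and 2 items")
    k = len(scores[0])                 # number of items
    items = list(zip(*scores))         # transpose: one tuple of scores per item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical data: 5 examinees answering 3 items, each scored from 1 to 5.
scores = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 2],
    [3, 3, 3],
]

alpha = cronbach_alpha(scores)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items tend to rise and fall together across examinees, which is what one would expect if they assess the same characteristic.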

17. 4. INTERRATER RELIABILITY

Interrater reliability is the extent to which two or more individuals (coders or raters) agree.

For example, suppose two or more teachers use a rating scale to rate students' oral responses in an interview (1 being most negative, 5 being most positive). If one teacher gives a "1" to a student response while another gives a "5," the interrater reliability is clearly low.
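Agreement between two raters can be quantified with Cohen's kappa, which corrects the raw agreement rate for agreement expected by chance. Kappa is not named in the slides, so it is an assumption here, and the function name `cohen_kappa` and the ratings are invented for illustration. This unweighted form treats the scale points as unordered categories, so a "1" versus "5" disagreement counts the same as a "4" versus "5" one.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters scoring the same responses."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of responses")
    n = len(rater_a)
    # Proportion of responses on which the raters gave the same score.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's score frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    expected = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (observed - expected) / (1 - expected)


# Hypothetical data: two teachers rating the same 8 oral responses on a 1-5 scale.
teacher_a = [1, 2, 3, 4, 5, 3, 2, 4]
teacher_b = [1, 2, 3, 4, 5, 3, 3, 5]

kappa = cohen_kappa(teacher_a, teacher_b)
print(f"Cohen's kappa = {kappa:.2f}")
```

For ordered scales like the one in the example, a weighted variant of kappa that penalizes large disagreements more heavily may be a better fit.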

18. SOURCES OF ERROR

Examinee (is a human being)

Examiner (is a human being)

Examination (is designed by and for human beings)

19. RELATIONSHIP BETWEEN VALIDITY & RELIABILITY

Validity and reliability are closely related.

A test cannot be considered valid unless the measurements resulting from it are reliable.

The reverse does not hold: a test can produce reliable results without being valid.

20. BACKWASH EFFECT

The backwash (also known as washback) effect is related to the potentially positive and negative effects of test design and content on the form and content of English language training courseware.

21. THANKS

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- Pharmacological Treatment SchizDocument54 pagesPharmacological Treatment SchizFree Escort ServiceNo ratings yet

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Mechanism For The Ability of 5Document2 pagesMechanism For The Ability of 5Free Escort ServiceNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- My Resume in Word Format1679Document11 pagesMy Resume in Word Format1679Free Escort ServiceNo ratings yet

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Worldwide Impacts of SchizophreniaDocument10 pagesThe Worldwide Impacts of SchizophreniaMadusha PereraNo ratings yet

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Students Prayer For Exam 2014Document2 pagesStudents Prayer For Exam 2014Free Escort ServiceNo ratings yet

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Inflammation in Schizophrenia and DepressionDocument9 pagesInflammation in Schizophrenia and DepressionFree Escort ServiceNo ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Students Prayer For Exam 2014Document2 pagesStudents Prayer For Exam 2014Free Escort ServiceNo ratings yet

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- ValidityDocument3 pagesValidityFree Escort ServiceNo ratings yet

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- HerbaceutclDocument1 pageHerbaceutclFree Escort ServiceNo ratings yet

- SchizEdDay Freudenreich - PpsDocument29 pagesSchizEdDay Freudenreich - PpsFree Escort ServiceNo ratings yet

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- Nutraceuticals: Let Food Be Your MedicineDocument32 pagesNutraceuticals: Let Food Be Your MedicineFree Escort ServiceNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Role of Nutraceuticals in Health Promotion: Swati Chaturvedi, P. K. Sharma, Vipin Kumar Garg, Mayank BansalDocument7 pagesRole of Nutraceuticals in Health Promotion: Swati Chaturvedi, P. K. Sharma, Vipin Kumar Garg, Mayank BansalFree Escort ServiceNo ratings yet

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

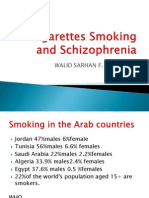

- Walid Sarhan F. R. C. PsychDocument46 pagesWalid Sarhan F. R. C. PsychFree Escort ServiceNo ratings yet

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Reliablity Validity of Research Tools 1Document19 pagesReliablity Validity of Research Tools 1Free Escort Service100% (1)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- Reliability and ValidityDocument15 pagesReliability and Validityapi-260339450No ratings yet

- Reliablity Validity of Research Tools 1Document19 pagesReliablity Validity of Research Tools 1Free Escort Service100% (1)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Presentation 2Document29 pagesPresentation 2Free Escort ServiceNo ratings yet

- EulaDocument3 pagesEulaBrandon YorkNo ratings yet

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- ResumeDocument3 pagesResumeFree Escort ServiceNo ratings yet

- Quality Is Built in by Design, Not Tested inDocument1 pageQuality Is Built in by Design, Not Tested inFree Escort ServiceNo ratings yet

- Shubh AmDocument1 pageShubh AmFree Escort ServiceNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- New Pharmacological Approaches To The Treatment of SchizophreniaDocument1 pageNew Pharmacological Approaches To The Treatment of SchizophreniaFree Escort ServiceNo ratings yet

- MBKDocument12 pagesMBKFree Escort ServiceNo ratings yet

- Optimizing Quality by Design in Bulk Powder & Solid Dosage: Smt. Bhoyar College of Pharmacy, KamteeDocument3 pagesOptimizing Quality by Design in Bulk Powder & Solid Dosage: Smt. Bhoyar College of Pharmacy, KamteeFree Escort ServiceNo ratings yet

- 2415 14725 4 PBDocument13 pages2415 14725 4 PBFree Escort ServiceNo ratings yet

- QBD Definition AnvvvvDocument4 pagesQBD Definition AnvvvvFree Escort ServiceNo ratings yet

- Nutraceutical Role in Health CareDocument1 pageNutraceutical Role in Health CareFree Escort ServiceNo ratings yet

- Quality by Design On PharmacovigilanceDocument1 pageQuality by Design On PharmacovigilanceFree Escort ServiceNo ratings yet

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- ABSTRACT (QBD: A Holistic Concept of Building Quality in Pharmaceuticals)Document1 pageABSTRACT (QBD: A Holistic Concept of Building Quality in Pharmaceuticals)Free Escort ServiceNo ratings yet

- M.Pharm Dissertation Protocol: Formulation and Evaluation of Antihypertensive Orodispersible TabletsDocument7 pagesM.Pharm Dissertation Protocol: Formulation and Evaluation of Antihypertensive Orodispersible TabletsFree Escort ServiceNo ratings yet

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)