An Automatic Singing Skill Evaluation Method for Unknown Melodies

Using Pitch Interval Accuracy and Vibrato Features

Tomoyasu Nakano

Graduate School of Library,

Information and Media Studies,

University of Tsukuba, Japan

nakano@slis.tsukuba.ac.jp

Masataka Goto

National Institute of

Advanced Industrial Science

and Technology (AIST), Japan

m.goto@aist.go.jp

Yuzuru Hiraga

Graduate School of Library,

Information and Media Studies,

University of Tsukuba, Japan

hiraga@slis.tsukuba.ac.jp

Abstract

This paper presents a method of evaluating singing skills that does not require score information of the sung melody. This requires an approach that is different from existing systems, such as those currently used for Karaoke systems. Previous research on singing evaluation has focused on analyzing the characteristics of the singing voice, but was not aimed at developing an automatic evaluation method. The approach presented in this study uses pitch interval accuracy and vibrato as acoustic features which are independent of specific characteristics of the singer or melody. The approach was tested by a 2-class (good/poor) classification test with 600 song sequences, and achieved an average classification rate of 83.5%.

Index Terms: singing skill, automatic evaluation, unknown

melodies.

1. Introduction

The aim of this study is to explore a method of automatic evaluation of singing skills without score information. Our interest lies in identifying the criteria that human subjects use in judging the quality of singing for unknown melodies, using acoustic features which are independent of specific characteristics of the singer or melody. Such evaluation systems can be useful tools for improving singing skills, and can also be applied to broadening the scope of music information retrieval and singing voice synthesis.

Previous work related to singing skills includes studies based on a control model of the fundamental frequency (F0) trajectory [1] and on general characteristics [2, 3], as well as work on automatic discrimination of singing and speaking voices [4] and on acoustic differences between trained and untrained singers' voices [5, 6, 7]. None of these works has gone as far as presenting an automatic evaluation method.

This paper presents a singing skill evaluation scheme based on pitch interval accuracy and vibrato, which are regarded as features that function independently of the individual characteristics of the singer or melody. To test the validity of these features, an experiment of automatic evaluation of singing performance by a 2-class classification (good/poor) was conducted.

The following sections describe our approach and the experimental results of its evaluation. Section 2 presents a discussion of the features. Section 3 describes the classification experiment and its evaluation. Section 4 concludes the paper, with a discussion of directions for future work.

2. Acoustic Features for Automatic Singing

Skill Evaluation

Human subjects can be seen to evaluate singing skills consistently for unknown melodies [8]. This suggests that their evaluation utilizes easily discernible features which are independent of the particular singer or melody. Within the scope of this paper, we propose pitch interval accuracy and vibrato as such feature candidates.

The proposed features are numbered and shown in boxed form, like $\boxed{n}$. Throughout the paper, the singing samples are solo vocal and are digital recordings of 16 bit / 16 kHz / monaural.

2.1. Estimating Pitch Interval Accuracy

The pitch interval accuracy is judged by the fitting of the F0 (fundamental frequency) trajectory to a semitone (100 cent) width grid (corresponding to equal temperament in the Western music tradition). Hereafter, pitch/frequency values will be referred to in cents, which are log-scale frequency values. The cent value $f_{\mathrm{cent}}$ of frequency $f_{\mathrm{Hz}}$ is given as follows (middle C corresponds to 4800 cent).

$$ f_{\mathrm{cent}} = 1200 \log_2 \frac{f_{\mathrm{Hz}}}{440 \times 2^{\frac{3}{12} - 5}} \tag{1} $$
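As a minimal sketch of the conversion in Eq. (1), the following Python snippet maps Hz to cents and back. The function names `hz_to_cent` and `cent_to_hz` and the constant `REF_HZ` are illustrative and not taken from the paper.

```python
import math

# Reference frequency in Eq. (1): 440 * 2^(3/12 - 5), about 16.35 Hz, mapped to 0 cent.
REF_HZ = 440.0 * 2.0 ** (3.0 / 12.0 - 5.0)


def hz_to_cent(f_hz: float) -> float:
    """Convert a frequency in Hz to cents following Eq. (1)."""
    if f_hz <= 0.0:
        raise ValueError("frequency must be positive")
    return 1200.0 * math.log2(f_hz / REF_HZ)


def cent_to_hz(f_cent: float) -> float:
    """Inverse of hz_to_cent."""
    return REF_HZ * 2.0 ** (f_cent / 1200.0)


if __name__ == "__main__":
    middle_c_hz = 440.0 * 2.0 ** (-9.0 / 12.0)  # nine semitones below A4
    print(round(hz_to_cent(middle_c_hz)))       # middle C, 4800 cent as stated in the text
    print(round(hz_to_cent(440.0)))             # A4, nine semitones above middle C: 5700
```

With this scale, one semitone is 100 cent and one octave is 1200 cent, so the semitone grid discussed next falls on multiples of 100 plus a constant offset.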

Suppose the semitone grid borders are set at multiples of 100. Then a particular pitch $x$ has an offset of $F$ ($0 \le F < 100$) from its nearest lower border. If $F$ has a constant value throughout the song sequence, then the singing can be seen to have a good fitting to the semitone grid.

Let $p(x; F)$ be a Gaussian comb filter for pitch $x$ and offset $F$ defined as follows, where $\omega_i$ is a weight factor (currently set to 1), and $\sigma_i$ (= 16 cent) is the standard deviation of the Gaussian distribution.

$$ p(x; F) = \sum_{i=0}^{\infty} \frac{\omega_i}{\sqrt{2\pi}\,\sigma_i} \exp\left\{ -\frac{(x - F - 100 i)^2}{2 \sigma_i^2} \right\} \tag{2} $$
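The comb filter of Eq. (2) can be sketched as follows, assuming a constant weight of 1 and a constant standard deviation of 16 cent for every tooth, as stated in the text. The truncation of the infinite sum to `n_teeth` terms and the name `comb_filter` are assumptions made for illustration only.

```python
import math

SIGMA = 16.0   # standard deviation of each Gaussian tooth, in cents (from the text)
WEIGHT = 1.0   # weight factor, currently set to 1 (from the text)


def comb_filter(x: float, offset: float, n_teeth: int = 128) -> float:
    """Evaluate p(x; F): Gaussians centred at F, F + 100, F + 200, ... cents.

    The sum is truncated to n_teeth teeth (an assumption); 128 teeth cover
    pitches up to 12700 cent, well beyond the singing range.
    """
    norm = WEIGHT / (math.sqrt(2.0 * math.pi) * SIGMA)
    total = 0.0
    for i in range(n_teeth):
        d = x - offset - 100.0 * i
        total += norm * math.exp(-(d * d) / (2.0 * SIGMA * SIGMA))
    return total


if __name__ == "__main__":
    # A pitch of 5619 cent sits exactly on the grid with offset F = 19,
    # and half-way between two teeth of the grid with offset F = 69.
    print(comb_filter(5619.0, 19.0) > comb_filter(5619.0, 69.0))  # True
```

Because the teeth are 100 cent apart and only 16 cent wide, the filter responds strongly only when the pitch lies close to one of the grid lines for the given offset.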

Using this as a filter function, the semitone stability $P_g(F, t)$ at time $t$ and width $T_g$ is defined as follows, where $F_{F0}(t)$ denotes the F0 and $P_{F0}(t)$ denotes the F0 possibility at time $t$, which are estimated every 10 msec using the method of Goto et al. [9].

$$ P_g(F, t) = \int_{t - T_g}^{t} p(F_{F0}(\tau); F)\, P_{F0}(\tau)\, d\tau \tag{3} $$

If all the F0 values are quantized by units of 100 cent, $P_g(F, t)$ will have a single sharp peak at grid frequency $F_g$. The sharpness of the $P_g(F, t)$ distribution thus indicates the degree of deviation of the singer from any semitone grid.

INTERSPEECH 2006 - ICSLP, September 17-21, Pittsburgh, Pennsylvania

Figure 1 shows the calculation process and example results of $P_g(F, t)$. The top figure shows the original F0 trajectory. The second figure shows the result of smoothing by an FIR lowpass filter with a 5 Hz cutoff frequency (see footnote 1) and then removing the silent sections. The purpose of lowpass filtering is to remove the F0 fluctuations (overshoot, vibrato, and preparation) of the singing voice [1]. $P_g(F, t)$ is calculated with a 200-sample (2 sec) rectangular window shifted by 5 samples. The bottom left figures show example fittings to grids with $F = 19$ and $F = 78$, which are accumulated as the $P_g(F, t)$ values shown to the right.
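The windowed accumulation described above can be sketched in Python as a discrete version of the semitone stability integral. The sketch assumes the F0 trajectory has already been lowpass filtered and stripped of silent frames, evaluates the offset F on an integer grid of 0 to 99 cent, and approximates the comb by the three teeth nearest to each pitch; these choices and all names are illustrative assumptions.

```python
import math

SIGMA = 16.0  # cents, from the text


def comb(x, offset):
    """Gaussian comb value for pitch x and grid offset, using the three nearest teeth."""
    d = (x - offset + 50.0) % 100.0 - 50.0  # signed distance to nearest tooth, in [-50, 50)
    norm = 1.0 / (math.sqrt(2.0 * math.pi) * SIGMA)
    return norm * sum(
        math.exp(-((d + 100.0 * k) ** 2) / (2.0 * SIGMA ** 2)) for k in (-1, 0, 1)
    )


def semitone_stability(f0_cent, f0_weight, window=200, shift=5, frame_sec=0.01):
    """Return one row per window position; row[F] approximates P_g(F, t) for F = 0..99.

    f0_cent   : F0 values in cents, one per 10 msec frame
    f0_weight : F0 possibility per frame (same length as f0_cent)
    """
    rows = []
    for end in range(window, len(f0_cent) + 1, shift):
        pitches = f0_cent[end - window:end]
        weights = f0_weight[end - window:end]
        row = []
        for offset in range(100):
            acc = sum(comb(x, float(offset)) * w for x, w in zip(pitches, weights))
            row.append(acc * frame_sec)  # rectangle-rule approximation of the integral
        rows.append(row)
    return rows


if __name__ == "__main__":
    # A perfectly steady note at 5519 cent peaks at offset F = 19 in every window.
    steady = [5519.0] * 60
    rows = semitone_stability(steady, [1.0] * 60, window=50, shift=5)
    print([max(range(100), key=lambda f: row[f]) for row in rows])  # [19, 19, 19]
```

Averaging the rows over time would give a discrete counterpart of the long-term average used for the features that follow.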

Figure 2 shows examples of $P_g(F, t)$ and its long-term average $g(F)$. When the singing is good, $P_g(F, t)$, and consequently $g(F)$, always has a single sharp peak. The sharpness of $g(F)$ can therefore be used as a measure of pitch interval accuracy. Its second moment $M$ (defined as follows) is used as feature $\boxed{1}$:

$$ M = \int_{F_g - 50}^{F_g + 50} (F_g - F)^2\, g(F)\, dF \tag{4} $$

where $F_g$ is the $F$ value for maximum $g(F)$, i.e.:

$$ F_g = \operatorname*{argmax}_{F}\; g(F) \tag{5} $$
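A discrete sketch of Eqs. (4) and (5) is given below. It assumes that $g(F)$ is sampled at the integer offsets 0 to 99 cent and that offsets wrap around modulo 100 (since $F$ is an offset within one semitone); both points, and the function names, are assumptions rather than details given in the paper.

```python
def peak_offset(g):
    """Eq. (5): the offset F_g at which g(F) is largest."""
    return max(range(len(g)), key=lambda f: g[f])


def second_moment(g):
    """Eq. (4): second moment of g(F) around its peak, over F_g - 50 .. F_g + 50.

    g is a list of 100 values sampled at integer cent offsets. Indices wrap
    modulo 100, so the range covers each offset exactly once.
    """
    n = len(g)
    fg = peak_offset(g)
    return sum((d * d) * g[(fg + d) % n] for d in range(-50, 50))


if __name__ == "__main__":
    sharp = [0.0] * 100
    sharp[18], sharp[19], sharp[20] = 0.25, 0.5, 0.25
    flat = [0.01] * 100
    print(second_moment(sharp))                          # 0.5
    print(second_moment(sharp) < second_moment(flat))    # True
```

A narrow peak gives a small $M$, while a flat $g(F)$ gives a large one, which matches the intended reading of the feature as a sharpness measure.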

The second feature $\boxed{2}$ is taken as the slope $b_g$ of the linear regression line of function $G(F)$:

$$ G(F) = \frac{g(F_g + F) + g(F_g - F)}{2} \tag{6} $$

$b_g$ is obtained by minimizing

$$ \mathrm{err}_g^2 = \int_{0}^{50} \bigl( G(F) - (a_g + b_g F) \bigr)^2\, dF \tag{7} $$

over $a_g$ and $b_g$.
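The regression slope of Eqs. (6) and (7) can be sketched with an ordinary least-squares fit over the integer offsets 0 to 50. Replacing the integral in Eq. (7) by a uniform sum, wrapping offsets modulo 100, and the function names are assumptions of this sketch.

```python
def folded_profile(g, fg):
    """Eq. (6): average of g on both sides of the peak F_g, for F = 0..50."""
    n = len(g)
    return [(g[(fg + f) % n] + g[(fg - f) % n]) / 2.0 for f in range(51)]


def regression_slope(values):
    """Least-squares slope b of values[F] ~ a + b * F for F = 0, 1, 2, ..."""
    n = len(values)
    mean_x = (n - 1) / 2.0
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    return sxy / sxx


if __name__ == "__main__":
    # Triangular g(F) peaking at F = 19 and falling linearly to 0 at 50 cent away.
    g = [1.0 - abs((f - 19 + 50) % 100 - 50) / 50.0 for f in range(100)]
    fg = max(range(100), key=lambda f: g[f])
    print(round(regression_slope(folded_profile(g, fg)), 4))  # -0.02
```

Under this formulation a sharper peak around $F_g$ yields a more strongly negative slope, and a flat $g(F)$ yields a slope near zero.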

2.2. Estimating Vibrato Sections

Vibrato (deliberate, periodic fluctuation of F0) is considered an important singing technique, and so is incorporated within our scheme. Our vibrato detection scheme imposes restrictions on the vibrato parameters of rate (the number of vibrations per second) and extent (the amplitude of vibration from an average pitch on the vibrato section). Restrictions of rate and extent are based on previous research [2, 10], with the rate range set at 5 to 8 Hz and the extent range at 30 to 150 cent.

The basic idea is to detect vibrato by using the short-term Fourier transform (STFT). In our current implementation, an STFT with a 32-sample (320 msec) Hanning window is calculated by using the Fast Fourier Transform (FFT). The STFT is applied to $\Delta F_{F0}(t)$, i.e. the first-order finite difference of $F_{F0}(t)$. The power spectrum $X(f, t)$ can be expected to have a sharp peak where $f$ corresponds to the vibrato rate. This is expressed by the power $\Psi_v(t)$ and the sharpness $S_v(t)$ defined as:

$$ \Psi_v(t) = \int_{F_L}^{F_H} \hat{X}(f, t)\, df \tag{8} $$

$$ S_v(t) = \int_{F_L}^{F_H} \left| \frac{\partial \hat{X}(f, t)}{\partial f} \right| df \tag{9} $$

Footnote 1: We avoid unnatural smoothing by ignoring silence sections and leaps of F0 transitions wider than a 300 cent threshold.

[Figure 1: Overview of calculation method of Pg(F, t). Panels: original F0 trajectory; F0 trajectory without silent sections after lowpass filtering; comb filter p(x; F) fitted with F = 19 cent and F = 78 cent over a shifted window; semitone stability Pg(F, t). Axes: pitch [cent] against time [x 10 msec].]

[Figure 2: Examples of Pg(F, t) and its long-term average g(F) in good/poor singing. Panels: Pg(F, t) in good singing and in poor singing, each with its g(F). Axes: offset F from 0 to 100 [cent] against time [frames].]

where $(F_L, F_H)$ is the range of vibrato rate, and $\hat{X}(f, t)$ is $X(f, t)$ normalized over $f$:

$$ \hat{X}(f, t) = \frac{X(f, t)}{\int X(f, t)\, df} \tag{10} $$

Using these, the vibrato likeliness $P_v(t)$ is defined as

$$ P_v(t) = S_v(t)\, \Psi_v(t) \tag{11} $$
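The vibrato likeliness computation can be sketched in plain Python as follows. The 32-frame Hanning window, the 10 msec frame period, and the 5 to 8 Hz band come from the text; the zero-padding to 256 DFT points (to obtain more than one frequency bin inside the band), the one-frame hop, taking the absolute value of the spectral slope for the sharpness, and all function names are assumptions of this sketch, not details confirmed by the paper.

```python
import cmath
import math

FRAME_RATE = 100.0        # F0 frames per second (10 msec period, from the text)
WIN = 32                  # Hanning window length in frames (320 msec, from the text)
NFFT = 256                # zero-padded DFT size (assumption)
F_LOW, F_HIGH = 5.0, 8.0  # vibrato rate range in Hz (from the text)


def hann(n):
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / (n - 1)) for i in range(n)]


def power_spectrum(frame):
    """Power at DFT bins 0..NFFT/2 of a frame that is implicitly zero-padded to NFFT."""
    spec = []
    for k in range(NFFT // 2 + 1):
        acc = sum(v * cmath.exp(-2j * math.pi * k * n / NFFT) for n, v in enumerate(frame))
        spec.append(abs(acc) ** 2)
    return spec


def vibrato_likeliness(f0_cent):
    """Return P_v for each window position over the first difference of F0."""
    delta = [b - a for a, b in zip(f0_cent, f0_cent[1:])]
    window = hann(WIN)
    df = FRAME_RATE / NFFT
    lo, hi = math.ceil(F_LOW / df), math.floor(F_HIGH / df)
    out = []
    for start in range(len(delta) - WIN + 1):
        frame = [d * w for d, w in zip(delta[start:start + WIN], window)]
        spec = power_spectrum(frame)
        total = sum(spec) * df
        if total <= 0.0:           # silent or perfectly flat frame
            out.append(0.0)
            continue
        norm = [s / total for s in spec]                     # normalized spectrum
        power = sum(norm[lo:hi + 1]) * df                    # band power
        sharp = sum(abs(norm[k + 1] - norm[k]) for k in range(lo, hi))  # sharpness
        out.append(sharp * power)
    return out


if __name__ == "__main__":
    vib = [5600.0 + 50.0 * math.sin(2.0 * math.pi * 6.0 * n / FRAME_RATE) for n in range(60)]
    slow = [5600.0 + 50.0 * math.sin(2.0 * math.pi * 2.0 * n / FRAME_RATE) for n in range(60)]
    print(max(vibrato_likeliness(vib)) > max(vibrato_likeliness(slow)))  # True
```

Note that the normalization makes the value independent of the oscillation amplitude, which is presumably why the text pairs the likeliness with separate rate and extent restrictions.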

A section is judged as a vibrato section when it has high values

of Pv(t), and FF0(t) crosses its average value more than 5 times.

The vibrato rate and extent are obtained by

$$ \frac{1}{\mathrm{rate}} = \frac{1}{N} \sum_{n=1}^{N} R_n \tag{12} $$

$$ \mathrm{extent} = \frac{1}{2N} \sum_{n=1}^{N} E_n \tag{13} $$


[Figure 3: Extraction parameters for vibrato detection. An F0 trajectory [cent] against time [x 10 msec] oscillating around its average, annotated with the intervals R1 to R7 and the extents E1 to E7.]

[Figure 4: An example of vibrato detection. Top panel: F0 trajectory [cent] with estimated vibrato sections; bottom panel: vibrato likeliness Pv(t); horizontal axes: time [x 10 msec].]

where $R_n$ [sec] and $E_n$ [cent] are illustrated in Figure 3. The total length of the vibrato section is used as feature $\boxed{3}$.
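Given measured values of $R_n$ and $E_n$ for one candidate section, the rate and extent formulas and the range restrictions stated earlier can be sketched as below. How $R_n$ and $E_n$ are extracted from the trajectory is not covered here; the function names are illustrative assumptions.

```python
def vibrato_rate_and_extent(intervals_sec, swings_cent):
    """Return (rate in Hz, extent in cent) from R_n [sec] and E_n [cent]."""
    n = len(intervals_sec)
    if n == 0 or n != len(swings_cent):
        raise ValueError("need the same non-zero number of R_n and E_n values")
    mean_interval = sum(intervals_sec) / n     # this is 1 / rate
    rate = 1.0 / mean_interval
    extent = sum(swings_cent) / (2.0 * n)      # half of the mean swing
    return rate, extent


def within_vibrato_restrictions(rate, extent):
    """Range restrictions from the text: 5 to 8 Hz and 30 to 150 cent."""
    return 5.0 <= rate <= 8.0 and 30.0 <= extent <= 150.0


if __name__ == "__main__":
    rate, extent = vibrato_rate_and_extent([0.16] * 7, [100.0] * 7)
    print(round(rate, 2), extent)                      # 6.25 50.0
    print(within_vibrato_restrictions(rate, extent))   # True
```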

The following two functions $P_{v1}$ and $P_{v2}$ are also used as features $\boxed{4}$ and $\boxed{5}$, since they take high values in the presence of vibrato ($T_v$ is the length of the analyzed signal).

$$ P_{v1} = \max_{0 \le t \le T_v} \bigl( P_v(t) \bigr) \tag{14} $$

$$ P_{v2} = \frac{1}{T_v} \int_{0}^{T_v} P_v(t)\, dt \tag{15} $$
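For a vibrato likeliness sequence sampled once per frame, Eqs. (14) and (15) reduce to a maximum and a time average. The sketch below assumes a uniform 10 msec frame period and uses an illustrative function name.

```python
def vibrato_likeliness_features(pv, frame_sec=0.01):
    """Return (P_v1, P_v2): the maximum and the time average of sampled P_v(t)."""
    if not pv:
        raise ValueError("empty likeliness sequence")
    p_v1 = max(pv)
    duration = len(pv) * frame_sec                       # T_v
    p_v2 = sum(p * frame_sec for p in pv) / duration     # rectangle-rule integral / T_v
    return p_v1, p_v2


if __name__ == "__main__":
    p_v1, p_v2 = vibrato_likeliness_features([0.0, 0.1, 0.2, 0.1])
    print(p_v1, round(p_v2, 3))  # 0.2 0.1
```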

3. Classification Experiment

The proposed features $\boxed{1}$ to $\boxed{5}$ have been tested in 2-class classification (good/poor) experiments, using the results of a previous rating experiment by human subjects [8].

3.1. Dataset

The song samples are taken from the AIST Humming Database (AIST-HDB) [11]. The AIST-HDB contains singing voices of 100 subjects who sang melodies of 100 excerpts from 50 songs in the RWC Music Database (Popular Music [12] and Music Genre [13]).

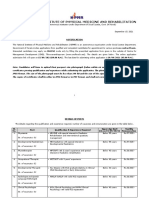

Table 1 shows the singing voice dataset used in the experiment. Our automatic classification experiment used 600 samples by 12 singers, each of whom sang 50 excerpts from 25 songs (either Japanese or English) after listening to each excerpt five times. The 12 singer subjects (IDs in the "name" column) were selected by the criterion of receiving a consistently high rating ("good") or low rating ("poor") in the rating experiment by human subjects, and all their samples were marked good/poor accordingly (given in the "class" column of the table).

Table 1: Dataset for classification experiment.

name   class   language   gender   number of samples
E004   good    English    female   50
E008   good    English    female   50
E017   good    English    male     50
E021   good    English    male     50
J002   good    Japanese   female   50
J054   good    Japanese   male     50
E001   poor    English    female   50
E002   poor    English    female   50
E013   poor    English    male     50
E023   poor    English    male     50
J014   poor    Japanese   female   50
J052   poor    Japanese   male     50

3.2. Experimental Setting

Two experiments with different evaluation criteria were conducted over three dataset settings (male, female, and male-female). In each case, the feature value space was classified using a Support Vector Machine (SVM) as the classifier. The two evaluation criteria are 10-fold cross-validation and leave-one-out cross-validation. The 10-fold cross-validation method uses 9/10 of the samples as the training set and 1/10 as the test set in each trial. The leave-one-out cross-validation, in our context, evaluates one sample as test data, and uses the rest as training data (excluding those with the same singer/melody as the test data).
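The leave-one-out protocol with exclusions can be sketched as a split generator. This sketch reads "same singer/melody" as excluding every training sample that shares either the singer or the melody with the test sample, which is one possible interpretation; the `Sample` record and its field names are hypothetical, and the classifier itself is left out.

```python
from typing import Iterator, List, NamedTuple, Tuple


class Sample(NamedTuple):
    singer: str   # e.g. a singer ID such as "E004"
    melody: str   # hypothetical excerpt identifier
    label: str    # "good" or "poor"


def leave_one_out_splits(samples: List[Sample]) -> Iterator[Tuple[int, List[int]]]:
    """Yield (test index, training indices) for each sample in turn.

    Training samples sharing the singer or the melody with the test sample
    are dropped, so the classifier never sees either during training.
    """
    for test_idx, test in enumerate(samples):
        train_idx = [
            i for i, s in enumerate(samples)
            if s.singer != test.singer and s.melody != test.melody
        ]
        yield test_idx, train_idx


if __name__ == "__main__":
    demo = [Sample(s, m, "good") for s in ("E004", "E001", "E017") for m in ("m1", "m2")]
    for test_idx, train_idx in leave_one_out_splits(demo):
        print(test_idx, train_idx)
```

Excluding both the singer and the melody is what makes the test probe generalization to an unseen voice singing an unseen tune, rather than memorization of either.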

3.3. Results and Discussion

Table 2 and Table 3 show the classification rates, precision rates and recall rates of each class for the 10-fold and the leave-one-out cross-validation respectively. The classification rate ($C$), precision rate ($P_i$) and recall rate ($R_i$) are defined as follows, where $i$ denotes the class of "good" ($i$ = good) or "poor" ($i$ = poor).

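$$ P_i = \frac{\text{samples correctly classified as class } i}{\text{samples classified as class } i} \times 100 \tag{16} $$

$$ R_i = \frac{\text{samples correctly classified as class } i}{\text{samples in class } i} \times 100 \tag{17} $$

$$ C = \frac{\text{samples correctly classified}}{\text{total number of samples}} \times 100 = \frac{R_{\mathrm{good}} + R_{\mathrm{poor}}}{2} \tag{18} $$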
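The three rates can be computed from the four cells of the 2-class confusion matrix, as in the sketch below. The counts in the usage example are not reported in the paper: they are back-computed here from rounded percentages of 74.7% and 92.0% recall under the assumption of 300 samples per class, purely for illustration. Note that the second equality in Eq. (18) holds because both classes have the same number of samples.

```python
def classification_rates(good_as_good, good_as_poor, poor_as_poor, poor_as_good):
    """Return precision, recall and classification rate in percent.

    good_as_good : "good" samples classified as "good"
    good_as_poor : "good" samples classified as "poor"
    poor_as_poor : "poor" samples classified as "poor"
    poor_as_good : "poor" samples classified as "good"
    """
    total = good_as_good + good_as_poor + poor_as_poor + poor_as_good
    return {
        "P_good": 100.0 * good_as_good / (good_as_good + poor_as_good),
        "R_good": 100.0 * good_as_good / (good_as_good + good_as_poor),
        "P_poor": 100.0 * poor_as_poor / (poor_as_poor + good_as_poor),
        "R_poor": 100.0 * poor_as_poor / (poor_as_poor + poor_as_good),
        "C": 100.0 * (good_as_good + poor_as_poor) / total,
    }


if __name__ == "__main__":
    # Illustrative counts (assumed, 300 samples per class).
    rates = classification_rates(224, 76, 276, 24)
    for name, value in rates.items():
        print(name, round(value, 1))
    # P_good 90.3, R_good 74.7, P_poor 78.4, R_poor 92.0, C 83.3
```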

The results for the male and male-female datasets are similar under both criteria, while there is a significant drop in the rates under the leave-one-out criterion for the female dataset. The female dataset also has slightly lower rates under the 10-fold criterion. The reason for this drop is as yet unclear, although the overall agreement in the male-female dataset suggests that the proposed features are both effective and robust against individual differences of singer and/or melody.

The overall high values of $P_{\mathrm{good}}$ and $R_{\mathrm{poor}}$ suggest that the classification is stringent towards judging a sample as "good" (if a sample is judged as "good", then it is likely to be really good).

Table 2: Results of the 10-fold criteria.

Dataset       C       P_good   R_good   P_poor   R_poor
male          87.7%   95.2%    79.3%    82.3%    96.0%
female        80.3%   91.7%    66.7%    73.8%    94.0%
male-female   83.3%   90.3%    74.7%    78.4%    92.0%

Table 3: Results of the leave-one-out criteria.

Dataset       C       P_good   R_good   P_poor   R_poor
male          87.7%   93.8%    80.7%    70.8%    94.7%
female        71.7%   74.8%    65.3%    58.0%    78.0%
male-female   83.5%   87.6%    78.0%    70.3%    89.0%

Figure 5 shows the classification rate for each singer in the male-female dataset. The results show that the classification of good samples has a relatively high number of errors. One reason is that even a good singer may occasionally fail to keep good pitch intervals, as they were singing from memory. Such cases include when the overall pitch undergoes a gradual drift (resulting in bad ratings in features $\boxed{1}$ and $\boxed{2}$), which to the human ear does not sound so distorted.

Classification errors also arise from the vibrato features $\boxed{3}$ to $\boxed{5}$. A good sample can be misclassified as poor when there is no vibrato, or when the values surpass the range restriction of vibrato parameters. On the other hand, poor singing which cannot keep a stable F0 can be mistakenly judged as a vibrato, especially resulting from relatively high values of features $\boxed{4}$ and $\boxed{5}$.

4. Conclusion

This paper proposed two acoustical features, pitch interval accuracy and vibrato, which are effective for evaluating singing skills without score information. In addition to these features, we have also investigated other features, such as the slope of the long-term average spectrum (LTAS), the power within the frequency range of the singer's formant, the variance of 16-order cepstral coefficients, the ratio between the power of harmonic components and others, the average of spectral centroid or spectral rolloff, and the standard deviation of F0 or power. The performance of their combinations, however, has not surpassed the performance of the two features presented in this paper. In the future, we plan to investigate other features relevant to different vocal aspects such as rhythm and vocal quality.

5. Acknowledgements

The authors would like to thank Mr. Hirokazu Kameoka (the University of Tokyo) for his valuable discussions and Dr. Elias Pampalk (CREST/AIST) for proofreading an earlier version.

[Figure 5: Result of the classification experiment (by singer) using the leave-one-out criteria and the male-female dataset. Panels: Good and Poor; vertical axes: classification rate from 0 to 100 [%]; horizontal axes: singer no.]

6. References

[1] Saitou, T., Unoki, M. and Akagi, M., "Development of an F0 Control Model Based on F0 Dynamic Characteristics for Singing-voice Synthesis", Speech Communication, 46:405-417, 2005.

[2] Sundberg, J., The Science of the Singing Voice, Northern Illinois Univ Press, 226p., 1987.

[3] Kawahara, H. and Katayose, H., "Scat Generation Research Program Based on STRAIGHT, a High-quality Speech Analysis, Modification and Synthesis System", IPSJ Journal, 43(2):208-218, 2002. (in Japanese)

[4] Ohishi, Y., Goto, M., Ito, K. and Takeda, K., "Discrimination between Singing and Speaking Voices", in Proc. 9th European Conference on Speech Communication and Technology (Interspeech2005), 1141-1144, 2005.

[5] Omori, K., Kacker, A., Carroll, L.M., Riley, W.D. and Blaugrund, S.M., "Singing Power Ratio: Quantitative Evaluation of Singing Voice Quality", Journal of Voice, 10(3):228-235, 1996.

[6] Brown, W. S. Jr., Rothman, H. B. and Sapienza, C.M., "Perceptual and Acoustic Study of Professionally Trained Versus Untrained Voices", Journal of Voice, 14(3):301-309, 2000.

[7] Watts, C., Barnes-Burroughs, K., Estis, J. and Blanton, D., "The Singing Power Ratio as an Objective Measure of Singing Voice Quality in Untrained Talented and Nontalented Singers", Journal of Voice, 20(1):82-88, 2006.

[8] Nakano, T., Goto, M. and Hiraga, Y., "Subjective Evaluation of Common Singing Skills Using the Rank Ordering Method", in Proc. 9th International Conference of Music Perception and Cognition (ICMPC2006), 2006. (accepted)

[9] Goto, M., Itou, K. and Hayamizu, S., "A Real-time Filled Pause Detection System for Spontaneous Speech Recognition", in Proc. 6th European Conference on Speech Communication and Technology (Eurospeech '99), 227-230, 1999.

[10] Seashore, C.E., "A Musical Ornament, the Vibrato", Chapter 4, in Psychology of Music, McGraw-Hill Book Company, pp. 33-52, 1938.

[11] Goto, M. and Nishimura, T., "AIST Humming Database: Music Database for Singing Research", The Special Interest Group Notes of IPSJ (MUS), 2005(82):7-12, 2005. (in Japanese)

[12] Goto, M., Hashiguchi, H., Nishimura, T. and Oka, R., "RWC Music Database: Popular, Classical, and Jazz Music Databases", in Proc. 3rd International Conference on Music Information Retrieval (ISMIR 2002), 287-288, 2002.

[13] Goto, M., Hashiguchi, H., Nishimura, T. and Oka, R., "RWC Music Database: Music Genre Database and Musical Instrument Sound Database", in Proc. 4th International Conference on Music Information Retrieval (ISMIR 2003), 229-230, 2003.
