Method of Research (Lec 3)

Uploaded by John Russell Morales


Data-Gathering Instruments and Evidences

Crucial to the outcome of the research process is the source of information on which the findings and conclusions are based. In modern terminology, information is defined as processed data, while a datum is a single unit of data. Clearly, if we want information to be clear, precise and accurate, it must come from good, clean data. Primary data are data gathered from the origin or source, while secondary data are those processed from primary data and published in some form. Researchers generally prefer to obtain primary data; however, for purposes of descriptive studies, secondary data can also be used.

Primary Data Generation

When primary data from other research studies are available, particularly data generated through strict experimental designs, you should consider using them rather than generating your own. If no such data exist, then you should consider generating your own primary data sets following strict guidelines.

Primary data are of two kinds: evidences and perceptions. Evidences are hard, irrefutable facts which do not change even if you interview a different set of respondents; perceptions do. Perceptions change with people, across time and across space, so it is difficult to form generalized scientific conclusions from perception-based findings. For this reason, we advocate the use of evidences rather than perceptions in academic research.

A foremost characteristic of good data is validity. Validity refers to the extent to which your data measure what they are intended to measure in the first place.

Example: Compare the validity of the data generated by two methods for the objective of finding the extent to which a municipal solid waste management ordinance is implemented in a barangay:

Method 1: A questionnaire is constructed and administered to the barangay residents. The questionnaire has items referring to the provisions of the solid waste management ordinance, and the respondents are asked to rate the extent to which they have implemented each provision, from 5 = Very Large Extent down to 1 = Very Little Extent. For example:

To what extent do you segregate your wastes into biodegradable and non-biodegradable? 5 = Very Large Extent, ..., 1 = Very Little Extent

Method 2: Enumerators are hired by the researcher to go house to house and count the number of houses with trash cans clearly marked as biodegradable and non-biodegradable. Where no such trash cans are found, the enumerators ask the household how it manages its solid wastes and look for evidence supporting the response. The extent to which this provision is implemented by the barangay at the household level equals the number of households with a clear waste segregation system, expressed as a percentage of the total number of households in the barangay.
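The Method 2 figure is simple arithmetic over the enumerators' household records. The following is a minimal Python sketch, assuming each record carries two hypothetical yes/no fields, `marked_bins` and `segregation_observed`; these field names and the sample households are illustrative only and are not part of the method described above.

```python
# Hypothetical enumerator records, one per household (field names are illustrative).
households = [
    {"id": "H001", "marked_bins": True, "segregation_observed": True},
    {"id": "H002", "marked_bins": False, "segregation_observed": True},
    {"id": "H003", "marked_bins": False, "segregation_observed": False},
    {"id": "H004", "marked_bins": True, "segregation_observed": True},
]


def segregation_extent(records):
    """Percentage of households showing clear waste segregation.

    A household counts if it has clearly marked trash cans or, failing that,
    if the enumerator verified segregation on site.
    """
    if not records:
        raise ValueError("no household records")
    segregating = sum(1 for h in records if h["marked_bins"] or h["segregation_observed"])
    return 100.0 * segregating / len(records)


print(f"Extent of implementation: {segregation_extent(households):.1f}%")
```

With these four made-up households, three show segregation, so the extent is 75%.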

Clearly, the data generated from Method 2 are more valid than those generated from Method 1 (perception-based). In Method 1, it is possible that the respondents will fake their responses. Even if they do not, it is still not clear how a "very large extent" response differs from a "large extent" response. You can remedy this by defining exactly what is meant by each adjective, for example by stating that "very large extent" means you do it every time without fail, while "large extent" means you do it 90% of the time. Even then, the correlation between the responses obtained in Method 1 and what you actually see happening in the barangay will be less than 1. In Method 2, there is a perfect match between what you see and what is actually happening in the barangay. The data obtained from Method 2 are classified as evidences, while the data obtained from Method 1 are classified as perceptions. Which of the two kinds of data in this example would you have more faith in?

As scholars in our disciplines, we strive to obtain evidences rather than

perceptions because the former are more valid than the latter. In fact, we will show

later that the former is also more reliable than the latter. What steps should we

undertake to ensure that evidences are gathered rather than perceptions?

Evidence-Gathering

1. Clearly state what you want to know, preferably, in behavioural or observable

terms.

Example: Gather information on how the households implement the solid waste

management ordinance

2. Break down what you want to know in terms of specifics.

Example: Identify the provisions of the solid waste management ordinance in terms of (a) waste segregation and (b) waste disposal.

3. For each specific dimension, identify clear and observable indicators of the presence or absence of the desired characteristics.

Example: The following are the indicators per specific dimension of the solid

waste management ordinance:

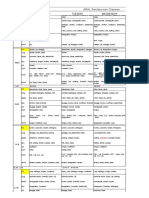

| Specific Dimension | Observable Indicator | Quantitative Evidence Derived |
|---|---|---|
| Waste Segregation | 1. Household trash cans clearly marked biodegradable or non-biodegradable; or 2. Evidence found inside the household showing separation of wastes into biodegradable or non-biodegradable | P = percentage of households with a clear waste separation scheme for biodegradable and non-biodegradable solid wastes, relative to the total number of households in the barangay |
| Waste Disposal | 1. Households with open dumps | P1 = percentage of households with open dumps |
| | 2. Households which use open burning | P2 = percentage of households which use open burning |
| | 3. Households using compost pits | P3 = percentage of households using compost pits |
| | 4. Households using the weekly garbage collection scheme | P4 = percentage of households using the garbage collection scheme |

Extent of Waste Disposal Compliance = (P3 + P4) - (P1 + P2)
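The compliance formula in the table can be computed directly from the tallies on an evidence-table checklist. A minimal Python sketch follows; the function names and the household counts are hypothetical, and it assumes a household may be counted under more than one disposal indicator (for example, composting and also using the collection scheme), which is why the index is not confined to the range -100 to +100.

```python
def percentage(count, total):
    """Express a household count as a percentage of all households surveyed."""
    if total <= 0:
        raise ValueError("total number of households must be positive")
    return 100.0 * count / total


def disposal_compliance(total, open_dumps, open_burning, compost_pits, garbage_collection):
    """Extent of Waste Disposal Compliance = (P3 + P4) - (P1 + P2).

    Counts may overlap across indicators, so the index ranges from -200 to +200.
    """
    p1 = percentage(open_dumps, total)
    p2 = percentage(open_burning, total)
    p3 = percentage(compost_pits, total)
    p4 = percentage(garbage_collection, total)
    return (p3 + p4) - (p1 + p2)


# Hypothetical tallies for a barangay of 200 households.
index = disposal_compliance(total=200, open_dumps=10, open_burning=30,
                            compost_pits=50, garbage_collection=120)
print(f"Extent of Waste Disposal Compliance: {index:.1f}")
```

With these made-up counts, P1 = 5, P2 = 15, P3 = 25 and P4 = 60, so the index is 85 - 20 = 65.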

The table above is a useful guide for gathering evidences. However, in some research studies, evidence-gathering will be a real challenge to the researcher. Here are some examples of such situations:

A Challenging Situation: No Way Out But to Use Perceptions as Pseudo-Evidence

Example: You wish to find information on the attitudes of students toward Mathematics. The major problem is defining what is meant by attitude. Perhaps the most straightforward way of finding out about someone's attitudes would be to ask them. However, attitudes are related to self-image and social acceptance (i.e., attitude functions). In order to preserve a positive self-image, people's responses may be affected by social desirability: they may not reveal their true attitudes, but instead answer in a way they feel is socially acceptable.

Given this problem, various methods of measuring attitudes have been developed. However, all of them have limitations. In particular, the different measures focus on different components of attitudes (cognitive, affective and behavioural), and, as we know, these components do not necessarily coincide.

Semantic Differential Scale

The semantic differential technique of Osgood et al. (1957) asks a person to rate an issue or topic on a standard set of bipolar adjectives (i.e., pairs with opposite meanings), each pair anchoring a seven-point scale. To prepare a semantic differential scale, you must first think of a number of word pairs with opposite meanings that are applicable to describing the subject of the test.

For example, participants are given a word, such as 'car', and presented with a variety of adjective pairs to describe it. Respondents tick a point on each scale to indicate how they feel about what is being measured.

In the picture above, you will find Osgood's map of the responses of twenty (20) people to the word POLITE. The semantic differential technique reveals information on three basic dimensions of attitudes: evaluation, potency (i.e., strength) and activity.

Evaluation is concerned with whether a person thinks positively or negatively about the attitude topic (e.g., dirty - clean, and ugly - beautiful).

Potency is concerned with how powerful the topic is for the person (e.g., cruel - kind, and strong - weak).

Activity is concerned with whether the topic is seen as active or passive (e.g., active - passive).

Using this information, we can see whether a person's feeling (evaluation) towards an object is consistent with their behaviour. For example, a person might like the taste of chocolate (evaluative) but not eat it often (activity). The evaluation dimension has been used most by social psychologists as a measure of a person's attitude, because this dimension reflects the affective aspect of an attitude. (www.simplypsyhology.pwp.blueyonder.co.uk, 2008)

The difficulty we encounter here stems from the inherent nature of the phenomenon we are trying to observe. Attitude is mainly an affective-domain concept, whereas what we want to gather is observable evidence of the phenomenon in the behavioural domain, and these two need not coincide. The best that can be done in situations such as this is to use a Semantic Differential Scale, report the results in terms of Evaluation, Potency and Activity as separate components of Attitudes Toward Mathematics, and then use these as pseudo-evidences.
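Reporting Evaluation, Potency and Activity separately amounts to averaging each respondent's ratings within each dimension. The Python sketch below is a minimal illustration under stated assumptions: the adjective pairs come from the examples above, each mapped to its dimension as in this section; ratings run from 1 (left adjective) to 7 (right adjective); and a pair listed with its stronger or more favourable pole on the left, such as strong - weak, is reverse-scored as 8 minus the rating. The item list, the reverse-scoring rule and the names `epa_scores` and `group_epa` are assumptions for illustration, not Osgood's published procedure.

```python
from statistics import mean

# Hypothetical item set: (left adjective, right adjective, dimension, reverse).
# reverse=True flips the 1..7 rating so a higher score always means
# more positive (evaluation), stronger (potency) or more active (activity).
ITEMS = [
    ("dirty", "clean", "evaluation", False),
    ("ugly", "beautiful", "evaluation", False),
    ("strong", "weak", "potency", True),
    ("passive", "active", "activity", False),
]


def epa_scores(ratings):
    """Mean score per dimension for one respondent."""
    if len(ratings) != len(ITEMS):
        raise ValueError("expected one rating per adjective pair")
    by_dimension = {}
    for (left, right, dimension, reverse), rating in zip(ITEMS, ratings):
        if not 1 <= rating <= 7:
            raise ValueError(f"rating {rating} for {left} - {right} is outside 1..7")
        score = 8 - rating if reverse else rating
        by_dimension.setdefault(dimension, []).append(score)
    return {dimension: mean(scores) for dimension, scores in by_dimension.items()}


def group_epa(all_ratings):
    """Average each dimension across respondents, reported as separate components."""
    per_person = [epa_scores(r) for r in all_ratings]
    return {dim: mean(p[dim] for p in per_person) for dim in per_person[0]}


# Two hypothetical respondents rating the concept "Mathematics".
print(epa_scores([6, 5, 2, 4]))
print(group_epa([[6, 5, 2, 4], [4, 3, 4, 2]]))
```

Keeping the three means apart, rather than adding them into one attitude score, follows the recommendation above to treat them as separate components.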

Likert Scale

Rensis Likert (1958) proposed a more direct measurement of attitude which involves two domains: the cognitive and the affective. The respondent is given a series of statements about the phenomenon and is asked to rate, on a scale of 1 to 5, the extent to which he or she agrees or disagrees with each statement.

Example: Mathematics is a beautiful subject.

1 = Strongly Disagree, 2 = Disagree, 3 = No Comment, 4 = Agree, 5 = Strongly Agree

The positive and negative statements are sequenced randomly in the questionnaire to discourage faked responses. A serious limitation of the Likert Scale is the social desirability effect, where respondents tend to answer in a way that is socially acceptable rather than reveal their true attitudes toward the subject. For instance, if a teacher wants to know whether the use of Computer Assisted Instruction improves students' attitudes toward Mathematics and conducts the study himself, then the students' responses to the Likert scale will be influenced by their perception of the teacher's attitude towards what he is trying to prove.
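When positive and negative statements are mixed, a common convention (not stated in the text above) is to reverse-code the negative ones before any scores are combined, so that a high number always means a favourable attitude. The Python sketch below shows that convention together with a randomized presentation order; apart from the first statement, the item wordings and the names `presentation_order` and `score_items` are hypothetical.

```python
import random

# Hypothetical 5-point Likert items; True marks a negatively worded statement.
ITEMS = [
    ("Mathematics is a beautiful subject.", False),
    ("I avoid Mathematics whenever I can.", True),
    ("I enjoy solving verbal problems.", False),
    ("Mathematics lessons make me anxious.", True),
]
POINTS = 5


def presentation_order(n_items, seed=None):
    """Random ordering of item indices for the printed questionnaire,
    so positive and negative statements are interleaved unpredictably."""
    order = list(range(n_items))
    random.Random(seed).shuffle(order)
    return order


def score_items(responses):
    """Reverse-code negative statements (1 <-> 5, 2 <-> 4) so a higher score
    always indicates a more favourable attitude.

    `responses` must be listed in the original ITEMS order, not the shuffled one.
    """
    if len(responses) != len(ITEMS):
        raise ValueError("expected one response per item")
    scored = []
    for (statement, negative), r in zip(ITEMS, responses):
        if not 1 <= r <= POINTS:
            raise ValueError(f"response {r} to {statement!r} is outside 1..{POINTS}")
        scored.append(POINTS + 1 - r if negative else r)
    return scored


print(presentation_order(len(ITEMS), seed=7))
print(score_items([5, 1, 4, 2]))
```

Here a student who strongly disagrees with "I avoid Mathematics whenever I can." (response 1) receives a favourable score of 5 on that item.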

A second limitation of the Likert scale relates to the subsequent analysis of the scores obtained. If no qualifications are put on the choices, then one cannot use the mean (or weighted mean) as a measure of central tendency, because the numbers themselves carry no quantitative meaning: they are merely categorical/nominal/ordinal labels. When you qualify the choices by attaching descriptive numerical meanings to them, the numbers can be treated as interval data.
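When the choices are left unqualified, one common alternative to the mean is to report the frequency of each category together with the median and mode, which respect the ordering without assuming equal spacing. A minimal Python sketch, assuming the five labels of the example above and a set of hypothetical responses; `summarize_ordinal` is an illustrative name.

```python
from collections import Counter
from statistics import median_low, multimode

LABELS = {1: "Strongly Disagree", 2: "Disagree", 3: "No Comment",
          4: "Agree", 5: "Strongly Agree"}


def summarize_ordinal(responses):
    """Summaries that treat unqualified Likert codes as ordered categories."""
    if not responses:
        raise ValueError("no responses")
    counts = Counter(responses)
    med = median_low(responses)   # always an actual category, never e.g. 3.5
    modes = multimode(responses)  # every most-frequent category (ties kept)
    return {
        "frequencies": {LABELS[k]: counts[k] for k in sorted(counts)},
        "median": LABELS[med],
        "mode": [LABELS[m] for m in modes],
    }


# Hypothetical responses of ten students to "Mathematics is a beautiful subject."
answers = [4, 5, 3, 4, 2, 4, 5, 1, 4, 3]
print(summarize_ordinal(answers))
```

`median_low` is used instead of `median` so that, with an even number of responses, the result is one of the labelled categories rather than a value between two of them.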

Proposed Hard Evidence

What hard evidence, not based on perception, could be used in the above example? One possible way to generate hard evidence is to augment the questionnaire with a different way of measuring liking of Mathematics, as follows. Present a student with a list of ten activities. The list contains five (5) non-mathematical activities, e.g., writing an essay, and five (5) mathematical activities, e.g., solving verbal problems. Ask the student to select the five activities he or she likes most, and examine the choices. The student's Mathematical liking score is the number of mathematical activities among the five selected, divided by 5 and expressed as a percentage. This number is not based on perception but on the student's actual choice of liked activities; it is hard evidence. You can now correlate this hard-evidence score with the mean of the student's perception scores to see the extent to which the perceptions coincide with the hard evidence.
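The liking score and its correlation with the perception scores can be sketched in Python as follows. Only "writing an essay" and "solving verbal problems" come from the text; the other activities, the students' selections and their Likert means are hypothetical, and the Pearson product-moment correlation is written out by hand so that the block has no dependencies.

```python
from math import sqrt

# Hypothetical activity list: five mathematical and five non-mathematical items.
MATH_ACTIVITIES = {
    "solving verbal problems", "solving number puzzles", "computing a family budget",
    "graphing equations", "proving a geometry theorem",
}
NON_MATH_ACTIVITIES = {
    "writing an essay", "reading a novel", "drawing a landscape",
    "singing in a choir", "playing basketball",
}


def liking_score(selected):
    """Percentage of the student's five chosen activities that are mathematical."""
    chosen = set(selected)
    if len(chosen) != 5 or not chosen <= MATH_ACTIVITIES | NON_MATH_ACTIVITIES:
        raise ValueError("select exactly five distinct activities from the list")
    return 100.0 * len(chosen & MATH_ACTIVITIES) / 5


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for constant data")
    return sxy / sqrt(sxx * syy)


# Hypothetical choices of four students and their mean Likert (perception) scores.
selections = [
    ["solving verbal problems", "graphing equations", "solving number puzzles",
     "reading a novel", "writing an essay"],
    ["writing an essay", "reading a novel", "drawing a landscape",
     "singing in a choir", "solving verbal problems"],
    sorted(MATH_ACTIVITIES),
    ["computing a family budget", "proving a geometry theorem", "playing basketball",
     "drawing a landscape", "singing in a choir"],
]
likert_means = [3.8, 2.4, 4.6, 3.0]

scores = [liking_score(s) for s in selections]
print("liking scores:", scores)
print(f"r(evidence, perception) = {pearson(scores, likert_means):.3f}")
```

A correlation near 1 would suggest that, for these students, the perception-based scores agree with the hard evidence.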

As an aside, we note that, insofar as statistical theory is concerned, we can always compute the mean and variance of a random variable X, even if it is measured on a nominal, ordinal or categorical scale (once its categories are coded as numbers), provided that the underlying probability distribution has a finite mean and variance. What social scientists object to when the mean is used as the measure of central tendency for data obtained from a Likert scale is the physical interpretability of the resulting quantity.

Example: Consider a random variable X representing the sex of a respondent. Let

X = 0 if the respondent is male, and X = 1 if the respondent is female.

Clearly, X is a nominal variable. Suppose that 40% of the respondents are female and 60% are male, so that

P(X = 0) = 0.60 and P(X = 1) = 0.40.

Then we can compute the mean and variance of this random variable from the Bernoulli probability distribution with p = P(X = 1) = 0.40:

$\mu = E(X) = p = 0.40$ and

$\sigma^2 = p(1 - p) = (0.40)(0.60) = 0.24$

So, insofar as statistics is concerned, these are legitimate location and scale parameters; there is no problem. But what does a mean sex of $\mu = 0.40$ (40%) really mean? This is the social scientist's problem. It cannot be the mode, because there are obviously more males in our sample; it cannot be the median, because there is no middle sex! What the statistical result really says is that 40% of the values in the data set are ones (1s), that is, 40% of the respondents are female.
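The point can be checked numerically on coded data. In this minimal Python sketch, the 100 respondents are hypothetical and chosen to match the 60/40 split above; the mean of the 0/1 codes is simply the proportion of ones, and the population variance equals p(1 - p).

```python
from statistics import mean, pvariance

# Hypothetical coded responses: 0 = male (60 respondents), 1 = female (40 respondents).
sex = [0] * 60 + [1] * 40

p = sex.count(1) / len(sex)  # proportion of ones, i.e. of female respondents
mu = mean(sex)               # "mean sex": numerically the same as p
var = pvariance(sex)         # population variance of the 0/1 codes

print(f"mean = {mu:.2f}, proportion female = {p:.2f}")
print(f"variance = {var:.2f}, p(1 - p) = {p * (1 - p):.2f}")
```

`pvariance` (population variance) is used because the Bernoulli variance p(1 - p) is a population quantity; the sample variance `statistics.variance` would be larger by the factor n/(n - 1).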

The moral lesson of this example is that we must always be conscious of the difference between the STATISTICAL INTERPRETATION and the PHYSICAL AND REAL INTERPRETATION of the results of statistical analysis. In many instances, the failure to differentiate between the two interpretations becomes a source of quarrel among experts. No mathematical rules are violated when you obtain the mean of a nominal random variable, but there are certainly a host of discipline-based rules that are violated by doing so, foremost of which is common sense.

Data Reliability

A second characteristic of good data is reliability. Reliability refers to the consistency or stability of the information content of the data. In other words, if you gather data about a certain phenomenon now and, after some time, gather the same data from the same set of respondents, the two data sets should correlate highly. If you were using evidences, the correlation would be exactly equal to 1, provided the phenomenon itself had not changed in the meantime. If you were using perceptions, the correlation would be close to, but not exactly equal to, 1, provided the data were valid in the first place.
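The test-retest comparison described above is a Pearson correlation between the two rounds of data. A minimal Python sketch, with the six respondents' scores invented for illustration:

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for constant data")
    return sxy / sqrt(sxx * syy)


# Hypothetical scores of the same six respondents, gathered some weeks apart.
time1 = [12, 15, 9, 20, 17, 11]
time2 = [13, 14, 10, 19, 18, 10]

print(f"test-retest reliability r = {pearson(time1, time2):.3f}")

# Evidence recorded identically on both occasions correlates perfectly.
print(f"identical records r = {pearson(time1, time1):.3f}")
```

The perception-style data give a high correlation that falls short of 1, while identical records give exactly 1, mirroring the contrast drawn in the paragraph above.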

Gathering the same data twice, however, could be tedious or, in some instances, infeasible. The way out is to use what is called the split-half method. In this method, the reliability coefficient is estimated by correlating each respondent's total score on the odd-numbered items with his or her total score on the even-numbered items. What you get is a measure of internal consistency, or internal reliability.
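The split-half method can be sketched in Python under the following assumptions: responses are stored as a respondents-by-items matrix of hypothetical scores, "odd-numbered" means the 1st, 3rd, 5th ... items, and the half-test correlation is stepped up to full length with the Spearman-Brown formula 2r / (1 + r), the standard adjustment for a test split into two equal halves. The function names are illustrative.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for constant data")
    return sxy / sqrt(sxx * syy)


def spearman_brown(r_half):
    """Step a half-test correlation up to full test length: 2r / (1 + r)."""
    if r_half <= -1:
        raise ValueError("half-test correlation must be greater than -1")
    return 2 * r_half / (1 + r_half)


def split_half(scores):
    """Correlate totals on items 1, 3, 5, ... with totals on items 2, 4, 6, ...;
    return (half-test r, Spearman-Brown corrected r)."""
    odd_totals = [sum(row[0::2]) for row in scores]
    even_totals = [sum(row[1::2]) for row in scores]
    r_half = pearson(odd_totals, even_totals)
    return r_half, spearman_brown(r_half)


# Hypothetical 5-point responses: five respondents (rows) by six items (columns).
scores = [
    [4, 5, 4, 4, 5, 4],
    [2, 3, 2, 2, 3, 3],
    [3, 3, 4, 3, 3, 4],
    [5, 4, 5, 5, 4, 5],
    [1, 2, 2, 1, 2, 1],
]

r_half, r_full = split_half(scores)
print(f"odd-even r = {r_half:.3f}, Spearman-Brown r = {r_full:.3f}")
```

Because each half is only half as long as the full instrument, the raw odd-even correlation understates the reliability of the whole; the Spearman-Brown step corrects for that.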

Reliability may be estimated through a variety of methods that fall into two types: single-administration and multiple-administration. Multiple-administration methods require that two assessments be administered. In the test-retest method, reliability is estimated as the Pearson product-moment correlation coefficient between two administrations of the same measure. In the alternate-forms method, reliability is estimated by the Pearson product-moment correlation coefficient of two different forms of a measure, usually administered together. Single-administration methods include split-half and internal consistency. The split-half method treats the two halves of a measure as alternate forms. This "halves reliability" estimate is then stepped up to the full test length using the Spearman-Brown prediction formula. The most common internal consistency measure is Cronbach's alpha, which is usually interpreted as the mean of all possible split-half coefficients. Cronbach's alpha is a generalization of an earlier form of estimating internal consistency, Kuder-Richardson Formula 20.
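Cronbach's alpha uses the standard formula alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The Python sketch below applies it to a matrix of hypothetical responses; the function name is illustrative. Applied to items scored 0 or 1, the same calculation gives the Kuder-Richardson Formula 20 value, provided item and total variances are computed the same way (both as population or both as sample variances).

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix."""
    if len(scores) < 2:
        raise ValueError("need at least two respondents")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items and the same number per respondent")
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point responses: five respondents (rows) by six items (columns).
scores = [
    [4, 5, 4, 4, 5, 4],
    [2, 3, 2, 2, 3, 3],
    [3, 3, 4, 3, 3, 4],
    [5, 4, 5, 5, 4, 5],
    [1, 2, 2, 1, 2, 1],
]

print(f"Cronbach's alpha = {cronbach_alpha(scores):.3f}")
```

Sample variances (`statistics.variance`) are used for both items and totals; since the n/(n - 1) factor cancels in the ratio, population variances would give the same alpha.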

These measures of reliability differ in their sensitivity to different sources of error and so need not be equal. Also, reliability is a property of the scores of a measure rather than of the measure itself, and is thus said to be sample dependent. Reliability estimates from one sample might differ from those of a second sample (beyond what might be expected due to sampling variation) if the second sample is drawn from a different population, because the true variability is different in this second population. (This is true of measures of all types: yardsticks might measure houses well yet have poor reliability when used to measure the lengths of insects.)

Data-Gathering Instruments

The data-gathering instruments are the devices used to obtain the data required. These

devices may take the following forms:

1. Evidence-Table Checklist. You have seen an example of this in the solid waste management example of this section. It has three columns: Specific Dimension, Observable Indicator and Quantitative Evidence Derived. The checklist is used by the data collectors in gathering evidences.

2. Tests. Tests are the most common form of data-gathering instrument. They are most useful in the assessment of cognitive abilities. Test construction and item analysis form a whole subject of their own, which is beyond the scope of this book.

3. Questionnaire. A questionnaire consists of statements or questions to which the respondents are expected to respond. The responses may be limited to a set of options, such as the Likert Scale options, or may be open to any kind of response (open-ended questionnaires).

4. Semantic Differential Scales. Introduced by Osgood et al. (1957), this instrument has been described in detail in this section and is useful for obtaining information in the affective domain. The instrument is able to assess three dimensions: evaluation, potency and activity.

5. Structured Interview Forms. Similar to a questionnaire, a structured interview form consists of a series of questions that the interviewers ask the respondents. There is usually an added dimension: the interviewer records his or her impressions of the respondent's answer to each item.

Whatever data-gathering instrument a researcher decides to use, the important thing is that it elicits valid and reliable data from the respondents.

We now discuss the general criteria of a good research instrument:

Validity refers to the extent to which the instrument measures what it intends to measure. Content validity can be established through the opinions of experts in the area of knowledge being investigated. For example, an instrument on managerial effectiveness must be shown to management experts. Each item in the instrument must be accompanied by explanatory remarks from the researcher on the concept being measured by that item. The expert then indicates whether or not that item indeed measures the concept.

Reliability refers to the extent to which the instrument is consistent. The

instrument should be able to elicit approximately the same response when applied to

respondents who are similarly situated. Similarly, when the instrument is applied at two

different points in time, the responses must highly correlate with one another. Thus,

reliability can be measured by correlating the responses of subjects exposed to the

instrument at two different time periods or by correlating the responses of the subjects

who are similarly situated.

Internal consistency. If an instrument measures a specific concept through several questions or indicators, each question must correlate highly with the total for that dimension. For example, if managerial effectiveness is measured in terms of five questions, the score on each question must correlate highly with the total score for managerial effectiveness.
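The internal-consistency check described above is an item-total correlation. The Python sketch below uses made-up responses of six managers to five questions; the option `corrected=True`, which removes the item from the total before correlating so the item is not partly correlated with itself, is a common refinement and is an addition, not part of the description above.

```python
from math import sqrt


def pearson(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for constant data")
    return sxy / sqrt(sxx * syy)


def item_total_correlations(scores, corrected=False):
    """Correlate each item with the total score for the dimension.

    With corrected=True the item is subtracted from the total first.
    """
    totals = [sum(row) for row in scores]
    results = []
    for j in range(len(scores[0])):
        item = [row[j] for row in scores]
        other = [t - x for t, x in zip(totals, item)] if corrected else totals
        results.append(pearson(item, other))
    return results


# Hypothetical responses of six managers to five managerial-effectiveness questions.
responses = [
    [5, 4, 5, 4, 5],
    [3, 3, 2, 3, 3],
    [4, 4, 4, 5, 4],
    [2, 1, 2, 2, 1],
    [1, 2, 1, 1, 2],
    [4, 5, 4, 4, 3],
]

for j, r in enumerate(item_total_correlations(responses), start=1):
    print(f"question {j}: item-total r = {r:.2f}")
```

A question with a low or negative correlation would be a candidate for revision, since it does not appear to measure the same concept as the others.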

Readability refers to the level of difficulty of the instrument relative to its intended users. Thus, an instrument in English administered to respondents with no education will be unreadable and therefore useless.
