Karush Kuhn Tucker Slides

Lagrange Multipliers and the Karush-Kuhn-Tucker conditions

March 20, 2012

Optimization

Goal:

We want to find the maximum or minimum of a function subject to some constraints.

Formal Statement of Problem:

Given functions $f, g_1, \dots, g_m$ and $h_1, \dots, h_l$ defined on some domain in $\mathbb{R}^n$, the optimization problem has the form

$$\min_x f(x) \quad \text{subject to } g_i(x) \le 0 \ \forall i \ \text{ and } \ h_j(x) = 0 \ \forall j$$

In these notes..

We will derive/state sufficient and necessary conditions for (local) optimality when there are

1. no constraints,
2. only equality constraints,
3. only inequality constraints,
4. equality and inequality constraints.

Unconstrained Optimization

Unconstrained Minimization

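Assume:

Let $f : \mathbb{R}^n \to \mathbb{R}$ be a twice continuously differentiable function.

Necessary conditions for a local minimum:

If $x^*$ is a local minimum of $f(x)$, then

1. $f$ has zero gradient at $x^*$:

$$\nabla_x f(x^*) = 0$$

2. and the Hessian of $f$ at $x^*$ is positive semi-definite:

$$v^t (\nabla^2 f(x^*)) \, v \ge 0, \quad \forall v \in \mathbb{R}^n$$

where

$$\nabla^2 f(x) = \begin{pmatrix} \frac{\partial^2 f(x)}{\partial x_1^2} & \cdots & \frac{\partial^2 f(x)}{\partial x_1 \partial x_n} \\ \vdots & \ddots & \vdots \\ \frac{\partial^2 f(x)}{\partial x_n \partial x_1} & \cdots & \frac{\partial^2 f(x)}{\partial x_n^2} \end{pmatrix}$$

If the gradient is zero and the Hessian is positive definite, the conditions are also sufficient: $x^*$ is then a strict local minimum.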
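As a quick numerical illustration of the two conditions above, the sketch below checks the gradient and the Hessian at a candidate point. The test function $f(x) = (x_1 - 1)^2 + 2 (x_2 + 0.5)^2$ is a hypothetical example that does not appear in the slides, and the helper names are assumptions. For a symmetric 2x2 matrix, positive semi-definiteness is tested through the trace and the determinant.

```python
def grad_f(x):
    """Gradient of the hypothetical f(x) = (x1 - 1)^2 + 2*(x2 + 0.5)^2."""
    return [2.0 * (x[0] - 1.0), 4.0 * (x[1] + 0.5)]

def hess_f(x):
    """Hessian of the same f; constant because f is quadratic."""
    return [[2.0, 0.0], [0.0, 4.0]]

def is_psd_2x2(H, tol=1e-12):
    """A symmetric 2x2 matrix is PSD iff its trace and determinant are >= 0."""
    trace = H[0][0] + H[1][1]
    det = H[0][0] * H[1][1] - H[0][1] * H[1][0]
    return trace >= -tol and det >= -tol

def satisfies_min_conditions(x, tol=1e-9):
    """Check zero gradient and positive semi-definite Hessian at x."""
    zero_grad = all(abs(g) <= tol for g in grad_f(x))
    return zero_grad and is_psd_2x2(hess_f(x))

print(satisfies_min_conditions([1.0, -0.5]))   # True
print(satisfies_min_conditions([0.0, 0.0]))    # False: the gradient is non-zero
```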

Unconstrained Maximization

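Assume:

Let $f : \mathbb{R}^n \to \mathbb{R}$ be a twice continuously differentiable function.

Necessary conditions for a local maximum:

If $x^*$ is a local maximum of $f(x)$, then

1. $f$ has zero gradient at $x^*$:

$$\nabla_x f(x^*) = 0$$

2. and the Hessian of $f$ at $x^*$ is negative semi-definite:

$$v^t (\nabla^2 f(x^*)) \, v \le 0, \quad \forall v \in \mathbb{R}^n$$

where

$$\nabla^2 f(x) = \begin{pmatrix} \frac{\partial^2 f(x)}{\partial x_1^2} & \cdots & \frac{\partial^2 f(x)}{\partial x_1 \partial x_n} \\ \vdots & \ddots & \vdots \\ \frac{\partial^2 f(x)}{\partial x_n \partial x_1} & \cdots & \frac{\partial^2 f(x)}{\partial x_n^2} \end{pmatrix}$$

If the gradient is zero and the Hessian is negative definite, the conditions are also sufficient: $x^*$ is then a strict local maximum.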

Constrained Optimization: Equality Constraints

Tutorial Example

Problem:

This is the constrained optimization problem we want to solve

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } h(x) = 0$$

where

$$f(x) = x_1 + x_2 \quad \text{and} \quad h(x) = x_1^2 + x_2^2 - 2$$

Tutorial example - Cost function

[Figure: iso-contours of $f(x) = x_1 + x_2$ in the $(x_1, x_2)$ plane, drawn for $x_1 + x_2 = -2, -1, 0, 1, 2$.]

Tutorial example - Feasible region

[Figure: the same iso-contours of $f(x)$ in the $(x_1, x_2)$ plane, with the feasible region $h(x) = 0$ overlaid, where $h(x) = x_1^2 + x_2^2 - 2$.]

Given a point $x_F$ on the constraint surface

[Figure: a feasible point $x_F$ on the constraint surface and a displacement $\delta x$, in the $(x_1, x_2)$ plane.]

Find $\delta x$ s.t. $h(x_F + \delta x) = 0$ and $f(x_F + \delta x) < f(x_F)$?

Condition to decrease the cost function

[Figure: the direction $-\nabla_x f(x_F)$ drawn at $x_F$.]

At any point $\tilde{x}$ the direction of steepest descent of the cost function $f(x)$ is given by $-\nabla_x f(\tilde{x})$.

[Figure: the set of displacements $\delta x$ for which $f(x_F + \delta x) < f(x_F)$.]

To move $\delta x$ from $x$ such that $f(x + \delta x) < f(x)$ we must have

$$\delta x \cdot (-\nabla_x f(x)) > 0$$

Condition to remain on the constraint surface

[Figure: the normal $\nabla_x h(x_F)$ drawn at $x_F$.]

Normals to the constraint surface are given by $\nabla_x h(x)$.

Note that the direction of the normal is arbitrary, as the constraint can be imposed as either $h(x) = 0$ or $-h(x) = 0$.

[Figure: the direction orthogonal to $\nabla_x h(x_F)$.]

To move a small $\delta x$ from $x$ and remain on the constraint surface we have to move in a direction orthogonal to $\nabla_x h(x)$.

To summarize...

If $x_F$ lies on the constraint surface:

setting $\delta x$ orthogonal to $\nabla_x h(x_F)$ ensures $h(x_F + \delta x) = 0$ (to first order in $\delta x$).

And $f(x_F + \delta x) < f(x_F)$ only if

$$\delta x \cdot (-\nabla_x f(x_F)) > 0$$
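The summary above can be checked numerically on the tutorial example. The sketch below takes a feasible point $x_F$ on the circle $h(x) = x_1^2 + x_2^2 - 2 = 0$, builds a small step $\delta x$ orthogonal to $\nabla_x h(x_F)$, and tests whether $\delta x \cdot (-\nabla_x f(x_F)) > 0$. The point $x_F = (\sqrt{2}, 0)$, the step size, and the helper names are assumptions chosen for illustration.

```python
import math

def f(x):
    return x[0] + x[1]

def h(x):
    return x[0] ** 2 + x[1] ** 2 - 2.0

def grad_f(x):
    return [1.0, 1.0]

def grad_h(x):
    return [2.0 * x[0], 2.0 * x[1]]

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

def tangent_step(x, size):
    """Step of length `size` orthogonal to grad h(x): the normal rotated by 90 degrees."""
    n = grad_h(x)
    norm = math.hypot(n[0], n[1])
    return [-size * n[1] / norm, size * n[0] / norm]

x_F = [math.sqrt(2.0), 0.0]            # a feasible point (assumed for this example)
dx = tangent_step(x_F, 1e-3)
if dot(dx, [-g for g in grad_f(x_F)]) <= 0:
    dx = [-d for d in dx]              # flip to the descending tangent direction

x_new = [x_F[0] + dx[0], x_F[1] + dx[1]]
print(abs(dot(dx, grad_h(x_F))) < 1e-12)   # the step is orthogonal to the normal
print(f(x_new) < f(x_F))                   # the cost decreases
print(abs(h(x_new)) < 1e-5)                # the constraint holds to first order
```

At this $x_F$ a feasible descent step exists, so $x_F$ is not a constrained local minimum.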

Condition for a local optimum

Consider the case when

$$-\nabla_x f(x_F) = \mu \nabla_x h(x_F)$$

where $\mu$ is a scalar.

When this occurs, if $\delta x$ is orthogonal to $\nabla_x h(x_F)$ then

$$\delta x \cdot (-\nabla_x f(x_F)) = \mu \, \delta x \cdot \nabla_x h(x_F) = 0$$

We cannot move from $x_F$ so as to remain on the constraint surface and decrease (or increase) the cost function.

This case corresponds to a constrained local optimum!

Condition for a local optimum

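[Figure: the constraint surface in the $(x_1, x_2)$ plane with its two critical points marked.]

A constrained local optimum occurs at $x^*$ when $\nabla_x f(x^*)$ and $\nabla_x h(x^*)$ are parallel, that is

$$-\nabla_x f(x^*) = \mu \nabla_x h(x^*)$$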

From this fact Lagrange Multipliers make sense

Remember our constrained optimization problem is

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } h(x) = 0$$

Define the Lagrangian as

$$\mathcal{L}(x, \mu) = f(x) + \mu h(x)$$

(note that $\mathcal{L}(x^*, \mu^*) = f(x^*)$).

Then, at a local minimum $x^*$ with $\nabla_x h(x^*) \neq 0$, there exists a unique $\mu^*$ s.t.

1. $\nabla_x \mathcal{L}(x^*, \mu^*) = 0$, which encodes $-\nabla_x f(x^*) = \mu^* \nabla_x h(x^*)$
2. $\nabla_\mu \mathcal{L}(x^*, \mu^*) = 0$, which encodes the equality constraint $h(x^*) = 0$
3. $y^t (\nabla^2_{xx} \mathcal{L}(x^*, \mu^*)) \, y \ge 0 \quad \forall y$ s.t. $\nabla_x h(x^*)^t y = 0$

A positive definite Hessian tells us we have a local minimum.
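For the tutorial example these conditions can be solved by hand: $\nabla_x \mathcal{L} = 0$ gives $1 + 2 \mu x_1 = 0$ and $1 + 2 \mu x_2 = 0$, so $x_1 = x_2 = -1/(2\mu)$, and the constraint then forces $x_1 = x_2 = \pm 1$. The sketch below verifies both critical points numerically and uses the second-order condition to pick out the minimum. The function names are assumptions made for this example.

```python
def grad_x_lagrangian(x, mu):
    """Gradient in x of L(x, mu) = (x1 + x2) + mu * (x1^2 + x2^2 - 2)."""
    return [1.0 + 2.0 * mu * x[0], 1.0 + 2.0 * mu * x[1]]

def h(x):
    return x[0] ** 2 + x[1] ** 2 - 2.0

def curvature_on_tangent(x, mu):
    """y^t (Hessian_xx L) y for a unit tangent y; here Hessian_xx L = 2*mu*I."""
    return 2.0 * mu

# Critical points obtained by solving conditions 1 and 2 by hand.
candidates = [([-1.0, -1.0], 0.5), ([1.0, 1.0], -0.5)]

results = []
for x, mu in candidates:
    stationary = all(abs(g) < 1e-12 for g in grad_x_lagrangian(x, mu))
    feasible = abs(h(x)) < 1e-12
    is_min = stationary and feasible and curvature_on_tangent(x, mu) > 0
    results.append((x, mu, stationary, feasible, is_min))
    print(x, mu, stationary, feasible, is_min)
```

Both points satisfy conditions 1 and 2, but only $x^* = (-1, -1)$ with $\mu^* = 1/2$ has positive curvature along the constraint, so it is the constrained minimum with $f(x^*) = -2$; the point $(1, 1)$ is the constrained maximum.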

The case of multiple equality constraints

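The constrained optimization problem is

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } h_i(x) = 0 \text{ for } i = 1, \dots, l$$

Construct the Lagrangian (introduce a multiplier for each constraint)

$$\mathcal{L}(x, \mu) = f(x) + \sum_{i=1}^{l} \mu_i h_i(x) = f(x) + \mu^t h(x)$$

Then, at a local minimum $x^*$ where the gradients $\nabla_x h_i(x^*)$ are linearly independent, there exists a unique $\mu^*$ s.t.

1. $\nabla_x \mathcal{L}(x^*, \mu^*) = 0$
2. $\nabla_\mu \mathcal{L}(x^*, \mu^*) = 0$
3. $y^t (\nabla^2_{xx} \mathcal{L}(x^*, \mu^*)) \, y \ge 0 \quad \forall y$ s.t. $\nabla_x h(x^*)^t y = 0$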

Constrained Optimization: Inequality Constraints

Tutorial Example - Case 1

Problem:

Consider this constrained optimization problem

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } g(x) \le 0$$

where

$$f(x) = x_1^2 + x_2^2 \quad \text{and} \quad g(x) = x_1^2 + x_2^2 - 1$$

Tutorial example - Cost function

[Figure: iso-contours of $f(x) = x_1^2 + x_2^2$ in the $(x_1, x_2)$ plane, with the minimum of $f(x)$ marked.]

Tutorial example - Feasible region

[Figure: iso-contours of $f(x)$ in the $(x_1, x_2)$ plane, with the feasible region $g(x) \le 0$ overlaid, where $g(x) = x_1^2 + x_2^2 - 1$.]

How do we recognize if $x_F$ is at a local optimum?

[Figure: a feasible point $x_F$ in the $(x_1, x_2)$ plane.]

How can we recognize that $x_F$ is at a local minimum? Remember $x_F$ denotes a feasible point.

Easy in this case

[Figure: the unconstrained minimum of $f(x)$ lies within the feasible region.]

Necessary and sufficient conditions for a constrained local minimum are the same as for an unconstrained local minimum:

$$\nabla_x f(x_F) = 0 \quad \text{and} \quad \nabla_{xx} f(x_F) \text{ is positive definite}$$

This Tutorial Example has an inactive constraint

Problem:

Our constrained optimization problem

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } g(x) \le 0$$

where

$$f(x) = x_1^2 + x_2^2 \quad \text{and} \quad g(x) = x_1^2 + x_2^2 - 1$$

The constraint is not active at the local minimum ($g(x^*) < 0$). Therefore the local minimum is identified by the same conditions as in the unconstrained case.
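A short check of this case, using the tutorial functions $f(x) = x_1^2 + x_2^2$ and $g(x) = x_1^2 + x_2^2 - 1$: the unconstrained minimiser of $f$ is the origin, and the sketch confirms that this point is strictly feasible, so the constraint plays no role. The helper names are illustrative assumptions.

```python
def grad_f(x):
    """Gradient of f(x) = x1^2 + x2^2."""
    return [2.0 * x[0], 2.0 * x[1]]

def g(x):
    """Inequality constraint g(x) = x1^2 + x2^2 - 1 <= 0."""
    return x[0] ** 2 + x[1] ** 2 - 1.0

x_star = [0.0, 0.0]                        # unconstrained minimiser of f
gradient_is_zero = all(v == 0.0 for v in grad_f(x_star))
constraint_inactive = g(x_star) < 0.0      # strictly inside the feasible region

print(gradient_is_zero, constraint_inactive)   # True True
```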

Tutorial Example - Case 2

Problem:

This is the constrained optimization problem we want to solve

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } g(x) \le 0$$

where

$$f(x) = (x_1 - 1.1)^2 + (x_2 - 1.1)^2 \quad \text{and} \quad g(x) = x_1^2 + x_2^2 - 1$$

Tutorial example - Cost function

[Figure: iso-contours of $f(x) = (x_1 - 1.1)^2 + (x_2 - 1.1)^2$ in the $(x_1, x_2)$ plane, with the minimum of $f(x)$ marked.]

Tutorial example - Feasible region

[Figure: iso-contours of $f(x)$ in the $(x_1, x_2)$ plane, with the feasible region $g(x) \le 0$ overlaid, where $g(x) = x_1^2 + x_2^2 - 1$.]

How do we recognize if $x_F$ is at a local optimum?

[Figure: a feasible point $x_F$ in the $(x_1, x_2)$ plane; the unconstrained local minimum of $f(x)$ lies outside of the feasible region.]

How can we tell if $x_F$ is at a local minimum? Remember $x_F$ denotes a feasible point.

The unconstrained local minimum of $f(x)$ lies outside of the feasible region, so the constrained local minimum occurs on the constraint surface.

Effectively we have an optimization problem with an equality constraint: $g(x) = 0$.

Given an equality constraint

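[Figure: the constraint surface $g(x) = 0$ in the $(x_1, x_2)$ plane.]

A local optimum occurs when $\nabla_x f(x)$ and $\nabla_x g(x)$ are parallel:

$$-\nabla_x f(x) = \lambda \nabla_x g(x)$$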

Want a constrained local minimum...

[Figure: a point $x_F$ that is not a constrained local minimum, as $-\nabla_x f(x_F)$ points in towards the feasible region.]

[Figure: a point $x_F$ that is a constrained local minimum, as $-\nabla_x f(x_F)$ points away from the feasible region.]

A constrained local minimum occurs when $-\nabla_x f(x)$ and $\nabla_x g(x)$ point in the same direction:

$$-\nabla_x f(x) = \lambda \nabla_x g(x) \quad \text{and} \quad \lambda > 0$$
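For the Case 2 example the condition $-\nabla_x f(x) = \lambda \nabla_x g(x)$ reads $-2 (x_i - 1.1) = 2 \lambda x_i$, so $x_1 = x_2 = 1.1 / (1 + \lambda)$. Combined with $g(x) = 0$ this leaves two candidates, $x_1 = x_2 = \pm 1/\sqrt{2}$. The sketch below computes $\lambda$ at each candidate and keeps the one with $\lambda > 0$. This is a hand-derived illustration, and the variable and function names are assumptions.

```python
import math

def grad_f(x):
    """Gradient of f(x) = (x1 - 1.1)^2 + (x2 - 1.1)^2."""
    return [2.0 * (x[0] - 1.1), 2.0 * (x[1] - 1.1)]

def grad_g(x):
    """Gradient of g(x) = x1^2 + x2^2 - 1."""
    return [2.0 * x[0], 2.0 * x[1]]

def multiplier(x):
    """Solve -grad f(x) = lam * grad g(x) for lam using the first component."""
    return -grad_f(x)[0] / grad_g(x)[0]

s = 1.0 / math.sqrt(2.0)
minima = []
for x in ([s, s], [-s, -s]):               # the two points where the gradients are parallel
    lam = multiplier(x)
    # The second component must give the same lam for the gradients to be parallel.
    parallel = abs(-grad_f(x)[1] - lam * grad_g(x)[1]) < 1e-12
    if parallel and lam > 0:
        minima.append((x, lam))
    print(x, round(lam, 4), parallel, lam > 0)
```

Only $x = (1/\sqrt{2}, 1/\sqrt{2})$ has $\lambda = 1.1\sqrt{2} - 1 > 0$, so it is the constrained local minimum; at the opposite point $\lambda < 0$ and $-\nabla_x f$ points into the feasible region.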

Summary of optimization with one inequality constraint

Given

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } g(x) \le 0$$

If $x^*$ corresponds to a constrained local minimum then

Case 1: Unconstrained local minimum occurs in the feasible region.

1. $g(x^*) < 0$
2. $\nabla_x f(x^*) = 0$
3. $\nabla_{xx} f(x^*)$ is a positive semi-definite matrix.

Case 2: Unconstrained local minimum lies outside the feasible region.

1. $g(x^*) = 0$
2. $-\nabla_x f(x^*) = \lambda \nabla_x g(x^*)$ with $\lambda > 0$
3. $y^t \, \nabla_{xx} \mathcal{L}(x^*, \lambda) \, y \ge 0$ for all $y$ orthogonal to $\nabla_x g(x^*)$.

Karush-Kuhn-Tucker conditions encode these conditions

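Given the optimization problem

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } g(x) \le 0$$

Define the Lagrangian as

$$\mathcal{L}(x, \lambda) = f(x) + \lambda g(x)$$

Then, at a local minimum $x^*$ (with $\nabla_x g(x^*) \neq 0$ if the constraint is active), there exists a unique $\lambda^*$ s.t.

1. $\nabla_x \mathcal{L}(x^*, \lambda^*) = 0$
2. $\lambda^* \ge 0$
3. $\lambda^* g(x^*) = 0$
4. $g(x^*) \le 0$
5. Plus positive definite constraints on $\nabla_{xx} \mathcal{L}(x^*, \lambda^*)$.

These are the KKT conditions.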

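Let's check what the KKT conditions imply

Case 1 - Inactive constraint:

When $\lambda^* = 0$ we have $\mathcal{L}(x^*, \lambda^*) = f(x^*)$.

Condition KKT 1 $\Rightarrow \nabla_x f(x^*) = 0$.

Condition KKT 4 $\Rightarrow x^*$ is a feasible point.

Case 2 - Active constraint:

When $\lambda^* > 0$ we have $\mathcal{L}(x^*, \lambda^*) = f(x^*) + \lambda^* g(x^*)$.

Condition KKT 1 $\Rightarrow -\nabla_x f(x^*) = \lambda^* \nabla_x g(x^*)$.

Condition KKT 3 $\Rightarrow g(x^*) = 0$.

Condition KKT 3 also $\Rightarrow \mathcal{L}(x^*, \lambda^*) = f(x^*)$.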
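Both cases can be checked with one routine. The sketch below evaluates KKT conditions 1 to 4 for a single inequality constraint at a given pair $(x^*, \lambda^*)$ and applies it to the two tutorial examples. The function `kkt_holds` and its arguments are hypothetical names introduced here, and the second-order condition (KKT 5) is not checked.

```python
import math

def kkt_holds(grad_f, g, grad_g, x, lam, tol=1e-9):
    """Check KKT conditions 1-4 for min f(x) s.t. g(x) <= 0 at (x, lam)."""
    gf, gg = grad_f(x), grad_g(x)
    stationarity = all(abs(a + lam * b) <= tol for a, b in zip(gf, gg))  # KKT 1
    dual_feasible = lam >= -tol                                          # KKT 2
    complementary = abs(lam * g(x)) <= tol                               # KKT 3
    primal_feasible = g(x) <= tol                                        # KKT 4
    return stationarity and dual_feasible and complementary and primal_feasible

def g(x):
    return x[0] ** 2 + x[1] ** 2 - 1.0

def grad_g(x):
    return [2.0 * x[0], 2.0 * x[1]]

# Case 1: f(x) = x1^2 + x2^2, inactive constraint, lam* = 0.
grad_f1 = lambda x: [2.0 * x[0], 2.0 * x[1]]
case1 = kkt_holds(grad_f1, g, grad_g, [0.0, 0.0], 0.0)

# Case 2: f(x) = (x1 - 1.1)^2 + (x2 - 1.1)^2, active constraint, lam* > 0.
grad_f2 = lambda x: [2.0 * (x[0] - 1.1), 2.0 * (x[1] - 1.1)]
s = 1.0 / math.sqrt(2.0)
case2 = kkt_holds(grad_f2, g, grad_g, [s, s], 1.1 * math.sqrt(2.0) - 1.0)

# The unconstrained minimiser of Case 2 is infeasible, so KKT 4 fails there.
infeasible = kkt_holds(grad_f2, g, grad_g, [1.1, 1.1], 0.0)

print(case1, case2, infeasible)   # True True False
```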

KKT conditions for multiple inequality constraints

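Given the optimization problem

$$\min_{x \in \mathbb{R}^2} f(x) \quad \text{subject to } g_j(x) \le 0 \text{ for } j = 1, \dots, m$$

Define the Lagrangian as

$$\mathcal{L}(x, \lambda) = f(x) + \sum_{j=1}^{m} \lambda_j g_j(x) = f(x) + \lambda^t g(x)$$

Then, at a local minimum $x^*$ where the gradients of the active constraints are linearly independent, there exists a unique $\lambda^*$ s.t.

1. $\nabla_x \mathcal{L}(x^*, \lambda^*) = 0$
2. $\lambda_j^* \ge 0$ for $j = 1, \dots, m$
3. $\lambda_j^* g_j(x^*) = 0$ for $j = 1, \dots, m$
4. $g_j(x^*) \le 0$ for $j = 1, \dots, m$
5. Plus positive definite constraints on $\nabla_{xx} \mathcal{L}(x^*, \lambda^*)$.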

KKT for multiple equality & inequality constraints

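Given the constrained optimization problem

$$\min_{x \in \mathbb{R}^2} f(x)$$

subject to

$$h_i(x) = 0 \text{ for } i = 1, \dots, l \quad \text{and} \quad g_j(x) \le 0 \text{ for } j = 1, \dots, m$$

Define the Lagrangian as

$$\mathcal{L}(x, \mu, \lambda) = f(x) + \mu^t h(x) + \lambda^t g(x)$$

Then, at a local minimum $x^*$ where the gradients of the equality constraints and of the active inequality constraints are linearly independent, there exist unique $\mu^*, \lambda^*$ s.t.

1. $\nabla_x \mathcal{L}(x^*, \mu^*, \lambda^*) = 0$
2. $\lambda_j^* \ge 0$ for $j = 1, \dots, m$
3. $\lambda_j^* g_j(x^*) = 0$ for $j = 1, \dots, m$
4. $g_j(x^*) \le 0$ for $j = 1, \dots, m$
5. $h(x^*) = 0$
6. Plus positive definite constraints on $\nabla_{xx} \mathcal{L}(x^*, \mu^*, \lambda^*)$.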
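To see conditions 1 to 5 working together, the sketch below measures how far a candidate $(x, \mu, \lambda)$ is from satisfying them. The test problem is a hypothetical one that is not in the slides: minimise $f(x) = x_1^2 + x_2^2$ subject to $h(x) = x_1 + x_2 - 1 = 0$ and $g(x) = x_1 - 0.25 \le 0$. The function `kkt_violation` and its argument layout are assumptions made for illustration, and the second-order condition 6 is not checked.

```python
def kkt_violation(grad_f, hs, grad_hs, gs, grad_gs, x, mu, lam):
    """Return the largest violation of KKT conditions 1-5 at (x, mu, lam)."""
    # KKT 1: grad f + sum_i mu_i grad h_i + sum_j lam_j grad g_j = 0
    grad_L = list(grad_f(x))
    for m, gh in zip(mu, grad_hs):
        grad_L = [a + m * b for a, b in zip(grad_L, gh(x))]
    for l, gg in zip(lam, grad_gs):
        grad_L = [a + l * b for a, b in zip(grad_L, gg(x))]
    violations = [abs(v) for v in grad_L]
    violations += [max(0.0, -l) for l in lam]                 # KKT 2: lam_j >= 0
    violations += [abs(l * g(x)) for l, g in zip(lam, gs)]    # KKT 3: lam_j g_j(x) = 0
    violations += [max(0.0, g(x)) for g in gs]                # KKT 4: g_j(x) <= 0
    violations += [abs(h(x)) for h in hs]                     # KKT 5: h_i(x) = 0
    return max(violations)

# Hypothetical problem: f = x1^2 + x2^2, h = x1 + x2 - 1, g = x1 - 0.25.
grad_f = lambda x: [2.0 * x[0], 2.0 * x[1]]
hs = [lambda x: x[0] + x[1] - 1.0]
grad_hs = [lambda x: [1.0, 1.0]]
gs = [lambda x: x[0] - 0.25]
grad_gs = [lambda x: [1.0, 0.0]]

# Candidate derived by hand: the inequality is active, mu = -1.5, lam = 1.
at_solution = kkt_violation(grad_f, hs, grad_hs, gs, grad_gs,
                            [0.25, 0.75], [-1.5], [1.0])
# The equality-only optimum (0.5, 0.5) violates g(x) <= 0.
at_infeasible = kkt_violation(grad_f, hs, grad_hs, gs, grad_gs,
                              [0.5, 0.5], [-1.0], [0.0])

print(at_solution, at_infeasible)   # 0.0 0.25
```

Returning the largest violation rather than a boolean is a design choice of this sketch: it makes it easy to see which candidates are close to satisfying the conditions when the inputs come from a numerical solver.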

You might also like

- Chap1 Lec1 Introduction To NLODocument3 pagesChap1 Lec1 Introduction To NLOUzair AslamNo ratings yet

- Optimal Control Dynamic ProgrammingDocument18 pagesOptimal Control Dynamic ProgrammingPuloma DwibediNo ratings yet

- Econ11 HW PDFDocument207 pagesEcon11 HW PDFAlbertus MuheuaNo ratings yet

- Mathematics For Economics (Implicit Function Theorm)Document10 pagesMathematics For Economics (Implicit Function Theorm)ARUPARNA MAITYNo ratings yet

- Dynamic Programming Value IterationDocument36 pagesDynamic Programming Value Iterationernestdautovic100% (1)

- Libro - An Introduction To Continuous OptimizationDocument399 pagesLibro - An Introduction To Continuous OptimizationAngelNo ratings yet

- Notes 3Document8 pagesNotes 3Sriram BalasubramanianNo ratings yet

- Engineering Optimization Methods Constrained ProblemsDocument49 pagesEngineering Optimization Methods Constrained Problemsprasaad08No ratings yet

- Constraint QualificationDocument7 pagesConstraint QualificationWhattlial53No ratings yet

- CV and EV explained: Calculating compensating and equivalent variationsDocument8 pagesCV and EV explained: Calculating compensating and equivalent variationsRichard Ding100% (1)

- Dynamic ProgrammingDocument52 pagesDynamic ProgrammingattaullahchNo ratings yet

- Learning Hessian Matrix PDFDocument100 pagesLearning Hessian Matrix PDFSirajus SalekinNo ratings yet

- Maximum Value Functions and The Envelope TheoremDocument10 pagesMaximum Value Functions and The Envelope TheoremS mukherjeeNo ratings yet

- Lecture - 10 Monotone Comparative StaticsDocument20 pagesLecture - 10 Monotone Comparative StaticsAhmed GoudaNo ratings yet

- Real ITSM: How IT Is Really Done in The Real World - From An ITIL and ITSM ViewpointDocument15 pagesReal ITSM: How IT Is Really Done in The Real World - From An ITIL and ITSM Viewpointitskeptic100% (1)

- Advanced Microeconomics: Envelope TheoremDocument3 pagesAdvanced Microeconomics: Envelope TheoremTianxu ZhuNo ratings yet

- Lagrange Multipliers: Solving Multivariable Optimization ProblemsDocument5 pagesLagrange Multipliers: Solving Multivariable Optimization ProblemsOctav DragoiNo ratings yet

- Envelope Theorem and Identities (Good)Document17 pagesEnvelope Theorem and Identities (Good)sadatnfsNo ratings yet

- Constrained Optimization Problem SolvedDocument6 pagesConstrained Optimization Problem SolvedMikeHuynhNo ratings yet

- Optimization Methods (MFE) : Elena PerazziDocument28 pagesOptimization Methods (MFE) : Elena Perazziddd huangNo ratings yet

- Analyzing GDP: Components, Measurement, and LimitationsDocument70 pagesAnalyzing GDP: Components, Measurement, and LimitationsMichelleJohnsonNo ratings yet

- Optimization Methods (MFE) : Elena PerazziDocument31 pagesOptimization Methods (MFE) : Elena PerazziRoy Sarkis100% (1)

- Karush Kuhn TuckerDocument14 pagesKarush Kuhn TuckerAVALLESTNo ratings yet

- Integer ProgrammingDocument58 pagesInteger ProgrammingshahabparastNo ratings yet

- 1 Functions of Several VariablesDocument4 pages1 Functions of Several VariablesMohammedTalibNo ratings yet

- Integer ProgrammingDocument51 pagesInteger Programmingapi-26143879No ratings yet

- Problem Set 1 - SolutionsDocument4 pagesProblem Set 1 - SolutionsAlex FavelaNo ratings yet

- Lecture3 PDFDocument42 pagesLecture3 PDFAledavid Ale100% (1)

- Cobb DouglasDocument14 pagesCobb DouglasRob WolfeNo ratings yet

- IFRS 9 Impairment PDFDocument26 pagesIFRS 9 Impairment PDFgreisikarensaNo ratings yet

- Binomial TheoremDocument24 pagesBinomial TheoremEdwin Okoampa BoaduNo ratings yet

- Dynamic ProgrammingDocument36 pagesDynamic Programmingfatma-taherNo ratings yet

- Tutorial 5 - Derivative of Multivariable FunctionsDocument2 pagesTutorial 5 - Derivative of Multivariable FunctionsИбрагим Ибрагимов0% (1)

- BDO PSAK71 Financial Inst RevisiDocument6 pagesBDO PSAK71 Financial Inst RevisiNico Hadi GelianNo ratings yet

- Maximization and Lagrange MultipliersDocument20 pagesMaximization and Lagrange MultipliersMiloje ĐukanovićNo ratings yet

- Simplex MethodDocument59 pagesSimplex Methodahmedelebyary100% (1)

- OQM Lecture Note - Part 8 Unconstrained Nonlinear OptimisationDocument23 pagesOQM Lecture Note - Part 8 Unconstrained Nonlinear OptimisationdanNo ratings yet

- Lecture 2Document19 pagesLecture 2Jaco GreeffNo ratings yet

- 06 Subgradients ScribedDocument5 pages06 Subgradients ScribedFaragNo ratings yet

- Slides 01Document139 pagesSlides 01supeng huangNo ratings yet

- 23 Lect DualAlgoDocument51 pages23 Lect DualAlgozhongyu xiaNo ratings yet

- Nonlinear OptimizationDocument6 pagesNonlinear OptimizationKibria PrangonNo ratings yet

- Non Linear Optimization ModifiedDocument78 pagesNon Linear Optimization ModifiedSteven DreckettNo ratings yet

- B For I 1,, M: N J J JDocument19 pagesB For I 1,, M: N J J Jضيٍَِِ الكُمرNo ratings yet

- Optimization-Based Control: Richard M. Murray Control and Dynamical Systems California Institute of TechnologyDocument21 pagesOptimization-Based Control: Richard M. Murray Control and Dynamical Systems California Institute of TechnologyBang JulNo ratings yet

- Chapter 3Document43 pagesChapter 3Di WuNo ratings yet

- 09 - nlp1 - OnlineDocument23 pages09 - nlp1 - OnlineHuda LizamanNo ratings yet

- Unconstrained Optimization Math CampDocument5 pagesUnconstrained Optimization Math CampShreyasKamatNo ratings yet

- Operation Research: X, X, .., X H) F (X, H,, H,, HDocument31 pagesOperation Research: X, X, .., X H) F (X, H,, H,, HTahera ParvinNo ratings yet

- KKT Conditions and Duality: March 23, 2012Document36 pagesKKT Conditions and Duality: March 23, 2012chgsnsaiNo ratings yet

- Lect 2 ClassicalDocument29 pagesLect 2 ClassicalJay BhavsarNo ratings yet

- Operaciones Repaso de OptimizacionDocument21 pagesOperaciones Repaso de OptimizacionMigu3lspNo ratings yet

- Optimization Problems ChapterDocument4 pagesOptimization Problems ChapterJǝ Ǝʌan M CNo ratings yet

- Non Linear Programming ProblemsDocument66 pagesNon Linear Programming Problemsbits_who_am_iNo ratings yet

- Operation ResearchDocument23 pagesOperation ResearchTahera ParvinNo ratings yet

- Lecture-02 (Functions and Its Limit)Document16 pagesLecture-02 (Functions and Its Limit)Abidur Rahman ZidanNo ratings yet

- Unconstrained and Constrained OptimizationDocument31 pagesUnconstrained and Constrained OptimizationPhilip PCNo ratings yet

- UGCM1653 - Chapter 2 Calculus - 202001 PDFDocument30 pagesUGCM1653 - Chapter 2 Calculus - 202001 PDF木辛耳总No ratings yet

- Subgradients: Subgradient Calculus Duality and Optimality Conditions Directional DerivativeDocument39 pagesSubgradients: Subgradient Calculus Duality and Optimality Conditions Directional DerivativeDAVID MORANTENo ratings yet

- HL Syll Lent2020Document1 pageHL Syll Lent2020matchman6No ratings yet

- Prove It - The Art of Mathematical Argument: 1 Front CoverDocument4 pagesProve It - The Art of Mathematical Argument: 1 Front Covermatchman6No ratings yet

- Optimisation For EconomistsDocument5 pagesOptimisation For EconomistsWill MatchamNo ratings yet

- Week 5 Class EC221Document12 pagesWeek 5 Class EC221matchman6No ratings yet

- Non Parametric Econometrics SlidesDocument57 pagesNon Parametric Econometrics Slidesmatchman6No ratings yet

- Sieve Estimation of S-NP ModelsDocument27 pagesSieve Estimation of S-NP Modelsmatchman6No ratings yet

- 2001, Pena, PrietoDocument25 pages2001, Pena, Prietomatchman6No ratings yet

- RooterDocument4 pagesRooterJessica RiveraNo ratings yet

- Vickrey Auction EquilibriaDocument9 pagesVickrey Auction Equilibriamatchman6No ratings yet

- Programming ExercisesDocument2 pagesProgramming ExercisesDaryl Ivan Empuerto HisolaNo ratings yet

- MCR 3U5 CPT Part 2Document4 pagesMCR 3U5 CPT Part 2Ronit RoyanNo ratings yet

- Advanced Higher Maths Exam 2015Document8 pagesAdvanced Higher Maths Exam 2015StephenMcINo ratings yet

- Crankweb Deflections 9 Cyl ELANDocument2 pagesCrankweb Deflections 9 Cyl ELANСлавик МосинNo ratings yet

- Linear equations worksheet solutionsDocument4 pagesLinear equations worksheet solutionsHari Kiran M PNo ratings yet

- Dost Jls S PrimerDocument17 pagesDost Jls S PrimerShaunrey SalimbagatNo ratings yet

- M.A.M College of Engineering: Department of Electrical and Electronics EnggDocument16 pagesM.A.M College of Engineering: Department of Electrical and Electronics EnggKrishna ChaitanyaNo ratings yet

- Materials Selection ProcessDocument9 pagesMaterials Selection ProcessMarskal EdiNo ratings yet

- 1.2 Student Workbook ESSDocument7 pages1.2 Student Workbook ESSTanay shahNo ratings yet

- General Certificate of Education June 2008 Advanced Extension AwardDocument12 pagesGeneral Certificate of Education June 2008 Advanced Extension AwardDaniel ConwayNo ratings yet

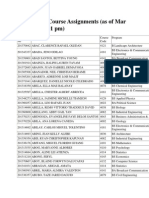

- UP Diliman Course AssignmentsDocument82 pagesUP Diliman Course Assignmentsgamingonly_accountNo ratings yet

- Fastener Process SimiulationDocument4 pagesFastener Process SimiulationMehran ZaryounNo ratings yet

- Methods and Algorithms For Advanced Process ControlDocument8 pagesMethods and Algorithms For Advanced Process ControlJohn CoucNo ratings yet

- Virial TheoremDocument3 pagesVirial Theoremdodge_kevinNo ratings yet

- Induction MotorDocument27 pagesInduction MotorNandhini SaranathanNo ratings yet

- KANNUR UNIVERSITY FACULTY OF ENGINEERING Curricula, Scheme of Examinations & Syllabus for COMBINED I & II SEMESTERS of B.Tech. Degree Programme with effect from 2007 AdmissionsDocument23 pagesKANNUR UNIVERSITY FACULTY OF ENGINEERING Curricula, Scheme of Examinations & Syllabus for COMBINED I & II SEMESTERS of B.Tech. Degree Programme with effect from 2007 AdmissionsManu K MNo ratings yet

- Orifice SizingDocument2 pagesOrifice SizingAvinav Kumar100% (1)

- Jan Corné Olivier - Linear Systems and Signals (2019)Document304 pagesJan Corné Olivier - Linear Systems and Signals (2019)fawNo ratings yet

- J.F.M. Wiggenraad and D.G. Zimcik: NLR-TP-2001-064Document20 pagesJ.F.M. Wiggenraad and D.G. Zimcik: NLR-TP-2001-064rtplemat lematNo ratings yet

- Ziad Masri - Reality Unveiled The Hidden KeyDocument172 pagesZiad Masri - Reality Unveiled The Hidden KeyAivlys93% (15)

- On The Residual Shearing Strength of ClaysDocument5 pagesOn The Residual Shearing Strength of ClaysHUGINo ratings yet

- IS 1570 Part 5Document18 pagesIS 1570 Part 5Sheetal JindalNo ratings yet

- Anemometer ActivityDocument4 pagesAnemometer ActivityGab PerezNo ratings yet

- Quantum Mechanics Module NotesDocument12 pagesQuantum Mechanics Module NotesdtrhNo ratings yet

- Malla Curricular Ingenieria Civil UNTRMDocument1 pageMalla Curricular Ingenieria Civil UNTRMhugo maldonado mendoza50% (2)

- FFT window and transform lengthsDocument5 pagesFFT window and transform lengthsNguyen Quoc DoanNo ratings yet

- Interference QuestionsDocument11 pagesInterference QuestionsKhaled SaadiNo ratings yet

- Topic 5 - Criticality of Homogeneous ReactorsDocument53 pagesTopic 5 - Criticality of Homogeneous ReactorsSit LucasNo ratings yet

- 2SD2525 Datasheet en 20061121Document5 pages2SD2525 Datasheet en 20061121Giannis MartinosNo ratings yet

- Chapter 11Document2 pagesChapter 11naniac raniNo ratings yet