Professional Documents

Culture Documents

The Fundamental Theorem of Markov Chains

Uploaded by

Michael PearsonOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

The Fundamental Theorem of Markov Chains

Uploaded by

Michael PearsonCopyright:

Available Formats

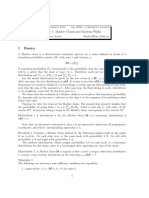

THE FUNDAMENTAL THEOREM OF MARKOV CHAINS

AARON PLAVNICK

Abstract. This paper provides some background for and proves the Fundamental Theorem of Markov Chains. It provides some basic definitions and notation for recurrence, periodicity, and stationary distributions.

Contents

1. Definitions and Background

2. Fundamental Theorem of Markov Chains

References

1. Definitions and Background

We begin by defining a Markov Chain.

Definition 1.1. Let $\{X_0, X_1, \ldots\}$ be a sequence of random variables and let $Z = \{0, 1, 2, \ldots\}$ be the union of the sets of their realizations. Then $\{X_0, X_1, \ldots\}$ is called a discrete-time Markov Chain with state space $Z$ if, for all $n$ and all states $i_0, \ldots, i_{n+1} \in Z$,

$$P(X_{n+1} = i_{n+1} \mid X_n = i_n, \ldots, X_0 = i_0) = P(X_{n+1} = i_{n+1} \mid X_n = i_n).$$

Now let us set up some notation for the one-step transition probabilities of the Markov Chain. Let

$$p_{ij}(n) = P(X_{n+1} = j \mid X_n = i), \qquad n = 0, 1, \ldots$$

We will limit ourselves to homogeneous Markov Chains, that is, Markov Chains whose transition probabilities do not evolve in time.

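Definition 1.2. We say that a Markov Chain is homogeneous if its one-step transition probabilities do not depend on $n$, i.e.

$$\forall\, n, m \in \mathbb{N} \cup \{0\} \text{ and } i, j \in Z: \qquad p_{ij}(n) = p_{ij}(m).$$

In this case we write simply $p_{ij}$.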
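As an illustration of the two definitions above, here is a minimal sketch that samples a path from a homogeneous chain. The function name `simulate_chain`, the fixed seed, and the two-state matrix `P` are assumptions made for the example and do not come from the paper.

```python
import random

def simulate_chain(P, start, steps, seed=0):
    """Sample X_0, ..., X_steps from a homogeneous chain with transition matrix P."""
    rng = random.Random(seed)          # fixed seed so the run is reproducible
    states = list(range(len(P)))
    path = [start]
    for _ in range(steps):
        current = path[-1]
        # The next state depends only on the current one (Markov property),
        # and the same row of P is used at every time step (homogeneity).
        nxt = rng.choices(states, weights=P[current])[0]
        path.append(nxt)
    return path

# Hypothetical two-state example, not taken from the paper.
P = [[0.9, 0.1],
     [0.5, 0.5]]
path = simulate_chain(P, start=0, steps=20)
print(path)
```

Because row `P[current]` is the only information used to draw the next state, the history before `current` plays no role, which is exactly the property in Definition 1.1.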

We then define the $m$-step transition probabilities of a homogeneous Markov Chain by

$$p_{ij}^{(m)} = P(X_{n+m} = j \mid X_n = i),$$

where by convention we define

$$p_{ij}^{(0)} = \begin{cases} 1 & \text{if } i = j, \\ 0 & \text{if } i \neq j. \end{cases}$$

Date: August 22, 2008.


With these definitions we are now ready for a quick theorem, the Chapman-Kolmogorov equation, which relates the transition probabilities of a Markov Chain over different numbers of steps.

Theorem 1.3. For every $m$ and every $r \in \mathbb{N} \cup \{0\}$ with $r \le m$,

$$p_{ij}^{(m)} = \sum_{k \in Z} p_{ik}^{(r)} \, p_{kj}^{(m-r)}.$$

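Proof. The proof uses the total probability rule together with the Markovian property. By the total probability rule and the definition of conditional probability,

$$\begin{aligned}
p_{ij}^{(m)} = P(X_m = j \mid X_0 = i) &= \sum_{k \in Z} P(X_m = j, X_r = k \mid X_0 = i) \\
&= \sum_{k \in Z} P(X_m = j \mid X_r = k, X_0 = i) \, P(X_r = k \mid X_0 = i),
\end{aligned}$$

and now by the Markovian property and homogeneity,

$$p_{ij}^{(m)} = \sum_{k \in Z} P(X_m = j \mid X_r = k) \, P(X_r = k \mid X_0 = i) = \sum_{k \in Z} p_{ik}^{(r)} \, p_{kj}^{(m-r)}.$$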

The Chapman-Kolmogorov equation allows us to collect the $m$-step transition probabilities into a matrix. Let us define the matrix

$$P^{(m)} = \left( \left( p_{ij}^{(m)} \right) \right).$$

Corollary 1.4. Observe now that

$$P^{(m)} = P^m,$$

where $P = P^{(1)}$ and $P^m$ denotes the $m$-th power of $P$ under the usual matrix multiplication.

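Proof. We can rewrite the Chapman-Kolmogorov equation in matrix form as

$$P^{(m)} = P^{(r)} P^{(m-r)}, \qquad r \in \mathbb{N} \cup \{0\},\ r \le m.$$

Now we can induct on $m$. It is apparent that

$$P^{(1)} = P.$$

Suppose that $P^{(m)} = P^m$. The matrix form of the equation gives

$$P^{(m+1)} = P^{(r)} P^{(m+1-r)},$$

and choosing $r = m$ yields $P^{(m+1)} = P^{(m)} P^{(1)} = P^m P = P^{m+1}$.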
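The corollary can be checked numerically on a small example. The sketch below computes the $m$-step probabilities directly by summing over all intermediate paths and compares them with the entries of the matrix power. The helper names (`mat_mul`, `mat_pow`, `m_step_by_paths`) and the three-state matrix are assumptions for the illustration, and exact fractions are used to avoid rounding.

```python
from fractions import Fraction as F
from itertools import product

def mat_mul(A, B):
    """Ordinary matrix product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_pow(P, m):
    """m-th power of P, with P^0 equal to the identity (the convention for p^(0)_ij)."""
    n = len(P)
    result = [[F(int(i == j)) for j in range(n)] for i in range(n)]
    for _ in range(m):
        result = mat_mul(result, P)
    return result

def m_step_by_paths(P, i, j, m):
    """p^(m)_ij obtained by summing the probability of every m-step path from i to j."""
    n = len(P)
    if m == 0:
        return F(int(i == j))
    total = F(0)
    for middle in product(range(n), repeat=m - 1):
        path = (i, *middle, j)
        prob = F(1)
        for a, b in zip(path, path[1:]):
            prob *= P[a][b]
        total += prob
    return total

# Hypothetical three-state transition matrix; each row sums to 1.
P = [[F(1, 2), F(1, 2), F(0)],
     [F(1, 4), F(1, 2), F(1, 4)],
     [F(0),    F(1, 3), F(2, 3)]]

m = 4
Pm = mat_pow(P, m)
# Corollary 1.4: the path sums agree with the entries of P^m.
paths_match = all(m_step_by_paths(P, i, j, m) == Pm[i][j]
                  for i in range(3) for j in range(3))
# Chapman-Kolmogorov in matrix form: P^m = P^r P^(m-r) for r = 0, ..., m.
ck_holds = all(mat_mul(mat_pow(P, r), mat_pow(P, m - r)) == Pm
               for r in range(m + 1))
print(paths_match, ck_holds)
```

The path enumeration grows exponentially in $m$ and is only meant as a check on tiny examples; the matrix power is the practical way to obtain $P^{(m)}$.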

A Markov Chain's transition probabilities, along with an initial distribution, completely determine the chain.

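Definition 1.5. An initial distribution is a probability distribution $\{\pi_i = P(X_0 = i) \mid i \in Z\}$, so that

$$\sum_{i \in Z} \pi_i = 1.$$

Such a distribution is said to be stationary if it satisfies

$$\pi_j = \sum_{i \in Z} \pi_i \, p_{ij} \qquad \text{for all } j \in Z.$$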
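To make the stationarity condition concrete, the following sketch checks it for a two-state chain. The chain (with $p_{01} = a$, $p_{10} = b$), the candidate vector, and the helper `step_distribution` are assumptions chosen for the example, not part of the paper.

```python
from fractions import Fraction as F

def step_distribution(pi, P):
    """One step of the chain applied to a distribution: returns the row vector pi P."""
    n = len(P)
    return [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]

# Hypothetical two-state chain with p_01 = a and p_10 = b.
a, b = F(1, 10), F(1, 2)
P = [[1 - a, a],
     [b, 1 - b]]

# Candidate stationary distribution for this particular chain: (b, a) / (a + b).
pi = [b / (a + b), a / (a + b)]

is_distribution = sum(pi) == 1 and all(x >= 0 for x in pi)
is_stationary = step_distribution(pi, P) == pi

# A point mass on state 0 is an initial distribution but is not stationary.
point_mass_moves = step_distribution([F(1), F(0)], P) != [F(1), F(0)]
print(pi, is_distribution, is_stationary, point_mass_moves)
```

In matrix terms the condition reads $\pi = \pi P$ with $\pi$ treated as a row vector, which is what `step_distribution` computes.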


Definition 1.6. We say that a subset $C \subseteq Z$ of the state space is closed if

$$\sum_{j \in C} p_{ij} = 1 \qquad \forall\, i \in C.$$

If $Z$ itself has no proper closed subsets then the Markov Chain is said to be irreducible.
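A short sketch of the closedness test follows. The function `is_closed`, its tolerance parameter, and the three-state chain with an absorbing state are assumptions introduced for the example.

```python
def is_closed(P, C, tol=1e-12):
    """True if every state in C keeps all of its one-step probability mass inside C."""
    return all(abs(sum(P[i][j] for j in C) - 1.0) <= tol for i in C)

# Hypothetical three-state chain in which state 2 is absorbing.
P = [[0.5, 0.5, 0.0],
     [0.25, 0.5, 0.25],
     [0.0, 0.0, 1.0]]

closed_2 = is_closed(P, {2})       # {2} is a proper closed subset
closed_01 = is_closed(P, {0, 1})   # mass leaks from state 1 to state 2
print(closed_2, closed_01)
```

Since `{2}` is a proper closed subset, this example chain is not irreducible in the sense of Definition 1.6.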

Definition 1.7. We define the period of a state $i$ by

$$d_i = \gcd\{\, m \ge 1 \mid p_{ii}^{(m)} > 0 \,\}.$$

State $i$ is aperiodic if $d_i = 1$.
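The period can be estimated on a finite chain by taking the gcd over the return times seen within a bounded horizon. The sketch below does this; the function `period`, the cutoff `max_steps`, and both example matrices are assumptions, and the truncated gcd agrees with $d_i$ only once the horizon is long enough to contain the relevant return times.

```python
from math import gcd

def period(P, i, max_steps=50):
    """gcd of all m in 1..max_steps with p^(m)_ii > 0 (0 if no return is seen)."""
    n = len(P)
    # reachable[j] is True when p^(m)_ij > 0 for the current m; only the
    # pattern of positive entries matters, so booleans are enough.
    reachable = [j == i for j in range(n)]
    d = 0
    for m in range(1, max_steps + 1):
        reachable = [any(reachable[k] and P[k][j] > 0 for k in range(n))
                     for j in range(n)]
        if reachable[i]:
            d = gcd(d, m)
    return d

# Hypothetical examples: a deterministic 3-cycle and a chain with a self-loop.
cycle = [[0, 1, 0],
         [0, 0, 1],
         [1, 0, 0]]
lazy = [[0.5, 0.5],
        [1.0, 0.0]]

print(period(cycle, 0), period(lazy, 0), period(lazy, 1))
```

In the cycle every return to state 0 takes a multiple of three steps, while in the second chain state 1 can return in two or in three steps, so its period is 1.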

Definition 1.8. A state $j$ is said to be accessible from a state $i$ if there is an $m \ge 1$ such that

$$p_{ij}^{(m)} > 0.$$

If $i$ is accessible from $j$ and $j$ is accessible from $i$ then $i$ and $j$ are said to communicate.
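For a finite chain, accessibility reduces to reachability in the directed graph whose edges are the positive one-step probabilities. The sketch below reads "accessible" as "the chain started in $i$ reaches $j$ with positive probability in some $m \ge 1$ steps", that is $p_{ij}^{(m)} > 0$. The function names and the example matrix are assumptions for the illustration.

```python
def accessible_from(P, i):
    """Set of states j with p^(m)_ij > 0 for some m >= 1."""
    n = len(P)
    seen = set()
    frontier = [i]
    while frontier:
        k = frontier.pop()
        for j in range(n):
            if P[k][j] > 0 and j not in seen:
                seen.add(j)
                frontier.append(j)
    return seen

def communicate(P, i, j):
    """True if each of the two states can be reached from the other."""
    return i in accessible_from(P, j) and j in accessible_from(P, i)

# Hypothetical three-state chain in which state 2 is absorbing.
P = [[0.5, 0.5, 0.0],
     [0.25, 0.5, 0.25],
     [0.0, 0.0, 1.0]]

print(accessible_from(P, 0), accessible_from(P, 2))
print(communicate(P, 0, 1), communicate(P, 0, 2))
```

The search starts with an empty `seen` set, so a state counts as accessible from itself only if the chain can actually return to it in at least one step.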

Definition 1.9. We define the first-passage time probabilities by

$$f_{ij}^{(m)} = P(X_m = j;\ X_k \neq j,\ 0 < k < m \mid X_0 = i), \qquad i, j \in Z,$$

and we denote the expected value of this distribution, which for $i = j$ is the expected return time, by

$$\mu_{ij} = \sum_{m=1}^{\infty} m \, f_{ij}^{(m)}.$$
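The first-passage probabilities can be computed by conditioning on the first step: $f_{ij}^{(1)} = p_{ij}$ and, for $m \ge 2$, $f_{ij}^{(m)} = \sum_{k \neq j} p_{ik} f_{kj}^{(m-1)}$. This recursion is a standard first-step argument rather than something stated in the paper, and the function name and example chain below are assumptions.

```python
from fractions import Fraction as F

def first_passage(P, i, j, max_m):
    """Return [f^(1)_ij, ..., f^(max_m)_ij] via first-step analysis."""
    n = len(P)
    # g[s] holds f^(m)_sj for the current m; for m = 1 this is just p_sj.
    g = [P[s][j] for s in range(n)]
    out = [g[i]]
    for _ in range(2, max_m + 1):
        # The first step goes to some k != j, after which the chain needs
        # m - 1 further steps to reach j for the first time.
        g = [sum(P[s][k] * g[k] for k in range(n) if k != j) for s in range(n)]
        out.append(g[i])
    return out

# Hypothetical two-state chain with p_01 = 1/10 and p_10 = 1/2.
P = [[F(9, 10), F(1, 10)],
     [F(1, 2), F(1, 2)]]

f_00 = first_passage(P, 0, 0, 3)
print(f_00)
```

For this chain a first return to state 0 at time $m \ge 2$ means leaving to state 1, staying there for $m - 2$ steps, and then coming back, which matches the computed values.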

Definition 1.10. We say that a state $i$ is recurrent if

$$\sum_{m=1}^{\infty} f_{ii}^{(m)} = 1$$

and transient if

$$\sum_{m=1}^{\infty} f_{ii}^{(m)} < 1.$$

A recurrent state $i$ is positive-recurrent if $\mu_{ii} < \infty$ and null-recurrent if $\mu_{ii} = \infty$.

2. Fundamental Theorem of Markov Chains

Theorem 2.1. For any irreducible, aperiodic, positive-recurrent Markov Chain there exists a unique stationary distribution $\{\pi_j, j \in Z\}$ such that, for all $i \in Z$,

$$\pi_j = \lim_{n \to \infty} p_{ij}^{(n)}.$$

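Proof. Because our chain is irreducible, aperiodic, and positive-recurrent we know that for all $i \in Z$ the limit $\pi_j = \lim_{n \to \infty} p_{ij}^{(n)} > 0$ exists. For $\{\pi_j\}$ to be a probability distribution we also need to control $\sum_{j \in Z} \pi_j$. We know that for any $m$ and any $M$

$$\sum_{j=0}^{M} p_{ij}^{(m)} \le \sum_{j=0}^{\infty} p_{ij}^{(m)} = 1.$$

Taking the limit $m \to \infty$ in the finite sum gives

$$\lim_{m \to \infty} \sum_{j=0}^{M} p_{ij}^{(m)} = \sum_{j=0}^{M} \pi_j \le 1,$$

which holds for any $M$ and therefore implies

$$\sum_{j=0}^{\infty} \pi_j \le 1.$$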

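Now we can use the Chapman-Kolmogorov equation analogously: for any $M$,

$$p_{ij}^{(m+1)} = \sum_{k=0}^{\infty} p_{ik}^{(m)} \, p_{kj} \ge \sum_{k=0}^{M} p_{ik}^{(m)} \, p_{kj}.$$

As before, we take the limit as $m \to \infty$ and then as $M \to \infty$, yielding

$$\pi_j \ge \sum_{k=0}^{\infty} \pi_k \, p_{kj}.$$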

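Now we search for a contradiction and assume that strict inequality holds for at least one state $j$. Summing these inequalities over $j$, and exchanging the order of summation (which is allowed because all terms are nonnegative), yields

$$\sum_{j=0}^{\infty} \pi_j > \sum_{j=0}^{\infty} \sum_{k=0}^{\infty} \pi_k \, p_{kj} = \sum_{k=0}^{\infty} \pi_k \sum_{j=0}^{\infty} p_{kj} = \sum_{k=0}^{\infty} \pi_k.$$

But this is a contradiction, since the sum is finite and cannot exceed itself; hence equality must hold:

$$\pi_j = \sum_{k=0}^{\infty} \pi_k \, p_{kj}.$$

Thus a unique stationary distribution exists.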
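The limit statement in the proof can be observed numerically on a small chain: every row of the $n$-step transition matrix approaches the same vector. The sketch below is only an illustration under assumptions; the two-state matrix, the horizon of 60 steps, and the helper `n_step_row` are not from the paper.

```python
def n_step_row(P, i, n):
    """Row i of the n-step transition matrix, i.e. p^(n)_ij for every state j."""
    size = len(P)
    row = [1.0 if j == i else 0.0 for j in range(size)]
    for _ in range(n):
        row = [sum(row[k] * P[k][j] for k in range(size)) for j in range(size)]
    return row

# Hypothetical irreducible, aperiodic two-state chain.
P = [[0.9, 0.1],
     [0.5, 0.5]]
pi = [5 / 6, 1 / 6]   # stationary distribution of this particular P

rows = [n_step_row(P, i, 60) for i in range(2)]
max_gap = max(abs(rows[i][j] - pi[j]) for i in range(2) for j in range(2))
print(rows, max_gap)
```

Both starting states lead to essentially the same row after 60 steps, so the limit does not depend on the initial state $i$, in line with the first step of the proof.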

Further, it can be shown that this unique distribution is related to the expected return times of the Markov chain by

$$\pi_j = \frac{1}{\mu_{jj}}.$$
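This relation can be checked on a two-state chain, where the first-return probabilities have a simple closed form. The sketch assumes a chain with $p_{01} = a$ and $p_{10} = b$, for which $f_{00}^{(1)} = 1 - a$ and $f_{00}^{(m)} = a (1-b)^{m-2} b$ for $m \ge 2$. The chain, the truncation of the infinite sums at 400 terms, and the variable names are assumptions made for the example.

```python
# Hypothetical two-state chain with p_01 = a and p_10 = b (not from the paper).
a, b = 0.1, 0.5

def return_prob(m):
    """f^(m)_00: first return to state 0 happens exactly at step m."""
    if m == 1:
        return 1 - a                       # stay in state 0
    return a * (1 - b) ** (m - 2) * b      # leave, wait in state 1, come back

terms = range(1, 400)                               # truncation of the infinite sums
total_return = sum(return_prob(m) for m in terms)   # close to 1: state 0 is recurrent
mu_00 = sum(m * return_prob(m) for m in terms)      # expected return time to state 0
pi_0 = b / (a + b)                                  # stationary probability of state 0

print(total_return, mu_00, pi_0, 1 / mu_00)
```

Summing the series by hand gives $\mu_{00} = (a + b)/b$, the reciprocal of the stationary probability $b/(a+b)$ of state 0, which the truncated sums reproduce up to rounding.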

References

[1] F. E. Beichelt and L. P. Fatti. Stochastic Processes and Their Applications. Taylor and Francis, 2002.
