Reliability and Validity of Q

Uploaded by Yagnesh Vyas


Tips for Developing and Testing Questionnaires/Instruments

Abstract

Questionnaires are the most widely used data collection method in educational and evaluation research. This article describes the process for developing and testing questionnaires and posits five sequential steps involved in developing and testing a questionnaire: research background, questionnaire conceptualization, format and data analysis, establishing validity, and establishing reliability. Systematic development of questionnaires is essential to reduce measurement error. Following these five steps in questionnaire development and testing will enhance data quality and the utilization of research.

Rama B. Radhakrishna

Associate Professor

The Pennsylvania State University

University Park, Pennsylvania

Brr100@psu.edu

Introduction

Questionnaires are the most frequently used data collection method in educational and evaluation research. Questionnaires help gather information on knowledge, attitudes, opinions, behaviors, facts, and other information. In a review of 748 research studies conducted in agricultural and Extension education, Radhakrishna, Leite, and Baggett (2003) found that 64% used questionnaires. They also found that about a third of the studies reviewed did not report procedures for establishing validity (31%) or reliability (33%). Developing a valid and reliable questionnaire is essential to reduce measurement error. Groves (1987) defines measurement error as the "discrepancy between respondents' attributes and their survey responses" (p. 162).

Development of a valid and reliable questionnaire involves several steps taking considerable

time. This article describes the sequential steps involved in the development and testing of

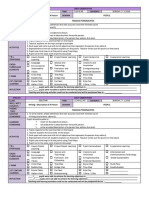

questionnaires used for data collection. Figure 1 illustrates the five sequential steps involved in

questionnaire development and testing. Each step depends on fine tuning and testing of previous

steps that must be completed before the next step. A brief description of each of the five steps

follows Figure 1.

Figure 1. Sequence for Questionnaire/Instrument Development

Step 1--Background

In this initial step, the purpose, objectives, research questions, and hypotheses of the proposed research are examined. Determining the audience, their background (especially their educational and readability levels), their access, and the process used to select the respondents (sample vs. population) is also part of this step. A thorough understanding of the problem through a literature search and reading is a must. Good preparation and understanding of Step 1 provide the foundation for initiating Step 2.

Step 2--Questionnaire Conceptualization

After developing a thorough understanding of the research, the next step is to generate statements/questions for the questionnaire. In this step, content (from the literature/theoretical framework) is transformed into statements/questions. In addition, a link between the objectives of the study and their translation into content is established. For example, the researcher must indicate what the questionnaire is measuring, that is, knowledge, attitudes, perceptions, opinions, recall of facts, behavior change, etc. Major variables (independent, dependent, and moderator variables) are identified and defined in this step.
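Once items are drafted, the link between objectives and items can be checked mechanically. The Python sketch below is only an illustration under stated assumptions: the `Objective` record, the example objectives, and the item labels Q1 to Q6 are hypothetical and not part of the procedure described here. It lists objectives that have no items yet (a content gap) and items that are not tied to any objective.

```python
from dataclasses import dataclass, field


@dataclass
class Objective:
    """A study objective and the questionnaire items written to address it."""
    text: str
    measures: str  # e.g. "knowledge", "attitude", "behavior change"
    items: list[str] = field(default_factory=list)


def uncovered_objectives(objectives: list[Objective]) -> list[str]:
    """Objectives with no items yet: gaps in content coverage."""
    return [o.text for o in objectives if not o.items]


def orphan_items(objectives: list[Objective], all_items: list[str]) -> list[str]:
    """Items not linked to any objective: candidates for revision or removal."""
    linked = {item for o in objectives for item in o.items}
    return [item for item in all_items if item not in linked]


# Hypothetical objectives and item labels, for illustration only.
objectives = [
    Objective("Assess participants' knowledge of topic A", "knowledge", ["Q1", "Q2"]),
    Objective("Describe attitudes toward topic B", "attitude", ["Q3", "Q4", "Q5"]),
    Objective("Document behavior change after the program", "behavior change"),
]
all_items = ["Q1", "Q2", "Q3", "Q4", "Q5", "Q6"]

print(uncovered_objectives(objectives))     # ['Document behavior change after the program']
print(orphan_items(objectives, all_items))  # ['Q6']
```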

Step 3--Format and Data Analysis

In Step 3, the focus is on writing statements/questions, selecting appropriate scales of measurement, and deciding on the questionnaire layout, format, question ordering, font size, front and back cover, and proposed data analysis. Scales are devices used to quantify a subject's response on a particular variable. Understanding the relationship between the level of measurement and the appropriateness of data analysis is important. For example, if ANOVA (analysis of variance) is one mode of data analysis, the independent variable must be measured on a nominal scale with two or more levels (e.g., yes, no, not sure), and the dependent variable must be measured on an interval/ratio scale (e.g., a strongly agree to strongly disagree scale, which is commonly treated as interval).
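To make the ANOVA example concrete, here is a minimal Python sketch that computes the one-way ANOVA F statistic by hand. It assumes the yes/no/not sure answer is the nominal independent variable and that each respondent's dependent-variable score is an agree/disagree scale score treated as interval data; the scores are invented. A statistics package such as SPSS would also report the p-value, which this sketch omits.

```python
from statistics import mean


def one_way_anova_f(groups: dict[str, list[float]]) -> float:
    """One-way ANOVA F statistic.

    groups maps each level of the nominal independent variable to the
    interval-scale scores of the respondents at that level.
    """
    scores = [x for g in groups.values() for x in g]
    grand_mean = mean(scores)
    k, n = len(groups), len(scores)
    # Between-group variation: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups.values())
    # Within-group variation: spread of scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups.values() for x in g)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between / ms_within


# Invented attitude-scale scores for respondents answering yes / no / not sure.
groups = {
    "yes": [4, 5, 4, 5],
    "no": [2, 3, 2, 3],
    "not sure": [3, 3, 4, 4],
}
print(round(one_way_anova_f(groups), 2))  # 12.0
```

The F value only makes sense because the dependent variable is averaged and differenced as if it were interval data, which is exactly why the level of measurement has to be settled before the analysis is chosen.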

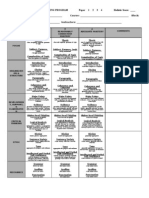

Step 4--Establishing Validity

As a result of Steps 1-3, a draft questionnaire is ready for establishing validity. Validity is the amount of systematic or built-in error in measurement (Norland, 1990). Validity is established using a panel of experts and a field test. Which type of validity (content, construct, criterion, or face) to use depends on the objectives of the study. The following questions are addressed in Step 4:

1. Is the questionnaire valid? In other words, is the questionnaire measuring what it is intended to measure?

2. Does it represent the content?

3. Is it appropriate for the sample/population?

4. Is the questionnaire comprehensive enough to collect all the information needed to address the purpose and goals of the study?

5. Does the instrument look like a questionnaire?

Addressing these questions, coupled with carrying out a readability test, enhances questionnaire validity. The Gunning Fog Index, the Flesch Reading Ease score, and the Flesch-Kincaid readability formula are commonly used to determine readability. Approval from the Institutional Review Board (IRB) must also be obtained. Following IRB approval, the next step is to conduct a field test using subjects not included in the sample. Make changes, as appropriate, based on both the field test and expert opinion. The questionnaire is then ready to be pilot tested.
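The readability formulas can be sketched in Python as follows. The Flesch Reading Ease, Flesch-Kincaid grade level, and Gunning Fog constants are the standard published ones, but the syllable counter is a crude vowel-group heuristic (an assumption of this sketch), and the Fog "complex word" rule is simplified to "three or more syllables", so scores will differ somewhat from dedicated readability tools.

```python
import re


def count_syllables(word: str) -> int:
    """Approximate syllables: count vowel groups, drop a silent final 'e'.

    Heuristic only; dedicated tools use dictionaries or richer rules.
    """
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability(text: str) -> dict[str, float]:
    """Three readability scores for text with at least one sentence and word."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = [count_syllables(w) for w in words]
    wps = len(words) / len(sentences)   # average words per sentence
    spw = sum(syllables) / len(words)   # average syllables per word
    complex_share = sum(1 for s in syllables if s >= 3) / len(words)
    return {
        "flesch_reading_ease": 206.835 - 1.015 * wps - 84.6 * spw,
        "flesch_kincaid_grade": 0.39 * wps + 11.8 * spw - 15.59,
        "gunning_fog": 0.4 * (wps + 100 * complex_share),
    }


# A hypothetical questionnaire instruction to check before the field test.
sample = ("Please rate how useful the workshop was for your work. "
          "Circle one number for each statement.")
print(readability(sample))
```

Higher Flesch Reading Ease means easier text, while the other two scores approximate a school grade level, so they can be compared with the educational level of the audience identified in Step 1.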

Step 5--Establishing Reliability

In this final step, the reliability of the questionnaire is established through a pilot test. Reliability refers to random error in measurement. Reliability indicates the accuracy or precision of the measuring instrument (Norland, 1990). The pilot test seeks to answer the question: Does the questionnaire consistently measure whatever it measures?

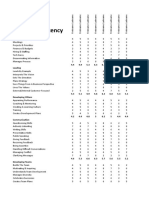

The choice of reliability type (test-retest, split-half, alternate form, internal consistency) depends on the nature of the data (nominal, ordinal, interval/ratio). For example, to assess the reliability of questions measured on an interval/ratio scale, internal consistency is appropriate. To assess the reliability of knowledge questions, test-retest or split-half is appropriate.
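For knowledge questions scored 1 (correct) or 0 (incorrect), test-retest and split-half reliability can be sketched as below. This assumes an odd/even split of the items and applies the Spearman-Brown correction to step the half-test correlation up to full test length; the function names and data are illustrative, not taken from any particular package.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def retest_reliability(first, second):
    """Test-retest: correlate each respondent's total score on two occasions."""
    return pearson_r(first, second)


def spearman_brown(r_half):
    """Step a half-test correlation up to the full test length."""
    return 2 * r_half / (1 + r_half)


def split_half_reliability(data):
    """Odd/even split-half reliability; rows are respondents, columns are items."""
    odd_totals = [sum(row[0::2]) for row in data]
    even_totals = [sum(row[1::2]) for row in data]
    return spearman_brown(pearson_r(odd_totals, even_totals))


# Hypothetical 0/1 knowledge scores: 6 pilot respondents x 6 items.
knowledge = [
    [1, 1, 1, 1, 1, 0],
    [1, 0, 1, 1, 0, 0],
    [0, 0, 0, 1, 0, 0],
    [1, 1, 1, 1, 1, 1],
    [0, 1, 0, 0, 1, 0],
    [1, 1, 0, 1, 1, 1],
]
print(round(split_half_reliability(knowledge), 2))

# Hypothetical total scores for the same six respondents on two occasions.
print(round(retest_reliability([5, 3, 1, 6, 2, 5], [5, 4, 1, 6, 2, 4]), 2))
```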

Reliability is established through a pilot test by collecting data from 20-30 subjects not included in the sample. Data collected from the pilot test are analyzed using SPSS (Statistical Package for the Social Sciences) or other software. SPSS provides two key pieces of information: the "correlation matrix" and the "alpha if item deleted" column. First, make sure that items/statements with inter-item correlations of 0, 1, or negative values are eliminated. Then view the "alpha if item deleted" column to determine whether alpha can be raised by deleting items. Delete items whose removal substantially improves reliability. To preserve content, delete no more than 20% of the items. The reliability coefficient (alpha) normally ranges from 0 to 1, with 0 representing an instrument full of error and 1 representing a total absence of error. A reliability coefficient (alpha) of .70 or higher is generally considered acceptable.
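The pilot-test screening can be approximated in plain Python, for example to double-check an SPSS run. This is a sketch under stated assumptions: rows are pilot respondents and columns are items, and the thresholds for treating a correlation as "effectively 1" (`high=0.999`) and for a deletion being worth it (`min_gain=0.01`) are hypothetical choices. The 20% deletion cap and the .70 benchmark come from the paragraph on pilot testing.

```python
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation; assumes neither item has zero variance."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def flag_items(data, low=0.0, high=0.999):
    """Indices of items with any inter-item r <= low (zero or negative)
    or r >= high (effectively 1). Both thresholds are assumptions."""
    cols = list(zip(*data))
    flagged = []
    for i, col in enumerate(cols):
        rs = [pearson_r(col, other) for j, other in enumerate(cols) if j != i]
        if any(r <= low or r >= high for r in rs):
            flagged.append(i)
    return flagged


def cronbach_alpha(data):
    """Cronbach's alpha; rows are respondents, columns are items (k >= 2)."""
    k = len(data[0])
    item_var = sum(variance(col) for col in zip(*data))
    total_var = variance([sum(row) for row in data])
    return k / (k - 1) * (1 - item_var / total_var)


def alpha_if_item_deleted(data):
    """Alpha recomputed with each item left out in turn (needs k >= 3)."""
    return [cronbach_alpha([list(row[:i]) + list(row[i + 1:]) for row in data])
            for i in range(len(data[0]))]


def prune_items(data, max_drop_share=0.20, min_gain=0.01):
    """Greedily drop the item whose deletion raises alpha the most, stopping
    when the gain falls below min_gain or the 20% cap is reached.
    Returns the indices of the items kept."""
    keep = list(range(len(data[0])))
    for _ in range(int(len(keep) * max_drop_share)):
        if len(keep) < 3:
            break
        sub = [[row[i] for i in keep] for row in data]
        current = cronbach_alpha(sub)
        deleted = alpha_if_item_deleted(sub)
        best = max(range(len(keep)), key=lambda j: deleted[j])
        if deleted[best] - current < min_gain:
            break
        keep.pop(best)
    return keep


# Hypothetical pilot data: 6 respondents x 5 items on a 1-5 scale.
pilot = [
    [4, 5, 4, 2, 4],
    [3, 4, 3, 4, 4],
    [5, 5, 4, 1, 5],
    [2, 3, 2, 5, 2],
    [4, 4, 5, 3, 4],
    [3, 3, 3, 2, 3],
]
print("flagged items:", flag_items(pilot))
alpha = cronbach_alpha(pilot)
print("alpha:", round(alpha, 2), "(acceptable)" if alpha >= 0.70 else "(below .70)")
print("items kept:", prune_items(pilot))
```

The greedy loop mirrors reading the "alpha if item deleted" column one item at a time; a negatively correlated item that is really a reverse-worded statement would need recoding rather than deletion, which this sketch does not attempt.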

Conclusions

Systematic development of the questionnaire for data collection is important to reduce measurement errors arising from three sources: questionnaire content, questionnaire design and format, and the respondent. Well-crafted conceptualization of the content and transformation of the content into questions (Step 2) are essential to minimize measurement error. Careful attention to detail and understanding of the process involved in developing a questionnaire are of immense value to Extension educators, graduate students, and faculty alike. Not following appropriate and systematic procedures in questionnaire development, testing, and evaluation may undermine the quality and utilization of data (Esposito, 2002). Anyone involved in educational and evaluation research must, at a minimum, follow these five steps to develop a valid and reliable questionnaire and enhance the quality of research.

References

Esposito, J. L. (2002, November). Interactive, multiple-method questionnaire evaluation research: A case study. Paper presented at the International Conference in Questionnaire Development, Evaluation, and Testing (QDET) Methods, Charleston, SC.

Groves, R. M. (1987). Research on survey data quality. Public Opinion Quarterly, 51, 156-172.

Norland-Tilburg, E. V. (1990). Controlling error in evaluation instruments. Journal of Extension [On-line], 28(2). Available at http://www.joe.org/joe/1990summer/tt2.html

Radhakrishna, R. B., Francisco, C. L., & Baggett, C. D. (2003). An analysis of research designs used in agricultural and extension education. Proceedings of the 30th National Agricultural Education Research Conference, 528-541.

Reliability vs. Validity of a Questionnaire in Any Research Design

Questionnaires are among the most widely used tools in research, especially in social science research. The objective of most research questionnaires is to obtain relevant information in the most reliable and valid manner. Therefore, the validation of a questionnaire forms an important aspect of research methodology and of the validity of the outcomes. Researchers are often confused about the objective of validating a questionnaire and tend to link the reliability of a questionnaire with its validity.

The reality is that reliability and validity are two different aspects of an acceptable research questionnaire, and it is important for a researcher to understand the differences between them. In simple terms, the reliability of a questionnaire seems to emerge from the quality of the questionnaire itself. Validity, on the other hand, seems to emerge from the internal and external consistency and relevance of the questionnaire. In other words, the reliability of a questionnaire refers to the quality of the tool (the questionnaire), while validity refers to the process used to employ the tool, i.e., the process used to administer the questionnaire. There are several dimensions to the process of employing a questionnaire. Some of the important dimensions are discussed in the following paragraphs.

General Validity

A major aspect of validating a questionnaire is its general validity. The most common elements used in questionnaire validation are the following:

Known Group Validity refers to the extent to which an instrument can demonstrate differences in scores between groups that are known to differ on a certain variable.

Construct Validity refers to the extent to which an instrument measures the intended construct.

Content Validity refers to the extent to which an instrument covers all aspects of the social problem under study.

Criterion Validity refers to the consistency of the instrument's results with those of a gold-standard questionnaire.
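Known-group and criterion validity both come down to simple comparisons once scores exist. The sketch below assumes hypothetical score lists: Welch's t statistic compares the mean scores of two groups expected to differ (known-group validity), and a Pearson correlation compares the new instrument's scores with a gold-standard measure taken on the same respondents (criterion validity). Welch's t is one reasonable choice of group comparison, not the only one.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic for the difference between two group means."""
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return (mean(group_a) - mean(group_b)) / se


def pearson_r(x, y):
    """Pearson correlation between two equal-length score lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical known-groups check: a group expected to score high on the
# trait versus a group expected to score low.
expected_high = [4.2, 4.5, 3.9, 4.8, 4.1]
expected_low = [2.9, 3.1, 2.5, 3.4, 2.8]
print(round(welch_t(expected_high, expected_low), 2))  # a large positive t supports validity

# Hypothetical criterion check: new instrument vs. a gold-standard measure.
new_scores = [12, 15, 9, 20, 17]
gold_scores = [30, 34, 25, 41, 38]
print(round(pearson_r(new_scores, gold_scores), 2))
```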

Correlation

Variables may be correlated, but the correlation should be optimal, neither too low nor too high. Correlation tests most commonly aim at finding the intraclass correlation and between-group correlations. Correlation mainly provides a measure of internal consistency for validating questionnaires. Some of the common correlation tests for validating a questionnaire are the following:

Intraclass correlation coefficient (ICC) refers to the ratio of between-group (between-subject) variance to total variance.

Cronbach's alpha is a measure of the correlation among the items of a test, that is, of the homogeneity of the test. Experts agree that the items in a test should be moderately correlated; in this way, they are expected to measure all aspects of the single trait being tested. If the correlation is too low, it may indicate that the items refer not to one trait but to two or more different traits. On the other hand, a very high correlation indicates that one of the items is redundant for the test.

Discriminant correlation refers to the extent to which a measure of a research attribute is related to a measure of a different attribute that it is not intended to measure; a low correlation supports the instrument's discriminant validity.
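The ICC ratio can be estimated from one-way ANOVA mean squares. The Python sketch below implements the one-way random-effects, single-rating form, often written ICC(1), which is only one of several ICC variants; it assumes each row holds one subject's scores from several raters or occasions, and the example ratings are made up.

```python
from statistics import mean


def icc_oneway(ratings):
    """One-way random-effects, single-rating ICC, often written ICC(1).

    ratings: one row per subject, one column per rater or occasion.
    Estimates between-subject variance / (between-subject + within-subject
    variance) from one-way ANOVA mean squares.
    """
    n, k = len(ratings), len(ratings[0])
    grand_mean = mean([x for row in ratings for x in row])
    row_means = [mean(row) for row in ratings]
    # Between-subject spread of the subject means around the grand mean.
    ss_between = k * sum((m - grand_mean) ** 2 for m in row_means)
    # Within-subject spread of each subject's ratings around its own mean.
    ss_within = sum((x - m) ** 2 for row, m in zip(ratings, row_means) for x in row)
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical: 4 respondents each scored by 3 raters.
ratings = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 5, 5],
    [3, 2, 3],
]
print(round(icc_oneway(ratings), 2))
```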

Bias

Bias is more problematic than random error and can be intentional or unintentional. Bias may be related to characteristics of the investigator, the observer, or the instrument. Unintentional bias is of greater concern. It should be avoided by uncovering its source and re-examining the design or instrument, or by using a method that prevents it.
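Why bias is the bigger problem can be shown with a small simulation, sketched in Python below with made-up numbers. Each observed score is modeled as true score plus a constant bias plus random error; averaging many responses cancels the random error but leaves the bias untouched. The `simulate` helper and its parameters are hypothetical.

```python
import random
from statistics import mean


def simulate(true_score, n, bias=0.0, noise_sd=1.0, seed=1):
    """Hypothetical helper: n observed scores = true score + bias + random error."""
    rng = random.Random(seed)
    return [true_score + bias + rng.gauss(0.0, noise_sd) for _ in range(n)]


true_score = 50.0
unbiased = simulate(true_score, 10_000)          # random error only
biased = simulate(true_score, 10_000, bias=3.0)  # systematic error added

# Random error averages out, so this difference is close to 0 ...
print(round(mean(unbiased) - true_score, 2))
# ... but the bias survives averaging, so this difference stays close to 3.
print(round(mean(biased) - true_score, 2))
```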

A validated questionnaire is one that has undergone a validation procedure to show that it accurately measures what it is intended to measure, regardless of the respondent's status, the timing of the response, or the investigator. The instrument is compared with a gold standard, if one is available. It is also compared with other sources of data, and its reliability is tested. Even if the questionnaire is not fully valid (which is rare), the reliability of the questionnaire has its own value: a reliable questionnaire offers an opportunity to compare results with other studies.
