DATA MINING GROUP PROJECT

(BREAST CANCER DATASET)

GROUP MEMBERS:

211245 LEE PEI PEI

211330 SOH GUAN CHEN

211650 CHONG SIOW HUI

212072 LIM KOK SIANG

Original Title

BREAST CANCER DATASET

Copyright

© © All Rights Reserved

Available Formats

PDF, TXT or read online from Scribd

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentDATA MINING GROUP PROJECT

(BREAST CANCER DATASET)

GROUP MEMBERS:

211245 LEE PEI PEI

211330 SOH GUAN CHEN

211650 CHONG SIOW HUI

212072 LIM KOK SIANG

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

60%(5)60% found this document useful (5 votes)

1K views41 pagesBreast Cancer Dataset

Uploaded by

Chong SiowHuiDATA MINING GROUP PROJECT

(BREAST CANCER DATASET)

GROUP MEMBERS:

211245 LEE PEI PEI

211330 SOH GUAN CHEN

211650 CHONG SIOW HUI

212072 LIM KOK SIANG

Copyright:

© All Rights Reserved

Available Formats

Download as PDF, TXT or read online from Scribd

You are on page 1of 41

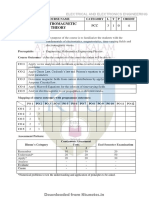

SQIT 3033 KNOWLEDGE ACQUISITION IN

DECISION MAKING (A)

GROUP PROJECT

LECTURER:

DR. IZWAN NIZAL MOHD SHAHARANEE

PROJECT:

BREAST CANCER DATASET

DUE DATE:

28 MAY 2014

PREPARED BY:

211245 LEE PEI PEI

211330 SOH GUAN CHEN

211650 CHONG SIOW HUI

212072 LIM KOK SIANG

Contents

CHAPTER 1: INTRODUCTION

1.1 Background of the Problem

1.2 Motivation for the Reported Work

1.3 Define the Problem

1.4 Aims and Objectives

1.5 Significance of the Work

CHAPTER 2: LITERATURE REVIEW

CHAPTER 3: METHODOLOGY

3.1 Knowledge Discovery in Database (KDD)

3.1.1 Selection

3.1.2 Pre-processing

3.1.3 Transformation

3.1.4 Data mining

3.1.5 Interpretation and Evaluation

3.2 Data Description

3.3 Process of Developing and Comparing the Models

3.3.1 Data Mining Methodology

3.3.2 Models Development

CHAPTER 4: KNOWLEDGE DISCOVERY PROCESS IN SAS ENTERPRISE MINER

4.1 Data Selection

4.2 Pre-processing

4.3 Transformation

4.4 Data Mining

4.4.1 Logistic Regression

4.4.2 Neural Network

4.4.3 Decision Tree

4.5 Interpretation and Evaluation

CHAPTER 5: RESULT AND DISCUSSION

CHAPTER 6: CONCLUSION

CHAPTER 7: REFERENCE

CHAPTER 8: APPENDICES

CHAPTER 1: INTRODUCTION

1.1 Background of the Problem

According to Wikipedia (2014), breast cancer is a type of cancer originating from breast tissue, most commonly from the inner lining of the milk ducts or the lobules that supply the ducts with milk. Breast cancer occurs in humans and other mammals. The majority of human cases occur in women, and only a minority of cases occur in men.

Breast cancer has some noticeable signs and symptoms. The first noticeable symptom is typically a lump that feels different from the rest of the breast tissue. Around 80% of women only discover that they have breast cancer after feeling a lump in their breast.

However, a breast tumour can be classified as either benign or malignant, and we cannot know which it is until a diagnosis has been carried out. Several criteria need to be observed: uniformity of cell size, uniformity of cell shape, marginal adhesion, single epithelial cell size, bare nuclei, bland chromatin and normal nucleoli. By observing these criteria, doctors or scientists are able to make a decision based on the patient's diagnostic tests.

People with an unhealthy lifestyle have a higher risk of getting breast cancer than others. Smoking, consuming oily food and alcoholic drinks, lack of exercise and constantly working under stressful conditions are examples of an unhealthy daily routine. Moreover, genetics plays only a minor role in most cases, which means that breast cancer is mostly attributed to an unhealthy lifestyle rather than to genetics.

1.2 Motivation for the Reported Work

By building and understanding the three models and selecting the best one, we gain a better understanding of the differences among them.

Through this project, we are able to differentiate the models and apply each model to a suitable scenario. Also, with the aid of software such as SAS Enterprise Miner, we are able to develop more organized and systematic models that can be understood by everyone.

The real breast cancer dataset enables us to examine the classes of breast cancer and classify cases based on their characteristics.

1.3 Define the Problem

In our group project, we are given the breast cancer dataset. The dataset

consists of 11 variables and 699 observations. The 11 variables are Sample code

number, Clump Thickness, Uniformity of Cell Size, Uniformity of Cell Shape,

Marginal Adhesion, Single Epithelial Cell Size, Bare Nuclei, Bland Chromatin,

Normal Nucleoli, Mitoses and Class.

The dataset also contains missing values, so we are required to replace them before building the models.

With the given dataset, we are required to develop three models and decide which one is best at predicting or classifying the target variable. The more accurate the model, the better it is.

Every model has its own advantages and disadvantages, and each has its own characteristics in dealing with different situations.

Therefore, we have to classify the classes of breast cancer and come up with a good and accurate model.

1.4 Aims and Objectives

Our aim is to develop three models for the breast cancer dataset and decide which of the three is the best.

One of the objectives is to determine the three models that are most suitable and relevant for the breast cancer dataset. Another objective is to identify the best of these models for the dataset.

1.5 Significance of the Work

The project is significant for researchers, scientists, doctors and others in related areas, who can use it to determine the class of a breast cancer case. The best developed model can help them quickly detect the category of breast cancer in patients. In addition, it will have a positive impact on society and on patients, as breast cancer can be detected more quickly.

CHAPTER 2: LITERATURE REVIEW

Two relevant studies have previously been carried out by other researchers, and both used the breast cancer dataset.

William and Olvi (1990) applied multisurface pattern separation in their research. It is a mathematical method for distinguishing the elements of two pattern sets, where each element of a pattern set is composed of various scalar observations. They used the diagnosis of breast cytology to demonstrate the applicability of this method to medical diagnosis and decision making. Only 369 samples were used in their study, and, according to William and Olvi, the classification results were collected from a single trial. When two pairs of parallel hyperplanes were found to be consistent with 50% of the data, 6.5% of the remaining samples were misclassified, giving an accuracy of 93.5% on the remaining 50% of the dataset. When three pairs of parallel hyperplanes were found to be consistent with 67% of the data, 4.1% of the remaining samples were misclassified, giving an accuracy of 95.9% on the remaining 33% of the dataset. William and Olvi also showed that the multisurface method of pattern separation is more powerful than other methods for breast cytology diagnosis because it uses all of the available diagnostic information.

According to Zhang (1990), only 369 instances of the dataset were used in his research. He applied four instance-based learning algorithms and averaged the classification results over 10 trials. The best accuracy, 93.7%, was obtained by the one-nearest-neighbour algorithm, trained on 200 instances (54%) and tested on the remaining 169 instances (46%). He was also interested in using only typical instances, which achieved an accuracy of 92.2% while storing only 23.1 instances on average, with the same split of 200 training instances (54%) and 169 test instances (46%).
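To illustrate the one-nearest-neighbour rule behind Zhang's best result, the following minimal Python sketch classifies a query by copying the label of the closest training instance under Euclidean distance. It is an illustration only, not Zhang's implementation; the function names and the toy data are hypothetical.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def one_nn_predict(train_x, train_y, query):
    """Return the label of the single nearest training instance (1-NN)."""
    best_index = min(range(len(train_x)), key=lambda i: euclidean(train_x[i], query))
    return train_y[best_index]


def accuracy(train_x, train_y, test_x, test_y):
    """Fraction of test instances whose 1-NN prediction matches the true label."""
    correct = sum(one_nn_predict(train_x, train_y, q) == y for q, y in zip(test_x, test_y))
    return correct / len(test_y)


# Hypothetical toy data on the 1-10 scale used by the dataset attributes.
train_x = [[1, 1, 1], [2, 1, 2], [8, 9, 10], [10, 7, 9]]
train_y = ["benign", "benign", "malignant", "malignant"]
test_x = [[1, 2, 1], [9, 8, 8]]
test_y = ["benign", "malignant"]
print(accuracy(train_x, train_y, test_x, test_y))  # 1.0
```

In Zhang's setting the training list would hold 200 instances and the test list the other 169; 1-NN stores every training instance, which is why selecting only typical instances (23.1 on average) was of interest.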


CHAPTER 3: METHODOLOGY

3.1 Knowledge Discovery in Database (KDD)

3.1.1 Selection

Data selection aims to acquire a dataset of the most appropriate size for the KDD process. Sampling can be used when the data is too large. There are two types of sampling methods: probability sampling and non-probability sampling. There are three approaches to determining the sample size, as described below.

1) Central limit theorem: the sample size n must be greater than 30 (n > 30). If n equals the size of the population, the standard error is 0.

2) Confidence interval: the sample size is based on the required confidence interval and the accepted error.

3) Data availability: the sample size is subject to the data available. The dataset for our project is the Wisconsin Breast Cancer Database (January 8, 1991), adapted from the UCI Machine Learning Repository website (UCI Machine Learning Repository, 1992).
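The central limit theorem and confidence interval approaches can be made concrete with standard textbook formulas. The sketch below assumes simple random sampling: `standard_error` applies the finite population correction, which makes the standard error 0 when the sample is the whole population (n = N), and `sample_size_for_proportion` uses the common formula n = z^2 p(1 - p) / E^2 for a chosen confidence level (z) and accepted error (E). The function names are hypothetical and the formulas are not taken from the original report.

```python
import math


def standard_error(sigma: float, n: int, population_size: int) -> float:
    """Standard error of the sample mean with the finite population correction."""
    fpc = math.sqrt((population_size - n) / (population_size - 1))
    return sigma / math.sqrt(n) * fpc


def sample_size_for_proportion(z: float, margin: float, p: float = 0.5) -> int:
    """Smallest n whose confidence interval for a proportion has half-width <= margin."""
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


print(standard_error(sigma=2.0, n=699, population_size=699))  # 0.0
print(sample_size_for_proportion(z=1.96, margin=0.05))        # 385
```

Using p = 0.5 is the conservative choice, because p(1 - p) is largest there and therefore gives the largest required sample.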

3.1.2 Pre-processing

Pre-processing ensures that the data is clean. Certain data mining algorithms require pre-processing for better performance; for example, neural networks cannot perform well with string data types. However, real-world data usually contains missing values, noisy data and inconsistent values. The methods for dealing with these problems are described below.

Data cleaning handles incomplete, noisy and inconsistent data. Incomplete or missing data results from improper data collection methods. To handle missing data, we can use the mean value, estimate the probable value using regression, use a constant value such as null, or ignore the missing record. Noisy data is random error or variance in the data, caused by corrupted data transmission or technological limitations. For example, when entering data into software such as SPSS or SAS, we may key in wrong values, which introduces noise. To solve this problem, we can use binning or outlier removal. Inconsistent data means that the data contains replicated or possibly redundant records; this can be overcome by removing the redundant or replicated data.
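A minimal Python sketch of three of the cleaning methods named above: mean-value imputation for missing entries, smoothing by bin means (with equal-frequency bins) for noisy values, and removal of exact duplicate records. Here `None` marks a missing value; the helper names and sample values are hypothetical.

```python
from statistics import mean


def impute_mean(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def smooth_by_bin_means(values, bin_size):
    """Sort values into equal-frequency bins and replace each value by its bin mean."""
    ordered = sorted(values)
    smoothed = []
    for start in range(0, len(ordered), bin_size):
        bin_values = ordered[start:start + bin_size]
        smoothed.extend([mean(bin_values)] * len(bin_values))
    return smoothed


def drop_duplicates(records):
    """Remove exact duplicate records, keeping the first occurrence."""
    seen = set()
    unique = []
    for record in records:
        key = tuple(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


print(impute_mean([1, None, 3, 10]))                  # [1, 4.666666666666667, 3, 10]
print(smooth_by_bin_means([4, 8, 9, 15, 21, 21], 3))  # [7, 7, 7, 19, 19, 19]
print(drop_duplicates([[1, 2], [1, 2], [3, 4]]))      # [[1, 2], [3, 4]]
```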

Data integration combines data from different sources that use different naming standards, which can cause inconsistencies and redundancies. There are several ways to handle this problem, such as consolidating the different sources into one repository (using metadata) and correlation analysis (measuring the strength of the relationship between different attributes).
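Correlation analysis for detecting redundant attributes can be sketched with the Pearson correlation coefficient: values close to +1 or -1 suggest that one attribute largely duplicates another. The 0.9 threshold, the helper names and the sample columns below are hypothetical choices for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric columns."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def redundant_pairs(columns, threshold=0.9):
    """Return attribute pairs whose absolute correlation meets the threshold."""
    names = list(columns)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if abs(pearson(columns[a], columns[b])) >= threshold:
                pairs.append((a, b))
    return pairs


# Hypothetical columns from two sources: "size_cm" and "size_mm" record the same quantity.
columns = {
    "size_cm": [1.0, 2.0, 3.0, 4.0],
    "size_mm": [10.0, 20.0, 30.0, 40.0],
    "score": [3.0, 1.0, 4.0, 2.0],
}
print(redundant_pairs(columns))  # [('size_cm', 'size_mm')]
```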

Data reduction is the transformation of numerical or alphabetical digital information, derived empirically or experimentally, into a corrected, ordered and simplified form. It increases efficiency by reducing a huge dataset to a smaller representation. Several techniques can be used for data reduction, such as data cube aggregation, dimension reduction, data compression and discretization.

3.1.3 Transformation

The transformation process, also known as data normalization, rescales the data into a suitable range. This process is important because it can increase processing speed and reduce memory allocation. There are several transformation methods:

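Z-score normalization is useful when the extreme values are unknown or when outliers dominate the extreme values. It subtracts the mean and divides by the standard deviation, so the transformed values have a mean of 0 and a standard deviation of 1; unlike min-max normalization, the result is not restricted to the range [0, 1].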

Min-max normalization is a linear transformation of the original input to a newly specified range, such as [0, 1].

Decimal scaling divides each value by 10^n, where n is the number of digits of the maximum absolute value.
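The three normalization methods can be sketched in a few lines of Python. This is an illustrative implementation of the usual textbook definitions with hypothetical function names: z-score uses the population standard deviation, min-max maps onto a target range [new_min, new_max], and decimal scaling divides by the smallest power of 10 that brings every absolute value below 1 (for integers, this power equals the number of digits of the largest absolute value).

```python
import math


def z_score(values):
    """Centre values on their mean and scale by the population standard deviation."""
    n = len(values)
    mu = sum(values) / n
    sigma = math.sqrt(sum((v - mu) ** 2 for v in values) / n)
    return [(v - mu) / sigma for v in values]


def min_max(values, new_min=0.0, new_max=1.0):
    """Linearly map values from [min, max] onto [new_min, new_max]."""
    lo, hi = min(values), max(values)
    return [new_min + (v - lo) * (new_max - new_min) / (hi - lo) for v in values]


def decimal_scaling(values):
    """Divide by 10**n, the smallest power of ten that makes every |v| / 10**n < 1."""
    max_abs = max(abs(v) for v in values)
    n = 0
    while max_abs / 10 ** n >= 1:
        n += 1
    return [v / 10 ** n for v in values]


print(min_max([1, 5, 10]))           # [0.0, 0.4444444444444444, 1.0]
print(decimal_scaling([10, -3, 7]))  # [0.1, -0.03, 0.07]
```

For the attributes in this dataset, which all lie on a 1 to 10 scale, min-max normalization to [0, 1] would simply map 1 to 0 and 10 to 1.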

3.1.4 Data mining

Data mining is the use of algorithms to extract information and patterns in the KDD process. This step applies algorithms to the transformed data to generate the desired results, and it is the heart of the KDD process, where unknown patterns are revealed. Examples of algorithms include regression (classification, prediction), neural networks (prediction, classification, clustering), the Apriori algorithm (association rules), K-means (clustering), K-nearest neighbour (classification), decision trees (classification) and instance-based learning (classification).

3.1.5 Interpretation and Evaluation

In the interpretation and evaluation process, some data mining output is in a format that humans cannot easily understand, so it needs interpretation. We therefore convert the output into an easily understood medium (such as graphs, mathematical models and tables). Visualization methods include graphical (charts, graphs), geometric (box plots), icon-based (figures, icons), pixel-based (coloured pixels), hierarchical (trees) and hybrid (a combination of any of these).

3.2 Data Description

The dataset for our project is the Wisconsin Breast Cancer Database (January 8, 1991), adapted from the UCI Machine Learning Repository website (UCI Machine Learning Repository, 1992).

The sources of our dataset are as follows:

1) Dr. William H. Wolberg (physician)

University of Wisconsin Hospitals

Madison, Wisconsin

USA

2) Donor: Olvi Mangasarian (mangasarian@cs.wisc.edu)

Received by David W. Aha (aha@cs.jhu.edu)

Date: 15 July 1992

There are a total of 699 instances in the database and 11 attributes, including the class attribute. The attribute names and their domains are shown below.

    Attribute                      Domain
1.  Sample code number             id number
2.  Clump Thickness                1 - 10
3.  Uniformity of Cell Size        1 - 10
4.  Uniformity of Cell Shape       1 - 10
5.  Marginal Adhesion              1 - 10
6.  Single Epithelial Cell Size    1 - 10
7.  Bare Nuclei                    1 - 10
8.  Bland Chromatin                1 - 10
9.  Normal Nucleoli                1 - 10
10. Mitoses                        1 - 10
11. Class                          2 for benign, 4 for malignant

The 11 variables are Sample code number, Clump Thickness, Uniformity of Cell Size, Uniformity of Cell Shape, Marginal Adhesion, Single Epithelial Cell Size, Bare Nuclei, Bland Chromatin, Normal Nucleoli, Mitoses and Class. The attribute Class will be used as the target attribute.

There are 16 missing values in the attribute Bare Nuclei. These values will be replaced using the Replacement node.

Before analysing the dataset, we replace the values of the attribute Class with their respective class names: the value 2 is replaced with Benign and the value 4 with Malignant. Refer to Appendix 1 for the recoded dataset.
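The recoding step can also be sketched in Python, assuming the data is read from the comma-separated text format distributed by the UCI repository, where each row holds the 11 attribute values in the order listed above and a missing value is written as `?`. The column names and the inline sample rows are hypothetical placeholders, not rows from the real file.

```python
import csv
import io

COLUMNS = [
    "sample_code_number", "clump_thickness", "uniformity_of_cell_size",
    "uniformity_of_cell_shape", "marginal_adhesion", "single_epithelial_cell_size",
    "bare_nuclei", "bland_chromatin", "normal_nucleoli", "mitoses", "class",
]
CLASS_LABELS = {"2": "Benign", "4": "Malignant"}


def load_records(text):
    """Parse comma-separated rows, map '?' to None and recode the class attribute."""
    records = []
    for row in csv.reader(io.StringIO(text)):
        if not row:
            continue  # skip blank lines
        record = dict(zip(COLUMNS, row))
        for name in COLUMNS[1:-1]:  # the nine 1-10 attributes
            record[name] = None if record[name] == "?" else int(record[name])
        record["class"] = CLASS_LABELS[record["class"]]
        records.append(record)
    return records


# Hypothetical rows in the UCI layout (not taken from the real file).
sample = "1,5,1,1,1,2,1,3,1,1,2\n2,8,10,10,8,7,?,9,7,1,4\n"
records = load_records(sample)
missing = sum(r["bare_nuclei"] is None for r in records)
print([r["class"] for r in records], missing)  # ['Benign', 'Malignant'] 1
```

On the full 699-row file, the same count would be expected to report the 16 missing Bare Nuclei values described above.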

The dataset is stored in xls format in MS Excel and will be imported into SAS Enterprise Miner for model development and comparison.

3.3 Process of Developing and Comparing the Models

3.3.1 Data Mining Methodology

There are two types of data mining methodology: hypothesis testing and knowledge discovery. Hypothesis testing is a top-down approach that attempts to substantiate or disprove preconceived ideas. Knowledge discovery, on the other hand, is a bottom-up approach that starts with the data and tries to find something that is not yet known.

In our project, we use the directed knowledge discovery method, in which the sources of pre-classified data are identified. We develop and use the five steps of the knowledge discovery process shown in Table 3.1.

Table 3.1: Knowledge Discovery Process

Data Selection: The selected dataset is the breast cancer dataset with 11 variables and 699 observations.

Pre-processing: The dataset contains 16 missing values. The missing values will be replaced so that the data is clean and of high quality.

Transformation: Data from different sources will be transformed into a common format for processing.

Data Mining: Develop three models and apply algorithms to the transformed data to generate the desired results.

Interpretation and Evaluation: The results are interpreted and presented in a proper, visual manner.

The data mining task consists of predictive and descriptive modelling. Predictive modelling makes predictions about values of data using known results found from different data, and performs inference on the current data to make predictions. Descriptive modelling, on the other hand, identifies patterns or relationships in data. It explores the properties of the data examined but does not predict new properties, and it usually requires a domain expert.

Thus, in our project, we decided to use predictive modelling tools for our dataset. Predictive modelling covers four types of models: classification, regression, time series analysis and prediction. By using predictive modelling tools, we can make predictions and inferences based on the available breast cancer dataset.

3.3.2 Models Development

Classification is chosen because of its accuracy, speed, robustness, scalability and interpretability. It is accurate because it can correctly predict the class label of new data. It is fast to compute. It is robust, being able to make correct predictions given noisy data or missing values. It is scalable, being able to construct the classifier efficiently given a large amount of data. Lastly, it is interpretable, giving better understanding of and insight into the results.

Moreover, classification techniques are most suitable for predicting datasets with binary or nominal categories. They are less effective for ordinal categories because they do not consider the implicit order among the categories. Since our target variable is nominal, classification is the most suitable approach.

The three classification models chosen for development are logistic regression, neural network and decision tree.

3.3.2.1 Logistic Regression

Logistic regression is a nonlinear regression technique for problems with a binary outcome. The regression equation limits the values of the output attribute to values between 0 and 1, which allows the output to represent the probability of class membership.

The target is a discrete (binary or ordinal) variable, while the input variables can have any measurement level. The predicted values are the probabilities of particular levels of the target variable at the given values of the input variables.
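For reference, the standard logistic regression model for a binary target can be written as follows, where p is the probability of the event level (for example, Class = MALIGNANT), x_1, ..., x_k are the input variables and beta_0, ..., beta_k are the estimated coefficients. This is the textbook form of the model, given as a summary rather than as a transcription of SAS output.

```latex
\operatorname{logit}(p) = \ln\frac{p}{1-p} = \beta_0 + \beta_1 x_1 + \cdots + \beta_k x_k,
\qquad
p = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \cdots + \beta_k x_k)}}
```

Because the right-hand expression always lies strictly between 0 and 1, the output can be read as a probability of class membership.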


3.3.2.2 Neural Network

A neural network is a mathematical model that attempts to mimic the human brain. Knowledge is often represented as a layered set of interconnected processors (nodes). Each node has a weighted connection to several other nodes in adjacent layers. Individual nodes take the input received from connected nodes and use the weights together with a simple function to compute output values.
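The computation described above can be sketched as a forward pass through a small feedforward network with one hidden layer of three neurons. The sketch assumes a tanh activation in the hidden layer and a logistic output unit; all weights are hypothetical values chosen for illustration, whereas a real network learns its weights from the training data.

```python
import math


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum of inputs plus bias, then activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def sigmoid(z):
    """Logistic function mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, hidden_w, hidden_b, out_w, out_b):
    """Inputs -> hidden layer (tanh) -> single output unit (sigmoid probability)."""
    hidden = dense(x, hidden_w, hidden_b, math.tanh)
    return dense(hidden, [out_w], [out_b], sigmoid)[0]


# Hypothetical weights: 2 inputs, 3 hidden neurons, 1 output.
hidden_w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_b = [0.0, 0.1, -0.1]
out_w = [1.2, -0.7, 0.9]
out_b = -0.2
p = forward([0.6, 0.9], hidden_w, hidden_b, out_w, out_b)
print(round(p, 4))
```

Training (for example, by backpropagation) would adjust `hidden_w`, `hidden_b`, `out_w` and `out_b` so that the output probability matches the observed classes.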

3.3.2.3 Decision Tree

A decision tree is a structure that can be used to divide a large collection of records into successively smaller sets of records by applying a sequence of simple decision rules. The algorithm used to construct a decision tree is referred to as recursive partitioning.

The target variable is usually categorical, and the decision tree is used either to calculate the probability that a given record belongs to each category or to classify the record by assigning it to the most likely class.

A decision tree has three types of nodes. The root node is the top (or left-most) node, with no incoming edges and zero or more outgoing edges. An internal (child) node is a descendant node with exactly one incoming edge and two or more outgoing edges. A leaf node is a terminal node with exactly one incoming edge and no outgoing edges; each leaf node is assigned a class label. The rules or branches are the unique paths (edges), with a set of conditions (attributes), that divide the observations into smaller subsets.
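As an illustration of a single step of recursive partitioning, the sketch below searches one numeric attribute for the binary threshold that minimizes weighted Gini impurity; applying the same search recursively to each resulting subset grows the tree. Gini impurity is used here only as a common, simple criterion; it is not necessarily the criterion used by SAS Enterprise Miner, and the function names and data are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best split 'value < threshold'."""
    pairs = sorted(zip(values, labels))
    best = (None, float("inf"))
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [label for value, label in pairs if value < threshold]
        right = [label for value, label in pairs if value >= threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical attribute values on a 1-10 scale with their class labels.
values = [1, 2, 2, 3, 7, 8, 10]
labels = ["B", "B", "B", "B", "M", "M", "M"]
print(best_threshold(values, labels))  # (5.0, 0.0)
```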


CHAPTER 4: KNOWLEDGE DISCOVERY PROCESS IN SAS ENTERPRISE MINER

4.1 Data Selection

To begin, select Solutions > Analysis > Enterprise Miner, and the SAS Enterprise Miner window opens. Then, click File > New > Project to create a new project named BreastCancer. After naming the project, click Create and rename the untitled diagram as Project.

The breast cancer dataset is imported from MS Excel into SAS Enterprise Miner and stored in the EMDATA library, so that the data is kept in a permanent SAS library. Then, the Input Data Source node is added to the workspace so that the breast cancer dataset can be selected. The Input Data Source node represents the data source that we choose for a mining analysis and provides details (metadata) about the variables in the data source that we want to use.

After dragging in the Input Data Source node, we open it and select the breast cancer dataset, named EMDATA.CANCER, as the source data, with a metadata sample of 699 observations.

[Figure: Data]

The data consists of 11 variables: 1 class variable (CLASS) and 10 interval variables (SAMPLE CODE NUMBER, CLUMP THICKNESS, UNIFORMITY OF CELL SIZE, UNIFORMITY OF CELL SHAPE, MARGINAL ADHESION, SINGLE EPITHELIAL CELL SIZE, BARE NUCLEI, BLAND CHROMATIN, NORMAL NUCLEOLI and MITOSES). None of the variables has missing values except BARE NUCLEI, which has about 2% missing data.

Then, we open the Variables tab and set the model roles, changing the model role of CLASS from input to target. The model role of each variable is shown below.

Variable                       Model Role
SAMPLE CODE NUMBER             id
CLUMP THICKNESS                input
UNIFORMITY OF CELL SIZE        input
UNIFORMITY OF CELL SHAPE       input
MARGINAL ADHESION              input
SINGLE EPITHELIAL CELL SIZE    input
BARE NUCLEI                    input
BLAND CHROMATIN                input
NORMAL NUCLEOLI                input
MITOSES                        input
CLASS                          target

[Figures: Variables; Interval Variables; Class Variables]

4.2 Pre-processing

We drag a Data Partition node into the diagram and connect it to the Input Data Source node. This node partitions the breast cancer input data into training, validation and test datasets. The training dataset is used for preliminary model fitting. The validation dataset is used to monitor and tune the free model parameters during estimation and is also used for model assessment. The test dataset is an additional holdout dataset that we can use for model assessment.

Then, we right-click the node and select Open. We decided to allocate 70% of the data for training, 0% for validation and 30% for testing. Thus, the models are built from the 70% training data, and the remaining 30% test data is used to evaluate them.
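A minimal sketch of a 70% / 30% random partition in Python, assuming simple random sampling without stratification and a fixed seed for reproducibility. The function name and seed are hypothetical, and this does not reproduce how SAS Enterprise Miner draws its partition internally.

```python
import random


def partition(records, train_fraction=0.7, seed=12345):
    """Shuffle a copy of the records and split them into training and test sets."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    cut = round(train_fraction * len(shuffled))
    return shuffled[:cut], shuffled[cut:]


train, test = partition(range(699))
print(len(train), len(test))  # 489 210
```

Fixing the seed means the same observations land in each partition on every run, so model comparisons are made on an identical test set.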

[Figure: Partition]

Before developing the models, the missing values must be handled and replaced. A Replacement node is dragged in and connected to the Data Partition node. We use the Replacement node to generate score code that processes unknown levels during scoring and to interactively specify replacement values for class and interval variables. In some cases, we might want to reassign specified non-missing values before performing imputation calculations for the missing values.

The missing values are replaced using the Replacement node. Interval variables are imputed using the mean, whereas class variables are imputed using the count method (the most frequent level). The variable CLASS is not imputed because it is the target variable.

[Figures: Interval Variables; Class Variables]

After running the node, we can see that the missing values of the BARE NUCLEI variable are replaced with the value 3.4886. The table below shows part of the dataset after the missing values are replaced.

4.3 Transformation

We then drag a Transform Variables node into the diagram and connect it to the Replacement node. The Transform Variables node creates new variables that are transformations of existing variables in the data. Transformations are useful when we want to improve the fit of a model to the data. The node also enables us to create interaction variables. Sometimes input data is more informative on a scale other than the one on which it was originally collected. For example, variable transformations can be used to stabilize variance, remove nonlinearity, improve additivity and counter non-normality. Therefore, for many models, transformations of the input data (either dependent or independent variables) can lead to a better model fit. These transformations can be functions of either a single variable or more than one variable.

In our project, we use the Transform Variables node to make the variables better suited to the logistic regression and neural network models.

4.4 Data Mining

4.4.1 Logistic Regression

A Regression node is dragged in and connected to the Transform Variables node. The Regression node fits both linear and logistic regression models to the data. It accepts continuous, ordinal and binary target variables, and both continuous and discrete input variables. The node supports the stepwise, forward and backward selection methods.

In this project, we use the stepwise selection method with the Profit/Loss criterion.

[Figures: Variables; Model Options; Selection Method]

After that, we run the node and the results appear. Clicking the Statistics tab gives the results below.

[Figure: Statistics]

From the results above, the misclassification rate is 0.0286 for the training data and 0.0524 for the test data. Both rates are low, and the test rate is only slightly higher than the training rate, which indicates that our logistic regression model performs well on unseen data.

Then, we can obtain our logistic regression equation from the Estimates table.

[Figure: Estimates table]

Our logistic regression equation, where Y is the logit (log-odds) of Class = MALIGNANT, is:

Y = -9.7120 + 0.6503(BARE NUCLEI) + 0.5604(CLUMP THICKNESS) + 0.3541(MARGINAL ADHESION) + 0.7246(MITOSES) + 0.6027(UNIFORMITY OF CELL SIZE)
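To show how the fitted equation could be used for scoring, the sketch below plugs the reported coefficients into the logistic function to obtain the probability of MALIGNANT. It assumes the coefficients apply directly to the raw 1-10 attribute values; because the inputs passed through the Transform Variables node, the actual SAS model may expect transformed values, so treat this as illustrative only. The snake_case variable names and the 0.5 cut-off are likewise assumptions.

```python
import math

# Coefficients as reported in the Estimates table (logit of Class = MALIGNANT).
INTERCEPT = -9.7120
COEFFICIENTS = {
    "bare_nuclei": 0.6503,
    "clump_thickness": 0.5604,
    "marginal_adhesion": 0.3541,
    "mitoses": 0.7246,
    "uniformity_of_cell_size": 0.6027,
}


def probability_malignant(inputs):
    """Apply the logistic function to the linear predictor built from the inputs."""
    logit = INTERCEPT + sum(COEFFICIENTS[name] * inputs[name] for name in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-logit))


def classify(inputs, cutoff=0.5):
    """Assign MALIGNANT when the predicted probability reaches the cut-off."""
    return "MALIGNANT" if probability_malignant(inputs) >= cutoff else "BENIGN"


low = {name: 1 for name in COEFFICIENTS}    # every attribute at its minimum value
high = {name: 10 for name in COEFFICIENTS}  # every attribute at its maximum value
print(classify(low), classify(high))  # BENIGN MALIGNANT
```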

[Figure: Results Viewer]

The confusion matrix above shows that 64.42% of the observations are correctly classified as BENIGN and 32.72% are correctly classified as MALIGNANT. The remaining 2.86% are misclassified: 6 BENIGN observations are classified as MALIGNANT, while 8 MALIGNANT observations are classified as BENIGN.

4.4.2 Neural Network

We drag a Neural Network node into the diagram and connect it to the Transform Variables node. The Neural Network node is used to construct, train and validate multilayer feedforward neural networks.

By default, the Neural Network node automatically constructs a network that has

one hidden layer consisting of three neurons. In general, each input is fully connected to

the first hidden layer, each hidden layer is fully connected to the next hidden layer, and

the last hidden layer is fully connected to the output. The Neural Network node supports

many variations of this general form.

In our project, we also select the Profit/Loss model selection criterion.

[Figures: Variables; General]

After that, we will run the node and the results will appear.

[Figures: Basic; Table]

From the results, the misclassification rate is 0.0307 for the training data and 0.0238 for the test data. Both error rates are small, and the test error is even slightly lower than the training error, which indicates that this model performs well on unseen data.

[Figure: Weights]

In the Weights tab, we can see the weights of all the variables. As the table above shows, there are 9 variables with their respective weights. The highest weight belongs to variable 1 (BARE NUCLEI), with a value of 0.2096, and the lowest to variable 8 (UNIFORMITY OF CELL SHAPE), with a value of -0.0014. This suggests that variable 1 contributes the most to the model, as its weight is the highest.

4.4.3 Decision Tree

We drag a Tree node into the diagram and connect it to the Replacement node. The Tree node fits decision tree models to the data. Its implementation includes features found in a variety of popular decision tree algorithms, such as CHAID, CART and C4.5. The node supports both automatic and interactive training.

When we run the Tree node in automatic mode, it automatically ranks the input variables based on the strength of their contribution to the tree. This ranking can be used to select variables for subsequent modelling. We can override any automatic step with the option to define a splitting rule and prune explicit nodes or subtrees. Interactive training enables us to explore and evaluate a large set of trees as we develop them.

[Figures: Variables; Basic]

Then, we run the node and the results appear.

[Figure: All]

The diagram above shows that the selected tree has only 5 leaves on the training dataset, with a misclassification rate of 0.0286. This indicates that the model fits the training data well, with only a small error.

[Figure: Summary]

The summary above is the confusion matrix. About 64% of the observations are correctly classified as Benign and about 33% as Malignant, so only a small proportion are misclassified, consistent with the training misclassification rate of 0.0286.

We can also see decision tree results by clicking View Tree.

From the tree above, we can see that there are five leaf nodes, each labelled with a class. If UNIFORMITY OF CELL SIZE is less than 2.5 and BARE NUCLEI is less than 5.5, the case is classified as BENIGN. If UNIFORMITY OF CELL SIZE is 2.5 or above and BARE NUCLEI is 3.7443 or above, the case is classified as MALIGNANT. If UNIFORMITY OF CELL SIZE is 2.5 or above but BARE NUCLEI is less than 3.7443, the case is classified as BENIGN when UNIFORMITY OF CELL SIZE is less than 4.5, and as MALIGNANT when UNIFORMITY OF CELL SIZE is 4.5 or above.
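The rules just described can be written as a small function. This is a hand transcription of the text, assuming the thresholds are exactly as reported. The text does not state the class of the fifth leaf (UNIFORMITY OF CELL SIZE below 2.5 with BARE NUCLEI of 5.5 or above), so the sketch returns `None` for that branch instead of guessing. The function name is hypothetical.

```python
def classify_case(cell_size, bare_nuclei):
    """Apply the decision-tree rules described in the text to one case."""
    if cell_size < 2.5:
        if bare_nuclei < 5.5:
            return "BENIGN"
        return None  # fifth leaf: its class label is not stated in the text
    if bare_nuclei >= 3.7443:
        return "MALIGNANT"
    if cell_size < 4.5:
        return "BENIGN"
    return "MALIGNANT"


print(classify_case(1, 1))   # BENIGN
print(classify_case(6, 2))   # MALIGNANT
```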

Next, we can view the competing splits for the decision tree by right-clicking and choosing View competing splits.

From the table below, we can see that the UNIFORMITY OF CELL SIZE variable is used for the first split because it has the highest logworth (78.522), while the other variables, such as UNIFORMITY OF CELL SHAPE, BARE NUCLEI, BLAND CHROMATIN and SINGLE EPITHELIAL CELL SIZE, are the competing splits for the first split.
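Logworth is the negative base-10 logarithm of the p-value of the test statistic behind a split, so a larger logworth means a more significant split. The sketch below converts between the two; whether SAS has adjusted the p-value (for example with a Bonferroni correction) depends on the node settings, which this sketch does not model. The helper names are hypothetical.

```python
import math


def logworth(p_value):
    """Logworth = -log10(p-value); larger values indicate stronger splits."""
    if not 0.0 < p_value <= 1.0:
        raise ValueError("p_value must be in (0, 1]")
    return -math.log10(p_value)


def p_value_from_logworth(lw):
    """Invert the logworth transformation."""
    return 10.0 ** (-lw)


# The first split on UNIFORMITY OF CELL SIZE has logworth 78.522.
print(p_value_from_logworth(78.522))  # roughly 3.0e-79
```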

4.5 Interpretation and Evaluation

An Assessment node is dragged onto the diagram and connected to the Regression, Neural Network and Tree nodes. The Assessment node provides a common framework for comparing models and predictions from any of the modeling nodes (Regression, Tree, Neural Network, and User Defined Model nodes).

After that, we run the node, and the results appear.


Models:

The table above shows that, for the Decision Tree model, the Misclassification Rate is 0.0286 for the Training dataset and 0.0667 for the Test dataset. For the Neural Network model, the Misclassification Rate is 0.0307 for the Training dataset and 0.0238 for the Test dataset. Both models have very small errors, but the Neural Network model is better than the Decision Tree model because its misclassification rate on the Test dataset is smaller.

Next, we can view the lift chart for each model by highlighting the model we want and clicking Draw Lift Chart.

The lift charts for the models are shown below.

Decision Tree:


From the lift chart for the Decision Tree model, from the 10th to the 20th percentile, the cumulative % response is 96.454%. At the 30th percentile, the next observation with the highest predicted probability is a non-response, so the cumulative response drops to 96.431%.

Neural Network:


From the lift chart for the Neural Network model, from the 10th to the 20th percentile, the cumulative % response is 100.000%. At the 30th percentile, the next observation with the highest predicted probability is a non-response, so the cumulative response drops to 99.318%.

Logistic Regression:

From the lift chart for the Logistic Regression model, from the 10th to the 20th percentile, the cumulative % response is 100.000%. At the 30th percentile, the next observation with the highest predicted probability is a non-response, so the cumulative response drops to 98.637%.

Since all three models keep a cumulative % response above 96% up to the 30th percentile, all three models can be used.
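The cumulative % response at a given depth is the share of actual responders among the observations with the highest predicted probabilities. The sketch below computes it for a small hypothetical set of predictions; SAS Enterprise Miner groups observations into percentile bins when drawing its lift charts, so its exact figures can differ slightly from this observation-level calculation. The function name and data are hypothetical.

```python
def cumulative_pct_response(probs, responses, depth_pct):
    """Cumulative % response among the top depth_pct% of observations by predicted probability."""
    if not 0 < depth_pct <= 100:
        raise ValueError("depth_pct must be in (0, 100]")
    ranked = sorted(zip(probs, responses), key=lambda pr: pr[0], reverse=True)
    n_top = max(1, round(len(ranked) * depth_pct / 100))
    hits = sum(r for _, r in ranked[:n_top])  # responses are 1 (e.g. Malignant) or 0
    return 100.0 * hits / n_top


# Hypothetical predicted probabilities and actual responses, not the project data.
probs = [0.99, 0.97, 0.95, 0.90, 0.40, 0.30, 0.20, 0.10, 0.05, 0.01]
responses = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
for depth in (10, 30, 40):
    print(depth, cumulative_pct_response(probs, responses, depth))
```

Here the response stays at 100% through the 30th percentile and drops to 75% at the 40th, because the fourth-ranked observation is a non-response, which is the same pattern described for the three lift charts.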

In addition, an Insight node can be connected to the Assessment node, which links all three models, to view the results for the breast cancer dataset. The Insight node enables us to open a SAS/INSIGHT session. SAS/INSIGHT software is an interactive tool for data exploration and analysis.


The table below shows part of the results from the SAS/INSIGHT session.

The last column, Class, shows the class predicted by SAS Enterprise Miner for each observation. We can compare this predicted Class with the Class in our original dataset to see where they differ.
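The comparison described above can be done by lining up the original and predicted classes and counting mismatches, which also gives the misclassification rate. The sketch below assumes both columns have been exported as plain lists in the same row order; the function name and labels are hypothetical.

```python
def compare_classes(actual, predicted):
    """Return (row indices where the prediction differs, misclassification rate)."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    mismatches = [i for i, (a, p) in enumerate(zip(actual, predicted)) if a != p]
    return mismatches, len(mismatches) / len(actual)


# Hypothetical rows, not the project data.
actual = ["Benign", "Malignant", "Benign", "Benign"]
predicted = ["Benign", "Malignant", "Malignant", "Benign"]
print(compare_classes(actual, predicted))  # ([2], 0.25)
```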

CHAPTER 5: RESULT AND DISCUSSION

The misclassification rates of the three models on both the training and test datasets are shown in the table below.

| Model | Misclassification Rate: Training | Misclassification Rate: Test |
|---|---|---|
| Regression | 0.0286 | 0.0524 |
| Neural Network | 0.0307 | 0.0238 |
| Decision Tree | 0.0286 | 0.0667 |

From the results above, we can say that the Neural Network model is the most suitable for the breast cancer dataset, as its misclassification rate on the Test dataset is the smallest (0.0238) compared with the other two models. The Decision Tree model is the least suitable, as its misclassification rate on the Test dataset is the highest of the three models (0.0667).
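The model choice above can be reproduced by ranking the three models on their test misclassification rates. The sketch below uses the values from the table; it also shows why the test rate, not the training rate, is the deciding figure: on the training data alone the Neural Network would rank last.

```python
# Misclassification rates from the results table.
rates = {
    "Regression": {"train": 0.0286, "test": 0.0524},
    "Neural Network": {"train": 0.0307, "test": 0.0238},
    "Decision Tree": {"train": 0.0286, "test": 0.0667},
}

# Rank models from best to worst on unseen (test) data.
by_test = sorted(rates, key=lambda m: rates[m]["test"])
print(by_test)  # ['Neural Network', 'Regression', 'Decision Tree']

# On training data alone, the Neural Network has the highest error.
worst_train = max(rates, key=lambda m: rates[m]["train"])
print(worst_train)  # Neural Network
```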


CHAPTER 6: CONCLUSION

It is important for professionals and specialists in the medical and life sciences to classify breast cancer cases into the correct class.

We cannot be sure that the models used in this project can be applied perfectly to all breast cancer patients, but they can serve as a guideline that helps these professionals understand and recognise the breast cancer classes more quickly.

Based on our results, we conclude that the Neural Network model is the best at classifying the Breast Cancer dataset, as its misclassification rate on the Test dataset is the lowest.

The models will need to be redeveloped and updated from time to time as the number of variables contributing to the data increases.


CHAPTER 7: REFERENCE

UCI Machine Learning Repository. (1992). Breast Cancer Wisconsin (Original) Data Set. Retrieved 21 May 2014 from https://archive.ics.uci.edu/ml/datasets/Breast+Cancer+Wisconsin+%28Original%29

Wikipedia. (2014). Breast Cancer. Retrieved 21 May 2014 from http://en.wikipedia.org/wiki/Breast_cancer

Wolberg, W. H., & Mangasarian, O. L. (1990). Multisurface method of pattern separation for medical diagnosis applied to breast cytology. Proceedings of the National Academy of Sciences, 87, 9193-9196.

Zhang, J. (1992). Selecting typical instances in instance-based learning. In Proceedings of the Ninth International Machine Learning Conference (pp. 470-479). Aberdeen, Scotland: Morgan Kaufmann.


CHAPTER 8: APPENDICES

Appendix 1:

Sample code number | Clump Thickness | Uniformity of Cell Size | Uniformity of Cell Shape | Marginal Adhesion | Single Epithelial Cell Size | Bare Nuclei | Bland Chromatin | Normal Nucleoli | Mitoses | Class

1000025 5 1 1 1 2 1 3 1 1 Benign

1002945 5 4 4 5 7 10 3 2 1 Benign

1015425 3 1 1 1 2 2 3 1 1 Benign

1016277 6 8 8 1 3 4 3 7 1 Benign

1017023 4 1 1 3 2 1 3 1 1 Benign

1017122 8 10 10 8 7 10 9 7 1 Malignant

1018099 1 1 1 1 2 10 3 1 1 Benign

1018561 2 1 2 1 2 1 3 1 1 Benign

1033078 2 1 1 1 2 1 1 1 5 Benign

1033078 4 2 1 1 2 1 2 1 1 Benign

1035283 1 1 1 1 1 1 3 1 1 Benign

1036172 2 1 1 1 2 1 2 1 1 Benign

1041801 5 3 3 3 2 3 4 4 1 Malignant

1043999 1 1 1 1 2 3 3 1 1 Benign

1044572 8 7 5 10 7 9 5 5 4 Malignant

1047630 7 4 6 4 6 1 4 3 1 Malignant

1048672 4 1 1 1 2 1 2 1 1 Benign

1049815 4 1 1 1 2 1 3 1 1 Benign

1050670 10 7 7 6 4 10 4 1 2 Malignant

1050718 6 1 1 1 2 1 3 1 1 Benign

1054590 7 3 2 10 5 10 5 4 4 Malignant

1054593 10 5 5 3 6 7 7 10 1 Malignant

1056784 3 1 1 1 2 1 2 1 1 Benign

1057013 8 4 5 1 2 ? 7 3 1 Malignant

1059552 1 1 1 1 2 1 3 1 1 Benign

1065726 5 2 3 4 2 7 3 6 1 Malignant

1066373 3 2 1 1 1 1 2 1 1 Benign

1066979 5 1 1 1 2 1 2 1 1 Benign

1067444 2 1 1 1 2 1 2 1 1 Benign

1070935 1 1 3 1 2 1 1 1 1 Benign

1070935 3 1 1 1 1 1 2 1 1 Benign

1071760 2 1 1 1 2 1 3 1 1 Benign

1072179 10 7 7 3 8 5 7 4 3 Malignant

1074610 2 1 1 2 2 1 3 1 1 Benign

1075123 3 1 2 1 2 1 2 1 1 Benign

1079304 2 1 1 1 2 1 2 1 1 Benign

1080185 10 10 10 8 6 1 8 9 1 Malignant

1081791 6 2 1 1 1 1 7 1 1 Benign

1084584 5 4 4 9 2 10 5 6 1 Malignant

1091262 2 5 3 3 6 7 7 5 1 Malignant

1096800 6 6 6 9 6 ? 7 8 1 Benign

1099510 10 4 3 1 3 3 6 5 2 Malignant

1100524 6 10 10 2 8 10 7 3 3 Malignant

1102573 5 6 5 6 10 1 3 1 1 Malignant

1103608 10 10 10 4 8 1 8 10 1 Malignant

1103722 1 1 1 1 2 1 2 1 2 Benign

1105257 3 7 7 4 4 9 4 8 1 Malignant

1105524 1 1 1 1 2 1 2 1 1 Benign

1106095 4 1 1 3 2 1 3 1 1 Benign

1106829 7 8 7 2 4 8 3 8 2 Malignant

1108370 9 5 8 1 2 3 2 1 5 Malignant

1108449 5 3 3 4 2 4 3 4 1 Malignant

1110102 10 3 6 2 3 5 4 10 2 Malignant

1110503 5 5 5 8 10 8 7 3 7 Malignant

1110524 10 5 5 6 8 8 7 1 1 Malignant

1111249 10 6 6 3 4 5 3 6 1 Malignant

1112209 8 10 10 1 3 6 3 9 1 Malignant

1113038 8 2 4 1 5 1 5 4 4 Malignant

1113483 5 2 3 1 6 10 5 1 1 Malignant

1113906 9 5 5 2 2 2 5 1 1 Malignant

1115282 5 3 5 5 3 3 4 10 1 Malignant

1115293 1 1 1 1 2 2 2 1 1 Benign

1116116 9 10 10 1 10 8 3 3 1 Malignant

1116132 6 3 4 1 5 2 3 9 1 Malignant

1116192 1 1 1 1 2 1 2 1 1 Benign


1116998 10 4 2 1 3 2 4 3 10 Malignant

1117152 4 1 1 1 2 1 3 1 1 Benign

1118039 5 3 4 1 8 10 4 9 1 Malignant

1120559 8 3 8 3 4 9 8 9 8 Malignant

1121732 1 1 1 1 2 1 3 2 1 Benign

1121919 5 1 3 1 2 1 2 1 1 Benign

1123061 6 10 2 8 10 2 7 8 10 Malignant

1124651 1 3 3 2 2 1 7 2 1 Benign

1125035 9 4 5 10 6 10 4 8 1 Malignant

1126417 10 6 4 1 3 4 3 2 3 Malignant

1131294 1 1 2 1 2 2 4 2 1 Benign

1132347 1 1 4 1 2 1 2 1 1 Benign

1133041 5 3 1 2 2 1 2 1 1 Benign

1133136 3 1 1 1 2 3 3 1 1 Benign

1136142 2 1 1 1 3 1 2 1 1 Benign

1137156 2 2 2 1 1 1 7 1 1 Benign

1143978 4 1 1 2 2 1 2 1 1 Benign

1143978 5 2 1 1 2 1 3 1 1 Benign

1147044 3 1 1 1 2 2 7 1 1 Benign

1147699 3 5 7 8 8 9 7 10 7 Malignant

1147748 5 10 6 1 10 4 4 10 10 Malignant

1148278 3 3 6 4 5 8 4 4 1 Malignant

1148873 3 6 6 6 5 10 6 8 3 Malignant

1152331 4 1 1 1 2 1 3 1 1 Benign

1155546 2 1 1 2 3 1 2 1 1 Benign

1156272 1 1 1 1 2 1 3 1 1 Benign

1156948 3 1 1 2 2 1 1 1 1 Benign

1157734 4 1 1 1 2 1 3 1 1 Benign

1158247 1 1 1 1 2 1 2 1 1 Benign

1160476 2 1 1 1 2 1 3 1 1 Benign

1164066 1 1 1 1 2 1 3 1 1 Benign

1165297 2 1 1 2 2 1 1 1 1 Benign

1165790 5 1 1 1 2 1 3 1 1 Benign

1165926 9 6 9 2 10 6 2 9 10 Malignant

1166630 7 5 6 10 5 10 7 9 4 Malignant

1166654 10 3 5 1 10 5 3 10 2 Malignant

1167439 2 3 4 4 2 5 2 5 1 Malignant

1167471 4 1 2 1 2 1 3 1 1 Benign

1168359 8 2 3 1 6 3 7 1 1 Malignant

1168736 10 10 10 10 10 1 8 8 8 Malignant

1169049 7 3 4 4 3 3 3 2 7 Malignant

1170419 10 10 10 8 2 10 4 1 1 Malignant

1170420 1 6 8 10 8 10 5 7 1 Malignant

1171710 1 1 1 1 2 1 2 3 1 Benign

1171710 6 5 4 4 3 9 7 8 3 Malignant

1171795 1 3 1 2 2 2 5 3 2 Benign

1171845 8 6 4 3 5 9 3 1 1 Malignant

1172152 10 3 3 10 2 10 7 3 3 Malignant

1173216 10 10 10 3 10 8 8 1 1 Malignant

1173235 3 3 2 1 2 3 3 1 1 Benign

1173347 1 1 1 1 2 5 1 1 1 Benign

1173347 8 3 3 1 2 2 3 2 1 Benign

1173509 4 5 5 10 4 10 7 5 8 Malignant

1173514 1 1 1 1 4 3 1 1 1 Benign

1173681 3 2 1 1 2 2 3 1 1 Benign

1174057 1 1 2 2 2 1 3 1 1 Benign

1174057 4 2 1 1 2 2 3 1 1 Benign

1174131 10 10 10 2 10 10 5 3 3 Malignant

1174428 5 3 5 1 8 10 5 3 1 Malignant

1175937 5 4 6 7 9 7 8 10 1 Malignant

1176406 1 1 1 1 2 1 2 1 1 Benign

1176881 7 5 3 7 4 10 7 5 5 Malignant

1177027 3 1 1 1 2 1 3 1 1 Benign

1177399 8 3 5 4 5 10 1 6 2 Malignant

1177512 1 1 1 1 10 1 1 1 1 Benign

1178580 5 1 3 1 2 1 2 1 1 Benign

1179818 2 1 1 1 2 1 3 1 1 Benign

1180194 5 10 8 10 8 10 3 6 3 Malignant

1180523 3 1 1 1 2 1 2 2 1 Benign

1180831 3 1 1 1 3 1 2 1 1 Benign

1181356 5 1 1 1 2 2 3 3 1 Benign

1182404 4 1 1 1 2 1 2 1 1 Benign

1182410 3 1 1 1 2 1 1 1 1 Benign


1183240 4 1 2 1 2 1 2 1 1 Benign

1183246 1 1 1 1 1 ? 2 1 1 Benign

1183516 3 1 1 1 2 1 1 1 1 Benign

1183911 2 1 1 1 2 1 1 1 1 Benign

1183983 9 5 5 4 4 5 4 3 3 Malignant

1184184 1 1 1 1 2 5 1 1 1 Benign

1184241 2 1 1 1 2 1 2 1 1 Benign

1184840 1 1 3 1 2 ? 2 1 1 Benign

1185609 3 4 5 2 6 8 4 1 1 Malignant

1185610 1 1 1 1 3 2 2 1 1 Benign

1187457 3 1 1 3 8 1 5 8 1 Benign

1187805 8 8 7 4 10 10 7 8 7 Malignant

1188472 1 1 1 1 1 1 3 1 1 Benign

1189266 7 2 4 1 6 10 5 4 3 Malignant

1189286 10 10 8 6 4 5 8 10 1 Malignant

1190394 4 1 1 1 2 3 1 1 1 Benign

1190485 1 1 1 1 2 1 1 1 1 Benign

1192325 5 5 5 6 3 10 3 1 1 Malignant

1193091 1 2 2 1 2 1 2 1 1 Benign

1193210 2 1 1 1 2 1 3 1 1 Benign

1193683 1 1 2 1 3 ? 1 1 1 Benign

1196295 9 9 10 3 6 10 7 10 6 Malignant

1196915 10 7 7 4 5 10 5 7 2 Malignant

1197080 4 1 1 1 2 1 3 2 1 Benign

1197270 3 1 1 1 2 1 3 1 1 Benign

1197440 1 1 1 2 1 3 1 1 7 Benign

1197510 5 1 1 1 2 ? 3 1 1 Benign

1197979 4 1 1 1 2 2 3 2 1 Benign

1197993 5 6 7 8 8 10 3 10 3 Malignant

1198128 10 8 10 10 6 1 3 1 10 Malignant

1198641 3 1 1 1 2 1 3 1 1 Benign

1199219 1 1 1 2 1 1 1 1 1 Benign

1199731 3 1 1 1 2 1 1 1 1 Benign

1199983 1 1 1 1 2 1 3 1 1 Benign

1200772 1 1 1 1 2 1 2 1 1 Benign

1200847 6 10 10 10 8 10 10 10 7 Malignant

1200892 8 6 5 4 3 10 6 1 1 Malignant

1200952 5 8 7 7 10 10 5 7 1 Malignant

1201834 2 1 1 1 2 1 3 1 1 Benign

1201936 5 10 10 3 8 1 5 10 3 Malignant

1202125 4 1 1 1 2 1 3 1 1 Benign

1202812 5 3 3 3 6 10 3 1 1 Malignant

1203096 1 1 1 1 1 1 3 1 1 Benign

1204242 1 1 1 1 2 1 1 1 1 Benign

1204898 6 1 1 1 2 1 3 1 1 Benign

1205138 5 8 8 8 5 10 7 8 1 Malignant

1205579 8 7 6 4 4 10 5 1 1 Malignant

1206089 2 1 1 1 1 1 3 1 1 Benign

1206695 1 5 8 6 5 8 7 10 1 Malignant

1206841 10 5 6 10 6 10 7 7 10 Malignant

1207986 5 8 4 10 5 8 9 10 1 Malignant

1208301 1 2 3 1 2 1 3 1 1 Benign

1210963 10 10 10 8 6 8 7 10 1 Malignant

1211202 7 5 10 10 10 10 4 10 3 Malignant

1212232 5 1 1 1 2 1 2 1 1 Benign

1212251 1 1 1 1 2 1 3 1 1 Benign

1212422 3 1 1 1 2 1 3 1 1 Benign

1212422 4 1 1 1 2 1 3 1 1 Benign

1213375 8 4 4 5 4 7 7 8 2 Benign

1213383 5 1 1 4 2 1 3 1 1 Benign

1214092 1 1 1 1 2 1 1 1 1 Benign

1214556 3 1 1 1 2 1 2 1 1 Benign

1214966 9 7 7 5 5 10 7 8 3 Malignant

1216694 10 8 8 4 10 10 8 1 1 Malignant

1216947 1 1 1 1 2 1 3 1 1 Benign

1217051 5 1 1 1 2 1 3 1 1 Benign

1217264 1 1 1 1 2 1 3 1 1 Benign

1218105 5 10 10 9 6 10 7 10 5 Malignant

1218741 10 10 9 3 7 5 3 5 1 Malignant

1218860 1 1 1 1 1 1 3 1 1 Benign

1218860 1 1 1 1 1 1 3 1 1 Benign

1219406 5 1 1 1 1 1 3 1 1 Benign

1219525 8 10 10 10 5 10 8 10 6 Malignant


1219859 8 10 8 8 4 8 7 7 1 Malignant

1220330 1 1 1 1 2 1 3 1 1 Benign

1221863 10 10 10 10 7 10 7 10 4 Malignant

1222047 10 10 10 10 3 10 10 6 1 Malignant

1222936 8 7 8 7 5 5 5 10 2 Malignant

1223282 1 1 1 1 2 1 2 1 1 Benign

1223426 1 1 1 1 2 1 3 1 1 Benign

1223793 6 10 7 7 6 4 8 10 2 Malignant

1223967 6 1 3 1 2 1 3 1 1 Benign

1224329 1 1 1 2 2 1 3 1 1 Benign

1225799 10 6 4 3 10 10 9 10 1 Malignant

1226012 4 1 1 3 1 5 2 1 1 Malignant

1226612 7 5 6 3 3 8 7 4 1 Malignant

1227210 10 5 5 6 3 10 7 9 2 Malignant

1227244 1 1 1 1 2 1 2 1 1 Benign

1227481 10 5 7 4 4 10 8 9 1 Malignant

1228152 8 9 9 5 3 5 7 7 1 Malignant

1228311 1 1 1 1 1 1 3 1 1 Benign

1230175 10 10 10 3 10 10 9 10 1 Malignant

1230688 7 4 7 4 3 7 7 6 1 Malignant

1231387 6 8 7 5 6 8 8 9 2 Malignant

1231706 8 4 6 3 3 1 4 3 1 Benign

1232225 10 4 5 5 5 10 4 1 1 Malignant

1236043 3 3 2 1 3 1 3 6 1 Benign

1241232 3 1 4 1 2 ? 3 1 1 Benign

1241559 10 8 8 2 8 10 4 8 10 Malignant

1241679 9 8 8 5 6 2 4 10 4 Malignant

1242364 8 10 10 8 6 9 3 10 10 Malignant

1243256 10 4 3 2 3 10 5 3 2 Malignant

1270479 5 1 3 3 2 2 2 3 1 Benign

1276091 3 1 1 3 1 1 3 1 1 Benign

1277018 2 1 1 1 2 1 3 1 1 Benign

128059 1 1 1 1 2 5 5 1 1 Benign

1285531 1 1 1 1 2 1 3 1 1 Benign

1287775 5 1 1 2 2 2 3 1 1 Benign

144888 8 10 10 8 5 10 7 8 1 Malignant

145447 8 4 4 1 2 9 3 3 1 Malignant

167528 4 1 1 1 2 1 3 6 1 Benign

169356 3 1 1 1 2 ? 3 1 1 Benign

183913 1 2 2 1 2 1 1 1 1 Benign

191250 10 4 4 10 2 10 5 3 3 Malignant

1017023 6 3 3 5 3 10 3 5 3 Benign

1100524 6 10 10 2 8 10 7 3 3 Malignant

1116116 9 10 10 1 10 8 3 3 1 Malignant

1168736 5 6 6 2 4 10 3 6 1 Malignant

1182404 3 1 1 1 2 1 1 1 1 Benign

1182404 3 1 1 1 2 1 2 1 1 Benign

1198641 3 1 1 1 2 1 3 1 1 Benign

242970 5 7 7 1 5 8 3 4 1 Benign

255644 10 5 8 10 3 10 5 1 3 Malignant

263538 5 10 10 6 10 10 10 6 5 Malignant

274137 8 8 9 4 5 10 7 8 1 Malignant

303213 10 4 4 10 6 10 5 5 1 Malignant

314428 7 9 4 10 10 3 5 3 3 Malignant

1182404 5 1 4 1 2 1 3 2 1 Benign

1198641 10 10 6 3 3 10 4 3 2 Malignant

320675 3 3 5 2 3 10 7 1 1 Malignant

324427 10 8 8 2 3 4 8 7 8 Malignant

385103 1 1 1 1 2 1 3 1 1 Benign

390840 8 4 7 1 3 10 3 9 2 Malignant

411453 5 1 1 1 2 1 3 1 1 Benign

320675 3 3 5 2 3 10 7 1 1 Malignant

428903 7 2 4 1 3 4 3 3 1 Malignant

431495 3 1 1 1 2 1 3 2 1 Benign

432809 3 1 3 1 2 ? 2 1 1 Benign

434518 3 1 1 1 2 1 2 1 1 Benign

452264 1 1 1 1 2 1 2 1 1 Benign

456282 1 1 1 1 2 1 3 1 1 Benign

476903 10 5 7 3 3 7 3 3 8 Malignant

486283 3 1 1 1 2 1 3 1 1 Benign

486662 2 1 1 2 2 1 3 1 1 Benign

488173 1 4 3 10 4 10 5 6 1 Malignant

492268 10 4 6 1 2 10 5 3 1 Malignant


508234 7 4 5 10 2 10 3 8 2 Malignant

527363 8 10 10 10 8 10 10 7 3 Malignant

529329 10 10 10 10 10 10 4 10 10 Malignant

535331 3 1 1 1 3 1 2 1 1 Benign

543558 6 1 3 1 4 5 5 10 1 Malignant

555977 5 6 6 8 6 10 4 10 4 Malignant

560680 1 1 1 1 2 1 1 1 1 Benign

561477 1 1 1 1 2 1 3 1 1 Benign

563649 8 8 8 1 2 ? 6 10 1 Malignant

601265 10 4 4 6 2 10 2 3 1 Malignant

606140 1 1 1 1 2 ? 2 1 1 Benign

606722 5 5 7 8 6 10 7 4 1 Malignant

616240 5 3 4 3 4 5 4 7 1 Benign

61634 5 4 3 1 2 ? 2 3 1 Benign

625201 8 2 1 1 5 1 1 1 1 Benign

63375 9 1 2 6 4 10 7 7 2 Malignant

635844 8 4 10 5 4 4 7 10 1 Malignant

636130 1 1 1 1 2 1 3 1 1 Benign

640744 10 10 10 7 9 10 7 10 10 Malignant

646904 1 1 1 1 2 1 3 1 1 Benign

653777 8 3 4 9 3 10 3 3 1 Malignant

659642 10 8 4 4 4 10 3 10 4 Malignant

666090 1 1 1 1 2 1 3 1 1 Benign

666942 1 1 1 1 2 1 3 1 1 Benign

667204 7 8 7 6 4 3 8 8 4 Malignant

673637 3 1 1 1 2 5 5 1 1 Benign

684955 2 1 1 1 3 1 2 1 1 Benign

688033 1 1 1 1 2 1 1 1 1 Benign

691628 8 6 4 10 10 1 3 5 1 Malignant

693702 1 1 1 1 2 1 1 1 1 Benign

704097 1 1 1 1 1 1 2 1 1 Benign

704168 4 6 5 6 7 ? 4 9 1 Benign

706426 5 5 5 2 5 10 4 3 1 Malignant

709287 6 8 7 8 6 8 8 9 1 Malignant

718641 1 1 1 1 5 1 3 1 1 Benign

721482 4 4 4 4 6 5 7 3 1 Benign

730881 7 6 3 2 5 10 7 4 6 Malignant

733639 3 1 1 1 2 ? 3 1 1 Benign

733639 3 1 1 1 2 1 3 1 1 Benign

733823 5 4 6 10 2 10 4 1 1 Malignant

740492 1 1 1 1 2 1 3 1 1 Benign

743348 3 2 2 1 2 1 2 3 1 Benign

752904 10 1 1 1 2 10 5 4 1 Malignant

756136 1 1 1 1 2 1 2 1 1 Benign

760001 8 10 3 2 6 4 3 10 1 Malignant

760239 10 4 6 4 5 10 7 1 1 Malignant

76389 10 4 7 2 2 8 6 1 1 Malignant

764974 5 1 1 1 2 1 3 1 2 Benign

770066 5 2 2 2 2 1 2 2 1 Benign

785208 5 4 6 6 4 10 4 3 1 Malignant

785615 8 6 7 3 3 10 3 4 2 Malignant

792744 1 1 1 1 2 1 1 1 1 Benign

797327 6 5 5 8 4 10 3 4 1 Malignant

798429 1 1 1 1 2 1 3 1 1 Benign

704097 1 1 1 1 1 1 2 1 1 Benign

806423 8 5 5 5 2 10 4 3 1 Malignant

809912 10 3 3 1 2 10 7 6 1 Malignant

810104 1 1 1 1 2 1 3 1 1 Benign

814265 2 1 1 1 2 1 1 1 1 Benign

814911 1 1 1 1 2 1 1 1 1 Benign

822829 7 6 4 8 10 10 9 5 3 Malignant

826923 1 1 1 1 2 1 1 1 1 Benign

830690 5 2 2 2 3 1 1 3 1 Benign

831268 1 1 1 1 1 1 1 3 1 Benign

832226 3 4 4 10 5 1 3 3 1 Malignant

832567 4 2 3 5 3 8 7 6 1 Malignant

836433 5 1 1 3 2 1 1 1 1 Benign

837082 2 1 1 1 2 1 3 1 1 Benign

846832 3 4 5 3 7 3 4 6 1 Benign

850831 2 7 10 10 7 10 4 9 4 Malignant

855524 1 1 1 1 2 1 2 1 1 Benign

857774 4 1 1 1 3 1 2 2 1 Benign

859164 5 3 3 1 3 3 3 3 3 Malignant


859350 8 10 10 7 10 10 7 3 8 Malignant

866325 8 10 5 3 8 4 4 10 3 Malignant

873549 10 3 5 4 3 7 3 5 3 Malignant

877291 6 10 10 10 10 10 8 10 10 Malignant

877943 3 10 3 10 6 10 5 1 4 Malignant

888169 3 2 2 1 4 3 2 1 1 Benign

888523 4 4 4 2 2 3 2 1 1 Benign

896404 2 1 1 1 2 1 3 1 1 Benign

897172 2 1 1 1 2 1 2 1 1 Benign

95719 6 10 10 10 8 10 7 10 7 Malignant

160296 5 8 8 10 5 10 8 10 3 Malignant

342245 1 1 3 1 2 1 1 1 1 Benign

428598 1 1 3 1 1 1 2 1 1 Benign

492561 4 3 2 1 3 1 2 1 1 Benign

493452 1 1 3 1 2 1 1 1 1 Benign

493452 4 1 2 1 2 1 2 1 1 Benign

521441 5 1 1 2 2 1 2 1 1 Benign

560680 3 1 2 1 2 1 2 1 1 Benign

636437 1 1 1 1 2 1 1 1 1 Benign

640712 1 1 1 1 2 1 2 1 1 Benign

654244 1 1 1 1 1 1 2 1 1 Benign

657753 3 1 1 4 3 1 2 2 1 Benign

685977 5 3 4 1 4 1 3 1 1 Benign

805448 1 1 1 1 2 1 1 1 1 Benign

846423 10 6 3 6 4 10 7 8 4 Malignant

1002504 3 2 2 2 2 1 3 2 1 Benign

1022257 2 1 1 1 2 1 1 1 1 Benign

1026122 2 1 1 1 2 1 1 1 1 Benign

1071084 3 3 2 2 3 1 1 2 3 Benign

1080233 7 6 6 3 2 10 7 1 1 Malignant

1114570 5 3 3 2 3 1 3 1 1 Benign

1114570 2 1 1 1 2 1 2 2 1 Benign

1116715 5 1 1 1 3 2 2 2 1 Benign

1131411 1 1 1 2 2 1 2 1 1 Benign

1151734 10 8 7 4 3 10 7 9 1 Malignant

1156017 3 1 1 1 2 1 2 1 1 Benign

1158247 1 1 1 1 1 1 1 1 1 Benign

1158405 1 2 3 1 2 1 2 1 1 Benign

1168278 3 1 1 1 2 1 2 1 1 Benign

1176187 3 1 1 1 2 1 3 1 1 Benign

1196263 4 1 1 1 2 1 1 1 1 Benign

1196475 3 2 1 1 2 1 2 2 1 Benign

1206314 1 2 3 1 2 1 1 1 1 Benign

1211265 3 10 8 7 6 9 9 3 8 Malignant

1213784 3 1 1 1 2 1 1 1 1 Benign

1223003 5 3 3 1 2 1 2 1 1 Benign

1223306 3 1 1 1 2 4 1 1 1 Benign

1223543 1 2 1 3 2 1 1 2 1 Benign

1229929 1 1 1 1 2 1 2 1 1 Benign

1231853 4 2 2 1 2 1 2 1 1 Benign

1234554 1 1 1 1 2 1 2 1 1 Benign

1236837 2 3 2 2 2 2 3 1 1 Benign

1237674 3 1 2 1 2 1 2 1 1 Benign

1238021 1 1 1 1 2 1 2 1 1 Benign

1238464 1 1 1 1 1 ? 2 1 1 Benign

1238633 10 10 10 6 8 4 8 5 1 Malignant

1238915 5 1 2 1 2 1 3 1 1 Benign

1238948 8 5 6 2 3 10 6 6 1 Malignant

1239232 3 3 2 6 3 3 3 5 1 Benign

1239347 8 7 8 5 10 10 7 2 1 Malignant

1239967 1 1 1 1 2 1 2 1 1 Benign

1240337 5 2 2 2 2 2 3 2 2 Benign

1253505 2 3 1 1 5 1 1 1 1 Benign

1255384 3 2 2 3 2 3 3 1 1 Benign

1257200 10 10 10 7 10 10 8 2 1 Malignant

1257648 4 3 3 1 2 1 3 3 1 Benign

1257815 5 1 3 1 2 1 2 1 1 Benign

1257938 3 1 1 1 2 1 1 1 1 Benign

1258549 9 10 10 10 10 10 10 10 1 Malignant

1258556 5 3 6 1 2 1 1 1 1 Benign

1266154 8 7 8 2 4 2 5 10 1 Malignant

1272039 1 1 1 1 2 1 2 1 1 Benign

1276091 2 1 1 1 2 1 2 1 1 Benign


1276091 1 3 1 1 2 1 2 2 1 Benign

1276091 5 1 1 3 4 1 3 2 1 Benign

1277629 5 1 1 1 2 1 2 2 1 Benign

1293439 3 2 2 3 2 1 1 1 1 Benign

1293439 6 9 7 5 5 8 4 2 1 Benign

1294562 10 8 10 1 3 10 5 1 1 Malignant

1295186 10 10 10 1 6 1 2 8 1 Malignant

527337 4 1 1 1 2 1 1 1 1 Benign

558538 4 1 3 3 2 1 1 1 1 Benign

566509 5 1 1 1 2 1 1 1 1 Benign

608157 10 4 3 10 4 10 10 1 1 Malignant

677910 5 2 2 4 2 4 1 1 1 Benign

734111 1 1 1 3 2 3 1 1 1 Benign

734111 1 1 1 1 2 2 1 1 1 Benign

780555 5 1 1 6 3 1 2 1 1 Benign

827627 2 1 1 1 2 1 1 1 1 Benign

1049837 1 1 1 1 2 1 1 1 1 Benign

1058849 5 1 1 1 2 1 1 1 1 Benign

1182404 1 1 1 1 1 1 1 1 1 Benign

1193544 5 7 9 8 6 10 8 10 1 Malignant

1201870 4 1 1 3 1 1 2 1 1 Benign

1202253 5 1 1 1 2 1 1 1 1 Benign

1227081 3 1 1 3 2 1 1 1 1 Benign

1230994 4 5 5 8 6 10 10 7 1 Malignant

1238410 2 3 1 1 3 1 1 1 1 Benign

1246562 10 2 2 1 2 6 1 1 2 Malignant

1257470 10 6 5 8 5 10 8 6 1 Malignant

1259008 8 8 9 6 6 3 10 10 1 Malignant

1266124 5 1 2 1 2 1 1 1 1 Benign

1267898 5 1 3 1 2 1 1 1 1 Benign

1268313 5 1 1 3 2 1 1 1 1 Benign

1268804 3 1 1 1 2 5 1 1 1 Benign

1276091 6 1 1 3 2 1 1 1 1 Benign

1280258 4 1 1 1 2 1 1 2 1 Benign

1293966 4 1 1 1 2 1 1 1 1 Benign

1296572 10 9 8 7 6 4 7 10 3 Malignant

1298416 10 6 6 2 4 10 9 7 1 Malignant

1299596 6 6 6 5 4 10 7 6 2 Malignant

1105524 4 1 1 1 2 1 1 1 1 Benign

1181685 1 1 2 1 2 1 2 1 1 Benign

1211594 3 1 1 1 1 1 2 1 1 Benign

1238777 6 1 1 3 2 1 1 1 1 Benign

1257608 6 1 1 1 1 1 1 1 1 Benign

1269574 4 1 1 1 2 1 1 1 1 Benign

1277145 5 1 1 1 2 1 1 1 1 Benign

1287282 3 1 1 1 2 1 1 1 1 Benign

1296025 4 1 2 1 2 1 1 1 1 Benign

1296263 4 1 1 1 2 1 1 1 1 Benign

1296593 5 2 1 1 2 1 1 1 1 Benign

1299161 4 8 7 10 4 10 7 5 1 Malignant

1301945 5 1 1 1 1 1 1 1 1 Benign

1302428 5 3 2 4 2 1 1 1 1 Benign

1318169 9 10 10 10 10 5 10 10 10 Malignant

474162 8 7 8 5 5 10 9 10 1 Malignant

787451 5 1 2 1 2 1 1 1 1 Benign

1002025 1 1 1 3 1 3 1 1 1 Benign

1070522 3 1 1 1 1 1 2 1 1 Benign

1073960 10 10 10 10 6 10 8 1 5 Malignant

1076352 3 6 4 10 3 3 3 4 1 Malignant

1084139 6 3 2 1 3 4 4 1 1 Malignant

1115293 1 1 1 1 2 1 1 1 1 Benign

1119189 5 8 9 4 3 10 7 1 1 Malignant

1133991 4 1 1 1 1 1 2 1 1 Benign

1142706 5 10 10 10 6 10 6 5 2 Malignant

1155967 5 1 2 10 4 5 2 1 1 Benign

1170945 3 1 1 1 1 1 2 1 1 Benign

1181567 1 1 1 1 1 1 1 1 1 Benign

1182404 4 2 1 1 2 1 1 1 1 Benign

1204558 4 1 1 1 2 1 2 1 1 Benign

1217952 4 1 1 1 2 1 2 1 1 Benign

1224565 6 1 1 1 2 1 3 1 1 Benign

1238186 4 1 1 1 2 1 2 1 1 Benign

1253917 4 1 1 2 2 1 2 1 1 Benign


1265899 4 1 1 1 2 1 3 1 1 Benign

1268766 1 1 1 1 2 1 1 1 1 Benign

1277268 3 3 1 1 2 1 1 1 1 Benign

1286943 8 10 10 10 7 5 4 8 7 Malignant

1295508 1 1 1 1 2 4 1 1 1 Benign

1297327 5 1 1 1 2 1 1 1 1 Benign

1297522 2 1 1 1 2 1 1 1 1 Benign

1298360 1 1 1 1 2 1 1 1 1 Benign

1299924 5 1 1 1 2 1 2 1 1 Benign

1299994 5 1 1 1 2 1 1 1 1 Benign

1304595 3 1 1 1 1 1 2 1 1 Benign

1306282 6 6 7 10 3 10 8 10 2 Malignant

1313325 4 10 4 7 3 10 9 10 1 Malignant

1320077 1 1 1 1 1 1 1 1 1 Benign

1320077 1 1 1 1 1 1 2 1 1 Benign

1320304 3 1 2 2 2 1 1 1 1 Benign

1330439 4 7 8 3 4 10 9 1 1 Malignant

333093 1 1 1 1 3 1 1 1 1 Benign

369565 4 1 1 1 3 1 1 1 1 Benign

412300 10 4 5 4 3 5 7 3 1 Malignant

672113 7 5 6 10 4 10 5 3 1 Malignant

749653 3 1 1 1 2 1 2 1 1 Benign

769612 3 1 1 2 2 1 1 1 1 Benign

769612 4 1 1 1 2 1 1 1 1 Benign

798429 4 1 1 1 2 1 3 1 1 Benign

807657 6 1 3 2 2 1 1 1 1 Benign

8233704 4 1 1 1 1 1 2 1 1 Benign

837480 7 4 4 3 4 10 6 9 1 Malignant

867392 4 2 2 1 2 1 2 1 1 Benign

869828 1 1 1 1 1 1 3 1 1 Benign

1043068 3 1 1 1 2 1 2 1 1 Benign

1056171 2 1 1 1 2 1 2 1 1 Benign

1061990 1 1 3 2 2 1 3 1 1 Benign

1113061 5 1 1 1 2 1 3 1 1 Benign

1116192 5 1 2 1 2 1 3 1 1 Benign

1135090 4 1 1 1 2 1 2 1 1 Benign

1145420 6 1 1 1 2 1 2 1 1 Benign

1158157 5 1 1 1 2 2 2 1 1 Benign

1171578 3 1 1 1 2 1 1 1 1 Benign

1174841 5 3 1 1 2 1 1 1 1 Benign

1184586 4 1 1 1 2 1 2 1 1 Benign

1186936 2 1 3 2 2 1 2 1 1 Benign

1197527 5 1 1 1 2 1 2 1 1 Benign

1222464 6 10 10 10 4 10 7 10 1 Malignant

1240603 2 1 1 1 1 1 1 1 1 Benign

1240603 3 1 1 1 1 1 1 1 1 Benign

1241035 7 8 3 7 4 5 7 8 2 Malignant

1287971 3 1 1 1 2 1 2 1 1 Benign

1289391 1 1 1 1 2 1 3 1 1 Benign

1299924 3 2 2 2 2 1 4 2 1 Benign

1306339 4 4 2 1 2 5 2 1 2 Benign

1313658 3 1 1 1 2 1 1 1 1 Benign

1313982 4 3 1 1 2 1 4 8 1 Benign

1321264 5 2 2 2 1 1 2 1 1 Benign

1321321 5 1 1 3 2 1 1 1 1 Benign

1321348 2 1 1 1 2 1 2 1 1 Benign

1321931 5 1 1 1 2 1 2 1 1 Benign

1321942 5 1 1 1 2 1 3 1 1 Benign

1321942 5 1 1 1 2 1 3 1 1 Benign

1328331 1 1 1 1 2 1 3 1 1 Benign

1328755 3 1 1 1 2 1 2 1 1 Benign

1331405 4 1 1 1 2 1 3 2 1 Benign

1331412 5 7 10 10 5 10 10 10 1 Malignant

1333104 3 1 2 1 2 1 3 1 1 Benign

1334071 4 1 1 1 2 3 2 1 1 Benign

1343068 8 4 4 1 6 10 2 5 2 Malignant

1343374 10 10 8 10 6 5 10 3 1 Malignant

1344121 8 10 4 4 8 10 8 2 1 Malignant

142932 7 6 10 5 3 10 9 10 2 Malignant

183936 3 1 1 1 2 1 2 1 1 Benign

324382 1 1 1 1 2 1 2 1 1 Benign

378275 10 9 7 3 4 2 7 7 1 Malignant

385103 5 1 2 1 2 1 3 1 1 Benign


690557 5 1 1 1 2 1 2 1 1 Benign

695091 1 1 1 1 2 1 2 1 1 Benign

695219 1 1 1 1 2 1 2 1 1 Benign

824249 1 1 1 1 2 1 3 1 1 Benign

871549 5 1 2 1 2 1 2 1 1 Benign

878358 5 7 10 6 5 10 7 5 1 Malignant

1107684 6 10 5 5 4 10 6 10 1 Malignant

1115762 3 1 1 1 2 1 1 1 1 Benign

1217717 5 1 1 6 3 1 1 1 1 Benign

1239420 1 1 1 1 2 1 1 1 1 Benign

1254538 8 10 10 10 6 10 10 10 1 Malignant

1261751 5 1 1 1 2 1 2 2 1 Benign

1268275 9 8 8 9 6 3 4 1 1 Malignant

1272166 5 1 1 1 2 1 1 1 1 Benign

1294261 4 10 8 5 4 1 10 1 1 Malignant

1295529 2 5 7 6 4 10 7 6 1 Malignant

1298484 10 3 4 5 3 10 4 1 1 Malignant

1311875 5 1 2 1 2 1 1 1 1 Benign

1315506 4 8 6 3 4 10 7 1 1 Malignant

1320141 5 1 1 1 2 1 2 1 1 Benign

1325309 4 1 2 1 2 1 2 1 1 Benign

1333063 5 1 3 1 2 1 3 1 1 Benign

1333495 3 1 1 1 2 1 2 1 1 Benign

1334659 5 2 4 1 1 1 1 1 1 Benign

1336798 3 1 1 1 2 1 2 1 1 Benign

1344449 1 1 1 1 1 1 2 1 1 Benign

1350568 4 1 1 1 2 1 2 1 1 Benign

1352663 5 4 6 8 4 1 8 10 1 Malignant

188336 5 3 2 8 5 10 8 1 2 Malignant

352431 10 5 10 3 5 8 7 8 3 Malignant

353098 4 1 1 2 2 1 1 1 1 Benign

411453 1 1 1 1 2 1 1 1 1 Benign

557583 5 10 10 10 10 10 10 1 1 Malignant

636375 5 1 1 1 2 1 1 1 1 Benign

736150 10 4 3 10 3 10 7 1 2 Malignant

803531 5 10 10 10 5 2 8 5 1 Malignant

822829 8 10 10 10 6 10 10 10 10 Malignant

1016634 2 3 1 1 2 1 2 1 1 Benign

1031608 2 1 1 1 1 1 2 1 1 Benign

1041043 4 1 3 1 2 1 2 1 1 Benign

1042252 3 1 1 1 2 1 2 1 1 Benign

1057067 1 1 1 1 1 ? 1 1 1 Benign

1061990 4 1 1 1 2 1 2 1 1 Benign

1073836 5 1 1 1 2 1 2 1 1 Benign

1083817 3 1 1 1 2 1 2 1 1 Benign

1096352 6 3 3 3 3 2 6 1 1 Benign

1140597 7 1 2 3 2 1 2 1 1 Benign

1149548 1 1 1 1 2 1 1 1 1 Benign

1174009 5 1 1 2 1 1 2 1 1 Benign

1183596 3 1 3 1 3 4 1 1 1 Benign

1190386 4 6 6 5 7 6 7 7 3 Malignant

1190546 2 1 1 1 2 5 1 1 1 Benign

1213273 2 1 1 1 2 1 1 1 1 Benign

1218982 4 1 1 1 2 1 1 1 1 Benign

1225382 6 2 3 1 2 1 1 1 1 Benign

1235807 5 1 1 1 2 1 2 1 1 Benign

1238777 1 1 1 1 2 1 1 1 1 Benign

1253955 8 7 4 4 5 3 5 10 1 Malignant

1257366 3 1 1 1 2 1 1 1 1 Benign

1260659 3 1 4 1 2 1 1 1 1 Benign

1268952 10 10 7 8 7 1 10 10 3 Malignant

1275807 4 2 4 3 2 2 2 1 1 Benign

1277792 4 1 1 1 2 1 1 1 1 Benign

1277792 5 1 1 3 2 1 1 1 1 Benign

1285722 4 1 1 3 2 1 1 1 1 Benign

1288608 3 1 1 1 2 1 2 1 1 Benign

1290203 3 1 1 1 2 1 2 1 1 Benign

1294413 1 1 1 1 2 1 1 1 1 Benign

1299596 2 1 1 1 2 1 1 1 1 Benign

1303489 3 1 1 1 2 1 2 1 1 Benign

1311033 1 2 2 1 2 1 1 1 1 Benign

1311108 1 1 1 3 2 1 1 1 1 Benign

1315807 5 10 10 10 10 2 10 10 10 Malignant


1318671 3 1 1 1 2 1 2 1 1 Benign

1319609 3 1 1 2 3 4 1 1 1 Benign

1323477 1 2 1 3 2 1 2 1 1 Benign

1324572 5 1 1 1 2 1 2 2 1 Benign

1324681 4 1 1 1 2 1 2 1 1 Benign

1325159 3 1 1 1 2 1 3 1 1 Benign

1326892 3 1 1 1 2 1 2 1 1 Benign

1330361 5 1 1 1 2 1 2 1 1 Benign

1333877 5 4 5 1 8 1 3 6 1 Benign

1334015 7 8 8 7 3 10 7 2 3 Malignant

1334667 1 1 1 1 2 1 1 1 1 Benign

1339781 1 1 1 1 2 1 2 1 1 Benign

1339781 4 1 1 1 2 1 3 1 1 Benign

13454352 1 1 3 1 2 1 2 1 1 Benign

1345452 1 1 3 1 2 1 2 1 1 Benign

1345593 3 1 1 3 2 1 2 1 1 Benign

1347749 1 1 1 1 2 1 1 1 1 Benign

1347943 5 2 2 2 2 1 1 1 2 Benign

1348851 3 1 1 1 2 1 3 1 1 Benign

1350319 5 7 4 1 6 1 7 10 3 Malignant

1350423 5 10 10 8 5 5 7 10 1 Malignant

1352848 3 10 7 8 5 8 7 4 1 Malignant

1353092 3 2 1 2 2 1 3 1 1 Benign

1354840 2 1 1 1 2 1 3 1 1 Benign

1354840 5 3 2 1 3 1 1 1 1 Benign

1355260 1 1 1 1 2 1 2 1 1 Benign

1365075 4 1 4 1 2 1 1 1 1 Benign

1365328 1 1 2 1 2 1 2 1 1 Benign

1368267 5 1 1 1 2 1 1 1 1 Benign

1368273 1 1 1 1 2 1 1 1 1 Benign

1368882 2 1 1 1 2 1 1 1 1 Benign

1369821 10 10 10 10 5 10 10 10 7 Malignant

1371026 5 10 10 10 4 10 5 6 3 Malignant

1371920 5 1 1 1 2 1 3 2 1 Benign

466906 1 1 1 1 2 1 1 1 1 Benign

466906 1 1 1 1 2 1 1 1 1 Benign

534555 1 1 1 1 2 1 1 1 1 Benign

536708 1 1 1 1 2 1 1 1 1 Benign

566346 3 1 1 1 2 1 2 3 1 Benign

603148 4 1 1 1 2 1 1 1 1 Benign

654546 1 1 1 1 2 1 1 1 8 Benign

654546 1 1 1 3 2 1 1 1 1 Benign

695091 5 10 10 5 4 5 4 4 1 Malignant

714039 3 1 1 1 2 1 1 1 1 Benign

763235 3 1 1 1 2 1 2 1 2 Benign

776715 3 1 1 1 3 2 1 1 1 Benign

841769 2 1 1 1 2 1 1 1 1 Benign

888820 5 10 10 3 7 3 8 10 2 Malignant

897471 4 8 6 4 3 4 10 6 1 Malignant

897471 4 8 8 5 4 5 10 4 1 Malignant


- Summary Create New Market SpaceDocument2 pagesSummary Create New Market SpaceMubashir JafriNo ratings yet

- Strategic Individual Assignment 3Document6 pagesStrategic Individual Assignment 3Thu Hiền KhươngNo ratings yet

- Managing Presales - Div C - Group - 2 - RFP - Cisco Systems Implementing ERPDocument11 pagesManaging Presales - Div C - Group - 2 - RFP - Cisco Systems Implementing ERPrammanohar22No ratings yet

- GodrejDocument7 pagesGodrejKETAN085No ratings yet

- Whitepaper PDFDocument44 pagesWhitepaper PDFVicky SusantoNo ratings yet

- Nestlé 4Ps Challenger CaseDocument7 pagesNestlé 4Ps Challenger CaseYash AgarwalNo ratings yet

- Session 4 & 5Document23 pagesSession 4 & 5Sanchit BatraNo ratings yet

- SBI Plans 'By-Invitation-Only' Branches in 20 CitiesDocument1 pageSBI Plans 'By-Invitation-Only' Branches in 20 CitiesSanjay Sinha0% (1)

- Bhanu Management Tata NanoDocument16 pagesBhanu Management Tata NanoAbhishek YadavNo ratings yet

- Netmeds SrsDocument3 pagesNetmeds SrsDirector Ishu ChawlaNo ratings yet

- Interim ReportDocument12 pagesInterim ReportSiddhant YadavNo ratings yet

- Cera Sanitaryware - CRISIL - Aug 2014Document32 pagesCera Sanitaryware - CRISIL - Aug 2014vishmittNo ratings yet

- ITC YippeeDocument18 pagesITC YippeeGurleen Singh Chandok50% (2)

- Scientific Management: Principles in Today'S Industries and OrganisationsDocument18 pagesScientific Management: Principles in Today'S Industries and OrganisationsVaidehi VRNo ratings yet

- Abstract On Six SigmaDocument1 pageAbstract On Six Sigmahardish_trivedi7005100% (2)

- Case Study Analysis: Chestnut FoodsDocument7 pagesCase Study Analysis: Chestnut FoodsNaman KohliNo ratings yet

- Business Opportunity of Zomato in BangladeshDocument2 pagesBusiness Opportunity of Zomato in Bangladeshanu sahaNo ratings yet

- Plagiarism - ReportDocument49 pagesPlagiarism - ReportravinyseNo ratings yet

- Quietly Brilliant HTCDocument2 pagesQuietly Brilliant HTCOxky Setiawan WibisonoNo ratings yet

- Faircent P2P Case AnalysisDocument5 pagesFaircent P2P Case AnalysisSiddharth KashyapNo ratings yet

- Business StrategyDocument23 pagesBusiness Strategywilsonngary100% (1)

- CiplaDocument10 pagesCiplaamrut9100% (1)

- Vendor Master RecordsDocument8 pagesVendor Master RecordsKishore NaiduNo ratings yet

- Cs08 Rfid MetroDocument8 pagesCs08 Rfid MetroMartha SanchezNo ratings yet

- Cipla LTD: Company AnalysisDocument13 pagesCipla LTD: Company AnalysisDante DonNo ratings yet

- BRM Taaza ThindiDocument14 pagesBRM Taaza ThindiGagandeep V NNo ratings yet

- Marketline ReportDocument41 pagesMarketline ReportDinesh KumarNo ratings yet

- Convolutional Neural NetworksDocument28 pagesConvolutional Neural NetworksGOLDEN AGE FARMSNo ratings yet

- Comparison of Decision Tree Methods For Breast Cancer DiagnosisDocument7 pagesComparison of Decision Tree Methods For Breast Cancer DiagnosisEmina AličkovićNo ratings yet

- Oops Level 1 Oops ElabDocument59 pagesOops Level 1 Oops ElabChellamuthu HaripriyaNo ratings yet

- Dataforth Elit PDFDocument310 pagesDataforth Elit PDFDougie ChanNo ratings yet

- Trust-In Machine Learning ModelsDocument11 pagesTrust-In Machine Learning Modelssmartin1970No ratings yet

- Electromagnetic TheoryDocument8 pagesElectromagnetic TheoryAlakaaa PromodNo ratings yet

- Further Maths Week 9 Notes For SS2 PDFDocument11 pagesFurther Maths Week 9 Notes For SS2 PDFsophiaNo ratings yet

- Stability of Tapered and Stepped Steel Columns With Initial ImperfectionsDocument10 pagesStability of Tapered and Stepped Steel Columns With Initial ImperfectionskarpagajothimuruganNo ratings yet

- Lesson 1: Exponential Notation: Student OutcomesDocument9 pagesLesson 1: Exponential Notation: Student OutcomesDiyames RamosNo ratings yet

- Practice Paper: I. Candidates Are Informed That Answer Sheet Comprises TwoDocument19 pagesPractice Paper: I. Candidates Are Informed That Answer Sheet Comprises TwoRashid Ibn AkbarNo ratings yet

- Application of Statistics in Education: Naeem Khalid ROLL NO 2019-2716Document13 pagesApplication of Statistics in Education: Naeem Khalid ROLL NO 2019-2716Naeem khalidNo ratings yet

- Physics 119A Midterm Solutions 1 PDFDocument3 pagesPhysics 119A Midterm Solutions 1 PDFHenry JurneyNo ratings yet

- ECE457 Pattern Recognition Techniques and Algorithms: Answer All QuestionsDocument3 pagesECE457 Pattern Recognition Techniques and Algorithms: Answer All Questionskrishna135No ratings yet

- Mine System Analysis - Transportation, Transshipment and Assignment ProblemsDocument87 pagesMine System Analysis - Transportation, Transshipment and Assignment ProblemsAli ÇakırNo ratings yet

- 1.5 Differentiation Techniques Power and Sum Difference RulesDocument4 pages1.5 Differentiation Techniques Power and Sum Difference RulesVhigherlearning100% (1)

- Tutorial Week 10 - Internal Bone RemodellingDocument13 pagesTutorial Week 10 - Internal Bone RemodellingHussam El'SheikhNo ratings yet

- Ebcs 5 PDFDocument244 pagesEbcs 5 PDFAbera Mulugeta100% (4)

- Chapter 08Document25 pagesChapter 08Eyasu Dejene DanaNo ratings yet

- B262u3p127as cq939p ExerciseDocument3 pagesB262u3p127as cq939p Exercisenavaneethan senthilkumarNo ratings yet

- Excel 2007 Lecture NotesDocument20 pagesExcel 2007 Lecture Notessmb_146100% (1)

- Structural Analysis of Transmission Structures: 1.problem DescriptionDocument69 pagesStructural Analysis of Transmission Structures: 1.problem DescriptionMahesh ANo ratings yet

- PHD ACODocument13 pagesPHD ACOPrasadYadavNo ratings yet

- Hanson CaseDocument11 pagesHanson Casegharelu10No ratings yet

- Problems Involving Sets2.0Document39 pagesProblems Involving Sets2.0Agnes AcapuyanNo ratings yet

- Noelle Combs Inquiry LessonDocument6 pagesNoelle Combs Inquiry LessonBrandi Hughes CaldwellNo ratings yet

- Figueiredo 2016Document22 pagesFigueiredo 2016Annisa RahmadayantiNo ratings yet

- M&SCE NotesPart IDocument65 pagesM&SCE NotesPart IHassane AmadouNo ratings yet

- SmalloscillationsDocument12 pagesSmalloscillationsrajbaxeNo ratings yet

- Project-Musical Math PDFDocument3 pagesProject-Musical Math PDFsunil makwanaNo ratings yet