
International Journal of Computer Trends and Technology (IJCTT) volume 4 Issue 8 August 2013

ISSN: 2231-2803 http://www.ijcttjournal.org Page 2556

Face Recognition Using Multi-Support Vector Machines

A. Swathi¹, Dr. R. Pugazendi²

Department of Computer Science,

K.S.Rangasamy College of Arts and Science, Tiruchengode, Tamilnadu, India

Abstract- In the face identification task, an image of an unknown person is matched against a gallery of known people. In the face verification task, the goal is to accept or deny the identity claimed by a person: given two face images, decide whether they show the same person. Previous research made a PLS-based method scalable to the gallery size by modifying the one-against-all approach to use a tree-based structure. At each internal node of the tree, a binary classifier based on PLS (Partial Least Squares) regression guides the search for the matching subject in the gallery. This structure substantially reduces the number of comparisons needed when a probe sample is matched against the gallery. Very accurate techniques exist for face identification in controlled environments, particularly when large numbers of samples are available for each face. As an enhancement, this paper proposes using an SVM (Support Vector Machine) classifier to recognize the person. Experimental results show that recognition accuracy and time efficiency increase when an SVM is used.

Keywords- Binary Classification, Discriminant Hyperplanes, Support Vector Machine, Partial Least Squares.

I. INTRODUCTION

Face recognition is an important biometric technique. Given the number of reported security incidents, biometrics-based techniques are becoming a very interesting authentication and identification method for IT security providers and, increasingly, for many sectors of civil society. Biometric identifiers are built from a unique physical or behavioral trait of an individual in order to automatically recognize or verify that individual's identity [18]. As such, they provide a solution to the problem of unequivocal identification of users and can therefore efficiently prevent identity theft and unauthorized access attacks. Recent research on face recognition has proposed a new method [2] [3] based on one-class Support Vector Machines [17], which is very promising and worth examining in more detail. Support Vector Machines, the learning approach originally developed by Vapnik et al. [19], are a powerful pattern recognition method, able to deal with sample sizes on the order of hundreds of thousands of instances [10]. They have been used to solve several practical problems, such as isolated handwritten digit recognition [14], speaker identification [13], face detection [11] and text categorization [9].

Support Vector Machines can also be used for multi-class classification [5], in which any new object is assigned to one of a predefined set of classes [4]. The proposed face recognition procedure builds on the work presented in [3], but also introduces a new component-based approach, in which the one-class SVM algorithm is applied to the main components of the human face (eyes, nose and mouth). The combined global and local approach performs better than the original algorithm. The paper also proposes a different feature extraction method in place of raw gray-level features and presents experimental results obtained with three different light normalization procedures.
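An SVM separates two classes, so identifying one of several people needs a multi-class decomposition; the abstract mentions the one-against-all scheme. A minimal pure-Python sketch of that decomposition follows. To keep it short and dependency-free, the binary learner is a perceptron, which is a stand-in and not an SVM; the function names and the toy data are hypothetical, and a trained SVM scorer with the same `(w, b)` shape could be plugged in instead.

```python
def train_perceptron(samples, targets, epochs=1000):
    """Stand-in binary learner (a perceptron, NOT an SVM); targets are -1 or +1.

    Returns (w, b) and stops early once a full epoch makes no mistakes.
    """
    w, b = [0.0] * len(samples[0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, t in zip(samples, targets):
            if t * (sum(wi * xi for wi, xi in zip(w, x)) + b) <= 0:
                w = [wi + t * xi for wi, xi in zip(w, x)]
                b += t
                mistakes += 1
        if mistakes == 0:
            break
    return w, b


def train_one_vs_rest(samples, labels, train_binary=train_perceptron):
    """One binary model per class: that class (+1) against all other classes (-1)."""
    return {
        c: train_binary(samples, [1 if y == c else -1 for y in labels])
        for c in sorted(set(labels))
    }


def predict_one_vs_rest(models, x):
    """Pick the class whose binary model gives the largest score <w . x> + b."""
    def score(c):
        w, b = models[c]
        return sum(wi * xi for wi, xi in zip(w, x)) + b
    return max(models, key=score)


X = [(0, 5), (1, 6), (5, 0), (6, 1), (-5, -5), (-6, -4)]  # hypothetical 2-D features
Y = ["a", "a", "b", "b", "c", "c"]
models = train_one_vs_rest(X, Y)
print(predict_one_vs_rest(models, (0.5, 5.5)))  # a
```

Each class in this toy set is linearly separable from the others, so every binary model converges and each training point is scored positive only by its own model.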

The paper is organized as follows: Section II reviews the PLS-based recognition procedure on which this work builds and identifies the problems it leaves open; Section IV presents the proposed SVM-based method and outlines its novelty with respect to prior work; Section V presents the experimental results; and Section VI concludes and outlines future work.

II. RELATED WORK

Partial Least Squares (PLS) has been widely adopted for face recognition. PLS is used in many forms of analysis, from neuroscience to computer graphics, because it is a simple, non-parametric method of extracting relevant information from confusing data sets. With minimal additional effort, PLS provides a roadmap for reducing a complex data set to a lower dimension to reveal the sometimes hidden, simplified structures that often underlie it.

PLS is an orthogonal transformation of the coordinate system in which the pixels are described. The main idea of principal component analysis is to find the vectors that best describe the distribution of face images within the entire image space; it aims to extract a subspace in which the variance is maximized. Recognition is performed by projecting a new image into the subspace spanned by the eigenfaces, called face space, and then classifying the face by comparing its position in face space with the positions of known individuals.
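The projection-and-comparison idea can be made concrete with a minimal pure-Python sketch. It assumes the mean face and an orthonormal set of eigenfaces are already available, and it uses Euclidean nearest-neighbour matching, which the text does not specify; `project`, `identify` and the toy data are hypothetical.

```python
import math


def project(image, mean_face, eigenfaces):
    """Coordinates of a flattened image in face space.

    image, mean_face: flattened N*N images (lists of pixel values, row by row).
    eigenfaces: orthonormal basis vectors of face space, each of length N*N.
    """
    centered = [p - m for p, m in zip(image, mean_face)]
    # Each coordinate is the dot product of the centered image with one eigenface.
    return [sum(c * e for c, e in zip(centered, face)) for face in eigenfaces]


def identify(probe, gallery, mean_face, eigenfaces):
    """Label of the gallery image whose face-space position is closest to the probe.

    gallery: list of (label, flattened image) pairs for known individuals.
    Distance is Euclidean (an assumption; the text does not name a metric).
    """
    w = project(probe, mean_face, eigenfaces)
    label, _ = min(
        ((name, math.dist(w, project(img, mean_face, eigenfaces))) for name, img in gallery),
        key=lambda pair: pair[1],
    )
    return label


# Toy usage with 2x2 images (4 pixels) and a hand-picked two-vector basis.
mean = [0, 0, 0, 0]
basis = [[1, 0, 0, 0], [0, 1, 0, 0]]
gallery = [("person_a", [10, 0, 3, 3]), ("person_b", [0, 10, 3, 3])]
print(identify([9, 1, 0, 0], gallery, mean, basis))  # person_a
```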

A two-dimensional face image of size N × N can also be considered as a one-dimensional vector of dimension N². The main idea of principal component analysis is to find the vectors that best account for the distribution of face images within the entire image space. These vectors define the subspace of face images, which is called face space. Each of these vectors is of length N², describes an N × N image,


and is a linear combination of the original face images. These vectors are the eigenvectors of the covariance matrix corresponding to the original face images, and because they are face-like in appearance, they are referred to as eigenfaces. The algorithm below, referred to in this paper as PLS, is used for classification.

Step 1: Each normalized training image, viewed as a point in N-dimensional space, is stored as a vector of size N. Let the normalized training face image set be $T = \{X_1, X_2, \ldots, X_P\}$, where each image is $X_i = (x_1, x_2, \ldots, x_N)^T$.

Step 2: Each normalized training face image is mean-centered by subtracting the mean face image from it. The mean image is represented as a column vector in which each scalar is the mean of the corresponding pixel over all training images:

$$\tilde{X}_i = X_i - \bar{X}$$

where the average of the training face image set is defined as

$$\bar{X} = \frac{1}{P} \sum_{i=1}^{P} X_i$$

Step 3: Once the training face images are centered, the next step is to create the eigenspace, which holds the reduced vectors of the mean-normalized training face images. The centered training images are combined into a data matrix of size N × P, where P is the number of training images and each column is a single image:

$$X = [\tilde{X}_1, \tilde{X}_2, \ldots, \tilde{X}_P]$$

Step 4: The column vectors are combined into a data matrix, which is multiplied by its transpose to create the covariance matrix:

$$\Omega = X X^T$$

Step 5: The eigenvalues and corresponding eigenvectors of the covariance matrix are computed using the Jacobi transformation:

$$\Omega V_i = \lambda_i V_i$$

where $V_i$ is the eigenvector associated with the eigenvalue $\lambda_i$.

Step 6: Order the eigenvectors $V_i$ according to their corresponding eigenvalues $\lambda_i$ from high to low, keeping only those with non-zero eigenvalues. This matrix of eigenvectors is the eigenspace:

$$V = \{V_1, V_2, \ldots, V_P\}$$
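Steps 1 to 6 can be sketched in dependency-free Python as follows. The eigen-decomposition uses the cyclic Jacobi eigenvalue method, which is our reading of the "Jacobi transformation" in Step 5. The sketch forms Ω = XXᵀ as in Step 4 and rebuilds a full rotation matrix for every rotation, so it is only meant for toy sizes; for real images one would call a library routine (for example `numpy.linalg.eigh`) and, as in the eigenface literature, typically work with the smaller P × P matrix XᵀX. The helper names and the 1e-9 threshold for treating an eigenvalue as zero are assumptions.

```python
import math


def matmul(x, y):
    """Product of two matrices given as lists of rows."""
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def transpose(x):
    return [list(col) for col in zip(*x)]


def jacobi_eigen(a, tol=1e-12, max_sweeps=50):
    """Eigenvalues and eigenvectors of a symmetric matrix by cyclic Jacobi rotations.

    Returns (values, vectors) with vectors[k] the unit eigenvector for values[k].
    """
    n = len(a)
    a = [[float(v) for v in row] for row in a]                  # working copy
    v = [[float(i == j) for j in range(n)] for i in range(n)]   # accumulated rotations
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle that makes the rotated entry (p, q) zero.
                phi = 0.5 * math.atan2(2 * a[p][q], a[q][q] - a[p][p])
                c, s = math.cos(phi), math.sin(phi)
                rot = [[float(i == j) for j in range(n)] for i in range(n)]
                rot[p][p] = rot[q][q] = c
                rot[p][q], rot[q][p] = s, -s
                a = matmul(transpose(rot), matmul(a, rot))      # a <- R^T a R
                v = matmul(v, rot)
    return [a[i][i] for i in range(n)], transpose(v)           # rows of v^T = columns of v


def eigenspace(images):
    """Steps 1-6 for a list of P flattened training images of N pixels each."""
    p, n = len(images), len(images[0])
    mean = [sum(img[k] for img in images) / p for k in range(n)]        # Step 2: mean face
    centered = [[x - m for x, m in zip(img, mean)] for img in images]   # Step 2: centering
    data = transpose(centered)                                          # Step 3: N x P
    omega = matmul(data, transpose(data))                               # Step 4: X X^T
    values, vectors = jacobi_eigen(omega)                               # Step 5
    order = sorted(range(n), key=lambda i: values[i], reverse=True)     # Step 6: high to low
    keep = [i for i in order if abs(values[i]) > 1e-9]                  # drop zero eigenvalues
    return mean, [values[i] for i in keep], [vectors[i] for i in keep]


mean, values, vectors = eigenspace([[1, 1], [3, 3], [2, 2]])
print(values)  # approximately [4.0]
```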

A. Problem Identification

The following problems need to be addressed:

1. Handling the problem of insufficient training data.

2. Achieving high performance when only a single sample per subject is available.

3. Reducing the number of comparisons made for verification.

IV. PROPOSED METHOD

Support vector machines are supervised learning models with associated learning algorithms that analyze data and recognize patterns. They are used for classification and regression analysis. An SVM takes a set of input data and predicts, for each given input, which of two possible classes forms the output, making it a non-probabilistic binary linear classifier.

An SVM maps input vectors to a higher-dimensional vector space where an optimal hyperplane is constructed. Among the many separating hyperplanes available, only one maximizes the distance between itself and the nearest data vectors of each category. This hyperplane, which maximizes the margin, is called the optimal separating hyperplane, and the margin is defined as the sum of the distances from the hyperplane to the closest training vectors of each category.
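The margin definition above can be checked with a small sketch that measures, for a given hyperplane ⟨w · x⟩ + b = 0, the distance to the closest sample of each class and adds the two. The toy data set and the candidate hyperplanes are hypothetical, and the function only evaluates a given hyperplane; it does not search for the optimal one.

```python
import math


def margin(w, b, samples, labels):
    """Margin of the hyperplane <w . x> + b = 0 on labelled samples (labels +1/-1).

    Distance from the hyperplane to the closest positive sample plus the distance
    to the closest negative sample. Returns None if the hyperplane does not
    strictly separate the two classes.
    """
    norm = math.sqrt(sum(wi * wi for wi in w))
    signed = [(sum(wi * xi for wi, xi in zip(w, x)) + b) / norm for x in samples]
    pos = min(d for d, y in zip(signed, labels) if y == 1)
    neg = min(-d for d, y in zip(signed, labels) if y == -1)
    if pos <= 0 or neg <= 0:
        return None
    return pos + neg


X = [(1, 1), (2, 2), (-1, -1), (-2, -2)]
Y = [1, 1, -1, -1]
print(margin((1, 1), 0, X, Y))   # about 2.828, i.e. 2 * sqrt(2)
print(margin((1, 0), 0, X, Y))   # 2.0: separates, but with a smaller margin
print(margin((1, -1), 0, X, Y))  # None: every sample lies on this hyperplane
```

The margin does not depend on the scale of (w, b). For a canonical hyperplane, scaled so that yᵢ f(xᵢ) = 1 on the closest samples, it equals 2/‖w‖; here w = (0.5, 0.5), b = 0 gives 2/√0.5 ≈ 2.828, the largest margin achievable on this toy set.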

Alternatively, an SVM-based face detector can be designed. This work extends the idea of [6, 7] to the multi-view situation based on pose estimation. It is interesting to notice that:

1. While the eigenface method models the probability density of face patterns, the SVM-based method only models the boundary between faces and non-faces;

2. Because training reduces to a convex quadratic programming problem, the SVM-based method is guaranteed to converge to the global optimum;

3. The solution is expressed directly by a subset of important training examples called support vectors.

B. Linear case

Consider a set of $l$ vectors $\{x_i\}$, $x_i \in \mathbb{R}^n$, $1 \le i \le l$, representing input samples, and a set of labels $\{y_i\}$, $y_i \in \{-1, +1\}$, that divide the input samples into two classes, positive and negative. If the two classes are linearly separable, there exists a separating hyperplane $(w, b)$ defining the function

$$f(x) = \langle w \cdot x \rangle + b \qquad (1)$$

and $\operatorname{sgn}(f(x))$ shows on which side of the hyperplane $x$ lies, in other words, the class of $x$. The vector $w$ of the separating hyperplane can be expressed as a linear combination of the $x_i$ (often called the dual representation of $w$) with weights $\alpha_i$:

$$w = \sum_{i=1}^{l} \alpha_i y_i x_i \qquad (2)$$

The dual representation of the decision function $f(x)$ is then:

$$f(x) = \sum_{i=1}^{l} \alpha_i y_i \langle x_i \cdot x \rangle + b \qquad (3)$$

Training a linear SVM means finding the embedding strengths $\{\alpha_i\}$ and offset $b$ such that the hyperplane $(w, b)$ separates positive samples from negative ones with a maximal margin. Notice that not all input vectors $\{x_i\}$ are necessarily used in the dual representation of $w$; the vectors $x_i$ that have weight $\alpha_i > 0$ and thus form $w$ are called support vectors.
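Equations (2) and (3), and the definition of support vectors, can be checked numerically with a short pure-Python sketch. The α values are worked out by hand for a hypothetical four-point toy set (they satisfy Σ αᵢ yᵢ = 0 and give yᵢ f(xᵢ) = 1 on the two closest points); they are not produced by a trainer here.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def dual_weights(alphas, labels, samples):
    """Equation (2): w = sum_i alpha_i * y_i * x_i."""
    return [sum(a * y * x[k] for a, y, x in zip(alphas, labels, samples))
            for k in range(len(samples[0]))]


def decision_dual(alphas, labels, samples, b, x):
    """Equation (3): f(x) = sum_i alpha_i * y_i * <x_i . x> + b."""
    return sum(a * y * dot(xi, x) for a, y, xi in zip(alphas, labels, samples)) + b


def support_vectors(alphas, samples, eps=1e-8):
    """Training vectors whose weight alpha_i is (numerically) greater than zero."""
    return [x for a, x in zip(alphas, samples) if a > eps]


X = [(1, 1), (2, 2), (-1, -1), (-2, -2)]
Y = [1, 1, -1, -1]
alphas, b = [0.25, 0.0, 0.25, 0.0], 0.0  # hand-derived max-margin solution for this toy set
w = dual_weights(alphas, Y, X)            # [0.5, 0.5]
x_new = (3, 1)
print(dot(w, x_new) + b, decision_dual(alphas, Y, X, b, x_new))  # 2.0 2.0
print(support_vectors(alphas, X))         # [(1, 1), (-1, -1)]
```

The primal form (1) with w from (2) and the dual form (3) give the same decision value, while only the two support vectors contribute to it.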

C. Non-linear case

In real-life problems, positive and negative samples are rarely linearly separable. Non-linear support vector classifiers map the input space $X$ into a feature space $F$ via a usually non-linear map $\phi: X \to F$, $x \mapsto \phi(x)$, and solve the linear separation problem in the feature space by finding the weights $\alpha_i$ of the dual expression of the separating hyperplane's vector $w$:

$$w = \sum_{i=1}^{l} \alpha_i y_i \phi(x_i) \qquad (4)$$

while the decision function $f(x)$ takes the form

$$f(x) = \sum_{i=1}^{l} \alpha_i y_i \langle \phi(x_i) \cdot \phi(x) \rangle + b \qquad (5)$$
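The link between the feature map in (4)-(5) and a kernel can be checked on a standard textbook example: for 2-D inputs, the explicit map φ(x) = (x₁², √2·x₁x₂, x₂²) satisfies ⟨φ(x) · φ(y)⟩ = ⟨x · y⟩², the homogeneous degree-2 polynomial kernel. The sketch evaluates (5) both ways; the weights, samples and offset are hypothetical values chosen only for the comparison.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def phi(x):
    """Explicit feature map R^2 -> R^3 for the homogeneous degree-2 polynomial kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def poly2_kernel(x, y):
    """K(x, y) = <x . y>^2, equal to <phi(x) . phi(y)> without forming phi."""
    return dot(x, y) ** 2


def f_feature_space(alphas, labels, samples, b, x):
    """Equation (5) evaluated with the explicit map phi."""
    return sum(a * y * dot(phi(xi), phi(x)) for a, y, xi in zip(alphas, labels, samples)) + b


def f_kernel(alphas, labels, samples, b, x):
    """The same decision value, with the kernel replacing the dot product in F."""
    return sum(a * y * poly2_kernel(xi, x) for a, y, xi in zip(alphas, labels, samples)) + b


# Hypothetical weights and samples, only to compare the two evaluations.
samples, labels, alphas, b = [(1, 2), (2, 0)], [1, -1], [0.5, 1.0], 0.1
print(f_feature_space(alphas, labels, samples, b, (1, 1)))
print(f_kernel(alphas, labels, samples, b, (1, 1)))
```

Both calls agree up to floating-point rounding; the kernel version never builds the three-dimensional vectors, which is what makes high-dimensional feature spaces practical.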

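Usually $F$ is high-dimensional, so $\phi$ is not computed explicitly; instead, a kernel function $K(x, y)$ computes the dot product in $F$, $K(x, y) = \langle \phi(x) \cdot \phi(y) \rangle$. The decision function (5) can then be computed using only the kernel function, and it can also be shown that finding the maximum-margin separating hyperplane is equivalent to solving the following optimization problem:

$$\max_{\alpha} \; \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i,j=1}^{l} \alpha_i \alpha_j y_i y_j K(x_i, x_j) \qquad (6)$$

$$\text{subject to} \quad \sum_{i=1}^{l} \alpha_i y_i = 0, \qquad 0 \le \alpha_i \le C, \quad 1 \le i \le l$$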

Here the positive parameter $C$ sets the trade-off between margin maximization and training-error minimization. The kernel function $K$ avoids working directly in the feature space $F$. After solving (6), the offset $b$ can be chosen so that the margins between the hyperplane and the two classes of sample images are equal, which gives the decision function

$$\operatorname{sgn}(f(x)) = \operatorname{sgn}\left( \sum_{i=1}^{l} \alpha_i y_i K(x_i, x) + b \right) \qquad (7)$$

Commonly used kernels include polynomial kernels $K(x, y) = (\langle x \cdot y \rangle + 1)^d$ and the Gaussian kernel $K(x, y) = \exp(-\|x - y\|^2 / 2\sigma^2)$. This implementation uses the Gaussian kernel; however, one interesting direction for further research is how to choose an optimal kernel for the given input data.
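Problem (6) and decision function (7) can be illustrated with a compact pure-Python sketch using a Gaussian kernel K(x, y) = exp(-‖x − y‖² / 2σ²). It uses a simplified variant of Platt's Sequential Minimal Optimization (SMO), which repeatedly optimizes two α's at a time while keeping Σ αᵢ yᵢ = 0 and 0 ≤ αᵢ ≤ C. It is not the authors' implementation: the partner-selection rule (scan in order), the tolerance, C = 10 and σ = 1 are illustrative assumptions, and it is suitable only for small training sets; a production system would rely on an established solver such as LIBSVM.

```python
import math


def gaussian_kernel(x, y, sigma=1.0):
    """Gaussian kernel K(x, y) = exp(-||x - y||^2 / (2 sigma^2))."""
    sq = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq / (2 * sigma ** 2))


def train_svm(samples, labels, kernel, C=10.0, tol=1e-3, max_passes=1000):
    """Approximately solve the dual problem (6) with a simplified SMO loop.

    labels must be -1 or +1. Returns (alphas, b).
    """
    m = len(samples)
    K = [[kernel(samples[i], samples[j]) for j in range(m)] for i in range(m)]
    alphas, b = [0.0] * m, 0.0

    def error(i):  # f(x_i) - y_i with the current alphas and b
        return sum(alphas[k] * labels[k] * K[k][i] for k in range(m)) + b - labels[i]

    for _ in range(max_passes):
        changed = 0
        for i in range(m):
            e_i = error(i)
            r = labels[i] * e_i
            if not ((r < -tol and alphas[i] < C) or (r > tol and alphas[i] > 0)):
                continue  # alpha_i already satisfies the KKT conditions
            for j in range(m):  # try partners in order until one makes progress
                if j == i:
                    continue
                e_j = error(j)
                a_i, a_j = alphas[i], alphas[j]
                # Bounds that keep 0 <= alpha <= C and sum(alpha * y) unchanged.
                if labels[i] != labels[j]:
                    lo, hi = max(0.0, a_j - a_i), min(C, C + a_j - a_i)
                else:
                    lo, hi = max(0.0, a_i + a_j - C), min(C, a_i + a_j)
                eta = 2 * K[i][j] - K[i][i] - K[j][j]
                if lo >= hi or eta >= 0:
                    continue
                new_j = min(hi, max(lo, a_j - labels[j] * (e_i - e_j) / eta))
                if abs(new_j - a_j) < 1e-7:
                    continue
                new_i = a_i + labels[i] * labels[j] * (a_j - new_j)
                d_i, d_j = new_i - a_i, new_j - a_j
                b1 = b - e_i - labels[i] * d_i * K[i][i] - labels[j] * d_j * K[i][j]
                b2 = b - e_j - labels[i] * d_i * K[i][j] - labels[j] * d_j * K[j][j]
                alphas[i], alphas[j] = new_i, new_j
                b = b1 if 0 < new_i < C else b2 if 0 < new_j < C else (b1 + b2) / 2
                changed += 1
                break
        if changed == 0:  # a full pass without updates: stop
            break
    return alphas, b


def predict(x, samples, labels, alphas, b, kernel):
    """Decision function (7): sgn(sum_i alpha_i y_i K(x_i, x) + b)."""
    s = sum(a * y * kernel(xi, x) for a, y, xi in zip(alphas, labels, samples)) + b
    return 1 if s >= 0 else -1


X = [(1, 1), (2, 2), (-1, -1), (-2, -2)]  # hypothetical toy data
Y = [1, 1, -1, -1]
alphas, b = train_svm(X, Y, gaussian_kernel)
print(predict((1.5, 1.5), X, Y, alphas, b, gaussian_kernel))  # 1
```

Every update preserves the equality constraint of (6) exactly, because the change in αᵢyᵢ cancels the change in αⱼyⱼ.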

V. EXPERIMENTAL RESULTS

In order to demonstrate the effectiveness of SVMs for object recognition under varying illumination conditions, a number of experiments were conducted using the face database. Face recognition under varying illumination is known to be a very difficult task, because variations due to changes in illumination are usually larger than those due to changes in face identity [13]. The performance of generative methods for face recognition [8, 1] implies that the assumption that there is no intersection among illumination cones is approximately satisfied.

Fig. 1. Component-based approach: detection and training

Fig. 1 presents the component-based detection and training approach and the features it represents.

D. Face image database

The database consists of images of 10 individuals in 9 poses, acquired under 64 different point light sources and an ambient light: 5850 images in total (10 × 9 × 65). The coordinates of the left eye, right eye and mouth are provided for images in the frontal pose, and the coordinates of the face center for images in the other poses. Each image is assigned to one of 5 subsets according to the angle between the direction of the light source and the optical axis of the camera.
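The assignment of images to the five subsets can be sketched as below. Two assumptions are made that the text does not state: the light direction is given as azimuth and elevation measured from the camera axis, so the angle to the optical axis is arccos(cos(azimuth) · cos(elevation)); and the subset boundaries of 12°, 25°, 50° and 77° are the ones commonly quoted for the Yale Face Database B, whose size (10 subjects, 9 poses, 64 + 1 lighting conditions) matches the description above.

```python
import math

# Assumed upper bounds (degrees) of subsets 1-4; larger angles fall in subset 5.
# These values are NOT given in the text; they follow the usual Yale Face Database B split.
SUBSET_BOUNDS = (12.0, 25.0, 50.0, 77.0)


def light_angle(azimuth_deg, elevation_deg):
    """Angle in degrees between the light direction and the camera's optical axis.

    Assumes azimuth/elevation are measured from the optical axis, so the axis
    component of the unit light direction is cos(azimuth) * cos(elevation).
    """
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    cos_angle = math.cos(az) * math.cos(el)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def subset_of(azimuth_deg, elevation_deg, bounds=SUBSET_BOUNDS):
    """Subset number (1 .. len(bounds) + 1) for a light source direction."""
    angle = light_angle(azimuth_deg, elevation_deg)
    for number, upper in enumerate(bounds, start=1):
        if angle <= upper:
            return number
    return len(bounds) + 1


print([subset_of(az, 0) for az in (0, 20, 45, 60, 85)])  # [1, 2, 3, 4, 5]
```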

Fig. 2. Illumination images in the database for a single person (16 illumination images)

Fig. 2 shows the different illumination conditions in the image database.

Fig. 3. Input image

Fig. 4. Enhanced image


Figs. 3 and 4 show the input image and the enhanced image. After image enhancement, features such as HOG, LBP, colour and Gabor features are extracted.
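As an example of one of the listed descriptors, the basic local binary pattern (LBP) operator can be sketched as follows. The text does not say which LBP variant is used; this is the original 3 × 3, 8-neighbour version with a 256-bin histogram, and the clockwise bit order starting at the top-left neighbour is an arbitrary choice. HOG, colour and Gabor features are not shown.

```python
# Neighbour offsets (row, col), clockwise from the top-left pixel; bit k <-> OFFSETS[k].
OFFSETS = ((-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1))


def lbp_code(img, r, c):
    """8-bit LBP code of pixel (r, c): bit k is set if neighbour k >= the centre."""
    centre = img[r][c]
    code = 0
    for bit, (dr, dc) in enumerate(OFFSETS):
        if img[r + dr][c + dc] >= centre:
            code |= 1 << bit
    return code


def lbp_histogram(img):
    """256-bin histogram of LBP codes over all interior pixels (border pixels skipped)."""
    hist = [0] * 256
    for r in range(1, len(img) - 1):
        for c in range(1, len(img[0]) - 1):
            hist[lbp_code(img, r, c)] += 1
    return hist


img = [[9, 1, 1], [1, 5, 1], [1, 1, 1]]
print(lbp_code(img, 1, 1))  # 1: only the top-left neighbour (9) is >= the centre (5)
```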

Fig. 5. Non-overlapping image: left eye

Fig. 6. Non-overlapping image: right eye

Fig. 7. Non-overlapping image: left nose and mouth

Fig. 8. Non-overlapping image: right nose and mouth

The non-overlapping left and right eye images are shown in Figs. 5 and 6, and the non-overlapping left and right nose-and-mouth images in Figs. 7 and 8. These figures display the HOG, LBP, colour and Gabor features.
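The split into non-overlapping regions can be sketched as below. The actual region boundaries are not given in the text, so the layout here is hypothetical: the upper half of an aligned face image is treated as the eye area and the lower half as the nose-and-mouth area, each cut into the left and right half of the image. `feature_fn` is a placeholder for any per-region descriptor, such as an LBP or HOG histogram.

```python
REGION_ORDER = ("left_eye", "right_eye", "left_nose_mouth", "right_nose_mouth")


def split_regions(img):
    """Split a face image (list of pixel rows) into four non-overlapping regions.

    Hypothetical layout: upper half -> eye regions, lower half -> nose and mouth
    regions, each divided into the left and right half of the image.
    """
    h, w = len(img), len(img[0])
    top, bottom = img[: h // 2], img[h // 2:]

    def halves(rows):
        return [row[: w // 2] for row in rows], [row[w // 2:] for row in rows]

    left_eye, right_eye = halves(top)
    left_nm, right_nm = halves(bottom)
    return {
        "left_eye": left_eye,
        "right_eye": right_eye,
        "left_nose_mouth": left_nm,
        "right_nose_mouth": right_nm,
    }


def region_features(img, feature_fn):
    """Concatenate feature_fn(region) over the four regions in a fixed order."""
    regions = split_regions(img)
    features = []
    for name in REGION_ORDER:
        features.extend(feature_fn(regions[name]))
    return features


img = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 "image"
# Stand-in feature: the pixel sum of each region.
print(region_features(img, lambda region: [sum(map(sum, region))]))  # [10, 18, 42, 50]
```

Because the four regions do not overlap and together cover the whole image, every pixel contributes to exactly one region's features.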

Table 1 shows the classification results obtained with the SVM for identifying each person.

TABLE 1: CLASSIFICATION USING SVM

| | Person 1 | Person 2 | Person 3 | Person 4 | Person 5 |
|---|---|---|---|---|---|
| Person 1 | 17 | 0 | 0 | 0 | 0 |
| Person 2 | 0 | 17 | 0 | 0 | 0 |
| Person 3 | 0 | 0 | 17 | 0 | 0 |
| Person 4 | 0 | 0 | 0 | 17 | 0 |
| Person 5 | 0 | 0 | 0 | 0 | 17 |

Table 2 shows the classification results obtained with PLS for identifying each person.

TABLE 2: CLASSIFICATION USING PLS

| | Person 1 | Person 2 | Person 3 | Person 4 | Person 5 |
|---|---|---|---|---|---|
| Person 1 | 17 | 0 | 1 | 0 | 0 |
| Person 2 | 0 | 17 | 0 | 0 | 0 |
| Person 3 | 0 | 0 | 15 | 0 | 0 |
| Person 4 | 0 | 0 | 0 | 17 | 0 |
| Person 5 | 0 | 0 | 1 | 0 | 17 |
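The counts in Tables 1 and 2 can be summarized as accuracy figures with a few lines of Python. The sketch copies the two tables as printed; reading columns as the true person is an assumption (it is consistent with every column of Table 2 summing to 17), whereas the overall accuracy, the diagonal divided by the total, does not depend on that choice.

```python
def overall_accuracy(matrix):
    """Share of all samples that lie on the diagonal of a square confusion matrix."""
    correct = sum(matrix[k][k] for k in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


def per_person_recall(matrix):
    """Recall per person, assuming column k holds the samples of true person k."""
    n = len(matrix)
    return [matrix[k][k] / sum(matrix[r][k] for r in range(n)) for k in range(n)]


TABLE_1_SVM = [
    [17, 0, 0, 0, 0],
    [0, 17, 0, 0, 0],
    [0, 0, 17, 0, 0],
    [0, 0, 0, 17, 0],
    [0, 0, 0, 0, 17],
]
TABLE_2_PLS = [
    [17, 0, 1, 0, 0],
    [0, 17, 0, 0, 0],
    [0, 0, 15, 0, 0],
    [0, 0, 0, 17, 0],
    [0, 0, 1, 0, 17],
]

print(overall_accuracy(TABLE_1_SVM))   # 1.0 (85 of 85 on the diagonal)
print(overall_accuracy(TABLE_2_PLS))   # 83 / 85, about 0.976
print(per_person_recall(TABLE_2_PLS))  # person 3: 15 / 17, the others 1.0
```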


Fig. 9. SVM classification (bar chart; x-axis: Persons 1 to 5; y-axis: Illumination, 0 to 20)

Fig. 10. PLS classification (bar chart; x-axis: Persons 1 to 5; y-axis: Illumination, 0 to 20)

The classification charts of SVM and PLS are shown in Figs. 9 and 10. They show that SVM achieves better accuracy than PLS.

Fig. 11. Comparison of PLS and SVM accuracy (bar chart; y-axis: accuracy, 97 to 99)

Fig. 11 shows the accuracy of SVM and PLS. The experimental results show that SVM classification outperforms PLS classification, with an SVM classification accuracy of 98.7%.

Comparing SVM with PLS, Table 1 shows the classification of persons: the SVM makes no misclassification for person 3, whereas Table 2 shows misclassifications for person 3 with PLS. SVM therefore achieves higher accuracy than PLS.

VI. CONCLUSIONS AND FUTURE WORK

This paper discussed the problem of object recognition under varying illumination conditions and proposed an efficient support vector machine approach to face recognition based on the wavelet transform. The existing results show that face recognition performance is relatively unaffected by transformations of the face, including translation, small rotation and illumination changes. The SVM algorithm is used because it is a basic and straightforward classification method. It provides efficient results and requires less storage.

In the present study, the experiments are conducted using face images. However, the proposed method should be applicable to non-Lambertian objects when the illumination cones of the objects are approximated by low-dimensional subspaces. Therefore, future work will confirm the effectiveness of the SVM method for objects with various reflectance properties. Future efforts will also focus on recognizing face images in dynamic video sequences and on real-time tasks using fuzzy logic.

REFERENCES

[1] R. Basri and D. Jacobs, Lambertian reflectance and linear subspaces, In Proc. IEEE ICCV 2001, pp. 383-390, 2001.

[2] P. Belhumeur, J. Hespanha, and D. Kriegman, Eigenfaces vs. Fisherfaces: recognition using class specific linear projection, IEEE Trans. PAMI, 19(7), pp. 711-720, 1997.

[3] P. Belhumeur and D. Kriegman, What is the set of images of an object under all possible lighting conditions?, Intl. J. Computer Vision, 28(3), pp. 245-260, 1998.

[4] K. Bennett and E. Bredensteiner, Duality and geometry in SVM classifiers, In Proc. Intl. Conf. Machine Learning (ICML 2000), pp. 65-72, 2000.

[5] R. Brunelli and T. Poggio, Face recognition: features versus templates, IEEE Trans. PAMI, 15(10), pp. 1042-1052, 1993.

[6] H. Chen, P. Belhumeur, and D. Jacobs, In search of illumination invariants, In Proc. IEEE CVPR 2000, pp. 254-261, 2000.

[7] R. Duda, P. Hart, and D. Stork, Pattern Classification, John Wiley & Sons, New York, 2001.

[8] A. Georghiades, P. Belhumeur, and D. Kriegman, From few to many: illumination cone models for face recognition under variable lighting and pose, IEEE Trans. PAMI, 23(6), pp. 643-660, 2001.

[9] G. Guo, S. Li, and K. Chan, Face recognition by support vector machines, In Proc. IEEE FG 2000, pp. 195-201, 2000.

[10] P. Hallinan, A low-dimensional representation of human faces for arbitrary lighting conditions, In Proc. IEEE CVPR 94, pp. 995-999, 1994.

[11] B. Heisele, P. Ho, and T. Poggio, Face recognition with support vector machines: global versus component-based approach, In Proc. IEEE ICCV 2001, pp. 688-694, 2001.

[12] Y. Moses, Y. Adini, and S. Ullman, Face recognition: the problem of compensating for changes in illumination direction, In Proc. ECCV 94, pp. 286-296, 1994.

[13] H. Murase and S. Nayar, Visual learning and recognition of 3-D objects from appearance, Intl. J. Computer Vision, 14(1), pp. 5-24, 1995.

[14] P. Phillips, Support vector machines applied to face recognition, Advances in Neural Information Processing Systems 11, pp. 803-809, 1998.

[15] M. Pontil and A. Verri, Support vector machines for 3D object recognition, IEEE Trans. PAMI, 20(6), pp. 637-646, 1998.

[16] R. Ramamoorthi and P. Hanrahan, On the relationship between radiance and irradiance: determining the illumination from images of a convex Lambertian object, J. Opt. Soc. Am. A, 18(10), pp. 2448-2459, 2001.

[17] R. Ramamoorthi and P. Hanrahan, A signal-processing framework for inverse rendering, In Proc. ACM SIGGRAPH 2001, pp. 117-128, 2001.

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5795)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1091)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Azure Sentinel Deployment Best PracticesDocument71 pagesAzure Sentinel Deployment Best PracticesKoushikKc Chatterjee100% (1)

- Mitsubishi WD-73727 Distortion Flicker SolvedDocument15 pagesMitsubishi WD-73727 Distortion Flicker SolvedmlminierNo ratings yet

- Design, Development and Performance Evaluation of Solar Dryer With Mirror Booster For Red Chilli (Capsicum Annum)Document7 pagesDesign, Development and Performance Evaluation of Solar Dryer With Mirror Booster For Red Chilli (Capsicum Annum)seventhsensegroupNo ratings yet

- Fabrication of High Speed Indication and Automatic Pneumatic Braking SystemDocument7 pagesFabrication of High Speed Indication and Automatic Pneumatic Braking Systemseventhsensegroup0% (1)

- Implementation of Single Stage Three Level Power Factor Correction AC-DC Converter With Phase Shift ModulationDocument6 pagesImplementation of Single Stage Three Level Power Factor Correction AC-DC Converter With Phase Shift ModulationseventhsensegroupNo ratings yet

- Ijett V5N1P103Document4 pagesIjett V5N1P103Yosy NanaNo ratings yet

- FPGA Based Design and Implementation of Image Edge Detection Using Xilinx System GeneratorDocument4 pagesFPGA Based Design and Implementation of Image Edge Detection Using Xilinx System GeneratorseventhsensegroupNo ratings yet

- An Efficient and Empirical Model of Distributed ClusteringDocument5 pagesAn Efficient and Empirical Model of Distributed ClusteringseventhsensegroupNo ratings yet

- Non-Linear Static Analysis of Multi-Storied BuildingDocument5 pagesNon-Linear Static Analysis of Multi-Storied Buildingseventhsensegroup100% (1)

- Design and Implementation of Multiple Output Switch Mode Power SupplyDocument6 pagesDesign and Implementation of Multiple Output Switch Mode Power SupplyseventhsensegroupNo ratings yet

- Ijett V4i10p158Document6 pagesIjett V4i10p158pradeepjoshi007No ratings yet

- Experimental Analysis of Tobacco Seed Oil Blends With Diesel in Single Cylinder Ci-EngineDocument5 pagesExperimental Analysis of Tobacco Seed Oil Blends With Diesel in Single Cylinder Ci-EngineseventhsensegroupNo ratings yet

- Analysis of The Fixed Window Functions in The Fractional Fourier DomainDocument7 pagesAnalysis of The Fixed Window Functions in The Fractional Fourier DomainseventhsensegroupNo ratings yet

- Cl-29a5w8x Cl29a6p Ks3aDocument79 pagesCl-29a5w8x Cl29a6p Ks3aaerodomoNo ratings yet

- Ebooklet On IT Initiatives of National Health MissionDocument48 pagesEbooklet On IT Initiatives of National Health MissionhgukyNo ratings yet

- Modeling Transition State in GaussianDocument5 pagesModeling Transition State in GaussianBruno Moraes ServilhaNo ratings yet

- Tilt Switch OldDocument2 pagesTilt Switch OldAngel Francisco NavarroNo ratings yet

- Tutorial M WorksDocument171 pagesTutorial M WorksNazriNo ratings yet

- M Rades Mechanical Vibrations 2Document354 pagesM Rades Mechanical Vibrations 2Thuha LeNo ratings yet

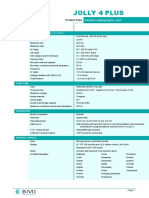

- JOLLY 4 PLUS (PD-01-E Rev. 20)Document3 pagesJOLLY 4 PLUS (PD-01-E Rev. 20)Nguyen AnhNo ratings yet

- Whitepaper: Chiva TokenDocument15 pagesWhitepaper: Chiva TokenMatzeboNo ratings yet

- Manual NTWDocument24 pagesManual NTWElias Melo JrNo ratings yet

- Sigtran Ss7: Asri WulandariDocument44 pagesSigtran Ss7: Asri WulandarifaisalNo ratings yet

- LogDocument8 pagesLogBBGETA 52No ratings yet

- Automated Testing ToolDocument20 pagesAutomated Testing TooltejaswiNo ratings yet

- Automatic Speech Recognition (ASR) : Omar Khalil Gómez - Università Di PisaDocument65 pagesAutomatic Speech Recognition (ASR) : Omar Khalil Gómez - Università Di PisaDanut Simionescu100% (1)

- 4.registration Form of RksDocument3 pages4.registration Form of Rksanon_57550479No ratings yet

- Unit 6 - Module-3 (Week-3) : Assignment 3Document6 pagesUnit 6 - Module-3 (Week-3) : Assignment 3Rohit DuttaNo ratings yet

- FBS115 PDFDocument2 pagesFBS115 PDFharmonoNo ratings yet

- Fuzzy Logic and Applications: Building GUI Interfaces in MatlabDocument7 pagesFuzzy Logic and Applications: Building GUI Interfaces in MatlabMuhammad Ubaid Ashraf ChaudharyNo ratings yet

- Ricardo Tapia Cesena: Java Solution Architect / SR FULL STACK Java DeveloperDocument9 pagesRicardo Tapia Cesena: Java Solution Architect / SR FULL STACK Java Developerkiran2710No ratings yet

- Checklist Audit Factorial HRDocument2 pagesChecklist Audit Factorial HRSoraya AisyahNo ratings yet

- Amazon Web Services in Action, Third Edition - Google BooksDocument2 pagesAmazon Web Services in Action, Third Edition - Google Bookskalinayak.prasadNo ratings yet

- Asmtiu 23Document356 pagesAsmtiu 23Gokul KrishnamoorthyNo ratings yet

- How To Extend The TCP Half-Close Timer For Specific TCP ServicesDocument1 pageHow To Extend The TCP Half-Close Timer For Specific TCP ServicesLibero RighiNo ratings yet

- Operating System MCQsDocument13 pagesOperating System MCQsmanish_singh51No ratings yet

- Ds-7600Ni-K1 Series NVR: Features and FunctionsDocument3 pagesDs-7600Ni-K1 Series NVR: Features and Functionskrlekrle123No ratings yet

- CRS212 1G 10S 1SplusIN - QGDocument3 pagesCRS212 1G 10S 1SplusIN - QGanthykoeNo ratings yet

- Nvidia 2019 InterviewDocument2 pagesNvidia 2019 InterviewShrikant CharthalNo ratings yet

- ACE Exam SamplDocument5 pagesACE Exam SamplShankar VPNo ratings yet

- Socket Programming - Client and Server PDFDocument3 pagesSocket Programming - Client and Server PDF一鸿No ratings yet