Professional Documents

Culture Documents

Markov Chain Exercises

Uploaded by

Jason WuCopyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Markov Chain Exercises

Uploaded by

Jason WuCopyright:

Available Formats

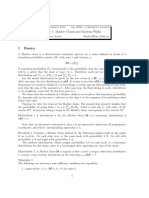

Worksheet # 2

Statistics 150, Pitman, Spring 2013

Topics: Conditional independence, Markov property, transition probability matrices, matrix methods, reversibility.

Reading: Secs 4.1, 4.2, 4.3, 6.5. Topic notes are linked above.

Note: If you have a strong background in linear algebra, you may appreciate Secs 4.4, 4.5, 4.6 and the associated exercises, but this material is beyond the scope of the present course.

Exercises: 4.1, 4.3, 4.4, 4.5, 4.6, 4.7, 4.8, 4.9, 4.10, 6.9, 6.10, plus 1, 2, 3 below.

Homework: 6.9 (extra assumptions, which you should state, are needed for the limit probabilities), 4.9, 4.10, and from this sheet: 3(e), 3(m).

1. Random walk on a graph. This is the Markov chain of Exercise 6.9 with $d_{ij} = d_{ji} \in \{0, 1\}$ for all $i, j$ in some finite set of states $S$. Say there is an (undirected) edge between $i$ and $j$ iff $d_{ij} = d_{ji} = 1$. The graph structure $G = (S, E)$ is the set of states $S$ together with the set $E$ of unordered pairs of states that form the edges. Under what condition on the graph is this random walk an irreducible Markov chain?

2. Random chess moves. Let $S$ be the set of squares on an $8 \times 8$ chessboard, labeled a1, ..., a8, b1, ..., b8, ..., h1, ..., h8. For each of the chess pieces except the pawn (i.e. king, queen, rook, bishop, knight), define a corresponding graph on $S$ by $d_{ij} = 1$ iff the piece can get from square $i$ to square $j$ in a single move (see Wikipedia: Chess). Random walk on this graph then describes random moves of the piece on an empty chessboard. For each piece:
(a) What is the number of communicating classes of states in the associated Markov chain?
(b) For each communicating class, what is its period?
(c) For each communicating class, what is its steady-state distribution?
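
For Problem 1, the definition of irreducibility used in Problem 3 ($P^n_{ij} > 0$ for some $n$) can be tested mechanically on small examples. The sketch below assumes two things. First, in this symmetric 0/1 case the walk of Exercise 6.9 moves from $i$ to a uniformly chosen neighbour, $P_{ij} = d_{ij} / \sum_k d_{ik}$. This is our assumption, so check it against the exercise. Second, every state has at least one edge. The helper names `walk_matrix`, `mat_mul` and `is_irreducible` are hypothetical.

```python
from fractions import Fraction


def walk_matrix(d):
    """P_ij = d_ij / sum_k d_ik: step from i to a uniformly chosen neighbour.

    d is a symmetric 0/1 adjacency matrix given as a list of lists.
    Assumes every state has at least one edge (all row sums positive).
    """
    return [[Fraction(x, sum(row)) for x in row] for row in d]


def mat_mul(A, B):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def is_irreducible(P):
    """True iff for every i, j some power P^n (1 <= n <= |S|) has P^n_ij > 0.

    Powers up to |S| suffice: a shortest route from i to j, or from i back
    to i, never visits a state twice (apart from the final return to i).
    """
    size = len(P)
    reach = [[False] * size for _ in range(size)]
    Pn = P
    for _ in range(size):              # Pn runs through P, P^2, ..., P^size
        for i in range(size):
            for j in range(size):
                reach[i][j] = reach[i][j] or Pn[i][j] > 0
        Pn = mat_mul(Pn, P)
    return all(all(row) for row in reach)


if __name__ == "__main__":
    path = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]                  # a - b - c
    two_edges = [[0, 1, 0, 0], [1, 0, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]
    for name, d in [("path", path), ("two separate edges", two_edges)]:
        print(name, "irreducible:", is_irreducible(walk_matrix(d)))
```

`Fraction` entries keep every entry of $P^n$ exact, so rounding cannot affect the test $P^n_{ij} > 0$. Trying a few connected and disconnected graphs is a quick way to test a conjectured answer before proving it.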

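For Problem 2, here is a sketch that builds each piece's move graph on an empty board. It then reports the communicating classes, their periods, and the steady-state distribution on each class. The assumptions are all ours:

- Squares are encoded as 0-based (file, rank) pairs, so b1 is `(1, 0)`.
- The pieces move by the standard rules.
- We use two standard facts about random walk on a connected undirected graph with no isolated vertices. Its period is 2 if the graph is bipartite and 1 otherwise. The distribution $\pi_i = \deg(i) / \sum_j \deg(j)$ satisfies $\pi P = \pi$.

Because $d_{ij} = d_{ji}$, communicating classes are simply connected components. The function names are hypothetical.

```python
from collections import deque

N = 8                     # board is N x N
FILES = "abcdefgh"
KING = [(df, dr) for df in (-1, 0, 1) for dr in (-1, 0, 1) if (df, dr) != (0, 0)]
KNIGHT = [(1, 2), (2, 1), (-1, 2), (-2, 1), (1, -2), (2, -1), (-1, -2), (-2, -1)]
ROOK = [(1, 0), (-1, 0), (0, 1), (0, -1)]
BISHOP = [(1, 1), (1, -1), (-1, 1), (-1, -1)]


def on_board(f, r):
    return 0 <= f < N and 0 <= r < N


def moves(piece, f, r):
    """Squares reachable from (f, r) in a single move on an empty board."""
    if piece in ("king", "knight"):
        steps = KING if piece == "king" else KNIGHT
        return [(f + df, r + dr) for df, dr in steps if on_board(f + df, r + dr)]
    dirs = {"rook": ROOK, "bishop": BISHOP, "queen": ROOK + BISHOP}[piece]
    out = []
    for df, dr in dirs:                # slide in each direction to the edge
        g, s = f + df, r + dr
        while on_board(g, s):
            out.append((g, s))
            g, s = g + df, s + dr
    return out


def graph(piece):
    """Adjacency lists (d_ij = 1) for the piece; squares are 0-based (file, rank)."""
    return {(f, r): moves(piece, f, r) for f in range(N) for r in range(N)}


def classes_and_periods(adj):
    """Connected components (= communicating classes, since d is symmetric).

    Each component is returned with period 2 if a BFS 2-colouring succeeds
    (bipartite) and 1 otherwise (odd cycle). Assumes no isolated squares.
    """
    colour, result = {}, []
    for start in adj:
        if start in colour:
            continue
        colour[start] = 0
        comp, bipartite, queue = [start], True, deque([start])
        while queue:
            v = queue.popleft()
            for w in adj[v]:
                if w not in colour:
                    colour[w] = 1 - colour[v]
                    comp.append(w)
                    queue.append(w)
                elif colour[w] == colour[v]:
                    bipartite = False
        result.append((comp, 2 if bipartite else 1))
    return result


def stationary(adj, comp):
    """pi_i = deg(i) / (sum of degrees over the class)."""
    total = sum(len(adj[v]) for v in comp)
    return {v: len(adj[v]) / total for v in comp}


def label(sq):
    return f"{FILES[sq[0]]}{sq[1] + 1}"


if __name__ == "__main__":
    for piece in ("king", "queen", "rook", "bishop", "knight"):
        adj = graph(piece)
        parts = classes_and_periods(adj)
        print(f"{piece}: {len(parts)} class(es), periods {[p for _, p in parts]}")
        for comp, _ in parts:
            pi = stationary(adj, comp)
            lo, hi = min(pi, key=pi.get), max(pi, key=pi.get)
            print(f"  pi ranges from {pi[lo]:.4f} at {label(lo)} to {pi[hi]:.4f} at {label(hi)}")
```

Breadth-first search finds each class and 2-colours it in one pass. A colour clash along any edge exposes an odd cycle, which makes the class aperiodic.
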
3. Steady-state implies positive recurrence. This exercise offers a simplified approach to the results in Section 6.3.2. Suppose a Markov chain $(X_n)$ with finite or countable state space $S$ and transition matrix $P$ is irreducible (i.e. for all $i$ and $j$ there exists $n$ with $P^n_{ij} > 0$), and that there exists a steady-state probability distribution $\pi$ (i.e. $\pi_i \ge 0$, $\sum_i \pi_i = 1$ and $\pi P = \pi$). Write $P_i$ for probabilities and $E_i$ for expectations conditioned on $X_0 = i$, and $P_\pi := \sum_i \pi_i P_i$ for steady-state probabilities. Let $T_i$ be the least $n \ge 1$ such that $X_n = i$, with the convention $T_i = \infty$ if there is no such $n$. Show as simply as possible, and without using any results from Section 6.3.2, that for all $i, j \in S$ and $n \ge 1$:
(a) $\pi = \pi P^n$
(b) $\pi_i > 0$
(c) $P_\pi(X_n = i) = \pi_i$
(d) $P_\pi(X_m \ne i \text{ for } 0 \le m \le n-1) = P_\pi(X_m \ne i \text{ for } 1 \le m \le n)$
(e) $P_\pi(X_0 = i,\ X_m \ne i \text{ for } 1 \le m \le n-1) = P_\pi(X_m \ne i \text{ for } 1 \le m \le n-1,\ X_n = i)$
(f) $P_\pi(X_0 = i,\ T_i \ge n) = P_\pi(T_i = n)$
(g) $\pi_i P_i(T_i \ge n) = P_\pi(T_i = n)$
(h) $\pi_i E_i(T_i) = P_\pi(T_i < \infty)$
(i) $E_i(T_i) < \infty$ (i.e. every state is positive recurrent: Lemma 6.3.2)
(j) $E_j(T_i) < \infty$
(k) $P_j(T_i < \infty) = 1$
(l) $E_i(T_i) = 1/\pi_i$ (and hence $\pi$ is unique: Theorem 6.3.5)
(m) Compute $E_i(T_i)$ for random knight moves on an empty chessboard and $i = $ b1 (the usual starting square for one of the white knights).
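
Parts (a), (g) and (l) can be checked numerically on a small example. The sketch below uses a hypothetical chain of our own choosing: random walk on a triangle $\{0, 1, 2\}$ with a fourth state attached to state 2. Its steady state $\pi = (2, 2, 3, 1)/8$ is proportional to degree.

The code does three things:

- It computes $P_i(T_i \ge n)$ and $P_\pi(T_i = n)$ by pushing a row vector through $P$ and discarding any mass that lands on $i$ (taboo probabilities).
- It compares $\pi_i P_i(T_i \ge n)$ with $P_\pi(T_i = n)$ exactly.
- It approximates $E_i(T_i) = \sum_{n \ge 1} P_i(T_i \ge n)$ by truncating the sum.

All names are hypothetical.

```python
from fractions import Fraction

# Hypothetical example: random walk on a triangle {0, 1, 2} with an extra
# state 3 attached to state 2 (any irreducible chain with known pi would do).
P = [
    [0, Fraction(1, 2), Fraction(1, 2), 0],
    [Fraction(1, 2), 0, Fraction(1, 2), 0],
    [Fraction(1, 3), Fraction(1, 3), 0, Fraction(1, 3)],
    [0, 0, 1, 0],
]
# Steady state of a random walk on a graph: pi_i proportional to degree.
pi = [Fraction(2, 8), Fraction(2, 8), Fraction(3, 8), Fraction(1, 8)]


def step(v, P):
    """Row vector v times matrix P."""
    return [sum(v[k] * P[k][j] for k in range(len(v))) for j in range(len(P[0]))]


def kill(v, i):
    """Zero out the mass sitting at state i."""
    return [0 if j == i else x for j, x in enumerate(v)]


def tail_probs(P, i, n_max):
    """[P_i(T_i >= n) for n = 1, ..., n_max]."""
    v = [1 if j == i else 0 for j in range(len(P))]  # law of X_0 under P_i
    out = []
    for _ in range(n_max):
        out.append(sum(v))        # surviving mass = P_i(X_m != i for 1 <= m <= n-1)
        v = kill(step(v, P), i)   # take one step, discard paths that hit i
    return out


def hit_probs(P, pi, i, n_max):
    """[P_pi(T_i = n) for n = 1, ..., n_max]."""
    v = list(pi)                  # law of X_0 under P_pi; no restriction at time 0
    out = []
    for _ in range(n_max):
        nxt = step(v, P)
        out.append(nxt[i])        # mass reaching i now is a first visit at time >= 1
        v = kill(nxt, i)
    return out


if __name__ == "__main__":
    assert step(pi, P) == pi      # part (a) with n = 1: pi P = pi
    for i in range(len(P)):
        tails, hits = tail_probs(P, i, 200), hit_probs(P, pi, i, 200)
        assert all(pi[i] * t == h for t, h in zip(tails, hits))   # part (g), exactly
        # parts (h)/(l): E_i(T_i) = sum_{n>=1} P_i(T_i >= n), truncated at n = 200
        print(i, float(sum(tails)), float(1 / pi[i]))
```

This only checks the identities on one chain. It does not replace the derivations the exercise asks for.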

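For part (m), here is a sketch that combines part (l) with a standard fact: random walk on a connected graph has steady state $\pi_i = \deg(i) / \sum_j \deg(j)$. The code builds the knight graph, checks $\pi P = \pi$ exactly, and takes $1/\pi_{b1}$. It then compares the result with a seeded Monte Carlo estimate of $E_{b1}(T_{b1})$. The 0-based (file, rank) encoding, the seed and the trial count are arbitrary choices of ours, and the names are hypothetical. Part (l) assumes only irreducibility and a steady state, not aperiodicity.

```python
import random
from fractions import Fraction

N = 8
FILES = "abcdefgh"
JUMPS = [(1, 2), (2, 1), (-1, 2), (-2, 1), (1, -2), (2, -1), (-1, -2), (-2, -1)]


def knight_moves(sq):
    """Squares a knight on sq = (file, rank), 0-based, can jump to."""
    f, r = sq
    return [(f + df, r + dr) for df, dr in JUMPS if 0 <= f + df < N and 0 <= r + dr < N]


SQUARES = [(f, r) for f in range(N) for r in range(N)]
ADJ = {sq: knight_moves(sq) for sq in SQUARES}
TOTAL_DEGREE = sum(len(v) for v in ADJ.values())

# Steady state of random walk on a connected graph: pi_i = deg(i) / total degree.
PI = {sq: Fraction(len(ADJ[sq]), TOTAL_DEGREE) for sq in SQUARES}


def is_stationary(pi, adj):
    """Exact check of pi P = pi, where P_ij = 1/deg(i) for each knight move i -> j."""
    flow = {sq: Fraction(0) for sq in adj}
    for i, nbrs in adj.items():
        for j in nbrs:
            flow[j] += pi[i] / len(nbrs)
    return flow == pi


def mean_return_time_mc(start, trials, seed=0):
    """Monte Carlo estimate of E_start(T_start) by simulating random knight moves."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        sq, steps = rng.choice(ADJ[start]), 1
        while sq != start:
            sq, steps = rng.choice(ADJ[sq]), steps + 1
        total += steps
    return total / trials


if __name__ == "__main__":
    b1 = (FILES.index("b"), 0)      # 0-based (file, rank)
    assert is_stationary(PI, ADJ)
    exact = 1 / PI[b1]              # part (l): E_i(T_i) = 1 / pi_i
    print("exact:", exact, " simulated:", mean_return_time_mc(b1, 10_000))
```

The simulation only agrees with the exact value up to sampling error, but it gives an independent check on the formula from part (l).
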
You might also like

- SolutionsDocument8 pagesSolutionsSanjeev BaghoriyaNo ratings yet

- Markov Chain Monte CarloDocument29 pagesMarkov Chain Monte Carlomurdanetap957No ratings yet

- An Instability Result To A Certain Vector Differential Equation of The Sixth OrderDocument4 pagesAn Instability Result To A Certain Vector Differential Equation of The Sixth OrderChernet TugeNo ratings yet

- Logic Tutorial-LogicDocument6 pagesLogic Tutorial-LogicĐang Muốn ChếtNo ratings yet

- Markov Chains ErgodicityDocument8 pagesMarkov Chains ErgodicitypiotrpieniazekNo ratings yet

- MC NotesDocument42 pagesMC NotesvosmeraNo ratings yet

- Lec06 570Document5 pagesLec06 570Mukul BhallaNo ratings yet

- Homework 5Document4 pagesHomework 5Ale Gomez100% (6)

- MTH6141 Random Processes, Spring 2012 Solutions To Exercise Sheet 1Document3 pagesMTH6141 Random Processes, Spring 2012 Solutions To Exercise Sheet 1aset999No ratings yet

- Stochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008Document132 pagesStochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008.cadeau01No ratings yet

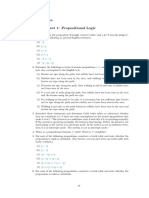

- Propositional and Predicate Logic ExercisesDocument6 pagesPropositional and Predicate Logic ExercisesMazter Cho100% (1)

- PaperII 3Document23 pagesPaperII 3eReader.LeaderNo ratings yet

- Saharon Shelah - Very Weak Zero One Law For Random Graphs With Order and Random Binary FunctionsDocument11 pagesSaharon Shelah - Very Weak Zero One Law For Random Graphs With Order and Random Binary FunctionsJgfm2No ratings yet

- HocsDocument5 pagesHocsyygorakindyyNo ratings yet

- CS229 Practice Midterm QuestionsDocument4 pagesCS229 Practice Midterm QuestionsArka MitraNo ratings yet

- Part IB Paper 6: Information Engineering Linear Systems and Control Glenn VinnicombeDocument18 pagesPart IB Paper 6: Information Engineering Linear Systems and Control Glenn Vinnicombeaali1915No ratings yet

- Markov Chain Monte Carlo and Gibbs Sampling ExplainedDocument24 pagesMarkov Chain Monte Carlo and Gibbs Sampling Explainedp1muellerNo ratings yet

- HW 3 SolutionDocument10 pagesHW 3 SolutionEddie Martinez Jr.100% (1)

- Midterm SolutionsDocument5 pagesMidterm SolutionsLinbailuBellaJiangNo ratings yet

- Discrete MathematicsDocument11 pagesDiscrete MathematicsRohit SuryavanshiNo ratings yet

- Stochastic Solutions ManualDocument144 pagesStochastic Solutions ManualAftab Uddin100% (8)

- Assignment 1 - Sig and SysDocument2 pagesAssignment 1 - Sig and SysHarsh KaushalyaNo ratings yet

- Notes On Markov ChainDocument34 pagesNotes On Markov ChainCynthia NgNo ratings yet

- The Derivation of Markov Chain Properties Using Generalized Matrix InversesDocument31 pagesThe Derivation of Markov Chain Properties Using Generalized Matrix InversesvahidNo ratings yet

- Markov Models ExplainedDocument10 pagesMarkov Models ExplainedraviNo ratings yet

- Probability and Queuing Theory - Question Bank.Document21 pagesProbability and Queuing Theory - Question Bank.prooban67% (3)

- MC MC RevolutionDocument27 pagesMC MC RevolutionLucjan GucmaNo ratings yet

- Nonlinear 2005Document8 pagesNonlinear 2005kadivar001No ratings yet

- Markov Chains 2013Document42 pagesMarkov Chains 2013nickthegreek142857No ratings yet

- LAL Lecture NotesDocument73 pagesLAL Lecture NotesAnushka VijayNo ratings yet

- Frequency-Domain Analysis of Discrete-Time Signals and SystemsDocument31 pagesFrequency-Domain Analysis of Discrete-Time Signals and SystemsSwatiSharmaNo ratings yet

- AnL P Inequality For PolynomialsDocument10 pagesAnL P Inequality For PolynomialsTanveerdarziNo ratings yet

- Applied Probability Lecture Notes (OS2103Document22 pagesApplied Probability Lecture Notes (OS2103Tayyab ZafarNo ratings yet

- The Mathematics of Mixing Things Up: Received: Date / Accepted: DateDocument16 pagesThe Mathematics of Mixing Things Up: Received: Date / Accepted: DateArthurDentistNo ratings yet

- StochBioChapter3 PDFDocument46 pagesStochBioChapter3 PDFThomas OrNo ratings yet

- Hayashi Econometrics AnswersDocument2 pagesHayashi Econometrics AnswersCristina TessariNo ratings yet

- FormulaDocument7 pagesFormulaMàddìRèxxShìrshírNo ratings yet

- MCMC With Temporary Mapping and Caching With Application On Gaussian Process RegressionDocument16 pagesMCMC With Temporary Mapping and Caching With Application On Gaussian Process RegressionChunyi WangNo ratings yet

- Stochastic Process Simulation in MatlabDocument17 pagesStochastic Process Simulation in MatlabsalvaNo ratings yet

- The Existence of Generalized Inverses of Fuzzy MatricesDocument14 pagesThe Existence of Generalized Inverses of Fuzzy MatricesmciricnisNo ratings yet

- Discrete Mathematics and Its Applications: Exercise BookDocument84 pagesDiscrete Mathematics and Its Applications: Exercise BookLê Hoàng Minh ThưNo ratings yet

- MATH39001 - Combinatorics and Graph Theory - Exam - Jan-2011Document8 pagesMATH39001 - Combinatorics and Graph Theory - Exam - Jan-2011scribd6289No ratings yet

- Assign4 SolDocument5 pagesAssign4 Solmorteza2885No ratings yet

- Lec20 PDFDocument7 pagesLec20 PDFjuanagallardo01No ratings yet

- PeskinDocument714 pagesPeskinShuchen Zhu100% (1)

- Test ExamDocument4 pagesTest ExamMOhmedSharafNo ratings yet

- Hermite Mean Value Interpolation: Christopher Dyken and Michael FloaterDocument18 pagesHermite Mean Value Interpolation: Christopher Dyken and Michael Floatersanh137No ratings yet

- RESET, White, Terasvirta Tests for NonlinearityDocument4 pagesRESET, White, Terasvirta Tests for NonlinearityBima VhaleandraNo ratings yet

- 100 Problems in Stochastic ProcessesDocument74 pages100 Problems in Stochastic Processesscrat4acornNo ratings yet

- FornbergDocument9 pagesFornbergSomya kumar SinghNo ratings yet

- Em04 IlDocument10 pagesEm04 Ilmaantom3No ratings yet

- Stability analysis and LMI conditionsDocument4 pagesStability analysis and LMI conditionsAli DurazNo ratings yet

- ML cd −y+ (1−θ) zDocument2 pagesML cd −y+ (1−θ) zThinhNo ratings yet

- EE222: Solutions To Homework 2: Solution. by Solving XDocument10 pagesEE222: Solutions To Homework 2: Solution. by Solving XeolNo ratings yet

- IERG 5300 Random Process Suggested Solution of Assignment 1Document4 pagesIERG 5300 Random Process Suggested Solution of Assignment 1Hendy KurniawanNo ratings yet

- 4lectures Statistical PhysicsDocument37 pages4lectures Statistical Physicsdapias09No ratings yet

- PQT NotesDocument337 pagesPQT NotesDot Kidman100% (1)

- Radically Elementary Probability Theory. (AM-117), Volume 117From EverandRadically Elementary Probability Theory. (AM-117), Volume 117Rating: 4 out of 5 stars4/5 (2)

- A-level Maths Revision: Cheeky Revision ShortcutsFrom EverandA-level Maths Revision: Cheeky Revision ShortcutsRating: 3.5 out of 5 stars3.5/5 (8)

- Optimization ExercisesDocument48 pagesOptimization ExercisesJason WuNo ratings yet

- Time Series Lecture 1Document22 pagesTime Series Lecture 1Jason WuNo ratings yet

- Stat 20 Section Worksheet 2 Problems From FPP, Chapter 2Document2 pagesStat 20 Section Worksheet 2 Problems From FPP, Chapter 2Jason WuNo ratings yet

- Optimization HW5 ProblemsDocument3 pagesOptimization HW5 ProblemsJason WuNo ratings yet

- ECON 100A Midterm 2 ReviewDocument64 pagesECON 100A Midterm 2 ReviewJason WuNo ratings yet

- Time Series Lecture 3Document24 pagesTime Series Lecture 3Jason WuNo ratings yet

- Time Series Lecture 4Document2 pagesTime Series Lecture 4Jason WuNo ratings yet

- Optimization Models: Exercises 2Document2 pagesOptimization Models: Exercises 2Jason WuNo ratings yet

- Time Series Lecture 2Document17 pagesTime Series Lecture 2Jason WuNo ratings yet

- Microecon Review Problems and SolutionsDocument6 pagesMicroecon Review Problems and SolutionsJason Wu0% (1)

- Solucionario de Principios de Analisis Matematico Walter Rudin PDFDocument89 pagesSolucionario de Principios de Analisis Matematico Walter Rudin PDFKimiharoprzNo ratings yet

- Physics 7B - Fall 2004 - Packard - FinalDocument7 pagesPhysics 7B - Fall 2004 - Packard - FinalJason WuNo ratings yet

- Stochastic Calculus For Finance Shreve SolutionsDocument60 pagesStochastic Calculus For Finance Shreve SolutionsJason Wu67% (3)

- Physics 7B - Fall 2004 - Packard - FinalDocument7 pagesPhysics 7B - Fall 2004 - Packard - FinalJason WuNo ratings yet

- PQT Model ExamDocument2 pagesPQT Model ExamBalachandar BalasubramanianNo ratings yet

- Gambler's RuinDocument4 pagesGambler's RuinAvesh DyallNo ratings yet

- M.E (FT) 2021 Regulation-Ece SyllabusDocument64 pagesM.E (FT) 2021 Regulation-Ece SyllabusbsudheertecNo ratings yet

- UC Berkeley CS 174 Solutions to Problem Set 6Document4 pagesUC Berkeley CS 174 Solutions to Problem Set 6eetahaNo ratings yet

- Gaze Transition Entropy, KRZYSZTOF KREJTZ, ANDREW DUCHOWSKIDocument19 pagesGaze Transition Entropy, KRZYSZTOF KREJTZ, ANDREW DUCHOWSKIPablo MelognoNo ratings yet

- Wang 1990Document9 pagesWang 1990Aya MusaNo ratings yet

- B.tech 2 2 CSE AI ML CSE AI R20 Course Structue SyllabiDocument38 pagesB.tech 2 2 CSE AI ML CSE AI R20 Course Structue Syllabimehrajshaik9178No ratings yet

- PQT-Assignment 2 PDFDocument2 pagesPQT-Assignment 2 PDFacasNo ratings yet

- Mitsubishi Elevator CalculationsDocument8 pagesMitsubishi Elevator CalculationsAmit Pekam100% (3)

- 2016 Book BranchingProcessesAndTheirAppl PDFDocument331 pages2016 Book BranchingProcessesAndTheirAppl PDFAristarco deSamosNo ratings yet

- Markov Chains Limiting ProbabilitiesDocument9 pagesMarkov Chains Limiting ProbabilitiesMauro Luiz Brandao JuniorNo ratings yet

- Conditional Expectation and MartingalesDocument21 pagesConditional Expectation and MartingalesborjstalkerNo ratings yet

- FandI CT4 200704 Exam FINALDocument9 pagesFandI CT4 200704 Exam FINALTuff BubaNo ratings yet

- An Introduction To Markovchain PackageDocument39 pagesAn Introduction To Markovchain Packageratan203No ratings yet

- Tutorial: Stochastic Modeling in Biology: Applications of Discrete-Time Markov ChainsDocument47 pagesTutorial: Stochastic Modeling in Biology: Applications of Discrete-Time Markov ChainsMehr Un NisaNo ratings yet

- Massima Guidolin - Markov Switching Models LectureDocument86 pagesMassima Guidolin - Markov Switching Models Lecturekterink007No ratings yet

- hm4 2015Document2 pageshm4 2015Mickey Wong100% (1)

- David Freedman (Auth.) - Approximating Countable Markov Chains (1983, Springer-Verlag New York) PDFDocument149 pagesDavid Freedman (Auth.) - Approximating Countable Markov Chains (1983, Springer-Verlag New York) PDFMogaime BuendiaNo ratings yet

- SvdthesisDocument175 pagesSvdthesisAvinash JaiswalNo ratings yet

- Unit 2: Software Reliability Models and TechniquesDocument79 pagesUnit 2: Software Reliability Models and TechniquesKartik Gupta 71No ratings yet

- M343 Unit 15Document52 pagesM343 Unit 15robbah112No ratings yet

- Ebook College Mathematics For Business Economics Life Sciences and Social Sciences 13Th Edition Barnett Test Bank Full Chapter PDFDocument44 pagesEbook College Mathematics For Business Economics Life Sciences and Social Sciences 13Th Edition Barnett Test Bank Full Chapter PDFalberttuyetrcpk100% (12)

- Causality Bernhard SchölkopfDocument169 pagesCausality Bernhard SchölkopfQingsong GuoNo ratings yet

- Assignment 2: Markov ChainDocument1 pageAssignment 2: Markov ChainAimaan SharifaNo ratings yet

- 1997 OpMe RFS Conley Luttmer Scheinkman Short Term Interest RatesDocument53 pages1997 OpMe RFS Conley Luttmer Scheinkman Short Term Interest RatesjeanturqNo ratings yet

- STAT3007 Problem Sheet 3Document3 pagesSTAT3007 Problem Sheet 3ray.jptryNo ratings yet

- Algorithmic Composition: Andrew Pascoe December 7, 2009Document27 pagesAlgorithmic Composition: Andrew Pascoe December 7, 2009juan perez arrikitaunNo ratings yet

- Chapman Kolmogorov EquationsDocument10 pagesChapman Kolmogorov EquationsYash DagaNo ratings yet

- Ross Chapter - 6 ExerciseDocument4 pagesRoss Chapter - 6 Exercisebrowniebubble21No ratings yet

- Is It Time To Reformulate The Partial Differential Equations of Poisson and Laplace?Document9 pagesIs It Time To Reformulate The Partial Differential Equations of Poisson and Laplace?International Journal of Innovative Science and Research TechnologyNo ratings yet