Professional Documents

Culture Documents

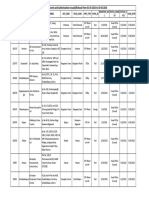

DataTransferProcessConfig BI73

Uploaded by Lokesh Bangalore

Data Transfer Process (DTP) BI 7.3

Source: http://scn.sap.com/docs/DOC-47495

Questions:

1. What are the key benefits of using a DTP over conventional InfoPackage (IP) loading? Refer to the section Key Benefits of using a DTP over conventional IP loading.
2. What are the different types of DTPs? There appears to be only one type, the Standard DTP. DTPs can use different DataSources, as shown in the section DTP can use the following as DataSources.
3. How do you create a Data Transfer Process for different types of DataSources?
4. What is the transaction code to create a Data Transfer Process? Start in RSA1 (Data Warehousing Workbench: Modeling).
5. What does the Only Retrieve Last Request option under Full extraction mode on the Extraction tab do?
6. What is the Temporary Storage Area in a Data Transfer Process?
7. What is the Error Stack in a Data Transfer Process? How do you look at the Error Stack? How do you delete the Error Stack?
8. How do you use Semantic Keys in a Data Transfer Process?

A DTP transfers data between two persistent objects. In SAP NetWeaver 7.3, an InfoPackage loads data from a source system only as far as the PSA; it is the DTP that determines how the data is loaded from there onwards. A Data Transfer Process is used for:

1. Loading data from the PSA (Persistent Staging Area) to InfoProviders.
2. Transferring data between InfoProviders within BI.
3. Distributing data to targets outside BI, e.g. Open Hub destinations.

Key Benefits of using a DTP over conventional IP loading

1. A DTP follows a one-to-one mechanism between a source and a target: one DTP sources data to only one data target, whereas an IP loads data to all data targets at once. This is one of the major advantages over the InfoPackage method, and it enables many of the other benefits.
2. Data loading from the source into the BI system (PSA) is isolated from data loading within the BI system. This allows data loads to InfoProviders to be scheduled at any time after the data has been loaded from the source.
3. Better error handling through the Temporary Storage Area, Semantic Keys and the Error Stack.
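The one-to-one rule and the isolation of loads can be illustrated with a small Python toy model. It assumes the PSA is just a list of requests and that each DTP remembers which source requests it has already moved; the names `PSA`, `infopackage_load` and `DTP.run` are hypothetical and are not SAP APIs.

```python
from dataclasses import dataclass, field


@dataclass
class PSA:
    """Toy Persistent Staging Area (hypothetical model, not SAP code)."""
    requests: list = field(default_factory=list)  # list of (request_id, rows)

    def infopackage_load(self, request_id, rows):
        # The InfoPackage only loads data as far as the PSA.
        self.requests.append((request_id, rows))


@dataclass
class DTP:
    """Toy DTP with exactly one source and one target (the one-to-one rule)."""
    source: PSA
    target: list = field(default_factory=list)
    transferred: set = field(default_factory=set)  # source requests already moved

    def run(self):
        # Move only requests this DTP has not transferred yet, into its own target.
        for request_id, rows in self.source.requests:
            if request_id not in self.transferred:
                self.target.extend(rows)
                self.transferred.add(request_id)
```

Two DTPs can read the same PSA and run on different schedules: for example, one feeding a cube can run immediately after `psa.infopackage_load(...)`, while one feeding a DSO runs later and still picks up every request it has not yet seen.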

An icon (not preserved in this copy) identifies Data Transfer Process objects.

Creating DTPs: Right-click the DataSource and choose Create Data Transfer Process.

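Screenshots of the Create Data Transfer Process dialog (not preserved) showed the fields DTP Type, Object Type for Target of DTP, and Subtype of Object.

DTP can use the following as DataSources (screenshot not preserved).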

A Data Transfer Process (DTP) contains three tabs: Extraction, Update and Execute.

EXTRACTION TAB

1. Parallel Extraction (new option in BI 7.3): could not find this option.
2. Delta Init. Extraction from: could not find this option.

Two Extraction Modes:

More options with Delta Extraction Mode:

Delta Init without Data is a new option in BI 7.3.

The Filter option can be used to filter data based on conditions:

Semantic Group

UPDATE TAB

Maximum Number of Errors per Pack after which the load process terminates.

Error Handling options:

DTP Settings to Increase the Loading Performance

Handle Duplicate Records Key

EXECUTE TAB

1. Automatically Repeat Red Request in Process Chain (new option in BI 7.3)


Detailed Explanation of Each Tab

1. EXTRACTION TAB

There are two extraction modes for a DTP: FULL and DELTA. Both modes are available when the source of data is any of the following InfoProviders:

DataStore Object
InfoCube
MultiProvider
InfoSet
InfoObject: Attribute
InfoObject: Text
InfoObject: Hierarchies
InfoObject: Hierarchies (One Segment)
Semantically Partitioned Object

Note: In SAP BW 7.3 we can extract data from MultiProviders and InfoSets, which was not possible in the earlier versions BI 7.0 and BW 3.5 (screenshot not preserved).

In the process of transferring data within BI, the transformations define the mapping and logic for updating data to the data targets, whereas the extraction mode and update mode are determined by the DTP. The DTP only works on data that is already in the BI system. If you select extraction mode Delta, you can define further parameters:

A. Only get delta once:

Select this option when only the most recent data is required in the data target. To delete overlapping requests from the data target, you must select this option and use the delete overlapping requests process type in the process chain. With these settings, from the second load onwards the overlapping request is deleted from the data target and only the last loaded request is kept.
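The effect of combining this option with a delete-overlapping-requests step can be sketched as a toy Python model. It assumes each load is a full snapshot and that a target is simply a list of requests; the `Target` class and its `load` method are hypothetical stand-ins, not SAP objects.

```python
from dataclasses import dataclass, field


@dataclass
class Target:
    """Toy data target that stores loaded requests (hypothetical model)."""
    requests: list = field(default_factory=list)  # list of (request_id, rows)

    def load(self, request_id, rows, delete_overlapping):
        # Stand-in for the "delete overlapping requests" process-chain step:
        # earlier requests are dropped so only the newest snapshot remains.
        if delete_overlapping:
            self.requests.clear()
        self.requests.append((request_id, rows))

    def rows(self):
        # All rows currently visible in the target, across kept requests.
        return [row for _, rows in self.requests for row in rows]
```

Without the deletion step, two snapshot loads of the same key would both stay in the target and be counted twice; with it, only the last loaded request is kept.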

B. Get all new data request by request:

If you do not select this option, the DTP loads all new requests from the source into a single request. Select this option when there are many new requests in the source and the data volume is large. With this option selected, the DTP loads the source requests one by one and creates a corresponding request in the target for each.
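The difference between the two behaviors can be sketched in Python. This is a toy model under stated assumptions: source requests are `(request_id, rows)` pairs, the set `transferred` stands in for the DTP's record of what it has already delivered, and the function name `run_delta_dtp` is hypothetical.

```python
def run_delta_dtp(source_requests, transferred, request_by_request):
    """One delta run of a toy DTP; returns the target requests it creates.

    source_requests: ordered list of (request_id, rows) in the source.
    transferred: set of source request IDs already delivered (updated in place).
    """
    new = [(rid, rows) for rid, rows in source_requests if rid not in transferred]
    transferred.update(rid for rid, _ in new)
    if not new:
        return []
    if request_by_request:
        # One target request per source request.
        return [{"from": [rid], "rows": list(rows)} for rid, rows in new]
    # Otherwise all new source requests are merged into a single target request.
    return [{"from": [rid for rid, _ in new],
             "rows": [row for _, rows in new for row in rows]}]
```

With three new source requests, the merged mode yields one large target request, while request-by-request mode yields three smaller ones, which keeps each request's volume bounded.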

C. Delta Init without Data: (new option in BI 7.3)

This option specifies whether the first request of a delta DTP should be transferred without any data. It is the same as Initialization without Data Transfer in a delta InfoPackage.

If we select this option, the source data is only flagged as extracted and is not moved to the target (the first request of the delta DTP completes successfully with one record). The option sets the data mart status for the requests in the source, so these requests can no longer be extracted by the delta DTP. The option is located on the Extraction tab of the Data Transfer Process (screenshot not preserved).

Note: If you are running a DTP for testing purposes, this option is not recommended. Instead, on the Execute tab, set the processing type to Mark Source Data as Retrieved.
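The data mart flagging described above can be sketched as a small Python model. It assumes the data mart status is a set of source request IDs; the functions `delta_init_without_data` and `next_delta` are hypothetical names used only for illustration.

```python
def delta_init_without_data(source_request_ids, datamart_status):
    """Flag every current source request as extracted; transfer no rows."""
    datamart_status.update(source_request_ids)
    return []  # nothing is written to the target


def next_delta(source_request_ids, datamart_status):
    """Return only requests not yet flagged, then flag them."""
    new = [rid for rid in source_request_ids if rid not in datamart_status]
    datamart_status.update(new)
    return new
```

After the initialization, the existing requests `R1` and `R2` are never picked up by the delta; only a later request such as `R3` is transferred.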

D. Optimal Package Size:

In the standard setting of the data transfer process, the size of a data package is set to 50,000 data records, on the assumption that a data record has a width of 1,000 bytes. To improve performance, you can adjust the data package size depending on the size of the available main memory.

Enter this value under Package Size on the Extraction tab in the DTP maintenance transaction.
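The default works out to about 50 MB per package (50,000 records × 1,000 bytes). The Python sketch below assumes one simple heuristic, which is not an SAP rule: keep the bytes per package roughly constant and scale the record count to the actual record width.

```python
DEFAULT_PACKAGE_RECORDS = 50_000  # standard DTP package size quoted above
ASSUMED_RECORD_BYTES = 1_000      # record width that default assumes


def package_bytes(records, record_bytes):
    """Approximate memory for one data package."""
    return records * record_bytes


def records_for_budget(budget_bytes, record_bytes):
    """Largest whole number of records fitting the budget (at least 1)."""
    return max(1, budget_bytes // record_bytes)


default_budget = package_bytes(DEFAULT_PACKAGE_RECORDS, ASSUMED_RECORD_BYTES)
print(default_budget)                             # 50000000 bytes, about 50 MB
print(records_for_budget(default_budget, 250))    # narrow records: 200000
print(records_for_budget(default_budget, 4_000))  # wide records: 12500
```

The resulting number is only a starting point for the Package Size field; the real limit depends on the memory available to each background process.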

E. Parallel Extraction (new option in BI 7.3)

The system uses parallel extraction to execute the DTP when all of the following conditions are met:

1. The source of the DTP supports parallel extraction (for example, a DataSource).
2. Error handling is deactivated.
3. The list for creating semantic groups in DTPs and transformations is empty.
4. The Parallel Extraction field is selected.

This field lets us control the processing type of the Data Transfer Process, switching between the processing types "Parallel Extraction and Processing" and "Serial Extraction, Immediate Parallel Processing".

How it works: If the system is currently using the processing type "Parallel Extraction and Processing", we can deselect this field to change the processing type to "Serial Extraction, Immediate Parallel Processing".

If the system is currently using the processing type "Serial Extraction, Immediate Parallel Processing", we can select the Parallel Extraction field to change the processing type to "Parallel Extraction and Processing" (provided that error handling is deactivated and the list of grouping fields is empty).

This field is selected automatically if the source of the DTP supports parallel extraction.
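The four conditions above can be written as a single decision function. This Python sketch is only a restatement of the listed rules; the function name and its boolean parameters are hypothetical.

```python
PARALLEL = "Parallel Extraction and Processing"
SERIAL = "Serial Extraction, Immediate Parallel Processing"


def dtp_processing_type(source_supports_parallel, error_handling_active,
                        semantic_group_fields, parallel_extraction_flag):
    """Pick the processing type from the four conditions listed above."""
    if (source_supports_parallel
            and not error_handling_active
            and not semantic_group_fields  # list of grouping fields must be empty
            and parallel_extraction_flag):
        return PARALLEL
    return SERIAL
```

Any single failing condition, such as one semantic group field or active error handling, falls back to serial extraction.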

F. Semantic Group

We choose semantic keys to specify how the data packages read from the source are built. Based on the key fields defined in the semantic keys, records with the same key are combined in a single data package. This setting is only relevant for DataStore objects with data fields that are overwritten. It also defines the key fields for the error stack; by defining the key for the error stack, you ensure that the data can be updated in the target in the correct order.
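How semantic keys keep records with the same key together can be sketched in Python. This is an illustration under stated assumptions, not SAP's packaging algorithm: records are dicts, `build_packages` is a hypothetical name, and a key group is never split even if it exceeds the package size.

```python
from itertools import groupby


def build_packages(records, key_fields, package_size):
    """Split records into packages without splitting a semantic-key group."""
    def semantic_key(record):
        return tuple(record[f] for f in key_fields)

    packages, current = [], []
    # sorted() is stable, so records sharing a key keep their original order.
    for _, group in groupby(sorted(records, key=semantic_key), key=semantic_key):
        group = list(group)
        # Close the current package if this whole group would not fit.
        if current and len(current) + len(group) > package_size:
            packages.append(current)
            current = []
        current.extend(group)  # an oversized group still stays together
    if current:
        packages.append(current)
    return packages
```

Because both records for document `A` land in the same package in their original order, an overwrite in the DSO applies them in the correct sequence.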

G. Filter

Instead of transferring a large amount of data at once, we can divide the data by defining filters. The filter restricts the amount of data to be copied and works like the selections in an InfoPackage. We can specify single values, multiple selections, intervals, selections based on variables, or routines.
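Single values, multiple selections and intervals can be sketched as simple filter rows in Python. The combination rule used here is an assumption for illustration: rows on the same field are OR-ed and different fields are AND-ed. `Selection` and `apply_filter` are hypothetical names, and variables and routines are not modeled.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Selection:
    """One filter row: a single value (high=None) or an inclusive interval."""
    field: str
    low: Any
    high: Optional[Any] = None

    def matches(self, record):
        value = record[self.field]
        if self.high is None:
            return value == self.low
        return self.low <= value <= self.high


def apply_filter(records, selections):
    # Assumed semantics: rows for the same field are OR-ed,
    # different fields are AND-ed.
    by_field = {}
    for sel in selections:
        by_field.setdefault(sel.field, []).append(sel)
    return [r for r in records
            if all(any(s.matches(r) for s in sels) for sels in by_field.values())]
```

For example, an interval on `year` combined with two single values on `region` keeps only records that fall in the interval and match either region.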

H. Delta Init. Extraction from

Active Table (with Archive): The data is read from the DSO active table and from the archived data.

Active Table (without Archive): The data is read only from the active table of the DSO. If there is data in the archive or in near-line storage at the time of extraction, this data is not extracted.

Archive (Full Extraction Only): The data is read only from the archive data store. Data is not extracted from the active table.

Change Log: The data is read from the change log and not from the active table of the DSO.
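The four options can be summarized as a lookup from option to the stores the DTP reads. This Python sketch only restates the list above; the dictionary, the store labels and `dso_read_sources` are hypothetical, and the only rule enforced is that the archive-only option is limited to full extraction.

```python
READ_SOURCES = {
    "Active Table (with Archive)": ("active table", "archive"),
    "Active Table (without Archive)": ("active table",),
    "Archive (Full Extraction Only)": ("archive",),
    "Change Log": ("change log",),
}


def dso_read_sources(option, extraction_mode):
    """Return which DSO stores a DTP reads for the chosen option."""
    if option == "Archive (Full Extraction Only)" and extraction_mode != "FULL":
        raise ValueError("The archive option is only valid for full extraction")
    return READ_SOURCES[option]
```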

2. UPDATE TAB

A. Error Handling

Deactivated:

With this option the error stack is not enabled at all, so no data is written to the error stack for failed records. If the data load fails, all the data has to be reloaded.

No update, no reporting:

If there is an erroneous record and this option is enabled in the DTP, the load stops at that point and no data is written to the error stack. The request is also not available for reporting. Correcting the problem means reloading all the data.

Valid Records Update, No reporting (Request Red):

Using this option all correct data is loaded to the cubes and incorrect data to the error stack. The data will not be available for reporting until the erroneous records are updated and QM status is manually set to green. The erroneous records can be updated using the error DTP.

Valid Records Update, Reporting Possible (Request Green):

Using this option all correct data is loaded to the cubes and incorrect data to the error stack. The data is available for reporting, and process chains continue with the next steps. The erroneous records can be updated using the error DTP.
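The four error handling options, as described above, can be compared side by side in a toy Python model. It assumes a validity check per record and reports the request status as "green" (reportable) or "red" (not reportable); `ErrorHandling` and `run_load` are hypothetical names, not SAP objects.

```python
from enum import Enum


class ErrorHandling(Enum):
    DEACTIVATED = "Deactivated"
    NO_UPDATE_NO_REPORTING = "No update, no reporting"
    VALID_NO_REPORTING = "Valid Records Update, No Reporting (Request Red)"
    VALID_REPORTING = "Valid Records Update, Reporting Possible (Request Green)"


def run_load(records, is_valid, mode):
    """Return (loaded_rows, error_stack, request_status) for one toy load."""
    good = [r for r in records if is_valid(r)]
    bad = [r for r in records if not is_valid(r)]
    if not bad:
        return good, [], "green"
    if mode in (ErrorHandling.DEACTIVATED, ErrorHandling.NO_UPDATE_NO_REPORTING):
        # Load stops; nothing reaches the error stack, so everything is reloaded.
        return [], [], "red"
    if mode is ErrorHandling.VALID_NO_REPORTING:
        # Valid rows loaded, but not reportable until QM status is set to green.
        return good, bad, "red"
    # Valid rows loaded and reportable; bad rows wait in the error stack.
    return good, bad, "green"
```

Only the two "Valid Records Update" options keep the erroneous records in the error stack, where an error DTP can later update them.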

B. DTP Settings to Increase the Loading Performance

Number of Parallel Processes:

We can define the number of processes to be used by the DTP.

Open the DTP and, from the Goto menu, choose Settings for Batch Manager.

The following screen appears (screenshot not preserved).

Here the number of processes is set to 3, so 3 data packages are processed in parallel.

We can also change the job priority. There are three priority classes for background jobs:

i) A: High priority
ii) B: Medium priority
iii) C: Low priority
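The effect of setting the number of processes to 3 can be sketched in Python with a thread pool capped at three workers. Threads here are only a stand-in for SAP background work processes, and the package "work" is a placeholder sum; `run_packages` is a hypothetical name.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_packages(packages, parallel_processes=3):
    """Process packages with at most `parallel_processes` workers.

    Returns the per-package results and the peak number running at once.
    """
    lock = threading.Lock()
    active = 0
    peak = 0

    def process(package):
        nonlocal active, peak
        with lock:
            active += 1
            peak = max(peak, active)
        try:
            time.sleep(0.01)     # stand-in for transformation and update work
            return sum(package)  # stand-in result for the package
        finally:
            with lock:
                active -= 1

    with ThreadPoolExecutor(max_workers=parallel_processes) as pool:
        results = list(pool.map(process, packages))  # map keeps input order
    return results, peak
```

However many packages there are, no more than three are in flight at the same time, which mirrors "3 data packages are processed in parallel".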

C. Handle Duplicate Records Key:

When loading to a DSO, we can eliminate duplicate records by selecting the option "Unique Data Records". When loading master data, duplicates can be handled by selecting the Handle Duplicate Record Keys option in the DTP. With this option selected, the master data record is overwritten for time-independent master data, and multiple entries are created for time-dependent master data.
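The two outcomes can be sketched with a dictionary keyed by the master data key. The key choice is an assumption for illustration: the characteristic value alone for time-independent data, and the value plus its valid-to date for time-dependent data. `apply_master_data` is a hypothetical name.

```python
def apply_master_data(records, time_dependent):
    """Toy master-data update with duplicate-key handling switched on."""
    table = {}
    for record in records:
        # Assumed key: characteristic value alone, or value plus valid-to date.
        key = (record["key"], record["date_to"]) if time_dependent else record["key"]
        table[key] = record  # a later duplicate overwrites an earlier one
    return table
```

Two records for `M1` with different validity periods collapse into one entry when the data is time-independent, but remain two entries when it is time-dependent.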

3. EXECUTE TAB

A. Automatically Repeat Red Request in Process Chain (new option in BI 7.3)

Sometimes a DTP load fails with one-off errors such as RFC connection errors, missing packets or database connection issues. These are usually resolved by repeating the failed DTP load. BW 7.3 adds an option on the Execute tab that, when checked, automatically repeats red requests in process chains. I think this is a cool feature that saves time and effort in monitoring data loads.

Automatically Repeat Red Requests in Process Chains: If a data transfer process (DTP) produced a request containing errors during the previous run of a periodically scheduled process chain, this indicator is evaluated the next time the process chain is started.

How it works: If the indicator is set, the previous request that contains errors is automatically deleted and a new one is started. If the indicator is not set, the DTP terminates and an error message explains that no new request can be started until the previous request is either repaired or deleted.
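The indicator check at process-chain start can be sketched as a small Python function. It assumes the previous request status is a plain string and records actions in a log list; `start_dtp_request` and `PreviousRequestRed` are hypothetical names.

```python
class PreviousRequestRed(RuntimeError):
    """Raised when a red request blocks the next DTP run."""


def start_dtp_request(previous_status, auto_repeat, log):
    """Toy version of the indicator check when the process chain starts."""
    if previous_status == "red":
        if not auto_repeat:
            raise PreviousRequestRed(
                "No new request can be started until the previous request "
                "is repaired or deleted")
        log.append("deleted previous red request")
    log.append("started new request")
```

With the indicator set, a red request is deleted and replaced without manual intervention; without it, the chain stops until someone repairs or deletes the red request.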

Hope it helps.
