
International Journal of Advanced Robotic Systems
Int. J. Adv. Robot. Syst., 2013, Vol. 10, 307:2013 (www.intechopen.com)

Mobile Ground-Based Radar Sensor for Localization and Mapping: An Evaluation of Two Approaches

Regular Paper

Damien Vivet (1,2), Franck Grossier (1,2), Paul Checchin (1,2,*), Laurent Trassoudaine (1,2) and Roland Chapuis (1,2)

1 Institut Pascal, Université Blaise Pascal, Clermont Université, Clermont-Ferrand, France
2 Institut Pascal, CNRS, UMR 6602, Aubière, France
* Corresponding author E-mail: paul.checchin@univ-bpclermont.fr

Received 2 Oct 2012; Accepted 10 May 2013

DOI: 10.5772/56636

© 2013 Vivet et al.; licensee InTech. This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/3.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract This paper addresses simultaneous localization and mapping for robotic applications that use a ground-based radar sensor. In mobile robotics, radar is attractive because of its long range and because radar waves are robust to atmospheric conditions. These properties make radar sensors well suited to extended outdoor robotic applications. Two localization and mapping approaches are presented and compared, both using data from a microwave radar sensor with a 360° field of view. The first method is a trajectory-oriented simultaneous localization and mapping technique. It makes no landmark assumptions and avoids the data association problem. To estimate the ego-motion, it registers successive radar images with the Fourier-Mellin transform, from which the rotation and translation of the sensor can be estimated. The second approach exploits a consequence of using a rotating range sensor in high-speed robotics. In that situation, the combined movements of the sensor and the vehicle create distortions in the collected data. Velocimetry is achieved here by explicitly analysing these measurement distortions. From the estimated velocities, the trajectory of the vehicle can be obtained, followed by the radar map of the outdoor environment. Both methods are evaluated on real-world data from a vehicle moving at 30 km/h over a 2.5 km course.

Keywords Radar Sensor, Mapping, Localization, Fourier-Mellin Transform, Distortion

1. Introduction

The autonomy of robots is directly linked to their capability to perceive their environment. Simultaneous Localization and Mapping (SLAM) techniques associate perception and movement. They are particularly interesting because they give vehicles such as robots a high degree of autonomy. In outdoor environments, climatic constraints and the operational context (dust, lighting conditions, rain, etc.) expose the limits of the usual perception systems. This work proposes radar as an alternative to existing perception systems for ground mobile robots. Radar works in all weather, day and night, which makes it very attractive for outdoor applications. The main contribution of this work is the combined use of radar and SLAM techniques in mobile ground robotics.

SLAM has been studied intensively over the past two decades. For a broad and quick review of the approaches developed to handle this level of complexity in autonomous mobile robot applications, the reader can consult [1-4]. Localization and mapping in large outdoor environments depend on efficient and robust perception sensors. Two requirements matter most: maximum range and tolerance of environmental conditions. Lasers and cameras are well-suited sensors for environment perception [5-7]. However, their strong sensitivity to atmospheric conditions has, among other reasons, raised interest in mapping and localization methods that work with data from other sensors, such as radar or sonar [8].

In this paper, two SLAM techniques are presented that use data from a radar sensor with a 360° field of view. This radar is based on Frequency Modulated Continuous Wave (FMCW) technology [9]. Section 2 reviews work related to our research in outdoor mapping and ego-motion estimation. Section 3 briefly presents the microwave radar scanner we used, which was developed by a team from the CEMAGREF Institute (IRSTEA) working on environmental sciences and technologies. Section 4 deals with a scan-matching approach to localization and mapping. Section 5 presents an approach that analyses the distortion caused by movement. This analysis extracts information about the robot's trajectory and then builds the radar map of outdoor environments. Finally, section 6 presents experimental results.

2. Related work

2.1 Outdoor mapping

Laser sensors have been widely used to perform outdoor SLAM [10]. For localization and map building, the input range data are processed with geometric feature extraction and scan correlation techniques. Less research has used sensors such as underwater sonar [8] and Frequency Modulated Continuous Wave (FMCW) radar. Large-area SLAM has already been carried out successfully for vision-based underwater missions over long distances [11, 12]. Interestingly, Clark already used a radar sensor in [13] at the end of the last century. The environment contained a small number of well-separated, highly reflective beacons. Experiments with this sensor provided a solution to the SLAM problem [2] using an extended Kalman filter framework and a landmark-based approach. In [14], a model dedicated to the radar was developed to build an occupancy grid map. Finally, [15] presented a method for building a map with sensors that return both range and received signal power information. That work analyses an outdoor occupancy grid map for a 30 m vehicle trajectory.

A common method of pose estimation for mobile robots is scan matching [16]. Solving for the rigid transformation between consecutive scans from a range sensor gives the robot's motion in the time period between the scans. One of the most popular scan-matching approaches is the Iterative Closest Point (ICP) algorithm [17]. ICP finds the transformation between scans iteratively. At each iteration, it assumes that every point in the first scan corresponds to its closest point in the second scan, then computes a closed-form solution from these correspondences. However, sparse and noisy data, such as those from an imaging radar, can cause ICP to fail. A single noisy reading can significantly affect the computed transformation, so the estimated robot pose drifts over time. Moreover, the different possible combinations of radar signals are well known to introduce the speckle effect, which can lead to ghost detections or false disappearances. To overcome this problem, our first approach uses the Fourier-Mellin transform to register images in a sequence [18, 19] and estimate the rotation and translation of the radar sensor motion (see section 4). Using the Fourier-Mellin transform in the context of scan-matching SLAM is original. It provides an accurate and efficient way of computing the rigid transformation between consecutive scans (see section 6). It is a global method that takes into account the contributions of both the range and the power information of the radar image. In this respect, the global approach is also close to the histogram correlation technique [10].
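For reference, the point-to-point ICP scheme described above can be sketched as follows. This is a generic textbook formulation for 2D point sets, given for illustration only. It is not part of either method proposed in this paper, and the function names (`best_rigid_2d`, `icp_2d`) are hypothetical. Each iteration pairs every point with its nearest neighbour by brute force and then solves the closed-form least-squares rotation and translation.

```python
import math


def best_rigid_2d(src, dst):
    """Closed-form least-squares (theta, tx, ty) such that dst ~ R(theta) src + t."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        s_cos += ax * bx + ay * by  # sum of dot products of centred pairs
        s_sin += ax * by - ay * bx  # sum of 2D cross products of centred pairs
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(src, dst, iterations=20):
    """Point-to-point ICP: accumulated (theta, tx, ty) aligning src onto dst."""
    theta, tx, ty = 0.0, 0.0, 0.0
    cur = list(src)
    for _ in range(iterations):
        # Assume each current point corresponds to its closest point in dst.
        pairs = [min(dst, key=lambda q, p=p: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                 for p in cur]
        d_theta, d_tx, d_ty = best_rigid_2d(cur, pairs)
        c, s = math.cos(d_theta), math.sin(d_theta)
        cur = [(c * x - s * y + d_tx, s * x + c * y + d_ty) for x, y in cur]
        # Compose the increment with the running estimate: T <- dT o T.
        tx, ty = c * tx - s * ty + d_tx, s * tx + c * ty + d_ty
        theta += d_theta
    return theta, tx, ty
```

The nearest-neighbour step is where noisy radar returns cause trouble. A single spurious point can capture wrong correspondences and bias the closed-form solution.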

2.2 Ego-motion estimation

Estimating a vehicle's displacement, or ego-motion, is a widely studied problem in mobile robotics. Most applications rely on proprioceptive data from odometers, gyrometers and IMUs, or on other positioning systems such as GPS [20]. However, some research works have tried to estimate motion using only exteroceptive data. The authors of [21-23] proposed visual odometry without proprioceptive data. Tipaldi and Ramos [24] proposed filtering out moving objects before estimating the ego-motion. In such an approach, the exteroceptive ego-motion sensor augments classical proprioceptive sensors rather than replacing them. Classical displacement measurements also have limitations. Inertial sensors are prone to drift, and wheel odometry is unreliable in rough terrain because wheels tend to slip and sink. As a consequence, visual odometry approaches are widely studied [25, 26]. In an underwater or naval environment, for example, classical ego-motion techniques are not suitable. In [27], an ego-motion technique based on visual SLAM fused with an IMU was proposed. To find displacement with exteroceptive sensors such as range finders, scan matching is again the common method [8, 28]. However, each scan is corrected with proprioceptive sensors, especially when the sensor is slow. In all scan-matching work, distortion is taken into account only as a disturbance to be corrected.

The only work that treats distortion as a source of information used a specific camera with a rolling shutter. In [29], Ait-Aider et al. computed the instantaneous 3D pose and velocity of fast-moving objects from a single camera image. In their context, however, prior knowledge of the observed object is required. In mobile robotics, we have no a priori knowledge of the robot's surroundings. To the best of our knowledge, no previous work in the mobile robotics literature uses distortion as a source of information for odometry. The goal of this second approach is therefore to study and use data distortion from a mobile ground-based panoramic radar sensor in field robotics. It also aims to show that this distortion is useful for localization and mapping [30].

3. The K2Pi radar

The radar used here relies on the frequency modulated continuous wave (FMCW) technique [9]. This FMCW radar is called K2Pi ("2π" for panoramic, in the K band). A rotating antenna gives it complete 360° monitoring around the vehicle once per second, with an angular resolution of 3°, over the 3-100 m range. A general view of the radar is shown in Fig. 1 and its main characteristics are listed in Table 1. Two example radar images are shown in Fig. 2. Variations of shading indicate variations of amplitude in the power spectra.
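The range figures of Table 1 can be checked against the standard FMCW relations. A linear chirp of bandwidth B swept over a duration T maps a static target at range R to a beat frequency f_b = 2BR/(cT). Two targets can be separated only if they are at least c/(2B) apart. The short sketch below evaluates these textbook relations. The sweep duration in the example is a hypothetical value, because this paper does not give it.

```python
C = 299_792_458.0  # speed of light (m/s)


def range_resolution(bandwidth_hz):
    """Textbook FMCW range resolution c / (2B)."""
    return C / (2.0 * bandwidth_hz)


def range_to_beat(range_m, bandwidth_hz, sweep_s):
    """Beat frequency of a static target for a linear chirp of slope B / T."""
    return 2.0 * bandwidth_hz * range_m / (C * sweep_s)


def beat_to_range(beat_hz, bandwidth_hz, sweep_s):
    """Inverse relation: range of a static target from its beat frequency."""
    return C * sweep_s * beat_hz / (2.0 * bandwidth_hz)


if __name__ == "__main__":
    B = 250e6        # bandwidth from Table 1
    T_SWEEP = 1e-3   # hypothetical sweep duration (not given in the paper)
    print(f"range resolution: {range_resolution(B):.3f} m")  # about 0.6 m
    fb = range_to_beat(50.0, B, T_SWEEP)
    print(f"beat frequency at 50 m: {fb / 1e3:.1f} kHz")
```

With the 250 MHz bandwidth, c/(2B) is about 0.6 m, which agrees with the distance resolution listed in Table 1.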

4. FMT-RSLAM: Fourier-Mellin transform-based radar SLAM

To overcome the complexity of radar image analysis, we developed a trajectory-oriented EKF-SLAM technique that uses data from the radar sensor's 360° field of view. This process makes no landmark assumptions and avoids the data association problem. To estimate the ego-motion, the method registers successive radar images with the Fourier-Mellin transform, from which the rotation and translation of the sensor can be estimated [31]. Using the Fourier-Mellin transform in the context of scan-matching SLAM is original. It provides an accurate and efficient way of computing the rigid transformation between consecutive scans.

4.1 Radar scan-matching SLAM

Our SLAM formulation estimates the vehicle trajectory, defined by the estimated state

$$\mathbf{x}(k)=\left[\mathbf{x}_{v_0}^T,\ \ldots,\ \mathbf{x}_{v_{k-1}}^T,\ \mathbf{x}_{v_k}^T\right]^T,$$

where $\mathbf{x}_{v_i}=[x_i,\ y_i,\ \phi_i]^T$ is the state vector describing the location and orientation of the vehicle at time $t_i$. There is no explicit map. Instead, each pose estimate has an associated scan of raw sensed data, and these scans can later be aligned to form a global map.

Scan matching is the process of translating and rotating a radar scan until it overlaps another scan as much as possible. Assuming that the alignment errors are approximately Gaussian, a new vehicle pose is added to the SLAM map simply by appending it to the SLAM state vector.

Observations are therefore associated with each pose. They are compared and registered to provide potential constraints on the global map of vehicle poses. These constraints are useful for motion-based state augmentation and are also essential for loop closing.

The estimator used here is the extended Kalman filter (EKF). A noisy control input $\mathbf{u}(k+1)$ is measured at time $t_{k+1}$ from a gyrometer and odometers. From it, the new vehicle pose $\mathbf{x}_{v_{n+1}}(k+1|k)$ and its covariance matrix $\mathbf{P}_{v_{n+1}}(k+1|k)$ are computed. The predicted global state vector $\mathbf{x}(k+1|k)$ and its covariance matrix $\mathbf{P}(k+1|k)$ are then augmented as follows:

$$\mathbf{x}(k+1|k)=\begin{bmatrix}\mathbf{x}(k|k)\\ \mathbf{x}_{v_{n+1}}(k+1|k)\end{bmatrix}=\begin{bmatrix}\mathbf{x}_{v_0}(k|k)\\ \vdots\\ \mathbf{x}_{v_n}(k|k)\\ \mathbf{x}_{v_n}(k|k)\oplus\mathbf{u}(k+1)\end{bmatrix},$$

$$\mathbf{P}(k+1|k)=\begin{bmatrix}\mathbf{P}(k|k) & \mathbf{P}(k|k)\,\nabla\mathbf{x}_{v_{n+1}}^{T}\\ \nabla\mathbf{x}_{v_{n+1}}\,\mathbf{P}(k|k) & \mathbf{P}_{v_{n+1}}(k+1|k)\end{bmatrix},$$

where $\nabla\mathbf{x}_{v_{n+1}}$ denotes the Jacobian of $\mathbf{x}_{v_n}(k|k)\oplus\mathbf{u}(k+1)$ with respect to $\mathbf{x}(k|k)$. The operator $\oplus$ is the displacement composition operator (e.g., [32]).
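A minimal sketch of this augmentation step follows. It assumes planar poses (x, y, φ), a control input u expressed as a displacement relative to the last pose, and covariance matrices stored as nested lists. The helper names (`compose`, `augment`) are hypothetical. The Jacobians are those of the standard SE(2) composition. The covariance of the new pose is computed in the usual way as J1 P J1ᵀ + J2 U J2ᵀ, which is our assumption because the text does not spell it out. The new pose depends only on the last pose and on u, so the cross-covariance needs only the last three columns of P(k|k).

```python
import math


def compose(a, b):
    """Displacement composition a (+) b for planar poses (x, y, phi)."""
    x, y, p = a
    bx, by, bp = b
    return (x + bx * math.cos(p) - by * math.sin(p),
            y + bx * math.sin(p) + by * math.cos(p),
            math.atan2(math.sin(p + bp), math.cos(p + bp)))  # wrap to (-pi, pi]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def augment(x, P, u, U):
    """Append x_{v_n} (+) u to the trajectory state x and extend its covariance P.

    x: flat list [x0, y0, phi0, ..., xn, yn, phin]; P: len(x) x len(x) covariance;
    u: relative displacement (dx, dy, dphi); U: 3 x 3 covariance of u.
    """
    n = len(x)
    last = tuple(x[n - 3:])
    c, s = math.cos(last[2]), math.sin(last[2])
    # Jacobians of last (+) u with respect to the last pose (J1) and to u (J2).
    J1 = [[1.0, 0.0, -u[0] * s - u[1] * c],
          [0.0, 1.0, u[0] * c - u[1] * s],
          [0.0, 0.0, 1.0]]
    J2 = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    P_cols = [row[n - 3:] for row in P]      # columns of P(k|k) for the last pose
    cross = matmul(P_cols, transpose(J1))     # n x 3 cross-covariance block
    P_last = P_cols[n - 3:]                   # 3 x 3 covariance of the last pose
    A = matmul(matmul(J1, P_last), transpose(J1))
    B = matmul(matmul(J2, U), transpose(J2))
    P_new = [[A[i][j] + B[i][j] for j in range(3)] for i in range(3)]
    top = [P[i] + cross[i] for i in range(n)]
    bottom = [[cross[i][r] for i in range(n)] + P_new[r] for r in range(3)]
    return list(x) + list(compose(last, u)), top + bottom
```

The state grows by three entries per pose and the covariance by three rows and columns. No landmark appears in the state.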

$\mathbf{P}_{v_{n+1}}(k+1|k)$ is the covariance of the newly added vehicle state. Let us assume that two scans or images, $I_i$ and $I_j$, have been registered. An observation $\mathbf{T}_{i,j}$ of the rigid transformation between the poses $\mathbf{x}_{v_i}$ and $\mathbf{x}_{v_j}$ in the state vector then exists. A predicted transformation between the two poses can therefore be obtained from the observation model as follows:


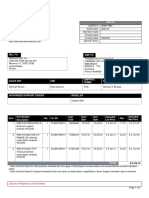

Figure1.TheK2PiFMCWradar

Carrierfrequency F0 24GHz

Transmitterpower Pt 20dBm

AntennagainG 20dB

Bandwidth 250MHz

Scanningrate 1Hz

Angularresolution 3

Angularprecision 0.1

RangeMin/Max 3m/100m

Distanceresolution 0.6m

Distanceprecision(canonicaltargetat100m) 0.05m

Size(lengthwidthheight) 2724

30cm

Weight 10kg

Table1.CharacteristicsoftheK2PiFMCWradar

Figure2.Twoconsecutiveradarimages((a)&(b))obtainedwith

theFMCWradarsensor(seeFig.1)

$$\hat{\mathbf{T}}_{i,j}(k+1|k)=\mathbf{h}\big(\mathbf{x}(k+1|k)\big)=\ominus\,\mathbf{x}_{v_i}(k+1|k)\oplus\mathbf{x}_{v_j}(k+1|k),$$

where the operator $\ominus$ is the inverse transformation operator.

Observations are assumed to follow a model of the form:

$$\mathbf{T}_{i,j}(k+1)=\mathbf{h}\big(\mathbf{x}(k+1)\big)+\mathbf{v}(k+1)=\mathfrak{F}(I_i,\ I_j),$$

in which $\mathfrak{F}$ represents a registration algorithm, $\mathbf{v}(k+1)$ a vector of observation errors, and $\mathbf{h}$ the observation function. The state is then updated with the classical EKF update equations. The transformation $\mathbf{T}_{i,j}$ is found by maximizing a cross-correlation function [33]. This process has been adapted so that it also returns the associated covariance.
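The observation function h above is the relative transformation between two poses of the trajectory. Assuming planar poses (x, y, φ), it can be sketched with the composition operator ⊕ and its inverse ⊖ as below. The function names are hypothetical. The test only checks that predicting a known displacement between two composed poses returns that displacement.

```python
import math


def wrap(angle):
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def compose(a, b):
    """Displacement composition a (+) b for planar poses (x, y, phi)."""
    x, y, p = a
    return (x + b[0] * math.cos(p) - b[1] * math.sin(p),
            y + b[0] * math.sin(p) + b[1] * math.cos(p),
            wrap(p + b[2]))


def inverse(a):
    """Inverse transformation (-)a, such that (-)a (+) a = (0, 0, 0)."""
    x, y, p = a
    return (-x * math.cos(p) - y * math.sin(p),
            x * math.sin(p) - y * math.cos(p),
            wrap(-p))


def predicted_observation(x_i, x_j):
    """h(x): transformation T_ij predicted from poses x_i and x_j of the state vector."""
    return compose(inverse(x_i), x_j)
```

In the EKF update, the innovation is the difference between the registered transformation and this prediction, with the angle difference wrapped in the same way.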

4.2 Fourier-Mellin transform for ego-motion estimation

The next problem is to register two scans in order to determine their relative positions. The choice of algorithm is strongly influenced by the need for real-time operation, so an FFT-based algorithm was chosen to perform scan matching.

Fourier-based schemes can estimate large rotations, scalings and translations. Note that the scale factor is irrelevant in our case. Most DFT-based approaches use the shift property [31] of the Fourier transform, which enables robust estimation of translations using normalized phase correlation.

Two scans that are translated and rotated with respect to each other are matched with the phase correlation method. It relies on the fact that a shift between the coordinate frames of two functions appears in the Fourier domain as a linear phase difference. To handle the rotation as a translational displacement, the images are first transformed into a uniform polar Fourier representation.

It is known that if two images $I_1$ and $I_2$ differ only by a shift $(\Delta x,\Delta y)$, i.e., $I_2(x,y)=I_1(x-\Delta x,\ y-\Delta y)$, then their Fourier transforms are related by:

$$\hat I_2(\omega_x,\omega_y)=e^{-i(\omega_x\Delta x+\omega_y\Delta y)}\,\hat I_1(\omega_x,\omega_y).$$

Hence, the normalized cross-power spectrum is given by

$$\mathrm{Corr}(\omega_x,\omega_y)=\frac{\hat I_2(\omega_x,\omega_y)}{\hat I_1(\omega_x,\omega_y)}=e^{-i(\omega_x\Delta x+\omega_y\Delta y)}.\tag{1}$$

Taking the inverse Fourier transform:

$$\mathrm{Corr}(x,y)=\mathcal{F}^{-1}\big(\mathrm{Corr}(\omega_x,\omega_y)\big)=\delta(x-\Delta x,\ y-\Delta y),$$

which means that $\mathrm{Corr}(x,y)$ is non-zero only at $(\Delta x,\Delta y)=\arg\max_{x,y}\{\mathrm{Corr}(x,y)\}$. If the two images differ by a rotation $\theta_0$ and a translation $(\Delta x,\Delta y)$, then

$$I_2(x,y)=I_1(x\cos\theta_0+y\sin\theta_0-\Delta x,\ -x\sin\theta_0+y\cos\theta_0-\Delta y).$$

Converting from rectangular to polar coordinates makes it possible to represent rotation as a shift. The Fourier transform in polar coordinates is

$$\hat I_2(\rho,\theta)=e^{-i(\omega_x\Delta x+\omega_y\Delta y)}\,\hat I_1(\rho,\ \theta-\theta_0).$$

Let $M_1$ and $M_2$ denote the magnitudes of $\hat I_1$ and $\hat I_2$ respectively. They are related by

$$M_2(\rho,\theta)=M_1(\rho,\ \theta-\theta_0).$$

The shift between the two images can now be resolved using (1).
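The translation-recovery step of (1) can be illustrated with a small pure-Python phase correlation. To avoid dependencies, the sketch uses a naive O(N⁴) 2D DFT; a real implementation would use an FFT library. It assumes circular shifts on a small image, which the DFT models exactly, and returns the integer shift at the correlation peak. It uses the normalized cross-power spectrum, which carries the same phase as the ratio in (1) but avoids dividing by small coefficients. The function names are hypothetical.

```python
import cmath
import random


def dft2(img, inverse=False):
    """Naive 2D discrete Fourier transform (the inverse includes the 1/N scaling)."""
    rows, cols = len(img), len(img[0])
    sign = 1.0 if inverse else -1.0
    out = []
    for u in range(rows):
        out_row = []
        for v in range(cols):
            acc = 0j
            for x in range(rows):
                for y in range(cols):
                    acc += img[x][y] * cmath.exp(sign * 2j * cmath.pi * (u * x / rows + v * y / cols))
            out_row.append(acc / (rows * cols) if inverse else acc)
        out.append(out_row)
    return out


def phase_correlation(img1, img2):
    """Return the circular shift (dx, dy) such that img2[x][y] = img1[x - dx][y - dy]."""
    f1, f2 = dft2(img1), dft2(img2)
    # Normalized cross-power spectrum: keep only the phase difference.
    cross = []
    for r1, r2 in zip(f1, f2):
        row = []
        for a, b in zip(r1, r2):
            z = b * a.conjugate()
            row.append(z / abs(z) if abs(z) > 1e-12 else 0j)
        cross.append(row)
    corr = dft2(cross, inverse=True)
    rows, cols = len(corr), len(corr[0])
    return max(((x, y) for x in range(rows) for y in range(cols)),
               key=lambda p: corr[p[0]][p[1]].real)


if __name__ == "__main__":
    rng = random.Random(0)
    n = 8
    img1 = [[rng.random() for _ in range(n)] for _ in range(n)]
    img2 = [[img1[(x - 2) % n][(y - 3) % n] for y in range(n)] for x in range(n)]
    print(phase_correlation(img1, img2))  # expected shift: (2, 3)
```

Applied to polar magnitude images, the same routine returns the rotation as a shift along the angle axis.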

4.3 Scan registration

The Fourier-Mellin transform (FMT) was chosen to perform scan registration [34, 31]. The FMT is a global method that takes into account the contributions of all points in the images. It recovers all the rigid transformation parameters, i.e., rotation and translation. It is an efficient and accurate method for processing pairs of images that are fairly similar (see Fig. 2). The steps of the scan registration algorithm are presented in Algorithm 1.

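Input: Radar images $I_k$ and $I_{k+1}$

1. Apply the thresholding filter to eliminate the speckle noise in both images.
2. Apply the FFT to the images: $I_k \rightarrow \hat I_k$ and $I_{k+1} \rightarrow \hat I_{k+1}$.
3. Compute the magnitudes $|\hat I_k|$ and $|\hat I_{k+1}|$.
4. Transform the resulting values from rectangular to polar coordinates, $|\hat I_k|(\rho,\theta)$ and $|\hat I_{k+1}|(\rho,\theta)$, using bilinear interpolation.
5. Apply the FFT to the polar images, giving $\hat I_k(\omega_\rho,\omega_\theta)$ and $\hat I_{k+1}(\omega_\rho,\omega_\theta)$.
6. Compute $\mathrm{Corr}(\omega_\rho,\omega_\theta)$ between $\hat I_k(\omega_\rho,\omega_\theta)$ and $\hat I_{k+1}(\omega_\rho,\omega_\theta)$ using (1).
7. Compute $\mathrm{Corr}(\rho,\theta)=\mathcal{F}^{-1}\big(\mathrm{Corr}(\omega_\rho,\omega_\theta)\big)$.
8. Find the location of the maximum of $\mathrm{Corr}(\rho,\theta)$ and obtain the rotation value.
9. Construct $I^r_{k+1}$ by applying the reverse rotation to $I_{k+1}$.
10. Apply the FFT to image $I^r_{k+1}$.
11. Compute the correlation $\mathrm{Corr}(\omega_x,\omega_y)$ using (1).
12. Take the inverse FFT $\mathrm{Corr}(x,y)$ of $\mathrm{Corr}(\omega_x,\omega_y)$.
13. Return the shift $(\Delta x,\Delta y)$ and the rotation value.

Algorithm 1. Scan registration algorithm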
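Steps 4 and 5 resample the Fourier magnitude on a polar grid, so that a rotation of the image becomes a circular shift along the angle axis. A minimal sketch of this resampling with bilinear interpolation follows. The grid sizes, the use of the image centre as origin (as for a centred spectrum) and the function names are assumptions made for illustration.

```python
import math


def bilinear(img, x, y):
    """Bilinearly interpolate img (list of rows, img[row][col]) at column x, row y."""
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1 = min(x0 + 1, len(img[0]) - 1)
    y1 = min(y0 + 1, len(img) - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1 - fy) * top + fy * bottom


def to_polar(img, n_rho, n_theta):
    """Resample img on an (n_rho x n_theta) polar grid centred on the image centre.

    Row i corresponds to radius i * r_max / n_rho and column j to angle 2*pi*j / n_theta,
    so a rotation of img becomes a circular shift of the columns.
    """
    cy, cx = (len(img) - 1) / 2.0, (len(img[0]) - 1) / 2.0
    r_max = min(cx, cy)
    polar = []
    for i in range(n_rho):
        rho = r_max * i / n_rho
        polar.append([bilinear(img,
                               cx + rho * math.cos(2 * math.pi * j / n_theta),
                               cy + rho * math.sin(2 * math.pi * j / n_theta))
                      for j in range(n_theta)])
    return polar
```

The polar image can then be passed to a phase correlation routine. The column shift of the peak, multiplied by 2π/n_theta, gives the rotation estimate.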

5. ROLAM: radar-only localization and mapping

In robotics, the scan of a range sensor is usually assumed to be a collection of depth measurements taken from a single robot position. This assumption is acceptable for lasers, which are much faster than radar sensors and can be considered instantaneous compared with the dynamics of the vehicle. However, when the robot moves at high speed, the assumption usually fails: significant distortion appears and cannot be ignored. With a laser range finder scanning at 75 Hz, distortion exists but is ignored. This simplification is valid for low-speed applications. Nevertheless, a vehicle moving straight ahead at 5 m/s already produces a 7 cm distortion. At classical road vehicle speeds (in cities, on roads or on motorways), larger distortions can be observed. The rotation of the vehicle during the acquisition is another source of disturbance, which cannot be neglected for high-speed displacement or with slow sensors (cf. Fig. 3). Finally, note that when the sensor is too slow, a stop & scan method is often applied [35].

For example, in the radar mapping application of section 4, the sensor delivers one panoramic radar image per second. When the robot moves straight ahead at a low speed of 5 m/s, the panoramic image contains a 5-metre distortion. That approach handles the distortion with proprioceptive sensors, which estimate the trajectory and build a virtual scan taken from a single position. In other words, distortion is treated as noise and filtered out. Our second approach instead uses distortion as a source of information about the displacement of the vehicle. The objective is not to remove distortion but to extract movement information from it.
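The orders of magnitude above follow from a simple bound. For straight-line motion at speed V, the vehicle travels V/f during one revolution of a sensor rotating at frequency f, so the first and last beams of a scan are taken that far apart. The small sketch below reproduces the laser (75 Hz) and radar (1 Hz) figures at 5 m/s. As an illustration, it also gives the value at the 30 km/h mean speed of the experiment in section 6.

```python
def scan_distortion(speed_mps, scan_rate_hz):
    """Distance travelled during one full sensor revolution (straight-line motion)."""
    return speed_mps / scan_rate_hz


if __name__ == "__main__":
    print(f"laser, 75 Hz, 5 m/s  : {scan_distortion(5.0, 75.0) * 100:.1f} cm")
    print(f"radar,  1 Hz, 5 m/s  : {scan_distortion(5.0, 1.0):.1f} m")
    print(f"radar,  1 Hz, 30 km/h: {scan_distortion(30 / 3.6, 1.0):.2f} m")
```

This gives about 6.7 cm, 5 m and 8.3 m respectively. At the speeds used with the radar, a scan cannot be treated as taken from a single pose.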

5.1 Distortion problem statement

Distortion is the alteration of the data, as observed in our radar images (cf. Fig. 3). With a rotating range sensor, the ideal acquisition would represent the surroundings of the robot at a single time t. When the sensor rotates slowly compared with the vehicle's motion, the robot's location changes during the acquisition period, and the image is distorted. This effect is shown on real and simulated data in Figs. 3 & 4.

Without prior knowledge of the environment, a single scan from a single sensor cannot reveal distortion. This is one reason why distortion is usually treated as noise and corrected with proprioceptive sensors. This second approach instead measures the distortion of the data by comparing successive radar images, in order to extract information about the robot's movement. With a rotating sensor and no knowledge of the shape of the environment, two successive observations are required. We assume that the velocity of the vehicle is locally constant during two successive measurements. The pose of each measurement is directly linked to the observation pose and to the angle of observation. Under the constant velocity model of the vehicle, this pose is a function only of the robot's linear and angular speeds.

Data distortion results from the combined movements of the sensor and the vehicle. The distortion can be described by the parametric equation of a trochoid, as shown in Fig. 4(a). At time t, the position of a detection made at range $\rho$ is a function of the centre pose $(x_t^c,\ y_t^c,\ \phi_t^c)$ and of the sensor bearing $\theta_t$:

$$\begin{bmatrix}x\\ y\end{bmatrix}=\underbrace{\begin{bmatrix}x_t^c\\ y_t^c\end{bmatrix}}_{\text{A) centre position at time }t}+\underbrace{\begin{bmatrix}\cos\phi_t^c & -\sin\phi_t^c\\ \sin\phi_t^c & \cos\phi_t^c\end{bmatrix}}_{\text{B) centre rotation}}\underbrace{\begin{bmatrix}\rho\cos\theta_t\\ \rho\sin\theta_t\end{bmatrix}}_{\text{C) detection position at time }t}\tag{2}$$

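To obtain the centre pose at time t, we formulate an evolution model based on the linear ($V$) and angular ($\omega$) velocities. The pose $(x_t^c,\ y_t^c,\ \phi_t^c)$ is obtained as follows:

$$\begin{bmatrix}x_t^c\\ y_t^c\end{bmatrix}=\begin{bmatrix}x_0^c\\ y_0^c\end{bmatrix}+\frac{2V}{\omega}\sin\!\left(\frac{\omega t}{2}\right)\begin{bmatrix}\cos\!\left(\phi_0^c+\frac{\omega t}{2}\right)\\ \sin\!\left(\phi_0^c+\frac{\omega t}{2}\right)\end{bmatrix},\qquad \phi_t^c=\phi_0^c+\omega t.\tag{3}$$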

This parametric distortion equation can be used either to distort or to undistort the measurements. It is parameterized by the linear and angular velocities $V$ and $\omega$. Given a prior estimate of these parameters, a detection $(\rho,\theta)$ made at time $t$ in the sensor frame can be transformed into the world frame with (3) and (2) (cf. Fig. 5).

The objective is to estimate proprioceptive information, namely the velocities $V$ and $\omega$ that best undistort the measurements.
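Equations (2) and (3) translate directly into code. The sketch below computes the centre pose under the constant-velocity assumption, using the straight-line limit when ω = 0 (where (2V/ω) sin(ωt/2) tends to Vt). It then places a detection made at range ρ and bearing θ. The function and variable names are illustrative only.

```python
import math


def centre_pose(x0, y0, phi0, v, omega, t):
    """Centre pose at time t for constant linear (v) and angular (omega) velocities, eq. (3)."""
    if abs(omega) < 1e-12:
        chord = v * t                      # straight-line limit of (2v/omega) sin(omega t / 2)
    else:
        chord = 2.0 * v / omega * math.sin(omega * t / 2.0)
    heading = phi0 + omega * t / 2.0       # the chord points along the mean heading
    return (x0 + chord * math.cos(heading),
            y0 + chord * math.sin(heading),
            phi0 + omega * t)


def detection_position(centre, rho, theta):
    """World position of a detection at range rho and sensor bearing theta, eq. (2)."""
    xc, yc, phic = centre
    lx, ly = rho * math.cos(theta), rho * math.sin(theta)   # point in the sensor frame
    return (xc + math.cos(phic) * lx - math.sin(phic) * ly,
            yc + math.sin(phic) * lx + math.cos(phic) * ly)
```

Evaluating these two functions with the bearing θ = ω_sensor·t over one revolution traces the trochoid-shaped distortion of Fig. 4(a).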

5.2 Proprioceptive information extraction

Since the velocity of the vehicle is assumed locally constant during two successive measurements, the effective velocities of the robot are those that, through the distortion formulation, make detections of the same object coincide in the world frame.

Figure 3. Distorted data from a real sensor: radar data (a) with distortion effect, (b) without any distortion effect

Figure 4. (a) Distortion phenomenon: while the vehicle moves along the green trajectory, the sensor is scanning. The sensor beams are shown in red and blue for the first and second radar acquisitions respectively. The first and last beams of each acquisition do not measure the same thing, and each scan is distorted by the movement. (b) The desired acquisition from the second position (or with the stop & scan method), with corrected beam $z'^{\,t_i}_j$ from scan $j$ taken at timestamp $t_i$. (c) The measurements obtained while moving, with the equivalent uncorrected detection $z^{t_i}_j$


Figure 5. (a) Data obtained in the sensor frame without considering distortion. (b) Undistorted data based on the distortion formulation

The pose of each measurement is directly linked to the observation pose and to the angle of observation. Under the constant velocity model of the vehicle, it is a function only of the robot's linear and angular speeds, see (3). Let $\mathbf{M}_1$ and $\mathbf{M}_2$ be the landmarks that represent the same world point $\mathbf{M}_w$ in their respective distorted scans. $\mathbf{M}_1$ and $\mathbf{M}_2$ can be transformed into the undistorted world frame using the parameters (the linear velocity $V$ and the angular velocity $\omega$) and the distortion functions $f$ and $g$ (cf. Fig. 6). Comparing the projected poses from each acquisition then yields the velocity parameters. This requires data association between images 1 and 2. Radar data are subject to various perturbations (speckle, Doppler, antenna aperture, etc.), so the appearance of a detected object keeps changing from one scan to another. As a consequence, no descriptor is available for data association, which is based only on the Mahalanobis distance between predictions and measurements. The function $h=g^{-1}\circ f$ is unknown because $g^{-1}$ cannot be obtained. Minimization techniques therefore have to be used to estimate $\hat{\mathbf{M}}_1$. Finally, each association can give new values of the velocity parameters.

The robot moves from an initial pose $\mathbf{x}_{v_0}=[x_0,\ y_0]^T$ with an initial orientation $\phi_0$ at a constant velocity $\mathbf{V}_v=[V,\ \omega]^T$ during two successive sensor scans. Each landmark $\mathbf{M}_i=[x_i^M,\ y_i^M]^T$ observed at time $t_i$ is distorted by the robot's displacement. At this step, we suppose that $\mathbf{M}_i$ is the expression of the landmark after Doppler correction. If $\mathbf{M}_d=[x_d^M,\ y_d^M]^T$ is the perturbed detection of landmark $\mathbf{M}_i$ at time $t_d$, the correction is obtained as follows:

$$\mathbf{M}_i=\left(\sqrt{(x_d^M)^2+(y_d^M)^2}+\frac{2V\cos(\omega_{\text{sensor}}t_d)}{\lambda\,\alpha}\right)\begin{bmatrix}\cos(\omega_{\text{sensor}}t_d)\\ \sin(\omega_{\text{sensor}}t_d)\end{bmatrix}\tag{4}$$

where $\lambda$ is the wavelength of the radar signal, $\alpha$ a coefficient that links frequency and distance, and $\omega_{\text{sensor}}$ the rotation rate of the sensor. If no Doppler effect needs to be considered, as is the case with laser sensors, simply set $\mathbf{M}_i=\mathbf{M}_d$.

$\mathbf{M}_1$ and $\mathbf{M}_2$, detected in their respective scans, can then be propagated into the world frame by their respective propagation functions $f$ and $g$:

$$\mathbf{M}_w=f(\mathbf{M}_1,V,\omega)\quad\text{and}\quad\mathbf{M}_w=g(\mathbf{M}_2,V,\omega).\tag{5}$$

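For the first radar image, the function $f$ can be expressed as:

$$\mathbf{M}_w=\mathbf{x}_{v_0}+\frac{2V}{\omega}\sin\!\left(\frac{\omega t_1}{2}\right)\begin{bmatrix}\cos\!\left(\phi_0+\frac{\omega t_1}{2}\right)\\ \sin\!\left(\phi_0+\frac{\omega t_1}{2}\right)\end{bmatrix}+\begin{bmatrix}\cos(\phi_0+\omega t_1) & -\sin(\phi_0+\omega t_1)\\ \sin(\phi_0+\omega t_1) & \cos(\phi_0+\omega t_1)\end{bmatrix}\mathbf{M}_1\tag{6}$$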

with $t_1=\arctan(y_1,\ x_1)/\omega_{\text{sensor}}$. Similarly, for the second scan, $\mathbf{M}_w=g(\mathbf{M}_2,V,\omega)$ is obtained in the same way with $t_2=\big(\arctan(y_2,\ x_2)+2\pi\big)/\omega_{\text{sensor}}$. The function $\arctan$ is defined on $]-\pi;\ \pi]$.

The entire set of detections in the world frame can easily be expressed in matrix form based on (6). These equations show that distortion depends on the velocity parameters $(V,\omega)$, on the landmarks $\mathbf{M}_1$ and $\mathbf{M}_2$ in the two successive scans, on the initial pose of the robot $(\mathbf{x}_{v_0},\phi_0)$ and on the sensor scanning rate $\omega_{\text{sensor}}$. Of these, only the velocities are unknown. They are the only parameters that need to be estimated, and they in turn give the current radar pose.
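Equation (6) and its counterpart for the second scan can be sketched as follows. The sketch assumes a 1 Hz sensor (ω_sensor = 2π rad/s, matching the scanning rate in Table 1), Doppler-corrected detections M = (x, y) in the sensor frame, and a given initial pose (x_0, y_0, φ_0). The function names are hypothetical. f and g differ only in the acquisition time of the beam, which for the second scan is one full revolution later.

```python
import math

OMEGA_SENSOR = 2.0 * math.pi  # rad/s, i.e., one revolution per second (assumed 1 Hz)


def _project(m, v, omega, t, pose0):
    """World position of detection m taken at time t from a vehicle moving at (v, omega)."""
    x0, y0, phi0 = pose0
    if abs(omega) < 1e-12:
        chord = v * t
    else:
        chord = 2.0 * v / omega * math.sin(omega * t / 2.0)
    cx = x0 + chord * math.cos(phi0 + omega * t / 2.0)
    cy = y0 + chord * math.sin(phi0 + omega * t / 2.0)
    phi = phi0 + omega * t
    return (cx + math.cos(phi) * m[0] - math.sin(phi) * m[1],
            cy + math.sin(phi) * m[0] + math.cos(phi) * m[1])


def f(m1, v, omega, pose0=(0.0, 0.0, 0.0)):
    """Propagation of a first-scan detection: t1 = arctan(y1, x1) / omega_sensor."""
    t1 = math.atan2(m1[1], m1[0]) / OMEGA_SENSOR
    return _project(m1, v, omega, t1, pose0)


def g(m2, v, omega, pose0=(0.0, 0.0, 0.0)):
    """Propagation of a second-scan detection: t2 = (arctan(y2, x2) + 2 pi) / omega_sensor."""
    t2 = (math.atan2(m2[1], m2[0]) + 2.0 * math.pi) / OMEGA_SENSOR
    return _project(m2, v, omega, t2, pose0)
```

For a correctly associated static landmark and the true velocities, f(M_1) and g(M_2) coincide. This is the property exploited in section 5.3.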


5.3 Estimation of velocities

To estimate the velocity parameters $[V,\ \omega]^T$, the landmarks of the two successive scans must first be associated. $\hat{\mathbf{M}}_1$ has to be predicted from $\mathbf{M}_1$ (in the first scan) onto the second scan. Because the function $h(\mathbf{M}_1,V,\omega)$ cannot be calculated directly, it is computed with a minimization technique. The cost function $S$ for one landmark is $\|\mathbf{M}_{w2}-\mathbf{M}_{w1}\|^2$, i.e.:

$$S(\hat x_1,\hat y_1)=\left\|g(\hat{\mathbf{M}}_1,V,\omega)-f(\mathbf{M}_1,V,\omega)\right\|^2.\tag{7}$$

A gradient method with adaptive step sizes is used to minimize this cost function. This gives the prediction of the landmarks of the first radar image in the second image, together with its uncertainty ellipse. Each prediction $\hat{\mathbf{M}}_1$ is then associated with a landmark $\mathbf{M}_2$ using a Mahalanobis distance criterion that accounts for the uncertainties of both measurements and predictions.
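The prediction step can be sketched as a small unconstrained minimization of (7) over the predicted position M̂_1 = (x̂, ŷ) in the second scan, with V and ω fixed. The paper states only that a gradient method with adaptive step sizes is used. The sketch below is one such scheme: a central-difference numerical gradient, with the step enlarged after a successful move and halved otherwise. Its constants, the starting point M̂_1 = M_1 and the function names are assumptions. The propagation functions f and g of (6) are repeated so the block is self-contained, assuming a 1 Hz sensor and an initial pose at the origin.

```python
import math

OMEGA_SENSOR = 2.0 * math.pi  # assumed 1 Hz rotating sensor


def _project(m, v, omega, t):
    """World position of detection m taken at time t; initial pose at the origin."""
    chord = v * t if abs(omega) < 1e-12 else 2.0 * v / omega * math.sin(omega * t / 2.0)
    cx, cy = chord * math.cos(omega * t / 2.0), chord * math.sin(omega * t / 2.0)
    phi = omega * t
    return (cx + math.cos(phi) * m[0] - math.sin(phi) * m[1],
            cy + math.sin(phi) * m[0] + math.cos(phi) * m[1])


def f(m, v, omega):
    return _project(m, v, omega, math.atan2(m[1], m[0]) / OMEGA_SENSOR)


def g(m, v, omega):
    return _project(m, v, omega, (math.atan2(m[1], m[0]) + 2.0 * math.pi) / OMEGA_SENSOR)


def predict_in_second_scan(m1, v, omega, step=0.5, iters=1000, tol=1e-14):
    """Approximate h(M1, V, omega) by minimizing S = ||g(M) - f(M1)||^2 over M."""
    target = f(m1, v, omega)

    def cost(m):
        gx, gy = g(m, v, omega)
        return (gx - target[0]) ** 2 + (gy - target[1]) ** 2

    m, s, eps = list(m1), cost(m1), 1e-6
    for _ in range(iters):
        if s < tol:
            break
        grad = [(cost([m[0] + eps, m[1]]) - cost([m[0] - eps, m[1]])) / (2 * eps),
                (cost([m[0], m[1] + eps]) - cost([m[0], m[1] - eps])) / (2 * eps)]
        trial = [m[0] - step * grad[0], m[1] - step * grad[1]]
        s_trial = cost(trial)
        if s_trial < s:          # accept the move and enlarge the step
            m, s, step = trial, s_trial, step * 1.2
        else:                    # reject the move and shrink the step
            step *= 0.5
    return tuple(m), s
```

Only decreasing moves are accepted, so S never increases. The adaptive step removes the need to tune a fixed learning rate for each landmark.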

Because radar data are very noisy (cf. Fig. 2), both landmark extraction and data association can be wrong. For example, the speckle effect, caused by the different possible combinations of radar signals, can lead to ghost detections or false disappearances. Moreover, because of multiple reflections, radar data are not as accurate as laser data. All possible data associations therefore have to be considered.
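Because all plausible associations are kept, the gating step amounts to listing every landmark of the second scan whose squared Mahalanobis distance to a prediction falls below a threshold. A minimal sketch follows. It assumes that each 2 × 2 covariance already combines the prediction and measurement uncertainties. The gate value 5.991 (the 95% quantile of a χ² distribution with two degrees of freedom) is our assumption; the paper gives no value.

```python
def mahalanobis_sq(pred, cov, meas):
    """Squared Mahalanobis distance between a 2D prediction and a 2D measurement."""
    dx, dy = meas[0] - pred[0], meas[1] - pred[1]
    (a, b), (c, d) = cov
    det = a * d - b * c
    # Inverse of the 2x2 covariance: [[d, -b], [-c, a]] / det.
    return (dx * (d * dx - b * dy) + dy * (-c * dx + a * dy)) / det


def candidate_associations(predictions, covariances, landmarks, gate=5.991):
    """All (prediction index, landmark index) pairs that pass the chi-square gate."""
    return [(i, j)
            for i, (p, cov) in enumerate(zip(predictions, covariances))
            for j, m in enumerate(landmarks)
            if mahalanobis_sq(p, cov, m) <= gate]
```

A prediction may therefore keep several candidate landmarks. Each candidate pair contributes a velocity hypothesis, and the inconsistent ones are discarded later by the outlier-removal and fusion stage.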

Two assumptions are made at this point. First, a sufficient proportion of the detections must come from static objects, because detections from moving objects can lead to false velocity estimates. If all the detections from moving objects are coherent and give the same velocity estimate, then in the most pessimistic case at least 50% of the detections in the environment must come from static objects.

Figure 6. Principle of distortion analysis: the detected landmark in each scan in red, and the corresponding landmark in the real world; the predicted detection pose from scan 1 onto scan 2 in green

Figure 7. Trajectories obtained by the two methods: in black the DGPS reference trajectory, in red (dot-dashed curve) the trajectory obtained by the FMT-RSLAM approach and in blue (star-dashed curve) the ROLAM trajectory

Second, the vehicle carrying the radar sensor is assumed to move at a constant velocity ($V$ and $\omega$) during two consecutive acquisitions. When the vehicle accelerates or decelerates, the estimated velocity is the mean velocity of the vehicle over that interval.

For each data association allowed by the Mahalanobis distance, a new estimate of the robot's velocity is computed and passed to an extended Kalman filter. The updated speeds are then projected into the velocity space with their respective uncertainties. In this space, the global coherence of the scene is obtained by fusing all the consistent estimates. Before fusion, outliers (i.e., wrong detections and associations) are removed with a RANSAC process, on the assumption that most detections are static and well associated. The fusion uses the covariance intersection (CI) method [36]. CI is more pessimistic, and therefore safer, if some wrong votes remain during fusion.
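Covariance intersection fuses two estimates without knowing their cross-correlation. The fused information matrix is a convex combination of the two information matrices, with the weight chosen to minimize a size measure of the result. The sketch below fuses two 2D velocity estimates (V, ω) and chooses the weight by a simple grid search that minimizes the trace. The grid search, the trace criterion and the names are assumptions, and the RANSAC outlier-removal step is not shown.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def covariance_intersection(xa, pa, xb, pb, steps=100):
    """Fuse (xa, pa) and (xb, pb); return (x, P, w) with the smallest trace(P) on the grid."""
    ia, ib = inv2(pa), inv2(pb)
    best = None
    for k in range(steps + 1):
        w = k / steps
        # P^-1 = w Pa^-1 + (1 - w) Pb^-1
        info = [[w * ia[r][c] + (1 - w) * ib[r][c] for c in range(2)] for r in range(2)]
        p = inv2(info)
        if best is None or p[0][0] + p[1][1] < best[1][0][0] + best[1][1][1]:
            ya, yb = matvec(ia, xa), matvec(ib, xb)
            # x = P (w Pa^-1 xa + (1 - w) Pb^-1 xb)
            x = matvec(p, [w * ya[0] + (1 - w) * yb[0], w * ya[1] + (1 - w) * yb[1]])
            best = (x, p, w)
    return best
```

Unlike a standard Kalman fusion, the result is never more confident than the data justify when the two estimates share errors. This is why CI suits votes that may still contain residual wrong associations.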

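| Error | Method | Mean | Std |
|---|---|---|---|
| Positioning error (m) | ROLAM | 10.4721 | 11.2428 |
| Positioning error (m) | FMT-RSLAM | 12.9435 | 14.1574 |
| Displacement error (m) | ROLAM | 0.20261 | 0.69689 |
| Displacement error (m) | FMT-RSLAM | 0.092042 | 0.37894 |
| Rotational error (deg) | ROLAM | 0.002779 | 0.14556 |
| Rotational error (deg) | FMT-RSLAM | 0.02757 | 0.11151 |

Table 2. Trajectory evaluation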


Figure 8. Translational transformation norm and estimation of rotation for both approaches. (a) Norm of the translational displacement at each step, (b) norm of the translational displacement error, (c) value of rotation at each step, (d) error in rotation

Figure 9. (a) Aerial Google view. (b) Radar map obtained by the FMT-RSLAM process. (c) Radar map obtained by the ROLAM process

9 Damien Vivet, Franck Grossier, Paul Checchin, Laurent Trassoudaine and Roland Chapuis:

Mobile Ground-Based Radar Sensor for Localization and Mapping: An Evaluation of two Approaches

www.intechopen.com

6. Experimental results

This section provides the experimental results of both radar-based approaches. The radar and the proprioceptive sensors were mounted on a utility car. The experimental run presented here was conducted outdoors, around the Auvergne Zenith car park (cf. aerial view in Fig. 9(a)), in a semi-structured environment (buildings, trees, roads, road signs, etc.). The K2Pi radar was mounted on top of the vehicle, two metres above the ground. A 2.5 km trajectory was travelled at a mean speed of 30 km/h. To assess the performance of each approach, a DGPS system was used to provide a reference trajectory. Radar images as well as the data obtained from the other sensors were recorded and post-processed as explained previously.
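A quick calculation gives the order of magnitude of the motion between scans, assuming the vehicle holds its 30 km/h mean speed and using the 1 Hz radar frequency given later in this section. These are rounded back-of-the-envelope figures, not measured values:

$$
v = \frac{30\,000\ \text{m}}{3\,600\ \text{s}} \approx 8.33\ \text{m/s},\qquad
d_{\text{scan}} = v\,T_{\text{scan}} \approx 8.33\ \text{m/s} \times 1\ \text{s} \approx 8.3\ \text{m},\qquad
t_{\text{run}} \approx \frac{2\,500\ \text{m}}{8.33\ \text{m/s}} \approx 300\ \text{s}.
$$

The vehicle therefore moves several metres during a single antenna revolution, and the run comprises roughly 300 scans.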

The trajectories obtained by the FMT-R-SLAM and ROLAM algorithms are presented in Fig. 7, in red (dot-dashed curve) and blue (star-dashed curve) respectively, and the reference trajectory is plotted in black. Since no loop closure is performed, the error accumulates along the whole trajectory; the growing positioning error therefore represents the drift of each process.

For a more meaningful evaluation of the results, relative displacements between successive robot poses are studied. Figure 8(a) shows the norm of the translational displacement at each step for the two approaches and for the reference, and Fig. 8(b) shows the corresponding estimation error. Because of the initial acceleration, a peak can be observed at the beginning of the translational error plot for the ROLAM approach; this effect is less pronounced with the FMT-R-SLAM method. The angular comparison is shown in Fig. 8(c) and (d). Peaks appear in the estimated rotation angle at about t = 90 s and t = 135 s (and, to a lesser extent, in translation). These errors are due to driving around roundabouts at high speed, which violates the constant angular velocity assumption; as a consequence, the angular velocity estimates are less accurate at these particular stages.
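The relative-displacement evaluation can be sketched as follows: each pose is expressed in the frame of the previous one, and the estimated step is compared with the DGPS reference step. This is a minimal illustration, assuming planar poses (x, y, θ) sampled at the same instants for both trajectories, a translational error defined as the norm of the difference between the two relative translations, a signed rotational error in degrees, and a population standard deviation. The paper does not spell out these definitions, and the helper names are hypothetical.

```python
import math
import statistics


def wrap_angle(a):
    """Wrap an angle (rad) to [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def relative_motion(p, q):
    """Express pose q = (x, y, theta) in the frame of pose p."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    c, s = math.cos(p[2]), math.sin(p[2])
    return (c * dx + s * dy, -s * dx + c * dy, wrap_angle(q[2] - p[2]))


def step_errors(estimated, reference):
    """Per-step translational error (m) and signed rotational error (deg)
    between two pose sequences of equal length."""
    trans_err, rot_err = [], []
    for k in range(1, len(reference)):
        e = relative_motion(estimated[k - 1], estimated[k])
        r = relative_motion(reference[k - 1], reference[k])
        trans_err.append(math.hypot(e[0] - r[0], e[1] - r[1]))
        rot_err.append(math.degrees(wrap_angle(e[2] - r[2])))
    return trans_err, rot_err


def summarize(errors):
    """Mean and population standard deviation of a list of errors."""
    return statistics.mean(errors), statistics.pstdev(errors)


if __name__ == "__main__":
    ref = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.1, 0.0, 0.0), (2.1, 0.0, 0.01)]
    t, r = step_errors(est, ref)
    print("translation:", summarize(t), "rotation:", summarize(r))
```

Applied to complete runs, the mean and standard deviation of these per-step errors give statistics of the kind reported in Table 2; a signed rotational error is what allows a mean close to zero, as observed for ROLAM.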

To compare both results quantitatively, several criteria have been considered. First, the global positioning error, which is directly linked to the visual evaluation of the trajectory, is analysed. Because errors accumulate as in dead reckoning, the final error with respect to the ground-truth trajectory after 2.5 km is 65 m for FMT-R-SLAM and 48 m for ROLAM. Since this metric includes the cumulative errors, it does not directly reflect the quality of the individual estimates; two metrics based on relative displacement are therefore also considered, namely the successive translational and rotational transformations. All quantitative results are summarized in Table 2.
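To put the final errors in perspective, they can be normalised by the travelled distance. This normalisation is not used in the paper; it simply rescales the figures quoted above:

$$
\frac{65\ \text{m}}{2\,500\ \text{m}} = 2.6\,\%\ \ (\text{FMT-R-SLAM}),\qquad
\frac{48\ \text{m}}{2\,500\ \text{m}} \approx 1.9\,\%\ \ (\text{ROLAM}).
$$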

The FMT-R-SLAM approach provides displacement results with a mean error of 0.09 m and a standard deviation of 0.38 m, while the ROLAM algorithm has a mean error of 0.20 m with a 0.70 m standard deviation. Considering rotation, the FMT-R-SLAM algorithm obtains a mean error of 0.03 deg with a standard deviation of 0.11 deg, while the ROLAM process gives a near-zero mean error (0.003 deg) with a 0.15 deg standard deviation.

From these values, we can conclude that both the FMT-R-SLAM and ROLAM algorithms yield a good estimate of the vehicle trajectory. The FMT-R-SLAM approach provides more accurate displacement estimates, while the ROLAM technique gives a lower mean rotational error, although with a slightly larger standard deviation. Both methods produce trajectories accurate enough to build a radar map by positioning each radar scan at its corresponding estimated pose. A radar mapping result based on the FMT-R-SLAM process is given in Fig. 9(b). As illustrated in Fig. 9(c), a map can also be obtained with the ROLAM algorithm if the predicted acquisition pose of each beam is recorded. In this case, each raw radar spectrum is positioned and plotted to build the map. In both cases, the maps reveal details of the environment, but they differ because no filtering is applied in the second approach. Post-processing could of course have been added to remove the various perturbations (speckle effect, Doppler, antenna aperture, etc.) and to improve the appearance of the map. We chose to present the results in this manner to highlight the difference between both approaches: the first one is based on a scan-matching technique, whereas the second one processes each radar beam individually, as explained previously.
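Both maps amount to placing radar returns in a common world frame at the estimated poses: one pose per scan for FMT-R-SLAM, one predicted pose per beam for ROLAM. The sketch below accumulates one beam into a sparse grid. It assumes that the sensor sits at the vehicle origin, that range bin i covers [i·Δr, (i+1)·Δr) and is placed at its centre, and that overlapping returns are merged by keeping the maximum intensity. These choices and the name `add_beam_to_map` are illustrative assumptions; as in the maps discussed above, no filtering is applied.

```python
import math


def add_beam_to_map(grid, pose, azimuth, intensities, bin_size, cell_size):
    """Accumulate one radar beam into a sparse grid {(i, j): intensity}.

    pose        -- (x, y, theta) of the vehicle when the beam was acquired
    azimuth     -- beam direction in the sensor frame (rad)
    intensities -- raw spectrum, one value per range bin
    bin_size    -- range resolution (m); cell_size -- grid resolution (m)
    """
    x, y, theta = pose
    bearing = theta + azimuth  # beam direction in the world frame
    for i, value in enumerate(intensities):
        r = (i + 0.5) * bin_size  # centre of range bin i
        wx = x + r * math.cos(bearing)
        wy = y + r * math.sin(bearing)
        cell = (math.floor(wx / cell_size), math.floor(wy / cell_size))
        # No filtering: keep the strongest return seen in each cell.
        grid[cell] = max(grid.get(cell, 0.0), value)
    return grid


if __name__ == "__main__":
    grid = {}
    # One beam pointing straight ahead of a vehicle heading along +y.
    add_beam_to_map(grid, (10.0, 0.0, math.pi / 2), 0.0, [0.1, 0.9, 0.4],
                    bin_size=1.0, cell_size=1.0)
    print(sorted(grid.items()))
```

For a scan-based map, every beam of a scan would share the scan's estimated pose; for a beam-based map, each beam uses its own predicted pose, for instance one obtained from a constant-velocity model.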

Regarding processing time, pose estimation by ROLAM, implemented in MATLAB, takes 26 ms on average with an average of 10 landmarks considered. One step of the FMT-R-SLAM process (scan building, scan registration, prediction and update), implemented in C/C++, takes less than one second on a quad-core Intel Xeon (2.8 GHz) with 6 GB of DDR2 FB-DIMM RAM (667 MHz). The processing time increases when loop closure is handled, but this case is not considered here. Since the radar delivers scans at 1 Hz, both approaches can run in real time.

7. Conclusion

Two methods for estimating the localization of a mobile robot in natural or semi-natural environments using radar technology have been presented. Using a ground-based microwave radar sensor, which is unusual in mobile robotics, a trajectory-oriented SLAM process is first proposed. It is based on the Fourier-Mellin transform and the extended Kalman filter. The second approach is based on the fact that, when the robot moves at high speed while the sensor is rotating, the data are subject to distortion. This second method estimates the velocity of the vehicle by explicitly analysing these measurement distortions. Such radar-based odometry does not use any proprioceptive sensors, and this part of the work can be extended to any kind of non-instantaneous rotating sensor. Both approaches work without any prior knowledge of the vehicle's surroundings; they not only estimate the robot's trajectory but also build a map of the environment. An evaluation and a comparative study of the two proposed methods on real-world data over a 2.5 km course have been conducted, demonstrating their feasibility and reliability for mobile ground vehicles at high speed (30 km/h).

8. Acknowledgements

This study was supported by the Agence Nationale de la Recherche (ANR, the French National Research Agency) (ANR Impala PsiRob ANR-06-ROBO-0012). The authors would like to thank the members of CEMAGREF (IRSTEA) for their kind loan of the radar sensor used in this paper. This work has also been funded by the French government research programme Investissements d'Avenir through the RobotEx Equipment of Excellence (ANR-10-EQPX-44) and the IMobS3 Laboratory of Excellence (ANR-10-LABX-16-01), by the European Union through the programme Regional Competitiveness and Employment 2007-2013 (ERDF, Auvergne region), by the Auvergne region and by the French Institute for Advanced Mechanics.

9. References

[1] Tim Bailey and Hugh Durrant-Whyte. Simultaneous Localization and Mapping (SLAM): Part II. IEEE Robotics and Automation Magazine, 13(3):108-117, 2006.

[2] G. Dissanayake, P. Newman, H. F. Durrant-Whyte, S. Clark, and M. Csorba. A Solution to the Simultaneous Localization and Map Building (SLAM) Problem. IEEE Transactions on Robotics and Automation, 17(3):229-241, 2001.

[3] Hugh Durrant-Whyte and Tim Bailey. Simultaneous Localization and Mapping: Part I. IEEE Robotics and Automation Magazine, 13(2):99-110, 2006.

[4] U. Frese. A Discussion of Simultaneous Localization and Mapping. Autonomous Robots, 20(1):25-42, 2006.

[5] D. Hähnel, W. Burgard, D. Fox, and S. Thrun. A highly efficient FastSLAM algorithm for generating cyclic maps of large-scale environments from raw laser range measurements. In Proc. of the Inter. Conf. on Intelligent Robots and Systems, pages 206-211. IEEE, 2003.

[6] Charles Bibby and Ian Reid. Simultaneous localisation and mapping in dynamic environments (SLAMIDE) with reversible data association. In Wolfram Burgard, Oliver Brock, and Cyrill Stachniss, editors, Robotics: Science and Systems. The MIT Press, 2007.

[7] J. Leonard, J. How, S. Teller, M. Berger, S. Campbell, G. Fiore, L. Fletcher, E. Frazzoli, A. Huang, S. Karaman, O. Koch, Y. Kuwata, D. Moore, E. Olson, S. Peters, J. Teo, R. Truax, M. Walter, D. Barrett, A. Epstein, K. Maheloni, K. Moyer, T. Jones, R. Buckley, M. Antone, R. Galejs, S. Krishnamurthy, and J. Williams. A Perception-Driven Autonomous Urban Vehicle. Journal of Field Robotics, 25:727-774, October 2008. Issue 10, Special Issue on the 2007 DARPA Urban Challenge, Part III.

[8] D. Ribas, P. Ridao, J.D. Tardós, and J. Neira. Underwater SLAM in a Marina Environment. In Proceedings of Int. Conf. on Intelligent Robots and Systems, pages 1455-1460, San Diego, USA, October 2007. IEEE.

[9] M.O. Monod. Frequency modulated radar: a new sensor for natural environment and mobile robotics. Doctoral dissertation, Paris VI University, 1995.

[10] M. Bosse and R. Zlot. Map Matching and Data Association for Large-Scale Two-dimensional Laser Scan-based SLAM. Int. Journal of Robotics Research, 27(6):667-691, 2008.

[11] R. Eustice, H. Singh, J. Leonard, M. Walter, and R. Ballard. Navigating the RMS Titanic with SLAM Information Filters. In Proc. of Robotics: Science and Systems (RSS), pages 55-64, Cambridge, MA, USA, June 2005. The MIT Press.

[12] I. Mahon, S.B. Williams, O. Pizarro, and M. Johnson-Roberson. Efficient View-Based SLAM Using Visual Loop Closures. IEEE Trans. on Robotics, 24(5):1002-1014, October 2008.

[13] S. Clark and G. Dissanayake. Simultaneous localization and map building using millimeter wave radar to extract natural features. In Proc. of Inter. Conf. on Robotics and Automation, pages 1316-1321, Detroit, Michigan, USA, May 1999. IEEE.

[14] A. Foessel, J. Bares, and W.R.L. Whittaker. Three-dimensional map building with MMW RADAR. In The 3rd International Conference on Field and Service Robots (FSR), page 6, Helsinki, Finland, June 2001. Yleisjäljennös-Painopörssi.

[15] J. Mullane, E. Jose, M.D. Adams, and W.S. Wijesoma. Including Probabilistic Target Detection Attributes Into Map Representations. International Journal of Robotics and Autonomous Systems, 55(1):72-85, 2007.

[16] F. Lu and E. Milios. Robot Pose Estimation in Unknown Environments by Matching 2D Range Scans. Journal of Intelligent and Robotic Systems, 18:249-275, 1997.

[17] P.J. Besl and N.D. McKay. A Method for Registration of 3-D Shapes. In IEEE Transactions on Pattern Analysis and Machine Intelligence, volume 14(2), pages 239-256, USA, 1992. IEEE Computer Society.


[18] P. Checchin, F. Gérossier, C. Blanc, R. Chapuis, and L. Trassoudaine. Field and Service Robotics, Springer Tracts in Advanced Robotics, volume 62, chapter Radar Scan Matching SLAM using the Fourier-Mellin Transform, pages 151-161. Springer, 2010.

[19] F. Gérossier, P. Checchin, C. Blanc, R. Chapuis, and L. Trassoudaine. Trajectory-oriented EKF-SLAM using the Fourier-Mellin Transform applied to Microwave Radar Images. In IEEE/RSJ Inter. Conf. on Intelligent Robots and Systems (IROS), pages 4925-4930, St Louis, Missouri, USA, October 2009. IEEE.

[20] J. Borenstein, H. R. Everett, L. Feng, and D. Wehe. Mobile robot positioning: Sensors and techniques. Journal of Robotic Systems, 14(4):231-249, 1997.

[21] Andrew Howard. Real-time stereo visual odometry for autonomous ground vehicles. In Proc. of Int. Conf. on Intelligent Robots and Systems, pages 3946-3952. IEEE, 2008.

[22] Bernd Kitt, Andreas Geiger, and Henning Lategahn. Visual Odometry based on Stereo Image Sequences with RANSAC-based Outlier Rejection Scheme. In Intelligent Vehicles Symposium, pages 486-492, San Diego, USA, June 2010. IEEE.

[23] David Nistér, Oleg Naroditsky, and James Bergen. Visual odometry for ground vehicle applications. Journal of Field Robotics, 23, 2006.

[24] Gian Diego Tipaldi and Fabio Ramos. Motion clustering and estimation with conditional random fields. In Proceedings of the Inter. Conf. on Intelligent Robots and Systems (IROS 2009), pages 872-877, St. Louis, MO, USA, 2009. IEEE.

[25] B. Williams and I. Reid. On Combining Visual SLAM and Visual Odometry. In Proc. of Inter. Conf. on Robotics and Automation, pages 3494-3500, Anchorage, Alaska, USA, 2010. IEEE.

[26] Alberto Pretto, Emanuele Menegatti, Maren Bennewitz, Wolfram Burgard, and Enrico Pagello. A Visual Odometry Framework Robust to Motion Blur. In Inter. Conf. on Robotics and Automation, pages 1685-1692, Kobe, Japan, 2009. IEEE.

[27] Michael Jenkin, Bart Verzijlenberg, and Andrew Hogue. Progress towards underwater 3D scene recovery. In Proc. of the 3rd Conf. on Computer Science and Software Engineering, pages 123-128, Montréal, Quebec, Canada, 2010. ACM.

[28] E. Olson. Real-time correlative scan matching. In Proc. of Inter. Conf. on Robotics and Automation, pages 4387-4393. IEEE, 2009.

[29] Omar Ait-Aider, Nicolas Andreff, Jean-Marc Lavest, and Philippe Martinet. Simultaneous Object Pose and Velocity Computation Using a Single View from a Rolling Shutter Camera. In European Conf. on Computer Vision, volume 3952, pages 56-68. Springer, 2006.

[30] D. Vivet, P. Checchin, and R. Chapuis. Radar-only Localization and Mapping for Ground Vehicle at High Speed and for Riverside Boat. In Inter. Conf. on Robotics and Automation (ICRA), pages 2618-2624, St Paul, Minnesota, USA, May 2012. IEEE.

[31] B.S. Reddy and B.N. Chatterji. An FFT-based Technique for Translation, Rotation, and Scale-Invariant Image Registration. IEEE Trans. on Image Processing, 5(8):1266-1271, August 1996.

[32] D.M. Cole and P.M. Newman. Using Laser Range Data for 3D SLAM in Outdoor Environments. In Proc. of Inter. Conf. on Robotics and Automation, pages 1556-1563, Orlando, FL, USA, May 2006. IEEE.

[33] P. Aschwanden and W. Guggenbuhl. Experimental results from a comparative study on correlation-type registration algorithms. In W. Forstner and St. Ruwiedel, editors, Robust computer vision: quality of vision algorithms, pages 268-289. Wichmann, 1992.

[34] Q. Chen, M. Defrise, and F. Deconinck. Symmetric Phase-Only Matched Filtering of Fourier-Mellin Transforms for Image Registration and Recognition. IEEE Trans. Pattern Anal. Mach. Intell., 16(12):1156-1168, 1994.

[35] Andreas Nüchter, Kai Lingemann, Joachim Hertzberg, and Hartmut Surmann. Heuristic-Based Laser Scan Matching for Outdoor 6D SLAM. In Advances in Artif. Intellig., 28th German Conf. on AI, pages 304-319. Springer, 2005.

[36] S. Julier and J. Uhlmann. Using Covariance Intersection for SLAM. Robotics and Autonomous Systems, 55(1):3-20, 2007.
