ICTM 2005

Pattern Classification using Simplified Neural Networks with Pruning Algorithm

S. M. Kamruzzaman¹ and Ahmed Ryadh Hasan²

Abstract: In recent years, many neural network models have been proposed for pattern classification, function approximation and regression problems. This paper presents an approach for classifying patterns using simplified neural networks (NNs). Although the predictive accuracy of artificial neural networks (ANNs) is often higher than that of other methods or human experts, ANNs are often described as black boxes because of the complexity of the networks. In this paper, we attempt to open up these black boxes by reducing the complexity of the network, which is made possible by a pruning algorithm. By eliminating redundant weights, redundant input and hidden units are identified and removed from the network. Using the pruning algorithm, we were able to prune networks so that only a few input units, hidden units and connections remain, yielding a simplified network. Experimental results on several benchmark problems show the effectiveness of the proposed approach, with good generalization ability.

Keywords: Artificial Neural Network, Pattern Classification, Pruning Algorithm, Weight Elimination, Penalty Function, Network Simplification.

1 Introduction

In recent years, many neural network models have been proposed for pattern classification, function approximation and regression problems [2] [3] [18]. Among them, the class of multilayer feedforward networks is the most popular. Methods using standard back-propagation perform gradient descent only in the weight space of a network with a fixed topology [13]. In general, this approach is useful only when the network architecture is chosen correctly [9]: a network that is too small cannot learn the problem well, while one that is too large tends to overfit and generalize poorly [1]. Artificial neural networks are considered efficient computing models and universal approximators [4]. Although the predictive accuracy of neural networks is often higher than that of other methods or human experts, it is generally difficult to understand how a network arrives at a particular decision because of the complexity of its architecture [6] [15]. One of the major criticisms of neural networks is that they are black boxes, since no satisfactory explanation of their behavior has been offered. This is because of the complexity of the interconnections between layers and the size of the network [18]. As such, an optimal network size with a minimal number of interconnections can give insight into how a neural network performs. Another motivation for network simplification and pruning is the reduction of learning time [7] [8].

2 Pruning Algorithm

Network pruning offers another approach for dynamically determining an appropriate network topology. Pruning techniques [11] begin by training a larger-than-necessary network and then eliminate the weights and neurons that are deemed redundant. Typically, methods for removing weights involve adding a penalty term to the error function [5]. The expectation is that, with a penalty term added to the error function, unnecessary connections will acquire small weights, so that the complexity of the network can be reduced significantly. This paper aims at pruning the network both in the number of neurons and in the number of interconnections between the neurons. The pruning strategies, along with the penalty function, are described in the following subsections.

¹ Assistant Professor, Department of Computer Science and Engineering, Manarat International University, Dhaka-1212, Bangladesh. Email: smk_iiuc@yahoo.com

² School of Communication, Independent University Bangladesh, Chittagong, Bangladesh. Email: ryadh78@yahoo.com

2.1 Penalty Function

When a network is to be pruned, it is common practice to add a penalty term to the error function during training [16]. The penalty term usually suggested in the literature is

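$$P(w,v) = \varepsilon_1\left(\sum_{m=1}^{h}\sum_{l=1}^{n}\frac{\beta\,(w_l^m)^2}{1+\beta\,(w_l^m)^2} + \sum_{m=1}^{h}\sum_{p=1}^{o}\frac{\beta\,(v_p^m)^2}{1+\beta\,(v_p^m)^2}\right) + \varepsilon_2\left(\sum_{m=1}^{h}\sum_{l=1}^{n}(w_l^m)^2 + \sum_{m=1}^{h}\sum_{p=1}^{o}(v_p^m)^2\right) \tag{1}$$

where $\varepsilon_1$, $\varepsilon_2$ and $\beta$ are positive parameters. Given an $n$-dimensional example $x^i$, $i \in \{1, 2, \ldots, k\}$, as input, let $w_l^m$ be the weight of the connection from input unit $l$, $l \in \{1, 2, \ldots, n\}$, to hidden unit $m$, $m \in \{1, 2, \ldots, h\}$, and let $v_p^m$ be the weight of the connection from hidden unit $m$ to output unit $p$, $p \in \{1, 2, \ldots, o\}$. The $p$th output of the network for example $x^i$ is obtained by computing

$$S_p^i = \sigma\left(\sum_{m=1}^{h}\alpha^m v_p^m\right), \tag{2}$$

where $\sigma(x) = 1/(1+e^{-x})$ is the logistic sigmoid, which keeps each output in $(0, 1)$, and

$$\alpha^m = \delta\left(\sum_{l=1}^{n} x_l^i w_l^m\right), \qquad \delta(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}}. \tag{3}$$

The target output for an example $x^i$ that belongs to class $C_j$ is an $o$-dimensional vector $t^i$, where $t_p^i = 0$ if $p \neq j$ and $t_j^i = 1$, for $j, p = 1, 2, \ldots, o$. The back-propagation algorithm is applied to update the weights $(w, v)$ and minimize the following function:

$$\theta(w,v) = F(w,v) + P(w,v), \tag{4}$$

where $F(w,v)$ is the cross-entropy function

$$F(w,v) = -\sum_{i=1}^{k}\sum_{p=1}^{o}\left(t_p^i \log S_p^i + (1-t_p^i)\log(1-S_p^i)\right) \tag{5}$$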

and $P(w,v)$ is the penalty term in (1), used for weight decay.

2.2 Redundant Weight Pruning

Because the penalty function decays the weights of unnecessary connections, redundant weights can then be eliminated with the following Weight Elimination Algorithm, as suggested in the literature [12] [14] [17].

2.2.1 Weight Elimination Algorithm:

1. Let $\eta_1$ and $\eta_2$ be positive scalars such that $\eta_1 + \eta_2 < 0.5$.
2. Pick a fully connected network and train it until the error condition is satisfied by all input patterns. Let $(w, v)$ be the weights of this network.
3. For each $w_l^m$, if $\max_p |v_p^m w_l^m| \le 4\eta_2$ (6), then remove $w_l^m$ from the network.
4. For each $v_p^m$, if $|v_p^m| \le 4\eta_2$ (7), then remove $v_p^m$ from the network.
5. If no weight satisfies condition (6) or condition (7), then remove the $w_l^m$ with the smallest product $\max_p |v_p^m w_l^m|$.
6. Retrain the network. If the classification rate of the network falls below an acceptable level, then stop. Otherwise, go to Step 3.
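To make the training objective concrete, the following plain-Python sketch evaluates the network outputs (2) and (3), the cross-entropy (5), the penalty (1) and their sum θ(w, v) in (4). It is an illustration, not the authors' code: the nested-list layout (w[m][l] for $w_l^m$, v[m][p] for $v_p^m$), the logistic sigmoid used as the output function σ, and the default values of ε1, ε2 and β are assumptions chosen for the example. Computing gradients for the back-propagation update is not shown.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function, assumed here as the output nonlinearity sigma in (2)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)  # numerically stable branch for negative x
    return z / (1.0 + z)


def forward(x, w, v):
    """Outputs S_p of the network for one example x.

    w[m][l] holds w_l^m (input l -> hidden unit m);
    v[m][p] holds v_p^m (hidden unit m -> output p).
    Hidden units apply delta(x) = tanh(x), which equals (e^x - e^-x)/(e^x + e^-x) in (3).
    """
    alpha = [math.tanh(sum(x_l * w_ml for x_l, w_ml in zip(x, w_m))) for w_m in w]
    n_outputs = len(v[0])
    return [sigmoid(sum(a_m * v_m[p] for a_m, v_m in zip(alpha, v)))
            for p in range(n_outputs)]


def cross_entropy(X, T, w, v):
    """F(w, v) in (5), summed over examples i and outputs p."""
    total = 0.0
    for x, t in zip(X, T):
        for s_p, t_p in zip(forward(x, w, v), t):
            total -= t_p * math.log(s_p) + (1 - t_p) * math.log(1 - s_p)
    return total


def penalty(w, v, eps1, eps2, beta):
    """P(w, v) in (1): a saturating term scaled by eps1 plus plain decay scaled by eps2."""
    weights = [u for row in w for u in row] + [u for row in v for u in row]
    saturating = sum(beta * u * u / (1.0 + beta * u * u) for u in weights)
    decay = sum(u * u for u in weights)
    return eps1 * saturating + eps2 * decay


def objective(X, T, w, v, eps1=0.1, eps2=1e-5, beta=10.0):
    """theta(w, v) = F(w, v) + P(w, v) in (4); default parameters are illustrative only."""
    return cross_entropy(X, T, w, v) + penalty(w, v, eps1, eps2, beta)


# Usage: one example of class C_1 (target (1, 0)), one hidden unit, all weights zero.
X = [[1.0, 0.0, -1.0]]
T = [[1, 0]]
w = [[0.0, 0.0, 0.0]]
v = [[0.0, 0.0]]
print(objective(X, T, w, v))  # both outputs are 0.5, so theta = 2 ln 2 (about 1.386)
```

The saturating term in (1) grows like $\beta w^2$ for small weights but levels off near 1 for large ones, so it pushes small weights toward zero without heavily penalizing the few large weights that carry the decision; the $\varepsilon_2$ term keeps all weights bounded.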

2.3 Input and Hidden Node Pruning

A node-pruning algorithm is presented below to remove redundant nodes in the input and hidden layers.

2.3.1 Input and Hidden Node Pruning Algorithm:

Step 1: Create an initial network with as many input neurons as required by the specific problem description and with one hidden unit. Randomly initialize the connection weights of the network within a certain range.
Step 2: Partially train the network on the training set for a certain number of training epochs using a training algorithm. The number of training epochs is specified by the user.
Step 3: Eliminate the redundant weights using the Weight Elimination Algorithm described in Section 2.2.
Step 4: Test this network. If its accuracy falls below an acceptable level, then add one more hidden unit and go to Step 2.
Step 5: If there is any input node $x_l$ with $w_l^m = 0$ for $m = 1, 2, \ldots, h$, then remove this node.
Step 6: Test the generalization ability of the network on the test set. If the network converges successfully, then terminate; otherwise, go to Step 1.
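The pruning conditions of Sections 2.2 and 2.3 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the nested-list layout (w[m][l] for $w_l^m$, v[m][p] for $v_p^m$), the convention that a removed connection is a weight fixed at 0.0, and the helper names prune_pass, removable_inputs and removable_hidden are all hypothetical. The sketch covers Steps 3 to 5 of the Weight Elimination Algorithm and the input-node test in Step 5 of the node-pruning algorithm; training and retraining are not shown. The hidden-unit test is an extra assumption: in a bias-free network like (2) and (3), a hidden unit whose incoming weights are all zero outputs δ(0) = 0, and one whose outgoing weights are all zero cannot affect any output, so either kind can be dropped.

```python
def prune_pass(w, v, eta2):
    """One pass of Steps 3-5 of the Weight Elimination Algorithm.

    w[m][l] holds w_l^m and v[m][p] holds v_p^m. A removed connection is
    represented by setting its weight to 0.0 in place; weights already at
    0.0 are treated as removed. Returns the number of weights removed.
    """
    h, n, o = len(w), len(w[0]), len(v[0])
    threshold = 4.0 * eta2
    removed = 0
    # Step 3, condition (6): remove w_l^m if max_p |v_p^m * w_l^m| <= 4 * eta2.
    for m in range(h):
        for l in range(n):
            if w[m][l] != 0.0 and max(abs(v[m][p] * w[m][l]) for p in range(o)) <= threshold:
                w[m][l] = 0.0
                removed += 1
    # Step 4, condition (7): remove v_p^m if |v_p^m| <= 4 * eta2.
    for m in range(h):
        for p in range(o):
            if v[m][p] != 0.0 and abs(v[m][p]) <= threshold:
                v[m][p] = 0.0
                removed += 1
    # Step 5: if nothing qualified, remove the w_l^m with the smallest max_p |v_p^m * w_l^m|.
    if removed == 0:
        candidates = [(max(abs(v[m][p] * w[m][l]) for p in range(o)), m, l)
                      for m in range(h) for l in range(n) if w[m][l] != 0.0]
        if candidates:
            _, m, l = min(candidates)
            w[m][l] = 0.0
            removed = 1
    return removed


def removable_inputs(w):
    """Step 5 of the node-pruning algorithm: inputs l with w_l^m = 0 for every hidden unit m."""
    return [l for l in range(len(w[0])) if all(w_m[l] == 0.0 for w_m in w)]


def removable_hidden(w, v):
    """Hidden units whose incoming or outgoing weights are all zero (assumption, see text)."""
    return [m for m in range(len(w))
            if all(u == 0.0 for u in w[m]) or all(u == 0.0 for u in v[m])]


# Usage with hand-picked weights (2 hidden units, 3 inputs, 2 outputs) and eta2 = 0.01.
w = [[0.5, 0.001, 0.0], [0.0, 0.002, 0.0]]
v = [[2.0, -1.0], [0.001, 0.0005]]
print(prune_pass(w, v, eta2=0.01))  # 4
print(removable_inputs(w))          # [1, 2]
print(removable_hidden(w, v))       # [1]
```

In a full run, the pruned weights would also have to stay at zero while the network is retrained in Step 6, for example by masking their updates; that detail is an assumption not specified in the text.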

3 Experimental Results and Discussions

In this experiment, we used three benchmark classification problems: breast cancer diagnosis, classification of glass types, and the Pima Indians Diabetes diagnosis problem [10] [19]. All the data sets were obtained from the UCI machine learning benchmark repository. Brief characteristics of the data sets are listed in Table 1.

Table 1: Characteristics of data sets.

| Data set | Input attributes | Output units | Output classes | Training examples | Validation examples | Test examples | Total examples |
|---|---|---|---|---|---|---|---|
| Cancer1 | 9 | 2 | 2 | 350 | 175 | 174 | 699 |
| Glass | 9 | 6 | 6 | 107 | 54 | 54 | 215 |
| Diabetes | 8 | 2 | 2 | 384 | 192 | 192 | 768 |

The experimental results for the different data sets are shown in Table 2 and Figures 1, 2 and 3. For the cancer data set, we found that a fully connected network with a 9-3-2 architecture has a classification accuracy of 97.143%. After pruning the network with the Weight Elimination Algorithm and the Input and Hidden Node Pruning Algorithm, we obtained a simplified network with a 3-1-2 architecture and a classification accuracy of 96.644%. The graphical representation of the simplified network is given in Figure 1. It shows that only the input attributes I1, I6 and I9, together with a single hidden unit, are adequate for this problem.

[Figure 1: Simplified Network for Breast Cancer Diagnosis problem. Active inputs I1, I6 and I9 feed a single hidden unit; input-to-hidden weights W1 = -21.992443, W6 = -13.802489, W9 = -13.802464; hidden-to-output weights V1 = 3.035398, V2 = -3.035398.]

[Figure 2: Simplified Network for Pima Indians Diabetes diagnosis problem. Input-to-hidden weights W12 = -204.159255, W13 = 74.090849, W14 = -52.965123, W18 = 52.965297, W21 = 47.038678, W23 = 52.469025, W24 = 46.967161, W25 = 46.967161, W26 = 46.967161, W27 = -46.967363, W28 = -46.967363; hidden-to-output weights V11 = -1.152618, V12 = 1.152618, V21 = -32.078753, V22 = 32.084780.]

(In the figures, Wi = input-to-hidden weight, Vi = hidden-to-output weight, Ii = input signal and Oi = output signal; active and pruned weights and neurons are drawn differently.)


For the Pima Indians Diabetes data set, we found that a fully connected network with an 8-3-2 architecture has a classification accuracy of 77.344%. After pruning the network with the Weight Elimination Algorithm and the Input and Hidden Node Pruning Algorithm, we obtained a simplified network with an 8-2-2 architecture and a classification accuracy of 75.260%. The graphical representation of the simplified network is given in Figure 2. It shows that no input attribute could be removed, but one hidden node and some redundant connections were removed; these are shown as dotted lines in Figure 2.

[Figure 3: Simplified Network for Glass classification problem (inputs I1 to I9, outputs O1 to O6).]

For the Glass classification data set, we found that a fully connected network with a 9-4-6 architecture has a classification accuracy of 65.277%. After pruning the network with the Weight Elimination Algorithm and the Input and Hidden Node Pruning Algorithm, we obtained a simplified network with a 9-3-6 architecture and a classification accuracy of 63.289%. The graphical representation of the simplified network is given in Figure 3. It shows that no input attribute could be removed, but one hidden node and some redundant connections were removed; these are shown as dotted lines in Figure 3.


Table 2: Experimental Results

| Results | Cancer1 | Diabetes | Glass |
|---|---|---|---|
| Learning rate | 0.1 | 0.1 | 0.1 |
| No. of epochs | 500 | 1200 | 650 |
| Initial architecture | 9-3-2 | 8-3-2 | 9-4-6 |
| Input nodes removed | 6 | 0 | 1 |
| Hidden nodes removed | 2 | 1 | 2 |
| Total connections removed | 24 | 13 | 16 |
| Simplified architecture | 3-1-2 | 8-2-2 | 9-3-6 |
| Accuracy (%) of fully connected network | 97.143 | 77.344 | 65.277 |
| Accuracy (%) of simplified network | 96.644 | 75.260 | 63.289 |

From the experimental results discussed above, it can be said that not all input attributes and weights are equally important. Moreover, it is difficult to determine the appropriate number of hidden nodes in advance; the pruning approach determines an appropriate number of hidden nodes automatically. Using the network pruning approach of Sections 2.2 and 2.3, redundant nodes and connections can be removed without a significant loss of accuracy: on the three data sets, accuracy fell by between 0.499 and 2.084 percentage points (Table 2). As such, computational cost can be reduced by using the simplified networks.

4 Future Work

In future work, we will apply this network pruning approach to rule extraction and feature selection. These pruning strategies will also be examined for function approximation and regression problems.

5 Conclusions

In this paper, we proposed an efficient network simplification algorithm based on pruning strategies. Using this approach, we obtain compact network architectures with few connections and neurons without significantly degrading the performance of the network. Experimental results show that the accuracy of the simplified networks remains acceptable compared with that of the fully connected networks. Simplifying the network in this way provides both reliability and reduced computational cost.

References

[1] T. Ash, Dynamic node creation in backpropagation networks, Connection Sci., vol. 1, pp. 365-375, 1989.
[2] R. W. Brause, Medical Analysis and Diagnosis by Neural Networks, J. W. Goethe-University, Computer Science Dept., Frankfurt a. M., Germany.
[3] J. W. Smith, J. E. Everhart, W. C. Dickson, W. C. Knowler and R. S. Johannes, Using the ADAP learning algorithm to forecast the onset of diabetes mellitus, Proc. Symp. on Computer Applications and Medical Care (Piscataway, NJ: IEEE Computer Society Press), pp. 261-265, 1988.
[4] S. E. Fahlman and C. Lebiere, The cascade-correlation learning architecture, in Advances in Neural Information Processing Systems 2, D. S. Touretzky, Ed. San Mateo, CA: Morgan Kaufmann, pp. 524-532, 1990.
[5] S. Haykin, Neural Networks: A Comprehensive Foundation, Second Edition, Pearson Education Asia, Third Indian Reprint, 2002.
[6] T. Y. Kwok and D. Y. Yeung, Constructive algorithms for structure learning in feedforward neural networks for regression problems, IEEE Trans. Neural Networks, vol. 8, pp. 630-645, 1997.
[7] M. Monirul Islam and K. Murase, A new algorithm to design compact two hidden-layer artificial neural networks, Neural Networks, vol. 4, pp. 1265-1278, 2001.
[8] M. Monirul Islam, M. A. H. Akhand, M. Abdur Rahman and K. Murase, Weight Freezing to Reduce Training Time in Designing Artificial Neural Networks, Proceedings of 5th ICCIT, EWU, pp. 132-136, 27-28 December 2002.
[9] R. Parekh, J. Yang and V. Honavar, Constructive Neural Network Learning Algorithms for Pattern Classification, IEEE Trans. Neural Networks, vol. 11, no. 2, March 2000.
[10] L. Prechelt, Proben1: A Set of Neural Network Benchmark Problems and Benchmarking Rules, University of Karlsruhe, Germany, 1994.
[11] R. Reed, Pruning algorithms: A survey, IEEE Trans. Neural Networks, vol. 4, pp. 740-747, 1993.
[12] R. Setiono and L. C. K. Hui, Use of a quasi-Newton method in a feedforward neural network construction algorithm, IEEE Trans. Neural Networks, vol. 6, no. 1, pp. 273-277, Jan. 1995.
[13] R. Setiono and H. Liu, Understanding Neural Networks via Rule Extraction, in Proceedings of the International Joint Conference on Artificial Intelligence, pp. 480-485, 1995.
[14] R. Setiono and H. Liu, Improving Backpropagation Learning with Feature Selection, Applied Intelligence, vol. 6, no. 2, pp. 129-140, 1996.
[15] R. Setiono, Extracting rules from pruned networks for breast cancer diagnosis, Artificial Intelligence in Medicine, vol. 8, no. 1, pp. 37-51, 1996.
[16] R. Setiono, A penalty-function approach for pruning feedforward neural networks, Neural Computation, vol. 9, no. 1, pp. 185-204, 1997.
[17] R. Setiono, Techniques for extracting rules from artificial neural networks, Plenary Lecture presented at the 5th International Conference on Soft Computing and Information Systems, Iizuka, Japan, October 1998.
[18] R. Setiono, W. K. Leow and J. M. Zurada, Extraction of rules from artificial neural networks for nonlinear regression, IEEE Trans. Neural Networks, vol. 13, no. 3, pp. 564-577, 2002.
[19] W. H. Wolberg and O. L. Mangasarian, Multisurface method of pattern separation for medical diagnosis applied to breast cytology, Proceedings of the National Academy of Sciences, USA, vol. 87, pp. 9193-9196, December 1990.

You might also like

- Pattern Classification of Back-Propagation Algorithm Using Exclusive Connecting NetworkDocument5 pagesPattern Classification of Back-Propagation Algorithm Using Exclusive Connecting NetworkEkin RafiaiNo ratings yet

- Modeling and Optimization of Drilling ProcessDocument9 pagesModeling and Optimization of Drilling Processdialneira7398No ratings yet

- Data Mining Algorithms For RiskDocument9 pagesData Mining Algorithms For RiskGUSTAVO ADOLFO MELGAREJO ZELAYANo ratings yet

- Neural Network Two Mark Q.BDocument19 pagesNeural Network Two Mark Q.BMohanvel2106No ratings yet

- Fast Training of Multilayer PerceptronsDocument15 pagesFast Training of Multilayer Perceptronsgarima_rathiNo ratings yet

- Real Timepower System Security - EEEDocument7 pagesReal Timepower System Security - EEEpradeep9007879No ratings yet

- UNIT IV - Neural NetworksDocument7 pagesUNIT IV - Neural Networkslokesh KoppanathiNo ratings yet

- Classification Using Neural Network & Support Vector Machine For Sonar DatasetDocument4 pagesClassification Using Neural Network & Support Vector Machine For Sonar DatasetseventhsensegroupNo ratings yet

- Feed Forward Neural Network ApproximationDocument11 pagesFeed Forward Neural Network ApproximationAshfaque KhowajaNo ratings yet

- Principles of Training Multi-Layer Neural Network Using BackpropagationDocument15 pagesPrinciples of Training Multi-Layer Neural Network Using Backpropagationkamalamdharman100% (1)

- Application of Back-Propagation Neural Network in Data ForecastDocument23 pagesApplication of Back-Propagation Neural Network in Data ForecastEngineeringNo ratings yet

- A Time-Delay Neural Networks Architecture For Structural Damage DetectionDocument5 pagesA Time-Delay Neural Networks Architecture For Structural Damage DetectionJain DeepakNo ratings yet

- Training Feed Forward Networks With The Marquardt AlgorithmDocument5 pagesTraining Feed Forward Networks With The Marquardt AlgorithmsamijabaNo ratings yet

- Character Recognition Using Neural Networks: Rókus Arnold, Póth MiklósDocument4 pagesCharacter Recognition Using Neural Networks: Rókus Arnold, Póth MiklósSharath JagannathanNo ratings yet

- Performance Evaluation of Artificial Neural Networks For Spatial Data AnalysisDocument15 pagesPerformance Evaluation of Artificial Neural Networks For Spatial Data AnalysistalktokammeshNo ratings yet

- IJCNN 1993 Proceedings Comparison Pruning Neural NetworksDocument6 pagesIJCNN 1993 Proceedings Comparison Pruning Neural NetworksBryan PeñalozaNo ratings yet

- Luna16 01Document7 pagesLuna16 01sadNo ratings yet

- Neural Networks Embed DDocument6 pagesNeural Networks Embed DL S Narasimharao PothanaNo ratings yet

- ImageamcetranksDocument6 pagesImageamcetranks35mohanNo ratings yet

- Pattern Classification Neural NetworksDocument45 pagesPattern Classification Neural NetworksTiến QuảngNo ratings yet

- Particle Swarm Optimization-Based RBF Neural Network Load Forecasting ModelDocument4 pagesParticle Swarm Optimization-Based RBF Neural Network Load Forecasting Modelherokaboss1987No ratings yet

- Deep Learning Assignment 1 Solution: Name: Vivek Rana Roll No.: 1709113908Document5 pagesDeep Learning Assignment 1 Solution: Name: Vivek Rana Roll No.: 1709113908vikNo ratings yet

- Soft Computing-Lab FileDocument40 pagesSoft Computing-Lab FileAaaNo ratings yet

- ProjectReport KanwarpalDocument17 pagesProjectReport KanwarpalKanwarpal SinghNo ratings yet

- Project Report (Conv-ELM)Document11 pagesProject Report (Conv-ELM)John DoeNo ratings yet

- WeightStealing ComputingConferce2020Document12 pagesWeightStealing ComputingConferce2020Alice VillardièreNo ratings yet

- From: First IEE International Conference On Artificial Neural Networks. IEE Conference Publication 313Document18 pagesFrom: First IEE International Conference On Artificial Neural Networks. IEE Conference Publication 313Ahmed KhazalNo ratings yet

- Pattern Recognition System Using MLP Neural Networks: Sarvda Chauhan, Shalini DhingraDocument4 pagesPattern Recognition System Using MLP Neural Networks: Sarvda Chauhan, Shalini DhingraIJERDNo ratings yet

- King-Rook Vs King With Neural NetworksDocument10 pagesKing-Rook Vs King With Neural NetworksfuzzieNo ratings yet

- Applying Multiple Neural Networks On Large Scale Data: Kritsanatt Boonkiatpong and Sukree SinthupinyoDocument5 pagesApplying Multiple Neural Networks On Large Scale Data: Kritsanatt Boonkiatpong and Sukree SinthupinyomailmadoNo ratings yet

- Classification Algorithms for VLSI Circuit Partitioning OptimizationDocument3 pagesClassification Algorithms for VLSI Circuit Partitioning OptimizationSudheer ReddyNo ratings yet

- Neural Network Approach To Power System Security Analysis: Bidyut Ranjan Das Author, and Dr. Ashish Chaturvedi AuthorDocument4 pagesNeural Network Approach To Power System Security Analysis: Bidyut Ranjan Das Author, and Dr. Ashish Chaturvedi AuthorInternational Journal of computational Engineering research (IJCER)No ratings yet

- Contents:-: Using Neural Networks 7Document21 pagesContents:-: Using Neural Networks 7adimishra019No ratings yet

- 19 - Introduction To Neural NetworksDocument7 pages19 - Introduction To Neural NetworksRugalNo ratings yet

- Supervised Training Via Error Backpropagation: Derivations: 4.1 A Closer Look at The Supervised Training ProblemDocument32 pagesSupervised Training Via Error Backpropagation: Derivations: 4.1 A Closer Look at The Supervised Training ProblemGeorge TsavdNo ratings yet

- Lab 1Document13 pagesLab 1YJohn88No ratings yet

- Trajectory-Control Using Deep System Identification and Model Predictive Control For Drone Control Under Uncertain LoadDocument6 pagesTrajectory-Control Using Deep System Identification and Model Predictive Control For Drone Control Under Uncertain LoadpcrczarbaltazarNo ratings yet

- Module 1part 2Document70 pagesModule 1part 2Swathi KNo ratings yet

- A Gentle Introduction To BackpropagationDocument15 pagesA Gentle Introduction To BackpropagationManuel Aleixo Leiria100% (1)

- Filtering Noises From Speech Signal: A BPNN ApproachDocument4 pagesFiltering Noises From Speech Signal: A BPNN Approacheditor_ijarcsseNo ratings yet

- Evaluate Neural Network For Vehicle Routing ProblemDocument3 pagesEvaluate Neural Network For Vehicle Routing ProblemIDESNo ratings yet

- Application of Artificial Neural NetworkDocument7 pagesApplication of Artificial Neural NetworkRahul Kumar JhaNo ratings yet

- Implementing Simple Logic Network Using MP Neuron ModelDocument41 pagesImplementing Simple Logic Network Using MP Neuron ModelP SNo ratings yet

- Optimal Neural Network Models For Wind SDocument12 pagesOptimal Neural Network Models For Wind SMuneeba JahangirNo ratings yet

- ANN Models ExplainedDocument42 pagesANN Models ExplainedAakansh ShrivastavaNo ratings yet

- M.abyan Tf7a5 Paper Semi-Supervised LearningDocument41 pagesM.abyan Tf7a5 Paper Semi-Supervised LearningMuhammad AbyanNo ratings yet

- 076bct041 AI Lab4Document6 pages076bct041 AI Lab4Nishant LuitelNo ratings yet

- Patel Collage of Science and Technology Indore (M.P) : Lab IIDocument14 pagesPatel Collage of Science and Technology Indore (M.P) : Lab IIOorvi ThakurNo ratings yet

- Deep Learning in Astronomy: Classifying GalaxiesDocument6 pagesDeep Learning in Astronomy: Classifying GalaxiesAniket SujayNo ratings yet

- 1 Brief Introduction 2 Backpropogation Algorithm 3 A Simply IllustrationDocument18 pages1 Brief Introduction 2 Backpropogation Algorithm 3 A Simply Illustrationgauravngoyal6No ratings yet

- An Improved Fuzzy Neural Networks Approach For Short-Term Electrical Load ForecastingDocument6 pagesAn Improved Fuzzy Neural Networks Approach For Short-Term Electrical Load ForecastingRramchandra HasabeNo ratings yet

- IC 1403 – NEURAL NETWORKS AND FUZZY LOGIC CONTROL QUESTION BANKDocument12 pagesIC 1403 – NEURAL NETWORKS AND FUZZY LOGIC CONTROL QUESTION BANKAnonymous TJRX7CNo ratings yet

- Week - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Document7 pagesWeek - 5 (Deep Learning) Q. 1) Explain The Architecture of Feed Forward Neural Network or Multilayer Perceptron. (12 Marks)Mrunal BhilareNo ratings yet

- 2019 Zhang Tian Shao Unwrapping CNNDocument10 pages2019 Zhang Tian Shao Unwrapping CNNDiego Eusse NaranjoNo ratings yet

- Annex AmDocument28 pagesAnnex AmJose A MuñozNo ratings yet

- Optimal Brain Damage: 598 Le Cun, Denker and SollaDocument8 pagesOptimal Brain Damage: 598 Le Cun, Denker and Sollahugo pNo ratings yet

- Fuzzy Clustering ComparisonDocument20 pagesFuzzy Clustering ComparisonNguyễn PhongNo ratings yet

- Integer Optimization and its Computation in Emergency ManagementFrom EverandInteger Optimization and its Computation in Emergency ManagementNo ratings yet

- Introduction to Deep Learning and Neural Networks with Python™: A Practical GuideFrom EverandIntroduction to Deep Learning and Neural Networks with Python™: A Practical GuideNo ratings yet

- DMU PaperDocument9 pagesDMU Papersathiyam007No ratings yet

- LMS7002M Quick Starter Manual EVB7 2 2r0 PDFDocument81 pagesLMS7002M Quick Starter Manual EVB7 2 2r0 PDFthagha mohamedNo ratings yet

- Synapse DFT Overview: Embedded Deterministic Test Architecture and FlowDocument51 pagesSynapse DFT Overview: Embedded Deterministic Test Architecture and Flowsenthilkumar100% (8)

- Search For in TheDocument65 pagesSearch For in ThezaheergtmNo ratings yet

- Passport Seva ProjectDocument14 pagesPassport Seva ProjectChandan BanerjeeNo ratings yet

- Prime Factorization: by Jane Alam JanDocument6 pagesPrime Factorization: by Jane Alam JanSajeebNo ratings yet

- Wireless Communication by Rappaport Problem Solution ManualDocument7 pagesWireless Communication by Rappaport Problem Solution ManualSameer Kumar DashNo ratings yet

- RSLogix 5000 V20.03 focuses on product resiliencyDocument4 pagesRSLogix 5000 V20.03 focuses on product resiliencyGilvan Alves VelosoNo ratings yet

- Statistics Lecture1 2.10.18Document41 pagesStatistics Lecture1 2.10.18Liad ElmalemNo ratings yet

- Cisco CallManager and QuesCom GSM Gateway Configuration EN1210 PDFDocument11 pagesCisco CallManager and QuesCom GSM Gateway Configuration EN1210 PDFvmilano1No ratings yet

- Project Documents On Student Enrollment SystemDocument32 pagesProject Documents On Student Enrollment SystemSankalp MeshramNo ratings yet

- Lec 0Document24 pagesLec 0Akhil VibhakarNo ratings yet

- White Paper Deltav Control Module Execution en 56282Document6 pagesWhite Paper Deltav Control Module Execution en 56282samim_khNo ratings yet

- Enterprise Architecture PlanningDocument28 pagesEnterprise Architecture PlanningWazzupdude101No ratings yet

- OS 03 SyscallsDocument46 pagesOS 03 SyscallsSaras PantulwarNo ratings yet

- DBMS ConceptsDocument79 pagesDBMS ConceptsAashi PorwalNo ratings yet

- Start Here 10 Infographic TemplatesDocument10 pagesStart Here 10 Infographic TemplatesJennyfer Paiz100% (1)

- Assisting The Composer - First EditionDocument76 pagesAssisting The Composer - First EditionMikeNo ratings yet

- 3dconnexion - SpaceMouse EnterpriseDocument15 pages3dconnexion - SpaceMouse EnterpriseWandersonNo ratings yet

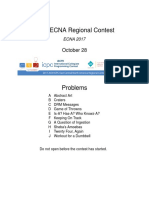

- Game of Throwns ECNA 2017 Regional ContestDocument22 pagesGame of Throwns ECNA 2017 Regional ContestDuarte Garcia JorgeNo ratings yet

- Ti Iwr6412Document84 pagesTi Iwr6412alperusluNo ratings yet

- BMR BiometricsDocument17 pagesBMR BiometricsXxJustAnotherGirlxXNo ratings yet

- Inspire Science Temporary PasswordsDocument8 pagesInspire Science Temporary Passwordsart tubeNo ratings yet

- Customer Letter - AppleDocument3 pagesCustomer Letter - ApplePramod Govind SalunkheNo ratings yet

- Evermotion Vol 39 PDFDocument2 pagesEvermotion Vol 39 PDFMikeNo ratings yet

- Army PKI Slides On CAC CardsDocument26 pagesArmy PKI Slides On CAC CardszelosssNo ratings yet

- Cyber Security With FermingDocument26 pagesCyber Security With FermingAvishek JanaNo ratings yet

- Atoll 3.2.1 CrosswaveDocument78 pagesAtoll 3.2.1 CrosswaveYiğit Faruk100% (1)

- Excel Power Pivot TutorialDocument24 pagesExcel Power Pivot TutorialicetesterNo ratings yet

- PostgreSQL Conceptual ArchitectureDocument23 pagesPostgreSQL Conceptual ArchitectureAbdul RehmanNo ratings yet