Wonderware FactorySuite A2

Deployment Guide

Revision D.2

Last Revision: 1/27/06

Invensys Systems, Inc.

All rights reserved. No part of this documentation shall be reproduced, stored in a retrieval system, or transmitted by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of Invensys Systems, Inc. No copyright or patent liability is assumed with respect to the use of the information contained herein. Although every precaution has been taken in the preparation of this documentation, the publisher and the author assume no responsibility for errors or omissions. Neither is any liability assumed for damages resulting from the use of the information contained herein.

The information in this documentation is subject to change without notice and does not represent a commitment on the part of Invensys Systems, Inc. The software described in this documentation is furnished under a license or nondisclosure agreement. This software may be used or copied only in accordance with the terms of these agreements.

© 2006 Invensys Systems, Inc. All Rights Reserved.

Trademarks

All terms mentioned in this documentation that are known to be trademarks or service marks have been appropriately capitalized. Invensys Systems, Inc. cannot attest to the accuracy of this information. Use of a term in this documentation should not be regarded as affecting the validity of any trademark or service mark.

Alarm Logger, ActiveFactory, ArchestrA, Avantis, DBDump, DBLoad, DT Analyst, FactoryFocus, FactoryOffice, FactorySuite, FactorySuite A2, InBatch, InControl, IndustrialRAD, IndustrialSQL Server, InTouch, InTrack, MaintenanceSuite, MuniSuite, QI Analyst, SCADAlarm, SCADASuite, SuiteLink, SuiteVoyager, WindowMaker, WindowViewer, Wonderware, and Wonderware Logger are trademarks of Invensys plc, its subsidiaries and affiliates. All other brands may be trademarks of their respective owners.

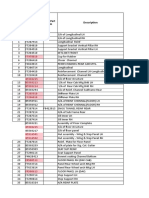

Contents

Before You Begin

About This Document
Assumptions
FactorySuite A2 Application Versions
FactorySuite A2 Terminology
Document Conventions
Where to Find Additional Information
ArchestrA Community Website
Technical Support

CHAPTER 1: Planning the Integration Project

FactorySuite A2 Project Workflow
FactorySuite A2 Workflow Summary
Identify Field Devices and Functional Requirements
Define Object Naming Conventions
Define the Area Model
Plan Templates
Define the Security Model
Define the Deployment Model
Document the Planning Results

CHAPTER 2: Identifying Topology Requirements

Topology Component Distribution
Galaxy Repository (Configuration Database)
AutomationObject Server Node
Visualization Node
I/O Server Node
Engineering Station Node
Historian Node
SuiteVoyager Portal
Topology Categories
Distributed Local Network
Client/Server
General Topology Planning Considerations
Legacy InTouch Software Applications
Terminal Services
Widely-Distributed Network
Best Practices for Topology Configuration
Network Configuration
Software Configuration
I/O Server Connectivity
I/O and DAServers: Best Practice
DIObject Advantages
Extending the IAS Environment

CHAPTER 3: Implementing Redundancy

Redundant System Requirements
AppEngine Redundancy States
NIC Configuration: Redundant Message Channel (RMC)
Primary Network Connection
RMC Network Connection
Redundant DIObjects
Configuration
Redundant Configuration Combinations
Dedicated Standby Server - No Redundant I/O Server
Load Sharing Configurations
Run-Time Considerations
Deployment Considerations
Scripting Considerations
History
Alarms in a Redundant Configuration
Redundant Configuration with Dedicated Visualization Nodes
Redundant Pair with No Dedicated Visualization Client Nodes
Failover Causes in Redundant AppEngines
Forcing Failover
Communication Failure in the Supervisory (Primary) Network
RMC Communication Failure
PC Failures
Redundant System Checklist
Tuning Recommendations for Redundancy in Large Systems
Tuning Redundant Engine Attributes
Engine Monitoring

CHAPTER 4: Integrating FactorySuite Applications

IndustrialSQL Server Historian
ActiveFactory Software
ActiveFactory Reporting Website
InTouch HMI Software
WindowMaker, WindowViewer, and View
InTouch SmartSymbols
Network Utilization
InTouch Software Add-Ins
Tablet and Panel PCs
SCADAlarm Event Notification Software
Alarm DB Manager
SuiteVoyager Software
QI Analyst Software
DT Analyst Software
InTrack Software
Using InTrack Software with an Application Server
InTrack Software Integration with Other FactorySuite Software
InBatch Software
InBatch Production System Requirements
InBatch Production System Topologies
Third Party Application Integration
FactorySuite Gateway
Other Connectivity/Integration Tools

CHAPTER 5: Working with Templates

Before Creating Templates
Creating a Template Model
Containment vs. UDAs
Base Template Functional Summary
Template Modeling Examples
Using UDAs and Extensions
Deriving Templates and Instances
Re-Using Templates in Different Galaxies
Export/Import Templates and Instances
Export Automation Objects
Galaxy Dump
Scripting at the Template Level
Script Execution Types
Scoping Variables
Scripting for OLE and/or COM Objects
Using Aliases
Determining Object and Script Execution Order
Asynchronous Scripts
Scripting I/O References
Writing to a Database

CHAPTER 6: Implementing QuickScript .NET

IAS Scripting Architecture
ApplicationObject Script Interactions
Script Access to IAS Object Attributes
Referencing Object Attribute and Property Values
Other Script Function Categories
Using UDAs
Inter- and Intra-Object Scripting Considerations
Evolving from InTouch to ArchestrA
Inter-Object Scripting Interactions
Intra-Object Scripting Interactions
Remote References to Live Data
Indirect Referencing
IAS Context
Accessing the .NET Framework
.NET Overview
System.Data Classes (Connection to MSSQL via ADO.NET)
Scripting Practices
Using .NET for Database Access
Defining Project Scope and Requirements
Using "Shape" Template Objects
Using the ObjectCacheExt (Function Library)
Posting the Data
Object Functional Encapsulations
Scripting Database Access
ObjectCacheExt.DLL Overview
Purpose of ObjectCacheExt.DLL
Template Object Examples
Example Object Interactions via UDAs
$SqlConnCacheMgr Script Examples
$PostToDBaORb Template Object
$PostTOaORb Derived From $PostToDBaORb
Time-Based Calculations and Retentive Values
Documenting Your Classes
Summary

CHAPTER 7: Architecting Security

Wonderware Security Perspective
Common Control System Security Considerations
Common Security Evaluation Topics
About Security Infrastructure Components
Securing FactorySuite A2 Systems
Security Considerations
Corporate Network Infrastructure Layer
Process Control Network (PCN) Layer
Securing Visualization
OS Group Based Security Mode Notes
Securing the Configuration Environment
Distributed COM (DCOM)
Limiting the DCOM Port Range
Security Recommendations Summary

CHAPTER 8: Historizing Data

General Considerations
Data Point Volumes
Data Storage Rate
Data Loss Prevention
Area and Data Storage Relocation
Non-Historian Data Storage Considerations

CHAPTER 9: Implementing Alarms and Events

General Considerations
Configuring Alarm Queries
Determining the Alarm Topology
Alarming in a Distributed Local Network Topology
Alarming in a Client/Server Topology
Logging Historical Alarms

CHAPTER 10: Assessing System Size and Performance

System Disk Space and RAM Use
Galaxy Repository Performance Considerations
Predicting Disk Space and RAM Requirements at Configuration Time
Predicting Disk Space and RAM Requirements at Run-Time
Predicting System Performance
Unit Application Definition
Hardware Specifications
Baseline Server CPU Values
Performance Data
Single Node System Implementation
Large Distributed FactorySuite A2 System Topology
Very Large Distributed FactorySuite A2 System Topology
Failover Performance
Dedicated Standby Configuration
Load Shared with Remote I/O Data Source
DIObject Performance Notes
OPC Client Performance

CHAPTER 11: Working in Wide-Area Networks and SCADA Systems

Wide-Area Networks Overview
Network Terminology
Network and Operating System Configuration
Minimum Bandwidth Requirements
Subnets
DCOM
Domain Controller
Remote Access Services (RAS)
Terminal Services
Security
Domain-Level Security
Workgroup-Level Security
Application Configuration Overview
Acquire and Store Timestamps for Event Data
Acquire and Store RTU Event Information
Disaster Recovery
Industrial Application Server (Distributed IDE)
Platform and Engine Tuning
Tuning the Historian Primitive/MDAS (in Platforms and Engines)
Alarms
Inter-Node Communications
Distributing InTouch HMI Nodes
Using IndustrialSQL Server Historian
Diagnostics
SCADA Benchmarks
MX Subscriptions Network Utilization
Alarm Network Utilization
History Network Utilization
Summary Network Utilization
Network Utilization at Deployment

CHAPTER 12: Maintaining the System

FactorySuite A2 System Diagnostic/Maintenance Tools
Object Viewer
System Management Console (SMC)
Add-on Diagnostic/Maintenance Tools
Galaxy Diagnostic Tools
Galaxy Maintenance Tools
OS Diagnostic Tools
Performance Monitor
Event Viewer

APPENDIX A: System Integrator Checklist

General
Use Time Synchronization
Disable Hyper-Threading
Communication
Configure IP Addressing
Configure Dual NICs
Security
Confirm User Name and Password
Configure Anti-Virus Software
Administration (Local and Remote)
Install Correct IAS Components
Connection Requirements for Remote IDE (from a Client Machine to a Galaxy)
Redundancy Configuration
Redundant AppEngines
Multiple NICs
Migration
Verify Version and Patches
Upgrade Correctly
Compatibility
FactorySuite Component Version Compatibility

APPENDIX B: .NET Example Source Code

SqlConnCacheMgr Object
SqlConnCacheMgr Overview
SqlConnCacheMgr Configuration
PostTOaORb Object
PostTOaORb Overview
Template Object:$PostTOaORb
RunTime Object
RunTime Overview
RunTime Run-Time Behavior
RunTime Configuration
Template Object:$RunTime
$RunTime - Start
ObjectCache.dll Visual Studio.NET C# Solution
Debugging the Project
ObjectCacheExt.DLL Source Code

Index


Before You Begin

About This Document

The FactorySuite A2 Deployment Guide provides recommendations and "best practice" information to help define, design and implement integration projects within the Wonderware FactorySuite A2 System environment. Recommendations included in this guide are based on experience gained from multiple projects using the ArchestrA infrastructure for FactorySuite A2. Recommendations contained in this document should not preclude discovering other methods and procedures that work effectively.

Assumptions

This deployment guide is intended for:

Engineers and other technical personnel who will be developing and implementing FactorySuite A2 System solutions.

Sales personnel or Sales Engineers who need to define system topologies in order to submit FactorySuite A2 System project proposals.

It is assumed that you are familiar with the working environment of the Microsoft Windows 2000 Server, Windows Server 2003, and Windows XP Professional operating systems, as well as with a scripting, programming, or macro language. Also, an understanding of concepts such as variables, statements, functions, and methods will help you to achieve best results.

It is assumed that you are familiar with the individual components that constitute the FactorySuite A2 environment. For additional information about a component, see the associated user documentation.

All topologies referenced in this Deployment Guide assume a "Bus" topology, which comprises a single main communications line (trunk) to which nodes are attached; also known as Linear Bus. Exceptions are noted. For more information on standard topology schemas, see http://www.microsoft.com/technet/prodtechnol/visio/visio2002/plan/glossary.mspx or http://en.wikipedia.org/wiki/Category:Network_topologies.


FactorySuite A2 Application Versions

This Deployment Guide has been updated to include current Wonderware software product versions (current Service Packs are assumed for all software). However, the information is designed to apply to previous versions except where noted. The current versions are:

Wonderware Industrial Application Server 2.1
IndustrialSQL Server Historian 9.0
InTouch HMI 9.5
ActiveFactory Software 9.1
SCADAlarm Event Notification Software 6.0 SP1
SuiteVoyager Software 2.5
QI Analyst Software 4.2
DT Analyst Software 2.2
InTrack Software 7.1
InBatch Software 8.0 (FlexFormula and Premier 8.1)
Production Event Module (PEM) 1.0
InControl Software 7.11
Third Party and Wonderware SDK Applications as noted.

The figure in the FactorySuite A2 Terminology section that follows contains terms used throughout this document. Definitions of specific terms are included after the figure.


FactorySuite A2 Terminology

The following figure shows basic object classifications and their relationships within the IAS System. This document focuses on the Application/Device Integration/Engine/Platform/Area Objects level except where otherwise noted:

[Figure: Galaxy object classifications]

Galaxy Objects
    AutomationObjects
        Domain Objects
            Application Objects: AnalogDevice, DiscreteDevice, Field Reference, Switch, User-Defined
            Device Integration Objects (DIObjects): FS Gateway, AB TCP Network, AB PLC5, Etc.
        System Objects
            EngineObjects: AppEngine
            PlatformObjects: WinPlatform
            AreaObjects: Area

The following terms are used throughout this document:

Area: The AutomationObject that represents an Area of a plant within a Galaxy. The Area object acts as an alarm concentrator and is used to put other AutomationObjects into the context of the actual physical automation layout. ApplicationEngine (AppEngine): A real-time Engine that hosts and executes AutomationObjects. ApplicationObject: An AutomationObject that represents some element of a user application. This may include elements such as (but not limited to) an automation process component (e.g. thermocouple, pump, motor, valve, reactor, tank, etc.) or associated application component (e.g. function block, PID loop, Sequential Function Chart, Ladder Logic program, batch phase, SPC data sheet, etc.). AutomationObject: An Object that represents hardware, software or Engines as objects with a user-defined, unique name within the Galaxy. It provides a standard way to create, name, download, execute or monitor the represented component. Device Integration Objects (DIObject): AutomationObjects that represent the communication with external devices, which all run on the Application Engine. For example:


DINetwork Object: Refers to the object that represents the network interface port to the device via the Data Access Server, providing diagnostics and configuration for that specific card.

DIDevice Object: Refers to the object that represents the actual external device (e.g. PLC, RTU) associated with the DINetwork Object. It provides the ability to diagnose the device and to browse its data registers and DAGroups.

Platform Object: A representation of the physical hardware that the ArchestrA software is running on. Platform Objects host Engine Objects (see WinPlatform).

WinPlatform: A single computer in a Galaxy consisting of Network Message Exchange, a set of basic services, the operating system and the physical hardware. This object hosts Engines and is a type of Platform Object.

Document Conventions

This documentation uses the following conventions:

Convention    Used for
Bold          Menus, commands, buttons, icons, dialog boxes and dialog box options.
Monospace     Start menu selections, text you must type, and programming code.
Italic        Options in text or programming code you must type.

Where to Find Additional Information

Wonderware offers a variety of support options to answer questions on Wonderware products and their implementation.

ArchestrA Community Website

For timely information about products and real-world scenarios, refer to the ArchestrA Community website: http://www.archestra.biz. The ArchestrA Community website is an information center where users, systems integrators (SIs) and OEMs can share information and application stories, obtain products and learn about training opportunities. A key component of this website is the Application Object Warehouse, a constantly growing resource that provides downloadable ArchestrA objects, including a range of shareware products. In the future, objects from the Invensys-driven object library will be available for purchase. Third parties are also encouraged to submit their own ArchestrA objects for inclusion.


Technical Support

Before contacting Technical Support, please refer to the appropriate chapter(s) of this manual and to the User's Guide and Online Help for the relevant FactorySuite A2 System component(s). For local support in your language, please contact a Wonderware-certified support provider in your area or country. For a list of certified support providers, see http://www.wonderware.com/about_us/contact_sales.

E-mail: Receive technical support by sending an E-mail to your local distributor or to support@wonderware.com.

Online: You can access Wonderware Technical Support online at http://www.wonderware.com/support/mmi.

Telephone: Call Wonderware Technical Support at the following numbers:

U.S. and Canada (toll-free): 800-WONDER1 (800-966-3371), 7 a.m. to 5 p.m. (Pacific Time)
Outside the U.S. and Canada: (949) 639-8500

If you need to contact technical support for assistance, please have the following information available:

The type and version of the operating system you are using. For example, Microsoft Windows XP Professional.
The exact wording of the error messages encountered.
Any relevant output listing from the Log Viewer or any other diagnostic applications.
Details of the attempts you made to solve the problem(s) and your results.
Details of how to reproduce the problem.
If known, the Wonderware Technical Support case number assigned to your problem (if this is an ongoing problem).

When requesting technical support, please include your first, last and company names, as well as the telephone number or e-mail address where you can be reached.


CHAPTER 1

Planning the Integration Project

The FactorySuite A2 System project begins with a thorough planning phase. This chapter explains the FactorySuite A2 project workflow. The workflow is designed to make engineering efforts more efficient by completing specific tasks in a logical and consistent (repeatable) sequence.

Contents
FactorySuite A2 Project Workflow


FactorySuite A2 Project Workflow

The project information resulting from the planning phase becomes a roadmap (project template) when creating the FactorySuite A2 System using the Integrated Development Environment (IDE). The more detailed the project plan, the less time it takes to create and implement the application, with fewer mistakes and rework.

FactorySuite A2 Workflow Summary

The following figure (workflow diagram) summarizes the sequential tasks necessary to successfully complete a FactorySuite A2 project:

1. Identify Field Devices and Functional Requirements
2. Define Naming Conventions
3. Define the Area Model
4. Plan Templates
5. Define the Security Model
6. Define the Deployment Model

Each task is detailed on the following pages, and includes a checklist (summary) where applicable.

Identify Field Devices and Functional Requirements

The first project workflow task identifies field devices that are included in the system. Field devices include components such as valves, agitators, rakes, pumps, Proportional-Integral-Derivative (PID) controllers, totalizers, and so on. Some devices are made up of more base-level devices. For example, a motor is a device that may be part of an agitator or a pump. After identifying all field devices, determine the functionality for each.


Field Devices Checklist

A. To identify field devices, refer to a Piping and Instrumentation Diagram (P&ID). This diagram shows all field devices and illustrates the flow between them. A good P&ID ensures the application planning process is faster and more efficient. Verify that the P&ID is correct and up-to-date before beginning the planning process. The following figure shows a simple P&ID:

[Figure: simple P&ID. Tags shown: FIC 301, FV103, PT 301, TT 301, FIC 402, FV402, LIC 401, DRIVE 3, FT 302, CT 301, LT 402, PT 401, FV403, DRIVE 4, FT 401, CT 401, FV401]

The key for this P&ID is as follows:

FIC = Flow Controller
PT = Pressure Transmitter
TT = Temperature Transmitter
FT = Flow Transmitter
CT = Concentration Transmitter
LT = Level Transmitter
LIC = Level Controller
FV = Flow Valve

B. Examine each component in the P&ID and identify each basic device. For example, a simple valve can be a basic device. A motor, however, may be comprised of multiple basic devices.


C. Once a complete list is created, group the devices according to type, such as by Valves, Pumps, and so on. Consolidate any duplicate devices into common types so that only a list of unique basic devices remains, and then document them in the project planning worksheet. Each basic device is represented in the IDE as an ApplicationObject. An instance of an object must be derived from a defined template. The number of device types in the final list will help determine how many object templates are necessary for your application. Group multiple basic objects to create more complex objects (containment). For more information on objects, templates, and containment, see the IDE documentation for the Industrial Application Server.
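The grouping and consolidation in step C can be sketched in a few lines of Python. This is only an illustration of the planning exercise, not part of the IDE: the tag list is copied from the sample P&ID, the prefix key comes from its legend, and the "DRIVE" entry is an assumption because the legend does not define it.

```python
from collections import defaultdict

# Prefix-to-type key from the P&ID legend.
# "DRIVE" is not in the legend; treating it as its own type is an assumption.
TAG_KEY = {
    "FIC": "Flow Controller",
    "PT": "Pressure Transmitter",
    "TT": "Temperature Transmitter",
    "FT": "Flow Transmitter",
    "CT": "Concentration Transmitter",
    "LT": "Level Transmitter",
    "LIC": "Level Controller",
    "FV": "Flow Valve",
    "DRIVE": "Drive",
}

PID_TAGS = [
    "FIC 301", "FV103", "PT 301", "TT 301", "FIC 402", "FV402",
    "LIC 401", "DRIVE 3", "FT 302", "CT 301", "LT 402", "PT 401",
    "FV403", "DRIVE 4", "FT 401", "CT 401", "FV401",
]


def device_type(tag):
    """Map a P&ID tag to a device type using its alphabetic prefix."""
    prefix = "".join(ch for ch in tag if ch.isalpha()).upper()
    return TAG_KEY.get(prefix, "Unknown")


def group_by_type(tags):
    """Group tags by device type; each key is a candidate object template."""
    groups = defaultdict(list)
    for tag in tags:
        groups[device_type(tag)].append(tag.replace(" ", ""))
    return dict(groups)


groups = group_by_type(PID_TAGS)
for name, members in groups.items():
    print(f"{name}: {', '.join(members)}")
```

Each key in the result is a candidate entry for the list of unique basic devices in the project planning worksheet.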

Functional Requirements Checklist

D. Define the functional requirements for each unique device. The functional requirements list includes:

User Defined Attributes: Determine the types of attributes the object will have. Attributes are parameters of the object that can access data from other objects as well as provide access to their own data to other objects (inputs and outputs).

Scripting: What scripts will be associated with the device? Specify scripts both for self-configuring the object as well as for run-time operation.

Historization: Are there process values associated with this device that you want to historize? How often do you want to store the values? Do you want to add change limits for historization?

Alarms and Events: Which attributes require alarms? What values do you want to be logged as events?

Note The Industrial Application Server IDE's alarms and events provide similar functionality to what is provided within InTouch Software.

Security: Which users will have access to the device? What type of access is appropriate? For example, you may grant a group of operators read-only access for a device, but allow read-write access for an administrator. You can set up different security for each attribute of a device.

All the above functional requirement areas are discussed in detail in this Deployment Guide.
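One way to record the answers to these questions during planning is a small data structure per unique device type. The following Python sketch is illustrative only: the class and field names are hypothetical, and the attribute names are borrowed from the valve example used in the naming section of this chapter.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AttributeSpec:
    """Planning record for one attribute (hypothetical structure)."""
    name: str
    data_type: str
    historize: bool = False
    alarm: bool = False
    security: str = "Operate"


@dataclass
class DeviceRequirements:
    """Functional requirements for one unique device type."""
    device_type: str
    attributes: List[AttributeSpec] = field(default_factory=list)
    scripts: List[str] = field(default_factory=list)

    def historized(self):
        return [a.name for a in self.attributes if a.historize]

    def alarmed(self):
        return [a.name for a in self.attributes if a.alarm]


valve = DeviceRequirements(
    device_type="Valve",
    attributes=[
        AttributeSpec("OLS", "Boolean", historize=True),
        AttributeSpec("CLS", "Boolean", historize=True),
        AttributeSpec("Out", "Boolean", alarm=True),
        AttributeSpec("Auto", "Boolean"),
        AttributeSpec("Man", "Boolean", security="Tune"),
    ],
    scripts=["OnScan: initialize", "Execute: check travel time"],
)

print(valve.historized())
print(valve.alarmed())
```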


Define Object Naming Conventions

The second workflow task defines naming conventions for templates, objects, and object attributes. Naming conventions should adhere to:

Conventions in use by the company.
ArchestrA IDE naming restrictions. For information on allowed names and characters, see IDE documentation.

The following (instance) tagname is used as an example: YY123XV456

It has the following attributes: OLS, CLS, Out, Auto, Man

The following figure shows the differences between the HMI and ArchestrA naming conventions:

HMIs (Individual Tags):
YY123XV456\OLS
YY123XV456\CLS
YY123XV456\Out
YY123XV456\Auto
YY123XV456\Man

ArchestrA (Object with Object Attributes):
YY123XV456
    .OLS
    .CLS
    .Out
    .Auto
    .Man

Create references using the following IDE naming convention:

<objectname>.<attributename>

For example: YY123XV456.OLS
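When migrating an existing tag list, the mapping from the HMI-style name to the object reference is mechanical. The helper below is a hypothetical planning aid written in Python, not an IDE function; it assumes the HMI tag uses a single backslash between the object name and the attribute, as in the example above.

```python
def hmi_tag_to_reference(hmi_tag):
    """Convert an HMI-style tag (object\\attribute) to <objectname>.<attributename>."""
    object_name, separator, attribute = hmi_tag.partition("\\")
    if not separator or not object_name or not attribute:
        raise ValueError(f"expected 'object\\attribute', got {hmi_tag!r}")
    return f"{object_name}.{attribute}"


hmi_tags = [
    "YY123XV456\\OLS",
    "YY123XV456\\CLS",
    "YY123XV456\\Out",
    "YY123XV456\\Auto",
    "YY123XV456\\Man",
]
references = [hmi_tag_to_reference(tag) for tag in hmi_tags]
print(references)
```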


Define the Area Model

The third workflow task defines the Area model. An Area is a logical object group that represents a portion of the physical plant layout. For example, Receiving Area, Process Area, Packaging Area and Dispatch Area are all logical representations of a physical plant area. Define and document all necessary Plant Areas to ensure each AutomationObject is assigned to its relevant Area.

Note The default installation creates the Unassigned Area. All object instances will be assigned to this Area unless new areas are created.

Area Model Checklist

A. Create all Areas first. An object instance can then be easily assigned to the correct Area; otherwise, you will have to move them out of the Unassigned Area later.

B. Create a System Area. Assign instances of WinPlatform and AppEngine objects to the System Area. WinPlatform and AppEngine objects are used to support communications for the application, and are not necessarily relevant to a plant-related Area.

C. Group Alarms according to Areas.

D. Areas can be nested. Sub-areas can be assigned to a different AppEngine on a different platform.

E. When building an Area hierarchy, remember that the base Area (assigned to a Platform) determines how underlying objects are deployed.

F. If a plant area (physical location) contains two computers running AutomationObject Server platforms, two logical Areas must be created for the single physical plant area. It may be practical to create an object instance for one Area at a time. If using this development approach, mark the Area as Default, so that each object instance is automatically assigned to the Default Area. Before creating instances in another Area, change the default setting to the new Area.

G. Equate various Areas to Alarm Groups. Alarm displays can easily be filtered at the Area level.

For more information on Areas, see the IDE documentation.
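The relationship between nested Areas and alarm filtering (items D and G) can be illustrated with a small Python model. This is a planning sketch under stated assumptions, not the ArchestrA object model: the Area class, the area names, and the alarm tuples are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Area:
    """A logical Area that holds objects and, optionally, nested sub-areas."""
    name: str
    objects: List[str] = field(default_factory=list)
    sub_areas: List["Area"] = field(default_factory=list)

    def all_objects(self):
        """Objects assigned to this Area or to any nested sub-area."""
        found = list(self.objects)
        for sub in self.sub_areas:
            found.extend(sub.all_objects())
        return found


def filter_alarms(alarms, area):
    """Keep only alarms raised by objects that belong to the given Area."""
    members = set(area.all_objects())
    return [alarm for alarm in alarms if alarm[0] in members]


line_1 = Area("Line_1", objects=["FV401", "FT401"])
process = Area("Process", objects=["LIC401"], sub_areas=[line_1])

alarms = [("FV401", "Travel timeout"), ("PT301", "High pressure"), ("LIC401", "Level high")]
print(filter_alarms(alarms, process))
print(filter_alarms(alarms, line_1))
```

Filtering on the parent Area returns alarms from its sub-areas as well, which mirrors the use of Areas as alarm groups.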


Plan Templates

The fourth workflow task determines the necessary object "shape" templates. A Shape template is an object that contains common configuration parameters for derived objects (objects used multiple times within a project). The Shape Template is derived from the $BaseTemplate object and is designed to represent baseline or "generic" physical objects, or to encapsulate specific, baseline functionality within the production environment. Both the Shape Templates and child Template instances are called ApplicationObjects.

For example, multiple instances of a certain valve type may exist within the production environment. Create a Shape valve template that includes the required basic valve properties. The Shape Template can now be reused multiple times, either as another template or an object instance. If changes are necessary, they are propagated to the derived object instances. Use the drag-and-drop operation within the IDE to create object instances.

The following figure shows multiple instances (Valve001, -002, etc.) derived from a single object template ($Valve):

Note For a practical example of a Shape Template, see "Using "Shape" Template Objects" on page 148.

Industrial Application Server is shipped with a number of pre-defined base templates to help you create your application quickly and easily. The base templates provided with Industrial Application Server are summarized in "Base Template Functional Summary" on page 112. Determine if any of their functionality matches the requirements of the devices on your list. If the base templates do not satisfy the design requirements, create (derive) new shape templates or object instances from the $UserDefined object base template.


[Figure: Application Objects. Base Templates: $AnalogDevice, $DiscreteDevice, $FieldReference, $UserDefined. Derived Template: $Valve]

A child template derived from a base (parent) template can be highly customized. Implement user-defined attributes (UDAs), scripting, and alarm and history extensions.

Note Use the Galaxy Dump and Load Utility to create a .csv file, which can then be modified using a text editor. Then load the .csv file back into the Galaxy Repository. This enables quick and easy bulk configuration edits.

Template Derivation

Since templates can be derived from other templates, and child templates can inherit properties of the parents, establish a template hierarchy that defines what is needed before creating other object templates or instances. Always begin with the most basic template for a type of object, then derive more complicated objects. If applicable, lock object attributes at the template level, so that changes cannot be made to those same attributes for any derived objects.

A production facility typically uses many different device models from different manufacturers. For example, a process production environment has a number of flow meters in a facility. A base flow meter template would contain those fields, settings, and so on, that are common to all models used within the facility. Derive a new template from the base flow meter template for each manufacturer. The derived template for the specific manufacturer includes additional attributes specific to the manufacturer. A new set of templates would then be derived from the manufacturer-specific template to define specific models of flow meters. Finally, instances would be created from the model-specific template.

Note For detailed examples of template derivation, see Chapter 5, "Working with Templates." For more information on templates, template derivation, and locking, see the IDE documentation.
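The flow meter example above can be modeled in Python to show how inheritance and locking interact. This is a conceptual sketch only and does not reflect the IDE's internal implementation: the Template class, the template names, and the attribute names and values are all hypothetical.

```python
class Template:
    """Minimal model of a template that inherits and can lock attributes."""

    def __init__(self, name, parent=None, attributes=None, locked=()):
        self.name = name
        self.parent = parent
        self.own = dict(attributes or {})
        self.locked = set(locked)

    def locked_names(self):
        """Attributes locked here or in any ancestor template."""
        inherited = self.parent.locked_names() if self.parent else set()
        return inherited | self.locked

    def resolved(self):
        """Effective attribute values: parent values overridden by own values."""
        values = self.parent.resolved() if self.parent else {}
        values.update(self.own)
        return values

    def derive(self, name, attributes=None, locked=()):
        """Create a child; overriding a locked attribute is rejected."""
        attributes = dict(attributes or {})
        blocked = self.locked_names() & set(attributes)
        if blocked:
            raise ValueError(f"locked in a parent template: {sorted(blocked)}")
        return Template(name, self, attributes, locked)


base = Template("$FlowMeter", attributes={"EngUnits": "l/min", "Range": 100}, locked=["EngUnits"])
vendor = base.derive("$FlowMeter_VendorA", {"Protocol": "HART"})
model = vendor.derive("$FlowMeter_VendorA_M100", {"Range": 250})
instance = model.derive("FT401")

print(instance.resolved())

# A change to the base template reaches every derived object.
base.own["EngUnits"] = "m3/h"
print(instance.resolved()["EngUnits"])
```

Because derived objects resolve locked values through their parents, a change made once at the base template is visible in every instance, which is the main reason to start with the most basic template.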

Template Containment

Template containment allows more advanced structures to be modeled as a single object. For example, a new template called "Tank" is derived from the $UserDefined base or shape template. Use the instance to contain other ApplicationObjects that represent aspects of the tank, such as pumps, valves, and levels.


Then, derive two $DiscreteDevice template instances called "Inlet" and "Outlet," and configure them as valves. Derive a $AnalogDevice template instance called "Level," and contain all three within the Tank template. The containment hierarchy is as follows:

$Tank
    $V101 [ Inlet ]
    $V102 [ Outlet ]
    $LT102 [ Level ]

Note Deeply nested template/container structures can slow the check-in of changes in IDE development and propagation.

Two options are available when defining object properties:

Template containment

Use template containment to create a higher-level object with lower-level objects. This practice works best when the lower-level object also has many components to it and may contain even lower-level objects. However, when adding the lowest-level object to a template, it is possible to use either template containment or user-defined attributes. Both allow for an external I/O point link and historization. If required attributes (such as complex alarms, setpoints, or other features) are readily available in a template, use template containment. If the lower-level object is very basic, use a UDA (User-Defined Attribute). It is always valid to use a contained object, even if it is a simple property.

Always use a contained object for I/O points and use a user-defined attribute for memory or calculated values. How this is accomplished is up to the application designer, and should be decided in advance for project consistency.
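The Tank containment hierarchy shown earlier can be represented as a simple tree in Python. This is a sketch for planning purposes: the ModelObject class is hypothetical, and composing a dotted path from the container name and the contained names is an assumption made here for illustration.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Tuple


@dataclass
class ModelObject:
    """An object with a tagname and, when contained, a contained name."""
    tagname: str
    contained_name: str = ""
    children: List["ModelObject"] = field(default_factory=list)

    def contain(self, child):
        """Add a contained object and return the container for chaining."""
        self.children.append(child)
        return self

    def walk(self, prefix="") -> Iterator[Tuple[str, str]]:
        """Yield (dotted path, tagname) for this object and everything it contains."""
        local = self.contained_name or self.tagname
        path = f"{prefix}.{local}" if prefix else local
        yield path, self.tagname
        for child in self.children:
            yield from child.walk(path)


tank = (
    ModelObject("$Tank")
    .contain(ModelObject("$V101", "Inlet"))
    .contain(ModelObject("$V102", "Outlet"))
    .contain(ModelObject("$LT102", "Level"))
)

for path, tagname in tank.walk():
    print(f"{path:15} {tagname}")
```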

Object Template Checklist

A. Document which existing templates can be used for which objects, and which templates are created from scratch. For information on a particular object template, see the Help file for that object.

B. Design your containment model at the template level before generating large object instance quantities.

C. Create instances from the top "container" template of a hierarchical set of contained templates. Such template hierarchies should be tested with one or two instances before proceeding to the generation of numerous instances.

Any change by insertion or removal of a contained template in the hierarchy does not result in propagation of new insertions or removals in the instance hierarchies. For instances of the containment hierarchy, insertions and removals must be managed individually. However, changes within already included contained templates can be automatically propagated by locking.


Note For detailed information on working with templates, see Chapter 5, "Working with Templates."

Define the Security Model

The fifth workflow task defines the security model. The following basic concepts are reviewed in order to reinforce understanding of the ArchestrA security model:

Users: A user is each individual person that will be using the system. For example, John Smith and Peter Perez.

Roles: Roles define groups of users within the security system. Roles usually reflect the type of work performed by different groups within the factory environment. For example, Operators and Technicians.

Permissions: Permissions determine what users are allowed to do within the system. For example: Operate, Tune, and Configure.

Security Groups: A security group is a group of objects with the same security characteristics. The purpose of a security group is to simplify object security management by avoiding the need to assign security permission for each role to each individual object. Security groups typically map to Areas and reflect a physical location of your plant, but an Area can have more than one security group if multiple levels of security are required within the Area. For example, it may be necessary to assign specific Technicians to the Line_1 security group, but not to the Line_2 security group.

Security Model Checklist

A. Define Users, Roles, Permissions, and Security Groups necessary to implement security for the factory environment. Select Users and Roles previously defined within the Operating System Security model, or define them within the IDE. A combination of both security types is also possible. Using Operating System users and Roles facilitates object deployment and makes future maintenance easier.

B. Determine security settings for writable attributes of objects. The security options for writable attributes consist of: Read Only, Operate, Tune, Secured Write, Verified Write, and Configure. Review the functional worksheet that lists the objects (and their attributes). Security permissions reflect the users' rights to change the attribute value. An Operate permission requirement does not mean that the user must be an Operator. A QA inspector might have Operate permissions to change a value on an object that collects QA data, while an operator on the same production line does not have this permission.


To set up Security using the IDE

1. Configure the attribute security for Template objects (at the Template level).
2. Create security groups.
3. Create roles and assign them to security groups.
4. Select permissions and grant them to roles.
5. Define users and assign them to roles.

For more information on security configuration, see the IDE documentation.

Note For detailed information about FactorySuite A2 Security, see Chapter 7, "Architecting Security."
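The relationships among users, roles, permissions, and security groups can be checked on paper before they are configured. The following Python sketch models them with plain dictionaries. It is a simplified illustration, not the ArchestrA security implementation: the object names, attribute names, and role assignments are hypothetical, and Secured Write and Verified Write are left out for brevity.

```python
# Role -> security group -> permissions granted (hypothetical assignments).
ROLES = {
    "Operators": {"Line_1": {"Operate"}, "Line_2": {"Operate"}},
    "Technicians": {"Line_1": {"Operate", "Tune"}},
}

# User -> roles.
USERS = {"John Smith": ["Operators"], "Peter Perez": ["Technicians"]}

# Object -> security group it belongs to.
OBJECT_GROUP = {"FV401": "Line_1", "FV402": "Line_2"}

# (object, attribute) -> security classification set at the template level.
ATTRIBUTE_SECURITY = {
    ("FV401", "Out"): "Operate",
    ("FV401", "Gain"): "Tune",
    ("FV402", "Out"): "Operate",
    ("FV402", "Gain"): "Tune",
}


def can_write(user, obj, attribute):
    """True if any role of the user grants the permission the attribute requires."""
    required = ATTRIBUTE_SECURITY.get((obj, attribute), "Read Only")
    if required == "Read Only":
        return False
    group = OBJECT_GROUP[obj]
    return any(required in ROLES[role].get(group, set()) for role in USERS.get(user, []))


print(can_write("John Smith", "FV401", "Out"))
print(can_write("John Smith", "FV401", "Gain"))
print(can_write("Peter Perez", "FV402", "Gain"))
```

Peter Perez can tune attributes in Line_1 but has no rights in Line_2, which matches the Technicians example given for security groups.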

Define the Deployment Model

The last workflow task defines the deployment model that specifies where objects are deployed. In other words, the deployment model defines which nodes will host the various AutomationObjects. Each computer in the FactorySuite A2 System network must have a Platform object, AppEngine object, and Area object deployed to it. For example, KT101, LT101 and MK101 are all areas in the following figure:

[Figure: Deployment view tree. Nodes shown, in order: MyGalaxy; Unassigned Host; MMark08; MMark13; AppEngine001; KT101 [ KT101 ]; LT101 [ LT101 ]; MK101 [ MK101 ]; LT112HT239; LT112P200; LT112PT221; LT112PT223]

The objects deployed on particular platforms and engines define the objects' "load" on the platform. The load is based on the number of I/O points, the number of user-defined attributes (UDAs), etc. The more complex the object, the higher the load required to run it.

Note For object types and target deployment node recommendations (such as DIObjects), see Chapter 10, "Assessing System Size and Performance."

After deployment, use the Object Viewer to check communications between nodes and determine if the system is running optimally. For example, a node may be executing more objects than it can easily handle, and it will be necessary to deploy one or more objects to another computer.
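A rough tally of I/O points and UDAs per platform can help spot an overloaded node at the planning stage. The Python sketch below is only a counting aid: the assignment of objects to MMark08 and MMark13 and all of the counts are invented for illustration, and it does not replace the sizing guidance in Chapter 10.

```python
from collections import defaultdict

# (object, platform, I/O points, UDAs). Assignments and counts are hypothetical.
DEPLOYMENT = [
    ("LT112HT239", "MMark08", 4, 2),
    ("LT112P200", "MMark08", 6, 3),
    ("LT112PT221", "MMark13", 2, 1),
    ("LT112PT223", "MMark13", 2, 0),
]


def load_per_platform(deployment):
    """Sum object count, I/O points, and UDAs for each platform."""
    totals = defaultdict(lambda: {"objects": 0, "io_points": 0, "udas": 0})
    for _name, platform, io_points, udas in deployment:
        entry = totals[platform]
        entry["objects"] += 1
        entry["io_points"] += io_points
        entry["udas"] += udas
    return dict(totals)


def busiest(deployment):
    """Platform with the most I/O points, a first candidate for rebalancing."""
    loads = load_per_platform(deployment)
    return max(loads, key=lambda platform: loads[platform]["io_points"])


loads = load_per_platform(DEPLOYMENT)
print(loads)
print(busiest(DEPLOYMENT))
```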


Note For more information on deployment, see IDE documentation.

Document the Planning Results

Determine how to document the project planning results before beginning the planning phase. Use Microsoft Excel (or other spreadsheet application) to document the list of devices, the functionality of each device, process areas to which the devices belong, etc. For example:
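A planning worksheet of this kind can also be produced as a .csv file that opens in any spreadsheet application. The Python sketch below shows one possible layout. The column names and the two sample rows are assumptions chosen for illustration; they are not a format defined by this guide.

```python
import csv
import io

# Hypothetical worksheet columns; adapt them to the project's own conventions.
COLUMNS = ["Device", "Type", "Area", "Historize", "Alarms", "Security"]

ROWS = [
    {"Device": "FV401", "Type": "Flow Valve", "Area": "Line_1",
     "Historize": "Out", "Alarms": "Travel timeout", "Security": "Operate"},
    {"Device": "LT402", "Type": "Level Transmitter", "Area": "Line_1",
     "Historize": "PV", "Alarms": "Hi; HiHi", "Security": "Tune"},
]


def worksheet_csv(rows):
    """Return the worksheet as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


text = worksheet_csv(ROWS)
print(text)
```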


CHAPTER 2

Identifying Topology Requirements

System and information requirements are unique to each manufacturing domain. To control equipment, computers must provide real-time response to interrupts. To plan production, scheduling systems must consider sales commitments, routing costs, equipment downtime, and numerous other variables. Enterprise system and information requirements are satisfied by architecting effective network topologies and implementing software to leverage the topology.

This chapter describes common FactorySuite A2 System topologies. They include application components such as Industrial Application Server, IndustrialSQL Server Historian, InTouch HMI software, and Wonderware I/O Servers, OLE for Process Control (OPC) Servers, and Data Access Servers (DAServers). The topology configurations include descriptions and "best practice" recommendations for specific components and functionality.

Note For updated information on system requirements, see the user guides or readme files in the installation directory of the appropriate product CD. Pay particular attention to the requirements regarding the version and Service Pack level of the operating system and other application components.

Contents
Topology Component Distribution
Topology Categories
General Topology Planning Considerations
Best Practices for Topology Configuration
I/O Server Connectivity
Extending the IAS Environment


Topology Component Distribution

A Galaxy encompasses the whole supervisory control system, which is represented by a single logical namespace and a collection of Platforms, AppEngines, and objects. The Galaxy defines the namespace in which all components and objects reside.

Because of its distributed nature and common services, Industrial Application Server does not require expensive server-class or fault-tolerant computers to enable a robust industrial application. The Industrial Application Server distributes objects throughout a distributed (networked) environment, allowing a single application to be split into a number of different component objects, each of which can run on a different computer.

Before exploring the following FactorySuite A2 System topologies, review the main components and how they will be distributed based on requirements and functionality. The main topology components are:

Galaxy Repository (Configuration Database)
AutomationObject Server Node (AOS)
Visualization Node
I/O Server Node
Engineering Station Node
Historian Node
SuiteVoyager Portal

The following figure identifies each topology component:

[Figure: topology components. Labels shown: three Visualization Nodes; Supervisory Network; Network Device (Switch or Router); AutomationObject Server; Historian (Data and Alarms); Engineering Station; Configuration Database; SuiteVoyager Portal; I/O Server; PLC Network]


Galaxy Repository (Configuration Database)

The Galaxy Repository (also called Configuration Database or "GR") can be installed on a dedicated node or on the Engineering Station.

Note The following topology figures show the Galaxy Repository as the Configuration Database on the Engineering Node.

The Galaxy Repository manages the configuration data associated with one or more Galaxies. This data is stored in individual databases, one for each Galaxy in the system. Microsoft SQL Server 2000 (Standard Edition) is the relational database used to store the data.

Note Install the Galaxy Repository on the same node as the AOS only in a single-node system. For information on installing the GR on the same node with any other components, see "Single Node System Implementation" on page 234.

During run-time, the GR communicates with all nodes in the Galaxy to keep them updated on global changes such as security model modifications. Although it is possible to disconnect the GR from the Galaxy and keep the remaining nodes in production, it is recommended to maintain the GR connection to the Galaxy so that all global changes are transferred when they occur.

The Galaxy Repository is accessed when the objects in the database are viewed, created, modified, deleted, deployed, or uploaded. The Galaxy Repository is also accessed when a running object attempts to access another object that has not been previously referenced.

Best Practice

If working in a distributed network context (wide-area networks, distributed SCADA systems characterized by slow connections), install the Galaxy Repository on a laptop computer and carry it to remote sites as a portable resource for application maintenance. Connect the portable configuration database node to the local node via a dedicated local area network (LAN) connection to expedite the process of deploying/undeploying objects and creating and configuring templates. Remember that if the Galaxy Repository is not available on the network, existing objects cannot be deployed or undeployed to/from any Platform. However, any deployed objects will continue to operate normally. Create a ghost image of the portable GR Node, and perform frequent backups in case the laptop is damaged or lost.


Configuration and run-time components for the Configuration Database node are described in the following table:

Configuration Components                    Run-Time Components
Integrated Development Environment (IDE)    Bootstrap*
Galaxy Repository*                          Platform*
Microsoft SQL Server 2000*

* Required component

AutomationObject Server Node

The AutomationObject Server node provides the processing resources for AppEngines, Areas, Application Objects, and DeviceIntegration (DI) Objects. The AutomationObject Server node requires a Platform to be deployed.

The AutomationObject Server node functionality can be combined with a visualization node, depending on the process requirements and system capabilities. A distributed local network topology takes advantage of this type of configuration to provide flexibility to the system.

Configuration and run-time components for the AutomationObject Server node are described in the following table:

Configuration Components                    Run-Time Components
Integrated Development Environment (IDE)    Bootstrap*
                                            Platform*
                                            AppEngine*
                                            Areas
                                            ApplicationObjects
                                            DIObjects

* Required component


Visualization Node

A Visualization node is a computer running InTouch Software on top of a Platform. The Platform provides for communication with any other Galaxy component via the Message Exchange (MX) protocol.

Configuration and run-time components for the Visualization node are described in the following table:

Configuration Components    Run-Time Components
(none)                      Bootstrap*
                            Platform*
                            InTouch Software 8.0 or later, or InTouch View 8.0 or higher*

* Required component

I/O Server Node

An I/O Server node functions as the data source for the Industrial Application Server system. I/O Servers communicate with external devices. Supported communication protocols: Dynamic Data Exchange (DDE), SuiteLink, and OPC.

Note An I/O Server node can also be an AutomationObject Server, depending on the available memory and CPU resources.

DIObjects on the Industrial Application Server side manage the communication between AutomationObjects and the I/O Servers. DIObjects provide connectivity to I/O data sources, and represent the corresponding server(s) as part of the plant model in the AutomationServer environment. In the case of DAServers, the corresponding DIObjects are so closely related that redeploying a DIObject to a different node uninstalls the DAServer locally and reinstalls it in the target machine.

DIObjects require a Platform and an AppEngine. These objects can reside either on the I/O Server node or on any AutomationObject Server node in the Galaxy.

Configuration and run-time components for the I/O Server node are described in the following table:

Configuration Components    Run-Time Components
I/O Server*                 Bootstrap
                            Platform
                            InTouch Software 8.0 or higher, or InTouch View 8.0 or higher
                            I/O Server*

* Required component


Best Practice

Observe the following guidelines to optimize I/O data transmission:

Always deploy the DIObject to the same node where the I/O data source is located, regardless of the protocol used by the I/O data source (DDE, SuiteLink, OPC).

If the I/O data source is on a node that is remote to the AutomationObject Server, the communication between the nodes is highly optimized by the MX (Message Exchange) protocol. A particular benefit is gained with this configuration when using OPC servers in remote nodes, since the system uses the MX protocol instead of DCOM communication between nodes.

If the I/O data source is located in the same node as the AutomationObject Server, the communication is local, minimizing the travel time of data through the system.

Note I/O Server installation sequence is important: Always install the most recent software last. For example: first I/O Servers, then DAServers, then the Bootstrap, and so on.
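The first guideline above (deploy each DIObject to the node that hosts its I/O data source) is easy to verify against a planned deployment list. The Python sketch below is a hypothetical check over planning data; the node, data source, and DIObject names are invented for illustration and it does not query a running Galaxy.

```python
# I/O data source -> node that hosts it (hypothetical names).
DATA_SOURCE_NODE = {"ABTCP_Server": "IONode1", "OPC_Server": "IONode2"}

# DIObject -> (node it is planned for, I/O data source it uses).
DIOBJECTS = {
    "ABTCP_Net1": ("IONode1", "ABTCP_Server"),
    "OPC_Client1": ("AOSNode1", "OPC_Server"),
}


def misplaced_diobjects(diobjects, source_nodes):
    """Return DIObjects planned for a different node than their I/O data source."""
    return sorted(
        name
        for name, (node, source) in diobjects.items()
        if source_nodes[source] != node
    )


print(misplaced_diobjects(DIOBJECTS, DATA_SOURCE_NODE))
```

In this example OPC_Client1 is flagged because it is planned for AOSNode1 while its OPC server runs on IONode2, which would force DCOM traffic between nodes.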

Engineering Station Node

All of the recommended topologies include a dedicated Engineering Station node for maintenance purposes. The Engineering Station node contains a FactorySuite Development package which consists of components such as the Integrated Development Environment (IDE) and InTouch WindowMaker.

The GR (Galaxy Repository) could also reside on this node. Thus, you could implement any required changes in the system from the Engineering Station node using the IDE, which would then access the local configuration database. This configuration provides flexibility in maintaining remote sites, since you can use a laptop computer as the Engineering Station containing the configuration database node and gain access to the AutomationObject Server node via a LAN connection.

With the IDE and the Galaxy Repository on the same node, if you need to deploy or undeploy large applications, network traffic in the Galaxy would not be affected by the IDE accessing the configuration data in a remote node.

Configuration and run-time components for the Engineering Station node are described in the following table:

Configuration Components                    Run-Time Components
Integrated Development Environment (IDE)    Bootstrap*
InTouch WindowMaker 8.0 or higher           Platform

* Required component

The IDE does not require a Platform. However, if Object Viewer is used on an Engineering Station node, a Platform is required. For more information about Object Viewer, see the Object Viewer documentation.


Best Practice

When remote off-site access to the Galaxy Repository is required by means of the IDE, use a Terminal Services session or Remote Desktop connection to the Galaxy Repository node, where the IDE has also been installed.

Important! To launch an IDE session, the user account for the session must belong to the Administrators group of the Terminal Server node.

For more information about integrating InTouch Software for Terminal Services with other FactorySuite A2 System components, see the Terminal Services for InTouch Deployment Guide.

The Engineering Station node also hosts the development tools to modify InTouch Software applications using WindowMaker.

Historian Node

The Historian node runs the IndustrialSQL Server Historian software. IndustrialSQL Server Historian stores all historical process data and provides real-time data to FactorySuite client applications such as ActiveFactory and SuiteVoyager Software. The Historian node does not require a Platform.

The AutomationObject Server pushes data (configured for historization) to the Historian node using the Manual Data Acquisition Service (MDAS) packaged with Industrial Application Server and IndustrialSQL Server Historian.

Important! MDAS uses DCOM to send data to IndustrialSQL Server Historian. Ensure that DCOM is enabled (not blocked) and that TCP/UDP port 135 is accessible on both the AppServer and IndustrialSQL Server Historian nodes. The port may not be accessible if DCOM has been disabled on either computer, or if a router between the two computers blocks the port.

Configuration and run-time components for the Historian node are included in the following table:

Configuration Components    Run-Time Components

Microsoft SQL Server 2000*

IndustrialSQL Server Historian*

* Required component
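
The port 135 requirement described above for MDAS can be spot-checked from either node before commissioning. The following is a minimal Python sketch, not part of the Wonderware software: the function name `tcp_port_open` is hypothetical, and it only tests whether a TCP connection to the port can be opened. It does not test UDP and does not prove that DCOM calls will succeed.

```python
import socket


def tcp_port_open(host: str, port: int = 135, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    Illustrative helper only: a successful connection shows that the port
    is reachable over TCP, nothing more.
    """
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, running `tcp_port_open("historian-node")` on the AppServer node (where `historian-node` is a placeholder host name) returns `False` if a router or firewall between the two computers blocks TCP port 135.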

Best Practice

Most system topologies combine the Historical and Alarm databases on the Historian Node. Configure the alarm system using the Alarm Logger utility, which creates the appropriate database and tables in Microsoft SQL Server. For requirements and recommendations for alarm configuration, see Chapter 9, "Implementing Alarms and Events." For information about historization, see Chapter 8, "Historizing Data."


SuiteVoyager Portal

A server machine with a SuiteVoyager Software portal can be incorporated into any Galaxy. Use the Win-XML Exporter to convert InTouch windows to XML format, so SuiteVoyager Software clients can access real-time data from the Galaxy. A Platform must be deployed on the SuiteVoyager Portal for SuiteVoyager Software to access the Galaxy.

Configuration and run-time components for the SuiteVoyager Portal are described in the following table:

Configuration Components                      Run-Time Components
SuiteVoyager Software 2.0 SP1 or higher*      Bootstrap*
                                              Platform*
                                              SuiteVoyager Software 2.0 SP1 or higher*

* Required component

For information on deployment options for SuiteVoyager Software, see the SuiteVoyager Software documentation.


Topology Categories

The following information describes high-level topology categories using FactorySuite A2 System components.

Distributed Local Network

This topology is designed for medium-sized systems in which each node can easily handle the processing requirements of the software components it hosts while delivering the projected performance. The primary characteristic of this topology type is that Visualization and AutomationObject Server functionalities coexist in the same node, called a Workstation. Several Workstation nodes (defined below) share data, and each node runs multiple functional components. The Workstation nodes coexist on a locally distributed network. The following Workstation characteristics are assumed unless otherwise noted:

Workstation Node

The Visualization and AutomationObject Server components are combined on the same node. Both components share the Platform, which handles communication with other nodes in the Galaxy. The Platform also allows for deployment/undeployment of ApplicationObjects. If you plan to combine the Visualization and AutomationObject Server components on the same node, evaluate the resource requirements for the following:

• Active tags-per-window.
• ActiveX controls displayed.
• Alarm displays.
• Trending.

These values will impact AutomationObject Server performance.

Note For details on Alarm System configuration, see Chapter 9, "Implementing Alarms and Events."

The relationship between "standalone" Workstations on a distributed local network is also known as "Peer-to-Peer."


The following figure illustrates the software components and their distribution:

[Figure: Distributed Local Network topology. Labels shown: Supervisory Network; Network Device (Switch or Router); Workstation; Historian (Data and Alarms); Engineering Station (Configuration Database); SuiteVoyager Portal; I/O Server; PLC Network.]

Client operating systems such as Windows XP Professional can manage up to 10 simultaneous active connections with other nodes. If the system is larger than 10 nodes, Windows Server 2003 must be used for all nodes. For additional information, see the General Topology Planning Considerations section later in this chapter.

I/O Server Node(s)

Different I/O data sources have different requirements. Two main groups are identified:

• Legacy I/O Server applications (SuiteLink, DDE, and OPC Servers) do not require a Platform on the node on which they run. They can reside on either a standalone or Workstation node. However, the DIObjects used to communicate with those data sources, such as the DDESuiteLinkClient object, OPCClient object, and InTouchProxy objects, must be deployed to an AppEngine on a Platform. Although it is not required that these DIObjects be installed on the same node as the data server(s) they communicate with, it is highly recommended in order to optimize communication throughput.

• For Device Integration objects like ABCIP and ABTCP DINetwork objects, both the DAServer and the corresponding DIObjects must reside on the same computer hosting an AppEngine.

Best Practice

I/O Servers can run on Workstations, provided the requirements for visualization processing, data processing, and I/O read-writes can be easily handled by the computer. Run the I/O Server and the corresponding DIObject on the same node where most or all of the object instances (that obtain data from that DIObject) are deployed. This implementation expedites the data transfer between the two components (the I/O Server and the object instance), since they both reside on the same node. This implementation also minimizes network traffic and increases reliability.
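
The placement rule in this Best Practice (host the I/O Server and its DIObject where most of the consuming object instances are deployed) can be expressed as a simple count. The following Python sketch is illustrative only: the function name `preferred_io_node` and the node names are hypothetical, and it does not account for the CPU or I/O capacity of the candidate node, which must still be evaluated separately.

```python
from collections import Counter


def preferred_io_node(consumer_nodes):
    """Return the node that hosts the most consuming object instances.

    consumer_nodes: iterable of node names, one entry per object instance
    that obtains data from the DIObject being placed.
    """
    counts = Counter(consumer_nodes)
    if not counts:
        raise ValueError("no consuming object instances supplied")
    # most_common(1) yields a single (node, count) pair with the highest count.
    node, _count = counts.most_common(1)[0]
    return node
```

Given instances deployed on `["WS1", "WS2", "WS1", "WS3", "WS1"]`, the sketch selects `WS1`, since three of the five consumers would then read their data locally instead of across the network.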


However, it is good practice to evaluate the overhead necessary to run each component. The following figure illustrates implementing I/O Servers on the same node:

[Figure: I/O Servers implemented on the Workstation node. Labels shown: Supervisory Network; Network Device (Switch or Router); Workstation with I/O Server; Historian (Data and Alarms); Engineering Station (Configuration Database); SuiteVoyager Portal; PLC Network.]

Historian Node

The IndustrialSQL Server Historian software must run on a designated node.

Engineering Station and GR (Configuration Database)

The Engineering Station node hosts the IDE and InTouch WindowMaker to facilitate Industrial Application Server and InTouch Software application maintenance. As the GR node, it hosts the SQL Server database that stores the Galaxy's Configuration Data.

SuiteVoyager Portal

The SuiteVoyager Portal supplies real-time and historical data to web clients.


Client/Server

This topology configuration includes dedicated nodes running AutomationObject Servers, while visualization tasks are performed on separate nodes. The benefits of this topology include usability, flexibility, scalability, system reliability, and ease of system maintenance, since all configuration data resides on dedicated servers.

The client components (represented by the visualization nodes) provide the means to operate the process using applications that provide data updates to process graphics. The clients have a very light data processing load. The AutomationObject Server nodes share the load of data processing, alarm management, communication to external devices, security management, etc.

For details on the implementation of AppEngine and I/O Server redundancy in a client/server configuration, see the Widely-Distributed Network section later in this chapter.

The following figure illustrates a client/server topology:

[Figure: Client/Server topology. Labels shown: three Visualization Nodes; Supervisory Network; Network Device (Switch or Router); AutomationObject Server; Historian (Data and Alarms); Engineering Station (Configuration Database); SuiteVoyager Portal; I/O Server; PLC Network.]

This topology is scalable to include a greater number of servers. Including more servers distributes data processing loads and enables a higher load of I/O reads/writes. Client nodes can be added when additional operator stations are needed.


General Topology Planning Considerations

When deciding on the topology to implement with Industrial Application Server, it is important to consider different topology variations. For example, it is critical to evaluate variations of the Client/Server topology, keeping in mind process requirements such as the number of I/O points, the update rate of variables at the server and client level, etc. The peer-to-peer nature of the communication between the Platforms requires adequate support for network connections.

Windows XP Operating Station Notes

Client operating systems such as Windows XP Professional can manage up to 10 simultaneous active connections with other nodes. In a pure client/server architecture, it is possible to have more than 10 client nodes running Windows XP Professional. The following requirements must be met:

• Client nodes are only running a Platform and InTouch Software.
• The IDE is not installed on the client nodes.
• The SMC (System Management Console) is installed with a Bootstrap and InTouch Software. However, the Platform Manager snap-in to the SMC should not be launched, as it connects to all Platforms in the Galaxy.
• Object Viewer is not run either from the SMC (Platform Manager) or from the executable file installed in the application directory.
• The client nodes are not running other applications, ActiveX objects, or functions that request data from remote sources (for example, ActiveFactory). This could cause more open connections on the client node. Also, consider any network shares on the client nodes as possible open connections.
• None of the client Platforms are configured as InTouch Alarm Providers. To report alarms from client Platforms, place all Platforms in an Area hosted by any of the servers.
• A single client node does not require data from more than 10 server nodes.
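
The requirements above lend themselves to a pre-deployment checklist. The following Python sketch is one possible way to record and check them per client node. The `ClientNode` class, its field names, and the `xp_client_violations` function are hypothetical and are not part of any Wonderware tool. Network shares, which the text says to consider as possible open connections, are not modeled here.

```python
from dataclasses import dataclass

XP_CONNECTION_LIMIT = 10  # simultaneous active connections cited in the text


@dataclass
class ClientNode:
    """Hypothetical description of one Windows XP Professional client node."""
    name: str
    ide_installed: bool = False
    platform_manager_launched: bool = False
    object_viewer_run: bool = False
    remote_data_apps: tuple = ()  # for example ("ActiveFactory",)
    intouch_alarm_provider: bool = False
    server_nodes_required: int = 0


def xp_client_violations(node):
    """Return a list of the listed requirements that this node violates."""
    problems = []
    if node.ide_installed:
        problems.append("IDE is installed")
    if node.platform_manager_launched:
        problems.append("Platform Manager snap-in is launched")
    if node.object_viewer_run:
        problems.append("Object Viewer is run")
    for app in node.remote_data_apps:
        problems.append(f"{app} requests data from remote sources")
    if node.intouch_alarm_provider:
        problems.append("Platform is configured as an InTouch Alarm Provider")
    if node.server_nodes_required > XP_CONNECTION_LIMIT:
        problems.append(
            f"needs data from {node.server_nodes_required} server nodes "
            f"(limit {XP_CONNECTION_LIMIT})"
        )
    return problems
```

An empty list means the node meets the requirements that the sketch models. Any returned entry identifies a condition that could push the node past the connection limit.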

Note This topology was tested and the above requirements validated on a system that included 16 InTouch Software client nodes and five AutomationObject Server nodes executing ApplicationObjects. The Galaxy Repository was installed on a dedicated server. For more information on defining system size, see Chapter 10, "Assessing System Size and Performance."

Finally, consider different options when deploying I/O Servers in a Galaxy, such as whether to run them on AutomationObject Servers or on dedicated computers, and redundancy strategies.


I/O Servers on AutomationObject Server Nodes

Both components (I/O Servers and AutomationObject Servers) can reside on the same node if I/O and data processing loads are not a constraint. In a topology that includes multiple I/O Servers, the load is balanced so that each AutomationObject Server executes a set of I/O Servers, DIObjects, and ApplicationObjects. The goal of this topology is to optimize network performance by reducing the network traffic between Galaxy components. Associated DIObjects and ApplicationObjects should run on the same Platform in order to minimize network traffic. The following figure illustrates this concept:

Visualization Node

Visualization Node

Visualization Node

Supervisory Network