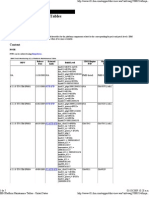

DATA CENTRE NETWORKED APPLICATIONS BLUEPRINT

A KEY FOUNDATION OF CISCO SERVICE-ORIENTED NETWORK ARCHITECTURE

SECONDARY INTERNET EDGE AND EXTRANET

Use a collapsed Internet Edge and extranet design for a highly centralized and integrated edge network. Edge services are provided by embedding intelligence from service modules such as firewall, content switching and SSL (ACE), and VPN modules, and from appliances such as the Guard XT and the Anomaly Detector for DDoS protection. Additional edge functionality includes site selection and content caching, as well as event correlation engines and traffic monitoring provided by the integrated service devices. Consider the use of dual-stack IPv4-IPv6 services in Layer 3 devices and the need to support IPv6 firewall policies and IPv6 VPN filtering capabilities.

INTERNET EDGE

Perform Distributed Denial of Service (DDoS) attack mitigation at the Enterprise Edge. Place the Guard and Anomaly Detector to detect and mitigate high-volume attack traffic by diverting it through anti-spoofing and attack-specific dynamic filter countermeasures. Use the Adaptive Security Appliance to concurrently perform firewall, VPN, and intrusion protection functions at the edge of the enterprise network. Use dual-stack IPv6-IPv4 on the edge routers and consider IPv6 firewall and filtering capabilities.

Place the Global Site Selector in the DMZ to prevent DNS traffic from penetrating the edge security boundaries. Consider designing the Internet-facing server farm with the same best practices used in intranet server farms, with specific scalability and security requirements driven by the size of the target user population.
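
The anti-spoofing countermeasure described for the Internet edge can be illustrated with a generic ingress check. Packets arriving from the Internet should not claim a source address from private, loopback, link-local or multicast space, or from the enterprise's own prefixes. This is a minimal sketch under stated assumptions, not how the Guard or the ASA implement anti-spoofing. The bogon list is a common subset rather than an exhaustive one, and the "internal" prefixes are hypothetical placeholders taken from documentation ranges. The check covers both IPv4 and IPv6 to reflect the dual-stack edge.

```python
import ipaddress

# Source ranges that should never appear on packets arriving from the Internet.
# A commonly filtered subset, not an exhaustive bogon list.
BOGON_PREFIXES = [
    ipaddress.ip_network(p)
    for p in (
        "0.0.0.0/8", "10.0.0.0/8", "127.0.0.0/8", "169.254.0.0/16",
        "172.16.0.0/12", "192.168.0.0/16", "224.0.0.0/4",
        "::1/128", "fc00::/7", "fe80::/10", "ff00::/8",
    )
]

# Hypothetical enterprise prefixes (documentation ranges used as placeholders).
INTERNAL_PREFIXES = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8:100::/48"),
]


def is_spoofed(source: str) -> bool:
    """Return True if an Internet-facing interface should drop this source address."""
    addr = ipaddress.ip_address(source)
    for net in BOGON_PREFIXES + INTERNAL_PREFIXES:
        # Only compare addresses and networks of the same IP version.
        if addr.version == net.version and addr in net:
            return True
    return False


if __name__ == "__main__":
    for src in ("10.1.2.3", "8.8.8.8", "198.51.100.20", "fe80::1", "2001:4860:4860::8888"):
        print(src, "drop" if is_spoofed(src) else "allow")
```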

EXTRANET

Use a dedicated extranet as a highly scalable and secure termination point for IPsec and SSL VPNs to support business partner connectivity. Apply the intranet server farm design best practices to the specific partner-facing application environments, while taking their specific security and scalability requirements into account. Consider the use of the Application Control Engine (ACE) to provide high-performance, highly scalable load balancing, SSL and application security capabilities per partner profile by using virtual instances of these functions. Use the AON application gateway for XML filtering, such as schema and digital signature validation, and to provide transaction integrity and security for legacy application message formats.

LARGE BRANCH OFFICE

Use dual Integrated Services Routers for a large branch office, each connected to a different WAN link for higher redundancy. Use dual-stack IPv6-IPv4 services on Layer 3 devices. Use the integrated IOS firewall and intrusion prevention for edge security, and the integrated Call Manager for remote voice capabilities. Consider IPv6 firewall policies, filtering and DHCP prefix delegation when IPv6 traffic is expected to or from branch offices. Use integrated Wide Area Engines for file caching, local video broadcast, and static content serving.

Large branches have LAN/SAN designs similar to those of small data centers (for server and storage connectivity) and small campuses (for client connectivity). Use Layer 3 switches to house branch-wide LAN services and to provide connectivity to all required access layer switches. In the LAN design, consider VLANs based on branch functions such as server farm, point of sale, voice, video, data, wireless and management. Consider the storage network design and the available storage connectivity options: FC, iSCSI and NAS. Plan the data replication process from the branch to headquarters based on latency and transaction rate requirements. Consider QoS classification to ensure that each type of traffic meets the loss and latency requirements of its applications.
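
The following sketch makes the branch QoS classification advice concrete by mapping traffic types to DSCP markings. The specific values are an assumption based on common Cisco QoS baseline conventions, not values given on the poster:

- EF for voice
- AF41 for video
- CS3 for call signaling
- AF21 for transactional data
- best effort for everything else

The traffic class names are hypothetical.

```python
from enum import IntEnum


class Dscp(IntEnum):
    """DSCP code points used in this sketch (decimal values)."""
    DEFAULT = 0   # best effort
    AF21 = 18     # transactional data
    CS3 = 24      # call signaling
    AF41 = 34     # interactive video
    EF = 46       # voice bearer


# Hypothetical branch traffic classes mapped to markings (assumed convention).
CLASS_TO_DSCP = {
    "voice": Dscp.EF,
    "video": Dscp.AF41,
    "signaling": Dscp.CS3,
    "transactional": Dscp.AF21,
}


def classify(traffic_type: str) -> Dscp:
    """Return the DSCP marking for a traffic type; unknown types get best effort."""
    return CLASS_TO_DSCP.get(traffic_type, Dscp.DEFAULT)


def tos_byte(dscp: Dscp) -> int:
    """DSCP occupies the upper six bits of the IPv4 ToS / IPv6 Traffic Class byte."""
    return int(dscp) << 2
```

For example, `classify("voice")` yields `Dscp.EF`, which is written into the packet header as the byte value 184.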

CAMPUS NETWORK

Integrate wireless controllers at the distribution layer and wireless access points at the access layer. Use EtherChannel between the distribution switches to provide redundancy and scalability. Dual-home access switches to the distribution layer to increase redundancy by providing alternate paths. Consider the use of dual-stack IPv4-IPv6 services at the access, distribution and core Layer 3 and/or Layer 2 devices.

When Layer 2 is used in the Campus access layer, select a primary distribution switch to be the primary default gateway and STP root. Set the redundant distribution switch as the backup default gateway and secondary root. Use HSRP and PVRST+ as the default gateway and STP protocols.

Use 10GbE throughout the infrastructure (between distribution switches, and between access and distribution) when high throughput is required. Use Layer 3 access switches when VLANs do not need to be shared across more than one access switch at a time and very fast convergence is required.

CAMPUS CORE

The Campus core provides connectivity between the major areas of an Enterprise network, including the data center, extranet, Internet edge, Campus, Wide Area Network (WAN), and Metropolitan Area Network (MAN). Use a fully meshed Campus core to provide high-speed, redundant Layer 3 connectivity between the different network areas. Use dual-stack IPv6-IPv4 in all Layer 3 devices and desktop services.
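
The Layer 2 access guidance depends on keeping the HSRP active gateway and the STP root on the same distribution switch. If they differ, traffic takes an extra hop across the inter-switch link. The following is a minimal offline sketch of that consistency check, using hypothetical per-switch settings. It applies the standard election rules:

- HSRP: the highest priority wins, and ties are broken by the highest interface IP address.
- STP: the lowest bridge priority wins, and ties are broken by the lowest MAC address.

It models the decision only; it does not talk to any device.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class DistSwitch:
    name: str
    hsrp_priority: int   # HSRP priority for the VLAN (default 100)
    hsrp_ip: str         # interface IP address used as the HSRP tie-breaker
    stp_priority: int    # bridge priority for the VLAN (multiple of 4096)
    mac: str             # base MAC address used as the STP tie-breaker


def hsrp_active(switches):
    # Highest priority wins; on a tie, the highest IP address wins.
    return max(switches, key=lambda s: (s.hsrp_priority, ipaddress.ip_address(s.hsrp_ip)))


def stp_root(switches):
    # Lowest bridge ID wins: compare priority first, then MAC address.
    return min(switches, key=lambda s: (s.stp_priority, int(s.mac.replace(":", ""), 16)))


def is_aligned(switches) -> bool:
    """True when the same switch is both the HSRP active gateway and the STP root."""
    return hsrp_active(switches).name == stp_root(switches).name


if __name__ == "__main__":
    # Hypothetical values: dist1 is intended to be primary for both protocols.
    dist1 = DistSwitch("dist1", 110, "10.10.10.2", 24576, "00:1a:2b:00:00:01")
    dist2 = DistSwitch("dist2", 100, "10.10.10.3", 28672, "00:1a:2b:00:00:02")
    print(is_aligned([dist1, dist2]))
```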

REMOTE OFFICES

HOME OFFICE

Consider the home office as an extension of the enterprise network. Basic services include access to applications and data, voice and video. Use VPN to secure teleworker environments so that they rely on the corporate security policies. Also consider wireless access as a secure and reliable extension of the enterprise network. Enable QoS to police and enforce service levels for voice, data and video traffic. Consider a dual-stack IPv6-IPv4 router to support IPv6 remote devices. Security policies for IPv6 traffic should include IPv6 filtering and IPv6-capable firewalls.

SMALL OFFICE

Consider an integrated services design for a full-service branch environment. Services include voice, video, security and wireless. Voice services include IP phones, local call processing, local voice mail, and VoIP gateways to the PSTN. Security services include integrated firewall, intrusion protection, IPsec and admission control. Connect small office networks to headquarters through VPN, and ensure that QoS classification and enforcement provide adequate service levels to the different traffic types. Configure multicast for applications that require concurrent recipients of the same traffic. Consider a dual-stack IPv4-IPv6 router to support IPv6 traffic, and ensure IPv6 firewall rules and filtering capabilities are enabled on the router.

WIDE AREA NETWORK

Use the WAN as the primary path for user traffic destined for the intranet server farm. Use DNS and Route Health Injection (RHI) to control how granularly applications are independently advertised, and to reflect the state of distributed application environments. Ensure the proper QoS classification is used for voice, data and video traffic. Use dual-stack IPv6-IPv4 in all Layer 3 devices.
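
Route Health Injection lets a load balancer advertise a host route for a virtual IP (VIP) only while the application behind it is healthy. WAN routing therefore follows the state of each application environment. The following sketch models that decision, under these hypothetical assumptions:

- The VIP addresses and server names are invented for illustration.
- A VIP is advertised when at least one of its servers is healthy.

This is not the exact behavior of a specific ACE configuration.

```python
import ipaddress
from typing import Dict, List


def routes_to_advertise(vips: Dict[str, List[str]], health: Dict[str, bool]) -> List[str]:
    """Return host routes (/32 or /128) for VIPs that have at least one healthy server.

    vips:   VIP address -> list of real server names behind it (hypothetical)
    health: real server name -> True if its health probe currently passes
    """
    routes = []
    for vip, servers in vips.items():
        if any(health.get(server, False) for server in servers):
            # ip_network on a bare address yields a host route (/32 or /128).
            routes.append(str(ipaddress.ip_network(vip)))
    return sorted(routes)
```

When every server behind a VIP fails its probe, the route is withdrawn. The WAN then converges toward whichever site still advertises that application.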

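SECONDARY DATA CENTER

Use the secondary data center as a backup location that houses critical standby transactional applications (near-zero RPO and RTO) and redundant active non-transactional applications (RPO and RTO in the 12-24 hour range). The secondary data center design is a smaller replica of the primary and houses backup critical application environments. These support business functions that must be resumed to achieve regular operating conditions.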
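
The two recovery profiles can be expressed as a simple placement rule. In the following sketch, the 15-minute ceiling used for "near-zero" RPO and RTO is a hypothetical threshold chosen for illustration. The 12-24 hour window comes from the recommendation above. Applications that fit neither profile are flagged for review rather than assigned.

```python
from datetime import timedelta

NEAR_ZERO = timedelta(minutes=15)  # hypothetical ceiling for "near-zero" RPO/RTO
NON_TRANSACTIONAL_WINDOW = (timedelta(hours=12), timedelta(hours=24))


def secondary_dc_role(rpo: timedelta, rto: timedelta) -> str:
    """Classify how an application should be housed in the secondary data center."""
    if rpo <= NEAR_ZERO and rto <= NEAR_ZERO:
        return "standby transactional"
    low, high = NON_TRANSACTIONAL_WINDOW
    if low <= rpo <= high and low <= rto <= high:
        return "redundant active non-transactional"
    return "review"
```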

LARGE-SCALE PRIMARY DATA CENTER

COLLAPSED MULTITIER DESIGN

DATA CENTER CORE

Use a data center core layer to support multiple aggregation modules. Use multiple aggregation modules when the number of servers per module exceeds the capacity of the module. Connect the data center core switches to the campus core switches to reach the rest of the Enterprise network. Consider the use of 10GbE links between core and aggregation switches. Use dual-stack IPv6-IPv4 in all Layer 3 devices in the data center, and identify the server farm requirements to ensure IPv6 traffic conforms to firewall and filtering policies.

Place all network-based service devices (modules or appliances) at the aggregation layer to centralise configuration and management tasks and to leverage service intelligence applied to the entire server farm. Use ACE as a content switch to scale application services, including SSL off-loading on server farms. Use virtual firewalls to isolate application environments. Use AON to optimise inter-application security and communications services, and to provide visibility into real-time transactions. Use MARS to detect security anomalies by correlating data from different traffic sources.

Group servers providing like functions in the same VLANs to apply a consistent and manageable set of security, SSL, load balancing, and monitoring policies. Dual-home critical servers to different access switches, and stagger primary physical connections between the available access switches.

Use PortChannels and trunks to aggregate multiple physical inter-switch links (ISLs) into a logical link. Use VSANs to segregate multiple distinct SANs in a physical fabric, consolidating isolated SANs and SAN fabrics. Use core-edge topologies to connect multiple workgroup fabric switches when tolerable oversubscription is a design objective.

MAN INTERCONNECT

METRO ETHERNET

Use a high-speed (10GbE) metro optical network for packet-based and transparent LAN services between distributed Campus and Data Centre environments.

BLADE SERVER COMPLEX

Consider the blade server direct attachment and network fabric options: pass-through modules or integrated switches, and Fibre Channel, Ethernet and Infiniband. In an integrated Ethernet switch fabric, set up half the blades active on switch1 and half active on switch2. Dual-home each Ethernet switch to Layer 3 switches through GbE channels. Use RPVST+ for fast STP convergence. Use link-state tracking to detect uplink failure and allow the blade's standby NIC to take over.
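
The blade guidance combines two ideas. First, split the blades' active NICs across the two integrated switches. Second, use link-state tracking: when a switch loses its uplinks, its server-facing ports are brought down, so the blade's NIC teaming fails over to the standby NIC. The following sketch models that behavior offline. The even/odd slot assignment and the switch names are hypothetical.

```python
from typing import Dict, Optional

SWITCHES = ("switch1", "switch2")


def preferred_switch(blade: int) -> str:
    # Hypothetical split: even slots prefer switch1, odd slots prefer switch2.
    return SWITCHES[blade % 2]


def active_switch(blade: int, uplink_up: Dict[str, bool]) -> Optional[str]:
    """Return the switch carrying the blade's traffic, or None if both are isolated.

    With link-state tracking, a switch whose uplinks are down also brings down its
    downstream (blade-facing) ports, so the blade's NIC teaming sees link loss and
    moves traffic to the standby NIC on the other switch.
    """
    primary = preferred_switch(blade)
    standby = SWITCHES[1 - SWITCHES.index(primary)]
    if uplink_up[primary]:
        return primary
    if uplink_up[standby]:
        return standby
    return None


def load(blades: int, uplink_up: Dict[str, bool]) -> Dict[str, int]:
    """Count how many blades are active on each switch."""
    counts = {name: 0 for name in SWITCHES}
    for blade in range(blades):
        sw = active_switch(blade, uplink_up)
        if sw is not None:
            counts[sw] += 1
    return counts
```

With both uplinks healthy, 16 blades split 8/8. If switch1 loses its uplinks, all 16 blades move to switch2 instead of sending traffic into an isolated switch.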

Attach integrated Infiniband switches to Server Fabric Switches acting as gateways to the Ethernet network. Connect the gateway switches to the aggregation switches to reach the IP network.

When using pass-through modules, dual-home servers to access/edge layer switches. Pass-through modules allow Fibre Channel environments to avoid interoperability issues while allowing access to advanced SAN fabric features.

Use storage virtualisation to further increase effective storage utilization and to centralise management of storage arrays. The arrays form a single pool of virtual storage, which is presented to applications as virtual disks.

Use a SONET/SDH transport network for FCIP, in addition to voice, video, and other IP traffic between distributed locations in metro or long-haul environments. Consider the use of RPR/802.17 technology to create a highly available MAN core for distributed locations.
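
Storage virtualisation presents capacity from several physical arrays as one pool and gives applications virtual disks whose physical placement is hidden. The following toy model illustrates the idea. It assumes a hypothetical greedy policy that carves each virtual disk from the arrays with the most free space. Real virtualisation products use their own placement and mapping logic.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class StoragePool:
    free_gb: Dict[str, int]  # physical array name -> free capacity (hypothetical)
    disks: Dict[str, List[Tuple[str, int]]] = field(default_factory=dict)

    def total_free(self) -> int:
        return sum(self.free_gb.values())

    def create_virtual_disk(self, name: str, size_gb: int) -> None:
        """Allocate a virtual disk; its extents may span several physical arrays."""
        if size_gb > self.total_free():
            raise ValueError("pool does not have enough free capacity")
        extents = []
        remaining = size_gb
        # Greedy placement: take space from the arrays with the most free capacity first.
        for array in sorted(self.free_gb, key=self.free_gb.get, reverse=True):
            take = min(self.free_gb[array], remaining)
            if take:
                self.free_gb[array] -= take
                extents.append((array, take))
                remaining -= take
            if remaining == 0:
                break
        self.disks[name] = extents  # mapping kept inside the pool, hidden from the application

    def size_of(self, name: str) -> int:
        """What the application sees: only the virtual disk's total size."""
        return sum(gb for _, gb in self.disks[name])
```

A 120 GB virtual disk drawn from a 100 GB array and a 50 GB array appears to the application as a single 120 GB disk. The pool internally records that it spans both arrays.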

EXPANDED MULTI-TIER DESIGN

Consolidate application and security services (service modules or appliances) at the aggregation layer switches. Application, security and virtualisation services provided by service modules or appliances are best offered from the aggregation layer. Services are then made available to all servers, provisioning is centralized, and the network topology is kept predictable and deterministic. Ensure the access layer design (whether L2 or L3) provides predictable and deterministic behavior and allows the server farm to scale up to the expected number of nodes. Use VLANs in conjunction with instances of application and security services applied to each application environment independently.

Select a primary aggregation switch to be the primary default gateway and STP root. Set the redundant aggregation switch as the backup default gateway and secondary root. Use HSRP and RPVST+ as the default gateway and STP protocols, and ensure the active service devices are in the STP root switch.

Deploy access layer switches in pairs to enable server dual-homing and NIC teaming. Use trunks and channels between access and aggregation switches. Carry the VLANs that are needed throughout the server farm on every trunk to increase flexibility, and trim unneeded VLANs from every trunk.
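
The trunk guidance (carry the VLANs needed throughout the server farm and trim the rest) amounts to computing an allowed-VLAN list per trunk. The following sketch assumes hypothetical VLAN numbers. It renders the list in the compact range form used by the IOS `switchport trunk allowed vlan` interface command.

```python
from typing import Iterable, List


def allowed_vlans(farm_wide: Iterable[int], local: Iterable[int]) -> List[int]:
    """VLANs to carry on one access-aggregation trunk; anything else is trimmed."""
    return sorted(set(farm_wide) | set(local))


def to_range_string(vlans: List[int]) -> str:
    """Compress a sorted VLAN list, e.g. [10, 11, 12, 20] -> '10-12,20'."""
    parts = []
    start = prev = None
    for vlan in vlans:
        if start is None:
            start = prev = vlan
        elif vlan == prev + 1:
            prev = vlan
        else:
            parts.append(f"{start}-{prev}" if start != prev else str(start))
            start = prev = vlan
    if start is not None:
        parts.append(f"{start}-{prev}" if start != prev else str(start))
    return ",".join(parts)


def trunk_command(farm_wide: Iterable[int], local: Iterable[int]) -> str:
    """Build the interface command that restricts a trunk to the required VLANs."""
    return "switchport trunk allowed vlan " + to_range_string(allowed_vlans(farm_wide, local))
```

For example, farm-wide VLANs 10-12 plus local VLANs 20, 21 and 30 produce `switchport trunk allowed vlan 10-12,20-21,30`.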

Connect access switches used in application and back-end segments to each other across the application tier function boundary through EtherChannel® links. Use VLANs to separate groups of servers by function or application service type. Use firewalls to control the traffic path between tiers of servers and to isolate distinct application environments. Use ACE as a content switch to monitor and control server and application health, and to distribute traffic load between clients and the server farm, and between server/application tiers.

Use VSANs to create separate SANs over a shared physical infrastructure, grouping isolated fabrics into a shared infrastructure while keeping their dedicated fabric services, security, and stability integral per group. Use a dual-fabric (fabrics A and B) topology to maintain a highly available, resilient SAN environment. Dual-home hosts to each of the SAN fabrics using Fibre Channel Host Bus Adapters (HBAs). A common management VSAN is recommended to allow the fabric manager to manage and monitor the entire network environment. Use port channels to increase path redundancy and provide fast recovery from link failure. Use FSPF for equal-cost load balancing through redundant paths. Use storage virtualisation to pool distinct physical storage arrays as one, hiding physical details (arrays, spindles, LUNs).

Use a DWDM/SONET/SDH/Ethernet transport network to support high-speed, low-latency uses, such as synchronous data replication between distributed disk subsystems. The common transport network supports multiple protocols, such as FC, GbE, and ESCON, concurrently.
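
ACE's role in the tiered design, monitoring server and application health and distributing load, can be sketched as a round-robin selector that skips servers whose health probes fail. This is a simplified model with hypothetical server names and plain round-robin. ACE supports several load-balancing predictors and probe types that are not modeled here.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class HealthAwareRoundRobin:
    """Round-robin over real servers, skipping any that are marked unhealthy."""

    def __init__(self, servers: List[str]):
        self.servers = servers
        self.healthy: Dict[str, bool] = {s: True for s in servers}
        self._ring: Iterator[str] = cycle(servers)

    def set_health(self, server: str, ok: bool) -> None:
        # In a real deployment this would be driven by periodic probe results.
        self.healthy[server] = ok

    def next_server(self) -> str:
        # Try each server at most once per call before giving up.
        for _ in range(len(self.servers)):
            candidate = next(self._ring)
            if self.healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy servers in the farm")
```

Marking a server unhealthy removes it from rotation immediately, and the remaining servers share its load.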

HIGH DENSITY ETHERNET CLUSTER

High density Ethernet clusters consist of multiple servers that operate concurrently to solve computational tasks. Some of these tasks require a certain degree of processing parallelism, while others require raw CPU capacity. Common applications of large Ethernet clusters include large search engine sites and large web server farms.

The diagram shows a tiered design using “top of rack” 1RU access switches for a total of 1536 servers. The core layer is required when the cluster needs to connect to an existing IP network environment. The modular access layer switches provide access functions to groups of racks at a time. The design is aimed at reducing the hop count between any two nodes in the cluster.

4992 Node Ethernet Cluster (4992 GbE attached servers)
• Modular chassis per rack group
• 8 aggregation - 16 access switches
• 16 10GbE downlinks per aggregation
• 8 10GbE uplinks per access
• Layer 3 in aggregation and access
• 8-way equal cost multipath (ECMP)
• 312 GbE ports per access switch
• 3.9:1 oversubscription
• 80 Gigabit per access switch

Topology Details (1536 GbE attached servers):
- 8 core switches connected to each aggregation module through a 10GbE link per switch
- 4 aggregation modules, each with 2 Layer 3 switches that provide 10GbE connectivity to the access layer switches
- 8 access switches per aggregation module, each switch connecting to 2 aggregation switches through 10GbE links
- Each access layer switch supports 48 10/100/1000 ports and 2 10GbE uplinks
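
The oversubscription figures in these topology lists follow from dividing server-facing bandwidth by uplink bandwidth on each access switch. The following sketch reproduces the 4992-node numbers: 312 GbE ports over 8 x 10GbE uplinks gives 80 Gigabit of uplink capacity and 3.9:1. It then applies the same formula to the 1536-server design, where 48 GbE ports over 2 x 10GbE uplinks give 2.4:1. That second ratio is computed here; it is not stated in the topology details.

```python
from dataclasses import dataclass


@dataclass
class AccessSwitch:
    server_ports: int      # GbE server-facing ports
    port_gbps: float       # speed of each server port
    uplinks: int           # number of uplinks toward aggregation
    uplink_gbps: float     # speed of each uplink


def uplink_capacity_gbps(sw: AccessSwitch) -> float:
    return sw.uplinks * sw.uplink_gbps


def oversubscription(sw: AccessSwitch) -> float:
    """Server-facing bandwidth divided by uplink bandwidth (x:1)."""
    return (sw.server_ports * sw.port_gbps) / uplink_capacity_gbps(sw)


def cluster_size(access_switches: int, sw: AccessSwitch) -> int:
    return access_switches * sw.server_ports


modular = AccessSwitch(server_ports=312, port_gbps=1, uplinks=8, uplink_gbps=10)
top_of_rack = AccessSwitch(server_ports=48, port_gbps=1, uplinks=2, uplink_gbps=10)

if __name__ == "__main__":
    # 4992-node design: 16 modular access switches.
    print(cluster_size(16, modular), uplink_capacity_gbps(modular), round(oversubscription(modular), 1))
    # 1536-server design: 4 aggregation modules x 8 access switches each.
    print(cluster_size(4 * 8, top_of_rack), round(oversubscription(top_of_rack), 1))
```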

HIGH PERFORMANCE INFINIBAND CLUSTER

Use an Infiniband fabric for applications that execute a high rate of computational tasks and require low latency and high throughput. Select the proper oversubscription rate between the edge and aggregation layers for intracluster purposes, or between the aggregation layer, the core and the Ethernet fabric. Use a non-blocking design for server clusters dedicated to computational tasks. In a non-blocking design, for every HCA connected to the edge/access layer there is an uplink to an aggregation layer switch. In a 2:1 blocking topology, for every two HCAs connected to edge switches there is one uplink to an aggregation layer switch. Core switches provide connectivity to the Ethernet fabric. Use VFrame to manage the I/O virtualisation capabilities of server fabric switches.

288 Node Infiniband Cluster

Topology Details
Edge
• 24 switches - 24 ports each
• 12 servers per switch
• 12 uplinks to aggregation layer
Aggregation
• 12 switches - 24 ports each
• 1 or more uplinks to each core switch
Core
• Number of core switches based on connectivity needs to IP network

Topology details for 1024 servers include:
Edge:
• 96 switches - 24 ports each - 12 servers per switch
• 12 uplinks to aggregation layer
Aggregation:
• 6 switches - 96 ports each
• 12 downlinks - one per edge switch
Core:
• Number of switches based on fabric connectivity needs
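
The non-blocking rule (one aggregation uplink per HCA on the edge switch) and the 2:1 variant can be checked with a small calculation at the edge layer. The following sketch applies it to the 288-node topology, where each 24-port edge switch has 12 servers and 12 uplinks, giving 1:1. The 2:1 example switch (16 HCAs, 8 uplinks) is hypothetical.

```python
from fractions import Fraction


def blocking_ratio(hcas: int, uplinks: int) -> Fraction:
    """HCAs per uplink on an edge switch: 1 means non-blocking, 2 means 2:1 blocking."""
    return Fraction(hcas, uplinks)


def is_non_blocking(hcas: int, uplinks: int) -> bool:
    return blocking_ratio(hcas, uplinks) <= 1


def total_servers(edge_switches: int, servers_per_switch: int) -> int:
    return edge_switches * servers_per_switch


if __name__ == "__main__":
    # 288-node cluster: 24 edge switches, 12 servers and 12 uplinks each.
    print(total_servers(24, 12), is_non_blocking(12, 12))
    print(blocking_ratio(16, 8))  # hypothetical 2:1 edge switch
```

Using `Fraction` keeps the ratio exact, so a 16:8 edge switch reports exactly 2 rather than a rounded float.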

HIGH AVAILABILITY SERVER CLUSTER

High availability clusters consist of multiple servers supporting mission-critical applications in business continuance or disaster recovery scenarios. The applications include databases, filers, mail servers or file servers. The nodes of a single application cluster use a clustering mechanism that relies on unicast packets if there are two nodes, or multicast if there are more than two nodes. The nodes back each other up and use heartbeats to determine node status.

The nodes in HA clusters are linked to multiple networks using the existing network infrastructure. Use the private network for heartbeats and the public network for inter-cluster communication and client access. The network infrastructure supporting the HA cluster is shared by other server farms. Additional VLANs and VSANs are used to connect the additional NICs and HBAs the cluster requires to operate.

The application data must be available to all nodes in the cluster. This requires the disk to be shared, so it cannot be local to each node. The shared disk or disk array is accessible through IP (iSCSI or NAS), Fibre Channel (SAN) or shared SCSI. Nodes in distributed data centers may need to be in the same subnet, requiring Layer 2 adjacency.
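
The heartbeat behavior described above can be sketched as follows: unicast between two nodes, multicast for larger clusters, and node status inferred from missed heartbeats. The heartbeat interval and the miss threshold are hypothetical parameters. Actual cluster software defines its own timers and failover logic.

```python
from dataclasses import dataclass, field
from typing import Dict, List

HEARTBEAT_INTERVAL_S = 1.0   # hypothetical heartbeat period
MISSED_BEFORE_DOWN = 3       # hypothetical number of missed heartbeats tolerated


def heartbeat_transport(node_count: int) -> str:
    """Two-node clusters use unicast; larger clusters use multicast."""
    return "unicast" if node_count <= 2 else "multicast"


@dataclass
class HeartbeatMonitor:
    nodes: List[str]
    last_seen: Dict[str, float] = field(default_factory=dict)

    def record(self, node: str, timestamp: float) -> None:
        # Called when a heartbeat arrives on the private (heartbeat) network.
        self.last_seen[node] = timestamp

    def status(self, now: float) -> Dict[str, str]:
        """Mark a node down once it has been silent longer than the allowed misses."""
        deadline = HEARTBEAT_INTERVAL_S * MISSED_BEFORE_DOWN
        result = {}
        for node in self.nodes:
            seen = self.last_seen.get(node)
            result[node] = "up" if seen is not None and now - seen <= deadline else "down"
        return result
```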

To achieve additional redundancy on an HA server cluster, distribute a portion of the servers in the HA cluster to another data center. This distribution of HA clusters across distributed data centers, referred to as geo-clusters or stretched clusters, often requires Layer 2 adjacency between distributed nodes. Adjacency means the same VLAN (IP subnet) and VSAN have to be extended over the shared transport infrastructure between the distributed data centers, so that the HA cluster spans multiple geographically distant data center hosting facilities.

The transport technologies that can be used to connect the LAN and the SAN of the data centers include Dark Fiber, DWDM, CWDM, SONET, Metro Ethernet, EoMPLS and L2TPv3, as shown in the diagram. The transport network supports multiple communication streams between nodes in the stretched clusters. Use multiple VLANs to separate intracluster from client traffic. Use multiple SAN fabrics to provide path redundancy for the extended SAN. Use multipathing on the hosts and IVR between the SAN fabric Directors to take advantage of the redundant fabrics. Use write acceleration to improve the performance of the data replication process. Consider the use of encryption to secure data transfers and compression to increase data transfer rates.

NETWORK OPERATIONS CENTER (NOC)

Use a NOC VLAN to house critical management tools and to isolate management traffic from client/server traffic. Use NTP, SSH-2, SNMPv3, CDP and RADIUS/TACACS+ as part of the management infrastructure. Use CiscoWorks LMS to manage the network infrastructure and monitor IPv4-IPv6 traffic, and the Cisco Security Manager to control, configure and deploy firewall, VPN and IPS security policies. Use the Performance Visibility Manager to measure end-to-end application performance. Use the Monitoring, Analysis, and Response System to correlate traffic for anomaly detection purposes. Use the Network Planning Solution to build network topology models for failure scenario analysis and other what-if scenarios, based on device configuration, routing tables, NAM and NetFlow data. Use the MDS Fabric Manager to manage the storage network. Use NetFlow and the Network Analysis Module for capacity planning and traffic profiling.
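
For NetFlow's capacity planning and traffic profiling role, a typical first step is aggregating exported flow records to find the top talkers and the application mix. The following sketch works on hypothetical, already-decoded flow records with a simplified subset of NetFlow fields. Collecting and decoding the export packets is not shown.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class FlowRecord:
    src: str
    dst: str
    protocol: int   # IP protocol number, e.g. 6 for TCP
    dst_port: int
    octets: int     # bytes observed for this flow


def top_talkers(flows: Iterable[FlowRecord], n: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """Sum bytes per (source, destination) pair and return the n largest."""
    totals = Counter()
    for flow in flows:
        totals[(flow.src, flow.dst)] += flow.octets
    return totals.most_common(n)


def bytes_by_port(flows: Iterable[FlowRecord]) -> Counter:
    """Traffic profile by destination port, useful for spotting the application mix."""
    profile = Counter()
    for flow in flows:
        profile[flow.dst_port] += flow.octets
    return profile
```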

Diagram legend: Service Modules, Service Appliances, Service Devices Placement Area, Cisco Application Control Engine, Cisco 3000 Series Multifabric Server Switch, End-user Workstation, Wireless Connection, 10 GbE, GbE

Designed By: Mauricio Arregoces (marregoc@cisco.com)
Data Center Fundamentals: www.ciscopress.com/datacenterfundamentals
Design Best Practices: www.cisco.com/go/datacenter
Questions: ask-datacenter-grp@cisco.com
Part #: 910300406R01
