Free Communication

Uploaded by Anbarasan Ramamoorthy

Concept of Error Free Communication

In a communication system, the probability of error $P_e$ for the detected signal at the receiver decreases as the transmitted signal power increases. The error probability $P_e$ therefore falls as the bit energy $E_b$ rises, where

$P_e$ = probability of error
$E_b$ = energy of a bit

If $E_b$ increases, the probability of error $P_e$ decreases. Signal power can be expressed as $S = E_b R_b$, so $E_b$ can be increased either by increasing the signal power $S$ or by decreasing the bit transmission rate $R_b$. So, in a communication system, reducing the transmission rate reduces the error rate.

If data is sent with little or no redundancy, noise corrupts it and information may be lost; that is, it becomes difficult to detect the transmitted symbol correctly. For example, optimum codes have no redundancy and compact codes have little redundancy. When such low-redundancy codes are transmitted over a noisy channel, the noise causes some loss of information, and the transmitted symbol cannot always be detected correctly.

As the redundancy of the data increases, the immunity of the signal against noise increases. The simplest way to increase redundancy is to repeat each digit a number of times. The receiver then uses the majority rule to decide the message, so even if one of the repeated digits is in error, the receiver still detects the transmitted symbol correctly. Noise may, however, corrupt two or more digits, in which case the majority decision is wrong. To handle that case the redundancy must be increased, for example by repeating each digit five times.

Figure 4.13 shows all eight possible sequences that the receiver can receive when a single bit is repeated three times and transmitted. The sequences are represented as the vertices of a 3-dimensional cube. Sequences 000 and 111 are taken as the two origin vertices (the two valid code words) among the eight vertices. The majority decision rule decides in favor of the message whose Hamming distance to the received sequence is smallest.
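The majority rule and its residual error probability can be sketched in a few lines of Python. This is a minimal illustration, not part of the original notes: the function names are hypothetical, the channel is assumed to flip each digit independently with probability `p`, and the number of repetitions `n` is assumed to be odd so that ties cannot occur.

```python
from math import comb


def hamming_distance(a: str, b: str) -> int:
    """Number of positions in which two equal-length bit strings differ."""
    if len(a) != len(b):
        raise ValueError("sequences must have equal length")
    return sum(ca != cb for ca, cb in zip(a, b))


def majority_decode(received: str) -> int:
    """Pick the nearer of the all-zeros and all-ones words (n assumed odd)."""
    n = len(received)
    d0 = hamming_distance(received, "0" * n)
    d1 = hamming_distance(received, "1" * n)
    return 0 if d0 < d1 else 1


def repetition_error_probability(n: int, p: float) -> float:
    """Probability that more than half of the n repeated digits are flipped.

    Assumes independent digit errors with probability p and odd n.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )


if __name__ == "__main__":
    for word in ("000", "010", "110", "111"):
        print(word, "->", majority_decode(word))
    for n in (1, 3, 5):
        print("n =", n, "Pe =", repetition_error_probability(n, 0.1))
```

With an assumed digit error probability of 0.1, the decision error drops from 0.1 with no repetition to about 0.028 with three repetitions and about 0.0086 with five, at the cost of sending three or five digits per information bit.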

Sequences 000, 001, 010 and 100 are within 1 unit of Hamming distance from 000 but at least 2 units away from 111, so if any of these is received the decision is 0. Sequences 110, 111, 011 and 101 are within 1 unit of Hamming distance from 111 but at least 2 units away from 000, so if any of these is received the decision is 1.

For five repetitions, the sequences are represented by a hypercube of five dimensions. For $n$ repetitions there are $2^n$ possible sequences, so for 5 repetitions there are $2^5 = 32$ sequences. Among these 32 sequences, 00000 and 11111 are taken as the origin vertices, and they are separated by 5 units of Hamming distance. With 5 repetitions, up to 2 errors can be corrected by the majority rule. The smaller the fraction of vertices used as messages, the smaller the probability of error $P_e$.

The process of reducing the error probability in a communication system by introducing redundant bits is called channel coding. Note that the information transmission rate $R_b$ is reduced when repetitions are added; this is the main drawback. The drawback can be reduced: instead of adding redundancy to each digit individually, redundancy can be added to a block of information digits.

Mutual Information

Mutual information can be defined as the amount of information transferred when the signal $x_i$ is transmitted and $y_i$ is received.
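The notes do not give a formula for mutual information, so the sketch below assumes the common textbook definitions: $I(x; y) = \log_2 \frac{P(x \mid y)}{P(x)}$ for a single symbol pair, and its average over the joint distribution for $I(X;Y)$. The function names and the example channel (a binary symmetric channel with crossover probability 0.1 and equally likely inputs) are illustrative assumptions.

```python
import math


def pointwise_mutual_information(p_x: float, p_x_given_y: float) -> float:
    """I(x; y) = log2(P(x | y) / P(x)), in bits (assumed definition)."""
    return math.log2(p_x_given_y / p_x)


def average_mutual_information(p_x, p_y_given_x) -> float:
    """I(X; Y) in bits.

    p_x[i] is P(x_i); p_y_given_x[i][j] is P(y_j | x_i).
    """
    n_out = len(p_y_given_x[0])
    # Output probabilities: P(y_j) = sum_i P(x_i) * P(y_j | x_i)
    p_y = [
        sum(p_x[i] * p_y_given_x[i][j] for i in range(len(p_x)))
        for j in range(n_out)
    ]
    total = 0.0
    for i, px in enumerate(p_x):
        for j in range(n_out):
            joint = px * p_y_given_x[i][j]
            if joint > 0:  # terms with zero probability contribute nothing
                total += joint * math.log2(p_y_given_x[i][j] / p_y[j])
    return total


if __name__ == "__main__":
    p = 0.1  # assumed crossover probability
    channel = [[1 - p, p], [p, 1 - p]]
    # By symmetry, P(x=0 | y=0) = 1 - p when inputs are equally likely.
    print(pointwise_mutual_information(0.5, 1 - p))
    print(average_mutual_information([0.5, 0.5], channel))
```

For this assumed channel, receiving the symbol that was sent conveys about 0.85 bit, and the average over all input and output pairs is about 0.53 bit per channel use.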

Discrete Memoryless Channel

In a discrete-time channel, if the values that the input and output variables can take are finite, or countably infinite, the channel is called a discrete channel. If the detector output in a given interval depends only on the signal transmitted in that interval and not on any previous transmission, the channel is said to be a discrete memoryless channel. In other words, the channel keeps no memory of previously transmitted values.

In general, a discrete channel is defined by $\mathcal{X}$, the input alphabet, $\mathcal{Y}$, the output alphabet, and $p(\mathbf{y} \mid \mathbf{x})$, the conditional PMF of the output sequence given the input sequence. A schematic representation of a discrete channel is given in figure 4.3. For a memoryless channel,

$$p(\mathbf{y} \mid \mathbf{x}) = \prod_{i} p(y_i \mid x_i) \qquad (4.1)$$

where
$p(y \mid x)$ = probability of receiving symbol $y$, given that symbol $x$ was sent
$x$ = modulator input symbol
$y$ = demodulator output symbol

In general, especially for a channel with intersymbol interference (ISI), the output $y_i$ depends not only on the input at the same time $x_i$ but also on the previous inputs, or even on previous and future inputs (in storage channels). Such a channel has memory.
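For a memoryless channel the conditional probability of an output sequence factors into per-symbol transition probabilities. The sketch below illustrates that factorization for a binary symmetric channel; the function name, the dictionary layout and the crossover probability 0.1 are assumptions chosen for the example.

```python
from math import prod


def sequence_probability(x_seq, y_seq, transition) -> float:
    """p(y | x) for a memoryless channel: product of p(y_i | x_i).

    transition[x][y] holds the per-symbol probability p(y | x).
    """
    if len(x_seq) != len(y_seq):
        raise ValueError("input and output sequences must have equal length")
    return prod(transition[x][y] for x, y in zip(x_seq, y_seq))


# Assumed example channel: binary symmetric with crossover probability 0.1.
p = 0.1
bsc = {0: {0: 1 - p, 1: p}, 1: {0: p, 1: 1 - p}}

if __name__ == "__main__":
    # Send 000, receive 010: two correct digits and one flipped digit.
    print(sequence_probability([0, 0, 0], [0, 1, 0], bsc))
```

A channel with memory could not be handled this way, because each output would depend on more than the input at the same time index.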

Figure 4.3 Discrete memoryless channel

The modulator has only the binary symbols 0 and 1 as inputs when binary coding is used. If binary quantization of the demodulator output is used, the decoder also has only binary inputs. Two types of decision are used at the demodulator:

1). Hard decision
2). Soft decision

1). Hard Decision

A hard decision is made on the demodulator output as to which symbol was actually transmitted. In this situation we have a binary symmetric channel (BSC) with a transition probability diagram as shown in figure 4.4. Assuming the channel noise is modeled as additive white Gaussian noise (AWGN), the binary symmetric channel is completely described by the transition probability $p$. The majority of coded digital communication systems employ binary coding with hard-decision decoding because of the simplicity of implementation offered by this approach. Hard-decision decoders, or algebraic decoders, take advantage of the special algebraic structure built into the design of channel codes to make decoding relatively easy to perform.

2). Soft Decision

Hard decisions cause an irreversible loss of information in the receiver. To reduce this loss, soft-decision coding is used. A multilevel quantizer is included at the demodulator output, as shown in figure 4.4.a. The input-output characteristic of the quantizer is shown in figure 4.4.b. The modulator still has only the binary symbols 0 and 1 as inputs, but the demodulator output now has an alphabet with $Q$ symbols. With the quantizer described in figure 4.4.c, $Q = 8$. Such a channel is called a binary-input, Q-ary-output discrete memoryless channel. The corresponding channel transition probability diagram is shown in figure 4.4.c.
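The difference between the two decision types can be sketched as follows. Everything specific here is an assumption made for illustration: coherent BPSK with bit 0 mapped to -1 and bit 1 mapped to +1, AWGN, a uniform 8-level quantizer with step size 0.5, and the function names themselves. Under those assumptions the BSC transition probability is $p = \frac{1}{2}\,\mathrm{erfc}\!\left(\sqrt{E_b/N_0}\right)$.

```python
import math


def bpsk_crossover_probability(ebn0_db: float) -> float:
    """BSC transition probability p for hard-decided BPSK over AWGN."""
    ebn0 = 10 ** (ebn0_db / 10)  # convert dB to a linear ratio
    return 0.5 * math.erfc(math.sqrt(ebn0))


def hard_decision(r: float) -> int:
    """Binary quantization: keep only the sign of the demodulator output."""
    return 1 if r >= 0 else 0


def soft_decision(r: float, step: float = 0.5, levels: int = 8) -> int:
    """Uniform Q-level quantizer; returns a level index from 0 to levels - 1.

    Indices below levels // 2 lean toward bit 0, the rest toward bit 1.
    Values beyond the outermost thresholds are clipped to the end levels.
    """
    index = math.floor(r / step) + levels // 2
    return max(0, min(levels - 1, index))


if __name__ == "__main__":
    print("p at Eb/N0 = 0 dB:", bpsk_crossover_probability(0.0))
    for r in (-1.3, -0.1, 0.1, 1.9):
        print(r, "hard:", hard_decision(r), "soft level:", soft_decision(r))
```

Outputs of -0.1 and -1.3 both give a hard decision of 0, but the soft quantizer places them at different levels, so the decoder can still tell a marginal observation from a confident one.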

Figure 4.4.a: Binary-input, Q-ary-output discrete memoryless channel

[Quantizer characteristic: axes labeled input and output, output levels labeled b1, b4, b5 and b8]

Figure 4.4.b: Receiver for BPSK

Figure 4.4.c: Channel transition probability diagram

Channel capacity $C$ is given by

$$C = \max_{p(x)} I(X;Y)$$

where $I(X;Y)$ is the average mutual information between the channel input and output.

The transmission efficiency or channel efficiency ($\eta$) can be represented as follows:

$$\eta = \frac{\text{actual transinformation}}{\text{maximum transinformation}} = \frac{I(X;Y)}{\max_{p(x)} I(X;Y)}$$

Since $C = \max_{p(x)} I(X;Y)$, then $\eta = \frac{I(X;Y)}{C}$.

If $k$ symbols are transmitted per second, then the maximum rate of transmission of information per second is $kC$. Thus the channel capacity rate in binits per second, denoted by $C_s$, is

$$C_s = kC \text{ binits/sec}$$

Channel Coding Theorem

It states that if a discrete memoryless channel has capacity $C$ and a source generates information at a rate less than $C$, then there exists a coding technique such that the output of the source can be transmitted over the channel with an arbitrarily small probability of error. The maximum rate at which one can communicate over a discrete memoryless channel and still make the error probability approach 0 as the code block length increases is called the channel capacity and is denoted by $C$.

The most famous formula from Shannon's work is arguably the channel capacity of an ideal band-limited Gaussian channel, which is given by

$$C = W \log_2\left(1 + \frac{S}{N}\right) \text{ bits/second} \qquad (4.2)$$

where $C$ is the channel capacity, that is, the maximum number of bits that can be transmitted through the channel per unit time (second), $W$ is the bandwidth of the channel, and $S/N$ is the signal-to-noise power ratio at the receiver.

Shannon's main theorem asserts that error probabilities as small as desired can be achieved as long as the transmission rate $R$ through the channel (in bits/second) is smaller than the channel capacity $C$. This can be achieved by using an appropriate encoding and decoding operation. However, Shannon's theory is silent about the structure of these encoders and decoders.
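The band-limited Gaussian channel capacity $C = W \log_2(1 + S/N)$ is easy to evaluate numerically. The sketch below is illustrative only: the function names and the example figures (3000 Hz of bandwidth and a 30 dB signal-to-noise ratio) are assumptions, not values taken from the notes.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio from decibels to a power ratio."""
    return 10 ** (snr_db / 10)


def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """C = W * log2(1 + S/N), in bits per second."""
    return bandwidth_hz * math.log2(1 + snr_linear)


if __name__ == "__main__":
    w = 3000.0                   # assumed bandwidth in Hz
    snr = db_to_linear(30.0)     # assumed 30 dB, a power ratio of 1000
    c = shannon_capacity(w, snr)
    print("capacity in bits/second:", c)
    # The theorem allows reliable transmission only at rates below C.
    for rate in (20_000, 40_000):
        print(rate, "bits/second is below capacity:", rate < c)
```

Under these assumed figures the capacity is roughly 29.9 kbit/s, so a 20 kbit/s source could in principle be sent with arbitrarily small error probability while a 40 kbit/s source could not. The formula says nothing about which code achieves this.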

You might also like

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- MEEN 364 Lecture 4 Examples on Sampling and Aliasing PhenomenaDocument5 pagesMEEN 364 Lecture 4 Examples on Sampling and Aliasing PhenomenaHiren MewadaNo ratings yet

- Unit1 UpdateDocument7 pagesUnit1 UpdateAnbarasan RamamoorthyNo ratings yet

- Waveform Coding Techniques: Chapter-3Document33 pagesWaveform Coding Techniques: Chapter-3Ranbir FrostbornNo ratings yet

- BHEL engineer trainee model question paper of electronics major with answersDocument13 pagesBHEL engineer trainee model question paper of electronics major with answersAnbarasan RamamoorthyNo ratings yet

- BHELDocument8 pagesBHELAnbarasan RamamoorthyNo ratings yet

- Final Syllabus - Anna University Tirunelveli 5-8 SemestersDocument93 pagesFinal Syllabus - Anna University Tirunelveli 5-8 SemestersVijay SwarupNo ratings yet

- MaterilasDocument1 pageMaterilasAnbarasan RamamoorthyNo ratings yet

- Bhel With AnswersDocument28 pagesBhel With AnswersAnbarasan RamamoorthyNo ratings yet

- Drift and diffusion currents in PN junction diodesDocument3 pagesDrift and diffusion currents in PN junction diodesAnbarasan RamamoorthyNo ratings yet

- Tancet 2013 MeDocument2 pagesTancet 2013 MeCraig DaughertyNo ratings yet

- Difference Between Baseband and BroadbandDocument1 pageDifference Between Baseband and BroadbandAnbarasan RamamoorthyNo ratings yet

- HazardDocument5 pagesHazardAnbarasan RamamoorthyNo ratings yet

- AnbarasanDocument3 pagesAnbarasanAnbarasan RamamoorthyNo ratings yet

- WS1Document81 pagesWS1sadimitaNo ratings yet

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (890)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (587)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (399)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (73)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (265)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2219)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (119)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Loadrunner Vs JMeterDocument4 pagesLoadrunner Vs JMetercoer_277809436No ratings yet

- Prashant - COBOL 400Document44 pagesPrashant - COBOL 400SaiprasadNo ratings yet

- Ext2 & Ext3 File Systems: File System and File StructuresDocument18 pagesExt2 & Ext3 File Systems: File System and File StructuresSyeda Ashifa Ashrafi PapiaNo ratings yet

- MOS3E Chapter1 pp1 10Document10 pagesMOS3E Chapter1 pp1 10tutorial122011No ratings yet

- Network Security Quiz AnswersDocument37 pagesNetwork Security Quiz AnswershobtronNo ratings yet

- Save, Delete, Update and Search Record by Adodc With PDFDocument9 pagesSave, Delete, Update and Search Record by Adodc With PDFBhuvana LakshmiNo ratings yet

- Java Web Services Using Apache Axis2Document24 pagesJava Web Services Using Apache Axis2kdorairajsgNo ratings yet

- Naman Goyal: (+91) - 8755885130 Skype Id - Nmngoyal1Document3 pagesNaman Goyal: (+91) - 8755885130 Skype Id - Nmngoyal1nmngoyal1No ratings yet

- Calculating Averages - Flowcharts and PseudocodeDocument4 pagesCalculating Averages - Flowcharts and PseudocodeLee Kah Hou67% (3)

- Backup of Locked Files Using VshadowDocument3 pagesBackup of Locked Files Using Vshadownebondza0% (1)

- WinForms TrueDBGridDocument354 pagesWinForms TrueDBGridajp123456No ratings yet

- WCCA ProgRef en-US PDFDocument1,352 pagesWCCA ProgRef en-US PDFjbaltazar77No ratings yet

- Cyber Security Management Plan ProceduresDocument7 pagesCyber Security Management Plan ProceduresSachin Sikka100% (5)

- R 3 DlogDocument1 pageR 3 DlogcastnhNo ratings yet

- Comp230 w2 Ipo EvkeyDocument6 pagesComp230 w2 Ipo EvkeyrcvrykingNo ratings yet

- Acsls Messages 7 0Document185 pagesAcsls Messages 7 0vijayaragavaboopathy5632No ratings yet

- Calculate volume of shapesDocument49 pagesCalculate volume of shapesPuneet JangidNo ratings yet

- Scheduling and ATP OM AdvisorWebcast ATPSchedulingR12 2011 0517Document43 pagesScheduling and ATP OM AdvisorWebcast ATPSchedulingR12 2011 0517mandeep_kumar7721No ratings yet

- WWW - Manaresults.Co - In: (Computer Science and Engineering)Document2 pagesWWW - Manaresults.Co - In: (Computer Science and Engineering)Ravikumar BhimavarapuNo ratings yet

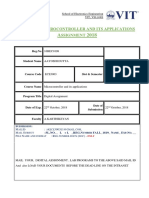

- ECE3003 M A 2018: Icrocontroller and Its Applications SsignmentDocument43 pagesECE3003 M A 2018: Icrocontroller and Its Applications SsignmentAayoshi DuttaNo ratings yet

- Week 3c - Phylogenetic - Tree - ConstructionMai PDFDocument19 pagesWeek 3c - Phylogenetic - Tree - ConstructionMai PDFSunilNo ratings yet

- Planning UtilsDocument65 pagesPlanning UtilsKrishna TilakNo ratings yet

- FYP Part 2 Complete ReportDocument72 pagesFYP Part 2 Complete ReportHamza Hussain /CUSTPkNo ratings yet

- Python Durga NotesDocument367 pagesPython Durga NotesAmol Pendkar84% (61)

- cs2201 Unit1 Notes PDFDocument16 pagescs2201 Unit1 Notes PDFBal BolakaNo ratings yet

- Apache Traffic Server - HTTP Proxy Server On The Edge PresentationDocument7 pagesApache Traffic Server - HTTP Proxy Server On The Edge Presentationchn5800inNo ratings yet

- Manage Active Directory with Automation AnywhereDocument37 pagesManage Active Directory with Automation AnywherePraveen ReddyNo ratings yet

- Solution: Option (B)Document3 pagesSolution: Option (B)Shikha AryaNo ratings yet

- Emc Networker Module For Microsoft Applications: Installation GuideDocument80 pagesEmc Networker Module For Microsoft Applications: Installation GuideShahnawaz M KuttyNo ratings yet