Fuzzy C-Regression Model With A New Cluster Validity Criterion

Chung-Chun Kung* and Chih-Chien Lin*

Department of Electrical Engineering, Tatung University,

40 Chungshan North Road, 3rd Sec., Taipei, Taiwan, R. O. C.

Tel: (886)-2-25925252 Ext. 3470 Re-ext 800

E-mail: cckung@ttu.edu.tw

Abstract - In this paper, a new cluster validity criterion designed for the fuzzy c-regression model (FCRM) algorithm with hyper-plane-shaped cluster representatives is proposed. The simulation results show that the proposed cluster validity criterion indicates the number of clusters correctly when the data have a hyper-plane-type structure.

I. INTRODUCTION

Fuzzy c-means (FCM) [1, 2] is a point-wise fuzzy clustering algorithm. Its error measure (distance) is the Euclidean norm from each datum to each cluster representative (center), so it forms hyper-spherical clusters. Data closer to a point-wise cluster representative have higher membership degrees in that cluster. Although FCM is commonly used to partition data, it is unreasonable to apply it to partitioning the input-output space, because data that lie close together in that space do not necessarily share a functional relation [3].

Instead of assuming that a single model accounts for all data pairs, the fuzzy c-regression model (FCRM) [4] assumes that the data are drawn from c different models. The goodness-of-fit measure is no longer the distance from a data vector to a cluster representative; it is the error with which each regression model fits the output. Minimizing the FCRM objective function yields simultaneous estimates of the parameters of the c regression models, together with a fuzzy c-partition of the data.

An important related issue for fuzzy clustering algorithms is the cluster validity criterion, which assesses the significance of the structure imposed by a fuzzy clustering algorithm. Many cluster validity criteria are available, including Bezdek's partition coefficient [5] and partition entropy [6], the Xie-Beni index [7], and Fukuyama and Sugeno's validity function [8]. The first two criteria consider only the overlap of clusters and disregard other structural information such as the compactness of clusters and the separation of cluster representatives. The last two criteria consider both compactness and separation, and are believed to be more reliable because they use all the structural information available from the clustering algorithm [9]. However, these two criteria are designed only for point-wise clustering algorithms and cannot be applied to FCRM with hyper-plane-shaped cluster representatives. Therefore, when FCRM is applied to partition a set of data and the number of clusters is not specified a priori, choosing an optimal number of clusters becomes an important issue, because no reliable criterion guides the algorithm.

In this paper, we propose a new cluster validity criterion suitable for FCRM with hyper-plane-shaped cluster representatives. We mimic the structure of the Xie-Beni index [7], which is defined as the ratio of compactness to separation, but make some modifications. Instead of defining the separation function by the Euclidean norm between different cluster representatives, we define a new separation function based on the absolute value of the standard inner product of the unit normal vectors representing different hyper-planes. Thus the structural information hidden in the clusters is reflected in the new cluster validity criterion.

This paper is organized as follows. Section II briefly introduces the FCRM and the FCRM clustering algorithm. Section III proposes a new cluster validity criterion for the FCRM. Section IV gives an example to illustrate the effectiveness of the new cluster validity criterion. The conclusion is given in Section V.

II. FUZZY C-REGRESSION MODEL (FCRM) CLUSTERING ALGORITHM

Let $S = \{(\mathbf{x}_1, y_1), \ldots, (\mathbf{x}_N, y_N)\}$ be a set of data where each independent observation $\mathbf{x}_h \in \mathbb{R}^n$ has a corresponding dependent observation $y_h \in \mathbb{R}$. Choose the number $c$ of clusters, $2 \le c < N$. The idea of FCRM [10] can be briefly described as follows.

Assume that the data are drawn from $c$ different fuzzy c-regression models:

$$y = f_i(\mathbf{x}, \mathbf{a}_i) + \varepsilon_i, \quad i = 1, \ldots, c. \tag{1}$$

Label vectors assigned to each object in the data set can be arrayed as $(c \times N)$ c-partitions of $S$, characterized by a set of $(cN)$ values $\{\mu_{ih}\}$, in which the $\mu_{ih}$ are constrained labels satisfying

$$0 \le \mu_{ih} \le 1, \quad \forall i, h, \tag{2}$$

0-7803-7280-8/02/$10.00 2002 IEEE

$$\sum_{i=1}^{c} \mu_{ih} = 1, \quad h = 1, \ldots, N, \tag{3}$$

and

$$0 < \sum_{h=1}^{N} \mu_{ih} < N, \quad i = 1, \ldots, c, \tag{4}$$

where (4) means that there are no empty clusters. The $(c \times N)$ matrix $U = [\mu_{ih}]$ of arrayed values $\{\mu_{ih}\}$ is called a fuzzy c-partition matrix, in that $\mu_{ih}$ is taken as the membership of $(\mathbf{x}_h, y_h)$ in the $i$th fuzzy subset (cluster) of $S$. In the switching regression problem, $\mu_{ih}$ is interpreted as the importance or weight attached to the extent to which the model value $f_i(\mathbf{x}_h, \mathbf{a}_i)$ matches $y_h$. Define the error function as follows:

$$d_{ih}(\mathbf{a}_i) = \left| f_i(\mathbf{x}_h, \mathbf{a}_i) - y_h \right|^2. \tag{5}$$

The general family of fuzzy c-regression model objective functions is thus defined by [1]

$$J_m(U, \mathbf{a}_1, \ldots, \mathbf{a}_c) = \sum_{h=1}^{N} \sum_{i=1}^{c} \mu_{ih}^{m} \, d_{ih}(\mathbf{a}_i), \tag{6}$$

where $m \in (1, \infty)$ is a weighting constant and $\{d_{ih}(\mathbf{a}_i)\}$ is defined by (5). From the introduction above we see that FCRM not only offers an effective approach to estimating $\{\mathbf{a}_1, \ldots, \mathbf{a}_c\}$, which define the best-fit regression models, but also assigns a fuzzy label vector to each datum in $S$ simultaneously.

Now let us take a closer look at the influence of the exponential weighting parameter $m$. Generally speaking, the greater $m$ is, the fuzzier the clustering result will be, because the greater $m$ is, the closer $\mu_{ih}^{m}$ will be to 0. For example, with $m = 7$, a relatively high membership such as 0.8 is reduced to a weight of about 0.21. Thus (local) minima and maxima will be less clearly developed or might even vanish completely. We can choose $m$ according to how well we expect the data to divide into clusters. For instance, if the clusters are clearly separated from each other, even a crisp partition is possible. In such cases, we choose $m$ close to 1 (as $m$ approaches 1, the memberships converge to 0 or 1 [1]). If the clusters are hardly distinguishable, $m$ should be chosen rather large. Of course, some experience is necessary, since there is not yet a theoretical basis for an optimal choice of $m$. A common choice in practice is $m = 2$.
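As a quick numerical check of the effect described above, the following minimal Python sketch evaluates the weight $\mu^{m}$ that a membership $\mu$ receives in the objective function for a few exponents. The function name `weighted_membership` and the sample memberships other than 0.8 are illustrative assumptions.

```python
def weighted_membership(mu: float, m: float) -> float:
    """Return mu**m, the weight a membership mu receives in the objective."""
    if not 0.0 <= mu <= 1.0:
        raise ValueError("membership must lie in [0, 1]")
    if m <= 1.0:
        raise ValueError("m must be greater than 1")
    return mu ** m


# Illustrative memberships and exponents (assumed values, apart from
# the pair mu = 0.8, m = 7 that the text uses as its example).
for m in (1.05, 2.0, 7.0):
    weights = [round(weighted_membership(mu, m), 4) for mu in (0.2, 0.5, 0.8)]
    print(f"m = {m}: {weights}")
```

For larger $m$ all weights shrink toward zero and differences between memberships matter less in the objective, which is consistent with the fuzzier partitions described in the text.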

The fuzzy c-regression model algorithm is given in [4]; here we briefly review it as follows:

FCRM Clustering Algorithm [4]:

Step 1. Given the data set $S = \{(\mathbf{x}_1, y_1), \ldots, (\mathbf{x}_N, y_N)\}$, set $m > 1$. Specify the form of the cluster representatives $y = f_i(\mathbf{x}, \mathbf{a}_i)$ for $i = 1, \ldots, c$ and choose a measure of error $d = \{d_{ih}\}$ so that $d_{ih}(\mathbf{a}_i) \ge 0$ for all $i$ and $h$. Pick a termination threshold $\varepsilon > 0$ and an initial partition $U^{(0)}$ satisfying Eqs. (2)-(4). Set the iteration index $r = 0$.

Step 2. Calculate values for the $c$ model parameters $\mathbf{a}_i = \mathbf{a}_i^{(r)}$ that globally minimize the restricted function $(\mathbf{a}_1, \ldots, \mathbf{a}_c) \mapsto J_m(U^{(r)}, \mathbf{a}_1, \ldots, \mathbf{a}_c)$.

Step 3. Update $U^{(r)} \to U^{(r+1)}$ with $d_{ih} = d_{ih}(\mathbf{a}_i^{(r)})$ as follows:

$$\mu_{ih} = \dfrac{1}{\sum_{j=1}^{c} \left( d_{ih} / d_{jh} \right)^{1/(m-1)}}, \quad \text{for } I_h = \emptyset,$$

$$\mu_{ih} = 0, \quad \text{for } I_h \ne \emptyset,\ i \notin I_h,$$

$$\sum_{i \in I_h} \mu_{ih} = 1, \quad \text{for } I_h \ne \emptyset,\ i \in I_h,$$

where $I_h = \{\, i \mid 1 \le i \le c,\ d_{ih} = 0 \,\}$.

Step 4. Check for termination in some convenient induced matrix norm: if $\| U^{(r)} - U^{(r+1)} \| \le \varepsilon$, stop; otherwise, set $r = r + 1$ and go to Step 2.
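The membership update of Step 3 can be sketched in plain Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the name `update_memberships` and the list-of-lists layout (`d[i][h]` is the error of datum `h` under model `i`) are hypothetical, and in the singular case where some errors are exactly zero the membership is split equally among the exact-fit models, which is only one of the admissible choices.

```python
from typing import List


def update_memberships(d: List[List[float]], m: float) -> List[List[float]]:
    """Step 3 sketch: d is a c x N table of errors d[i][h] >= 0.

    Returns a c x N table of memberships whose columns sum to one.
    """
    if m <= 1.0:
        raise ValueError("m must be greater than 1")
    c, n = len(d), len(d[0])
    exponent = 1.0 / (m - 1.0)
    u = [[0.0] * n for _ in range(c)]
    for h in range(n):
        # I_h: models that fit datum h exactly (zero error).
        zero_set = [i for i in range(c) if d[i][h] == 0.0]
        if zero_set:
            # Assumed tie-breaking rule: equal shares inside I_h,
            # zero membership for every model outside I_h.
            for i in zero_set:
                u[i][h] = 1.0 / len(zero_set)
        else:
            for i in range(c):
                total = sum((d[i][h] / d[j][h]) ** exponent for j in range(c))
                u[i][h] = 1.0 / total
    return u


# Two models, two data points; the second datum is fitted exactly by model 0.
errors = [[1.0, 0.0],
          [4.0, 2.0]]
print(update_memberships(errors, m=2.0))
```

For values of $m$ very close to one the exponent $1/(m-1)$ becomes large, so a production version would likely need to guard against floating-point overflow in the ratio power.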

Step 2 can be implemented by the following recursive least-squares algorithm.

The Recursive Least Square (RLS) Algorithm [10]:

Suppose a set of $N$ sample data $\{(\mathbf{x}_h, y_h)\}$ is collected from experiments, where $\mathbf{x}_h \in \mathbb{R}^n$, $y_h \in \mathbb{R}$, and $h = 1, \ldots, N$. Let $\mu_{ih}$ denote the membership degree of $(\mathbf{x}_h, y_h)$ in the $i$th cluster representative $y = \mathbf{x}^T \mathbf{a}_i$. Define the cost function

$$J(\mathbf{a}_i) = \frac{1}{N} \sum_{h=1}^{N} \mu_{ih} \left[ y_h - \mathbf{x}_h^T \mathbf{a}_i \right]^2, \tag{7}$$

where the $\mu_{ih}$'s give different weights to different observations. Minimizing $J(\mathbf{a}_i)$ with respect to $\mathbf{a}_i$, we obtain

$$\mathbf{a}_i^{(h)} = \mathbf{a}_i^{(h-1)} + \mathbf{L}^{(h)} \left[ y_h - \mathbf{x}_h^T \mathbf{a}_i^{(h-1)} \right], \tag{8}$$

$$\mathbf{L}^{(h)} = \frac{\mathbf{P}^{(h-1)} \mathbf{x}_h}{\dfrac{1}{\mu_{ih}} + \mathbf{x}_h^T \mathbf{P}^{(h-1)} \mathbf{x}_h}, \tag{9}$$

$$\mathbf{P}^{(h)} = \mathbf{P}^{(h-1)} - \mathbf{L}^{(h)} \mathbf{x}_h^T \mathbf{P}^{(h-1)} \tag{10}$$

for $h = 1, \ldots, N$, where $\mathbf{P}^{(h)} \in \mathbb{R}^{n \times n}$ and $\mathbf{L}^{(h)} \in \mathbb{R}^{n}$. These formulas are known as the recursive least square (RLS) algorithm [10], one of the most widely used recursive identification methods. A common choice of initial values is $\mathbf{P}^{(0)} = \alpha I$ and $\mathbf{a}_i^{(0)} = \mathbf{0}$, where $\alpha$ is a large real number (for instance, 100).
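A minimal pure-Python sketch of the weighted RLS recursion (8)-(10) for a single cluster is given below. The function name `weighted_rls`, the argument names, and the symbol `alpha` for the scale of the initial matrix $\mathbf{P}^{(0)}$ are assumptions made for illustration; data with zero membership are skipped here to avoid the division $1/\mu_{ih}$, which is a choice of this sketch rather than something stated in the text.

```python
from typing import List, Sequence


def weighted_rls(xs: Sequence[Sequence[float]], ys: Sequence[float],
                 weights: Sequence[float], alpha: float = 100.0) -> List[float]:
    """Estimate a in y = x^T a by membership-weighted recursive least squares."""
    n = len(xs[0])
    a = [0.0] * n                                   # a^(0) = 0
    p = [[alpha if r == col else 0.0 for col in range(n)]
         for r in range(n)]                         # P^(0) = alpha * I
    for x, y, mu in zip(xs, ys, weights):
        if mu <= 0.0:
            continue                                # zero weight: no update
        px = [sum(p[r][k] * x[k] for k in range(n)) for r in range(n)]
        denom = 1.0 / mu + sum(x[k] * px[k] for k in range(n))
        gain = [v / denom for v in px]              # Eq. (9)
        err = y - sum(x[k] * a[k] for k in range(n))
        a = [a[k] + gain[k] * err for k in range(n)]            # Eq. (8)
        xtp = [sum(x[k] * p[k][col] for k in range(n)) for col in range(n)]
        p = [[p[r][col] - gain[r] * xtp[col] for col in range(n)]
             for r in range(n)]                     # Eq. (10)
    return a


# Noise-free data from y = 2*x1 - x2 with arbitrary memberships.
xs = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, -1.0)]
ys = [2.0, -1.0, 1.0, 5.0]
print(weighted_rls(xs, ys, weights=[1.0, 0.5, 0.8, 0.3], alpha=1e6))
```

With a finite `alpha` the recursion returns a slightly regularized weighted least-squares estimate, so the result approaches the exact coefficients only as `alpha` grows.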

The convergence properties of the fuzzy c-regression model algorithm are described in detail in [4]. Although FCRM mostly terminates at a global minimum, it is sometimes trapped at a local minimum if an extremely poor initialization $U^{(0)}$ is used.

III. A NEW CLUSTER VALIDITY CRITERION

In practice, the number of rules is usually decided with the aid of a reliable index called a cluster validity criterion. Many cluster validity criteria have been proposed for fuzzy clustering algorithms [5-8], but most of them are designed for clustering methods with point-wise cluster representatives and are not applicable to hyper-plane-shaped representatives.

Cluster validity criteria designed for the FCM algorithm begin with Bezdek's partition coefficient $v_{PC}$ [5], which considers only the overlap of clusters (i.e., it uses only the partition matrix $U$) and disregards the compactness of clusters and the separation of cluster representatives. Bezdek's partition coefficient is defined as [5]

$$v_{PC} = \frac{1}{N} \sum_{h=1}^{N} \sum_{i=1}^{c} \mu_{ih}^{2}, \tag{11}$$

where $h$ indexes the $h$th training datum, $c$ is the number of clusters, $\mu_{ih}$ denotes the membership degree of the $h$th datum in the $i$th cluster, and $N$ is the total number of training data. The optimal number $c$ is the one at which $v_{PC}$ is closest to one. A limitation of Bezdek's partition coefficient is that it approaches one as the exponential weighting parameter $m$ approaches one, and it therefore loses its ability to distinguish the optimal $c$, i.e.,

$$\lim_{m \to 1} v_{PC} = 1. \tag{12}$$
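The partition coefficient of (11) depends only on the partition matrix, which the following minimal Python sketch makes explicit. The function name `partition_coefficient` and the row-per-cluster layout of `u` are assumptions for illustration.

```python
from typing import List


def partition_coefficient(u: List[List[float]]) -> float:
    """Bezdek's partition coefficient: mean over data of the sum of squared memberships.

    u is a c x N table, u[i][h] being the membership of datum h in cluster i.
    """
    n = len(u[0])
    return sum(mu * mu for row in u for mu in row) / n


crisp = [[1.0, 0.0], [0.0, 1.0]]        # every datum fully in one cluster
fuzziest = [[0.5, 0.5], [0.5, 0.5]]     # memberships spread evenly, c = 2
print(partition_coefficient(crisp), partition_coefficient(fuzziest))
```

A crisp partition gives the value one for any number of clusters, which illustrates why the coefficient stops discriminating between candidate values of $c$ when $m$ is close to one and the memberships become nearly crisp.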

In the following, we propose a new cluster validity criterion designed for the FCRM algorithm. The form of the cluster representative we adopt in this paper is

$$y = \mathbf{x}^T \mathbf{a}_i, \quad i = 1, \ldots, c, \tag{13}$$

where

$$\mathbf{x} = [x_1, \ldots, x_n]^T \in \mathbb{R}^{n} \tag{14}$$

and

$$\mathbf{a}_i = [a_{i1}, \ldots, a_{in}]^T \in \mathbb{R}^{n}. \tag{15}$$

Since the cluster representatives are hyper-planes that all pass through the origin $\mathbf{0}$, we rewrite (13) as

$$\mathbf{z}^T \mathbf{n}_i = 0, \tag{16}$$

where

$$\mathbf{z} = [x_1, \ldots, x_n, y]^T \in \mathbb{R}^{n+1} \tag{17}$$

is any vector on the $i$th hyper-plane and

$$\mathbf{n}_i = [a_{i1}, \ldots, a_{in}, -1]^T \in \mathbb{R}^{n+1} \tag{18}$$

represents the normal vector of the hyper-plane. The corresponding unit normal vector is defined as

$$\mathbf{u}_i = \frac{\mathbf{n}_i}{\| \mathbf{n}_i \|}, \tag{19}$$

where $\| \cdot \|$ is the Euclidean norm.

From [11], we know that the inner product $\langle \mathbf{u}_i, \mathbf{u}_j \rangle$ of two real unit vectors gives the projection of $\mathbf{u}_i$ on the space spanned by $\mathbf{u}_j$, that $|\langle \mathbf{u}_i, \mathbf{u}_j \rangle|$ is the projection length, and that the only factor influencing the projection length is the angle between the two vectors. We use this value to measure the difference between two hyper-planes that pass through the same point: a zero projection length implies that the two hyper-planes are orthogonal, while a unit projection length implies that they coincide. Because all $c$ hyper-planes pass through the origin and each is characterized by a corresponding $\mathbf{u}_i$ of unit length, we can easily judge the difference between two hyper-planes by the absolute value of the standard inner product of their unit normal vectors, $|\langle \mathbf{u}_i, \mathbf{u}_j \rangle|$.

Borrowing the idea of the separation function defined by Xie and Beni [7], we define a new separation function suitable for hyper-plane-shaped representatives as follows:

$$\text{Separation function} = N \cdot \frac{1}{\max\limits_{i \ne j} \left| \langle \mathbf{u}_i, \mathbf{u}_j \rangle \right| + \kappa}, \tag{20}$$

where $\kappa$ is a rather small positive real constant that prevents division by zero. In the worst case, when some hyper-plane coincides with another one, the separation function approaches its minimum $N$, since $\max_{i \ne j} |\langle \mathbf{u}_i, \mathbf{u}_j \rangle| = 1$; in contrast, when all the hyper-planes are orthogonal, which means diversity of clusters, the function equals its maximum $N / \kappa$, a rather large constant.

From the discussion above, we see that the more diverse

the clusters are, the larger the separation validity function

will be, which fits the concept of separation measure criterion.
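The behaviour of the separation function at its two extremes can be checked with the minimal Python sketch below. It assumes the form $N / (\max_{i \ne j} |\langle \mathbf{u}_i, \mathbf{u}_j \rangle| + \kappa)$ suggested by the limiting values quoted in the text; the names `unit_normal`, `separation`, and `kappa`, and the default value of `kappa`, are illustrative assumptions.

```python
import math
from typing import List, Sequence


def unit_normal(a: Sequence[float]) -> List[float]:
    """Unit normal of the hyper-plane y = x^T a, i.e. [a_1, ..., a_n, -1] normalized."""
    normal = list(a) + [-1.0]
    norm = math.sqrt(sum(v * v for v in normal))
    return [v / norm for v in normal]


def separation(params: Sequence[Sequence[float]], n_data: int,
               kappa: float = 1e-3) -> float:
    """Assumed separation function for at least two hyper-planes."""
    units = [unit_normal(a) for a in params]
    worst = max(abs(sum(p * q for p, q in zip(units[i], units[j])))
                for i in range(len(units))
                for j in range(i + 1, len(units)))
    return n_data / (worst + kappa)


# y = x and y = -x have orthogonal normals; two copies of y = 2x coincide.
print(separation([[1.0], [-1.0]], n_data=300))   # close to 300 / kappa
print(separation([[2.0], [2.0]], n_data=300))    # close to 300
```

The largest pairwise inner product drives the value, so a single pair of nearly parallel hyper-planes is enough to pull the separation down toward $N$.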

The proposed new cluster validity criterion suitable for hyper-plane-shaped cluster representatives can now be readily defined from two validity functions that carry structural information:

$$CI \ (\text{cluster index}) = \frac{\sum\limits_{h=1}^{N} \sum\limits_{i=1}^{c} \mu_{ih}^{m} \left| y_h - \mathbf{x}_h^T \mathbf{a}_i \right|}{N \cdot \dfrac{1}{\max\limits_{i \ne j} \left| \langle \mathbf{u}_i, \mathbf{u}_j \rangle \right| + \kappa}}, \tag{21}$$

where the numerator is a compactness function [7], which reflects the compactness of clusters, and the denominator is the separation function (20), with its small positive constant $\kappa$, which indicates the separation of clusters. The proposed criterion, which uses all the information available from the clustering algorithm, is believed to be more reliable than criteria calculated from the partition matrix $U$ alone, which disregard other structural information such as the compactness of clusters and the separation of cluster representatives.

The proposed cluster validity criterion not only has a straightforward meaning but is also easy to compute. In applications, as with other validity criteria, we plot CI versus $c$ and choose the number of clusters at which a significant change in curvature occurs.
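The ratio of compactness to separation can be sketched in plain Python as below. This is a hedged illustration, not the authors' code: the function name `cluster_index`, the argument layout, and the constant name `kappa` are assumptions, the compactness term is taken as the membership-weighted absolute residual, and the separation term is taken as $N / (\max_{i \ne j} |\langle \mathbf{u}_i, \mathbf{u}_j \rangle| + \kappa)$.

```python
import math
from typing import Sequence


def cluster_index(xs: Sequence[Sequence[float]], ys: Sequence[float],
                  u: Sequence[Sequence[float]],
                  params: Sequence[Sequence[float]],
                  m: float = 2.0, kappa: float = 1e-3) -> float:
    """Assumed CI: weighted residual compactness divided by normal-vector separation."""
    n_data, c = len(xs), len(params)

    def predict(a: Sequence[float], x: Sequence[float]) -> float:
        return sum(xk * ak for xk, ak in zip(x, a))

    # Compactness: membership-weighted fit error of every datum to every model.
    compactness = sum((u[i][h] ** m) * abs(ys[h] - predict(params[i], xs[h]))
                      for i in range(c) for h in range(n_data))

    # Separation: based on the largest |<u_i, u_j>| over distinct unit normals.
    units = []
    for a in params:
        normal = list(a) + [-1.0]
        norm = math.sqrt(sum(v * v for v in normal))
        units.append([v / norm for v in normal])
    worst = max(abs(sum(p * q for p, q in zip(units[i], units[j])))
                for i in range(c) for j in range(i + 1, c))
    return compactness / (n_data / (worst + kappa))


# Two data points lying exactly on y = x and y = -x respectively.
xs, ys = [[1.0], [1.0]], [1.0, -1.0]
models = [[1.0], [-1.0]]
print(cluster_index(xs, ys, [[1.0, 0.0], [0.0, 1.0]], models))  # perfect, crisp fit
print(cluster_index(xs, ys, [[0.5, 0.5], [0.5, 0.5]], models))  # fuzzy memberships
```

In practice the function would be evaluated once per candidate $c$, using the memberships and parameters returned by the clustering run for that $c$, and the resulting values plotted against $c$.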

IV. AN EXAMPLE

To validate the new cluster validity criterion, we consider the following example and compare the result with Bezdek's partition coefficient $v_{PC}$, which considers only the overlap of clusters (i.e., it uses only the partition matrix $U$) and disregards the compactness of clusters and the separation of cluster representatives.

Consider three linear equations with exogenous white Gaussian random noise $\varepsilon_i$ ($i = 1, 2, 3$) having zero mean and variance 0.25:

, 5 3

, 2

, 4 3 2

3 3 2 1 3 3

2 3 2 1 2 2

1 3 2 1 1 1

+ + + +

+ + + +

+ + +

x x x y

x x x y

x x x y

T

T

T

a x

a x

a x

where

[ ]

[ ]

[ ]

[ ] . 1 5 3

, 2 1 1

, 4 3 2

,

3

2

1

3 2 1

T

T

T

T

x x x

a

a

a

x

We randomly generate 300 training input vectors $\mathbf{x}$ with each element uniformly distributed in the range $[-5, 5]$ and then apply 100 of them to each of the three linear equations. Using the FCRM algorithm, we obtain the cluster representatives and the partition matrix $U$ for different $c$. To demonstrate the advantage of the new cluster index over Bezdek's partition coefficient, we consider the following two cases:

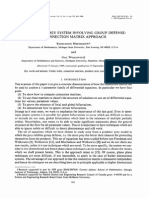

Case 1: $m = 2$.

The plot of cluster index vs. cluster number is depicted in Fig. 1. We see that both Bezdek's partition coefficient and the new cluster index indicate the correct answer $c = 3$. The linear equations obtained by the FCRM algorithm are listed below:

, 9909 . 0 9999 . 4 9733 . 2

, 9721 . 1 9924 . 0 9977 . 0

, 0212 . 4 9893 . 2 9954 . 1

3 2 1 3

3 2 1 2

3 2 1 1

x x x y

x x x y

x x x y

T

T

T

+ +

+ +

+

a x

a x

a x

which are quite close to the nominal linear

equations.

Case 2: $m = 1.05$, which is quite close to one.

The plot of cluster index vs. cluster number is depicted in Fig. 2. We see that Bezdek's partition coefficient fails to indicate the right number of clusters unambiguously, while the proposed cluster index CI still indicates the number 3 correctly. The linear equations obtained by the FCRM algorithm are as follows:

, 0103 . 1 0035 . 5 9531 . 2

, 9991 . 1 9959 . 0 9993 . 0

, 0262 . 4 9979 . 2 9978 . 1

3 2 1 3

3 2 1 2

3 2 1 1

x x x y

x x x y

x x x y

T

T

T

+ +

+ +

+

a x

a x

a x

which are quite close to the nominal linear

equations.

V. CONCLUSION

In this paper, a new cluster validity criterion designed for the fuzzy c-regression model algorithm with hyper-plane-shaped cluster representatives is proposed. The criterion, which mimics the concept of the Xie-Beni index, reflects the structural information hidden in the clusters. It is considered more reliable because it uses all the information available from the FCRM algorithm, taking both the compactness of the clusters and the separation of the cluster representatives into account.

The simulation results show that the proposed cluster validity criterion indicates the valid number of clusters correctly and unambiguously when the data to be partitioned have a hyper-plane-type structure.

REFERENCES

[1] J.C. Bezdek, Pattern Recognition with Fuzzy Objective Function Algorithms. New York: Plenum, 1981.

[2] N.R. Pal, J.C. Bezdek, "On cluster validity for the fuzzy c-means model," IEEE Trans. Fuzzy Syst., vol. 3, no. 3, pp. 370-379, Aug. 1995.

[3] E. Kim, M. Park, S. Ji, M. Park, "A new approach to fuzzy modeling," IEEE Trans. Fuzzy Syst., vol. 5, no. 3, pp. 328-337, Aug. 1997.

[4] R.J. Hathaway, J.C. Bezdek, "Switching regression models and fuzzy clustering," IEEE Trans. Fuzzy Syst., vol. 1, no. 3, pp. 195-204, Aug. 1993.

[5] J.C. Bezdek, "Cluster validity with fuzzy sets," J. Cybern., vol. 3, no. 3, pp. 58-72, 1974.

[6] J.C. Bezdek, "Mathematical models for systematics and taxonomy," in Proc. 8th Int. Conf. Numerical Taxonomy, G. Estabrook, Ed., Freeman, San Francisco, CA, pp. 143-166, 1975.

[7] X.L. Xie and G.A. Beni, "Validity measure for fuzzy clustering," IEEE Trans. Pattern Anal. Machine Intell., vol. 13, no. 8, pp. 841-846, 1991.

[8] Y. Fukuyama and M. Sugeno, "A new method of choosing the number of clusters for the fuzzy c-means method," in Proc. 5th Fuzzy Syst. Symp., pp. 247-250, 1989 (in Japanese).

[9] N.R. Pal, J.C. Bezdek, T.A. Runkler, "Some issues in system identification using clustering," Int. Conf. Neural Networks, vol. 4, pp. 2524-2529, 1997.

[10] L. Ljung, T. Soderstrom, Theory and Practice of Recursive Identification. MIT Press, 1983.

[11] S.H. Friedberg, A.J. Insel, L.E. Spence, Linear Algebra, 2nd ed. Prentice-Hall, 1989.


Fig. 1 Case 1: $m = 2$. (a) Plot of Bezdek's partition coefficient $v_{PC}$ vs. $c$ (horizontal axis: number of clusters, c; vertical axis: cluster index, V(pc)). (b) Plot of the new cluster index CI vs. $c$ (horizontal axis: number of clusters, c; vertical axis: new cluster index, CI).

Fig. 2 Case 2: $m = 1.05$. (a) Plot of Bezdek's partition coefficient $v_{PC}$ vs. $c$ (horizontal axis: number of clusters, c; vertical axis: cluster index, V(pc)). (b) Plot of the new cluster index CI vs. $c$ (horizontal axis: number of clusters, c; vertical axis: new cluster index, CI).
