Generalized Regression

Nonlinear Algorithms Including Ridge, Lasso and Elastic Net

JONATHAN KINLAY

Global Equities

UPDATED: MAY 23, 2011

Ordinary Least Squares Regression

OLS Model Framework

The problem framework is one in which we have observations $\{y_1, y_2, \ldots, y_n\}$ from a random variable $Y$ which we are interested in predicting, based on the observed values $\{x_{11}, x_{21}, \ldots, x_{n1}\}, \{x_{12}, x_{22}, \ldots, x_{n2}\}, \ldots, \{x_{1p}, x_{2p}, \ldots, x_{np}\}$ from $p$ independent explanatory random variates $\{X_1, X_2, \ldots, X_p\}$. Our initial assumption is that the $X_i$ are independent, but we then relax that condition and consider the case of collinear (i.e. correlated) explanatory variables in the general case.

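We frame the problem in matrix form as:

$$Y = X\beta + \epsilon,$$

where $\epsilon$ is assumed to be normally distributed, $N(0, \sigma)$,

$Y$ is the $[n \times 1]$ matrix of observations $y_i$,

$X$ is the $[n \times p]$ matrix of observations $x_{ij}$,

and $\beta$ is an unknown $[p \times 1]$ vector of coefficients to be estimated.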

The aim is to find a solution which minimizes the mean square error:

$$\frac{1}{n}\sum_{i=1}^{n} \epsilon_i^2 = \frac{1}{n}\sum_{i=1}^{n} (y_i - X_i\beta)^2 = \frac{1}{n}(Y - X\beta)^T (Y - X\beta)$$

which has the well-known solution:

$$\hat\beta = [X^T X]^{-1} [X^T Y]$$
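For completeness, a short derivation of this solution from the definitions above: setting the gradient of the mean square error with respect to $\beta$ to zero gives the normal equations.

$$
\frac{\partial}{\partial\beta}\,\frac{1}{n}(Y - X\beta)^T (Y - X\beta) = -\frac{2}{n}\, X^T (Y - X\beta) = 0
\;\Longrightarrow\; X^T X \hat\beta = X^T Y
\;\Longrightarrow\; \hat\beta = [X^T X]^{-1} [X^T Y]
$$

The last step assumes that $X^T X$ is invertible, which fails when the columns of $X$ are exactly collinear.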

In Mathematica there are several ways to accomplish this, for example:

OLSRegression[Y_, X_] := Module[
  {XT = Transpose[X]},
  (* solve the normal equations XT.X b == XT.Y *)
  LinearSolve[XT.X, XT.Y]
]
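As an illustration of the "several ways" mentioned above, the following sketch compares three built-in routes to the same least-squares estimate on a small synthetic data set. The names xDemo, yDemo and betaDemo are illustrative only and do not appear in the notebook.

```mathematica
(* Small synthetic problem; all names here are illustrative assumptions *)
SeedRandom[1];
xDemo = RandomReal[{-1, 1}, {50, 3}];
betaDemo = {1., -2., 0.5};
yDemo = xDemo.betaDemo + RandomVariate[NormalDistribution[0, 0.1], 50];

(* 1. Normal equations, as in OLSRegression *)
bNormal = LinearSolve[Transpose[xDemo].xDemo, Transpose[xDemo].yDemo];

(* 2. Built-in least-squares solver *)
bLeastSq = LeastSquares[xDemo, yDemo];

(* 3. Moore-Penrose pseudoinverse *)
bPinv = PseudoInverse[xDemo].yDemo;

(* Largest absolute disagreement between the three estimates *)
{Max[Abs[bNormal - bLeastSq]], Max[Abs[bNormal - bPinv]]}
```

The three estimates should agree up to floating-point error. LeastSquares and PseudoInverse avoid forming $X^T X$ explicitly, which tends to be numerically safer when the regressors are nearly collinear.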

The Problem of Collinearity

Difficulties begin to emerge when the assumption of independence no longer applies. While it is still possible to conclude whether the system of explanatory variables X has explanatory power as a whole, the correlation between the variables means that it is no longer possible to clearly distinguish the significance of individual variables. Estimates of the beta coefficients may be biased, understating the importance of some of the variables and overstating the importance of others.
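One way to quantify the loss of precision is through the sampling covariance of the OLS estimator. The following is a sketch for the simplest case of two standardized regressors with sample correlation $\rho$, which is an assumption made here for illustration and not a case treated in the notebook:

$$
\operatorname{Cov}(\hat\beta) = \sigma^2 [X^T X]^{-1}, \qquad
X^T X = n \begin{pmatrix} 1 & \rho \\ \rho & 1 \end{pmatrix}
\;\Longrightarrow\;
\operatorname{Var}(\hat\beta_j) = \frac{\sigma^2}{n\,(1 - \rho^2)}
$$

Relative to uncorrelated regressors, the variance of each coefficient estimate is inflated by the factor $1/(1-\rho^2)$: about 1.33 for $\rho = 0.5$, 5.26 for $\rho = 0.9$ and 50.25 for $\rho = 0.99$.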


Example of Collinear Variables

This example is based on the original paper on lasso regression (Tibshirani, 1996) and a subsequent paper by Zou and Hastie (2005). We have 220 observations on eight predictors. We use the first 20 observations for model estimation and the remaining 200 for out-of-sample testing. We fix the parameters $\sigma$ and $\beta$ and set the correlation between variables $X_i$ and $X_j$ to be $0.5^{|i-j|}$ as follows:

nObs = 220; σ = 3; β = {3, 1.5, 0, 0, 2, 0, 0, 0};  (* 20 in-sample + 200 out-of-sample observations *)

Table[0.5^Abs[i - j], {i, 1, 8}, {j, 1, 8}] // MatrixForm

1. 0.5 0.25 0.125 0.0625 0.03125 0.015625 0.0078125

0.5 1. 0.5 0.25 0.125 0.0625 0.03125 0.015625

0.25 0.5 1. 0.5 0.25 0.125 0.0625 0.03125

0.125 0.25 0.5 1. 0.5 0.25 0.125 0.0625

0.0625 0.125 0.25 0.5 1. 0.5 0.25 0.125

0.03125 0.0625 0.125 0.25 0.5 1. 0.5 0.25

0.015625 0.03125 0.0625 0.125 0.25 0.5 1. 0.5

0.0078125 0.015625 0.03125 0.0625 0.125 0.25 0.5 1.

ε = RandomVariate[NormalDistribution[0, σ], nObs];

X = RandomReal[{-100, 100}, {nObs, 8}];

Y = X.β + ε;

Histogram[Y]

[Figure: histogram of the simulated values of Y]
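The data-generating code above draws X from independent uniform variates and does not itself use the correlation matrix. One possible way to draw regressors with the stated correlation structure $0.5^{|i-j|}$ is sketched below, under the assumption that the regressors are jointly normal with zero mean and unit variance. The names corrMat and xCorr are illustrative and not part of the notebook.

```mathematica
(* Correlation matrix with entries 0.5^|i-j|, as displayed above *)
corrMat = Table[0.5^Abs[i - j], {i, 1, 8}, {j, 1, 8}];

(* 220 draws of 8 jointly normal regressors with that correlation matrix *)
xCorr = RandomVariate[
   MultinormalDistribution[ConstantArray[0, 8], corrMat], 220];

Dimensions[xCorr]  (* {220, 8} *)

(* The sample correlations should be close to corrMat *)
Correlation[xCorr] // MatrixForm
```

Using xCorr in place of X in the subsequent cells would make the regressors collinear in the sense described in the text. The scale differs from the uniform draws on [-100, 100], so the fitted objective values would not be comparable with those shown here.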

In the context of collinear explanatory variables, our standard OLS estimates will typically be biased. In this example, notice how the relatively large correlations between variables 1-4 induce upward bias in the estimates of the parameters $\beta_3$ and $\beta_4$ (and downward bias in the estimates of the parameters $\beta_6$ and $\beta_7$).

OLSRegression[Take[Y, 20], Take[X, 20]]

{3.00439, 1.50906, 0.0145471, 0.0383589, 1.9809, 0.0106091, 0.00378074, 0.00259506}


Generalized Regression

Penalized OLS Objective Function

We attempt to deal with the problems of correlated explanatory variables by introducing a penalty component to

the OLS objective function. The idea is to penalize the regression for using too many correlated explanatory

variables, as follows:

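$$\mathrm{WLS} = \frac{1}{n}\sum_{i=1}^{n} (y_i - X_i\beta)^2 + \lambda P_\alpha,$$

with

$$P_\alpha = \sum_{j=1}^{p} \left[ \frac{1}{2}(1-\alpha)\,\beta_j^2 + \alpha\,|\beta_j| \right]$$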

WLS[Y_, X_, λ_, α_, β_] := Module[
  {n = Length[Y], m = Length[β], Z, W, P},
  Z = Y - X.β;  (* residuals *)
  W = (1/n) Z.Z;  (* mean square error *)
  P = Sum[(1/2) (1 - α) β[[i]]^2 + α Abs[β[[i]]], {i, 1, m}];  (* penalty term *)
  W + λ P
]

Nonstandard Regression Types

In the above framework:

$\alpha = 0$: ridge regression

$\alpha = 1$: lasso regression

$\alpha \in (0, 1)$: elastic net regression
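To make the three cases concrete, the sketch below evaluates the penalty term $P_\alpha$ at the true coefficient vector used in the example. The helper penalty and the name βTrue are illustrative and not part of the notebook.

```mathematica
(* Penalty term P_α for a coefficient vector β (illustrative helper) *)
penalty[α_, β_] := Total[(1/2) (1 - α) β^2 + α Abs[β]];

βTrue = {3, 1.5, 0, 0, 2, 0, 0, 0};

(* ridge (α = 0), elastic net (α = 0.5), lasso (α = 1) *)
{penalty[0, βTrue], penalty[0.5, βTrue], penalty[1, βTrue]}
(* {7.625, 7.0625, 6.5} *)
```

Here the sum of squares of the coefficients is 15.25 and the sum of absolute values is 6.5, so the ridge penalty is 15.25/2 = 7.625, the lasso penalty is 6.5, and the elastic net value is a weighted average of the two.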

We use the Mathematica NMinimize function to find a global minimum of the WLS objective function, within a specified range for $\alpha$, as follows:

GeneralRegression[Y_, X_, λ_, range_] := Module[
  {nIndep = Last[Dimensions[X]], b, Reg, coeff},
  (* b[[1]] plays the role of α, the remaining entries are the coefficients β *)
  b = Table[Unique[b], {1 + nIndep}];
  Reg = NMinimize[
    {WLS[Y, X, λ, b[[1]], Drop[b, 1]], range[[1]] <= b[[1]] <= range[[2]]}, b];
  coeff = b /. Last[Reg];
  {Reg[[1]], coeff[[1]], Drop[coeff, 1]}
]

NMinimize employs a variety of algorithms for constrained global optimization, including Nelder-Mead, Differential Evolution, Simulated Annealing and Random Search. Details can be found in the Mathematica documentation.

The GeneralRegression function returns the minimized WLS value, the optimal parameter $\alpha$ (within the constraints set by the user), and the optimal weight vector $\beta$.
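The algorithm used by NMinimize can be selected explicitly through its Method option. The sketch below tries each of the four named methods on a toy objective with kinks at zero, loosely resembling an absolute-value penalty. The function obj and the variables u and v are illustrative and not part of the notebook.

```mathematica
(* Toy objective: smooth quadratic plus absolute-value terms *)
obj[u_, v_] := (u - 1)^2 + (v + 2)^2 + Abs[u] + Abs[v];

(* Run the same constrained problem with each optimization method *)
Table[
  NMinimize[
    {obj[u, v], -5 <= u <= 5 && -5 <= v <= 5}, {u, v},
    Method -> m],
  {m, {"NelderMead", "DifferentialEvolution",
       "SimulatedAnnealing", "RandomSearch"}}]
```

For this objective the exact minimum is 2.5 at u = 0.5, v = -1.5, so each method should return values close to these. On harder problems the methods can disagree, which is one reason to compare them.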


Reproducing OLS Regression

Here we simply set $\lambda = 0$ and obtain the same estimates $\hat\beta$ as before. With $\lambda = 0$ the penalty term drops out of the objective, so the reported value of $\alpha$ is arbitrary (note that it is negative here):

GeneralRegression[Take[Y, 20], Take[X, 20], 0, {-20, 20}]

{4.52034, -5.47031, {3.00439, 1.50906, 0.0145471, 0.0383589, 1.9809, 0.0106091, 0.00378074, 0.00259506}}

Ridge Regression

Here we set $\lambda = 1$ and constrain $\alpha = 0$. Note that the value of the penalized WLS (12.134) is substantially larger than in the OLS case (4.52034), due to the penalty term $\lambda P_\alpha$:

GeneralRegression[Take[Y, 20], Take[X, 20], 1, {0, 0}]

{12.134, 0., {3.00387, 1.50879, 0.0145963, 0.0385133, 1.98083, 0.0105296, 0.00378282, 0.00267926}}
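For $\alpha = 0$ the objective is quadratic in $\beta$, so the minimizer can also be written in closed form. Setting the gradient of $\frac{1}{n}(Y - X\beta)^T(Y - X\beta) + \frac{\lambda}{2}\beta^T\beta$ to zero gives $(X^T X + \frac{n\lambda}{2} I)\,\beta = X^T Y$. The sketch below implements this as a cross-check on the numerical optimizer. RidgeClosedForm, xDemo and yDemo are illustrative names, and the data are freshly simulated rather than taken from the notebook.

```mathematica
(* Closed-form minimizer of the WLS objective with α = 0 (illustrative helper) *)
RidgeClosedForm[Y_, X_, λ_] := Module[
  {n = Length[Y], p = Last[Dimensions[X]], XT = Transpose[X]},
  LinearSolve[XT.X + (n λ/2) IdentityMatrix[p], XT.Y]
];

(* Fresh simulated sample with the same design as the example *)
SeedRandom[2];
xDemo = RandomReal[{-100, 100}, {20, 8}];
yDemo = xDemo.{3, 1.5, 0, 0, 2, 0, 0, 0} +
   RandomVariate[NormalDistribution[0, 3], 20];

bOLS = RidgeClosedForm[yDemo, xDemo, 0];    (* λ = 0 reduces to OLS *)
bRidge = RidgeClosedForm[yDemo, xDemo, 1];  (* λ = 1, as in the text *)

(* Largest change in any coefficient caused by the ridge penalty *)
Max[Abs[bRidge - bOLS]]
```

With regressors uniform on [-100, 100] and n = 20, the diagonal entries of $X^T X$ are of order 20 × 3,333 ≈ 67,000, while $n\lambda/2 = 10$. The shrinkage is therefore very small, which is consistent with the ridge estimates above differing from the OLS estimates only in the fourth significant digit.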

Lasso Regression

Here we set $\lambda = 1$ and constrain $\alpha = 1$. Note that the value of the penalized WLS (11.0841) is lower than for ridge regression (12.134):

GeneralRegression[Take[Y, 20], Take[X, 20], 1, {1, 1}]

{11.0841, 1., {3.00424, 1.50904, 0.0143872, 0.0381963, 1.98082, 0.010514, 0.00360641, 0.00248904}}
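The effect of the absolute-value penalty is easiest to see in the special case of a single regressor, which is a simplifying assumption made here for illustration. Writing $s = \frac{1}{n}\sum_i x_i^2$ and $c = \frac{1}{n}\sum_i x_i y_i$, the lasso objective and its minimizer are:

$$
\frac{1}{n}\sum_{i=1}^{n} (y_i - x_i\beta)^2 + \lambda|\beta|
= \text{const} - 2c\,\beta + s\,\beta^2 + \lambda|\beta|,
\qquad
\hat\beta = \frac{\operatorname{sign}(c)\,\max\!\left(|c| - \tfrac{\lambda}{2},\, 0\right)}{s}
$$

The estimate is set exactly to zero whenever $|c| \le \lambda/2$, and is otherwise the OLS estimate $c/s$ pulled towards zero by $\lambda/(2s)$. When $s$ is large relative to $\lambda$, as with regressors on the scale used in this example, that adjustment is small.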

Elastic Net Regression

Here we set $\lambda = 1$ and constrain $\alpha$ to lie in the range [0, 1]. In this case the optimal value is $\alpha = 1$, the same as for lasso regression:

GeneralRegression[Take[Y, 20], Take[X, 20], 1, {0, 1}]

{11.0841, 1., {3.00424, 1.50904, 0.0143872, 0.0381963, 1.98082, 0.010514, 0.00360641, 0.00248904}}

Generalized Regression

Here we set $\lambda = 1$ and constrain $\alpha$ to lie in a wider subset of $\mathbb{R}$, for example [-20, 20]:

GeneralRegression[Take[Y, 20], Take[X, 20], 1, {-20, 20}]

{9.42129, 20., {3.01909, 1.51991, 8.01608×10^-9, 1.28294×10^-8, 2.01397, 0.0370202, 6.15894×10^-9, 8.15124×10^-9}}
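To see why a value of $\alpha$ above 1 can lower the objective, consider the contribution of a single coefficient to the penalty at $\alpha = 20$, using the penalty definition given earlier:

$$
\frac{1}{2}(1 - 20)\,\beta_j^2 + 20\,|\beta_j| = 20\,|\beta_j| - 9.5\,\beta_j^2
$$

This is positive for $0 < |\beta_j| < 20/9.5 \approx 2.11$ and negative beyond that point. Small coefficients are penalized steeply, at a rate of about 20 per unit, and are driven towards zero, while large coefficients receive a negative penalty. At the true coefficients $\{3, 1.5, 0, 0, 2, 0, 0, 0\}$ the three nonzero terms are $-25.5$, $8.625$ and $2$, giving $P_{20} = -14.875$. Because the quadratic part of the penalty is negative for $\alpha > 1$, the objective is only bounded below when the curvature of the mean square error term outweighs it, which is the case for the regressors used here.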


Empirical Test

We conduct an empirical test of the accuracy of the various regression methods, by simulating 100 data sets

consisting of 220 observations (20 in-sample, 200 out-of-sample), with regressors and parameters as before. For

each of the regression methods we calculate the MSE from the out-of-sample data, using coefficients estimated

using the in-sample data.

First, create a function to calculate the Mean Square Error:

MSE[Y_, X_, b_] := Module[
  {nObs = Length[Y], Z = Y - b.Transpose[X]},
  Z.Z/nObs
]
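As a point of reference for the out-of-sample results, the sketch below evaluates this MSE function at the true coefficient vector on a fresh simulated sample. The names βTrue, xTest and yTest are illustrative, and the MSE definition is repeated so that the example is self-contained.

```mathematica
(* Same definition as above, repeated for self-containment *)
MSE[Y_, X_, b_] := Module[
  {nObs = Length[Y], Z = Y - b.Transpose[X]},
  Z.Z/nObs
];

SeedRandom[3];
βTrue = {3, 1.5, 0, 0, 2, 0, 0, 0};
xTest = RandomReal[{-100, 100}, {200, 8}];
yTest = xTest.βTrue + RandomVariate[NormalDistribution[0, 3], 200];

(* With the true coefficients, the MSE estimates the noise variance σ^2 = 9 *)
MSE[yTest, xTest, βTrue]
```

No estimated coefficient vector can be expected to do better than roughly 9 on average out of sample, so the gap between that floor and a method's MSE reflects estimation error in the coefficients.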

Now create a test program to run multiple samples:

i = 1; nEpochs = 100;
MSS0 = Table[0, {nEpochs}]; MSS1 = Table[0, {nEpochs}];
MSS2 = Table[0, {nEpochs}]; MSS3 = Table[0, {nEpochs}]; MSS4 = Table[0, {nEpochs}];
While[i <= nEpochs,
  ε = RandomVariate[NormalDistribution[0, σ], nObs];
  X = RandomReal[{-100, 100}, {nObs, 8}];
  Y = X.β + ε;
  Parallelize[
    (* b0: OLS, b1: ridge, b2: lasso, b3: elastic net, b4: generalized *)
    b0 = Last[GeneralRegression[Take[Y, 20], Take[X, 20], 0, {-20, 20}]];
    b1 = Last[GeneralRegression[Take[Y, 20], Take[X, 20], 1, {0, 0}]];
    b2 = Last[GeneralRegression[Take[Y, 20], Take[X, 20], 1, {1, 1}]];
    b3 = Last[GeneralRegression[Take[Y, 20], Take[X, 20], 1, {0, 1}]];
    b4 = Last[GeneralRegression[Take[Y, 20], Take[X, 20], 1, {-20, 20}]];
    (* out-of-sample MSE on the observations after the first 20 *)
    MSS0[[i]] = MSE[Drop[Y, 20], Drop[X, 20], b0];
    MSS1[[i]] = MSE[Drop[Y, 20], Drop[X, 20], b1];
    MSS2[[i]] = MSE[Drop[Y, 20], Drop[X, 20], b2];
    MSS3[[i]] = MSE[Drop[Y, 20], Drop[X, 20], b3];
    MSS4[[i]] = MSE[Drop[Y, 20], Drop[X, 20], b4]]; i++];
MSS = {MSS0, MSS1, MSS2, MSS3, MSS4};

The average out-of-sample MSE for each regression method is shown in the cell below. The average MSE for the generalized regression (13.23) is roughly 9 to 10% lower than for the other regression techniques (14.51 to 14.69).

NumberForm[{Mean[MSS0], Mean[MSS1], Mean[MSS2], Mean[MSS3], Mean[MSS4]}, {4, 2}]

{14.69, 14.66, 14.51, 14.51, 13.23}
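Whether a difference in average MSE is statistically meaningful depends on how much it varies across the simulated data sets. Since every method is fitted to the same samples, a paired comparison is a natural check. The sketch below uses a hypothetical helper, PairedSummary, and two short lists of made-up illustrative numbers that are not results from the notebook.

```mathematica
(* Mean and standard error of the paired differences a - b (illustrative helper) *)
PairedSummary[a_, b_] := Module[{d = a - b},
  {Mean[d], StandardDeviation[d]/Sqrt[Length[d]]}
];

(* Made-up numbers, for illustration only *)
PairedSummary[{14., 15., 16., 13.}, {13., 14., 14., 12.}]
(* {1.25, 0.25} *)
```

Applied to the simulation output, PairedSummary[MSS0, MSS4] would give the mean MSE reduction of the generalized regression relative to OLS together with its standard error. A mean difference several standard errors away from zero would support the claim of a lower MSE.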

The lower MSE is achieved by lower estimated values for the zero coefficients:


In[849]:=

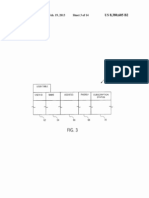

{b0, b1, b2, b3, b4} // MatrixForm

Out[849]//MatrixForm=

3.01483 1.51507 0.00115002 0.00488936 2.01666 0.00341592 0.0162198 0.0178543

3.01352 1.51359 0.000842353 0.00413787 2.01553 0.00262603 0.0161391 0.0176154

3.01417 1.51359 0.000769123 0.00413357 2.01575 0.00240096 0.0160634 0.0174478

3.01417 1.51359 0.000769123 0.00413357 2.01575 0.00240096 0.0160634 0.0174478

3.02687 1.51527 5.25723 10

10

0.00477497 2.02069 1.28321 10

7

0.0145281 0.0146673

A comparison of the histograms of the MSEs for each of the regression methods underscores the superiority of the

Generalized Regression technique:

SmoothHistogram[MSS, PlotLabel -> "Mean Square Errors (Out of Sample)"]

[Figure: smoothed histograms of the out-of-sample mean square errors for the five regression methods, titled "Mean Square Errors (Out of Sample)"]

References

Tong Zhang, Multi-Stage Convex Relaxation for Feature Selection, Neural Information Processing Systems (NIPS), pp. 1929-1936, 2010

Hui Zou and Trevor Hastie, Regularization and Variable Selection via the Elastic Net, J. R. Statist. Soc. B (2005) 67, Part 2, pp. 301-320
