11 Optimizing SQL Statements

During application programming, SQL statements are often written without sufficient regard to their subsequent performance. Expensive (long-running) SQL statements slow performance during production operation. They result in slow response times for individual programs and place excessive loads on the database server. Frequently, you will find that 50% of the database load can be traced to a few individual SQL statements. This chapter does not begin with the design of database applications; rather, it describes how you can identify, analyze, and optimize expensive SQL statements in a production system. In other words, what can be done if a problem has already occurred?

As the database and the number of users grow, so do the number of requests to the database and the search effort required for each database request. This is why expensive or inefficient SQL statements constitute one of the most significant causes of performance problems in large installations. The more a system grows, the more important it becomes to optimize SQL statements.

This chapter starts with detailed instructions for analyzing SQL statements, which build on the introductory sections in Chapter 2 on SQL statistics (Section 2.3.2, Identifying and Analyzing Expensive SQL Statements) and in Chapter 4 on the SQL trace (Section 4.3.2, Evaluating an SQL Trace). You can optimize the runtime using database indexes if the selection conditions for the database access are designed in such a way that only a small quantity of data is returned to the application program. To understand how indexes work and to evaluate their significance for performance, you require a brief theoretical introduction to tables, indexes, and the database optimizer. This is followed by a section that describes the practical administration of indexes and table statistics that are required for the database optimizer to decide on the appropriate index. The section on database indexes concludes with a description of the rules for good index design.


If a large quantity of data is transferred from the database to the application server, you can only achieve an optimization by rewriting the program or changing the users' approach to work. The third section of this chapter deals with these measures; this section is primarily aimed at ABAP developers. You'll first get an overview of the five golden rules of SQL programming, which are followed by examples, for instance, of database views, the FOR ALL ENTRIES clause, and the design of input screens to avoid performance problems.

Business warehouse applications are a special case, which frequently evaluate thousands to millions of data records. The next chapter describes the optimization options for these applications.

When Should You Read this Chapter?

You should read this chapter if you have identified expensive SQL statements in your SAP system and want to analyze and optimize them. You can read the first and second sections of this chapter without previous knowledge of ABAP programming, whereas the third section assumes a basic familiarity.

This chapter is not an introduction to developing SQL applications. For this, refer to ABAP textbooks, SQL textbooks, or SAP Online Help.

11.1 Identifying and Analyzing Expensive SQL Statements

The following sections describe how to identify and analyze expensive SQL statements.

11.1.1 Preliminary Analysis

The preliminary step in identifying and analyzing expensive SQL statements is to identify SQL statements for which optimization would be genuinely worthwhile. The preliminary analysis prevents you from wasting time with SQL statement optimizations that cannot produce more than trivial gains in performance.


For the preliminary analysis, there are two main techniques: an SQL trace or the SQL statistics. An SQL trace is useful if the program containing the expensive SQL statement has already been identified, for example, through the workload monitor, work process overview, or users' observations. You do not need any such prior information to analyze the SQL statistics. Using the SQL statistics enables you to order statements according to the system-wide load they are generating. For both analysis techniques, the sections that follow describe individual analysis steps.

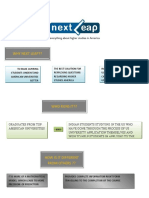

The flowchart in Figure 11.1 shows techniques for identifying expensive SQL statements that are worth optimizing. The following shapes are used in the diagram:

» A round-cornered rectangle indicates where a specific SAP performance monitor is started.

» A diamond shape indicates a decision point.

» The parallelogram indicates the point at which you have successfully identified expensive SQL statements that are worth optimizing.

Figure 11.1 Techniques for Identifying Expensive SQL Statements

Preliminary Analysis Using the SQL Trace

Create an SQL trace using Transaction ST05 as described in Chapter 4. Display the SQL trace results in one user session by selecting List Trace. The Basic SQL Trace List screen appears. Call Transaction ST05 in another user session and display the compressed summary of the results, as follows: Goto • Summary • Compress.

If you sort the data in the compressed summary according to elapsed database time, you can find the tables that were accessed for the longest times. Use these tables in the Basic SQL Trace List screen to look up the corresponding SQL statements. You should consider only these statements for further analysis.

In a third user session, get a list of all identical accesses from the Basic SQL Trace List screen by selecting Goto • Identical Selects. Compare these identical selects with the trace and the compressed summary. By totaling these durations, you can estimate roughly how much database time could be saved by avoiding multiple database accesses. Unless your savings in database time are sufficiently large, there is no need to perform optimization.
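The totaling step can be sketched in a few lines of Python. This is only an illustration: the row layout (table, WHERE clause, duration in microseconds) and the sample values are assumptions, not the actual export format of the SQL trace, and the sketch assumes that the first access of each identical group is unavoidable.

```python
from collections import defaultdict


def estimate_identical_select_savings(trace_rows):
    """Sum the time spent on repeated identical accesses.

    trace_rows: iterable of (table, where_clause, duration_us) tuples
    (a hypothetical layout, not the real trace format).
    """
    groups = defaultdict(list)
    for table, where_clause, duration_us in trace_rows:
        groups[(table, where_clause)].append(duration_us)

    savings = {}
    for key, durations in groups.items():
        if len(durations) > 1:
            # Assumption: the first access is needed, every repeat could be avoided.
            savings[key] = sum(durations[1:])
    return savings


# Hypothetical trace rows for illustration only.
trace = [
    ("MARA", "MATNR = '4711'", 1200),
    ("MARA", "MATNR = '4711'", 1100),
    ("MARA", "MATNR = '4711'", 1300),
    ("VBAK", "VBELN = '0001'", 900),
]
savings = estimate_identical_select_savings(trace)
total_saved_us = sum(savings.values())
print(savings, total_saved_us)
```

Comparing the total with the overall database time of the trace gives a rough idea of whether avoiding the repeated accesses is worth the effort.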

As a result of this preliminary analysis, you will have made a list of any statements you want to optimize.

Preliminary Analysis Using the SQL Statistics

Monitor the SQL statistics from the main screen in the database performance monitor (Transaction ST04) by selecting the Detail Analysis menu. Look under the heading Resource Consumption By. Then, for Oracle, select SQL Request; for Informix, select SQL Statement. In the dialog box that appears, select Enter. The screen Database Performance: Shared SQL appears. For an explanation of the screen, see Chapter 2. Expensive SQL statements are characterized by a large number of logical or physical database accesses. To create a prioritized list of statements in which the top-listed statements are potentially worth optimizing, sort the SQL statistics according to logical accesses (indicated, for example, by buffer gets), physical accesses (shown as disk reads), or runtime of the SQL statements.

Further Preliminary Analysis

Regardless of whether you begin your analysis from an SQL trace or the SQL statistics, before you proceed to a detailed analysis, perform the following checks:

» Create several SQL traces for different times and computers to verify the database times noted in the initial SQL trace. Determine whether there is a network problem or a temporary database overload.

» If the expensive SQL statement is accessing a buffered table, use the criteria presented in Chapter 9, SAP Buffering, to determine whether table buffering should be deactivated, that is, whether the respective buffer is too small or contains tables that should not be buffered because they are too large or too frequently changed.

» Check whether there are any applicable SAP Notes in the SAP Service Marketplace by using combinations of search terms, such as performance and the name of the respective table.

11.1.2 Detailed Analysis

After listing the SQL statements that are worth optimizing, perform a detailed analysis to decide on the optimization strategy. Optimization can take one of two forms: optimizing the ABAP program code related to an SQL statement or optimizing the database, for example, by creating indexes.

SQL statements that attempt to operate on a great number of data records in the database can only be optimized by making changes to the ABAP programming. The left-hand part of Figure 11.2 shows the activities of a statement that requires this kind of optimization. The stacked, horizontal black bars depict data. Look at the number of data records depicted both in the database process and in the application server; this shows that the statement has transferred a lot of data to the application server. Therefore, a large number of data blocks (the pale rectangles containing the data bars) were read from the database buffer or the hard drive. For a statement like this, the statement's developer should check whether the program actually needs all of this data or whether a significant amount of the data is unnecessary. The amount of data that constitutes a significant amount varies. For a dialog transaction with an expected response time of around one second, 500 records transferred by an SQL statement is a significant number. For a reporting transaction, 10,000 records would normally be considered a significant number. For programs running background jobs (for example, in the SAP application module CO), the number of records that constitutes a significant number may be considerably larger.

Figure 11.2 Expensive SQL Statements (Left: The Statement Tries to Transfer Too Much Data. Right: The Statement Unnecessarily Reads Too Many Data Blocks.)

In contrast, an expensive SQL statement of the type requiring database optimization is shown in the right-hand part of Figure 11.2. Although the SQL statement transferred only a few data records (represented by the horizontal black bars) to the application server, the selection criteria have forced the database process to read many data blocks (the pale rectangles, some of which contain the data bars). Many of these data blocks do not contain any sought-after data; therefore, the search strategy is clearly inefficient. To simplify the search and optimize runtime, it may be helpful to create a new secondary index, or a suitable index may already exist but is not being used.


In this chapter, we'll analyze the two types of expensive SQL statements.

A third type of expensive SQL statement is those with aggregate functions (COUNT, SUM, MAX, MIN, AVG), which can be found in BW applications, for example. Here as well, a large number of data records is read on the database and a small number is transferred. They are therefore similar to expensive SQL statements of type II; however, the background is different. For statements that are identified as expensive SQL statements of type II, a large number of data records is read on the database due to an inefficient search strategy; for SQL statements with aggregate functions, many data records are read to form aggregates. This type of evaluation is discussed in the next chapter.

Detailed Analysis Using an SQL Trace

For each line in the results of an SQL trace (Transaction ST05), looking at the corresponding database times enables you to determine whether you can optimize a statement by using database techniques such as creating new indexes. Divide the figure in the Duration column by the entry in the Rec (records) column. If the result is an average response time of less than 5,000 microseconds for each record, this can be considered optimal. Each FETCH should require less than about 100,000 microseconds. If the average response time is optimal, but the runtime for the SQL statement is still high due to the large volume of data being transferred, only the ABAP program itself can be optimized. For an expensive SQL statement in which a FETCH requires more than 250,000 microseconds but that targets only a few records, a new index may improve the runtime.

To do this, select ABAP Display from the Basic SQL Trace List screen to go to the ABAP code of the traced transaction, and continue your analysis there.
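The rules of thumb for evaluating a trace line can be written down as a small Python function. This is a minimal sketch: the thresholds come from the text above, whereas the cut-off for "a few records" (here 10) and the result labels are assumptions chosen for illustration.

```python
# Thresholds taken from the rules of thumb in the text (microseconds).
OPTIMAL_US_PER_RECORD = 5_000
SLOW_FETCH_US = 250_000
# Assumption: the text does not define "a few records"; 10 is an arbitrary cut-off.
FEW_RECORDS = 10


def assess_trace_line(duration_us, records):
    """Classify one FETCH line of an SQL trace (Duration and Rec columns)."""
    if duration_us > SLOW_FETCH_US and records <= FEW_RECORDS:
        # Slow FETCH for few records: a new index may improve the runtime.
        return "index candidate"
    if records > 0 and duration_us / records < OPTIMAL_US_PER_RECORD:
        # Database access is fine; a high total runtime can only be
        # reduced in the ABAP program (less data, fewer calls).
        return "optimal per record"
    return "inconclusive"


print(assess_trace_line(300_000, 2))
print(assess_trace_line(400_000, 1_000))
print(assess_trace_line(60_000, 5))
```

The function only sorts trace lines into rough categories; whether a statement is actually worth optimizing still depends on its share of the total database time.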

Detailed Analysis Using the SQL Statistics

The evaluation of the optimization potential based on the data of the SQL statistics depends on the statistics that the database or the database interface provides for the respective database system. Many database systems indicate the average response time for a statement, and you can calculate the average number of records read per statement (see Section 2.3.2, Identifying and Analyzing Expensive SQL Statements). You can assume a threshold value of 2 ms per record as a criterion for good performance. Statements that fall below this threshold value cannot be optimized using database means; here you can only check whether you can reduce the number of calls by changing the application coding. For statements whose average execution times clearly exceed this value, you can check whether you can improve performance with database means, for instance, indexing.

Another criterion for evaluating an SQL statement's optimization poten-

tial is the number of logical read accesses for each execution (indicated

as Gets/Execution for Oracle) and the number of logical read accesses

for each transferred record (indicated as Bufgets/Records for Oracle; see

Appendix B, which includes information on the different databases).

However, statements that read an average of less than 10 blocks or pages

for each execution and appear at the top of the sorted SQL statistics list

due to high execution frequency cannot be optimized by using database

techniques such as new indexes. Instead, consider improving the ABAP

program code or using the program in a different way to reduce the

execution frequency.

Good examples of SQL statements that can be optimized by database

techniques (for example, creating or modifying new indexes) include

SQL statements that require many logical read accesses to transfer a few

records. These are SQL statements for which the SQL statistics shows a

value of 10 or higher for Bufgets/Records.
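The two criteria based on logical read accesses can be combined into a simple decision function. The following Python sketch uses the thresholds from the text (10 blocks per execution, 10 buffer gets per record); the function name, the parameter names, and the third category for statements that read many blocks because they transfer many records are assumptions made for this illustration.

```python
def assess_statistics_entry(buffer_gets, executions, records):
    """Classify one entry of the SQL statistics by its logical read accesses."""
    if executions <= 0:
        raise ValueError("executions must be positive")

    gets_per_execution = buffer_gets / executions
    gets_per_record = buffer_gets / records if records else float("inf")

    if gets_per_execution < 10:
        # Cheap per call; the load comes from the high execution frequency.
        return "reduce execution frequency"
    if gets_per_record >= 10:
        # Many blocks read for few records: inefficient search strategy.
        return "index candidate"
    # Assumption: many blocks are read because many records are transferred.
    return "check data volume"


print(assess_statistics_entry(5_000, 1_000, 1_000))
print(assess_statistics_entry(2_000_000, 100, 500))
print(assess_statistics_entry(100_000, 10, 50_000))
```

The first and third outcomes point to the ABAP program or its usage; only the second points to database techniques such as indexes.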

For SQL statements that need a change to the ABAP code, identify the various programs that use the SQL statement and improve the coding in each program. You can go directly from the SQL statistics to the ABAP coding (ABAP Coding button).

Further Detailed Analysis

After determining whether the SQL statement can be optimized via a

new index or changes to the program code, you will need more detailed

information about the relevant ABAP program. The following information is required for optimizing the SQL statements:


» The purpose of the program

» The tables that are involved and their contents, for example, transaction data, master data, or customizing data, and the size of the tables and whether the current size matches the size originally expected for the table

» The users of the program and the developer responsible for a customer-developed program

After getting this information, you can begin tuning the SQL statement as described in the following sections.

11.2 Optimizing SQL Statements Through Secondary Indexes

To optimize SQL statements using database techniques (such as creating new indexes), you must have a basic understanding of how data is organized in a relational database.

11.2.1 Database Organization Fundamentals

The fundamentals of relational database organization can be explained via a simple analogy based on a database table: the Yellow Pages telephone book.

When you look up businesses in a Yellow Pages telephone book, you probably never consider reading the entire telephone book from cover to cover. You more likely open the book somewhere in the middle and zero in on the required business by searching back and forth a few times, using the fact that the names are sorted alphabetically. If a relational database, however, tried reading the same kind of Yellow Pages data in a database table, it would find that the data is generally not sorted; instead, it is stored in a type of linked list. New data is added (unsorted) to the end of the table or entered in empty spaces (where records were deleted from the table). This unsorted, telephone book version of a database table is laid out in Table 11.1.


Exceptions are the database systems SAP MaxDB and Microsoft SQL Server, which store tables in a sorted manner. Appendix B deals with their specific characteristics.

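| Page | Column | Position | Business Type | Business Name | City | Street | Telephone No. |
|---|---|---|---|---|---|---|---|
| 15 | 2 | 54 | Video rentals | Video Depot | Boston | Commonwealth Ave. | (617) 555-5252 |
| 46 | 1 | 23 | Florist | Boston Blossoms | Boston | Oxford Street | (617) 555-1212 |
| 46 | 1 | 24 | Video rentals | Beacon Hill Video | Boston | Cambridge Street | (617) 555-3232 |

Table 11.1 Example of an Unsorted Database Table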

One way for the database to deal with an unsorted table is by using a time-consuming sequential read, record by record. To save the database from having to perform a sequential read for every SQL statement query, each database table has a corresponding primary index. In this example, the primary index might be a list that is sorted alphabetically by business type and name and includes the location of each entry in the telephone book (see Table 11.2).

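| Business Type | Business Name | Page | Column | Position |
|---|---|---|---|---|
| Florist | Boston Blossoms | 46 | 1 | 23 |
| Video rentals | Beacon Hill Video | 46 | 1 | 24 |
| Video rentals | Video Depot | 15 | 2 | 54 |

Table 11.2 Primary Index Corresponding to the Sample Table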


Because this list is sorted, you (or the database) do not need to read the entire list sequentially. Instead, you can expedite your search by first searching this index for the business type and name, for example, video rentals and Video Depot, and then using the corresponding page, column, and position to locate the entry in the Yellow Pages telephone book (or corresponding database table). In a database, the position data consists of the file number, block number, position in the block, and so on. This type of data about the position in a database is called the row ID. The Business Type and Business Name columns in the phone book correspond to the primary index fields.
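The difference between a sequential read and a lookup through a sorted primary index can be illustrated with a short Python sketch. It is only an analogy: the list position stands in for the row ID, the sorted list stands in for the index, and all names are chosen for this example. Real databases typically use tree structures rather than a flat sorted list.

```python
import bisect

# Unsorted "telephone book" table; the list position plays the role of the row ID.
table = [
    ("Video rentals", "Video Depot", "Boston", "Commonwealth Ave.", "(617) 555-5252"),
    ("Florist", "Boston Blossoms", "Boston", "Oxford Street", "(617) 555-1212"),
    ("Video rentals", "Beacon Hill Video", "Boston", "Cambridge Street", "(617) 555-3232"),
]

# Primary index: sorted by (business type, business name), pointing to the row ID.
primary_index = sorted(((row[0], row[1]), row_id) for row_id, row in enumerate(table))
index_keys = [key for key, _ in primary_index]


def lookup(business_type, business_name):
    """Binary search in the index, then one direct access to the table."""
    key = (business_type, business_name)
    pos = bisect.bisect_left(index_keys, key)
    if pos < len(index_keys) and index_keys[pos] == key:
        row_id = primary_index[pos][1]
        return table[row_id]
    return None


print(lookup("Video rentals", "Video Depot"))
print(lookup("Florist", "Unknown Shop"))
```

The binary search inspects only a handful of index entries even for a very large index, which is why the index lookup scales so much better than reading the table record by record.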

A primary index is always unique; that is, for each combination of index

fields, there is only one table entry. If our telephone book example was

an actual database index, there could be no two businesses with the

same name. In reality, this might not be true; a phone book might, for

example, list two separate outlets for a florist in the same area and with

the same name.

In our example, the primary index helps you only if you already know

the type and name of the business you are looking for. However, if you

only have a telephone number and want to look up the business that

corresponds to it, the primary index is not useful, because it does not

contain the field Telephone No. You would have to read the entire tele-

phone book, entry by entry. To simplify this kind of search query, you

can define a secondary index that contains the field Telephone No. and

the corresponding row IDs or the primary key. Similarly, to look up all

of the businesses on a street in a particular city, you can create a sorted

secondary index with the City and Street fields, and the corresponding

row IDs or the primary key. Unlike the primary index, secondary indexes

are as a rule not unique; that is, there can be multiple similar entries in

the secondary index. For example, “Boston, Cambridge Street” will occur

several times in the index if there are several businesses on that street.

The secondary index based on telephone numbers, however, would be

unique, because telephone numbers are unique.
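A non-unique secondary index can be sketched in the same spirit. In the following Python example, the index on city and street is a sorted list of keys with row IDs, and a lookup returns every matching row. The fourth table entry is a made-up business added only so that one key occurs twice; all names are assumptions for this illustration.

```python
import bisect

# (business type, business name, city, street); list position = row ID.
table = [
    ("Video rentals", "Video Depot", "Boston", "Commonwealth Ave."),
    ("Florist", "Boston Blossoms", "Boston", "Oxford Street"),
    ("Video rentals", "Beacon Hill Video", "Boston", "Cambridge Street"),
    ("Bakery", "Example Bakery", "Boston", "Cambridge Street"),  # hypothetical entry
]

# Secondary index on (city, street); the same key may occur several times.
secondary_index = sorted(((row[2], row[3]), row_id) for row_id, row in enumerate(table))
index_keys = [key for key, _ in secondary_index]


def find_by_city_and_street(city, street):
    """Return all rows whose (city, street) matches, using the sorted index."""
    key = (city, street)
    first = bisect.bisect_left(index_keys, key)
    last = bisect.bisect_right(index_keys, key)
    return [table[row_id] for _, row_id in secondary_index[first:last]]


print(find_by_city_and_street("Boston", "Cambridge Street"))
```

Because the matching index entries lie next to each other in the sorted list, the database can read them as one contiguous range instead of searching the whole table.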

Execution Plan

If you are looking up all video rental stores in Boston, the corresponding SQL statement would be as follows:

SELECT * FROM telephone_book WHERE business_type = 'Video rentals' AND city = 'Boston'

In this case, the database has three possible search strategies:

1. Search the entire table.

2. Use the primary index for the search.

3. Use a secondary index based on the field City.

The decision as to which strategy to use is made by the database optimizer program, which considers each access path and formulates an execution plan for the SQL statement. The optimizer is a part of the database program. To create the execution plan, the optimizer parses the SQL statement. To look at the execution plan (also called the explain plan) in the SAP system, use one of the following: SQL trace (Transaction ST05), the database process monitor (accessed from the Detail Analysis menu of Transaction ST04), or the Explain function in the SQL statistics (also accessed from the Detail Analysis menu of Transaction ST04).

The examples presented here are limited to SQL statements that access a

table and do not require joins between multiple tables. In addition, the

examples are presented using Oracle access types, which include index

unique scan, index range scan, and full table scan. Appendix B explains

corresponding access types for other database systems.

An index unique scan is performed when the SQL statement's WHERE clause specifies all primary index fields through an EQUALS condition. For example:

SELECT * FROM telephone_book WHERE business_type = 'Video rentals' AND business_name = 'Beacon Hill Video'

The database responds to an index unique scan by locating a maximum of one record, hence the name unique. The execution plan is as follows:

TABLE ACCESS BY ROWID telephone_book
  INDEX UNIQUE SCAN telephone_book__0

The database begins by reading the row in the execution plan that is indented farthest to the right, here INDEX UNIQUE SCAN telephone_book__0. This row indicates that the search will use the primary index telephone_book__0. After finding an appropriate entry in the primary index, the database then uses the row ID indicated in the primary index to access the table telephone_book. The index unique scan is the most efficient type of table access; it reads the fewest data blocks in the database.

For the following SQL statement, a full table scan is performed if there is no index based on street names:

SELECT * FROM telephone_book WHERE street = 'Cambridge Street'

In this case, the execution plan contains the row TABLE ACCESS FULL telephone_book. The full table scan is a very expensive search strategy for tables greater than 1 MB and causes a high database load.

An index range scan is performed if there is an index for the search, but the results are not unique; that is, multiple rows of the index satisfy the search criteria. An index range scan using the primary index is performed when the WHERE clause does not specify all of the fields of the primary index. For example:

SELECT * FROM telephone_book WHERE business_type = 'Florist'

This SQL statement automatically results in a search using the primary index. Because the WHERE clause specifies not a single record but an area of the primary index, the table area for business type Florist is read record by record. Therefore, this search strategy is called an index range scan. The results of the search are not unique, and zero to n records may be found. An index range scan is also performed via a secondary index that is not unique, even if all of the fields in the secondary index are specified. Such a search would result from the following SQL statement, which mentions both index fields of the secondary index:

SELECT * FROM telephone_book WHERE city = 'Boston' AND street = 'Cambridge Street'

The execution plan is as follows:

TABLE ACCESS BY ROWID telephone_book
  INDEX RANGE SCAN telephone_book__8

The database first accesses the secondary index telephone_book__8. Using the records found in the index, the database then directly accesses each relevant record in the table.
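The access types described above can be tried out with any database that exposes its execution plan. The following Python sketch uses the SQLite module from the standard library as a stand-in; this is an assumption made for the sake of a runnable example. SQLite reports SEARCH and SCAN rather than Oracle's INDEX UNIQUE SCAN, INDEX RANGE SCAN, and TABLE ACCESS FULL, and the table layout and the index name are invented for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE telephone_book (
    business_type TEXT,
    business_name TEXT,
    city          TEXT,
    street        TEXT,
    telephone_no  TEXT,
    PRIMARY KEY (business_type, business_name)
);
CREATE INDEX telephone_book_city_street ON telephone_book (city, street);
""")


def plan(sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return [row[3] for row in rows]


# All primary index fields given with equality: lookup via the primary index.
unique_plan = plan(
    "SELECT * FROM telephone_book "
    "WHERE business_type = 'Video rentals' AND business_name = 'Beacon Hill Video'"
)
# Both fields of the non-unique secondary index: range lookup via that index.
range_plan = plan(
    "SELECT * FROM telephone_book "
    "WHERE city = 'Boston' AND street = 'Cambridge Street'"
)
# No index starts with street: the whole table is scanned.
full_plan = plan("SELECT * FROM telephone_book WHERE street = 'Cambridge Street'")

for label, p in (("unique", unique_plan), ("range", range_plan), ("full", full_plan)):
    print(label, p)
```

The exact wording of the plan output differs between SQLite versions, so the sketch prints the plans instead of relying on fixed strings.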


Without more information, it is difficult to determine whether an index range scan is effective. The following SQL statement also requires an index range scan: SELECT * FROM telephone_book WHERE name LIKE 'B%'. This access is very expensive, because only the first byte of data in the index key field is used for the search.

Consider the following SQL statement (Listing 11.1):

SQL Statement
SELECT * FROM mara WHERE mandt = :A0 AND bismt = :A1

Execution plan
TABLE ACCESS BY ROWID mara
  INDEX RANGE SCAN mara__0

Listing 11.1 Index Range Scan

The primary index MARA__0 contains the MANDT and MATNR fields. Because MATNR is not mentioned in the WHERE clause, MANDT is the only field available to help the database limit its search in the index. MANDT is the field for the client. If there is only one production client in this particular SAP system, the WHERE clause with MANDT = :A0 will cause the entire table to be searched. In this case, a full table scan would be more effective than an index search; a full table scan reads only the whole table, but an index search reads both the index and the table. You can therefore determine which of the strategies is more cost-effective only if you have additional information, such as selectivity (see the following discussion on optimization). In this example, you need to know that there is only one client in the SAP system. (For more on this example, see the end of the next section.)

Methodology for Database Optimization

Exactly how an optimizer program functions is a well-guarded secret among database manufacturers. However, broadly speaking, there are two types of optimizers: rule-based optimizers (RBOs) and cost-based optimizers (CBOs).

All database systems used in conjunction with an SAP system use a cost-based optimizer.

An RBO bases its execution plan for a given SQL statement on the WHERE clause and the available indexes. The most important criteria in the WHERE clause are the fields that are specified with an EQUALS condition and that appear in an index, as follows:

SELECT * FROM telephone_book WHERE business_type = 'Pizzeria' AND business_name = 'Blue Hill House of Pizza' AND city = 'Roxbury'

In this example, the optimizer decides on the primary index rather than the secondary index, because two primary index fields are specified (business type and business name), whereas only one secondary index field is specified (city). One limit to index use is that a field can be used for an index search only if all of the fields to the left of that field in the index are also specified in the WHERE clause with an equals condition.

Consider two sample SQL statements:

SELECT * FROM telephone_book WHERE business_type LIKE 'P%' AND business_name = 'Blue Hill House of Pizza'

and

SELECT * FROM telephone_book WHERE business_name = 'Blue Hill House of Pizza'

For either of these statements, the condition business_name = 'Blue Hill House of Pizza' is of no use to an index search. For the first example, all business types beginning with P in the index will be read. For the second example, all entries in the index are read, because the business type field is missing from the WHERE clause. Whereas an index search is therefore greatly influenced by the position of a field in the index (in relation to the leftmost field), the order in which fields are mentioned in the WHERE clause is irrelevant.
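The rule about the leftmost index fields can be expressed as a small Python function. This is a simplified sketch of the rule as stated above, not of any real optimizer: the function name and the representation of the WHERE clause as a mapping from field name to comparison operator are assumptions for this illustration.

```python
def usable_index_fields(index_fields, conditions):
    """Return the index fields that can narrow an index search.

    index_fields: field names in index order (leftmost first).
    conditions:   mapping of field name to operator in the WHERE clause,
                  for example {"business_type": "=", "city": "="}.
    """
    usable = []
    for field in index_fields:
        operator = conditions.get(field)
        if operator is None:
            break  # gap in the index: fields further right are of no use
        usable.append(field)
        if operator != "=":
            break  # after a non-equals condition, fields to the right do not help
    return usable


primary_index = ("business_type", "business_name")

# Both fields with equals conditions: the full index can be used.
print(usable_index_fields(primary_index, {"business_name": "=", "business_type": "="}))
# LIKE on the leftmost field: only business_type narrows the search.
print(usable_index_fields(primary_index, {"business_type": "LIKE", "business_name": "="}))
# Leftmost field missing: the index cannot narrow the search at all.
print(usable_index_fields(primary_index, {"business_name": "="}))
```

Note that the order of the entries in the conditions mapping has no influence on the result, which mirrors the statement that the order of fields in the WHERE clause does not matter.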

To create the execution plan for an SQL statement, the CBO considers the same criteria as described for the RBO, plus the following criteria:

» Table size
For small tables, the CBO avoids indexes in favor of a more efficient full table scan. Large tables are more likely to be accessed through the index.

» Selectivity of the index fields
The selectivity of a given index field is the average size of the portion of a table that is read when an SQL statement searches for a particular, distinct value in that field. For example, if there are 200 business types and 10,000 actual businesses listed in the telephone book, then the selectivity of the field Business Type is 10,000 divided by 200, which is 50 (or 0.5% of 10,000). The larger the number of distinct values in a field, the higher the selectivity and the more likely the optimizer will use the index based on that field. Tests with various database systems have shown that an index is used only if the CBO estimates that, on average, less than 5 to 10% of the entire table has to be read. Otherwise, the optimizer will decide on the full table scan. Therefore, the number of distinct values per field should be considerably high.

» Physical storage
The optimizer considers how many index data blocks or pages must be read physically on the hard drive. The more physical memory there is that needs to be read, the less likely it is that an index is used. The amount of physical memory that needs to be read may be increased by database fragmentation (that is, a low fill level in the individual index blocks).

» Distribution of field values
Most database systems also consider the distribution of field values within a table column, that is, whether each distinct value is represented in an equivalent number of data records or if some values dominate. The optimizer's decision is based on the evaluation of data statistics, such as with a histogram or spot checks to determine the distribution of values across a column.

» Spot checks at time of execution
Some database systems (for example, MaxDB and SQL Server) decide which access strategy is used at the time of execution, not during parsing. During parsing, the values satisfying the WHERE clause are still unknown to the database. At the time of execution, however, an uneven distribution of values in a particular field can be taken into account by a spot check.
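The selectivity criterion from the list above can be reproduced with a few lines of Python. The sketch uses the SQLite module from the standard library and generated sample data that matches the example (10,000 businesses, 200 business types); the table name, the field names, the helper function, and the choice of the lower 5% bound are assumptions made for this illustration, and a real cost-based optimizer works from stored table statistics rather than from counting rows on the fly.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE telephone_book (business_type TEXT, business_name TEXT)")
# Generated sample data: 10,000 businesses spread evenly over 200 business types.
conn.executemany(
    "INSERT INTO telephone_book VALUES (?, ?)",
    [(f"type_{i % 200}", f"business_{i}") for i in range(10_000)],
)


def average_rows_per_value(table, field):
    """Return (average rows per distinct value, same figure as a fraction of the table).

    The identifiers are interpolated directly into the SQL text, which is
    acceptable only because this sketch uses fixed, trusted names.
    """
    total, distinct = conn.execute(
        f"SELECT COUNT(*), COUNT(DISTINCT {field}) FROM {table}"
    ).fetchone()
    rows_per_value = total / distinct
    return rows_per_value, rows_per_value / total


rows_per_value, fraction = average_rows_per_value("telephone_book", "business_type")
print(rows_per_value, fraction)

# Rule of thumb from the text: an index is only attractive if clearly less
# than 5 to 10% of the table has to be read (lower bound used here).
index_is_attractive = fraction < 0.05
print(index_is_attractive)
```

For the field business_name, which is distinct for every row in this sample, the same function yields one row per value, which is the best case for an index.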

To determine the number of distinct values per field in a database table,
use the following SQL statement:

SELECT COUNT(DISTINCT <dbfield>) FROM <dbtable>

» Database field
If this field is empty, the entry applies to all database types. If a value
is entered in this field, it applies only to the specified database type.

» Usage Type field
The O entry means the statistics are used only by the optimizer; the A
entry indicates that extended statistics are to be calculated
for the application monitor, which can be very time-consuming.

» Active column
The default setting is A; that is, the table is analyzed if
the check run revealed that new statistics are necessary. U means the
statistics are always updated, that is, independent of the check, which
prolongs the runtime of the statistics run. The analysis excludes tables
with the entries N (no statistics are created, existing ones are deleted)
or R (statistics are generated temporarily for the application monitor
and then deleted).

» ToDo column
In this column, the control table DBSTATC sets an X if statistics for a
given table are to be generated the next time the generation program
runs.


» Method field
This field is relevant only for the Oracle database system. It describes
the statistics creation method.

For the database administration of Oracle databases, you can also use the
SAPDBA utility or, as of SAP Basis Version 6.10, the BRCONNECT program.
For each run of the statistics generation program, you can monitor the
logs created:

1. To monitor these logs after a run of the statistics generation program
SAPDBA for an Oracle database, select Tools • CCMS • DB Administration •
DBA Logs.

2. Then select DB Optimizer.

The resulting screen might show, for example, the following
information:

Beginning of Action    End of Action          Fct   Object     RC
24.04.1998 17:10:12    24.04.1998 17:35:01    opt   PSAP%      0000
24.04.1998 17:36:30    24.04.1998 18:26:25    aly   DBSTATCO   0000

In the first row, the value opt in the column Fct indicates that a
requirement analysis was performed. The value PSAP% in the column
Object indicates that the requirement analysis was performed for all
tables in the database. Compare the beginning and ending times in
the first row, and you can see that the requirement analysis took 25
minutes.

In the second row, the value aly in the column Fct indicates that a
table analysis run was performed. The value DBSTATCO in the column
Object indicates that the table analysis was performed for all relevant
tables. (These are all tables listed in the control table DBSTATC,
usually only a small percentage of the total database tables.) Comparing
the beginning and ending times in the second row, you can see that
the table analysis took about 50 minutes. The operations in both rows
ended successfully, as indicated by the value 0000 in the column RC.
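The runtimes can also be calculated rather than read off by eye. The following is a small sketch in Python; the helper name duration_minutes is an assumption for illustration, and the timestamps are the ones from the sample log.

```python
from datetime import datetime

# Timestamp layout used in the sample DBA log (day.month.year hour:minute:second).
LOG_FORMAT = "%d.%m.%Y %H:%M:%S"


def duration_minutes(begin: str, end: str) -> float:
    """Return the elapsed time between two log timestamps in minutes."""
    delta = datetime.strptime(end, LOG_FORMAT) - datetime.strptime(begin, LOG_FORMAT)
    return delta.total_seconds() / 60


# Requirement analysis (opt) and table analysis (aly) from the sample log.
opt_minutes = duration_minutes("24.04.1998 17:10:12", "24.04.1998 17:35:01")
aly_minutes = duration_minutes("24.04.1998 17:36:30", "24.04.1998 18:26:25")

print(round(opt_minutes, 1), round(aly_minutes, 1))
```

Tracking these two durations over several runs shows whether the statistics run is getting longer as the database grows.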

SAP tools for generating table access statistics are specifically adapted to

the requirements of the SAP system. Certain tables are excluded from the

creation of statistics, because generating statistics for those tables would

be superfluous or would reduce performance. Ensure that the table


access statistics are generated only by the generation program released

specifically for SAP.

When an SAP system is used in conjunction with Oracle databases, no
statistics are created for pooled and clustered tables, such as the update
tables VBMOD, VBHDR, and VBDATA. For these tables, the Active field
in Transaction DB21 displays the entry R.

Therefore, you must make sure to generate the table access statistics
only using SAP tools. For further information, refer to the SAP database
administration online Help.

To check whether statistics were generated for a particular table, you can
use Transaction DB20. This transaction indicates whether the statistics
are current (that is, the deviation between the number of entries in the
database and the last check) and when the last update run took place.
The system also displays the settings for the table from table DBSTATC.
With this function you can re-create statistics.

Whenever you create new tables or indexes, you should then use
Transaction DB20 to update the statistics manually, so that the optimizer
has a sound evaluation basis for calculating the execution plans.

In the DBA Cockpit, you can find enhanced, database-specific
information for some database systems. Examples include the following:

» For Oracle, in the Space area: • Table Columns

» For Microsoft SQL Server, in the Space area:

» When optimizing customer-developed SQL statements, try to avoid
creating secondary indexes on SAP transaction data tables. As a
rule, transaction data tables grow linearly over time and cause a
corresponding growth in the size of related secondary indexes.
Therefore, searching via a secondary index will eventually result in
an SQL statement that runs increasingly slowly. For this reason, SAP
uses special search techniques for transaction data, such as matchcode
tables and SAP business index tables (for example, the delivery
due index).

» If the SQL statement originates from a customer-developed program,
rather than create a new index, you may be able to do one of the
following:

  » Rewrite the ABAP program in such a way that an available index
  can be used.

  » Adapt an available index in such a way that it can be used.

» Never create a secondary index on SAP Basis tables without an explicit
recommendation from SAP. Examples of these tables include NAST
and tables beginning with D010, D020, and DD.

Rules for Creating Secondary Indexes

The following basic rules govern secondary index design. For primary
indexes, in addition to these rules, there are other considerations related
to the principles of table construction:

» Rule 1: An index is useful only if each corresponding SQL statement
selects just a small part of a table. If the SQL statement that searches
according to a particular index field causes more than 5 to 10% of the
entire index to be read, the cost-based optimizer will not consider the
index useful and will choose the full table scan as the most effective
access method. (The value of 5% should be considered only as an
estimate.)
Examples of selective fields include document numbers, material
numbers, and customer numbers. Examples of nonselective fields
include SAP client IDs, company codes or plant IDs, and account
status.

» Rule 2: Include only a few fields in the index. As a rule, an index
should contain no more than four fields. Too many fields in the index
lead to the following effects:

  » Change operations to tables take longer, because the index must be
  changed accordingly.

  » More storage space is used in the database. The large volume of
  data in the index reduces the chance that the optimizer will regard
  using the index as economical.

  » The parsing time for an SQL statement increases significantly,
  especially if the statement accesses multiple tables with numerous
  indexes, and the tables must be linked with a join operation.

» Rule 3: Position selective fields to the left in an index. To speed up
accesses through an index based on several fields, the most selective
fields should be positioned farthest toward the left in the index.

» Rule 4: Exceptions to rules 1, 2, and 3. To support the optimizer in
choosing an index, it is sometimes necessary to use nonselective fields
in the index in a way that contradicts rules 1 to 3. Examples of such
fields typically include the fields for client ID (Mandt field) and
company code (Bukrs field).

» Rule 5: Indexes should be disjunct. Avoid creating nondisjunct
indexes, that is, two or more indexes with generally the same fields.

» Rule 6: Create only a few indexes per table. Despite the fact that the
ABAP Dictionary defines a maximum of 16 indexes for each table, as a
rule, you should not create more than 5 indexes. One exception is for a
table that is used mainly for reading, such as a table containing master
data. Having too many indexes causes problems similar to those
resulting from an index with too many fields. There is also an increased
risk that the optimizer will choose the wrong index.

Keep in mind that every rule has exceptions. Sometimes you can find
the optimal index combination only by trial and error. Generally,
experimenting with indexes is considered safe, as long as you keep the
following points in mind:

» Never change indexes for tables larger than 10MB during company
business hours. Creating or changing an index can take from several
minutes to several hours and blocks the entire table. This can cause
serious performance problems during production operation.

» After creating or changing an index, always check whether the
optimizer uses this index in the manner intended. Ensure that
the new index does not result in poor choices for other SQL
statements (discussed later on).

Optimizing SQL Statements (Where Clause)

Before creating a new index, you should carefully check whether it would

be possible to rewrite the SQL statement so that an available index can

be efficiently used. The following example will demonstrate this point.

Example: Missing Unselective Field in the Where Clause

While analyzing expensive SQL statements in customer-specific code, you
notice the following statement:

SELECT * FROM bkpf WHERE mandt = :A0 AND belnr = :A1

Table BKPF contains FI invoice headers and thus can be quite large. The
Belnr field is the invoice number. Therefore, access appears to be very
selective. Detailed analysis reveals that there is just one (primary) index
with the Mandt (client), Bukrs (company code), and Belnr (document
number) fields. Because the Bukrs field is not in the SQL statement, the
database cannot use the fields that follow it in the index for the search.
In our example, the highly selective Belnr field cannot be used for the
index search, and the entire table must be searched sequentially.

These programming errors occur frequently when the unselective field
(the company code in this case) is not used in a system (because there
is only one company code in the customer system). The remedy is simple:
An Equal or In condition on the unselective field can be added to the
Where clause. The efficient SQL statement would be:

SELECT * FROM bkpf WHERE mandt = :A0 AND bukrs = :A1 AND
belnr = :A2

Alternatively, when the company code is not known to the program
at the time of execution, but the developer is sure that only a certain
number of company codes exist in the system, then the statement
would be:

SELECT * FROM bkpf WHERE mandt = :A0 AND belnr = :A2 AND
bukrs IN (:A3, :A4, :A5, ...)

The sequence of AND-linked partial clauses within the Where clause does
not matter. The programming example for index support can be found
in Transaction SE30 under Tips and Tricks.
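The effect of a missing leading index field can be observed in any database that exposes its query plan. The following is a minimal sketch in Python with SQLite as a stand-in; the reduced bkpf table, the index name bkpf_0, and the sample values are assumptions for illustration. SQLite's planner is not the optimizer of the databases discussed in this chapter, so the plan text differs, but the principle is the same: without Bukrs, the Belnr field cannot be used for the index search.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, heavily simplified stand-in for table BKPF and its primary index.
conn.execute("CREATE TABLE bkpf (mandt TEXT, bukrs TEXT, belnr TEXT, bktxt TEXT)")
conn.execute("CREATE UNIQUE INDEX bkpf_0 ON bkpf (mandt, bukrs, belnr)")


def plan(sql: str, params: tuple) -> str:
    """Return the query plan text that SQLite reports for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " / ".join(row[3] for row in rows)


# BUKRS is missing: the index search can be narrowed by MANDT at most.
plan_without_bukrs = plan(
    "SELECT * FROM bkpf WHERE mandt = ? AND belnr = ?", ("100", "4711")
)

# All three index fields are specified with equals conditions.
plan_with_bukrs = plan(
    "SELECT * FROM bkpf WHERE mandt = ? AND bukrs = ? AND belnr = ?",
    ("100", "1000", "4711"),
)

print(plan_without_bukrs)
print(plan_with_bukrs)
```

Comparing the two printed plans shows which index fields each statement can actually use for the search.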

Example: Missing Client in the Where Clause

A similar situation leads to performance problems when you add CLIENT
SPECIFIED to the ABAP SQL statement and forget to specify the client
in the Where clause. Most SAP indexes begin with the client; therefore,
for this SQL statement, the client field is not available for an efficient
index search.

Although these may appear to be trivial errors, they are unfortunately
frequent in customer-specific code. Table 11.4 lists the most important SAP
tables in which this problem can occur. When you specify the selective
fields, you must also specify the unselective fields in every case.

Functional Area   SAP Table                                 Unselective Field          Selective Field
FI                BKPF (document headers)                   BUKRS (company code)       BELNR (document number)
FI                BSEG (document items, part of the         BUKRS (company code)       BELNR (document number)
                  cluster table RFBLG)
Comprehensive     NAST (message status)                     KAPPL (application key)    OBJKY (object number)
WM                LTAK/LTAP (transfer order headers         LGNUM (warehouse number)   TANUM (transfer order number)
                  and items)
MM                MAKT (material description)               SPRAS (language key)       MAKTG (material description
                                                                                       in capital letters)

Table 11.4 Examples of Tables with Leading Unselective Fields in the Primary Index

Example: Alternative Access

Occasionally, you can optimize an unselective access for which there is
no index by first taking data from a different table. With this
data, you then use an index search to efficiently search for the data you
want. Consider the following SQL statement:

SELECT * FROM vbak WHERE mandt = :A0 AND kunnr = :A1

This SQL statement from a customer-specific program selects sales
documents (table VBAK) for a particular customer (Kunnr field). Because
there is no suitable index in the standard program version, this statement
requires the entire VBAK table (perhaps many gigabytes in size) to be
read sequentially. This access is not efficient.

You can solve this problem by creating a suitable secondary index (along
with the disadvantages already discussed), although a developer with
some understanding of the SD data model would find a different way.
Instead of directly reading from table VBAK, table VAKPA would first be
accessed. The optimal access would be:

SELECT * FROM vakpa WHERE mandt = :A1 AND kunde = :A2

and then

SELECT * FROM vbak WHERE mandt = :A1 AND vbeln = vakpa-vbeln

In this access sequence, table VAKPA (partner role sales orders) would
be accessed first. Because there is an index with the Mandt and Kunde
fields, and the Kunde field is selective, this access is efficient. (Over
time, if a large number of orders accumulates for a customer, access will
begin to take longer.) Table VAKPA contains the Vbeln field (sales order
number), which can be used to efficiently access table VBAK via the
primary index.

You can optimize access by replacing one inefficient access with two
efficient ones, although you have to know the data model for the
application to be able to do this. SAP Notes 185530, 187906, and 191492
catalog the most frequent customer code performance errors in the SAP ERP
logistics modules.
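The two-step access can be sketched as follows in Python with SQLite as a stand-in database. The reduced table layouts, the index name vakpa_kunde, and the generated sample data are assumptions for illustration only; the real VBAK and VAKPA tables have many more fields.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical, simplified stand-ins for VBAK (sales document headers)
# and VAKPA (partner index for sales orders).
conn.executescript("""
    CREATE TABLE vbak (mandt TEXT, vbeln TEXT, kunnr TEXT, netwr REAL,
                       PRIMARY KEY (mandt, vbeln));
    CREATE TABLE vakpa (mandt TEXT, kunde TEXT, vbeln TEXT);
    CREATE INDEX vakpa_kunde ON vakpa (mandt, kunde);
""")
orders = [("100", f"{n:010d}", f"C{n % 50:03d}", 10.0 * n) for n in range(1, 501)]
conn.executemany("INSERT INTO vbak VALUES (?, ?, ?, ?)", orders)
conn.executemany(
    "INSERT INTO vakpa VALUES (?, ?, ?)",
    [(mandt, kunnr, vbeln) for mandt, vbeln, kunnr, _ in orders],
)


def orders_for_customer(mandt: str, kunde: str) -> list:
    # Step 1: indexed lookup of the order numbers in the partner index table.
    vbelns = [row[0] for row in conn.execute(
        "SELECT vbeln FROM vakpa WHERE mandt = ? AND kunde = ?", (mandt, kunde))]
    if not vbelns:
        return []
    # Step 2: read the headers through the primary key (mandt, vbeln).
    placeholders = ", ".join("?" for _ in vbelns)
    return conn.execute(
        "SELECT vbeln, kunnr, netwr FROM vbak "
        f"WHERE mandt = ? AND vbeln IN ({placeholders})",
        [mandt, *vbelns],
    ).fetchall()


# Reference result: the direct access by customer number, which has no index.
direct = conn.execute(
    "SELECT vbeln, kunnr, netwr FROM vbak WHERE mandt = ? AND kunnr = ?",
    ("100", "C007"),
).fetchall()
two_step = orders_for_customer("100", "C007")
print(len(two_step), sorted(two_step) == sorted(direct))
```

Both variants return the same orders; the difference lies only in which indexes the database can use to find them.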

These examples show that it is possible to considerably improve the
performance of the relevant SQL statement without creating a new
secondary index. This kind of optimization is preferable. Every new index
requires space in the database; increases the time required for backup,
recovery, and other maintenance tasks; and affects performance when
you're updating.

What To Do if the Optimizer Ignores the Index

If you find that the cost-based optimizer refuses to use a
particular secondary index in its execution plan, even though this index
would simplify data access, the most likely reason is that the table access
statistics are missing or not up-to-date. Check the analysis strategy and
determine why the table has not yet been analyzed. (See SAP Online
Help on database administration.) You can use Transaction DB20 to
manually create up-to-date statistics, and then check whether the
optimizer makes the correct decision.

In rare cases it is necessary to include hints for the database interface or
the database optimizer so that the correct access path can be found. Let's
take a look at an example.

The ZVBAK sample table includes a ZVBAK-STATUS status field. In this
example, assume that the table has 1 million entries, of which 100 have
the status O for open, and 999,900 entries have the status C for closed.
An index is created on the STATUS field, and the statistics are newly
created. You then find that the optimizer doesn't use the index on the
STATUS field to execute the following SQL statement:

SELECT * FROM zvbak WHERE status = 'O'

The general problem with this application is that the STATUS field is
filled asymmetrically and the database must recognize this. If you select
with STATUS = O, the database should use the index on the STATUS field
because the index is selective in this case. If you select with
STATUS = C, however, the index should not be used because it would lead
to a record-by-record read of 999,900 records of the ZVBAK table, which,
as you learned earlier in this chapter, performs worse than a full table
scan. To make this distinction, you must first ensure that the database
recognizes the asymmetrical distribution of the STATUS field and doesn't
just determine that the STATUS field has two different entries. For this
purpose, you must create statistics with histograms that take into account
the distribution of entries. For Oracle databases you must explicitly
specify which statistics are created; other database systems consider this
automatically. Second, you must explicitly inform the database about the
type of restriction on the STATUS field. Usually, the database interface
converts literals and constants in SQL statements into variables so that
the following SQL statement is sent to the database:

SELECT * FROM zvbak WHERE status = :A0

The reason for this substitution is that the database optimizer can reuse
an access path that has already been calculated and doesn't need to
determine a new access path for every statement.

In this example, this substitution has a negative impact. The database
optimizer cannot decide from the statement with variables whether the
selection is made based on the selective value O or the nonselective
value C and will presumably decide against the index on the STATUS
field.
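The reasoning can be made concrete with a toy model in Python. This is not the algorithm of any real optimizer; the function access_path and the 5% threshold are assumptions that merely apply the rule of thumb from earlier in this chapter to the row counts of the ZVBAK example.

```python
# Row counts per STATUS value, taken from the example in the text.
status_counts = {"O": 100, "C": 999_900}
total_rows = sum(status_counts.values())

# Assumed threshold, based on the rule of thumb that an index pays off only
# if less than roughly 5 to 10% of the table has to be read.
INDEX_THRESHOLD = 0.05


def access_path(fraction_of_table: float) -> str:
    """Toy decision rule, not the algorithm of any real optimizer."""
    return "index" if fraction_of_table < INDEX_THRESHOLD else "full table scan"


# Without a histogram, or with a variable such as :A0, only the number of
# distinct values is known, so every value looks like an average value.
average_fraction = 1 / len(status_counts)
path_for_variable = access_path(average_fraction)

# With a histogram and a concrete literal, the real frequency is known.
path_for_literal = {
    value: access_path(count / total_rows)
    for value, count in status_counts.items()
}

print(path_for_variable, path_for_literal)
```

In this model, the statement with a variable always ends in a full table scan, whereas the literal O leads to the index and the literal C to the full table scan.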

In this case it is possible to give the database interface a hint to transfer
constants and literals not as variables, but as concrete values. The hint
is &SUBSTITUTE LITERALS&. Using the &SUBSTITUTE VALUES& hint also
ensures that ABAP variables are transferred to the database not as
variables but as concrete values.

In general, hints in Open SQL start with the %_HINTS keyword at the end
of an SQL statement. This is followed by the database type and the hint
text.

In Native SQL, you must take into account the syntax of the
corresponding database. For example, for an Oracle database you can use
the statement

SELECT /*+ FULL likp */ * FROM likp WHERE ...

to force a full table scan.

Hints often turn out to be "time bombs," because after an SAP or
database upgrade (or even after a database patch) hints can become
superfluous and result in accesses with low performance. Therefore, you
must analyze and test all hints after an upgrade. In the standard SAP
system, hints are used only in exceptional cases.


The appendix provides a list of notes on database hints for the various
database systems.

Other reasons why the optimizer cannot find the appropriate index, and
possible solutions, include the following:

» If the Where clause is too complex and the optimizer cannot interpret
it correctly, you may have to consider changing the programming.
Examples are provided in the next section.

» Sometimes deleting the table access statistics, or even appropriately
modifying the statistics, will cause the optimizer to use a particular
index. (Therefore, the generation program released specifically for
R/3 chooses not to generate statistics for some tables, so ensure that
you create table access statistics with the tools provided by SAP.)

» In either of these cases, the solution requires extensive knowledge of
SQL optimization. If the problem originates from the standard R/3
software, consult SAP or your database partner.

Monitoring Indexes in the SQL Statistics

Before and after creating or changing an index, monitor the effect of
the index on the SQL statistics. To monitor the SQL statistics in R/3,
from the main screen in the database performance monitor (Transaction
ST04), select the Detail Analysis menu, and under the header Resource
Consumption By:

1. For Oracle, select SQL Request. In the dialog box that appears,
change the default selection values to zero and click OK.

2. Choose Select Table.
Specify the table you want to create or change the index for, and
select Enter.
The resulting screen displays the SQL statements that correspond to
this table.

3. Save this screen, along with the execution plan for all SQL statements,
in a file.

4. Two days after creating or changing the index, repeat steps 1 to 3,
and compare the results with those obtained earlier to ensure that no
SQL statement has had a loss in performance due to poor optimizer
decisions. In particular, compare the number of logical or physical
accesses per execution (these are indicated, for example, in the Reads/
Execution column), and check the execution plans to ensure that the
new index is used only where appropriate.

To check the index design using the SQL statistics in a development/test
system, ensure that the business data in the system is representative,
because the data determines the table sizes and field selectivity
considered by the cost-based optimizer. Before testing, update the table
access statistics so they reflect the current data.

11.3 Optimizing SQL Statements in the ABAP Program

Database indexes can be used to optimize a program only if the selection
criteria for the database access are chosen so that just a small amount of
data is returned to the ABAP program. If this is not the case, then the
program can only be optimized by rewriting it or by changing the user's
work habits.

11.3.1 Rules for Efficient SQL Programming

This section explains the five basic rules for efficient SQL programming.
It does not replace an ABAP tuning manual and is limited to a few
important cases.

Related programming techniques are explained with examples at the end
of this section and in the following sections. For a quick guide to efficient
SQL programming, go to the ABAP Runtime Analysis (Transaction SE30)
and select Tips and Tricks: System • Utilities • ABAP Runtime Analysis •
Tips and Tricks.

The new screen lets you display numerous examples of good and bad
programming.

Rule 1: Transfer few records

SQL statements must have a Where clause that transfers only a minimal
amount of data from the database to the application server, or vice versa.
This is especially important for SQL statements that affect tables larger
than 1MB. For all programs that transfer data to or from the database:

» If the program contains CHECK statements for table fields in SELECT
... ENDSELECT loops, then replace the CHECK statement with a suitable
Where clause.

» SQL statements without Where clauses must not access tables that are
constantly growing, for example, transaction data tables such as BSEG,
MKPF, and VBAK. If you find these SQL statements, rewrite the
program.

» Avoid identical accesses, that is, the same data being read repeatedly.
To identify SQL statements that cause identical accesses, trace the
program with an SQL trace (Transaction ST05), view the results, and
select Goto • Identical Selects. Note the identical selects and return
to the trace results screen to see how much time these selects required.
This tells you how much time you would save if the identical selects
could be avoided. You can find an example of this situation in the
next section.
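The difference between filtering in the program and filtering in the database can be sketched in Python with SQLite as a stand-in. The docs table and its contents are assumptions for illustration; the list comprehension plays the role of a CHECK statement inside a SELECT ... ENDSELECT loop.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical demo table with 1,000 rows, 10 of which match the filter.
conn.execute("CREATE TABLE docs (id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany(
    "INSERT INTO docs (status) VALUES (?)",
    [("OPEN" if i % 100 == 0 else "DONE",) for i in range(1_000)],
)

# Variant 1: transfer everything, then filter in the program
# (comparable to a CHECK statement inside the loop).
all_rows = conn.execute("SELECT id, status FROM docs").fetchall()
open_after_transfer = [row for row in all_rows if row[1] == "OPEN"]

# Variant 2: let the database filter, so only matching rows are transferred.
open_from_where = conn.execute(
    "SELECT id, status FROM docs WHERE status = ?", ("OPEN",)
).fetchall()

print(len(all_rows), len(open_from_where))
```

Both variants produce the same result set, but the first one transfers one hundred times as many records to the program.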

Rule 2: Keep the volume of transferred data small

To ensure that the volume of transferred data is as small as possible,
examine your programs as follows:

» SQL statements with the clause SELECT * transfer all of the columns
of a table. If all of this data is not really needed, you may be able to
convert the SELECT * clause to a SELECT list (SELECT <field list>) or
use a projection view.

» There is both an economical and an expensive way of calculating
sums, maximums, or mean values in relation to an individual table
column:
First, you can perform these calculations on the database using the
SQL aggregate functions (SUM, MAX, AVG, etc.) and then transfer only
the results, which is a small data volume. Second, you can initially
transfer all of the data in the column from the database into the ABAP
program and perform the calculations on the application server. This
transfers a lot more data than the first method and creates more
database load.

» A Where clause that searches for a single record often looks as
follows:

CLEAR found.
SELECT * FROM dbtable WHERE field1 = x1.
  found = 'X'. EXIT.
ENDSELECT.

The disadvantage of this code is that it triggers a FETCH on the
database. For example, after 100 records are read into the input/output
buffer of the work processes, the ABAP program reads the first record
in the buffer, and loop processing is interrupted. Therefore, 99 records
were transferred needlessly. The following code is more efficient:

CLEAR found.
SELECT * FROM dbtable UP TO 1 ROWS WHERE field1 = x1.
ENDSELECT.
IF sy-subrc = 0. found = 'X'. ENDIF.

This code informs the database that only one record should be
returned.
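The points above can be sketched in Python with SQLite as a stand-in database. The items table is an assumption for illustration, and LIMIT 1 is used here as the SQLite counterpart of UP TO 1 ROWS.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical demo table with the amounts 1 to 1,000.
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, amount INTEGER)")
conn.executemany(
    "INSERT INTO items (amount) VALUES (?)", [(i,) for i in range(1, 1_001)]
)

# Expensive: transfer the whole column and add it up in the program.
transferred = conn.execute("SELECT amount FROM items").fetchall()
total_in_program = sum(amount for (amount,) in transferred)

# Economical: the database aggregates and returns a single row.
total_in_database = conn.execute("SELECT SUM(amount) FROM items").fetchone()[0]

# Existence check: only one row is requested from the database.
found = conn.execute(
    "SELECT 1 FROM items WHERE amount > ? LIMIT 1", (500,)
).fetchone() is not None

print(len(transferred), total_in_program, total_in_database, found)
```

The aggregate variant returns one row instead of 1,000, at the cost of some additional work on the database; Rule 5 later in this section discusses that trade-off.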

Rule 3: Use array select instead of single select

The number of fetches must remain small. Using array select instead
of single select creates fewer, more lengthy database accesses instead
of many short accesses. Many short accesses cause more administrative
overhead and network traffic than fewer, more lengthy database
accesses. Therefore, avoid the following types of code:

LOOP AT itab.
  SELECT * FROM dbtable WHERE field1 = itab-field1.
    <further processing>
  ENDSELECT.
ENDLOOP.

and:

SELECT * FROM dbtable1 WHERE field1 = x1.
  SELECT * FROM dbtable2 WHERE field2 = dbtable1-field2.
    <further processing>
  ENDSELECT.
ENDSELECT.

Both examples make many short accesses that read only a few records.
You can group many short accesses to make a few longer accesses by
using either the FOR ALL ENTRIES clause or a database view. Both options
will be described in the example in the following section.
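The contrast between many short accesses and one longer access can be sketched in Python with SQLite as a stand-in. The table contents and the key list itab are assumptions for illustration, and the IN list stands in for what FOR ALL ENTRIES achieves in ABAP; it is not a description of how the database interface implements that clause.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dbtable (field1 INTEGER PRIMARY KEY, payload TEXT)")
conn.executemany(
    "INSERT INTO dbtable VALUES (?, ?)", [(i, f"row {i}") for i in range(100)]
)

itab = [3, 14, 15, 92]  # keys held in the program, like an internal table

# Many short accesses: one statement per key.
single_results = []
statements_single = 0
for key in itab:
    single_results.extend(
        conn.execute("SELECT field1, payload FROM dbtable WHERE field1 = ?", (key,))
    )
    statements_single += 1

# One longer access: all keys in a single statement.
placeholders = ", ".join("?" for _ in itab)
array_results = conn.execute(
    "SELECT field1, payload FROM dbtable "
    f"WHERE field1 IN ({placeholders}) ORDER BY field1",
    itab,
).fetchall()
statements_array = 1

print(statements_single, statements_array, sorted(single_results) == array_results)
```

With a remote database, each of the single statements would also cost a network round trip, which is where most of the overhead comes from.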

Rule 4: Keep the Where clauses simple

The Where clauses must be simple; otherwise, the optimizer may decide
on the wrong index or not use an index at all. A Where clause is simple if
it specifies each field of the index using AND and an equals condition.

Virtually all optimizers have problems when confronted with a large
number of OR conditions. Therefore, you should use the disjunctive normal
form (DNF) whenever possible, as described in the following example
on avoiding OR clauses.

Instead of the following code:

SELECT * FROM sflight WHERE (carrid = 'LH' OR carrid = 'UA')
AND (connid = '0012' OR connid = '0013')

it is better to use:

SELECT * FROM SFLIGHT
WHERE ( CARRID = 'LH' AND CONNID = '0012')
OR ( CARRID = 'LH' AND CONNID = '0013')
OR ( CARRID = 'UA' AND CONNID = '0012')
OR ( CARRID = 'UA' AND CONNID = '0013')

The other way of avoiding problems with OR clauses is to divide complex
SQL statements into simple ones, and store the selected data in an
internal table. To divide complex SQL statements, use the FOR ALL ENTRIES
clause (see More About FOR ALL ENTRIES Clauses, below).

Sometimes you can use an IN instead of an OR. For example, instead of
field1 = x1 AND (field2 = y1 OR field2 = y2 OR field2 = y3), use field1
= x1 AND field2 IN (y1, y2, y3). Try to avoid using NOT conditions in the
Where clause. These cannot be processed through an index. You can often
replace a NOT condition by a positive IN or OR, which can be processed
using an index.
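Before rewriting a Where clause, it helps to confirm that the new form selects exactly the same rows. The following is a minimal sketch in Python with SQLite as a stand-in; the miniature sflight table and its contents are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical miniature of the flight table used in the text.
conn.execute("CREATE TABLE sflight (carrid TEXT, connid TEXT)")
conn.executemany(
    "INSERT INTO sflight VALUES (?, ?)",
    [(c, n) for c in ("AA", "LH", "UA") for n in ("0012", "0013", "0400")],
)


def rows(where: str) -> list:
    """Run the query with the given Where clause and return the sorted rows."""
    return conn.execute(
        f"SELECT carrid, connid FROM sflight WHERE {where} ORDER BY carrid, connid"
    ).fetchall()


# Original form: two OR groups linked with AND.
nested_or = rows(
    "(carrid = 'LH' OR carrid = 'UA') AND (connid = '0012' OR connid = '0013')"
)

# Disjunctive normal form: AND groups linked with OR.
dnf = rows(
    "(carrid = 'LH' AND connid = '0012') OR (carrid = 'LH' AND connid = '0013') "
    "OR (carrid = 'UA' AND connid = '0012') OR (carrid = 'UA' AND connid = '0013')"
)

# IN lists instead of OR.
in_lists = rows("carrid IN ('LH', 'UA') AND connid IN ('0012', '0013')")

print(nested_or == dnf == in_lists, len(dnf))
```

All three forms are logically equivalent; which one a given optimizer handles best has to be checked in its execution plan.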

Rule 5: Avoid unnecessary database load

Some operations, such as sorting tables, can be performed by either the
database instance or the SAP instance. In general, you should try to
transfer any tasks that create system load to the application servers,
which can be configured with more SAP instances if the system load
increases. The capacity of the database instance cannot be increased as
easily. The following measures help avoid database load:

» SAP buffering is the most efficient tool for reducing load on the
database instance caused by accesses to database data. You should buffer
tables on application servers as much as possible to avoid database
accesses (see Chapter 9, SAP Buffering).

» If a program requires sorted data, either the database or the SAP
instance must sort the data. The database should perform the sort
only if the same index can be used for sorting as for satisfying the
Where clause, because this type of sort is inexpensive.
Consider the table <dbtable> with the fields <field1>, <field2>,
<field3>, and <field4>. The key fields are <field1>, <field2>,
and <field3>, and these comprise the primary index TABLE_0.
To have the database sort the data, you can use the following
statement:

SELECT * FROM <dbtable> INTO TABLE itab
WHERE <field1> = x1 AND <field2> = x2
ORDER BY <field1> <field2>.

Here, a database sort is appropriate, because the primary index
TABLE_0 can be used both to satisfy the Where clause and to sort the
data. A sort by fields such as <field2> and <field4> cannot be
performed using the primary index TABLE_0, because the ORDER BY
clause does not contain the first primary index field. Therefore, to
reduce database load, this sort should not be performed by the
database, but by the ABAP program. You can use ABAP statements
such as:

SELECT * FROM <dbtable> INTO TABLE itab
WHERE <field1> = x1 AND <field2> = x2.
SORT itab BY <field2> <field4>.

Similar (sorting) considerations apply when you use the GROUP BY
clause or aggregate functions. If the database instance performs a
GROUP BY, this increases the consumption of database instance
resources. However, this must be weighed against the gain in
performance due to the fact that the GROUP BY calculation transfers fewer
results to the application server. (For more information on aggregate
functions, see Rule 2 earlier in this section.)
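Whether a sort can be served by an index is visible in the query plan. The following is a minimal sketch in Python with SQLite as a stand-in database; the table layout mirrors the four-field example, while the helper needs_extra_sort, the sample data, and the choice of field2 and field4 as the second sort order are assumptions for illustration. SQLite reports a separate sort step as a temporary B-tree, which other databases name differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE dbtable (field1 INTEGER, field2 INTEGER, field3 INTEGER,
                          field4 INTEGER,
                          PRIMARY KEY (field1, field2, field3))
""")
conn.executemany(
    "INSERT INTO dbtable VALUES (?, ?, ?, ?)",
    [(1, 2, f3, (f3 * 7) % 10) for f3 in range(10)],
)


def needs_extra_sort(sql: str, params: tuple) -> bool:
    """True if SQLite's plan contains a separate sort step."""
    plan = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return any("TEMP B-TREE" in row[3] for row in plan)


where = "SELECT * FROM dbtable WHERE field1 = ? AND field2 = ?"

# The sort order follows the primary index, so no extra sort is expected.
sort_via_index = needs_extra_sort(where + " ORDER BY field1, field2, field3", (1, 2))

# field4 is not part of the index, so the database has to sort separately.
sort_not_via_index = needs_extra_sort(where + " ORDER BY field2, field4", (1, 2))

# Alternative: read unsorted and sort in the program, like SORT itab BY ...
unsorted_rows = conn.execute(where, (1, 2)).fetchall()
sorted_in_program = sorted(unsorted_rows, key=lambda row: (row[1], row[3]))
sorted_in_database = conn.execute(
    where + " ORDER BY field2, field4", (1, 2)
).fetchall()

print(sort_via_index, sort_not_via_index, sorted_in_program == sorted_in_database)
```

Sorting in the program gives the same result as the database sort, so the choice between the two is purely a question of where the load should fall.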

11.3.2 Example of Optimizing an SQL Statement in an ABAP Program

This section uses an example to demonstrate each step in optimizing an
SQL statement.

Preliminary Analysis

In this example, a customer-developed ABAP program is having
performance problems. As an initial attempt to remedy this, run an SQL trace
(Transaction ST05) on a second run of the program when the database
buffer has been loaded. The results of the trace show the information in
Listing 11.2.

Duration  Object  Oper     Rec  RC    Statement
   1,692  MSEG    PREPARE       0     SELECT WHERE MANDT ...
     182  MSEG    OPEN          0
  26,502  MSEG    FETCH     32  0
     326  MKPF    PREPARE       0     SELECT WHERE MANDT ...
      60  MKPF    OPEN          0
  12,540  MKPF    FETCH      1  1403
      59  MKPF    REOPEN        0     SELECT WHERE MANDT ...
   2,208  MKPF    FETCH      1  1403
      60  MKPF    REOPEN        0     SELECT WHERE MANDT ...
   2,238  MKPF    FETCH      1  1403
      61  MKPF    REOPEN        0     SELECT WHERE MANDT ...
   2,340  MKPF    FETCH      1  1403
(32 more indiv. FETCHES)
  43,790  MSEG    FETCH     32  0
      61  MKPF    REOPEN        0     SELECT WHERE MANDT ...
   2,306  MKPF    FETCH      1  1403
      60  MKPF    REOPEN        0     SELECT WHERE MANDT ...
   2,455  MKPF    FETCH      1  1403

Listing 11.2 Example of an SQL Trace

‘The trace begins with a FETC operation on table MSEG, which reads

32 records, as indicated by the 32 in the Sec column. Next, 32 sepa

rate FLTCH operations are performed on table MKPF, each returning one

record, as indicated by the 1 in the column Rec column. This process is

repeated with another *F1C¥ operation that reads 32 records from table

[MEG and 32 more single-record FCTCV/ operations on table MKPE, and

so on until all records are found.

Now view the compressed summary. To do this from the SOIL trace results

sereen, select GoTo + SUMMARY * COMPRESS, Table 11.5 lists the results,

Tcode/ | Table To

Gea ieee

SE38

Se38

Total

MKPE SEL 319,040

MSEG SEL 1 12 197.638 383

516,678 100.0

Table m5 Compressed Summary of an SQL Trace

The compressed summary shows that almost two-thirds (61.7%) of the
database time is used for the individual FETCH operations on table MKPF,
and more than one-third (38.3%) is used for the FETCH operations on
table MSEG.
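
The percentages in the compressed summary can be reproduced from the two time values. The following Python sketch only illustrates that arithmetic; it is not part of the SQL trace tooling, and the variable names are chosen here for the example.

```python
# Times in microseconds, taken from the compressed summary (Table 11.5).
time_us = {"MKPF": 319_040, "MSEG": 197_638}

total_us = sum(time_us.values())

# Share of the total database time per table, rounded to one decimal place.
share_pct = {table: round(100 * t / total_us, 1) for table, t in time_us.items()}

for table, pct in share_pct.items():
    print(f"{table}: {time_us[table]:>8,} us  {pct:5.1f} %")
print(f"Total: {total_us:,} us")
```

The same calculation can be applied to any compressed summary to see quickly which table dominates the database time of a program.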

Detailed Analysis

In the detailed analysis step prior to tuning, you find the tables, fields,
and processes that are important for tuning the SQL statements you
identified in the preliminary analysis. To increase the performance of specific
SQL statements, you can either improve the performance of the database
instance or reduce the volume of data transferred from the database to
the application server. In Table 11.5, the SQL trace results show that
the response times per record are approximately 3,000 microseconds
for accesses to table MKPF and approximately 1,800 microseconds for
accesses to table MSEG. Because these response times are good, you can
conclude that database performance is also good. The only remaining
way to increase the performance of SQL statements in the ABAP program
is to reduce the amount of data transferred.
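
As a rough cross-check of the response times per record, you can divide the times from Table 11.5 by the number of records read. The sketch below assumes 112 records for each table. For MKPF, this corresponds to the 112 accesses reported in the compressed summary, each returning one record; for MSEG, the figure is an assumption made here for illustration.

```python
# Database times in microseconds from the compressed summary (Table 11.5).
time_us = {"MKPF": 319_040, "MSEG": 197_638}

# ASSUMPTION: 112 records were read from each table (see the lead-in).
records = {"MKPF": 112, "MSEG": 112}

# Average response time per record in microseconds.
per_record_us = {table: time_us[table] / records[table] for table in time_us}

for table, value in per_record_us.items():
    print(f"{table}: about {value:,.0f} microseconds per record")
```

Under these assumptions, the results are close to the approximate values of 3,000 and 1,800 microseconds quoted above.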

View the identical selects: From the SQL trace (Transaction ST05) results
screen, select Goto • Identical Selects. In our example, the resulting list
shows 72 identical SQL statements on table MKPF. This is around 60% of
the total of 112 accesses to table MKPF, as indicated in the compressed
summary. Therefore, eliminating the identical accesses would result in
about 60% fewer accesses and correspondingly less access time.
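
To make the idea of identical selects concrete, the following Python sketch counts how many statement executions repeat an earlier execution with the same table and the same key values. The trace entries are hypothetical sample data, and `count_redundant` is a helper invented for this illustration; it does not reproduce how Transaction ST05 builds its list.

```python
from collections import Counter

# Figures from the example: 72 identical statements out of 112 accesses.
total_accesses = 112
identical = 72
share_pct = round(100 * identical / total_accesses, 1)

# HYPOTHETICAL trace: one (table, key values) tuple per executed statement.
trace = [
    ("MKPF", ("100", "00005001", "1998")),
    ("MKPF", ("100", "00005001", "1998")),
    ("MKPF", ("100", "00005001", "1998")),
    ("MKPF", ("100", "00005002", "1998")),
    ("MKPF", ("100", "00005003", "1998")),
    ("MKPF", ("100", "00005003", "1998")),
]


def count_redundant(statements):
    """Return how many executions repeat an earlier, identical statement."""
    counts = Counter(statements)
    return sum(n - 1 for n in counts.values())


print(f"Identical share in the example: {share_pct} %")
print(f"Redundant executions in the sample trace: {count_redundant(trace)}")
```

In the sample trace, three of the six executions are redundant, because only three distinct documents are requested.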

To access the code from the SQL trace results screen, position the cursor
on the appropriate program in the Object column and click ABAP Display.
In this example, accessing the code reveals that the following ABAP
statements caused the database accesses analyzed in the SQL trace:

" Outer loop: read the line items for the selected materials.
SELECT * FROM mseg INTO CORRESPONDING FIELDS OF imatdocs
         WHERE matnr LIKE s_matnr.
  " Inner loop: read the document header once for every line item.
  SELECT * FROM mkpf WHERE mblnr = imatdocs-mblnr
                       AND mjahr = imatdocs-mjahr.
    imatdocs-budat = mkpf-budat.
    APPEND imatdocs.
  ENDSELECT.
ENDSELECT.

Listing 11.3 Program Context for SQL Trace

You now need to know from which tables the data is being selected. This
is indicated in ABAP Dictionary Maintenance (Transaction SE11). In this
example, the two affected tables, MKPF and MSEG, store materials
documents for goods issue and receipt. MKPF contains the document headers
for the materials documents, and MSEG contains the line items. The
program reads the specific materials documents from the database and
transfers them to the internal table IMATDOCS. The materials are listed
in the internal table S_MATNR.

Table fields that you need to know in this example are:


» Table MKPF:
  » MANDT: Client (primary key)
  » MBLNR: Number of the material document (primary key)
  » MJAHR: Material document year (primary key)
  » BUDAT: Posting date in the document
» Table MSEG:
  » MANDT: Client (primary key)
  » MBLNR: Number of the material document (primary key)
  » MJAHR: Material document year (primary key)
  » ZEILE: Position in the material document (primary key)
  » MATNR: Material number
  » WERKS: Plant

This program excerpt selects the materials documents corresponding to
the materials in the internal table S_MATNR and transfers the
document-related fields MANDT, MBLNR, MJAHR, ZEILE, MATNR, WERKS, and
BUDAT to the ABAP program. In the SQL statement, S_MATNR limits the
data volume transferred to the ABAP program from table MSEG. Because
the MATNR field is in table MSEG, data selection begins in table MSEG
rather than in MKPF. For each of the records selected in MSEG, the data
for the field BUDAT is read from table MKPF and transferred to internal
table IMATDOCS.

The program in this example resolves these tasks with a nested SELECT
loop. As the SQL trace in Listing 11.2 shows, the outer loop executes a
FETCH operation that returns 32 records. Each record is then processed
individually in the ABAP program, and the relevant record from table MKPF
is requested once per record, that is, 32 times. The program then resumes
the outer loop, collecting another 32 records from the database, and
returns to the inner loop, and so on until all requested documents are
processed.
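
The access pattern of a nested SELECT loop can be imitated outside of ABAP. The following Python sketch uses an in-memory SQLite database as a stand-in for the database server; the table layout is simplified (the client field MANDT is omitted), the sample data is hypothetical, and the counter `statements` is introduced here only to show how many statements are sent to the database.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE mkpf (mblnr TEXT, mjahr TEXT, budat TEXT,
                       PRIMARY KEY (mblnr, mjahr));
    CREATE TABLE mseg (mblnr TEXT, mjahr TEXT, zeile TEXT, matnr TEXT,
                       PRIMARY KEY (mblnr, mjahr, zeile));
""")

# HYPOTHETICAL sample data: two documents with three line items each.
conn.executemany("INSERT INTO mkpf VALUES (?, ?, ?)",
                 [("00005001", "1998", "19980105"),
                  ("00005002", "1998", "19980106")])
conn.executemany("INSERT INTO mseg VALUES (?, ?, ?, ?)",
                 [(doc, "1998", f"{z:04d}", "MAT-1")
                  for doc in ("00005001", "00005002") for z in (1, 2, 3)])

statements = 0  # number of SELECT statements sent to the database
imatdocs = []

# Outer loop: one statement reads the line items.
statements += 1
items = conn.execute(
    "SELECT mblnr, mjahr, zeile, matnr FROM mseg WHERE matnr LIKE ?",
    ("MAT-%",)).fetchall()

for mblnr, mjahr, zeile, matnr in items:
    # Inner loop: one additional statement per line item, even if the
    # same document header was already read for a previous item.
    statements += 1
    (budat,) = conn.execute(
        "SELECT budat FROM mkpf WHERE mblnr = ? AND mjahr = ?",
        (mblnr, mjahr)).fetchone()
    imatdocs.append((mblnr, mjahr, zeile, matnr, budat))

print(f"{len(imatdocs)} rows collected with {statements} statements")
```

With six line items that belong to only two documents, the sketch issues seven statements, four of which read a header that has already been read.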

When you have identified the SQL statements that must be optimized

(in the preliminary analysis), and the related tables, fields, and pro-

cesses (in the detailed analysis), you can start to tune these statements,

as follows.


Tuning the SQL Code

Comparing the excerpted program code with the rules for efficient SQL
programming reveals three areas where the current example contradicts
these rules:

» Contradiction to rule 1
  Identical information is read multiple times from the database.
» Contradiction to rule 2
  SELECT * statements are used, which read all of the columns in the
  table. These statements are, however, few in number.
» Contradiction to rule 3
  Instead of a small number of FETCH operations that read many records
  from table MKPF, the program uses many FETCH operations that each read
  only one record. This creates unnecessary administrative overhead in
  terms of REOPEN operations and network traffic.

The following subsections provide two tuning solutions for this example
of poor data accesses.

Solution 1

Identical database accesses occur because of the nested SQL statements.
In the example, the first SQL statement accesses table MSEG to obtain the
following data: MANDT=100, MBLNR=00005001, and MJAHR=1998,
and the ZEILE field specifies the 10 rows from 0001 to 0010. The second
SQL statement searches table MKPF based on the keys MANDT=100,
MBLNR=00005001, and MJAHR=1998 and reads identical header data
10 times. Using nested SQL statements always poses the risk of identical
accesses, because the program does not recognize which data has already
been read. To avoid identical accesses, read all of the data from table
MSEG, and then read the header data from table MKPF only once, as
indicated in the rewritten version of the program in Listing 11.4.

To reduce the amount of data transferred for each table record, convert
the SELECT * clause to a SELECT list.

To convert the numerous single-record accesses to table MKPF into larger
FETCH operations, you can use the FOR ALL ENTRIES clause.

The optimized program now looks as follows:

" Read all line items in one array operation with an explicit field list.
SELECT mblnr mjahr zeile matnr werks FROM mseg
       INTO TABLE imatdocs
       WHERE matnr LIKE s_matnr.
IF sy-subrc = 0.
  " Build a duplicate-free list of document keys.
  SORT imatdocs BY mblnr mjahr.
  imatdocs_help1[] = imatdocs[].
  DELETE ADJACENT DUPLICATES FROM imatdocs_help1
         COMPARING mblnr mjahr.
  " Read each document header only once.
  SELECT mblnr mjahr budat FROM mkpf
         INTO TABLE imatdocs_help2
         FOR ALL ENTRIES IN imatdocs_help1
         WHERE mblnr = imatdocs_help1-mblnr
           AND mjahr = imatdocs_help1-mjahr.
  SORT imatdocs_help2 BY mblnr mjahr.
  " Add the posting date to every line item.
  LOOP AT imatdocs.
    READ TABLE imatdocs_help2 WITH KEY mblnr = imatdocs-mblnr
                                       mjahr = imatdocs-mjahr BINARY SEARCH.
    imatdocs-budat = imatdocs_help2-budat.
    MODIFY imatdocs.
  ENDLOOP.
ENDIF.

Listing 11.4 Optimized ABAP Code

Here are some comments on the optimized program:

1. The required data is read from table MSEG. The SELECT * clause has
   been replaced with a SELECT list, and the SELECT ... ENDSELECT
   construction has been replaced with a SELECT ... INTO TABLE.
2. The statement IF sy-subrc = 0 checks whether records have been
   read from the database. In the following steps, the internal table
   IMATDOCS_HELP1 is filled. To avoid double accesses to table MKPF,
   table IMATDOCS is sorted by the command SORT imatdocs and copied to
   IMATDOCS_HELP1, and the duplicate entries in MBLNR and MJAHR are
   deleted from this copy by the command DELETE ADJACENT DUPLICATES.
   The header data is then read from MKPF into IMATDOCS_HELP2 with a
   single SELECT ... FOR ALL ENTRIES statement. Finally, the data that
   was read from table MSEG and stored in table IMATDOCS and the data
   that was read from MKPF and stored in the table IMATDOCS_HELP2 are
   combined and transferred to the ABAP program. To optimize the search
   in internal table IMATDOCS_HELP2, it is important to sort the table
   and include a BINARY SEARCH in the READ TABLE statement.
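
The steps of the optimized program can also be sketched outside of ABAP. The following Python example uses an in-memory SQLite database as a stand-in for the database server and follows the same sequence: one array read of the line items, a duplicate-free key list, one bundled read of the headers, and a merge by binary search. The table layout is simplified (MANDT is omitted), the sample data is hypothetical, and the OR-chained WHERE clause is only one possible way of imitating FOR ALL ENTRIES; it is not a statement about how the ABAP database interface translates that clause.

```python
import sqlite3
from bisect import bisect_left

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE mkpf (mblnr TEXT, mjahr TEXT, budat TEXT,
                       PRIMARY KEY (mblnr, mjahr));
    CREATE TABLE mseg (mblnr TEXT, mjahr TEXT, zeile TEXT, matnr TEXT,
                       PRIMARY KEY (mblnr, mjahr, zeile));
""")

# HYPOTHETICAL sample data: two documents with three line items each.
conn.executemany("INSERT INTO mkpf VALUES (?, ?, ?)",
                 [("00005001", "1998", "19980105"),
                  ("00005002", "1998", "19980106")])
conn.executemany("INSERT INTO mseg VALUES (?, ?, ?, ?)",
                 [(doc, "1998", f"{z:04d}", "MAT-1")
                  for doc in ("00005001", "00005002") for z in (1, 2, 3)])

statements = 0  # number of SELECT statements sent to the database
result = []

# Step 1: read all line items at once, with an explicit column list.
statements += 1
imatdocs = conn.execute(
    "SELECT mblnr, mjahr, zeile, matnr FROM mseg WHERE matnr LIKE ?",
    ("MAT-%",)).fetchall()

if imatdocs:
    # Step 2: sorted, duplicate-free list of document keys
    # (role of SORT and DELETE ADJACENT DUPLICATES).
    help1 = sorted({(mblnr, mjahr) for mblnr, mjahr, _, _ in imatdocs})

    # Step 3: one bundled read of all required headers.
    where = " OR ".join("(mblnr = ? AND mjahr = ?)" for _ in help1)
    params = [value for key in help1 for value in key]
    statements += 1
    help2 = sorted(conn.execute(
        f"SELECT mblnr, mjahr, budat FROM mkpf WHERE {where}",
        params).fetchall())
    help2_keys = [(mblnr, mjahr) for mblnr, mjahr, _ in help2]

    # Step 4: merge by binary search on the sorted header list.
    for mblnr, mjahr, zeile, matnr in imatdocs:
        pos = bisect_left(help2_keys, (mblnr, mjahr))
        found = pos < len(help2_keys) and help2_keys[pos] == (mblnr, mjahr)
        budat = help2[pos][2] if found else None
        result.append((mblnr, mjahr, zeile, matnr, budat))

print(f"{len(result)} rows collected with {statements} statements")
```

For the same six line items, two statements are now sufficient, and each document header is transferred only once. For very large key lists, the bundled read would have to be split into several blocks, which this sketch does not attempt.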


After performing these changes, repeat the SQL trace to verify an
improvement in performance. Now the SQL trace results look as follows.

Duration  Object  Oper      Rec    RC
   1,417  MSEG    PREPARE           0
      65  MSEG    OPEN              0
  57,628  MSEG    FETCH     112  1403
   6,871  MKPF    PREPARE           0
     693  MKPF    OPEN              0
 177,983  MKPF    FETCH      40  1403

Listing 11.5 SQL Trace of Optimized Code
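
As a rough comparison, you can add up the durations of the optimized trace and set them against the total from the compressed summary of the original program. The following Python sketch does only this arithmetic. It assumes that the first duration in the listing reads 1,417 microseconds, that the listing shows all statements of the optimized run, and that both totals are measured on the same basis.

```python
# Durations in microseconds from the optimized trace (Listing 11.5).
optimized_us = [1_417, 65, 57_628, 6_871, 693, 177_983]

# Total database time of the original program (Table 11.5).
original_total_us = 516_678

optimized_total_us = sum(optimized_us)
saving_pct = round(100 * (1 - optimized_total_us / original_total_us), 1)

# Consistency check on the record counts used in this example:
# 112 accesses to MKPF, 72 of them identical, 40 records after tuning.
mkpf_accesses_before = 112
identical_accesses = 72
mkpf_records_after = 40
counts_match = (mkpf_accesses_before - identical_accesses) == mkpf_records_after

print(f"Optimized total: {optimized_total_us:,} us")
print(f"Reduction: {saving_pct} %")
print(f"Record counts consistent: {counts_match}")
```

Under these assumptions, the database time falls to somewhat less than half of its original value, and the 40 records fetched from MKPF correspond to the 112 original accesses minus the 72 identical ones.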